Assessing the accuracy of algorithms

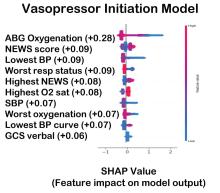

The value of a data set is directly related to the accuracy of its labels. Traditional measures of model performance, such as sensitivity, specificity, and positive and negative predictive values (PPV and NPV), have important limitations: they provide little insight into how a complex model arrived at its prediction. Understanding which individual features drive model accuracy is key to fostering trust in model predictions. This can be done by comparing model output with and without each individual feature. The differences across all possible feature combinations are aggregated into a Shapley value for each model feature, which summarizes that feature's importance. Higher values indicate greater relative importance. SHAP plots help identify how much and how often specific features change the model output by presenting individual model estimates with and without a specific feature (see Figure 1).
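For readers who want to see the arithmetic behind a Shapley value, the with-and-without comparison described above can be sketched in a few lines of Python. This is a minimal illustration and not the method used in the cited studies: the feature names (`age`, `spo2`, `heart_rate`), the function `toy_model_output`, and all of its numbers are hypothetical, chosen only so the result is easy to check by hand. The sketch enumerates every feature combination exactly, which is practical only for a handful of features.

```python
from itertools import combinations
from math import factorial


def toy_model_output(present):
    """Hypothetical model output (e.g., a risk estimate) when only the
    features in `present` are included. All numbers are invented."""
    output = 0.10                      # baseline with no features
    if "age" in present:
        output += 0.20
    if "spo2" in present:
        output += 0.30
    if "age" in present and "spo2" in present:
        output += 0.10                 # interaction between the two features
    # "heart_rate" is deliberately given no effect in this toy example
    return output


def shapley_values(value_fn, features):
    """Exact Shapley value of each feature: the weighted average change in
    model output from adding that feature to every subset of the others."""
    n = len(features)
    result = {}
    for feature in features:
        others = [f for f in features if f != feature]
        total = 0.0
        for size in range(n):
            # Weight for a subset of this size in the Shapley formula
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                without = set(subset)
                with_feature = without | {feature}
                total += weight * (value_fn(with_feature) - value_fn(without))
        result[feature] = total
    return result


if __name__ == "__main__":
    values = shapley_values(toy_model_output, ["age", "spo2", "heart_rate"])
    for name, value in sorted(values.items(), key=lambda kv: -kv[1]):
        print(f"{name}: {value:.3f}")
```

In this toy example the interaction term is split evenly between `age` and `spo2`, `heart_rate` receives a value of zero, and the three values add up to the difference between the output with all features and the output with none.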

Promoting AI use

AI and machine learning algorithms are coming to patient care. Understanding the language of AI helps caregivers integrate these tools into their practices. The science of AI still faces serious challenges. Algorithms must be recalibrated to keep pace as therapies advance, disease prevalence changes, and our population ages. AI must also address new challenges as they confront those suffering from respiratory diseases. This resource encourages clinicians with novel approaches to use AI methodologies to advance their development. We can better address future health care needs by promoting the equitable use of AI technologies, especially among socially disadvantaged developers.

References

1. Lilly CM, Soni AV, Dunlap D, et al. Advancing point of care testing by application of machine learning techniques and artificial intelligence. Chest. 2024 (in press).

2. Lilly CM, Kirk D, Pessach IM, et al. Application of machine learning models to biomedical and information system signals from critically ill adults. Chest. 2024;165(5):1139-1148.