Consider Ovarian Cancer as a Differential Diagnosis

This year in the United States, an estimated 22,530 new cases of ovarian cancer and 13,980 ovarian cancer deaths are expected.1 Ovarian cancer accounts for more deaths than any other cancer of the female reproductive system.2 Its high mortality rate is attributed to advanced stage at initial presentation: Women diagnosed with localized disease have an estimated 5-year survival rate of 92%, whereas those diagnosed with advanced disease have a 5-year survival rate of 29%.3 For this reason, early detection of ovarian cancer is paramount.

A Personal Story

I think about ovarian cancer every day, because I am a survivor of this deadly disease. In 2018, at age 53, I was diagnosed with stage 1A high-grade serous carcinoma of the left ovary. My cancer was discovered incidentally: I presented to my health care provider with a 6-month history of metrorrhagia, after previously regular menstruation with dysmenorrhea controlled with ibuprofen. My family and personal histories of cancer were negative, my BMI was normal, I did not smoke and drank alcohol only moderately, I led an active lifestyle, and I had no chronic diseases and took no medications regularly. My clinician performed a pelvic exam and ordered sexually transmitted infection testing and blood work (complete blood count, metabolic panel, and TSH). At this point, the differential diagnosis included

- Thyroid dysfunction

- Perimenopause

- Sexually transmitted infection

- Coagulation defect

- Foreign body

- Infection.

All testing yielded normal findings. At my follow-up appointment, we discussed perimenopause symptoms and agreed that I would continue to monitor the bleeding. I was told to call the office if I later wanted to pursue an ultrasound; no follow-up office visit was suggested.

Several months later, persistent metrorrhagia prompted me to request a transvaginal ultrasound (TVU)—resulting in the discovery of a left adnexal solid mass and probable endometrial polyp. A referral to a gynecologic oncologist resulted in further imaging, which confirmed the TVU results. Surgical intervention was recommended.

One week later, I underwent robotic-assisted total laparoscopic hysterectomy, bilateral salpingo-oophorectomy, left pelvic and periaortic lymph node dissection, and omentectomy. The pathology report confirmed stage 1A high-grade serous carcinoma of the left ovary, as well as stage 1A grade 1 endometrioid adenocarcinoma of the uterus. I completed 6 cycles of chemotherapy, after which follow-up imaging was negative, with no evidence of metastatic disease.

A Call to Action

The recently updated US Preventive Services Task Force guidelines continue to recommend against annual screening for ovarian cancer with TVU and/or cancer antigen 125 (CA-125) blood testing in asymptomatic, average-risk women. A review of the evidence found no mortality benefit and high false-positive rates, which led to unnecessary surgeries and psychological stress from excess cancer worry.4 As a result, clinicians generally do not order screening tests unless the patient presents with symptoms or requests screening for ovarian cancer.

Yet it cannot be overstated: In the absence of routine screening recommendations, the clinician's role in identifying risk factors for and recognizing symptoms of ovarian cancer is extremely important. Risk factors include a positive family history of gynecologic, breast, or colon cancers; genetic predisposition; personal history of breast cancer; use of menopausal hormone therapy; excess body weight; smoking; and sedentary lifestyle.3 My own risk for ovarian cancer was average.

With regard to symptoms, most women do not report any until ovarian cancer has reached advanced stages—and even then, the symptoms are vague and nonspecific.5 They may include urinary urgency or frequency; change in bowel habit; difficulty eating or feeling full quickly; persistent back, pelvic, or abdominal pain; extreme tiredness; vaginal bleeding after menopause; increased abdominal size; or bloating on most days.5

So what can we as clinicians do? First, a word of caution: When confronted with these vague and nonspecific symptoms, be careful not to dismiss them out of hand as the result of aging, stress, or menopause. As my case demonstrates, metrorrhagia is not necessarily benign in a premenopausal woman.

Furthermore, we can empower patients by educating them about ovarian cancer symptoms and risk factors, information that may promote help-seeking behaviors that aid in early detection. In my case, the continued symptom of abnormal uterine bleeding prompted me to seek further assessment, which led to the discovery of ovarian cancer. Had I not been an educated and empowered patient, I would be telling a completely different story today—most likely one that would include advanced staging. Partner with your patient to discuss available diagnostic testing options and schedule follow-up appointments to monitor presenting complaints.

We also need to partner with our oncology colleagues and researchers. A diagnostic test result suggestive of malignancy warrants referral to a gynecologic oncologist, because treatment by specialists in high-volume hospitals is associated with improved ovarian cancer outcomes.6 We should also advocate for continued research toward an effective population screening protocol for this deadly disease.

Finally, and perhaps most radically, I encourage you not to take a watch-and-wait approach in these situations. Ultrasound is inexpensive, carries minimal risk, and has high sensitivity and specificity for detecting and managing adnexal abnormalities.7 In my opinion, ordering TVU in this clinical situation is a proactive, prudent, and reasonable alternative to watching and waiting, and it may lead to detection at an early stage rather than after the disease has advanced.

I hope that sharing my personal experience with ovarian cancer will compel health care providers to consider this disease as a differential diagnosis and perform appropriate testing when average-risk patients present with nonspecific symptoms. Ultimately, our collective goal should be to increase the survival rate and reduce the suffering associated with ovarian cancer.

1. National Cancer Institute. Cancer Stat Facts: Ovarian Cancer. https://seer.cancer.gov/statfacts/html/ovary.html. Accessed December 3, 2019.

2. American Cancer Society. Key Statistics for Ovarian Cancer. Revised January 8, 2019. www.cancer.org/cancer/ovarian-cancer/about/key-statistics.html. Accessed December 3, 2019.

3. American Cancer Society. Cancer Facts & Figures 2019. www.cancer.org/content/dam/cancer-org/research/cancer-facts-and-statistics/annual-cancer-facts-and-figures/2019/cancer-facts-and-figures-2019.pdf. Accessed December 4, 2019.

4. Grossman DC, Curry SJ, Owens DK, et al. Screening for ovarian cancer: US Preventive Services Task Force Recommendation Statement. JAMA. 2018;319(6):588-594.

5. Smits S, Boivin J, Menon U, Brain K. Influences on anticipated time to ovarian cancer symptom presentation in women at increased risk compared to population risk of ovarian cancer. BMC Cancer. 2017;17(814):1-11.

6. Pavlik EJ. Ten important considerations for ovarian cancer screening. Diagnostics. 2017;7(22):1-11.

7. Ormsby EL, Pavlik EJ, McGahan JP. Ultrasound monitoring of extant adnexal masses in the era of type 1 and type 2 ovarian cancers: lessons learned from ovarian cancer screening trials. Diagnostics. 2017;7(25):1-19.

New evidence further supports starting CRC screening at age 45

SAN ANTONIO – The American Cancer Society’s 2018 qualified recommendation to lower the starting age for colorectal cancer screening from 50 to 45 years in average-risk individuals has picked up new support from a New Hampshire Colonoscopy Registry analysis.

Data from the population-based statewide colonoscopy registry showed that the prevalence of both advanced adenomas and clinically significant serrated polyps was similar for average-risk New Hampshirites aged 45-49 years and for those aged 50-54, Lynn F. Butterly, MD, reported at the annual meeting of the American College of Gastroenterology.

“The clinical implication is that our data support the recommendation to begin average-risk colorectal cancer screening at age 45,” declared Dr. Butterly, a gastroenterologist at Dartmouth-Hitchcock Medical Center in Lebanon, N.H.

The American Cancer Society recommendation to lower the initial screening age was designed to address a disturbing national trend: the climbing incidence of colorectal cancer in young adults. Indeed, incidence increased by 55% among 20- to 49-year-olds during 1995-2016, even as it fell by 38% in individuals age 50 years and older. The 2018 recommendation was billed as "qualified" because it was based on predictive modeling and National Cancer Institute Surveillance, Epidemiology, and End Results data, which have been criticized as subject to potential bias. Several studies conducted in Korea and other Asian countries have reported a lower colorectal cancer risk in younger adults than in those age 50 or older, but the applicability of such data to the U.S. population has been questioned.

For Dr. Butterly and coinvestigators, the research imperative was clear: “We need to generate U.S. outcomes data for average-risk individuals age 45-49, versus those over age 50, for whom colorectal cancer screening is already strongly recommended.”

Toward that end, the investigators turned to the New Hampshire Colonoscopy Registry, which contains detailed data on 200,000 colonoscopies, with some 400 variables recorded per patient. To focus on an average-risk population younger than 50, they restricted the analysis to patients undergoing their first colonoscopy for evaluation of low-risk conditions such as abdominal pain or constipation, and they excluded those with GI bleeding, iron-deficiency anemia, abnormal imaging, or a family history of colorectal cancer.

The final study population included 42,600 New Hampshire residents undergoing their first colonoscopy. The key outcomes were the prevalence of advanced adenomas (adenomas larger than 1 cm or with high-grade dysplasia or villous elements) and the prevalence of clinically significant serrated polyps (serrated polyps larger than 1 cm, or larger than 5 mm if proximally located, as well as traditional serrated adenomas and serrated polyps with sessile features).

The prevalence of advanced adenomas in 1,870 average-risk patients aged 45-49 years was 3.7% and nearly identical at 3.6% in 22,160 individuals undergoing screening colonoscopy at age 50-54. The rate of clinically significant serrated polyps was 5.9% in the 45- to 49-year-olds, closely similar to the 6.1% rate in patients age 50-54.

Of note, the prevalence of advanced adenomas was just 1.1% in individuals younger than age 40 years, jumping to 3.0% among 40- to 44-year-olds, 5.1% in those age 55-59, and 6.9% at age 60 or older. Clinically significant serrated polyps followed a similar pattern, with rates of 3.0% before age 40, 5.1% in 40- to 44-year-olds, 6.6% in 55- to 59-year-olds, and 6.0% in those age 60 or older.

In a multivariate logistic regression analysis adjusted for sex, body mass index, smoking, and other potential confounders, 45- to 49-year-olds had a 243% increased risk of advanced adenomas on colonoscopy compared with those younger than 40 years, while 50- to 54-year-olds had a virtually identical 244% increase.

Dr. Butterly noted that there are now 15,000 cases of colorectal cancer annually among individuals younger than 50 in the United States, with 3,600 deaths.

“Prevention of colorectal cancer in young, productive individuals is an essential clinical imperative that must be addressed,” she concluded.

She reported having no financial conflicts regarding her study.

SAN ANTONIO – The American Cancer Society’s 2018 qualified recommendation to lower the starting age for colorectal cancer screening from 50 to 45 years in average-risk individuals has picked up new support from a New Hampshire Colonoscopy Registry analysis.

Data from the population-based statewide colonoscopy registry demonstrated that the prevalence of both advanced adenomas and clinically significant serrated polyps was closely similar for average-risk New Hampshirites age 45-49 years and for those age 50-54, Lynn F. Butterly, MD, reported at the annual meeting of the American College of Gastroenterology.

“The clinical implication is that our data support the recommendation to begin average-risk colorectal cancer screening at age 45,” declared Dr. Butterly, a gastroenterologist at Dartmouth-Hitchcock Medical Center in Lebanon, N.H.

The American Cancer Society recommendation to lower the initial screening age was designed to address a disturbing national trend: the climbing incidence of colorectal cancer in young adults. Indeed, the incidence increased by 55% among 20- to 49-year-olds during 1995-2016, even while falling by 38% in individuals age 50 years and older. The 2018 recommendation was billed as “qualified” because it was based upon predictive modeling and National Cancer Institute Surveillance, Epidemiology, and End Results data which have been criticized as subject to potential bias. Several studies conducted in Korea and other Asian countries have reported a lower colorectal cancer risk in the younger adult population than in those age 50 or older, but questions have been raised about the applicability of such data to the U.S. population.

For Dr. Butterly and coinvestigators, the research imperative was clear: “We need to generate U.S. outcomes data for average-risk individuals age 45-49, versus those over age 50, for whom colorectal cancer screening is already strongly recommended.”

Toward that end, the investigators turned to the New Hampshire Colonoscopy Registry, which contains detailed data on 200,000 colonoscopies, with some 400 variables recorded per patient. To zero in on an average-risk population below age 50, they restricted the analysis to patients undergoing their first colonoscopy for evaluation of low-risk conditions including abdominal pain or constipation while excluding those with GI bleeding, iron-deficiency anemia, abnormal imaging, or a family history of colorectal cancer.

The final study population included 42,600 New Hampshire residents who underwent their first colonoscopy. The key outcomes were the prevalence of advanced adenomas, defined as adenomas more than 1 cm in size, or with high-grade dysplasia or villous elements, and the prevalence of clinically significant serrated polyps larger than 1 cm, or larger than 5 mm if proximally located, as well as traditional serrated adenomas and those with sessile features.

The prevalence of advanced adenomas in 1,870 average-risk patients aged 45-49 years was 3.7% and nearly identical at 3.6% in 22,160 individuals undergoing screening colonoscopy at age 50-54. The rate of clinically significant serrated polyps was 5.9% in the 45- to 49-year-olds, closely similar to the 6.1% rate in patients age 50-54.

Of note, the prevalence of advanced adenomas was just 1.1% in individuals younger than age 40 years, jumping to 3.0% among 40- to 44-year-olds, 5.1% in those age 55-59, and 6.9% at age 60 or more. Clinically significant serrated polyps followed a similar pattern, with rates of 3.0% before age 40, 5.1% in 40- to 44-year-olds, 6.6% in 55- to 59-year-olds, and 6.0% in those who were older.

In a multivariate logistic regression analysis adjusted for sex, body mass index, smoking, and other potential confounders, 45- to 49-year-olds were at a 243% increased risk of finding advanced adenomas on colonoscopy, compared with those less than 40 years old, while the 50- to 54-year-olds had a virtually identical 244% increased risk.

Dr. Butterly noted that there are now 15,000 cases of colorectal cancer occurring annually in individuals under age 50 in the United States, with 3,600 deaths.

“Prevention of colorectal cancer in young, productive individuals is an essential clinical imperative that must be addressed,” she concluded.

She reported having no financial conflicts regarding her study.

REPORTING FROM ACG 2019

High infantile spasm risk should contraindicate sodium channel blocker antiepileptics

BALTIMORE – “This is scary and warrants caution,” said senior investigator Shaun Hussain, MD, a pediatric neurologist at Mattel Children’s Hospital at UCLA. Because of the findings, “we are avoiding the use of voltage-gated sodium channel blockade in any child at risk for infantile spasms. More broadly, we are avoiding [them] in any infant if there is a good alternative medication, of which there are many in most cases.”

There have been a few previous case reports linking voltage-gated sodium channel blockers (SCBs) – which include oxcarbazepine, carbamazepine, lacosamide, and phenytoin – to infantile spasms, but these drugs are still commonly used for infant seizures. Because there was some disagreement at UCLA about whether the link was real, Dr. Hussain and his team reviewed the university’s experience. They matched 50 children with nonsyndromic epilepsy who subsequently developed video-EEG–confirmed infantile spasms (cases) to 50 children who also had nonsyndromic epilepsy but did not develop spasms (controls) by follow-up duration, age at epilepsy onset, and date of epilepsy onset.

The team then reviewed the children’s drug exposures; cases and controls were about equally likely to have been treated with any specific antiepileptic, including SCBs. However, infantile spasms were substantially more likely with SCB exposure in children with spasm risk factors, which include focal cortical dysplasia, Aicardi syndrome, and other conditions (HR, 7.0; 95% CI, 2.5-19.8; P less than .001). Spasms were also more likely among SCB-treated children at low risk, although that trend was not statistically significant.

In the end, “we wonder how many cases of infantile spasms could [have been] prevented entirely if we had avoided sodium channel blockade,” Dr. Hussain said at the annual meeting of the American Epilepsy Society.

With so many other seizure options available – levetiracetam, topiramate, and phenobarbital, to name just a few – maybe it would be best “to stay away from” SCBs entirely in “infants with any form of epilepsy,” said lead investigator Jaeden Heesch, an undergraduate researcher who worked with Dr. Hussain.

It is unclear why SCBs increase infantile spasm risk; perhaps nonselective voltage-gated sodium channel blockade interferes with proper neuronal function in susceptible children, similar to the effects of sodium voltage-gated channel alpha subunit 1 (SCN1A) mutations in Dravet syndrome, Dr. Hussain said. Perhaps the findings will inspire drug development. “If nonselective sodium channel blockade is bad, perhaps selective modulation of voltage-gated sodium currents [could be] beneficial or protective,” he said.

Epilepsy onset in the study occurred at around 2 months of age. Children who went on to develop infantile spasms had an average of almost two seizures per day, versus fewer than one among controls, and were taking an average of two antiepileptics, versus about 1.5 among controls. The differences were not statistically significant.

The study looked at SCB exposure overall, but it’s possible that infantile spasm risk differs among the various class members.

The work was funded by the Elsie and Isaac Fogelman Endowment, the Hughes Family Foundation, and the UCLA Children’s Discovery and Innovation Institute. The investigators didn’t have any relevant disclosures.

SOURCE: Heesch J et al. AES 2019. Abstract 2.234.

REPORTING FROM AES 2019

Fast-tracking psilocybin for refractory depression makes sense

A significant proportion of patients with major depressive disorder (MDD) either do not respond or have partial responses to the currently available Food and Drug Administration–approved antidepressants.

In controlled clinical trials, symptom remission rates are about 40%-60%, and in community-based treatment settings, about 20%-40%. Not only do these medications fail many patients with MDD, but there are currently no cures for this debilitating illness. As a result, many patients with MDD continue to suffer.

In response to those poor outcomes, researchers and clinicians have developed algorithms for diagnosing treatment-resistant depression (TRD),1 which open the door to a range of treatment approaches.2 Several studies underway across the United States are testing what some might consider medically invasive procedures, such as electroconvulsive therapy (ECT), deep brain stimulation (DBS), and vagus nerve stimulation (VNS). ECT is often considered the gold standard for treatment response, but it requires anesthesia, induces a seizure, and needs a willing patient and clinician. DBS has been used more widely to treat neurological movement disorders. A pioneering study of neurosurgical treatment for TRD, reported recently in the American Journal of Psychiatry, found that DBS of a brain region called the subcallosal cingulate produces clear and apparently sustained antidepressant effects.3 VNS4 remains an experimental treatment for MDD. Transcranial magnetic stimulation (TMS) is safe, noninvasive, and approved by the FDA for depression, but responses appear similar to those with standard antidepressants.

Given those outcomes, it is not surprising that ketamine was fast-tracked in 2016. Enthusiasm about ketamine’s effect on MDD and TRD has grown as more research findings have reached the public. Although it is unknown how ketamine acts on neural networks, a single intravenous dose of ketamine (0.5 mg/kg) in patients with TRD can improve depressive symptoms within a few hours, and those effects were sustained at 24 hours in 65%-70% of patients. By contrast, conventional antidepressants take many weeks to show effects. The ketamine findings also offered hope to clinicians and patients trying to manage suicidal thoughts and plans. Esketamine, the S-enantiomer of ketamine, was quickly approved by the FDA as a nasal spray.

Now, in another encouraging development, the FDA has granted the Usona Institute Breakthrough Therapy designation for psilocybin for the treatment of MDD. The medical benefits of psilocybin, the psychoactive compound in “magic mushrooms,” have a long empirical history in our literature. Most recently, psilocybin was featured on “60 Minutes,”5 and in his book “How to Change Your Mind,”6 Michael Pollan details how psychedelic drugs were used to investigate and treat psychiatric disorders until the 1960s, when street use and unsupervised administration led to restrictions on their research and clinical use.

Studied in specific, protocol-driven trials, psychedelics might become critical medications for a wide range of psychiatric disorders, such as depression, PTSD, anxiety, and addictions. Exciting findings are coming from Roland R. Griffiths, PhD, and his team at Johns Hopkins University’s Center for Psychedelic and Consciousness Research. In a recent study8 of patients with cancer who had depression and anxiety, carefully administered, supervised high doses of psilocybin decreased depression and anxiety and improved quality of life and attitudes about life meaning. Those improvements in attitudes, behavior, and responses were sustained in 80% of the sample 6 months after treatment.

Dr. Griffiths’ center is collaborating with Usona, and this collaboration should produce specific guidelines for dosing, safety, and protection against abuse and diversion,9 as studies and FDA trials of ketamine have done.10 It is very encouraging that psychedelic drugs are receiving fast-track designations; this development reflects a shift in the risk-benefit considerations taking place in our society. Changing attitudes about depression and other psychiatric diseases are encouraging new approaches and new treatments. Psychiatric suffering and pain are being prioritized in research and recognized by the general public as devastating. Rigorous randomized, double-blind, placebo-controlled studies will define how valuable these medications might be, what doses and durations are safe, and for whom they might prove more effective than existing treatments.

The process will take some time. It is worth remembering that, although research has been promising,11 the evidence for psilocybin remains limited in the number of patients studied, research design, and outcomes.12 The FDA fast-track makes sense, and the agency should continue supporting these efforts for psychedelics. In fact, we think the FDA also should support the promising trials of nitrous oxide (laughing gas)13 and other safe and novel approaches to treating refractory depression. While we wait for personalized psychiatric medicines to be developed and validated through the long process of FDA approval, we will at least have a larger suite of treatment options to match to patients, and new algorithms for treating MDD,* TRD, and other disorders are just around the corner.

Dr. Patterson Silver Wolf is an associate professor at Washington University in St. Louis’s Brown School of Social Work. He is a training faculty member for two National Institutes of Health–funded (T32) training programs and serves as the director of the Community Academic Partnership on Addiction (CAPA). He is chief research officer at the new CAPA Clinic, a teaching addiction treatment facility that is incorporating and testing various performance-based practice technology tools to respond to the opioid crisis and improve addiction treatment outcomes. Dr. Gold is professor of psychiatry (adjunct) at Washington University, St. Louis. He is the 17th Distinguished Alumni Professor at the University of Florida, Gainesville. For more than 40 years, Dr. Gold has worked on developing models for understanding the effects of opioids, tobacco, cocaine, and other drugs, as well as food, on the brain and behavior. He has written several books and published more than 1,000 peer-reviewed scientific articles, texts, and practice guidelines.

References

1. Sackeim HA et al. J Psychiatr Res. 2019 Jun;113:125-36.

2. Conway CR et al. J Clin Psychiatry. 2015 Nov;76(11):1569-70.

3. Crowell AL et al. Am J Psychiatry. 2019 Oct 4. doi: 10.1176/appi.ajp.2019.18121427.

4. Kumar A et al. Neuropsychiatr Dis Treat. 2019 Feb 13;15:457-68.

5. Psilocybin sessions: Psychedelics could help people with addiction and anxiety. “60 Minutes” CBS News. 2019 Oct 13.

6. Pollan M. How to Change Your Mind: What the New Science of Psychedelics Teaches Us About Consciousness, Dying, Addiction, Depression, and Transcendence (Penguin Random House, 2018).

7. Nutt D. Dialogues Clin Neurosci. 2019;21(2):139-47.

8. Griffiths RR et al. J Psychopharmacol. 2016 Dec;30(12):1181-97.

9. Johnson MW et al. Neuropharmacology. 2018 Nov;142:143-66.

10. Schwenk ES et al. Reg Anesth Pain Med. 2018 Jul;43(5):456-66.

11. Johnson MW et al. Neurotherapeutics. 2017 Jul;14(3):734-40.

12. Muttoni S et al. J Affect Disord. 2019 Nov 1;258:11-24.

13. Nagele P et al. J Clin Psychopharmacol. 2018 Apr;38(2):144-8.

*Correction, 1/9/2020: An earlier version of this story misidentified the intended disease state.

A significant proportion of patients with major depressive disorder (MDD) either do not respond or have partial responses to the currently available Food and Drug Administration–approved antidepressants.

In controlled clinical trials, there is about a 40%-60% symptom remission rate with a 20%-40% remission rate in community-based treatment settings. Not only do those medications lack efficacy in treating MDD, but there are currently no cures for this debilitating illness. As a result, many patients with MDD continue to suffer.

In response to those poor outcomes, researchers and clinicians have developed algorithms aimed at diagnosing the condition of treatment-resistant depression (TRD),1 which enable opportunities for various treatment methods.2 Several studies underway across the United States are testing what some might consider medically invasive procedures, such as electroconvulsive therapy (ECT), deep brain stimulation (DBS), and vagus nerve stimulation (VNS). ECT often is considered the gold standard of treatment response, but it requires anesthesia, induces a convulsion, and needs a willing patient and clinician. DBS has been used more widely in neurological treatment of movement disorders. Pioneering neurosurgical treatment for TRD reported recently in the American Journal of Psychiatry found that DBS of an area in the brain called the subcallosal cingulate produces clear and apparently sustained antidepressant effects.3 VNS4 remains an experimental treatment for MDD. TMS is safe, noninvasive, and approved by the FDA for depression, but responses appear similar to those with usual antidepressants.

It is not surprising, given those outcomes, that ketamine was fast-tracked in 2016. The enthusiasm related to ketamine’s effect on MDD and TRD has grown over time as more research findings reach the public. While it is unknown how ketamine affects the biological neural network, a single intravenous dose of ketamine (0.5 mg/kg) in patients diagnosed with TRD can lead to improved depression symptoms outcomes within a few hours – and those effects were sustained in 65%-70% of patients at 24 hours. Antidepressants take many weeks to show effects. Ketamine’s exciting findings also offered hope to clinicians and patients trying to manage suicidal thoughts and plans. Ketamine was quickly approved by the FDA as a nasal spray medication.

Now, in another encouraging development, the FDA has granted the Usona Institute Breakthrough Therapy designation for psilocybin for the treatment of MDD. The medical benefits of psilocybin, or “magic mushrooms,” has a long empirical history in our literature. Most recently, psilocybin was featured on “60 Minutes,”5 and in his book, “How to Change Your Mind,”6Michael Pollan details how psychedelic drugs where used to investigate and treat psychiatric disorders until the 1960s, when street use and unsupervised administration led to restrictions on their research and clinical use.

With protocol-driven specific trials, they might become critical medications for a wide range of psychiatric disorders, such as depression, PTSD, anxiety, and addictions. Exciting findings are coming from Roland R. Griffiths, PhD, and his team at Johns Hopkins University’s Center for Psychedelic and Consciousness Research. In a recent study8 with cancer patients suffering from depression and anxiety, carefully administered, specific and supervised high doses of psilocybin produced decreases in depression and anxiety, and increases in quality of life and life meaning attitudes. Those improved attitudes, behavior, and responses were sustained by 80% of the sample 6 months post treatment.

Dr. Griffiths’ center is collaborating with Usona, and this collaboration should result in specific guidelines for dose, safety, and protection against abuse and diversion,9 as the study and FDA trials for ketamine have as well.10 It is very encouraging that psychedelic drugs are receiving fast-track designations, and this development reflects a shift in the risk-benefit considerations taking place in our society. Changing attitudes about depression and other psychiatric diseases are encouraging new approaches and new treatments. Psychiatric suffering and pain are being prioritized in research and appreciated by the general public as devastating. Serious, random assignment placebo-controlled and double- blind research studies will define just how valuable these medications might be, what is the safe dose and duration, and for whom they might prove more effective than existing treatments.

The process will take some time. And it is worth remembering that, although research has been promising,11 the number of patients studied, research design, and outcomes are not yet proven for psilosybin.12 The FDA fast-track makes sense, and the agency should continue supporting these efforts for psychedelics. In fact, we think the FDA also should support the promising trials of nitrous oxide13 (laughing gas), and other safe and novel approaches to successfully treat refractory depression. While we wait for personalized psychiatric medicines to be developed and validated through the long process of FDA approval, we will at least have a larger suite of treatment options to match patients with, along with some new algorithms that treat MDD,* TRD, and other disorders just are around the corner.

Dr. Patterson Silver Wolf is an associate professor at Washington University in St. Louis’s Brown School of Social Work. He is a training faculty member for two National Institutes of Health–funded (T32) training programs and serves as the director of the Community Academic Partnership on Addiction (CAPA). He’s chief research officer at the new CAPA Clinic, a teaching addiction treatment facility that is incorporating and testing various performance-based practice technology tools to respond to the opioid crisis and improve addiction treatment outcomes. Dr. Gold is professor of psychiatry (adjunct) at Washington University, St. Louis. He is the 17th Distinguished Alumni Professor at the University of Florida, Gainesville. For more than 40 years, Dr. Gold has worked on developing models for understanding the effects of opioid, tobacco, cocaine, and other drugs, as well as food, on the brain and behavior. He has written several books and published more than 1,000 peer-reviewed scientific articles, texts, and practice guidelines.

References

1. Sackeim HA et al. J Psychiatr Res. 2019 Jun;113:125-36.

2. Conway CR et al. J Clin Psychiatry. 25 Nov;76(11):1569-70.

3. Crowell AL et al. Am J Psychiatry. 2019 Oct 4. doi: 10.1176.appi.ajp.2019.18121427.

4. Kumar A et al. Neuropsychiatr Dis Treat. 2019 Feb 13;15:457-68.

5. Psilocybin sessions: Psychedelics could help people with addiction and anxiety. “60 Minutes” CBS News. 2019 Oct 13.

6. Pollan M. How to Change Your Mind: What the New Science of Psychedelics Teaches Us About Consciousness, Dying, Addiction, Depression, and Transcendence (Penguin Random House, 2018).

7. Nutt D. Dialogues Clin Neurosci. 2019;21(2):139-47.

8. Griffiths RR et al. J Psychopharmacol 2016 Dec;30(12):1181-97.

9. Johnson MW et al. Neuropsychopharmacology. 2018 Nov;142:143-66.

10. Schwenk ES et al. Reg Anesth Pain Med. 2018 Jul;43(5):456-66.

11. Johnson MW et al. Neurotherapeutics. 2017 Jul;14(3):734-40.

12. Mutonni S et al. J Affect Disord. 2019 Nov.1;258:11-24.

13. Nagele P et al. J Clin Psychopharmacol. 2018 Apr;38(2):144-8.

*Correction, 1/9/2020: An earlier version of this story misidentified the intended disease state.

A significant proportion of patients with major depressive disorder (MDD) either do not respond or have partial responses to the currently available Food and Drug Administration–approved antidepressants.

In controlled clinical trials, there is about a 40%-60% symptom remission rate with a 20%-40% remission rate in community-based treatment settings. Not only do those medications lack efficacy in treating MDD, but there are currently no cures for this debilitating illness. As a result, many patients with MDD continue to suffer.

In response to those poor outcomes, researchers and clinicians have developed algorithms aimed at diagnosing the condition of treatment-resistant depression (TRD),1 which enable opportunities for various treatment methods.2 Several studies underway across the United States are testing what some might consider medically invasive procedures, such as electroconvulsive therapy (ECT), deep brain stimulation (DBS), and vagus nerve stimulation (VNS). ECT often is considered the gold standard of treatment response, but it requires anesthesia, induces a convulsion, and needs a willing patient and clinician. DBS has been used more widely in neurological treatment of movement disorders. Pioneering neurosurgical treatment for TRD reported recently in the American Journal of Psychiatry found that DBS of an area in the brain called the subcallosal cingulate produces clear and apparently sustained antidepressant effects.3 VNS4 remains an experimental treatment for MDD. TMS is safe, noninvasive, and approved by the FDA for depression, but responses appear similar to those with usual antidepressants.

It is not surprising, given those outcomes, that ketamine was fast-tracked in 2016. The enthusiasm related to ketamine’s effect on MDD and TRD has grown over time as more research findings reach the public. While it is unknown how ketamine affects the biological neural network, a single intravenous dose of ketamine (0.5 mg/kg) in patients diagnosed with TRD can lead to improved depression symptoms outcomes within a few hours – and those effects were sustained in 65%-70% of patients at 24 hours. Antidepressants take many weeks to show effects. Ketamine’s exciting findings also offered hope to clinicians and patients trying to manage suicidal thoughts and plans. Ketamine was quickly approved by the FDA as a nasal spray medication.

Now, in another encouraging development, the FDA has granted the Usona Institute Breakthrough Therapy designation for psilocybin for the treatment of MDD. The medical benefits of psilocybin, the active compound in “magic mushrooms,” have a long empirical history in our literature. Most recently, psilocybin was featured on “60 Minutes,”5 and in his book “How to Change Your Mind,”6 Michael Pollan details how psychedelic drugs were used to investigate and treat psychiatric disorders until the 1960s, when street use and unsupervised administration led to restrictions on their research and clinical use.

With specific, protocol-driven trials, psychedelics might become critical medications for a wide range of psychiatric disorders, such as depression, PTSD, anxiety, and addictions. Exciting findings are coming from Roland R. Griffiths, PhD, and his team at Johns Hopkins University’s Center for Psychedelic and Consciousness Research. In a recent study8 of cancer patients with depression and anxiety, carefully administered, supervised high doses of psilocybin decreased depression and anxiety and improved quality of life and attitudes about life meaning. Those improvements in attitudes, behavior, and responses were sustained in 80% of the sample at 6 months after treatment.

Dr. Griffiths’ center is collaborating with Usona, and this collaboration should produce specific guidelines for dose, safety, and protection against abuse and diversion,9 as studies and FDA trials of ketamine have done.10 It is very encouraging that psychedelic drugs are receiving fast-track designations, and this development reflects a shift in the risk-benefit considerations taking place in our society. Changing attitudes about depression and other psychiatric diseases are encouraging new approaches and new treatments. Psychiatric suffering and pain are being prioritized in research and recognized by the general public as devastating. Rigorous randomized, placebo-controlled, double-blind studies will define just how valuable these medications might be, what doses and durations are safe, and for whom they might prove more effective than existing treatments.

The process will take some time. And it is worth remembering that, although research has been promising,11 psilocybin has not yet been proven effective: the number of patients studied, the research designs, and the outcomes all need further confirmation.12 The FDA fast-tracking makes sense, and the agency should continue supporting these efforts for psychedelics. In fact, we think the FDA also should support the promising trials of nitrous oxide13 (laughing gas) and other safe and novel approaches to treating refractory depression. While we wait for personalized psychiatric medicines to be developed and validated through the long process of FDA approval, we will at least have a larger suite of treatment options to match to patients, and new algorithms for treating MDD,* TRD, and other disorders are just around the corner.

Dr. Patterson Silver Wolf is an associate professor at Washington University in St. Louis’s Brown School of Social Work. He is a training faculty member for two National Institutes of Health–funded (T32) training programs and serves as the director of the Community Academic Partnership on Addiction (CAPA). He’s chief research officer at the new CAPA Clinic, a teaching addiction treatment facility that is incorporating and testing various performance-based practice technology tools to respond to the opioid crisis and improve addiction treatment outcomes. Dr. Gold is professor of psychiatry (adjunct) at Washington University, St. Louis. He is the 17th Distinguished Alumni Professor at the University of Florida, Gainesville. For more than 40 years, Dr. Gold has worked on developing models for understanding the effects of opioid, tobacco, cocaine, and other drugs, as well as food, on the brain and behavior. He has written several books and published more than 1,000 peer-reviewed scientific articles, texts, and practice guidelines.

References

1. Sackeim HA et al. J Psychiatr Res. 2019 Jun;113:125-36.

2. Conway CR et al. J Clin Psychiatry. 25 Nov;76(11):1569-70.

3. Crowell AL et al. Am J Psychiatry. 2019 Oct 4. doi: 10.1176/appi.ajp.2019.18121427.

4. Kumar A et al. Neuropsychiatr Dis Treat. 2019 Feb 13;15:457-68.

5. Psilocybin sessions: Psychedelics could help people with addiction and anxiety. “60 Minutes” CBS News. 2019 Oct 13.

6. Pollan M. How to Change Your Mind: What the New Science of Psychedelics Teaches Us About Consciousness, Dying, Addiction, Depression, and Transcendence (Penguin Random House, 2018).

7. Nutt D. Dialogues Clin Neurosci. 2019;21(2):139-47.

8. Griffiths RR et al. J Psychopharmacol. 2016 Dec;30(12):1181-97.

9. Johnson MW et al. Neuropharmacology. 2018 Nov;142:143-66.

10. Schwenk ES et al. Reg Anesth Pain Med. 2018 Jul;43(5):456-66.

11. Johnson MW et al. Neurotherapeutics. 2017 Jul;14(3):734-40.

12. Muttoni S et al. J Affect Disord. 2019 Nov 1;258:11-24.

13. Nagele P et al. J Clin Psychopharmacol. 2018 Apr;38(2):144-8.

*Correction, 1/9/2020: An earlier version of this story misidentified the intended disease state.

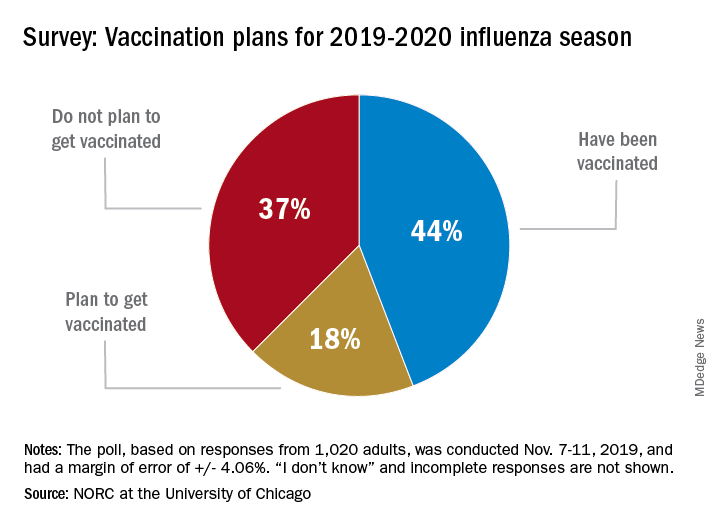

Many Americans planning to avoid flu vaccination

As the 2019-20 flu season got underway, more than half of American adults had not yet been vaccinated, according to a survey from the research organization NORC at the University of Chicago.

Only 44% of the 1,020 adults surveyed said that they had already received the vaccine as of Nov. 7-11, when the poll was conducted. Another the NORC reported. About 1% of those surveyed said they didn’t know or skipped the question.

Age was a strong determinant of vaccination status: 35% of those aged 18-29 years had gotten their flu shot, along with 36% of respondents aged 30-44 years and 34% of those aged 45-59 years, compared with 65% of those aged 60 years and older. Of the respondents with children under age 18 years, 43% said that they were not planning to have the children vaccinated, the NORC said.

Concern about side effects, mentioned by 37% of those who were not planning to get vaccinated, was the most common reason given for avoiding a flu shot, followed by the belief that the vaccine doesn’t work very well (36%) and the belief that they “never get the flu” (26%), the survey results showed.

“Widespread misconceptions exist regarding the safety and efficacy of flu shots. Because of the way the flu spreads in a community, failing to get a vaccination not only puts you at risk but also others for whom the consequences of the flu can be severe. Policymakers should focus on changing erroneous beliefs about immunizing against the flu,” said Caitlin Oppenheimer, who is senior vice president of public health research for the NORC, which has conducted the National Immunization Survey for the Centers for Disease Control and Prevention since 2005.

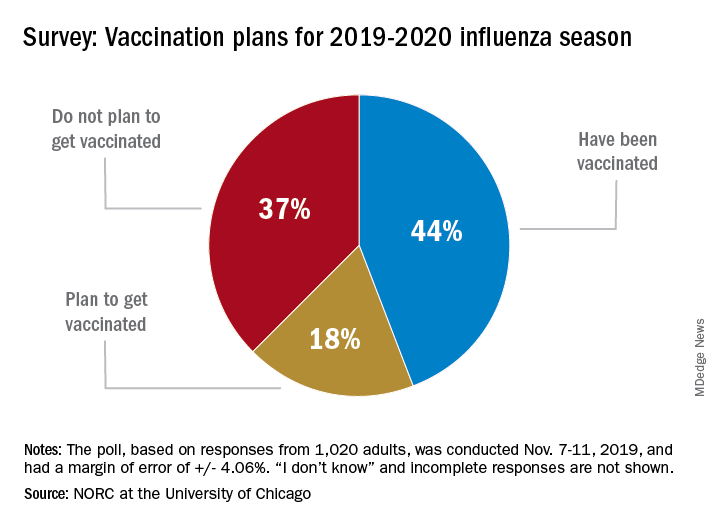

As the 2019-20 flu season got underway, more than half of American adults had not yet been vaccinated, according to a survey from the research organization NORC at the University of Chicago.

Only 44% of the 1,020 adults surveyed said that they had already received the vaccine as of Nov. 7-11, when the poll was conducted. Another the NORC reported. About 1% of those surveyed said they didn’t know or skipped the question.

Age was a strong determinant of vaccination status: 35% of those aged 18-29 years had gotten their flu shot, along with 36% of respondents aged 30-44 years and 34% of those aged 45- 59 years, compared with 65% of those aged 60 years and older. Of the respondents with children under age 18 years, 43% said that they were not planning to have the children vaccinated, the NORC said.

Concern about side effects, mentioned by 37% of those who were not planning to get vaccinated, was the most common reason given to avoid a flu shot, followed by belief that the vaccine doesn’t work very well (36%) and “never get the flu” (26%), the survey results showed.

“Widespread misconceptions exist regarding the safety and efficacy of flu shots. Because of the way the flu spreads in a community, failing to get a vaccination not only puts you at risk but also others for whom the consequences of the flu can be severe. Policymakers should focus on changing erroneous beliefs about immunizing against the flu,” said Caitlin Oppenheimer, who is senior vice president of public health research for the NORC, which has conducted the National Immunization Survey for the Centers for Disease Control and Prevention since 2005.

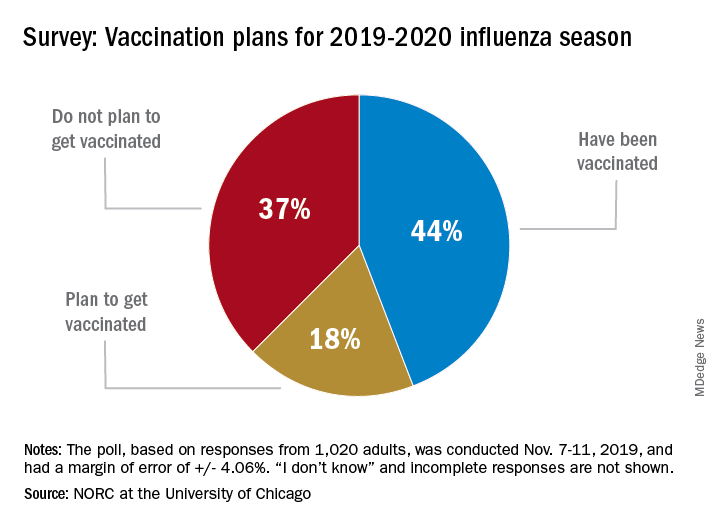

As the 2019-20 flu season got underway, more than half of American adults had not yet been vaccinated, according to a survey from the research organization NORC at the University of Chicago.

Only 44% of the 1,020 adults surveyed said that they had already received the vaccine as of Nov. 7-11, when the poll was conducted. Another the NORC reported. About 1% of those surveyed said they didn’t know or skipped the question.

Age was a strong determinant of vaccination status: 35% of those aged 18-29 years had gotten their flu shot, along with 36% of respondents aged 30-44 years and 34% of those aged 45- 59 years, compared with 65% of those aged 60 years and older. Of the respondents with children under age 18 years, 43% said that they were not planning to have the children vaccinated, the NORC said.

Concern about side effects, mentioned by 37% of those who were not planning to get vaccinated, was the most common reason given to avoid a flu shot, followed by belief that the vaccine doesn’t work very well (36%) and “never get the flu” (26%), the survey results showed.

“Widespread misconceptions exist regarding the safety and efficacy of flu shots. Because of the way the flu spreads in a community, failing to get a vaccination not only puts you at risk but also others for whom the consequences of the flu can be severe. Policymakers should focus on changing erroneous beliefs about immunizing against the flu,” said Caitlin Oppenheimer, who is senior vice president of public health research for the NORC, which has conducted the National Immunization Survey for the Centers for Disease Control and Prevention since 2005.

Health benefits of TAVR over SAVR sustained at 1 year

SAN FRANCISCO – Among patients with severe aortic stenosis at low surgical risk, both transcatheter and surgical aortic valve replacement resulted in substantial health status benefits at 1 year despite most patients having New York Heart Association class I or II symptoms at baseline.

However, when compared with surgical replacement,

The findings come from an analysis of patients enrolled in the randomized PARTNER 3 trial, which showed that transcatheter aortic valve replacement (TAVR) with the SAPIEN 3 valve was superior to surgery in low-risk patients. At 1 year post procedure, the rate of the primary composite endpoint comprising death, stroke, or cardiovascular rehospitalization was 8.5% in the TAVR group and 15.1% with surgical aortic valve replacement (SAVR), for a highly significant 46% relative risk reduction (N Engl J Med 2019 May 2;380:1695-705).

“The PARTNER 3 and Evolut Low Risk trials have demonstrated that transfemoral TAVR is both safe and effective when compared with SAVR in patients with severe aortic stenosis at low surgical risk,” Suzanne J. Baron, MD, MSc, said at the Transcatheter Cardiovascular Therapeutics annual meeting. “While prior studies have demonstrated improved early health status with transfemoral TAVR, compared with SAVR in intermediate and high-risk patients, there is little evidence of any late health status benefit with TAVR.”

To address this gap in knowledge, Dr. Baron, director of interventional cardiology research at Lahey Hospital and Medical Center in Burlington, Mass., and associates performed a prospective study alongside the PARTNER 3 randomized trial to understand the impact of valve replacement strategy on early and late health status in aortic stenosis patients at low surgical risk. She reported results from 449 low-risk patients with severe aortic stenosis who were assigned to transfemoral TAVR using a balloon-expandable valve, and 449 who were assigned to surgery in PARTNER 3. At baseline, the mean age of patients was 73 years, 69% were male, and the average STS (Society of Thoracic Surgeons) Risk Score was 1.9%. Rates of other comorbidities were generally low.

Patients in both groups reported a mild baseline impairment in health status. The mean Kansas City Cardiomyopathy Questionnaire–Overall Summary (KCCQ-OS) score was 70, “which corresponds to only New York Heart Association Class II symptoms,” Dr. Baron said. “The SF-36 [Short Form 36] physical summary score was 44 for both groups, which is approximately half of a standard deviation below the population mean.”

As expected, patients who underwent TAVR showed substantially better health status at 1 month than those who underwent SAVR, based on the KCCQ-OS (mean difference, 16 points; P less than .001). However, in contrast to prior studies, the researchers observed a persistent, although attenuated, benefit of TAVR over SAVR in disease-specific health status at 6 and 12 months (mean difference in KCCQ-OS of 2.6 and 1.8 points, respectively; P less than .04 for both).

Dr. Baron said that a sustained benefit of TAVR over SAVR at 6 months and 1 year was observed on several KCCQ subscales, but a similar benefit was not noted on generic health status measures such as the SF-36 physical summary score. “That’s likely reflective of the fact that, as a disease-specific measure, the KCCQ is much more sensitive in detecting meaningful differences in this population,” she explained. When change in health status was analyzed as an ordinal variable, with death as the worst outcome and large clinical improvement (defined as an increase of 20 points or more in the KCCQ-OS score) as the best outcome, TAVR showed a significant benefit over surgery at all time points (P less than .05).