
Artificial intelligence (AI) has lagged in health care but has considerable potential to improve quality, safety, clinician experience, and access to care. It is being tested in areas like billing, hospital operations, and preventing adverse events (eg, sepsis mortality) with some early success. However, there are still many barriers preventing the widespread use of AI, such as data problems, mismatched rewards, and workplace obstacles. Innovative projects, partnerships, better rewards, and more investment could remove barriers. Implemented reliably and safely, AI can add to what clinicians know, help them work faster, cut costs, and, most importantly, improve patient care.1

AI can potentially bring several clinical benefits, such as reducing the administrative strain on clinicians and granting them more time for direct patient care. It can also improve diagnostic accuracy by analyzing patient data and diagnostic images, providing differential diagnoses, and increasing access to care by providing medical information and essential online services to patients.2

High Reliability Organizations

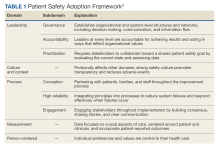

High reliability health care organizations have considerable experience safely launching new programs. For example, the Patient Safety Adoption Framework gives practical tips for smoothly rolling out safety initiatives (Table 1). Developed with input from experts and diverse perspectives, this framework has 5 key areas: leadership, culture and context, process, measurement, and person-centeredness. These areas address adoption problems, guide leaders step-by-step, and focus on leadership buy-in, safety culture, cooperation, and local customization. Checklists and tools provide a systematic path from ideas to action on patient safety.3

Leadership involves establishing organizational commitment behind new safety programs. This visible commitment signals importance and priorities to others. Leaders model desired behaviors and language around safety, allocate resources, remove obstacles, and keep initiatives energized over time through consistent messaging.4 Culture and context recognizes that safety culture differs across units and facilities. Local input tailors programs to fit and examines strengths to build on, like psychological safety. Surveys gauge the existing culture and its need for change. Process details how to plan, design, test, implement, and improve new safety practices and provides a phased roadmap from idea to results. Measurement collects data to drive improvement and show impact. Metrics track progress and allow benchmarking. Person-centeredness puts patients first in safety efforts through participation, education, and transparency.

The Veterans Health Administration piloted a comprehensive high reliability hospital (HRH) model. Over 3 years, it focused on leadership, culture, and process improvement at one hospital. After initiating the model, the pilot hospital improved its safety culture, reported more minor safety issues, and reduced deaths and complications more than other hospitals. The high-reliability approach successfully instilled high reliability principles and improved culture and outcomes. The HRH model is set to be expanded to 18 more US Department of Veterans Affairs (VA) sites for further evaluation across diverse settings.5

Trustworthy AI Framework

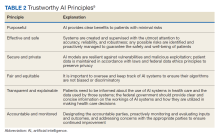

AI systems are growing more powerful and widespread, including in health care. Unfortunately, irresponsible AI can introduce new harm. ChatGPT and other large language models, for example, are known to sometimes present erroneous information in a compelling way. Clinicians and patients who use such programs may act on that information, which could lead to unforeseen negative consequences. Several frameworks on ethical AI have come from governmental groups.6-9 In 2023, the VA National AI Institute suggested a Trustworthy AI Framework based on core principles tailored for federal health care. The framework has 6 key principles: purposeful, effective and safe, secure and private, fair and equitable, transparent and explainable, and accountable and monitored (Table 2).10

First, AI must clearly help veterans while minimizing risks. To ensure purpose, the VA will assess patient and clinician needs and design AI that targets meaningful problems to avoid scope creep or feature bloat. For example, adding new features to AI software after release can clutter and complicate the interface, making it difficult to use. Rigorous testing will confirm that AI meets its intended purpose prior to deployment. Second, AI is designed and checked for effectiveness, safety, and reliability. The VA pledges to monitor AI’s impact to ensure it performs as expected without unintended consequences. Algorithms will be stress tested across representative datasets, and approval processes will screen for safety issues. Third, AI models are secured from vulnerabilities and misuse. Technical controls will prevent unauthorized access or changes to AI systems. Audits will check that internal usage follows policy. Continual patches and upgrades will maintain security. Fourth, the VA manages AI for fairness, avoiding bias. The VA will proactively assess datasets and algorithms for potential biases based on protected attributes like race, gender, or age. Biased outputs will be addressed through techniques such as data augmentation, reweighting, and algorithm tweaks. Fifth, transparency explains AI’s role in care. Documentation will detail an AI system’s data sources, methodology, testing, limitations, and integration with clinical workflows. Clinicians and patients will receive education on interpreting AI outputs. Finally, the VA pledges to closely monitor AI systems to sustain trust. The VA will establish oversight processes to quickly identify any declines in reliability or unfair impacts on subgroups. AI models will be retrained as needed based on incoming data patterns.
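
As an illustration of what monitoring for unfair impacts on subgroups might look like in practice, the following minimal Python sketch compares a model's accuracy across subgroups and flags any subgroup that trails the best-performing one. It is not drawn from the VA framework: the function names, the subgroup labels, the sample records, and the 0.05 gap threshold are all hypothetical, and a real oversight process would likely use larger samples and additional metrics.

```python
from collections import defaultdict


def subgroup_accuracy(records):
    """Return accuracy per subgroup from (group, prediction, label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, label in records:
        total[group] += 1
        if prediction == label:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def flag_gaps(accuracy_by_group, max_gap=0.05):
    """Return subgroups whose accuracy trails the best subgroup by more than max_gap.

    The 0.05 default is an illustrative assumption, not a recommended threshold.
    """
    best = max(accuracy_by_group.values())
    return sorted(
        group for group, acc in accuracy_by_group.items() if best - acc > max_gap
    )


# Hypothetical audit sample: (subgroup, model prediction, observed outcome).
records = [
    ("under_65", 1, 1), ("under_65", 0, 0), ("under_65", 1, 1), ("under_65", 0, 0),
    ("65_and_over", 1, 1), ("65_and_over", 0, 1), ("65_and_over", 1, 0), ("65_and_over", 0, 0),
]

accuracy = subgroup_accuracy(records)
print(accuracy)             # per-subgroup accuracy
print(flag_gaps(accuracy))  # subgroups that may need review
```

In this made-up sample, the model is correct on every under_65 record but on only half of the 65_and_over records, so the second subgroup is flagged for review. Running the same check on a schedule against recent data is one simple way to surface a decline in reliability for a particular subgroup.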

Each Trustworthy AI Framework principle corresponds to principles in existing frameworks. The purpose principle aligns with human-centric AI focused on benefits. Effectiveness and safety link to technical robustness and risk management principles. Security maps to privacy protection principles. Fairness connects to principles of avoiding bias and discrimination. Transparency corresponds with accountable and explainable AI. Monitoring and accountability tie back to governance principles. Overall, the VA framework aims to guide ethical AI based on context. It offers a model for managing risks and building trust in health care AI.

Combining VA principles with high-reliability safety principles can help ensure that AI benefits veterans. The leadership and culture aspects will drive commitment to trustworthy AI practices. Leaders will communicate the importance of responsible AI through words and actions. Culture surveys can assess baseline awareness of AI ethics issues to target education. AI security and fairness will be emphasized as safety-critical. The process aspect will institute policies and procedures to uphold AI principles throughout the project lifecycle. For example, structured testing processes will validate safety. Measurement will collect data on principles like transparency and fairness. Dashboards can track metrics like explainability and biases. A patient-centered approach will incorporate veteran perspectives on AI through participatory design and advisory councils. Veterans can give input on AI explainability and potential biases based on their diverse backgrounds.

Conclusions

Applying these principles jointly can lead to successful AI that improves care while proactively managing risks. Involve leaders to stress the necessity of eliminating biases. Build security into the AI development process. Co-design AI transparency features with end users. Closely monitor the impact of AI across safety, fairness, and other principles. Adhering to both Trustworthy AI and high reliability organization principles will earn veterans’ confidence. Health care organizations like the VA can integrate ethical AI safely via established frameworks. With responsible design and implementation, AI’s potential to enhance care quality, safety, and access can be realized.

Acknowledgments

We would like to acknowledge Joshua Mueller, Theo Tiffney, John Zachary, and Gil Alterovitz for their excellent work creating the VA Trustworthy Principles. This material is the result of work supported by resources and the use of facilities at the James A. Haley Veterans’ Hospital.

1. Sahni NR, Carrus B. Artificial intelligence in U.S. health care delivery. N Engl J Med. 2023;389(4):348-358. doi:10.1056/NEJMra2204673

2. Borkowski AA, Jakey CE, Mastorides SM, et al. Applications of ChatGPT and large language models in medicine and health care: benefits and pitfalls. Fed Pract. 2023;40(6):170-173. doi:10.12788/fp.0386

3. Moyal-Smith R, Margo J, Maloney FL, et al. The patient safety adoption framework: a practical framework to bridge the know-do gap. J Patient Saf. 2023;19(4):243-248. doi:10.1097/PTS.0000000000001118

4. Isaacks DB, Anderson TM, Moore SC, Patterson W, Govindan S. High reliability organization principles improve VA workplace burnout: the Truman THRIVE2 model. Am J Med Qual. 2021;36(6):422-428. doi:10.1097/01.JMQ.0000735516.35323.97

5. Sculli GL, Pendley-Louis R, Neily J, et al. A high-reliability organization framework for health care: a multiyear implementation strategy and associated outcomes. J Patient Saf. 2022;18(1):64-70. doi:10.1097/PTS.0000000000000788

6. National Institute of Standards and Technology. AI risk management framework. Accessed January 2, 2024. https://www.nist.gov/itl/ai-risk-management-framework

7. Executive Office of the President, Office of Science and Technology Policy. Blueprint for an AI Bill of Rights. Accessed January 11, 2024. https://www.whitehouse.gov/ostp/ai-bill-of-rights

8. Executive Office of the President. Executive Order 13960: promoting the use of trustworthy artificial intelligence in the federal government. Fed Regist. 2020;85(236):78939-78943.

9. Biden JR. Executive Order on the safe, secure, and trustworthy development and use of artificial intelligence. Published October 30, 2023. Accessed January 11, 2024. https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/

10. US Department of Veterans Affairs. Trustworthy AI. Accessed January 11, 2024. https://department.va.gov/ai/trustworthy/
