
The electronic health record (EHR) has revolutionized the practice of medicine. As part of the economic stimulus package in 2009, Congress enacted the Health Information Technology for Economic and Clinical Health Act, which included incentives for physicians and hospitals to adopt an EHR by 2015. In the setting of more limited duty hours and demands for increased clinical productivity, EHRs have functions that may improve the quality and efficiency of clinical documentation.[1, 2, 3, 4, 5]

The process of note writing and the use of notes for clinical care have changed substantially with EHR implementation. Use of efficiency tools (ie, copy forward functions and autopopulation of data) may increase the speed of documentation.[5] Notes in an EHR are more legible and accessible and may be able to organize data to improve clinical care.[6]

Yet, many have commented on the negative consequences of documentation in an EHR. In a New England Journal of Medicine Perspective article, Drs. Hartzband and Groopman wrote, "We have observed the electronic medical record become a powerful vehicle for perpetuating erroneous information, leading to diagnostic errors that gain momentum when passed on electronically."[7] As a result, the copy forward and autopopulation functions have come under significant scrutiny.[8, 9, 10] A survey conducted at 2 academic institutions found that 71% of residents and attendings believed that the copy forward function led to inconsistencies and outdated information.[11] Autopopulation has been criticized for creating lengthy notes full of trivial or redundant data, a phenomenon termed "note bloat." Bloated notes may be less effective as a communication tool.[12] Additionally, the process of composing a note often stimulates critical thinking and may lead to changes in care. Copying forward a previous note and autopopulating data bypass that process and, in effect, may suppress critical thinking.[13]

Previous studies have raised numerous concerns regarding copy forward and autopopulation functionality in the EHR. Many have described the duplication of outdated data and the possibility of introducing and perpetuating errors.[14, 15, 16] The Veterans Affairs (VA) Puget Sound Health system evaluated 6322 copy events and found that 1 in 10 electronic patient charts contained an instance of high‐risk copying.[17] In a survey of faculty and residents at a single academic medical center, the majority of users of copy and paste functionality recognized the hazards; they responded that their notes may contain more outdated (66%) and more inconsistent information (69%). Yet, most felt that copy forwarding improved the documentation of the entire hospital course (87%) and overall physician documentation (69%), and that it should definitely be continued (91%).[11] Others have criticized the impact of copy forward on the expression of clinical reasoning.[7, 9, 18]

Previous discussions of overall note quality following EHR implementation have been limited to perspectives or opinion pieces by individual attending providers.[18] To better inform discussion of the EHR's impact on note quality, we conducted a survey across 4 academic institutions to analyze the perceptions of both housestaff and attendings regarding the quality of notes since the implementation of an EHR.

METHODS

Participants

Surveys were administered via email to interns, residents (second‐, third‐, or fourth‐year residents, hereafter referred to as residents), and attendings at 4 academic hospitals that use the Epic EHR (Epic Corp., Madison, WI). Each of the 4 institutions adopted the Epic EHR, with mandatory faculty and resident training, between 1 and 5 years prior to the survey. Three of the institutions previously used systems with electronic notes, whereas the fourth previously used a system with handwritten notes. The study participation emails included a link to an online survey in REDCap.[19] We included interns and residents from the following types of residency programs: internal medicine categorical or primary care, medicine‐pediatrics, or medicine‐psychiatry. For housestaff (interns and residents combined), we excluded preliminary or transitional year interns and any interns or residents from other specialties who rotate on the medicine service. Eligible attendings included hospitalists, general internal medicine attendings, chief residents, and subspecialty medicine attendings who had worked any amount of time on the inpatient medicine teaching service in the prior 12 months.

Design

We developed 3 unique surveys, one each for interns, residents, and attendings, to assess their perceptions of inpatient progress notes (see Supporting Information, Appendix, in the online version of this article). The surveys incorporated questions from 2 previously published sources: the 9‐item Physician Documentation Quality Instrument (PDQI‐9) (see online Appendix), a validated note‐scoring tool; and the Accreditation Council for Graduate Medical Education note‐writing competency checklists.[20] Additionally, faculty at the participating institutions developed questions to address practices and attitudes toward autopopulation, copy forward, and the purposes of a progress note. Responses were recorded on a 5‐point Likert scale. The intern and resident surveys asked respondents to evaluate their own progress notes and those of their peers, whereas the attending surveys asked for an assessment of housestaff notes.

The survey remained open for 55 days, and participants were sent reminder emails. The study received a waiver from the institutional review board at all 4 institutions.

Data Analysis

Study data were collected and managed using REDCap electronic data capture tools hosted at the University of California, San Francisco (UCSF).[19] The survey data were analyzed and the figures were created using Microsoft Excel 2008 (Microsoft Corp., Redmond, WA). Mean values were calculated for each survey question, and differences in means between groups were assessed using 2‐sample t tests. P values <0.05 were considered statistically significant.
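As a rough illustration of the group comparison described above, the sketch below computes a 2‐sample t statistic for two groups of 5‐point Likert ratings. It assumes the equal‐variance (pooled) form of the test, because the text does not state which variant was run in Excel. It also approximates the P value with the standard normal distribution, which is reasonable only when the degrees of freedom are large, as they are for groups of roughly 100 to 150 respondents. The function name and the ratings are hypothetical and are not study data.

```python
import math
import statistics


def two_sample_t_test(a, b):
    """Student's 2-sample t test assuming equal variances (pooled variance).

    Returns (t statistic, degrees of freedom, approximate two-sided P value).
    The P value uses a normal approximation to the t distribution, which is
    only reasonable when the degrees of freedom are large (roughly > 100).
    """
    n_a, n_b = len(a), len(b)
    mean_a, mean_b = statistics.mean(a), statistics.mean(b)
    # statistics.variance uses the sample (n - 1) denominator
    var_a, var_b = statistics.variance(a), statistics.variance(b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df
    std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean_a - mean_b) / std_err
    # Two-sided tail area of the standard normal: P(|Z| > |t|) = erfc(|t| / sqrt(2))
    p_approx = math.erfc(abs(t) / math.sqrt(2))
    return t, df, p_approx


# Hypothetical 1-5 ratings for illustration only; not study data
housestaff = [4, 4, 3, 5, 4, 3] * 25
attendings = [3, 2, 3, 4, 2, 3] * 25
t, df, p = two_sample_t_test(housestaff, attendings)
print(f"t = {t:.2f}, df = {df}, approximate P = {p:.1e}")
```

For an exact P value, the t statistic would instead be referred to the t distribution with the same degrees of freedom; the normal approximation slightly understates P for small samples because the t distribution has heavier tails.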

RESULTS

Demographics

We received 99 completed surveys from interns, 155 completed surveys from residents, and 153 completed surveys from attendings across the 4 institutions. The overall response rate for interns was 68%, ranging from 59% at the University of California, San Diego (UCSD) to 74% at the University of Iowa. The overall response rate for residents was 49%, ranging from 38% at UCSF to 66% at the University of California, Los Angeles. The overall response rate for attendings was 70%, ranging from 53% at UCSD to 74% at UCSF.

A total of 78% of interns and 72% of residents had used an EHR at a prior institution. Of the residents, 90 were second‐year residents, 64 were third‐year residents, and 2 were fourth‐year residents. A total of 76% of attendings self‐identified as hospitalists.

Overall Assessment of Note Quality

Participants were asked to rate the quality of progress notes on a 5‐point scale (poor, fair, good, very good, excellent). Half of interns and residents rated their own progress notes as very good or excellent. A total of 44% of interns and 24% of residents rated their peers' notes as very good or excellent, whereas only 15% of attending physicians rated housestaff notes as very good or excellent.
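Each percentage above is a "top‐2‐box" proportion: the share of respondents choosing either of the 2 highest categories on the 5‐point scale. A minimal sketch, assuming responses are stored as the category labels listed in the text; the function name and sample responses are hypothetical.

```python
from collections import Counter

# Ordered 5-point quality scale used for the overall note-quality question
SCALE = ("poor", "fair", "good", "very good", "excellent")


def top_two_box(responses):
    """Return the proportion of responses rated 'very good' or 'excellent'."""
    if not responses:
        raise ValueError("no responses")
    unexpected = set(responses) - set(SCALE)
    if unexpected:
        raise ValueError(f"unexpected categories: {sorted(unexpected)}")
    counts = Counter(responses)
    return (counts["very good"] + counts["excellent"]) / len(responses)


# Hypothetical responses for illustration only; not study data
sample = ["good"] * 10 + ["very good"] * 7 + ["excellent"] * 3
print(f"{top_two_box(sample):.0%} rated very good or excellent")
```

Counting the 2 lowest categories of a negative‐to‐positive scale in the same way gives "bottom‐2‐box" figures such as the share of respondents reporting a somewhat negative or very negative impact.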

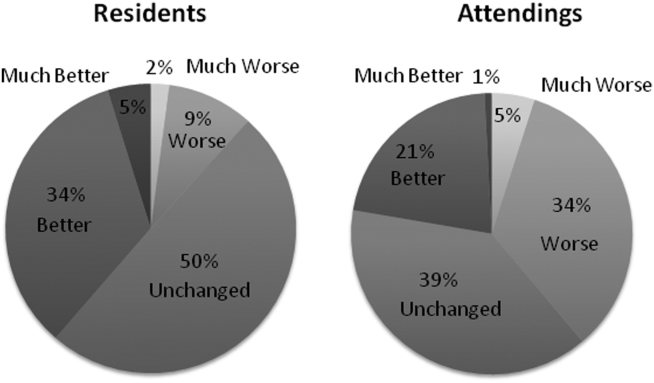

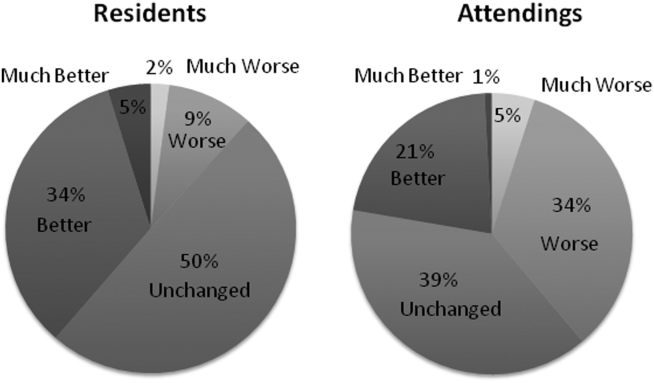

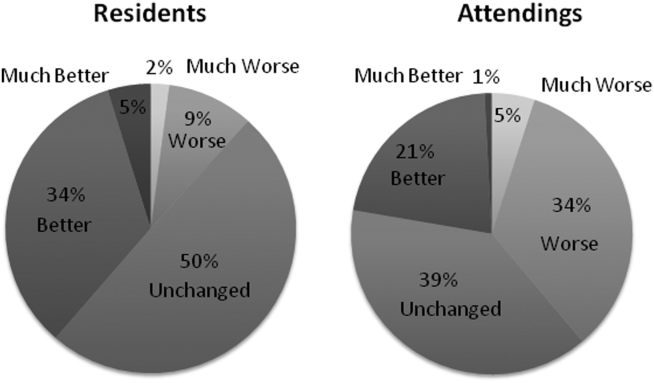

When asked to rate the change in progress note quality since their hospital had adopted the EHR, the majority of residents answered "unchanged" or "better," and the majority of attendings answered "unchanged" or "worse" (Figure 1).

PDQI‐9 Framework

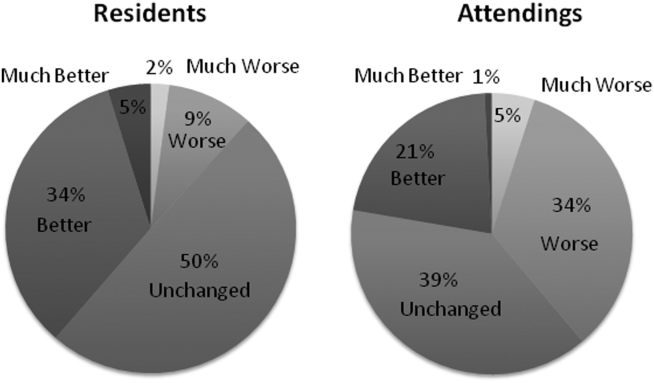

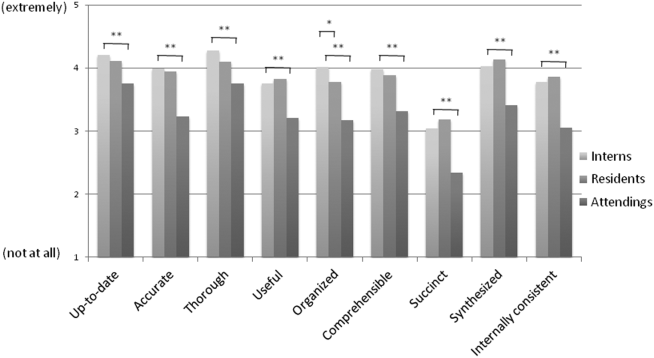

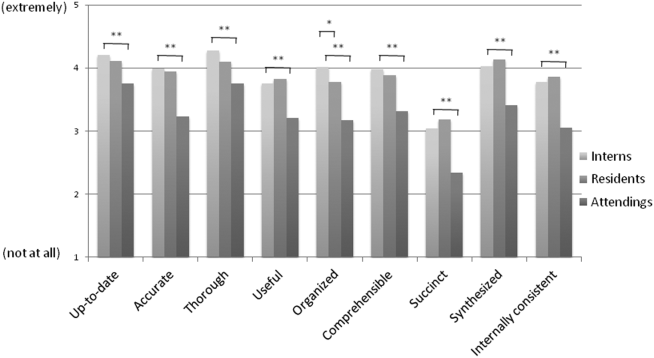

Participants answered each PDQI‐9 question on a 5‐point Likert scale ranging from "not at all" (1) to "extremely" (5). In 8 of the 9 PDQI‐9 domains, there were no significant differences between interns and residents. In every domain, attending perceptions of housestaff notes were significantly lower than housestaff perceptions of their own notes (P<0.001) (Figure 2). Both housestaff and attendings gave the highest ratings to "thorough," "up to date," and "synthesized," and the lowest rating to "succinct."

Copy Forward and Autopopulation

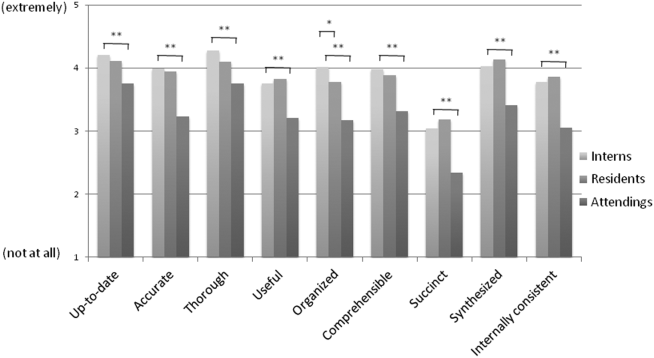

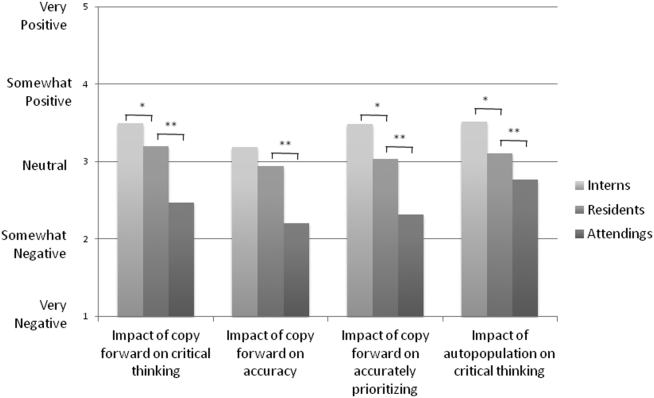

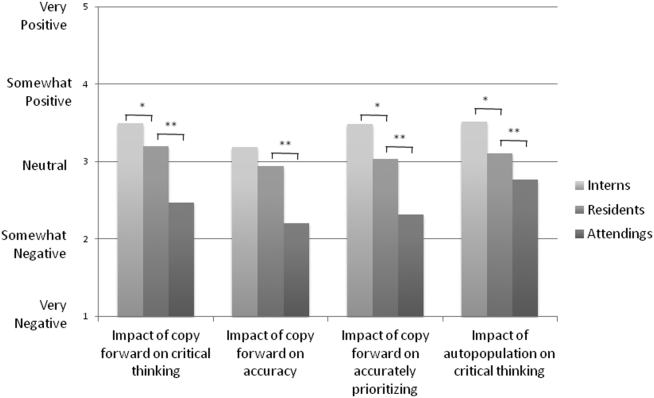

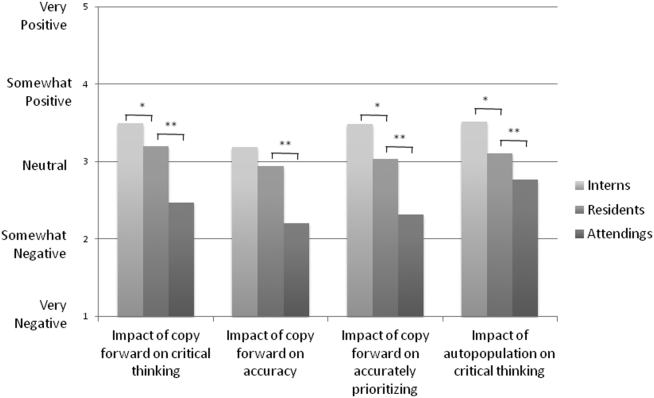

Overall, the effect of copy forward and autopopulation on critical thinking, note accuracy, and prioritizing the problem list was thought to be neutral or somewhat positive by interns, neutral by residents, and neutral or somewhat negative by attendings (P<0.001) (Figure 3). In all, 16% of interns, 22% of residents, and 55% of attendings reported that copy forward had a somewhat negative or very negative impact on critical thinking (P<0.001). In all, 16% of interns, 29% of residents and 39% of attendings thought that autopopulation had a somewhat negative or very negative impact on critical thinking (P<0.001).

Purpose of Progress Notes

Participants were provided with 7 possible purposes of a progress note and asked to rate the importance of each. There was nearly perfect agreement among interns, residents, and attendings in the rank order of importance of these purposes (Table 1). Attendings and housestaff ranked communication with other providers and documenting important events and the plan for the day as the 2 most important purposes of a progress note, and billing and quality improvement as the least important.

Table 1. Rank Order of the Importance of Each Purpose of a Progress Note (1 = most important)

| Purpose of progress note | Interns | Residents | Attendings |
|---|---|---|---|
| Communication with other providers | 1 | 1 | 2 |
| Documenting important events and the plan for the day | 2 | 2 | 1 |
| Prioritizing issues going forward in the patient's care | 3 | 3 | 3 |
| Medicolegal | 4 | 4 | 4 |
| Stimulate critical thinking | 5 | 5 | 5 |
| Billing | 6 | 6 | 6 |
| Quality improvement | 7 | 7 | 7 |
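The rankings in Table 1 are described as nearly perfect agreement, but no agreement statistic is reported. One illustrative way to quantify agreement between two rankings is Spearman's rank correlation, which for rankings without ties reduces to rho = 1 − 6Σd²/(n(n² − 1)), where d is the difference in rank for each purpose and n = 7. The sketch below applies that formula to the Table 1 ranks; it is an added illustration, not an analysis reported in the study, and the function name is hypothetical.

```python
def spearman_rho(ranks_x, ranks_y):
    """Spearman rank correlation for two rankings without ties.

    Uses rho = 1 - 6 * sum(d_i^2) / (n * (n^2 - 1)), where d_i is the
    difference between the two ranks given to item i.
    """
    if len(ranks_x) != len(ranks_y):
        raise ValueError("rankings must have the same length")
    n = len(ranks_x)
    d_squared = sum((x - y) ** 2 for x, y in zip(ranks_x, ranks_y))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Ranks from Table 1, listed in table row order
interns = [1, 2, 3, 4, 5, 6, 7]
residents = [1, 2, 3, 4, 5, 6, 7]
attendings = [2, 1, 3, 4, 5, 6, 7]

print(spearman_rho(interns, residents))               # 1.0
print(round(spearman_rho(interns, attendings), 3))    # 0.964
```

The only disagreement is the swap of the top 2 purposes by attendings, so the coefficient stays close to 1.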

DISCUSSION

This is the first large multicenter analysis of both attendings' and housestaff's perceptions of note quality in the EHR era. The findings provide insight into important differences and similarities in the perceptions of the 2 groups. Most striking is the difference in opinion of overall note quality: only a small minority of faculty rated current housestaff notes as very good or excellent, whereas a much larger proportion of housestaff rated their own notes and those of their peers to be of high quality. Although participants were not specifically asked why note quality in general was suboptimal, housestaff and faculty ratings of specific PDQI‐9 domains may yield an important clue. Specifically, all groups identified succinctness as the weakest attribute of current progress notes. This finding is consistent with the "note bloat" phenomenon, which has been criticized as a consequence of EHR implementation.[7, 14, 18, 21, 22]

One interesting finding was that only 5% of interns rated the notes of other housestaff as fair or poor. One possible explanation is social identity theory, which describes the tendency of individuals to enhance the perceived status or performance of the group to which they belong as a means of bolstering their self‐image.[23] Thus, housestaff may refrain from criticizing their peers in order to identify with a group that is not deficient in note writing.

The more positive assessment of overall note quality among housestaff could be related to the different roles of housestaff and attendings on a teaching service, where housestaff are typically the writers of progress notes and attendings are almost exclusively the readers. Housestaff may reap benefits from note writing, including efficiency, beyond the finished product. Their perception of higher quality may reflect the process of note writing itself: the data gathering and critical thinking required to build an assessment and plan. The PDQI‐9 scores are consistent with this notion, as housestaff rated all 9 domains significantly higher than attendings did.

Housestaff and attendings differed even more in their opinions of the EHR's impact on note quality. Generally, housestaff perceived the EHR to have improved progress note quality, whereas attendings perceived the opposite. One explanation could be that these results reflect the changing stages of physician development described by the RIME framework (reporter, interpreter, manager, educator). Attendings may expect notes to reflect synthesis and analysis, whereas trainees may be satisfied with the data gathering that an EHR facilitates. In our survey, the trend in answers from intern to resident to attending suggests that attitudes toward note quality evolve with training.

The same factors may also explain why housestaff were generally more positive than attendings about the effect of copy forward and autopopulation on critical thinking. Because these functions can potentially increase efficiency and decrease time spent at the computer (although data on this point are mixed), housestaff may have more time to spend with patients or to develop a thorough plan, and may therefore rate these functions positively.

Notably, housestaff and attendings had excellent agreement on the purposes of a progress note. They agreed that the 2 most important purposes were communication with other providers and documenting important events and the plan for the day. These are the 2 listed purposes that are most directly related to patient care. If future interventions to improve note quality require housestaff and attendings to significantly change their behavior, a focus on the impact on patient care might yield the best results.

Our study had several limitations. Any study based on self‐assessment is subject to bias; a previous meta‐analysis and review described poor to moderate correlations between self‐assessed and external measures of performance.[24, 25] The survey data were aggregated from 4 institutions despite somewhat different, though relatively high, response rates between the institutions. There could be a response bias; those who did not respond may have systematically different perceptions of note quality. However, the general demographics of the respondents reflected those of the housestaff and attendings at the 4 academic centers. All 4 participating institutions adopted the Epic EHR within several years before the survey was administered, and perceptions of note quality may be biased depending on the prior system used (ie, a change from handwritten to electronic notes vs a change from one electronic system to another). In addition, the survey results reflect experience with only 1 EHR, and our results may not apply to other EHR vendors or to institutions such as the VA, which has a long‐standing system in place. Last, we did not explore the impact of perceived note quality on the measured or perceived quality of care. One previous study found no direct correlation between note quality and clinical quality.[26]

Our findings suggest several directions for future research. First, potential differences between housestaff and attending perceptions of note quality could be further teased apart by studying the perceptions of attendings on a nonteaching service who write their own daily progress notes. Second, housestaff perceptions of why copy forward and autopopulation may increase critical thinking could be explored further with more direct questioning. Finally, although our study captured only perceptions of note quality, validated tools could be used to measure note quality objectively; these measurements could then be compared with perceptions of note quality as well as with clinical outcomes.

Given the prevalence of EHRs and the apparent belief that their benefits outweigh their hazards, institutions should embrace these innovations but take steps to mitigate the potential errors and problems associated with copy forward and autopopulation. The results of our study should help inform future interventions.

Acknowledgements

The authors acknowledge the contributions of Russell Leslie from the University of Iowa.

Disclosure: Nothing to report.

References

1. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144(10):742–752.
2. Clinical information technologies and inpatient outcomes: a multiple hospital study. Arch Intern Med. 2009;169(2):108–114.
3. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA. 1998;280(15):1311–1316.
4. Electronic health records and quality of diabetes care. N Engl J Med. 2011;365(9):825–833.
5. The impact of a clinical information system in an intensive care unit. J Clin Monit Comput. 2008;22(1):31–36.
6. Can electronic clinical documentation help prevent diagnostic errors? N Engl J Med. 2010;362(12):1066–1069.
7. Off the record—avoiding the pitfalls of going electronic. N Engl J Med. 2008;358(16):1656–1658.
8. Copying and pasting of examinations within the electronic medical record. Int J Med Inform. 2007;76(suppl 1):S122–S128.
9. Copy and paste: a remediable hazard of electronic health records. Am J Med. 2009;122(6):495–496.
10. The role of copy‐and‐paste in the hospital electronic health record. JAMA Intern Med. 2014;174(8):1217–1218.
11. Physicians’ attitudes towards copy and pasting in electronic note writing. J Gen Intern Med. 2009;24(1):63–68.
12. Medical education in the electronic medical record (EMR) era: benefits, challenges, and future directions. Acad Med. 2013;88(6):748–752.
13. Educational impact of the electronic medical record. J Surg Educ. 2012;69(1):105–112.
14. Direct text entry in electronic progress notes. An evaluation of input errors. Methods Inf Med. 2003;42(1):61–67.
15. The clinical record: a 200‐year‐old 21st‐century challenge. Ann Intern Med. 2010;153(10):682–683.
16. Sloppy and paste. Morbidity and Mortality Rounds on the Web. Available at: http://www.webmm.ahrq.gov/case.aspx?caseID=274. Published July 2012. Accessed September 26, 2014.
17. Are electronic medical records trustworthy? Observations on copying, pasting and duplication. AMIA Annu Symp Proc. 2003:269–273.
18. A piece of my mind. John Lennon's elbow. JAMA. 2012;308(5):463–464.
19. Research electronic data capture (REDCap)—a metadata‐driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–381.
20. ACGME competency note checklist. Available at: http://www.im.org/p/cm/ld/fid=831. Accessed August 8, 2013.
21. Assessing electronic note quality using the Physician Documentation Quality Instrument (PDQI‐9). Appl Clin Inform. 2012;3(2):164–174.
22. Quantifying clinical narrative redundancy in an electronic health record. J Am Med Inform Assoc. 2010;17(1):49–53.
23. The social identity theory of intergroup behavior. In: Psychology of Intergroup Relations. 2nd ed. Chicago, IL: Nelson‐Hall Publishers; 1986:7–24.
24. Student self‐assessment in higher education: a meta‐analysis. Rev Educ Res. 1989;59:395–430.
25. A review of the validity and accuracy of self‐assessments in health professions training. Acad Med. 1991;66:762–769.
26. Association of note quality and quality of care: a cross‐sectional study. BMJ Qual Saf. 2014;23(5):406–413.

The electronic health record (EHR) has revolutionized the practice of medicine. As part of the economic stimulus package in 2009, Congress enacted the Health Information Technology for Economic and Clinical Health Act, which included incentives for physicians and hospitals to adopt an EHR by 2015. In the setting of more limited duty hours and demands for increased clinical productivity, EHRs have functions that may improve the quality and efficiency of clinical documentation.[1, 2, 3, 4, 5]

The process of note writing and the use of notes for clinical care have changed substantially with EHR implementation. Use of efficiency tools (ie, copy forward functions and autopopulation of data) may increase the speed of documentation.[5] Notes in an EHR are more legible and accessible and may be able to organize data to improve clinical care.[6]

Yet, many have commented on the negative consequences of documentation in an EHR. In a New England Journal of Medicine Perspective article, Drs. Hartzband and Groopman wrote, we have observed the electronic medical record become a powerful vehicle for perpetuating erroneous information, leading to diagnostic errors that gain momentum when passed on electronically.[7] As a result, the copy forward and autopopulation functions have come under significant scrutiny.[8, 9, 10] A survey conducted at 2 academic institutions found that 71% of residents and attendings believed that the copy forward function led to inconsistencies and outdated information.[11] Autopopulation has been criticized for creating lengthy notes full of trivial or redundant data, a phenomenon termed note bloat. Bloated notes may be less effective as a communication tool.[12] Additionally, the process of composing a note often stimulates critical thinking and may lead to changes in care. The act of copying forward a previous note and autopopulating data bypasses that process and in effect may suppress critical thinking.[13] Previous studies have raised numerous concerns regarding copy forward and autopopulation functionality in the EHR. Many have described the duplication of outdated data and the possibility of the introduction and perpetuation of errors.[14, 15, 16] The Veterans Affairs (VA) Puget Sound Health system evaluated 6322 copy events and found that 1 in 10 electronic patient charts contained an instance of high‐risk copying.[17] In a survey of faculty and residents at a single academic medical center, the majority of users of copy and paste functionality recognized the hazards; they responded that their notes may contain more outdated (66%) and more inconsistent information (69%). 
Yet, most felt copy forwarding improved the documentation of the entire hospital course (87%), overall physician documentation (69%), and should definitely be continued (91%).[11] Others have complained about the impact of copy forward on the expression of clinical reasoning.[7, 9, 18]

Previous discussions on the topic of overall note quality following EHR implementation have been limited to perspectives or opinion pieces of individual attending providers.[18] We conducted a survey across 4 academic institutions to analyze both housestaff and attendings perceptions of the quality of notes since the implementation of an EHR to better inform the discussion of the impact of an EHR on note quality.

METHODS

Participants

Surveys were administered via email to interns, residents (second‐, third‐, or fourth‐year residents, hereafter referred to as residents) and attendings at 4 academic hospitals that use the Epic EHR (Epic Corp., Madison, WI). The 4 institutions each adopted the Epic EHR, with mandatory faculty and resident training, between 1 and 5 years prior to the survey. Three of the institutions previously used systems with electronic notes, whereas the fourth institution previously used a system with handwritten notes. The study participation emails included a link to an online survey in REDCap.[19] We included interns and residents from the following types of residency programs: internal medicine categorical or primary care, medicine‐pediatrics, or medicine‐psychiatry. For housestaff (the combination of both interns and residents), exclusion criteria included preliminary or transitional year interns, or any interns or residents from other specialties who rotate on the medicine service. For attendings, participants included hospitalists, general internal medicine attendings, chief residents, and subspecialty medicine attendings, each of whom had worked for any amount of time on the inpatient medicine teaching service in the prior 12 months.

Design

We developed 3 unique surveys for interns, residents, and attendings to assess their perception of inpatient progress notes (see Supporting Information, Appendix, in the online version of this article). The surveys incorporated questions from 2 previously published sources, the 9‐item Physician Documentation Quality Instrument (PDQI‐9) (see online Appendix), a validated note‐scoring tool, and the Accreditation Council for Graduate Medical Education note‐writing competency checklists.[20] Additionally, faculty at the participating institutions developed questions to address practices and attitudes toward autopopulation, copy forward, and the purposes of a progress note. Responses were based on a 5‐point Likert scale. The intern and resident surveys asked for self‐evaluation of their own progress notes and those of their peers, whereas the attending surveys asked for assessment of housestaff notes.

The survey was left open for a total of 55 days and participants were sent reminder emails. The study received a waiver from the institutional review board at all 4 institutions.

Data Analysis

Study data were collected and managed using REDCap electronic data capture tools hosted at the University of California, San Francisco (UCSF).[19] The survey data were analyzed and the figures were created using Microsoft Excel 2008 (Microsoft Corp., Redmond, WA). Mean values for each survey question were calculated. Differences between the means among the groups were assessed using 2‐sample t tests. P values <0.05 were considered statistically significant.

RESULTS

Demographics

We received 99 completed surveys from interns, 155 completed surveys from residents, and 153 completed surveys from attendings across the 4 institutions. The overall response rate for interns was 68%, ranging from 59% at the University of California, San Diego (UCSD) to 74% at the University of Iowa. The overall response rate for residents was 49%, ranging from 38% at UCSF to 66% at the University of California, Los Angeles. The overall response rate for attendings was 70%, ranging from 53% at UCSD to 74% at UCSF.

A total of 78% of interns and 72% of residents had used an EHR at a prior institution. Of the residents, 90 were second‐year residents, 64 were third‐year residents, and 2 were fourth‐year residents. A total of 76% of attendings self‐identified as hospitalists.

Overall Assessment of Note Quality

Participants were asked to rate the quality of progress notes on a 5‐point scale (poor, fair, good, very good, excellent). Half of interns and residents rated their own progress notes as very good or excellent. A total of 44% percent of interns and 24% of residents rated their peers notes as very good or excellent, whereas only 15% of attending physicians rated housestaff notes as very good or excellent.

When asked to rate the change in progress note quality since their hospital had adopted the EHR, the majority of residents answered unchanged or better, and the majority of attendings answered unchanged or worse (Figure 1).

PDQI‐9 Framework

Participants answered each PDQI‐9 question on a 5‐point Likert scale ranging from not at all (1) to extremely (5). In 8 of the 9 PDQI‐9 domains, there were no significant differences between interns and residents. Across each domain, attending perceptions of housestaff notes were significantly lower than housestaff perceptions of their own notes (P<0.001) (Figure 2). Both housestaff and attendings gave the highest ratings to thorough, up to date, and synthesized and the lowest rating to succinct.

Copy Forward and Autopopulation

Overall, the effect of copy forward and autopopulation on critical thinking, note accuracy, and prioritizing the problem list was thought to be neutral or somewhat positive by interns, neutral by residents, and neutral or somewhat negative by attendings (P<0.001) (Figure 3). In all, 16% of interns, 22% of residents, and 55% of attendings reported that copy forward had a somewhat negative or very negative impact on critical thinking (P<0.001). In all, 16% of interns, 29% of residents and 39% of attendings thought that autopopulation had a somewhat negative or very negative impact on critical thinking (P<0.001).

Purpose of Progress Notes

Participants were provided with 7 possible purposes of a progress note and asked to rate the importance of each stated purpose. There was nearly perfect agreement between interns, residents, and attendings in the rank order of the importance of each purpose of a progress note (Table 1). Attendings and housestaff ranked communication with other providers and documenting important events and the plan for the day as the 2 most important purposes of a progress note, and billing and quality improvement as less important.

| Interns | Residents | Attendings | |

|---|---|---|---|

| Communication with other providers | 1 | 1 | 2 |

| Documenting important events and the plan for the day | 2 | 2 | 1 |

| Prioritizing issues going forward in the patient's care | 3 | 3 | 3 |

| Medicolegal | 4 | 4 | 4 |

| Stimulate critical thinking | 5 | 5 | 5 |

| Billing | 6 | 6 | 6 |

| Quality improvement | 7 | 7 | 7 |

DISCUSSION

This is the first large multicenter analysis of both attendings and housestaff perceptions of note quality in the EHR era. The findings provide insight into important differences and similarities in the perceptions of the 2 groups. Most striking is the difference in opinion of overall note quality, with only a small minority of faculty rating current housestaff notes as very good or excellent, whereas a much larger proportion of housestaff rated their own notes and those of their peers to be of high quality. Though participants were not specifically asked why note quality in general was suboptimal, housestaff and faculty rankings of specific domains from the PDQI‐9 may yield an important clue. Specifically, all groups expressed that the weakest attribute of current progress notes is succinct. This finding is consistent with the note bloat phenomenon, which has been maligned as a consequence of EHR implementation.[7, 14, 18, 21, 22]

One interesting finding was that only 5% of interns rated the notes of other housestaff as fair or poor. One possible explanation for this may be the tendency for an individual to enhance or augment the status or performance of the group to which he or she belongs as a mechanism to increase self‐image, known as the social identity theory.[23] Thus, housestaff may not criticize their peers to allow for identification with a group that is not deficient in note writing.

The more positive assessment of overall note quality among housestaff could be related to the different roles of housestaff and attendings on a teaching service. On a teaching service, housestaff are typically the writer, whereas attendings are almost exclusively the reader of progress notes. Housestaff may reap benefits, including efficiency, beyond the finished product. A perception of higher quality may reflect the process of note writing, data gathering, and critical thinking required to build an assessment and plan. The scores on the PDQI‐9 support this notion, as housestaff rated all 9 domains significantly higher than attendings.

Housestaff and attendings held greater differences of opinion with respect to the EHR's impact on note quality. Generally, housestaff perceived the EHR to have improved progress note quality, whereas attendings perceived the opposite. One explanation could be that these results reflect changing stages of development of physicians well described through the RIME framework (reporter, interpreter, manager, educator). Attendings may expect notes to reflect synthesis and analysis, whereas trainees may be satisfied with the data gathering that an EHR facilitates. In our survey, the trend of answers from intern to resident to attending suggests an evolving process of attitudes toward note quality.

These same reasons may also explain why housestaff were generally more positive than attendings about the effect of copy forward and autopopulation functions on critical thinking. Because these functions may increase efficiency and decrease time spent at the computer (although data on this point are mixed), housestaff may have more time to spend with patients or to develop a thorough plan, and thus may rate these functions positively.

Notably, housestaff and attendings agreed closely on the purposes of a progress note. Both groups ranked communication with other providers and documenting important events and the plan for the day as the 2 most important purposes; these are also the 2 listed purposes most directly related to patient care. If future interventions to improve note quality require housestaff and attendings to significantly change their behavior, emphasizing the impact on patient care may yield the best results.

Our study has several limitations. Any study based on self‐assessment is subject to bias; a previous meta‐analysis and a review described poor to moderate correlations between self‐assessed and external measures of performance.[24, 25] The survey data were aggregated from 4 institutions whose response rates, though relatively high, differed somewhat. There could be a response bias, because those who did not respond may have systematically different perceptions of note quality; however, the general demographics of the respondents reflected those of the housestaff and attendings at the 4 academic centers. All 4 participating institutions adopted the Epic EHR between 1 and 5 years before the survey was administered, and perceptions of note quality may be biased depending on the prior system used (ie, a change from handwritten to electronic notes vs from one electronic system to another). In addition, the survey results reflect experience with only 1 EHR, and our results may not apply to other EHR vendors or to institutions such as the VA that have long‐standing systems in place. Last, we did not explore the impact of perceived note quality on the measured or perceived quality of care; one previous study found no direct correlation between note quality and clinical quality.[26]

Our findings suggest several directions for future research. First, differences between housestaff and attending perceptions of note quality could be further teased apart by studying the perceptions of attendings on nonteaching services, who write their own daily progress notes. Second, the reasons housestaff perceive that copy forward and autopopulation may increase critical thinking could be explored further through more direct questioning. Finally, because our study captured only perceptions of note quality, validated tools could be used to measure note quality objectively; these measurements could then be compared with perceived note quality and with clinical outcomes.

Given the prevalence of EHRs and the apparent belief that their benefits outweigh their hazards, institutions should embrace these innovations while taking steps to mitigate the potential errors and problems associated with copy forward and autopopulation. The results of our study should help inform future interventions.

Acknowledgements

The authors acknowledge the contributions of Russell Leslie from the University of Iowa.

Disclosure: Nothing to report.


1. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144(10):742–752.

2. Clinical information technologies and inpatient outcomes: a multiple hospital study. Arch Intern Med. 2009;169(2):108–114.

3. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA. 1998;280(15):1311–1316.

4. Electronic health records and quality of diabetes care. N Engl J Med. 2011;365(9):825–833.

5. The impact of a clinical information system in an intensive care unit. J Clin Monit Comput. 2008;22(1):31–36.

6. Can electronic clinical documentation help prevent diagnostic errors? N Engl J Med. 2010;362(12):1066–1069.

7. Off the record—avoiding the pitfalls of going electronic. N Engl J Med. 2008;358(16):1656–1658.

8. Copying and pasting of examinations within the electronic medical record. Int J Med Inform. 2007;76(suppl 1):S122–S128.

9. Copy and paste: a remediable hazard of electronic health records. Am J Med. 2009;122(6):495–496.

10. The role of copy‐and‐paste in the hospital electronic health record. JAMA Intern Med. 2014;174(8):1217–1218.

11. Physicians’ attitudes towards copy and pasting in electronic note writing. J Gen Intern Med. 2009;24(1):63–68.

12. Medical education in the electronic medical record (EMR) era: benefits, challenges, and future directions. Acad Med. 2013;88(6):748–752.

13. Educational impact of the electronic medical record. J Surg Educ. 2012;69(1):105–112.

14. Direct text entry in electronic progress notes. An evaluation of input errors. Methods Inf Med. 2003;42(1):61–67.

15. The clinical record: a 200‐year‐old 21st‐century challenge. Ann Intern Med. 2010;153(10):682–683.

16. Sloppy and paste. Morbidity and Mortality Rounds on the Web. Available at: http://www.webmm.ahrq.gov/case.aspx?caseID=274. Published July 2012. Accessed September 26, 2014.

17. Are electronic medical records trustworthy? Observations on copying, pasting and duplication. AMIA Annu Symp Proc. 2003:269–273.

18. A piece of my mind. John Lennon's elbow. JAMA. 2012;308(5):463–464.

19. Research electronic data capture (REDCap)—a metadata‐driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–381.

20. ACGME competency note checklist. Available at: http://www.im.org/p/cm/ld/fid=831. Accessed August 8, 2013.

21. Assessing electronic note quality using the Physician Documentation Quality Instrument (PDQI‐9). Appl Clin Inform. 2012;3(2):164–174.

22. Quantifying clinical narrative redundancy in an electronic health record. J Am Med Inform Assoc. 2010;17(1):49–53.

23. The social identity theory of intergroup behavior. In: Psychology of Intergroup Relations. 2nd ed. Chicago, IL: Nelson‐Hall Publishers; 1986:7–24.

24. Student self‐assessment in higher education: a meta‐analysis. Rev Educ Res. 1989;59:395–430.

25. A review of the validity and accuracy of self‐assessments in health professions training. Acad Med. 1991;66:762–769.

26. Association of note quality and quality of care: a cross‐sectional study. BMJ Qual Saf. 2014;23(5):406–413.


© 2015 Society of Hospital Medicine