Protection of human subjects participating in research is critically important in this era of rapid medical progress and increasing emphasis on translating discoveries from basic science research into clinical practice. The Federal Policy for the Protection of Human Subjects, also known as the Common Rule, is based on the Belmont Report’s ethical principles of respect for persons, beneficence, and justice.1,2 Under the Common Rule, institutional review boards (IRBs) are responsible for reviewing and approving human research protocols and for providing oversight to ensure the protection of human research subjects.1

Responsibility for protecting research subjects is shared not only by IRBs but also by investigators, institutions, research volunteers, research sponsors, and the federal government.3 Institutions conducting research involving human subjects have therefore established operational frameworks, known as human research protection programs (HRPPs), to ensure the rights and welfare of research participants and to meet ethical and regulatory requirements.3,4

In the late 1990s and early 2000s, federally supported research programs at several major academic institutions were suspended because of persistent noncompliance with federal regulations; in some cases, the noncompliance resulted in the deaths of healthy volunteers.5,6 In response to increased public scrutiny of clinical research, considerable efforts have been made to improve the protection of research subjects.5,7-9 These efforts included stronger federal oversight of research, voluntary accreditation of institutional HRPPs, increased institutional support for HRPPs, improved training for investigators and IRB members, improved monitoring and reporting of adverse events (AEs), and greater involvement of research participants and the public.9

Despite considerable investment in improving research subject protections, few data show that these efforts have made human research safer. Although research subject protection cannot be measured directly, the quality of HRPPs can be assessed. High-quality HRPPs are expected to minimize risk to research participants as much as possible while maintaining the integrity of the research.10

The VA health care system is the largest integrated health care system in the country. Currently, there are 107 VA facilities conducting research involving human subjects. In addition to federal regulations governing research with human subjects, VA researchers must also comply with requirements established by the VA. For example, in the VA the IRB is a subcommittee of the research and development committee (R&DC). Research involving human subjects may not be initiated until approved by both the IRB and the R&DC.4,11 All VA investigators are required to have approved research scopes of practice and training in ethical principles and current good clinical practices.4

Recently, the VA Office of Research Oversight (VAORO) developed a set of indicators for assessing the quality of VA HRPPs.10 Since 2010, VAORO has been collecting quality indicator (QI) data from all VA research facilities for quality improvement purposes.12-14 In this study, VAORO analyzed these data to assess changes in VA HRPP QI data from 2010 to 2012 and identify areas for improvement.

Methods

As part of the VA HRPP quality assurance program, each VA research facility was required to have qualified research compliance officers (RCOs) conduct annual audits of all informed consent documents (ICDs) and regulatory audits of all human research protocols once every 3 years.15 Protocol regulatory audits covered a 3-year look-back period. Tools were developed for the annual ICD audits and the triennial protocol regulatory audits (available at http://www.va.gov/ORO/Research_Compliance_Education.asp), and facility RCOs were trained to use these tools to conduct the audits.

Data Collection

Data were collected annually from all 107 VA research facilities. Information collected included compliance with ICD and Health Insurance Portability and Accountability Act authorization requirements; compliance with requirements for IRB and R&DC initial approval of human research protocols; compliance with selected informed consent requirements; for-cause suspension or termination of human research protocols; research-related serious AEs; compliance with continuing review requirements; subject enrollment according to inclusion and exclusion criteria; research personnel scopes of practice; investigator human research protection training; international research; and research involving vulnerable subjects. Because this was a VA quality assurance project and no individually identifiable information was collected, IRB review and approval of the project were not required.16

All data were entered into a database for analysis. When necessary, facilities were contacted to verify the accuracy and uniformity of the reported data.

Data Analysis

The Mantel-Haenszel chi-square test for trend was used to assess the trend in each QI from 2010 through 2012.17 A P value < .05 was considered statistically significant. For QIs with statistically significant changes, VAORO calculated the percent change and the numbers impacted, ie, the numbers of ICDs, human research protocols, case histories, or research personnel affected by the change.18
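To make the analysis step concrete, the following is a minimal sketch of one common form of the Mantel-Haenszel chi-square test for linear trend in proportions (Mantel's extension test, 1 degree of freedom), applied to a single QI over 3 years. It assumes equally spaced year scores (0, 1, 2); the function name `mh_trend_test` and the counts are hypothetical, and the article does not state which software or exact variant VAORO used. In this form the statistic equals (N - 1)r², where r is the correlation between year score and noncompliance across all audited items; the closely related Cochran-Armitage test uses N in place of N - 1.

```python
import math


def mh_trend_test(events, totals, scores=None):
    """Mantel-Haenszel chi-square test for linear trend in a 2 x k table (1 df).

    events[i]: noncompliant items found in year i (eg, incorrect ICDs used)
    totals[i]: items audited in year i
    scores[i]: score for year i; defaults to equally spaced 0, 1, 2, ...
    Returns (chi_square, p_value) from the chi-square distribution with 1 df.
    """
    if scores is None:
        scores = list(range(len(events)))
    n = sum(totals)  # items audited across all years
    a = sum(events)  # noncompliant items across all years
    sum_nx = sum(t * x for t, x in zip(totals, scores))
    sum_nx2 = sum(t * x * x for t, x in zip(totals, scores))
    observed = sum(e * x for e, x in zip(events, scores))
    expected = a * sum_nx / n  # expected score sum if the rate were constant
    variance = a * (n - a) * (n * sum_nx2 - sum_nx ** 2) / (n ** 2 * (n - 1))
    if variance == 0:
        raise ValueError("trend test undefined: no events, all events, or one score")
    chi_square = (observed - expected) ** 2 / variance
    # For 1 df, P(X > c) = P(|Z| > sqrt(c)) = erfc(sqrt(c / 2)).
    p_value = math.erfc(math.sqrt(chi_square / 2))
    return chi_square, p_value


# Hypothetical QI counts for 2010, 2011, and 2012 (not data from this study).
chi2, p = mh_trend_test(events=[30, 20, 10], totals=[1000, 1000, 1000])
print(f"chi-square = {chi2:.2f}, P = {p:.4f}, significant = {p < 0.05}")
```

A declining noncompliance count against a stable audit volume yields a large statistic and P < .05, whereas identical rates in all 3 years give a statistic of 0.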

Results

The HRPP QI data were collected from 2010 through 2012 from all 107 VA research facilities (Table 1). Of the 25 QIs, 18 had data for all 3 years and 7 lacked 2010 data; only the 18 QIs with data for all 3 years were included in this analysis. The 2010 data for QIs related to for-cause suspension or termination of protocols and to research personnel scopes of practice and training requirements were derived from all audited human, animal, and safety research protocols, not just human research protocols. These data were nonetheless included for comparison with the 2011 and 2012 data, because nonhuman research protocols constituted < 30% of the protocols audited. Based on VAORO routine on-site reviews of facilities’ HRPPs, animal care and use programs, and research safety and security programs, the authors believe that the QI rates for these nonhuman research protocols were similar to those for human research protocols.

Of the 18 QIs with data for all 3 years, 9 showed statistically significant changes from 2010 to 2012 and 9 did not (Table 1). The 9 QIs with statistically significant changes were (1) incorrect ICDs used; (2) protocols suspended or terminated for cause; (3) protocols suspended or terminated because of investigator concerns; (4) informed consent not obtained before study initiation; (5) research personnel without research scopes of practice; (6) research personnel working outside their scopes of practice; (7) required training not current for research personnel; (8) research personnel working without initial training; and (9) research personnel with lapsed continuing training.

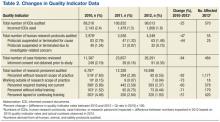

Table 2 shows the percent changes and the numbers impacted for the 9 QIs with statistically significant changes. The percent change describes the magnitude of each change; the number impacted indicates how many events (ie, ICDs, human research protocols, case histories, or research personnel) in 2012 differed from the number expected had the QI rate remained at its 2010 level.

All 9 QIs with statistically significant changes improved, with improvements ranging from 25% for incorrect ICDs used to 92% for research personnel without scopes of practice (Table 2). The numbers impacted (ie, the difference between the number expected in 2012 based on the 2010 QI rate and the number actually observed in 2012) ranged from 55 protocols suspended or terminated for cause to 1,177 research scopes of practice.
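The two Table 2 measures defined above can be sketched as simple arithmetic on QI rates. This is a minimal illustration, assuming each QI is a noncompliance rate for which a decrease counts as improvement; the function names and all numbers are hypothetical, not values from Table 2.

```python
def percent_improvement(rate_2010: float, rate_2012: float) -> float:
    """Relative reduction in a noncompliance rate, in percent (positive = improvement)."""
    return (rate_2010 - rate_2012) / rate_2010 * 100


def numbers_impacted(rate_2010: float, observed_2012: int, denominator_2012: int) -> float:
    """Count expected in 2012 at the 2010 rate minus the count actually observed in 2012."""
    expected_2012 = rate_2010 * denominator_2012
    return expected_2012 - observed_2012


# Hypothetical QI: 8% of audited items noncompliant in 2010; 100 of 5,000 in 2012.
rate_2010 = 0.08
observed_2012, audited_2012 = 100, 5000
rate_2012 = observed_2012 / audited_2012

print(f"improvement: {percent_improvement(rate_2010, rate_2012):.0f}%")
print(f"numbers impacted: {numbers_impacted(rate_2010, observed_2012, audited_2012):.0f}")
```

With these made-up inputs, the sketch reports a 75% improvement and 300 items impacted: 400 noncompliant items would have been expected in 2012 at the 2010 rate, but only 100 were observed.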

Of the 9 QIs without statistically significant changes, all but 2 had rates < 1% in 2010, suggesting that these rates were already so low that further improvement was difficult to achieve. The 2 exceptions were lapses in IRB continuing reviews and international research conducted without approval from the VA Chief Research and Development Officer (CRADO).

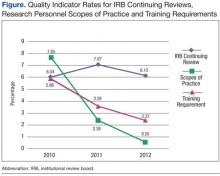

The rate of lapses in IRB continuing reviews remained high, at 6% to 7%, from 2010 through 2012 (Figure). In contrast, the rates of research personnel lacking scopes of practice and of research personnel whose required training was not current, which were comparably high in 2010, decreased sharply from 2010 to 2012.

Federal policies require that all individuals participating in research at international sites be provided with protections in accord with those given to research subjects within the U.S., as well as protections considered appropriate by local authorities and customary at the international site.1 VA policies require that permission be obtained from the CRADO before any VA-approved international research is initiated.4

Likewise, federal policies require additional protections when research involves vulnerable populations, such as children and prisoners.1 VA policies require that permission be obtained from the CRADO prior to initiating any research involving children or prisoners.4

Data on international research were available for all 3 years (Table 1), whereas data on research involving children and prisoners were available only for 2011 and 2012. Although the numbers of these protocols were small (0 to 8), a high percentage of them (21% to 100%) did not receive CRADO approval before the studies were initiated.

Discussion

The data presented in this report indicate considerable improvement in VA HRPPs since VAORO began collecting QI data in 2010. Of the 18 QIs with data available from 2010 through 2012, 9 improved and none deteriorated. Of the 9 QIs that showed no statistically significant change, 7 had very low rates in 2010 (most were < 1%), so further improvement may be difficult to achieve. However, VAORO identified 2 QIs as needing improvement.

The main purpose of collecting these data is to promote quality improvement. Each year, VAORO gives each VA research facility its own QI data along with the national and network averages, so that each facility knows where it stands nationally and within its Veterans Integrated Service Network (VISN). It is hoped that, with this information, facilities will be able to identify their strengths and weaknesses and carry out quality improvement measures accordingly.

The observed improvements could have several explanations. One possibility is reporting error, for example, facilities underreporting noncompliance. Underreporting is unlikely, however, because the data came from independent RCO audits of ICDs and regulatory audits of protocols. At VA, RCOs report directly to institutional officials and function independently of the Research Service.

Some facilities may also have been systematically “gaming the system” to make their programs look better. For example, some IRBs might have become less willing to suspend protocols that should have been suspended. Although these possibilities cannot be ruled out completely, the authors believe they are unlikely. First, not all QIs improved; in particular, the rate of lapses in IRB continuing reviews remained high and unchanged from 2010 to 2012. Second, routine on-site reviews of facilities’ HRPPs have independently verified some of the improvements observed in these QI data.

Two areas in need of improvement were identified: lapses in IRB continuing reviews and studies requiring CRADO approval. Both areas could be readily improved if facilities are willing to devote effort and resources to improving IRB procedures and practices. In a previous study based on 2011 QI data, the authors reported that the rate of lapses in IRB continuing reviews was 3.2% at VA facilities with small human research programs (< 50 active human research protocols), 5.5% at facilities with medium programs (50-200 active protocols), and 8.6% at facilities with large programs (> 200 active protocols).14 Thus, facilities with large research programs particularly need to improve their IRB continuing review processes.
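The program-size categories above amount to a simple classification rule on the number of active human research protocols. A minimal sketch, assuming the stated boundaries are inclusive as written (so 50 and 200 active protocols both fall in the medium category); the function name and dictionary are hypothetical, and the rates are the 2011 figures quoted in the preceding paragraph.

```python
# Hypothetical helper: program-size categories as described in the text
# (small: < 50, medium: 50-200, large: > 200 active human research protocols).
REPORTED_2011_LAPSE_RATE_PCT = {"small": 3.2, "medium": 5.5, "large": 8.6}


def program_size(active_protocols: int) -> str:
    """Classify a facility's human research program by its active protocol count."""
    if active_protocols < 0:
        raise ValueError("active protocol count cannot be negative")
    if active_protocols < 50:
        return "small"
    if active_protocols <= 200:  # "50-200" read as inclusive at both ends
        return "medium"
    return "large"


for count in (12, 50, 200, 350):
    size = program_size(count)
    print(count, size, REPORTED_2011_LAPSE_RATE_PCT[size])
```

Making the boundary handling explicit matters when facilities near 50 or 200 active protocols are grouped for comparison.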

In addition to supporting quality improvement, these data provide opportunities to answer important questions about HRPPs. For example, using 2011 QI data, the authors previously showed that HRPPs at facilities using their own VA IRBs and those using affiliated university IRBs as their IRBs of record performed equally well. These findings provided, for the first time, scientific data supporting the long-standing VA policy that VA facilities may use either their own IRB or the affiliated university IRB as the IRB of record.4,13 Similarly, there has been concern that facilities with small research programs may lack sufficient resources to support a robust HRPP.

In a previous analysis of 2011 QI data, the authors showed that HRPPs at facilities with small research programs performed at least as well as those at facilities with medium and large research programs.14 Facilities with large research programs appeared to perform less well than facilities with small and medium programs, suggesting that facilities with large programs may need to allocate additional resources to their HRPPs.

Two fundamental questions remain unanswered. First, are these QIs optimal for evaluating HRPPs? Second, do high-quality HRPPs, as measured by these QIs, actually provide better protection for human research subjects? Although these questions cannot yet be answered clearly, there is a clear need to measure the quality of HRPPs. The current QIs will undoubtedly need to be modified or supplemented with new ones. Nevertheless, the authors share their experience with academic and other non-VA research institutions as those institutions develop their own QIs for assessing the quality of their HRPPs.

Acknowledgement

The authors wish to thank J. Thomas Puglisi, PhD, chief officer, Office of Research Oversight, for his support and critical review of the manuscript and thank all VA research compliance officers for their contributions in conducting audits and collecting the data presented in this report.

Author disclosures

The authors report no actual or potential conflicts of interest with regard to this article.

Disclaimer

The opinions expressed herein are those of the authors and do not necessarily reflect those of Federal Practitioner, Frontline Medical Communications Inc., the U.S. Government, or any of its agencies. This article may discuss unlabeled or investigational use of certain drugs. Please review the complete prescribing information for specific drugs or drug combinations—including indications, contraindications, warnings, and adverse effects—before administering pharmacologic therapy to patients.

1. U.S. Department of Health and Human Services. Federal Policy for the Protection of Human Subjects. 45 Code of Federal Regulations (CFR) 46. 1991.

2. National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research. The Belmont Report: Ethical principles and guidelines for the protection of human subjects of research. U.S. Department of Health and Human Services Website. http://www.hhs.gov/ohrp/humansubjects/guidance/belmont.html. Published April 18, 1979. Accessed March 6, 2015.

3. Institute of Medicine (U.S.) Committee on Assessing the System for Protecting Human Research Subjects. Preserving Public Trust: Accreditation and Human Research Participant Protection Programs. Washington, DC: National Academies Press; 2001.

4. U.S. Department of Veterans Affairs, Veterans Health Administration. Requirements for the protection of human subjects in research. Handbook 1200.05. U.S. Department of Veterans Affairs Website. http://www.va.gov/vhapublications/ViewPublication.asp?pub_ID=3052. Published November 12, 2014. Accessed March 6, 2015.

5. Kizer KW. Statement on Oversight in the Veterans Health Administration before the Subcommittee on Veterans’ Affairs, U.S. House of Representatives. U.S. Department of Veterans Affairs Website. http://www.va.gov/OCA/testimony/hvac/sh/21AP9910.asp. Published April 21, 1999. Accessed March 19, 2015.

6. Steinbrook R. Protecting research subjects—The crisis at Johns Hopkins. N Engl J Med. 2002;346(9):716-720.

7. Kranish M. System for protecting humans in research faulted. Boston Globe. March 25, 2002:A1.

8. Shalala D. Protecting research subjects—What must be done. N Engl J Med. 2000;343(11):808-810.

9. Steinbrook R. Improving protection for research subjects. N Engl J Med. 2002;346(18):1425-1430.

10. Tsan MF, Smith K, Gao B. Assessing the quality of human research protection programs: the experience at the Department of Veterans Affairs. IRB. 2010;32(4):16-19.

11. U.S. Department of Veterans Affairs, Veterans Health Administration. Research and development committee. Handbook 1200.01. U.S. Department of Veterans Affairs Website. http://www.va.gov/vhapublications/ViewPublication.asp?pub_ID=2038. Published June 16, 2009. Accessed March 6, 2015.

12. Tsan MF, Nguyen Y, Brooks R. Using quality indicators to assess human research protection programs at the Department of Veterans Affairs. IRB. 2013;35(1):10-14.

13. Tsan MF, Nguyen Y, Brooks R. Assessing the quality of VA human research protection programs: VA vs affiliated University Institutional Review Board. J Empir Res Hum Res Ethics. 2013;8(2):153-160.

14. Nguyen Y, Brooks R, Tsan MF. Human research protection programs at the Department of Veterans Affairs: quality indicators and program size. IRB. 2014;36(1):16-19.

15. U.S. Department of Veterans Affairs, Veterans Health Administration. Research compliance reporting requirements. Handbook 1058.01. U.S. Department of Veterans Affairs Website. http://www.va.gov/vhapublications/viewpublication.asp?pub_id=2463. Published November 15, 2011. Accessed March 6, 2015.

16. Tsan MF, Puglisi JT. Health care operations activities that may constitute research: the Department of Veterans Affairs’s perspective. IRB. 2014;36(1):9-11.

17. Woodward M. Epidemiology: Study Design and Data Analysis. Boca Raton, FL: Chapman and Hall/CRC; 2014.

18. Tsan L, Davis C, Langberg R, Pierce JR. Quality indicators in the Department of Veterans Affairs nursing home care units: a preliminary assessment. Am J Med Qual. 2007;22(5):344-350.

Protection of human subjects participating in research is critically important during this era of rapid medical progress and the increasing emphasis on translating discoveries from basic science research into clinical practices. The Federal Policy for the Protection of Human Subjects, also known as the Common Rule, was established based on the Belmont Report’s ethical principles of respect for persons, beneficence, and justice.1,2 Under the Common Rule, institutional review boards (IRBs) are responsible for reviewing and approving human research protocols and providing oversight to ensure protection of human research subjects.1

In addition to IRBs, investigators, institutions, research volunteers, sponsors of research, and the federal government share responsibilities for protecting research subjects.3 Institutions conducting research involving human subjects have thus established operational frameworks, referred to as human research protection programs (HRPPs), to ensure the rights and welfare of research participants and to meet the ethical and regulatory requirements.3,4

Related: Empathic Disclosure of Adverse Events to Patients

In the late 1990s and early 2000s, a number of major academic institutions’ federally supported research programs were suspended due to persistent noncompliance with federal regulations, including some issues that resulted in the death of healthy volunteers.5,6 In response to increased public scrutiny of clinical research, considerable efforts have been made to improve the protection of research subjects.5,7-9 These efforts included stronger federal oversight of research, voluntary accreditation of institutional HRPPs, increased institutional support for HRPPs, improved training for investigators and IRB members, improved monitoring and reporting of adverse events (AEs), and greater involvement of research participants and the public.9

Despite considerable investment to improve research subject protections, scant data exist showing that these efforts have made human research safer than before. Although research subject protection cannot be directly measured, quality assessment of HRPPs is possible. High-quality HRPPs are expected to minimize risk to research participants to the extent possible while maintaining the integrity of the research.10

Related: Improving Veteran Access to Clinical Trials

The VA health care system is the largest integrated health care system in the country. Currently, there are 107 VA facilities conducting research involving human subjects. In addition to federal regulations governing research with human subjects, VA researchers must also comply with requirements established by the VA. For example, in the VA the IRB is a subcommittee of the research and development committee (R&DC). Research involving human subjects may not be initiated until approved by both the IRB and the R&DC.4,11 All VA investigators are required to have approved research scopes of practice and training in ethical principles and current good clinical practices.4

Recently, the VA Office of Research Oversight (VAORO) developed a set of indicators for assessing the quality of VA HRPPs.10 Since 2010, VAORO has been collecting quality indicator (QI) data from all VA research facilities for quality improvement purposes.12-14 In this study, VAORO analyzed these data to assess changes in VA HRPP QI data from 2010 to 2012 and identify areas for improvement.

Methods

As part of the VA HRPP quality assurance program, each VA research facility was required to conduct annual audits of all informed consent documents (ICDs) and regulatory audits of all human research protocols once every 3 years by qualified research compliance officers (RCOs).15 Protocol regulatory audits were limited to a 3-year look back of the protocols. Tools were developed for the annual ICD and triennial protocol regulatory audits (available at http://www.va.gov/ORO/Research_Compliance_Education.asp). Facility RCOs were then trained to use these tools to conduct audits.

Data Collection

Data were collected annually from all 107 VA research facilities. Information collected included compliance with ICD and Health Insurance Portability and Accountability Act authorization requirements; compliance with requirements for IRB and R&DC initial approval of human research protocols; compliance with selected informed consent requirements; for-cause suspension or termination of human research protocols; research-related serious AEs; compliance with continuing review requirements; subject enrollment according to inclusion and exclusion criteria; research personnel scopes of practice; investigator human research protection training; international research; and research involving vulnerable subjects. No individually identifiable personal information was collected. As this was a VA quality assurance project and no individually identifiable information was collected, no IRB review and approval of the project was required.16

All data collected were entered into a database for analysis. When necessary, facilities were contacted to verify the accuracy and uniformity of data reported.

Data Analysis

The Mantel-Haenszel chi-square test for trend was used to determine the trend of changes from 2010 through 2012.17 A P value of < .05 was considered to be statistically significant. For those QIs with statistically significant changes, VAORO calculated the percent changes and the actual numbers impacted, ie, the actual numbers of ICDs, human research protocols, case histories, or research personnel affected by these changes.18

Results

The HRPP QI data was collected from 2010 through 2012 from all 107 VA research facilities (Table 1). There were a total of 25 QIs; 18 had all 3-year data available and 7 lacked 2010 data. Only those 18 QI data available from all 3 years were included for this analysis. The 2010 data collected for QIs related to for-cause suspension or termination of protocols and research personnel scopes of practice and training requirements were derived from all human, animal, and safety research protocols audited, not just the human research protocols audited. However, these data were included for comparison with the 2011 and 2012 data, because nonhuman research protocols audited constituted < 30% of the total. Based on VAORO on-site routine reviews of facilities’ HRPPs, animal care and use programs, as well as research safety and security programs, the authors believe that the QI rates in these nonhuman research protocols were similar to those of human research protocols.

From a total of 18 QIs with all 3-year data available for analysis, 9 QIs did not show any statistically significant changes; whereas 9 QIs showed statistically significant changes from 2010 to 2012 (Table 1). These 9 QIs were: (1) incorrect ICDs used; (2) number of protocols suspended or terminated due to cause; (3) protocols suspended or terminated due to investigator concerns; (4) informed consent not obtained prior to initiation of the study; (5) research personnel without research scopes of practice; (6) research personnel working outside of scopes of practice; (7) required training not current for research personnel; (8) research personnel working without initial training; and (9) research personnel lapsed in continuing training.

Table 2 shows the percent changes and the actual numbers impacted by the changes in the 9 QIs that showed statistically significant changes. The percent changes describe the magnitude of changes, and the numbers impacted provide information on the actual numbers of events (ie, ICDs, human research protocols, case histories, or research personnel) affected by these changes in 2012 if the QI rates had stayed the same as those of 2010.

All 9 QIs with statistically significant changes showed improvement, ranging from 25% improvement in incorrect ICDs used to 92% improvement in research personnel without scopes of practice (Table 2). The actual numbers impacted (ie, the difference between numbers expected in 2012 based on 2010 QI rates and the actual numbers observed in 2012) ranged from 55 protocols suspended or terminated for cause to 1,177 research scopes of practice.

Of the 9 QIs with no statistically significant changes, all but 2 QIs had QI rates of < 1% in 2010, suggesting that these QI rates were already so low that further improvement was difficult to achieve. The 2 exceptions were lapses in IRB continuing reviews and international research conducted without VA Chief Research and Development Officer (CRADO) approval.

The rates of lapse in IRB continuing reviews remained high at 6% to 7% between 2010 and 2012 (Figure). In contrast, the rates of research personnel lacking scopes of practice and required training not current, which had comparable high rates in 2010, decreased sharply from 2010 to 2012.

Federal policies require that all individuals participating in research at international sites be provided with appropriate protections that are in accord with those given to research subjects within the U.S. as well as protections considered appropriate by local authorities and customary at the international site.1 VA policies require that permissions be obtained from the CRADO prior to initiating any VA-approved international research.4

Likewise, federal policies require additional protections when research involves vulnerable populations, such as children and prisoners.1 VA policies require that permission be obtained from the CRADO prior to initiating any research involving children or prisoners.4

Data on international research were available for all 3 years (Table 1). However, data on research involving children and prisoners were available only in 2011 and 2012. Although the numbers of these research protocols were small, ranging from 0 to 8 protocols, a high percentage of these protocols, ranging from 21% to 100%, did not receive CRADO approval prior to the initiation of the studies.

Discussion

The data presented in this report reveal that there has been considerable improvement in VA HRPPs since VAORO started to collect QI data in 2010. Of the QI data available from 2010 through 2012, 9 showed improvement, none showed deterioration. Of the 9 QIs that showed no statistically significant differences, 7 had very low QI rates in 2010 (most were < 1%). Consequently, further improvement may be difficult to achieve. On the other hand, VAORO identified 2 QIs to be in need of improvement.

The main purpose of collecting these data is to promote quality improvement. Each year VAORO provides feedback to VA research facilities by giving each facility its QI data along with the national and network averages so that each facility knows where it stands at the national and VISN level. It is hoped that with this information, facilities will be able to identify strengths and weaknesses and carry out quality improvement measures accordingly.

Several potential reasons exist for the observed improvements. Possibly, improvements could be due to reporting errors, for example, if facilities were underreporting noncompliance. However, underreporting is unlikely, because data were collected from independent RCO audits of ICDs and regulatory protocol audits. At VA, RCOs report directly to institutional officials and function independently of the Research Service.

Some facilities also may have been systematically “gaming the system” in order to make their programs look better. For example, some IRBs might become less likely to suspend a protocol when it should be suspended. While the above possibilities cannot be ruled out completely, the authors believe that they are unlikely. First, not all QIs were improved. Particularly, lapse in IRB continuing reviews remained high and unchanged from 2010 to 2012. In addition, routine on-site reviews of facility’s HRPPs have independently verified some of the improvements observed in these QI data.

Two areas in need of improvement have been identified: lapses in IRB continuing reviews and studies requiring CRADO. These 2 areas can be easily improved if facilities are willing to devote effort and resources to improve IRB procedures and practices. In a previous study based on 2011 QI data, the authors reported that VA facilities with a small human research program (active human research protocols of < 50) had a rate of lapse in IRB continuing reviews of 3.2%; facilities with a medium research program (50-200 active human research protocols) had a rate of 5.5%; and facilities with a large research program (> 200 active human research protocols) had a rate of 8.6%.14 Thus, facilities with a large research program particularly need to improve their IRB continuing review processes.

In addition to supporting quality improvement, these data provide opportunities to answer a number of important questions about HRPPs. For example, based on 2011 QI data, the authors previously showed that the HRPPs of facilities using their own VA IRBs and those using affiliated university IRBs as their IRBs of record performed equally well. These findings provided, for the first time, empirical support for the long-standing VA policy that VA facilities may use either their own IRB or the affiliated university IRB as the IRB of record.4,13

Similarly, there has been concern that facilities with small research programs may not have sufficient resources to support a robust HRPP. However, in a previous analysis of 2011 QI data, the authors showed that the HRPPs of facilities with small research programs performed at least as well as those of facilities with medium and large research programs.14 Facilities with large research programs appeared to perform less well than those with small and medium programs, suggesting that facilities with large research programs may need to allocate additional resources to support their HRPPs.

Two fundamental questions remain unanswered. First, are these QIs optimal for evaluating HRPPs? Second, do high-quality HRPPs, as measured by QIs, actually provide better protection for human research subjects? Although no clear answers to these important questions exist at this time, there is a clear need to measure the quality of HRPPs, and modification of the current QIs or the addition of new ones will undoubtedly be needed. In the meantime, the authors are sharing their experience with academic and other non-VA research institutions as those institutions develop their own QIs for assessing the quality of their HRPPs.

Acknowledgement

The authors wish to thank J. Thomas Puglisi, PhD, chief officer, Office of Research Oversight, for his support and critical review of the manuscript and thank all VA research compliance officers for their contributions in conducting audits and collecting the data presented in this report.

Author disclosures

The authors report no actual or potential conflicts of interest with regard to this article.

Disclaimer

The opinions expressed herein are those of the authors and do not necessarily reflect those of Federal Practitioner, Frontline Medical Communications Inc., the U.S. Government, or any of its agencies. This article may discuss unlabeled or investigational use of certain drugs. Please review the complete prescribing information for specific drugs or drug combinations—including indications, contraindications, warnings, and adverse effects—before administering pharmacologic therapy to patients.

1. U.S. Department of Health and Human Services. Federal Policy for the Protection of Human Subjects. 45 Code of Federal Regulations (CFR) 46; 1991.

2. National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research. The Belmont Report: Ethical principles and guidelines for the protection of human subjects of research. U.S. Department of Health and Human Services Website. http://www.hhs.gov/ohrp/humansubjects/guidance/belmont.html. Published April 18, 1979. Accessed March 6, 2015.

3. Institute of Medicine (U.S.) Committee on Assessing the System for Protecting Human Research Subjects. Preserving Public Trust: Accreditation and Human Research Participant Protection Programs. Washington, DC: National Academies Press; 2001.

4. U.S. Department of Veterans Affairs, Veterans Health Administration. Requirements for the protection of human subjects in research. Handbook 1200.05. U.S. Department of Veterans Affairs Website. http://www.va.gov/vhapublications/ViewPublication.asp?pub_ID=3052. Published November 12, 2014. Accessed March 6, 2015.

5. Kizer KW. Statement on Oversight in the Veterans Health Administration before the Subcommittee on Veterans’ Affairs, U.S. House of Representatives. U.S. Department of Veterans Affairs Website. http://www.va.gov/OCA/testimony/hvac/sh/21AP9910.asp. Published April 21, 1999. Accessed March 19, 2015.

6. Steinbrook R. Protecting research subjects—The crisis at Johns Hopkins. N Engl J Med. 2002;346(9):716-720.

7. Kranish M. System for protecting humans in research faulted. Boston Globe. March 25, 2002:A1.

8. Shalala D. Protecting research subjects—What must be done. N Engl J Med. 2000;343(11):808-810.

9. Steinbrook R. Improving protection for research subjects. N Engl J Med. 2002;346(18):1425-1430.

10. Tsan MF, Smith K, Gao B. Assessing the quality of human research protection programs: the experience at the Department of Veterans Affairs. IRB. 2010;32(4):16-19.

11. U.S. Department of Veterans Affairs, Veterans Health Administration. Research and development committee. Handbook 1200.01. U.S. Department of Veterans Affairs Website. http://www.va.gov/vhapublications/ViewPublication.asp?pub_ID=2038. Published June 16, 2009. Accessed March 6, 2015.

12. Tsan MF, Nguyen Y, Brooks R. Using quality indicators to assess human research protection programs at the Department of Veterans Affairs. IRB. 2013;35(1):10-14.

13. Tsan MF, Nguyen Y, Brooks R. Assessing the quality of VA human research protection programs: VA vs affiliated University Institutional Review Board. J Empir Res Hum Res Ethics. 2013;8(2):153-160.

14. Nguyen Y, Brooks R, Tsan MF. Human research protection programs at the Department of Veterans Affairs: quality indicators and program size. IRB. 2014;36(1):16-19.

15. U.S. Department of Veterans Affairs, Veterans Health Administration. Research compliance reporting requirements. Handbook 1058.01. U.S. Department of Veterans Affairs Website. http://www.va.gov/vhapublications/viewpublication.asp?pub_id=2463. Published November 15, 2011. Accessed March 6, 2015.

16. Tsan MF, Puglisi JT. Health care operations activities that may constitute research: the Department of Veterans Affairs’s perspective. IRB. 2014;36(1):9-11.

17. Woodward M. Epidemiology: Study Design and Data Analysis. Boca Raton, FL: Chapman and Hall/CRC; 2014.

18. Tsan L, Davis C, Langberg R, Pierce JR. Quality indicators in the Department of Veterans Affairs nursing home care units: a preliminary assessment. Am J Med Qual. 2007;22(5):344-350.