
Innovative Cardiac Examination Curriculum

Despite impressive advances in cardiac diagnostic technology, cardiac examination (CE) remains an essential skill for screening for abnormal sounds, for evaluating cardiovascular system function, and for guiding further diagnostic testing.1‐6

In practice, these benefits may be attenuated if CE skills are inadequate. Numerous studies have documented substantial CE deficiencies among physicians at various points in their careers from medical school to practice.7‐9 In 1 study, residents' CE mistakes accounted for one‐third of all physical diagnostic errors.10 When murmurs are detected, physicians will often reflexively order an echocardiogram and refer to a cardiologist, regardless of the cost or indication. As a consequence, echocardiography use is rising faster than the aging population or the incidence of cardiac pathological conditions would explain.11 Because cost‐effective medicine depends on the appropriate application of clinical skills like CE, the loss of these skills is a major shortcoming.12‐15

The reasons for the decline in physicians' CE skills are numerous. High reliance on ordering diagnostic tests,16 conducting teaching rounds away from the bedside,17, 18 time constraints during residency,16, 19 and declining CE skills of faculty members themselves7 all may contribute to the diminished CE skills of residents. Residents, who themselves identify abnormal heart sounds at alarmingly low rates, play an ever‐increasing role in medical students' instruction,7, 9 exacerbating the problem.

Responding to growing concerns over patient safety and quality of care,16, 20 public and professional organizations have called for renewed emphasis on teaching and evaluating clinical skills.21 For example, the American Board of Internal Medicine has added a physical diagnosis component to its recertification program.22 The Accreditation Council for Graduate Medical Education (ACGME) describes general competencies for residents, including patient care, which encompasses proper physical examination skills.23 Although mandating uniform standards is a welcome step toward improving CE competence, medical school deans and program directors still face the challenge of fitting structured physical examination skills training into an already crowded curriculum.16, 24 Moreover, the impact of these efforts to improve CE is uncertain because programs lack an objective measure of CE competence.

CE training is itself a challenge: sight, sound, and touch all contribute to the clinical impression, which makes CE difficult to teach away from the bedside. Unlike pulmonary examination, for which a diagnosis is best made by listening, cardiac auscultation is only one (frequently overemphasized) aspect of CE.25 Medical knowledge of cardiac anatomy and physiology, visualization of cardiovascular findings, and integration of auditory and visual findings are all components of accurate CE.7 Traditionally, CE was taught through direct experience with patients at the bedside under the supervision of seasoned clinicians. However, exposure to, and learning from, good teaching patients have waned. Audiotapes, heart sound simulators, mannequins, and other computer‐based interventions have been used as surrogates, but none has been widely adopted.26, 27 The best practice for teaching CE is not known.

To help improve CE education during residency, we implemented and evaluated a novel Web‐based CE curriculum that emphasized 4 aspects of CE: cardiovascular anatomy and physiology, auditory skills, visual skills, and integration of auscultatory and visual findings. Our hypothesis was that this new curriculum would improve learning of CE skills, that residents would retain what they learned, and that this curriculum would be better than conventional education in teaching CE skills.

METHODS

Study Participants, Site, and Design

Internal medicine (IM) and family medicine (FM) interns (R1s, n = 59) from university‐ and community‐based residency programs, respectively, participated in this controlled trial of an educational intervention to teach CE.

The intervention group consisted of 26 IM and 8 FM interns at the beginning of the academic year in June 2003. To establish baseline scores, all interns took a 50‐question multimedia test of CE competency described previously.7, 28, 29 Subsequently, all interns completed a required 4‐week cardiology ward rotation. During this rotation, they were instructed to complete a Web‐based CE tutorial with an accompanying worksheet and to attend 3 one‐hour sessions with a hospitalist instructor. Their schedules were arranged to allow for this educational time. During the third meeting with the instructor, interns were tested again to establish posttraining scores. Finally, at the end of the academic year, interns were tested once more to establish retention scores.

The control group consisted of 25 first‐year IM residents who were tested at the end of their academic year in June 2003. These test scores served as historical controls for interns who had just completed their first year of residency and who had received the standard ward rotation without incorporated Web‐based training in CE. Interns in both groups had many opportunities for one‐on‐one instruction in CE because, for the cardiology rotation, each intern was assigned to a private‐practice cardiology attending. Figure 1 outlines the number of IM and FM interns eligible and the number actually tested at each stage of the study.

Educational Intervention

The CE curriculum consisted of a Web‐based program and 3 tutored sessions. The program used virtual patients (audiovisual recordings of actual patients) combined with computer graphic animations and text to teach cardiac anatomy, hemodynamics, pathophysiology, and visual and auditory findings.30 This multimedia program was interactive and allowed comparison with normal findings or with similar lesions. The content included cardiac findings identified as important by a survey of IM residency program directors,8 as well as ACGME training requirements for IM residents23 and cardiology fellows.31 Table 1 outlines the content of the Web‐based curriculum.

| Table 1. Content of the Web‐based curriculum |
|---|
| 1. Frontal anatomy of heart, lungs, and vessels with: |
| a. Interactive illustrations allowing depiction of individual structures |
| b. Separate cartoons of anatomy of the right heart, left heart, and entire heart |
| c. Correlation with borders forming regions on chest X‐ray |
| 2. Interactive phases of the cardiac cycle including: |
| a. Phonocardiogram of normal heart sounds (S1, S2) |
| b. ECG recording |
| c. Left heart (aortic, left atrial, and left ventricular) pressures |
| d. Right heart (pulmonary artery, right atrial, and right ventricular) pressures |
| e. Animations of the left heart |
| 3. Physiological splitting of S2 with: |
| a. Phonocardiogram of normal heart sounds |
| b. ECG tracing |
| c. Left heart (aortic, left atrial, and left ventricular) pressures |
| d. Right heart (pulmonary artery, right atrial, and right ventricular) pressures |
| e. Interactive animations of the heart and lungs with respiration |
| 4. Patients with aortic regurgitation (AR) |
| a. Integrating pulse with sounds and murmurs |
| b. Acute severe AR |
| Recognizing Quincke's pulse |
| c. Austin Flint murmur |
| Differentiating it from the pericardial rub |
| d. Hemodynamics of chronic and acute AR and comparisons |
| e. Well‐tolerated AR |
| 5. Patient with aortic stenosis (AS) |
| a. Integrating pulse with sounds and murmurs |
| Comparison with hypertrophic cardiomyopathy (HCM) |
| b. Interactive descriptions of hemodynamics and flow |
| 6. Patients with mitral regurgitation (MR) |
| a. Chronic MR |
| b. Hemodynamics and comparisons of clinical findings for: |
| i. Normal |
| ii. Mitral valve prolapse (MVP) |
| iii. Acute MR |
| iv. Compensated MR |
| c. Acute MR |
| 7. Patients with mitral stenosis (MS) |
| a. Introduction: integrating inspection and auscultation |
| b. Compare sounds: opening snap, split S2, S3 |
| c. Severe MS: interactive comparison of sounds at apex and base |
| d. Hemodynamic effects of heart rate |

This training was designed for the typical conditions of residency training programs: student‐teacher contact time was limited to three 1‐hour sessions; the instructor (J.K.) was an internist hospitalist (trained in and facile with the use of the program), not a cardiologist; and self‐paced study was Web‐based to allow access at all hours at the hospital or at home. In the first session, at the beginning of the cardiology block, interns were introduced to the Web site and given a 1‐page homework assignment that corresponded to the Web‐based content (Table 2). During the second session, in the middle of the 4‐week block, the group held a discussion around the Web‐based program in which the interns asked questions and, with the hospitalist, reviewed their worksheet answers and the program as needed. During the third session, at the end of the block, questions were reviewed, and the interns took the posttraining test.

| In preparation for the cardiology heart sounds module during the cardiology block at LBMMC, please answer the following questions and bring the completed questionnaire with you. The correct responses to these questions, as well as the underlying mechanisms, can be found in the Heart Sounds Tutorial ( |
|---|
| 1. Which cardiac chamber is farthest from the anterior chest wall? __________ |
| 2. Which cardiac chamber is closest to the left sternal border? ___________ |
| 3. Are the mitral and aortic valves ever closed at the same time? ____________ |
| 4. Why does inspiratory lung inflation delay the pulmonic second sound? ____________ |
| 5. Three or more murmurs of different origin can be heard in aortic regurgitation. What is their timing (within the cardiac cycle) and causation? ____________ |
| 6. How do you elicit Quincke's pulse? ____________ |
| 7. Is arterial pulse pressure greater in acute or chronic aortic regurgitation? ____________ |
| 8. How does the severity of aortic regurgitation correlate with duration of the early diastolic murmur? ____________ |
| 9. In a patient with aortic stenosis, what causes the splitting of the first sound heard with the stethoscope diaphragm? ____________ |
| 10. What effect does severity have on the duration of the murmur of aortic stenosis? ____________ |
| 11. Is the murmur of aortic stenosis ever holosystolic? ____________ |
| 12. Is the duration of the murmur of acute mitral regurgitation shorter or longer than that of chronic mitral regurgitation? ____________ |
| 13. What causes the third heart sound (S3) in mitral regurgitation? ____________ |
| 14. Why is the jugular venous a‐wave often prominent in mitral stenosis? ____________ |
| 15. Which heart sound is loudest in mitral stenosis? Why? ____________ |
| 16. What causes the split sound heard in mitral stenosis? ____________ |
| 17. Where (on the precordium) would you hear the murmur of mitral stenosis? ____________ |
| 18. How do postural maneuvers affect the heart sounds and murmur in mitral prolapse? ____________ |
| 19. A 3‐phase friction rub can be confused with the 3‐murmur auditory complex in which valvar lesion? ____________ |
| 20. What are some causes of third heart sounds that do not imply poor ventricular function? ____________ |
| 21. Is a fourth heart sound usually best heard at the base (□Yes □No) or the apex (□Yes □No)? |

Evaluation

To evaluate what the R1 intervention group learned, we tested them at baseline (during internship orientation), after training (at the end of their cardiology rotation), and for retention (at the end of their internship year). To evaluate what the controls learned, we tested them at the end of their internship year. The evaluation included a brief survey and the previously validated CE Test.7, 28, 29 Test scores carried no academic consequences.

For the survey, we asked participants whether they had any prior training in CE and how many hours they estimated having spent learning CE skills with a teacher or in a course during this study. Using a 5‐point Likert scale, they self‐rated their interest in CE and their confidence in their own CE skills.

The CE Test is a 50‐question interactive multimedia program that evaluates CE competency using recordings from actual patients. The CE Test computes an overall score (maximum 100 points) and scores, expressed as percentages, for 4 subcategories: knowledge of cardiac physiology (interpretation of pressures, sounds, and flow related to cardiac contraction and relaxation), audio skills, visual skills, and integration of audio and visual skills. The same assessment instrument was used for all groups.

Statistical Analysis

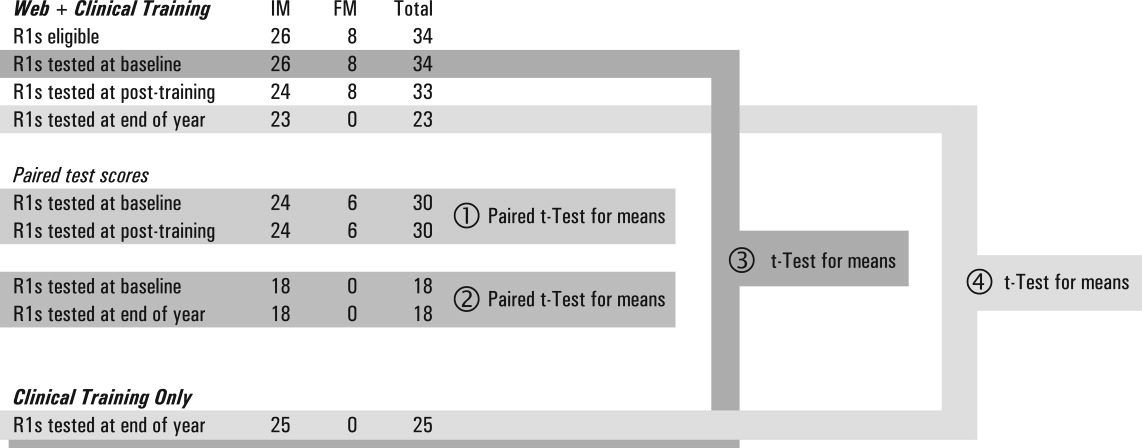

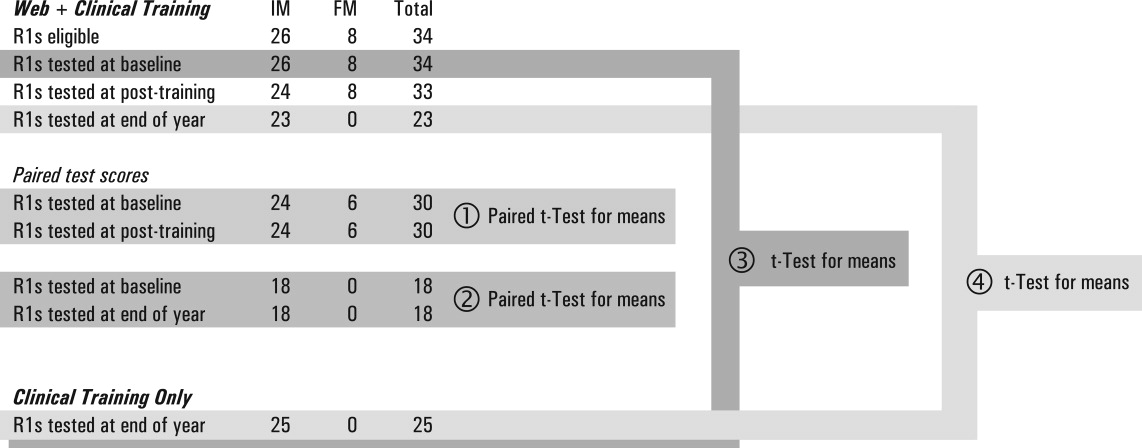

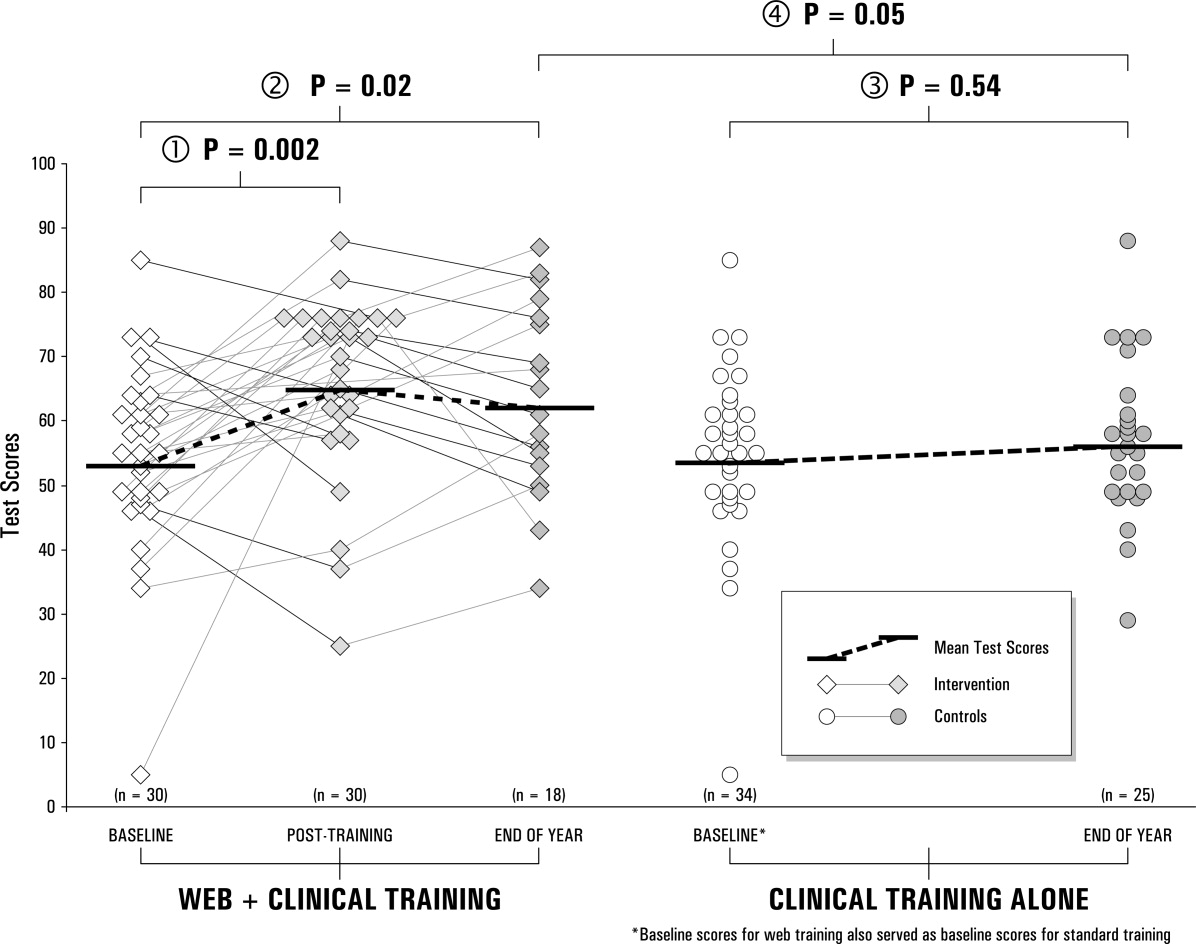

Figure 1 lists the tests used to answer the following research questions:

1. Does the Web‐based curriculum improve CE skills? We compared intervention baseline and posttraining scores using the paired t test for means.
2. Do interns retain this improvement in skills? We compared intervention baseline and end‐of‐year scores using the paired t test for means.
3. Does clinical training alone improve CE skills? We compared intervention baseline and control end‐of‐year scores using the independent t test for means.
4. Is the Web‐based curriculum better than clinical training alone? We compared intervention and control end‐of‐year scores using the independent t test for means.

We used the paired t test when the baseline and posttraining scores of the same intern could be matched. To test for differences in CE competency between the intervention and control groups, we compared mean scores using the independent Student t test for equal or unequal group variances, as appropriate. Because the interval from posttraining to end‐of‐year testing varied, longer intervals could have allowed learning to decay. Therefore, we computed the Spearman correlation coefficient between follow‐up months and the change in score from post‐Web training to the end of the year. Pearson correlation coefficients were computed to examine associations of survey variables with test scores. The 2‐sided nominal P < .05 criterion was used to determine statistical significance. Analyses were performed with SPSS statistical software, version 13.0 (SPSS, Inc., Chicago, IL).
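The t statistics behind these comparisons can be illustrated with a minimal Python sketch. It is not the authors' SPSS procedure: the function names are hypothetical, the paired example uses made‐up matched scores, and the independent example simply plugs in the end‐of‐year overall scores reported for Question 4 in Table 3. Converting t and its degrees of freedom to a P value requires a t distribution (for example, `scipy.stats.t.sf`), which is omitted here to keep the sketch dependency‐free.

```python
import math
from statistics import mean, stdev


def paired_t(before, after):
    """Paired t statistic for matched scores (same intern tested twice).

    Returns (t, df) with df = n - 1.
    """
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need at least two matched pairs")
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / math.sqrt(n)), n - 1


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student t for independent groups assuming equal variances."""
    sp2 = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, n1 + n2 - 2


def welch_t(m1, sd1, n1, m2, sd2, n2):
    """Welch t for independent groups with unequal variances.

    Degrees of freedom use the Welch-Satterthwaite approximation.
    """
    v1, v2 = sd1**2 / n1, sd2**2 / n2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# Hypothetical matched baseline/posttraining scores for four interns.
t_paired, df_paired = paired_t([50, 60, 55, 45], [58, 66, 60, 55])

# Question 4 overall scores: intervention 64.7 (SD 14.5), n = 23;
# control 56.8 (SD 12.4), n = 25.
t_pooled, df_pooled = pooled_t(64.7, 14.5, 23, 56.8, 12.4, 25)
t_welch, df_welch = welch_t(64.7, 14.5, 23, 56.8, 12.4, 25)

print(f"paired: t = {t_paired:.2f}, df = {df_paired}")
print(f"pooled: t = {t_pooled:.2f}, df = {df_pooled}")
print(f"Welch:  t = {t_welch:.2f}, df = {df_welch:.1f}")
```

For the Question 4 summary statistics, both versions give t of about 2.0 with roughly 44 to 46 degrees of freedom, which corresponds to a two‐sided P value just under .05, in line with the P = .05 reported for that comparison.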

The institutional review board granted this study exempt status as research conducted in established educational settings involving normal educational practices.

RESULTS

Research Question 1

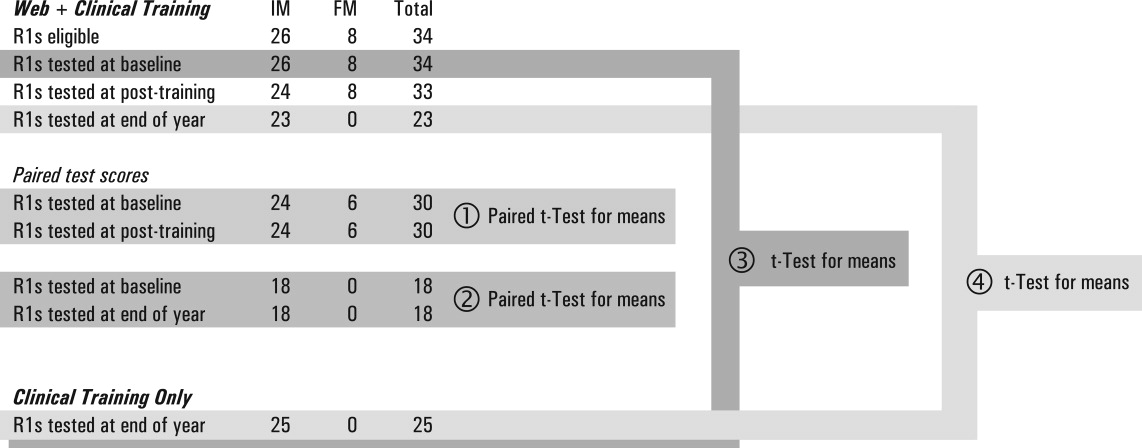

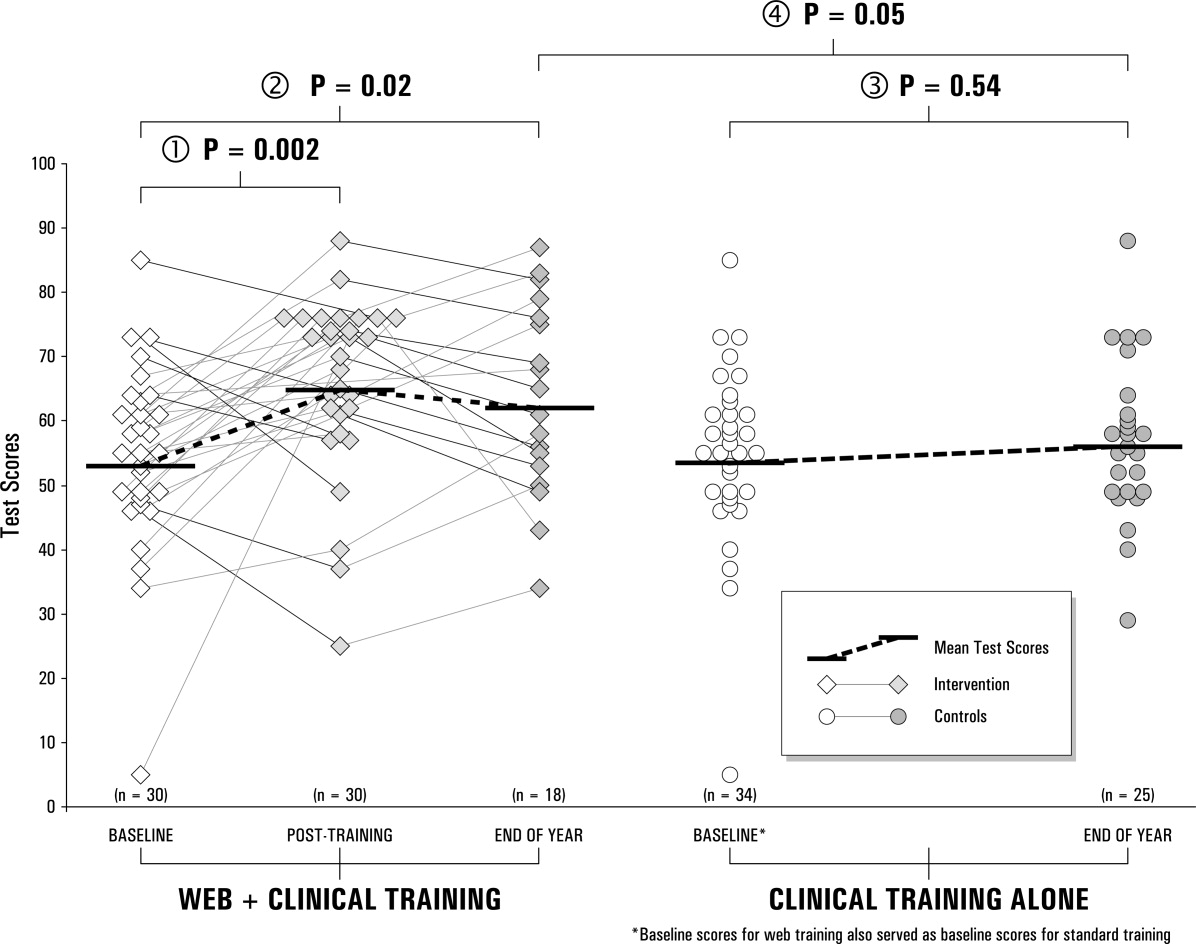

Individual baseline and posttraining CE Test scores for the intervention group are plotted in the first 2 columns of Figure 2. The posttraining mean score improved from baseline (66.0 ± 5.2 vs. 54.2 ± 5.4, P = .002). The knowledge, audio, and visual subcategories of CE competence showed similar improvements (P < .001; Table 3). The score for the integration of audiovisual skills subcategory was higher at baseline than the other subcategory scores and remained unchanged.
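The value reported after each mean in the Results (for example, 5.2 after the posttraining mean of 66.0) is not labeled. Its size appears consistent with the half‐width of a 95% confidence interval, t(0.975, n ‐ 1) × SD / √n, computed from the SDs and sample sizes in Table 3. The short Python check below assumes that reading; the t critical values are hard‐coded standard table entries rather than computed, and the helper name is hypothetical.

```python
import math

# Two-sided 95% critical values of Student's t (the 0.975 quantile),
# hard-coded from standard tables for the degrees of freedom used below.
T_975 = {17: 2.1098, 22: 2.0739, 24: 2.0639, 29: 2.0452, 33: 2.0345}


def ci_half_width(sd, n):
    """Half-width of a t-based 95% confidence interval for a mean."""
    return T_975[n - 1] * sd / math.sqrt(n)


# (group, mean, SD, n, value reported next to the mean in the text)
GROUPS = [
    ("Q1 intervention baseline", 54.2, 14.5, 30, 5.4),
    ("Q1 intervention posttraining", 66.0, 13.6, 30, 5.2),
    ("Q2 intervention end of year", 63.5, 14.9, 18, 7.4),
    ("Q3 intervention baseline", 54.7, 13.7, 34, 4.8),
    ("Q3 control end of year", 56.8, 12.4, 25, 5.1),
    ("Q4 intervention end of year", 64.7, 14.5, 23, 6.3),
]

for group, m, sd, n, reported in GROUPS:
    hw = ci_half_width(sd, n)
    print(f"{group}: {m} ± {hw:.1f} (reported {reported})")
```

Five of the six values match to one decimal place; the posttraining value comes out at 5.1 rather than 5.2, a gap that could plausibly reflect rounding of the reported SD.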

Question 1. Does the Web‐based curriculum improve CE skills?

| Subcategory | Intervention baseline (n = 30): mean (SD) | 95% CI | Intervention posttraining (n = 30): mean (SD) | 95% CI | P value (paired t test) |
|---|---|---|---|---|---|
| Knowledge | 55.9% (14.7%) | 50.4%‐61.4% | 68.9% (15.3%) | 63.2%‐74.6% | <.001 |
| Audio | 69.6% (17.4%) | 63.1%‐76.0% | 83.6% (10.3%) | 79.7%‐87.4% | <.001 |
| Visual | 56.7% (21.4%) | 48.7%‐64.7% | 71.9% (16.6%) | 65.7%‐78.1% | <.001 |
| Integration | 77.5% (14.5%) | 72.1%‐83.0% | 76.8% (12.8%) | 72.1%‐81.6% | .85 |
| Overall score | 54.2% (14.5%) | 48.8%‐59.6% | 66.0% (13.6%) | 60.9%‐71.2% | .002 |

Question 2. Do interns retain this improvement?

| Subcategory | Intervention baseline (n = 18): mean (SD) | 95% CI | Intervention end of year (n = 18): mean (SD) | 95% CI | P value (paired t test) |
|---|---|---|---|---|---|
| Knowledge | 58.6% (15.2%) | 51.0%‐66.2% | 67.6% (16.7%) | 60.4%‐74.9% | .05 |
| Audio | 67.4% (17.1%) | 58.9%‐75.9% | 82.3% (10.4%) | 77.8%‐86.8% | .004 |
| Visual | 51.6% (22.0%) | 40.7%‐62.5% | 65.2% (20.6%) | 56.3%‐74.1% | .13 |
| Integration | 76.0% (15.8%) | 68.2%‐83.8% | 77.1% (13.2%) | 71.4%‐82.8% | .95 |
| Overall score | 51.8% (15.0%) | 44.3%‐59.3% | 63.5% (14.9%) | 56.1%‐70.9% | .02 |

Question 3. Does clinical training alone improve CE skills?

| Subcategory | Intervention baseline* (n = 34): mean (SD) | 95% CI | Control end of year (n = 25): mean (SD) | 95% CI | P value (t test) |
|---|---|---|---|---|---|
| Knowledge | 56.5% (14.6%) | 51.4%‐61.6% | 57.8% (15.4%) | 51.4%‐64.1% | .75 |
| Audio | 70.0% (16.9%) | 64.1%‐75.9% | 73.3% (13.6%) | 67.7%‐79.0% | .42 |
| Visual | 57.6% (20.9%) | 50.3%‐64.9% | 66.9% (15.3%) | 60.6%‐73.2% | .07 |
| Integration | 77.8% (13.8%) | 72.9%‐82.5% | 76.0% (12.2%) | 71.0%‐81.0% | .62 |
| Overall score | 54.7% (13.7%) | 49.9%‐59.5% | 56.8% (12.4%) | 51.7%‐61.9% | .54 |

Question 4. Is the Web‐based curriculum better than clinical training alone?

| Subcategory | Intervention end of year (n = 23): mean (SD) | 95% CI | Control end of year (n = 25): mean (SD) | 95% CI | P value (t test) |
|---|---|---|---|---|---|
| Knowledge | 67.6% (16.7%) | 60.4%‐74.8% | 57.8% (15.4%) | 51.4%‐64.1% | .04 |
| Audio | 82.3% (10.4%) | 77.8%‐86.8% | 73.3% (13.6%) | 67.7%‐79.0% | .01 |
| Visual | 65.2% (20.6%) | 56.3%‐74.1% | 66.9% (15.3%) | 60.6%‐73.2% | .75 |
| Integration | 77.1% (13.2%) | 71.4%‐82.8% | 76.0% (12.2%) | 71.0%‐81.0% | .76 |
| Overall score | 64.7% (14.5%) | 58.4%‐71.0% | 56.8% (12.4%) | 51.7%‐61.9% | .05 |

Research Question 2

End‐of‐year scores are plotted in the third column of Figure 2. Overall scores remained higher at the end of the internship year than at baseline (63.5 ± 7.4, P = .02). Improvements in knowledge and audio skills were also retained (P ≤ .05; Table 3). Visual skills (inspection of the neck and precordium), however, declined steeply, from 71.9 to 65.2, a score indistinguishable from that of the controls (66.9, P = .75). The interval from posttraining to end‐of‐year testing varied, with a mean of 21 weeks. The Spearman correlation between follow‐up months and the change in score from post‐Web training to the end of the year was not significant (ρ = 0.12, P = .64). To corroborate the correlational analysis, we applied the paired t test to the 18 interns with matched post‐Web‐training and retention scores; the difference was not significant (P = .61), indicating that, without further training, the intervention group's scores did not change over time.
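Spearman's ρ, used above to relate follow‐up time to the change in score, is Pearson's r computed on ranks. A minimal Python sketch follows, assuming the usual convention that tied values receive the average of their ranks. The function names and the sample data are hypothetical (they are not the study's data), and the P value, which needs a reference distribution, is not computed. The `pearson` helper on its own corresponds to the survey correlations described in the Methods.

```python
import math
from statistics import mean


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of positions i..j, 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation coefficient."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the two rank vectors."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need at least three paired observations")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: months from posttraining to retention testing and the
# change in overall score over that interval for five interns.
months = [3, 4, 5, 6, 7]
score_change = [5, 6, 7, 8, 7]
print(f"rho = {spearman(months, score_change):.3f}")
```

Because the two 7s in `score_change` tie, they share rank 3.5, and the sketch prints `rho = 0.821` for these made‐up values.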

Research Question 3

Baseline scores for the control group (the prior year's interns) were not available because these interns had just completed their academic year when the study began. Therefore, the baseline scores of the current year's interns were used as a surrogate. Comparing these 2 groups (intervention at baseline and controls at the end of the year), we observed no difference between mean scores (54.7 ± 4.8 vs. 56.8 ± 5.1, P = .54). Controls at the end of the year scored slightly higher in visual skills than did the intervention interns at baseline, but not significantly so (P = .07). There were also no differences in knowledge, audio skills, or integration of audio and visual skills.

Research Question 4

When we compared overall scores at the end of the year, the mean score of the intervention group was higher than that of the controls (64.7 ± 6.3 vs. 56.8 ± 5.1, P = .05). Similarly, knowledge and audio scores indicated better competency in the intervention group (P ≤ .04), whereas visual and integration scores did not differ.

Survey

The vast majority of both the intervention (88%) and control (83%) groups reported some prior training in CE. The intervention group reported spending a mean total of 5.1 ± 2.0 hours (range 2‐25 hours) preparing during this study, including the three 1‐hour sessions with the instructor. The control group reported almost twice as many hours of CE instruction: a mean of 10.1 ± 4.8 hours (range 2‐30 hours, P = .05). On a Likert scale of 1‐5 (from no interest to high interest), both the intervention and control groups expressed high interest in learning CE (4.7 ± 0.2 and 4.4 ± 0.5, respectively; P = .3). Despite significant differences in CE Test scores, the intervention and control groups reported the same confidence in their abilities (2.5 ± 0.3, P = .82). Neither time spent learning nor interest level correlated with the overall CE Test score.

DISCUSSION

To improve the CE competence of residents, we designed and implemented a Web‐based CE curriculum using virtual patients (VPs), which standardized the interns' exposure to a spectrum of medical conditions and gave them flexibility in choosing when and where the training occurred.30 In this controlled educational intervention, interns' mean CE competency scores improved significantly immediately after the Web‐based training, and these improvements were retained at the end of the academic year. The Web‐based curriculum also improved scores in 3 of the 4 subcategories, all except integration of audio and visual skills. Although cardiac physiology knowledge and audio skills were retained, visual skills declined steeply. This decline suggests that visual skills may be more labile and could benefit from more regular reinforcement. It may also be that old habits die hard: in an earlier study of baseline CE competency, we observed that most participants listened with their eyes closed or averted, actively tuning out the visual timing reference that would help them answer the question.7

Comparing control and intervention retention scores suggests that the Web‐based training is more effective than traditional training in CE. The interns spent a mean of 5 hours learning, including 3 hours with the hospitalist‐instructor, 30 minutes of which were spent taking the test. Earlier studies32, 33 showed improved CE skills with 12‐20 hours of instructor‐led tutorials. The shorter instruction time for interns in this study translated into about half the magnitude of improvement seen in third‐year medical students, whose mean score improved from 58.7 ± 6.7 to 73.4 ± 3.8. Moreover, the students in the earlier study continued to improve their CE competency without further intervention, whereas the test scores of interns in this study appeared to plateau. It is therefore likely that 5 hours per year is a lower bound for successful Web‐based CE training, and we do not recommend fewer than 5 hours per year of CE training using a Web‐based curriculum (including self‐study). Ideally, sessions should be scheduled in each year of residency to build a critical mass of learning that has the potential to sustain improvement beyond residency training.

When surveyed, interns in both the intervention and control groups were very interested in improving their skills but were not confident in their abilities. In fact, confidence did not correlate with ability, which suggests that interns (and possibly others)34 do not have a reliable, intrinsic sense of their CE skills. Curiously, the control group reported spending almost twice as many hours learning CE as the intervention group did. It is unlikely that the intervention group received less traditional instruction than the control group; it is more likely that, in estimating the hours spent learning CE, the intervention group counted only formal instruction plus self‐study hours spent on cardiac examination. One way to interpret the greater number of hours reported by the controls is to recall that theirs was an end‐of‐year estimate of how much instruction they had received over the previous 12 months. Because the controls received no formal instruction in cardiac examination, their estimate included the hours spent receiving traditional bedside instruction from attending physicians. The number of hours reported by the controls could be accurate or could be an overestimate. If accurate, the greater number of hours did not translate into superior performance on the CE Test. If an overestimate, hindsight bias may have inflated their recollection of the hours spent.

Some may argue that VPs are a poor substitute for actual patients. With few reservations, we are inclined to agree: the best training for CE is at the bedside with a clinical master and the luxury of time. Today, however, teaching has shifted from the bedside to conference rooms and even corridors.17, 18 Consequently, opportunities to practice hands‐on CE have become rare. Even when physical examination is performed at the bedside, the quality of instruction depends on the teacher's interest and abilities and on the patients available. Our intent was to implement a standardized curriculum that ensures that CE is practiced and tested regularly, in order to prevent the creation of a generation of practicing physicians who never develop these skills.

A hospitalist, not a cardiologist, led the intervention. Because cardiac conditions are involved in more than 40% of admissions to the medical wards, a hospitalist should be able to evaluate a patient through physical examination to determine whether further tests are necessary or whether a cardiologist should be consulted. In academic medicine, most teaching of cardiac examination is no longer done by cardiologists but by internists.31 Hospitalists often serve as the attending physicians for medical students and house officers, and they can and should play a larger role in bedside teaching. A Web‐based curriculum that can be accessed at all hours is also well suited to residents on inpatient rotations.

Our CE curriculum was designed for the typical conditions of today's residency training programs, in which education must be balanced against the demands of patient care and the limits on resident duty hours.16, 24 Student‐faculty contact time was limited to three 1‐hour sessions, reflecting the time constraints of most training programs.13, 16, 19 The program was incorporated into the regularly scheduled cardiology block throughout the academic year, so that residents received broad exposure to cardiac clinical presentations at a time when bedside encounters with important cardiac physical findings were most likely. The goal was not to reduce or eliminate bedside teaching but to make the limited time that remained more productive. The fidelity of the training to actual bedside encounters was high: unlike audio‐only recordings of heart sounds, the VPs in this study simultaneously presented visual pulsations in the neck or precordium, training residents to use these visual timing cues while auscultating patients. Furthermore, the Web‐based multimedia technology allowed direct comparison of a patient's bedside findings with case‐matched laboratory studies, enhanced with explanatory animations and tutorials. Working within the constraints of residency training programs, we were able to standardize the cardiac clinical exposure of trainees and to test for improvement in and retention of CE skills.

Several limitations should be considered. Although VPs mimic bedside patient encounters, we do not know whether improvements from this Web‐based curriculum translate into improvements in patient care. Ideally, the study would have been conducted at more than 1 site to test whether the positive results we found are replicated elsewhere. Mitigating these drawbacks are the real‐world conditions of our study: the teaching hospital that conducted the study was not involved in developing the Web site, and an internist‐hospitalist, not a cardiologist, led the instruction. In theory, the baseline testing itself may have boosted the posttraining scores of the intervention group simply by motivating the interns to improve their test scores. However, only an overall score was reported to interns; they were not told which questions they missed or in which subcategory they were most deficient. Although simple exposure to the test could explain the interns' improvement, the test has been shown to have test‐retest reliability over a 4‐ to 6‐week period,28, 29 and test scores do not improve with prior exposure to the test. Because those in the intervention group knew that they would be retested, we cannot exclude the possibility that they were more motivated to do well on the test by the end of the year. Finally, the ward training received by the 2 groups of interns may have differed. The test scores of interns in the control group may have been lower because of differences in the patients admitted, the topics discussed, or the teaching attendings assigned to them during the academic year; however, because neither the patient population nor the group of attending physicians changed substantially from the previous year, the differences in the quality of patient encounters between the control and intervention groups are thought to be minor.

In conclusion, the Web‐based curriculum met all the requirements for effective education: it was self‐directed, interactive, relevant, and cost effective. This novel curriculum standardized the learning experience during the cardiology block and saved time for both teacher and trainees. Baseline assessment helped to focus learning. Involvement of an instructor‐hospitalist added accountability, a resource for answering questions, and encouragement for building self‐study skills. More importantly, a realistic and reproducible test of CE skills documented whether the learning objectives were met, a feature that is becoming increasingly desirable to residency program directors.35 Our study showed that training with virtual patients yields higher mean CE competency scores than standard cardiology ward rotations alone. Further studies may confirm whether this improvement in CE translates into better observations and diagnoses in actual patients.

Acknowledgements

The authors first thank their interns and residents for their willingness to participate in the training and to take the test. They also thank Dr. Lloyd Rucker, program director, University of California Irvine Internal Medicine Residency Program, and Dr. John Michael Criley for his guidance in how to use the test and in designing the intervention. Finally, they thank David Criley for his contribution to developing the Web site.

- Cross‐sectional study of contribution of clinical assessment and simple cardiac investigations to diagnosis of left ventricular systolic dysfunction in patients admitted with acute dyspnea. BMJ. 1997;314:936–940.

- Agency for Health Care Policy and Research. Heart failure: evaluation and care of patients with left‐ventricular systolic dysfunction. Rockville, MD: U.S. Department of Health and Human Services; 1994.

- Value of the cardiovascular physical examination for detecting valvular heart disease in asymptomatic subjects. Am J Cardiol. 1996;77:1327–1331.

- Bedside diagnosis of systolic murmurs. N Engl J Med. 1988;318:1572–1578.

- Gumbiner C. Cost assessment for evaluation of heart murmurs in children. Pediatrics. 1993;91:365–368.

- Estimation of pressure gradients by auscultation: an innovative and accurate physical examination technique. Am Heart J. 2001;141:500–506.

- Competency in cardiac examination skills in medical students, trainees, physicians and faculty: a multicenter study. Arch Intern Med. 2006;166:610–616.

- The teaching and practice of cardiac auscultation during internal medicine and cardiology training. Ann Intern Med. 1993;119:47–54.

- Cardiac auscultatory skills of internal medicine and family practice trainees: a comparison of diagnostic proficiency. JAMA. 1997;278:717–722.

- Detection and correction of house staff error in physical diagnosis. JAMA. 1983;249:1035–1037.

- Is listening through a stethoscope a dying art? Boston Globe. May 25, 2004.

- Salvaging the history, physical examination and doctor‐patient relationship in a technological cardiology environment. J Am Coll Cardiol. 1999;33:892–893.

- Wither the Cardiac Physical Examination? J Am Coll Cardiol. 2006;48:2156–2157.

- New techniques should enhance, not replace, bedside diagnostic skills in cardiology, Part 1. Mod Concepts Cardiovasc Dis. 1990;59:19–24.

- New techniques should enhance, not replace, bedside diagnostic skills in cardiology, Part 2. Mod Concepts Cardiovasc Dis. 1990;59:25–30.

- Time, now, to recover the fun in the physical examination rather than abandon it. Arch Intern Med. 2006;166:603–604.

- On bedside teaching. Ann Intern Med. 1997;126:217–220.

- Cassie JM, Daggett CJ. The role of the attending physician in clinical training. J Med Educ. 1978;53:429–431.

- Teaching the resident in internal medicine: present practices and suggestions for the future. JAMA. 1986;256:725–729.

- Bedside rounds revisited. N Engl J Med. 1997;336:1174–1175.

- Effects of training in direct observation of medical residents' clinical competence: a randomized trial. Ann Intern Med. 2004;140:874–881.

- American Board of Internal Medicine. Clinical Skills/PESEP. Available at: http://www.abim.org/moc/semmed.shtm. Accessed July 9, 2007.

- Accreditation Council for Graduate Medical Education (ACGME). General Competencies and Outcomes Assessment for Designated Institutional Officials. Available at: http://www.acgme.org/outcome/comp/compFull.asp. Accessed July 9, 2007.

- Accreditation Council for Graduate Medical Education. Resident Duty Hours. Available at: http://www.acgme.org/acWebsite/dutyHours/dh_dutyHoursCommonPR.pdf. Accessed July 9, 2007.

- Letter to the Editor re: Cardiac auscultatory skills of physicians‐in‐training: comparison of three English‐speaking countries. Am J Med. 2001;111:505–506.

- Assessing housestaff diagnostic skills using a cardiology patient simulator. Ann Intern Med. 1992;117:751–756.

- A comparison of computer assisted instruction and small group teaching of cardiac auscultation to medical students. Med Educ. 1991;25:389–395.

- Validation of a multimedia measure of cardiac physical examination proficiency. Boston, MA: Association of American Medical Colleges Group on Educational Affairs, Research in Medical Education Summary Presentations; November 2004.

- Validation of a computerized test to assess competence in the cardiac physical examination. Submitted for publication, 2007.

- Blaufuss Medical Multimedia Laboratory. Heart sounds tutorial. Available at: http://www.blaufuss.org. Accessed July 7, 2007.

- 30th Bethesda Conference: The Future of Academic Cardiology. Task force 3: teaching. J Am Coll Cardiol. 1999;33:1120–1127.

- Beyond Heart Sounds: An interactive teaching and skills testing program for cardiac examination. Comput Cardiol. 2000;27:591–594.

- Using virtual patients to improve cardiac examination competency in medical students. Clin Cardiol. In press.

- Accuracy of physician self‐assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296(9):1094–1102.

- Assessing the ACGME general competencies: general considerations and assessment methods. Acad Emerg Med. 2002;9:1278–1288.


Teaching CE is itself a challenge: sight, sound, and touch all contribute to the clinical impression, which makes CE difficult to teach away from the bedside. Unlike the pulmonary examination, in which diagnosis is best made by listening, CE uses auscultation as only one (frequently overemphasized) component.25 Medical knowledge of cardiac anatomy and physiology, visualization of cardiovascular findings, and integration of auditory and visual findings are all components of accurate CE.7 Traditionally, CE was taught through direct experience with patients at the bedside under the supervision of seasoned clinicians. However, exposure to, and learning from, good teaching patients have waned. Audiotapes, heart sound simulators, mannequins, and other computer‐based interventions have been used as surrogates, but none has been widely adopted.26, 27 The best practice for teaching CE is not known.

To help improve CE education during residency, we implemented and evaluated a novel Web‐based CE curriculum that emphasized 4 aspects of CE: cardiovascular anatomy and physiology, auditory skills, visual skills, and integration of auscultatory and visual findings. We hypothesized that this new curriculum would improve learning of CE skills, that residents would retain what they learned, and that the curriculum would teach CE skills better than conventional education.

METHODS

Study Participants, Site, and Design

Internal medicine (IM) and family medicine (FM) interns (R1s, n = 59) from university‐ and community‐based residency programs, respectively, participated in this controlled trial of an educational intervention to teach CE.

The intervention group consisted of 26 IM and 8 FM interns at the beginning of the academic year in June 2003. To establish baseline scores, all interns took a 50‐question multimedia test of CE competency described previously.7, 28, 29 Subsequently, all interns completed a required 4‐week cardiology ward rotation. During this rotation, they were instructed to complete a Web‐based CE tutorial with accompanying worksheet and to attend 3 one‐hour sessions with a hospitalist instructor. Their schedules were arranged to allow for this educational time. During the third meeting with the instructor, interns were tested again to establish posttraining scores. Finally, at the end of the academic year, interns were tested once again to establish retention scores.

The control group consisted of 25 first‐year IM residents who were tested at the end of their academic year in June 2003. Their scores served as historical controls: these interns had just completed their first year of residency with standard ward rotations and no Web‐based CE training. Interns in both groups had many opportunities for one‐on‐one instruction in CE because, for the cardiology rotation, each intern was assigned to a private‐practice cardiology attending. Figure 1 outlines the number of IM and FM interns eligible and the number actually tested at each stage of the study.

Educational Intervention

The CE curriculum consisted of a Web‐based program and 3 tutored sessions. The program used virtual patients (audiovisual recordings of actual patients) combined with computer graphic animations and text to teach cardiac anatomy, hemodynamics, pathophysiology, and visual and auditory findings.30 This multimedia program was interactive and allowed comparison with normal findings or with similar lesions. The content included cardiac findings identified as important by a survey of IM residency program directors,8 as well as ACGME training requirements for IM residents23 and cardiology fellows.31 Table 1 outlines the content of the Web‐based curriculum.

| Table 1. Content of the Web‐based curriculum |
|---|
| 1. Frontal anatomy of heart, lungs, and vessels with: |
| a. Interactive illustrations allowing depiction of individual structures |
| b. Separate cartoons of anatomy of the right heart, left heart, and entire heart |
| c. Correlation with border‐forming regions on chest X‐ray |
| 2. Interactive phases of the cardiac cycle including: |
| a. Phonocardiogram of normal heart sounds (S1, S2) |
| b. ECG recording |
| c. Left heart (aortic, left atrial, and left ventricular) pressures |
| d. Right heart (pulmonary artery, right atrial, and right ventricular) pressures |
| e. Animations of the left heart |
| 3. Physiological splitting of S2 with: |
| a. Phonocardiogram of normal heart sounds |
| b. ECG tracing |
| c. Left heart (aortic, left atrial, and left ventricular) pressures |
| d. Right heart (pulmonary artery, right atrial, and right ventricular) pressures |
| e. Interactive animations of the heart and lungs with respiration |
| 4. Patients with aortic regurgitation (AR) |
| a. Integrating pulse with sounds and murmurs |
| b. Acute severe AR |
| Recognizing Quincke's pulse |
| c. Austin Flint murmur |
| Differentiating it from the pericardial rub |
| d. Hemodynamics of chronic and acute AR and comparisons |
| e. Well‐tolerated AR |
| 5. Patients with aortic stenosis (AS) |
| a. Integrating pulse with sounds and murmurs |
| Comparison with hypertrophic cardiomyopathy (HCM) |
| b. Interactive descriptions of hemodynamics and flow |
| 6. Patients with mitral regurgitation (MR) |
| a. Chronic MR |
| b. Hemodynamics and comparisons of clinical findings for: |
| i. Normal |
| ii. Mitral valve prolapse (MVP) |
| iii. Acute MR |
| iv. Compensated MR |
| c. Acute MR |
| 7. Patients with mitral stenosis (MS) |
| a. Introduction: integrating inspection and auscultation |
| b. Compare sounds: opening snap, split S2, S3 |
| c. Severe MS: interactive comparison of sounds at apex and base |
| d. Hemodynamic effects of heart rate |

This training was designed for the typical conditions of residency training programs: student‐teacher contact time was limited to three 1‐hour sessions; the instructor (J.K.) was an internist hospitalist (trained and facile in the use of the program), not a cardiologist; and self‐paced study was Web‐based to allow access at all hours at the hospital or at home. In the first session, at the beginning of the cardiology block, interns were introduced to the Web site and given a 1‐page homework assignment that corresponded to the Web‐based content (Table 2). During the second session, in the middle of the 4‐week block, a group discussion was held using the Web‐based program: the interns asked questions and, with the hospitalist, reviewed their worksheet answers and the program as needed. During the third session, at the end of the block, questions were reviewed, and the interns took the posttraining test.

| In preparation for the cardiology heart sounds module during the cardiology block at LBMMC, please answer the following questions and bring the completed questionnaire with you. The correct responses to these questions, as well as the underlying mechanisms, can be found in the Heart Sounds Tutorial ( |
|---|
| 1. Which cardiac chamber is farthest from the anterior chest wall? __________ |
| 2. Which cardiac chamber is closest to the left sternal border? ___________ |
| 3. Are the mitral and aortic valves ever closed at the same time? ____________ |
| 4. Why does inspiratory lung inflation delay the pulmonic second sound? ____________ |
| 5. Three or more murmurs of different origin can be heard in aortic regurgitation. What is their timing (within the cardiac cycle) and causation? ____________ |
| 6. How do you elicit Quincke's pulse? ____________ |
| 7. Is arterial pulse pressure greater in acute or chronic aortic regurgitation? ____________ |
| 8. How does the severity of aortic regurgitation correlate with the duration of the early diastolic murmur? ____________ |
| 9. What causes the splitting of the first sound, heard with the stethoscope diaphragm, in a patient with aortic stenosis? ____________ |
| 10. What effect does severity have on the duration of the murmur of aortic stenosis? ____________ |
| 11. Is the murmur of aortic stenosis ever holosystolic? ____________ |
| 12. Is the duration of the murmur of acute mitral regurgitation shorter or longer than that of chronic mitral regurgitation? ____________ |
| 13. What causes the third heart sound (S3) in mitral regurgitation? ____________ |
| 14. Why is the jugular venous a‐wave often prominent in mitral stenosis? ____________ |
| 15. Which heart sound is loudest in mitral stenosis? Why? ____________ |
| 16. What causes the split sound heard in mitral stenosis? ____________ |
| 17. Where (on the precordium) would you hear the murmur of mitral stenosis? ____________ |
| 18. How do postural maneuvers affect the heart sounds and murmur in mitral prolapse? ____________ |
| 19. A 3‐phase friction rub can be confused with the 3‐murmur auditory complex in which valvar lesion? ____________ |
| 20. What are some causes of third heart sounds that do not imply poor ventricular function? ____________ |
| 21. Is a fourth heart sound usually best heard at the base (□Yes □No) or the apex (□Yes □No)? |

Evaluation

To evaluate what the R1 intervention group learned, we tested them at baseline (during internship orientation), after training (at the end of their cardiology rotation), and for retention (at the end of their internship year). The controls were tested once, at the end of their internship year. The evaluation included a brief survey and the previously validated CE Test.7, 28, 29 Test scores carried no academic consequences.

For the survey, we asked participants whether they had had any prior training in CE and how many hours they estimated they had spent learning CE skills with a teacher or in a course during this study. Using a 5‐point Likert scale, they rated their interest in learning CE and their confidence in their own CE skills.

The CE Test is a 50‐question interactive multimedia program that evaluates CE competency using recordings from actual patients. It computes an overall score (maximum 100 points) and scores, expressed as percentages, for 4 subcategories: knowledge of cardiac physiology (interpretation of pressures, sounds, and flow related to cardiac contraction and relaxation), audio skills, visual skills, and integration of audio and visual skills. The same assessment instrument was used for all groups.

Statistical Analysis

Figure 1 lists the tests used to answer the following research questions:

Does the Web‐based curriculum improve CE skills? We compared intervention baseline and posttraining scores using the paired t test for means.

Do interns retain this improvement in skills? We compared intervention baseline and end‐of‐year scores using the paired t test for means.

Does clinical training alone improve CE skills? We compared intervention baseline and control end‐of‐year scores using the t test for means.

Is the Web‐based curriculum better than clinical training alone? We compared intervention and control end‐of‐year scores using the t test for means.

We used the paired t test when baseline and posttraining scores of the same intern could be matched. To test for differences in CE competency between the intervention and control groups, we compared mean scores using the independent Student t test for equal or unequal group variances, as appropriate. Because the interval from posttraining to end‐of‐year testing was variable, longer intervals could have allowed learning to decay. We therefore computed the Spearman correlation coefficient between the number of follow‐up months and the change in score from post‐Web training to the end of the year. Pearson correlation coefficients were computed to examine associations of survey variables with test scores. The 2‐sided nominal P < .05 criterion was used to determine statistical significance. Analyses were performed with SPSS statistical software, version 13.0 (SPSS, Inc., Chicago, IL).
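The authors ran these analyses in SPSS. The following is a minimal Python sketch using only the standard library. It is not the authors' code. It writes out two of the statistics named above:

- the paired t statistic for matched baseline and posttraining scores;
- Spearman's ρ, computed as the Pearson correlation of average ranks so that tied scores share a rank.

The function names are illustrative. P values require the t distribution and would come from a statistics package, for example SciPy's `scipy.stats.ttest_rel` and `scipy.stats.spearmanr`.

```python
import math
import statistics


def paired_t(before, after):
    """Paired t statistic and degrees of freedom for matched scores.

    t = mean(d) / (sd(d) / sqrt(n)), where d = after - before for each
    matched participant and sd uses the n - 1 (sample) denominator.
    """
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.fmean(diffs) / se, n - 1


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the average ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))
```

Called as `paired_t(baseline, posttraining)` with one entry per matched intern, the first function returns t and n - 1 degrees of freedom. `spearman_rho(months, score_change)` gives the kind of rank correlation used in the retention analysis. The per‐intern data are not published, so no study values are reproduced here.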

The institutional review board approved this study as exempt research conducted in established educational settings and involving normal educational practices.

RESULTS

Research Question 1

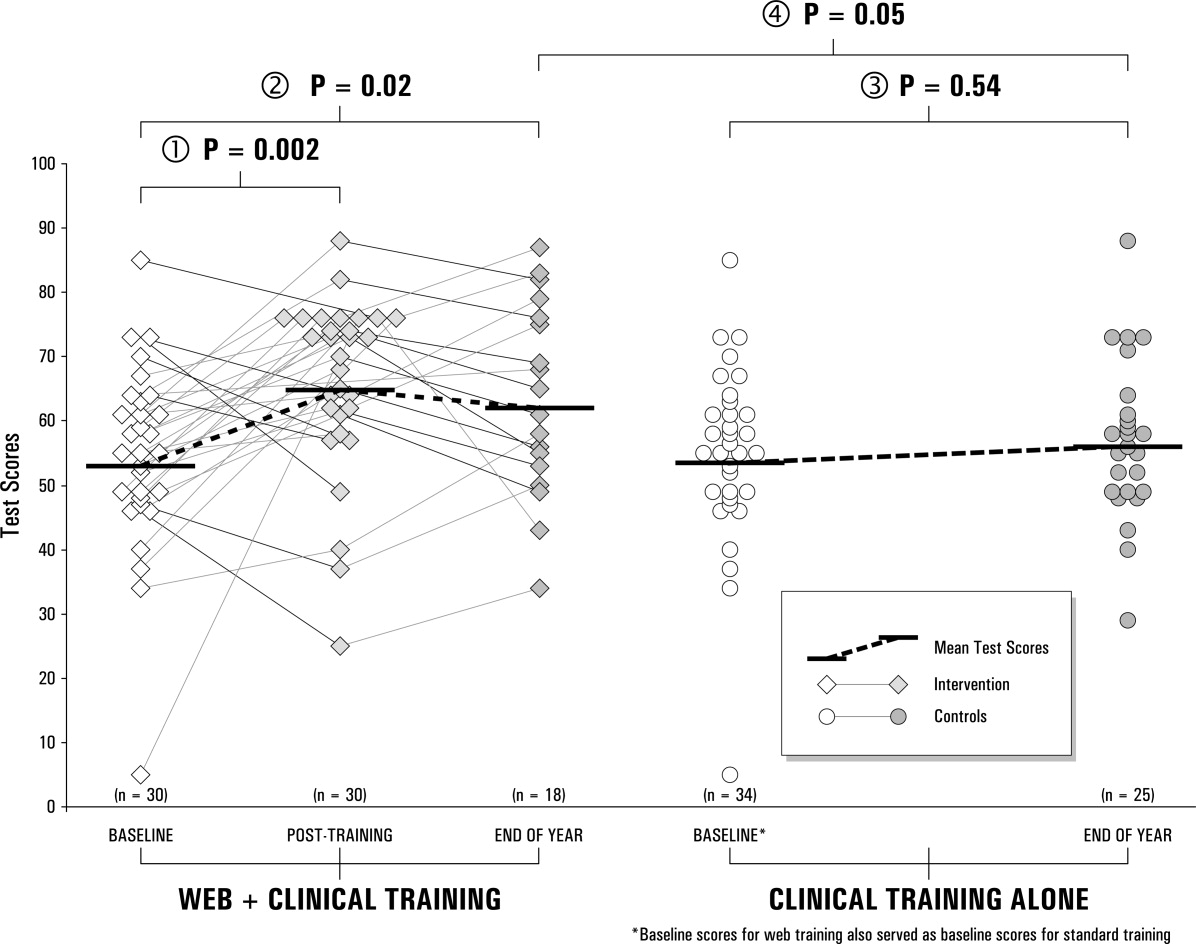

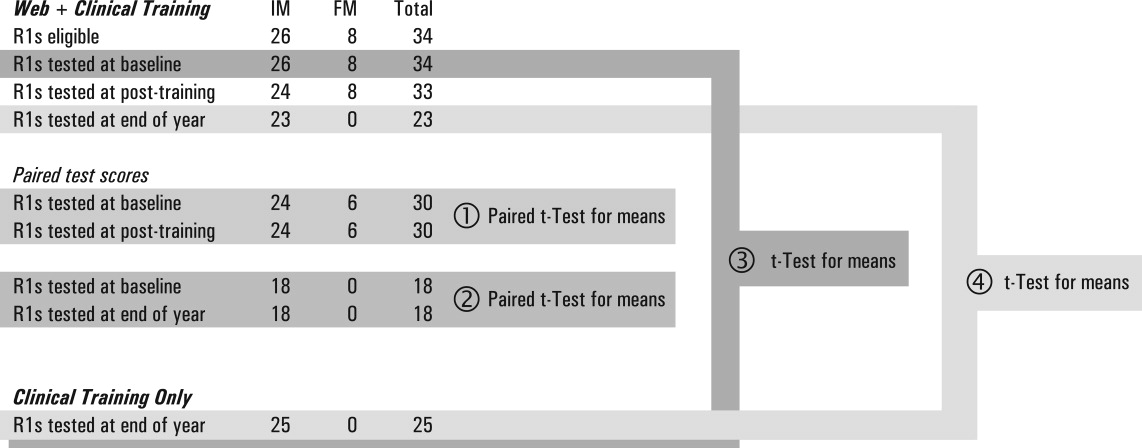

Individual baseline and posttraining CE Test scores for the intervention group are plotted in the first 2 columns of Figure 2. The posttraining mean score improved from baseline (66.0 ± 5.2 vs. 54.2 ± 5.4, P = .002). The knowledge, audio, and visual subcategories of CE competence showed similar improvements (P < .001; Table 3). The score for the integration of audiovisual skills subcategory was higher at baseline than the other subcategory scores and remained unchanged.

| Question 1. Does the Web‐based curriculum improve CE skills? | | | | | |
|---|---|---|---|---|---|
| Subcategory | Intervention baseline (n = 30): mean, % (SD) | 95% CI | Intervention posttraining (n = 30): mean, % (SD) | 95% CI | P value (paired t test) |
| Knowledge | 55.9% (14.7%) | 50.4%‐61.4% | 68.9% (15.3%) | 63.2%‐74.6% | <.001 |
| Audio | 69.6% (17.4%) | 63.1%‐76.0% | 83.6% (10.3%) | 79.7%‐87.4% | <.001 |
| Visual | 56.7% (21.4%) | 48.7%‐64.7% | 71.9% (16.6%) | 65.7%‐78.1% | <.001 |
| Integration | 77.5% (14.5%) | 72.1%‐83.0% | 76.8% (12.8%) | 72.1%‐81.6% | .85 |
| Overall score | 54.2% (14.5%) | 48.8%‐59.6% | 66.0% (13.6%) | 60.9%‐71.2% | .002 |

| Question 2. Do interns retain this improvement? | | | | | |
|---|---|---|---|---|---|
| Subcategory | Intervention baseline (n = 18): mean, % (SD) | 95% CI | Intervention end of year (n = 18): mean, % (SD) | 95% CI | P value (paired t test) |
| Knowledge | 58.6% (15.2%) | 51.0%‐66.2% | 67.6% (16.7%) | 60.4%‐74.9% | .05 |
| Audio | 67.4% (17.1%) | 58.9%‐75.9% | 82.3% (10.4%) | 77.8%‐86.8% | .004 |
| Visual | 51.6% (22.0%) | 40.7%‐62.5% | 65.2% (20.6%) | 56.3%‐74.1% | .13 |
| Integration | 76.0% (15.8%) | 68.2%‐83.8% | 77.1% (13.2%) | 71.4%‐82.8% | .95 |
| Overall score | 51.8% (15.0%) | 44.3%‐59.3% | 63.5% (14.9%) | 56.1%‐70.9% | .02 |

| Question 3. Does clinical training alone improve CE skills? | | | | | |
|---|---|---|---|---|---|
| Subcategory | Intervention baseline* (n = 34): mean, % (SD) | 95% CI | Control end of year (n = 25): mean, % (SD) | 95% CI | P value (t test) |
| Knowledge | 56.5% (14.6%) | 51.4%‐61.6% | 57.8% (15.4%) | 51.4%‐64.1% | .75 |
| Audio | 70.0% (16.9%) | 64.1%‐75.9% | 73.3% (13.6%) | 67.7%‐79.0% | .42 |
| Visual | 57.6% (20.9%) | 50.3%‐64.9% | 66.9% (15.3%) | 60.6%‐73.2% | .07 |
| Integration | 77.8% (13.8%) | 72.9%‐82.5% | 76.0% (12.2%) | 71.0%‐81.0% | .62 |
| Overall score | 54.7% (13.7%) | 49.9%‐59.5% | 56.8% (12.4%) | 51.7%‐61.9% | .54 |

| Question 4. Is the Web‐based curriculum better than clinical training alone? | | | | | |
|---|---|---|---|---|---|
| Subcategory | Intervention end of year (n = 23): mean, % (SD) | 95% CI | Control end of year (n = 25): mean, % (SD) | 95% CI | P value (t test) |
| Knowledge | 67.6% (16.7%) | 60.4%‐74.8% | 57.8% (15.4%) | 51.4%‐64.1% | .04 |
| Audio | 82.3% (10.4%) | 77.8%‐86.8% | 73.3% (13.6%) | 67.7%‐79.0% | .01 |
| Visual | 65.2% (20.6%) | 56.3%‐74.1% | 66.9% (15.3%) | 60.6%‐73.2% | .75 |
| Integration | 77.1% (13.2%) | 71.4%‐82.8% | 76.0% (12.2%) | 71.0%‐81.0% | .76 |
| Overall score | 64.7% (14.5%) | 58.4%‐71.0% | 56.8% (12.4%) | 51.7%‐61.9% | .05 |

Research Question 2

End‐of‐year scores are plotted in the third column of Figure 2. Overall scores remained higher at the end of the internship year than at baseline (63.5 ± 7.4, P = .02). Improvements in knowledge and audio skills were also retained (P ≤ .05; Table 3). Visual skills (inspection of neck and precordium), however, declined steeply, from 71.9 to 65.2, a score indistinguishable from that of the controls (66.9, P = .75). The interval from posttraining to end‐of‐year testing varied, with a mean of 21 weeks. The Spearman correlation coefficient between follow‐up months and the change in score from post‐Web training to the end of the year was not significant (ρ = 0.12, P = .64). To corroborate the correlational analysis, a paired t test was computed for the 18 interns matched at post‐Web training and retention testing. The result was not significant (P = .61), suggesting that, without further training, the intervention interns' scores did not change over time.

Research Question 3

Baseline scores for the control group (the prior year's interns) were not available, because those interns had just completed their academic year when the study began. The baseline scores of the present year's interns were therefore used as a surrogate. Comparing these 2 groups (intervention at baseline and controls at the end of the year), we observed no difference between mean scores (54.7 ± 4.8 vs. 56.8 ± 5.1, P = .54). The controls' end‐of‐year visual skills scores were slightly higher than the intervention group's baseline scores, but not significantly so (P = .07). There were also no differences in knowledge, audio skills, or integration of audio and visual skills.
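The ± values in these Results (for example, 54.7 ± 4.8 vs. 56.8 ± 5.1) appear to be half‐widths of the 95% confidence intervals in Table 3, not standard deviations. The sketch below assumes t‐based intervals: mean ± t(0.975, n - 1) × SD/√n. The critical values are copied from a standard t table rather than computed, and the name `ci95` is illustrative. Under that assumption, the sketch reproduces several tabulated intervals to one decimal place.

```python
import math

# Two-sided 95% critical values t(0.975, df) from a standard t table,
# for the group sizes that appear in Table 3 (df = n - 1).
T_975 = {17: 2.1098, 22: 2.0739, 24: 2.0639, 29: 2.0452, 33: 2.0345}


def ci95(mean, sd, n):
    """t-based 95% CI for a mean: mean +/- t(0.975, n - 1) * sd / sqrt(n)."""
    half_width = T_975[n - 1] * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


# (label, mean %, SD %, n) taken from Table 3.
rows = [
    ("Q1 knowledge, intervention baseline", 55.9, 14.7, 30),
    ("Q2 overall, intervention end of year", 63.5, 14.9, 18),
    ("Q3 overall, intervention baseline", 54.7, 13.7, 34),
    ("Q4 overall, intervention end of year", 64.7, 14.5, 23),
    ("Q4 overall, control end of year", 56.8, 12.4, 25),
]
for label, mean, sd, n in rows:
    lo, hi = ci95(mean, sd, n)
    print(f"{label}: {lo:.1f}%-{hi:.1f}% (half-width {hi - mean:.1f})")
```

For instance, 54.7% (SD 13.7%, n = 34) gives 49.9%‐59.5%, and 56.8% (SD 12.4%, n = 25) gives 51.7%‐61.9%. Both match Table 3, and their half‐widths round to the 4.8 and 5.1 reported in the text.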

Research Question 4

When we compared overall scores at the end of the year, the mean score for the intervention group was higher than that of the controls (64.7 ± 6.3 vs. 56.8 ± 5.1, P = .05). Likewise, knowledge and audio scores were higher in the intervention group (P ≤ .04), but visual and integration scores were not.
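The reported P values can be roughly checked from the summary statistics alone. This is not a reanalysis; it does two things:

- It plugs the Research Question 4 overall scores from Table 3 (intervention 64.7%, SD 14.5%, n = 23; control 56.8%, SD 12.4%, n = 25) into textbook pooled‐variance (Student) and unequal‐variance (Welch) t tests. Both are shown because the Methods say either was used as appropriate.
- It applies a common t approximation for the significance of Spearman's ρ to the Research Question 2 value (ρ = 0.12), assuming n = 18 matched interns.

Two‐sided P values come from Simpson's‐rule integration of the t density, so only the Python standard library is needed. All function names are illustrative.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t, df, steps=2000):
    """P(|T| >= |t|), integrating the density over [0, |t|] with Simpson's rule.

    steps must be even; 2000 is far more than needed for moderate |t|.
    """
    t = abs(t)
    h = t / steps
    area = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area *= h / 3
    return 1.0 - 2.0 * area  # the density is symmetric about 0


def student_t(m1, s1, n1, m2, s2, n2):
    """Independent-samples t test with a pooled (equal-variance) SD."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    t = (m1 - m2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df, two_sided_p(t, df)


def welch_t(m1, s1, n1, m2, s2, n2):
    """Unequal-variance (Welch) t test with Welch-Satterthwaite df."""
    v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    t = (m1 - m2) / math.sqrt(v1 + v2)
    return t, df, two_sided_p(t, df)


def spearman_p(rho, n):
    """Approximate P for Spearman's rho via t = rho * sqrt((n - 2) / (1 - rho^2))."""
    t = rho * math.sqrt((n - 2) / (1 - rho ** 2))
    return two_sided_p(t, n - 2)


# Research Question 4, overall score: intervention vs. control (Table 3).
print("Student: t = %.2f, df = %d, P = %.3f" % student_t(64.7, 14.5, 23, 56.8, 12.4, 25))
print("Welch:   t = %.2f, df = %.1f, P = %.3f" % welch_t(64.7, 14.5, 23, 56.8, 12.4, 25))
# Research Question 2: rho = 0.12, assuming the 18 matched interns.
print("Spearman: P = %.3f" % spearman_p(0.12, 18))
```

With these inputs, the pooled test gives t ≈ 2.03 on 46 degrees of freedom and a two‐sided P just under .05. The Spearman approximation gives P ≈ .635. Both are consistent with the reported P = .05 and P = .64 after rounding.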

Survey

The vast majority of both the intervention (88%) and control (83%) groups reported some prior training in CE. The intervention group reported spending a mean total of 5.1 ± 2.0 hours preparing (range 2‐25 hours), including the three 1‐hour sessions with the instructor. The control group reported spending almost twice as many hours in CE instruction: a mean of 10.1 ± 4.8 hours (range 2‐30 hours, P = .05). On a Likert scale of 1‐5 (from no interest to high interest), both the intervention and control groups expressed high interest in learning CE (4.7 ± 0.2 and 4.4 ± 0.5, respectively; P = .3). Despite significant differences in CE Test scores, the intervention and control groups reported the same confidence in their abilities (2.5 ± 0.3, P = .82). Neither time spent learning nor interest level correlated with overall CE Test score.

DISCUSSION

To improve the CE competence of residents, we designed and implemented a Web‐based CE curriculum using virtual patients (VPs), which standardized the interns' exposure to a spectrum of medical conditions and gave them flexibility in choosing when and where the training occurred.30 In this controlled educational intervention, interns' mean CE competency scores improved significantly immediately after the Web‐based training, and these improvements were retained at the end of the academic year. The Web‐based curriculum also improved scores in 3 of the 4 subcategories; only integration of audio and visual skills did not change. Although gains in cardiac physiology knowledge and audio skills were retained, visual skills declined steeply. This decline suggests that visual skills may be more labile and could benefit from more regular reinforcement. It may also be that old habits die hard: in an earlier study of baseline CE competency, we observed that most participants listened with their eyes closed or averted, actively tuning out the visual timing reference that would have helped them answer the question.7

Comparing control and intervention retention scores suggests that Web‐based training is more effective than traditional training in CE. The interns spent a mean of 5 hours learning, including 3 hours with the hospitalist‐instructor, 30 minutes of which were spent taking the test. Earlier studies32, 33 showed improved CE skills with 12‐20 hours of instructor‐led tutorials. The shorter instruction time in this study translated into about half the magnitude of improvement seen in third‐year medical students, whose mean score improved from 58.7 ± 6.7 to 73.4 ± 3.8. Moreover, the students in the earlier study continued to improve their CE competency without further intervention, whereas the test scores of interns in this study appeared to plateau. Five hours per year is therefore likely a lower bound for successful Web‐based CE training, and we do not recommend less than 5 hours per year of CE training with a Web‐based curriculum (including self‐study). Ideally, sessions should be scheduled in each year of residency to build a critical mass of learning that could sustain improvement beyond residency training.

When surveyed, interns in both the intervention and control groups were very interested in improving their skills but were not confident in their abilities. Confidence did not correlate with ability, which suggests that interns (and possibly others)34 do not have a reliable, intrinsic sense of their CE skills. Curiously, the control group reported spending almost twice as many hours learning CE as the intervention group. It is unlikely that the intervention group received less traditional instruction than the control group. It is more likely that, in estimating the hours spent learning CE, the intervention group counted only formal instruction plus self‐study hours spent on cardiac examination. The controls, by contrast, made an end‐of‐year estimate of all the instruction they had received over the previous 12 months. Because they received no formal instruction in cardiac examination, that estimate included hours of traditional bedside instruction with attending physicians. The number of hours reported by the controls could be accurate or could be an overestimate. If accurate, the greater number of hours did not translate into superior performance on the CE Test. If an overestimate, hindsight bias may have inflated the reported hours.

Some may argue that VPs are a poor substitute for actual patients. With few reservations, we are inclined to agree. The best training for CE is at the bedside with a clinical master and the luxury of time. Today, however, teaching has shifted from the bedside to conference rooms and even corridors.17, 18 Consequently, opportunities to practice hands‐on CE have become rare. Even when physical examination is performed at the bedside, the quality of instruction depends on the teacher's interest and abilities and on the patients available. Our intent was to implement a standardized curriculum that ensures CE is practiced and tested regularly, so that a generation of physicians does not enter practice without ever developing these skills.

A hospitalist, not a cardiologist, led the intervention. Because cardiac conditions are involved in more than 40% of admissions to the medical wards, a hospitalist should be able to use the physical examination to determine whether further tests are necessary or whether a cardiologist should be consulted. In academic medicine, most teaching of cardiac examination is now done not by cardiologists but by internists.31 Hospitalists often serve as attending physicians for medical students and house officers, and they can and should play a larger role in bedside teaching. A Web‐based curriculum that can be accessed at all hours also suits residents on inpatient rotations.

Our CE curriculum was designed for the typical conditions of today's residency training programs, in which education must be balanced against the demands of patient care and limits on resident duty hours.16, 24 Student‐faculty contact time was limited to three 1‐hour sessions, reflecting the time constraints of most training programs.13, 16, 19 The program was incorporated into the regularly scheduled cardiology block throughout the academic year. Residents therefore received broad exposure to cardiac clinical presentations at a time when bedside encounters with important cardiac physical findings were most likely. The goal was not to reduce or eliminate bedside teaching but to make the limited time that remained more productive. The fidelity of the training to actual bedside encounters was high: unlike audio‐only recordings of heart sounds, the VPs in this study simultaneously presented visible pulsations in the neck or precordium, training residents to use these visual timing cues while auscultating. Furthermore, the Web‐based multimedia technology allowed direct comparison of a patient's bedside findings with case‐matched laboratory studies, enhanced by explanatory animations and tutorials. Working within the constraints of residency training programs, we were able to standardize trainees' cardiac clinical exposure and to test for improvement in and retention of CE skills.

Several limitations should be considered. Although VPs mimic bedside patient encounters, we do not know whether improvements from this Web‐based curriculum translate into improvements in patient care. Ideally, the study would have been conducted at more than 1 site to test whether our positive results can be replicated elsewhere. Mitigating these drawbacks are the real‐world conditions of our study: the teaching hospital that conducted the study was not involved in developing the Web site, and an internist‐hospitalist, not a cardiologist, led the instruction. In theory, baseline testing itself may have boosted the intervention group's posttraining scores simply by motivating the interns to improve. However, only an overall score was reported to interns; they were not told which questions they missed or in which subcategory they were most deficient. Mere exposure to the test could explain the interns' improvement. However, the test has been shown to have test‐retest reliability over a 4‐ to 6‐week period,28, 29 and scores do not improve with prior exposure to the test. Because the intervention group knew they would be retested, we cannot exclude the possibility that they were more motivated to do well on the test by the end of the year. Finally, the ward training received by the 2 groups of interns may have differed. Control interns' scores may have been lower because of differences in the patients admitted, the topics discussed, or the teaching attendings assigned during the academic year. However, neither the patient population nor the group of attending physicians changed radically from the previous year, so we believe differences in the quality of patient encounters between the 2 groups were minor.

In conclusion, the Web‐based curriculum met the requirements for effective education: it was self‐directed, interactive, relevant, and cost effective. This novel curriculum standardized the learning experience during the cardiology block and saved time for both teacher and trainees. Baseline assessment helped to focus learning. Involvement of an instructor‐hospitalist added accountability, a resource for answering questions, and encouragement for building self‐study skills. More importantly, a realistic and reproducible test of CE skills documented whether the learning objectives were met, a feature that is becoming increasingly desirable to residency program directors.35 Our study showed that training with virtual patients yielded higher mean CE competency scores than the standard cardiology ward rotation alone. Further studies may confirm whether this improvement in CE translates into more appropriate observations and diagnoses in actual patients.

Acknowledgements

The authors first thank their interns and residents for their willingness to participate in the training and to take the test. They also thank Dr. Lloyd Rucker, program director, University of California, Irvine, Internal Medicine Residency Program, and Dr. John Michael Criley for his guidance on how to use the test and on designing the intervention. Finally, they thank David Criley for his contribution to developing the Web site.

CE training is itself a challenge: sight, sound, and touch all contribute to the clinical impression. For this reason, CE is difficult to teach away from the bedside. Unlike pulmonary examination, for which a diagnosis is best made by listening, cardiac auscultation is only one (frequently overemphasized) aspect of CE.25 Medical knowledge of cardiac anatomy and physiology, visualization of cardiovascular findings, and integration of auditory and visual findings are all components of accurate CE.7 Traditionally, CE was taught through direct experience with patients at the bedside under the supervision of seasoned clinicians. However, exposure to, and learning from, good teaching patients have waned. Audiotapes, heart sound simulators, mannequins, and other computer‐based interventions have been used as surrogates, but none has been widely adopted.26, 27 The best practice for teaching CE is not known.

To help improve CE education during residency, we implemented and evaluated a novel Web‐based CE curriculum that emphasized 4 aspects of CE: cardiovascular anatomy and physiology, auditory skills, visual skills, and integration of auscultatory and visual findings. We hypothesized that this new curriculum would improve learning of CE skills, that residents would retain what they learned, and that this curriculum would be more effective than conventional education in teaching CE skills.

METHODS

Study Participants, Site, and Design

Internal medicine (IM) and family medicine (FM) interns (R1s, n = 59) from university‐ and community‐based residency programs, respectively, participated in this controlled trial of an educational intervention to teach CE.

The intervention group consisted of 26 IM and 8 FM interns who began the academic year in June 2003. To establish baseline scores, all interns took a 50‐question multimedia test of CE competency described previously.7, 28, 29 Subsequently, all interns completed a required 4‐week cardiology ward rotation. During this rotation, they were instructed to complete a Web‐based CE tutorial with an accompanying worksheet and to attend three 1‐hour sessions with a hospitalist instructor. Their schedules were arranged to allow for this educational time. During the third meeting with the instructor, interns were tested again to establish posttraining scores. Finally, at the end of the academic year, interns were tested once more to establish retention scores.

The control group consisted of 25 first‐year IM residents who were tested at the end of their academic year in June 2003. These test scores served as historical controls: these residents had just completed their first year of residency and had received the standard ward rotation without incorporated Web‐based training in CE. Interns in both groups had many opportunities for one‐on‐one instruction in CE because each intern was assigned to a private‐practice cardiology attending for the cardiology rotation. Figure 1 outlines the number of IM and FM interns eligible and the number actually tested at each stage of the study.
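
As a simple consistency check, the group sizes reported above add up to the cohort of 59 interns. This covers enrollment only. The number actually tested at each stage is given in Figure 1. The symbols below are labels introduced here for illustration and do not appear in the original study.

```latex
\begin{aligned}
n_{\text{int}} &= n_{\text{IM}} + n_{\text{FM}} = 26 + 8 = 34 \\
N &= n_{\text{int}} + n_{\text{ctrl}} = 34 + 25 = 59
\end{aligned}
```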

Educational Intervention

The CE curriculum consisted of a Web‐based program and 3 tutored sessions. The program used virtual patients (audiovisual recordings of actual patients) combined with computer graphic animations and text to teach cardiac anatomy, hemodynamics, pathophysiology, and visual and auditory findings.30 This multimedia program was interactive and allowed comparisons to normal findings or to similar lesions. The content included cardiac findings identified as important by a survey of IM residency program directors,8 as well as ACGME training requirements for IM residents23 and cardiology fellows.31 Table 1 outlines the content of the Web‐based curriculum.

| Content of the Web‐based curriculum |
|---|
| 1. Frontal anatomy of heart, lungs, and vessels with: |
| a. Interactive illustrations allowing depiction of individual structures |
| b. Separate cartoons of anatomy of the right heart, left heart, and entire heart |
| c. Correlation with borders forming regions on chest X‐ray |
| 2. Interactive phases of the cardiac cycle including: |
| a. Phonocardiogram of normal heart sounds (S1, S2) |
| b. ECG recording |
| c. Left heart (aortic, left atrial, and left ventricular) pressures |
| d. Right heart (pulmonary artery, right atrial, and right ventricular) pressures |
| e. Animations of the left heart |
| 3. Physiological splitting of S2 with: |
| a. Phonocardiogram of normal heart sounds |
| b. ECG tracing |
| c. Left heart (aortic, left atrial, and left ventricular) pressures |
| d. Right heart (pulmonary artery, right atrial, and right ventricular) pressures |
| e. Interactive animations of the heart and lungs with respiration |
| 4. Patients with aortic regurgitation (AR) |
| a. Integrating pulse with sounds and murmurs |
| b. Acute severe AR: recognizing Quincke's pulse |
| c. Austin Flint murmur: differentiating it from the pericardial rub |
| d. Hemodynamics of chronic and acute AR and comparisons |
| e. Well‐tolerated AR |
| 5. Patients with aortic stenosis (AS) |
| a. Integrating pulse with sounds and murmurs; comparison with hypertrophic cardiomyopathy (HCM) |
| b. Interactive descriptions of hemodynamics and flow |
| 6. Patients with mitral regurgitation (MR) |
| a. Chronic MR |
| b. Hemodynamics and comparisons of clinical findings for: |
| i. Normal |
| ii. Mitral valve prolapse (MVP) |
| iii. Acute MR |
| iv. Compensated MR |
| c. Acute MR |
| 7. Patients with mitral stenosis (MS) |
| a. Introduction: integrating inspection and auscultation |
| b. Compare sounds: opening snap, split S2, S3 |
| c. Severe MS: interactive comparison of sounds at apex and base |
| d. Hemodynamic effects of heart rate |

This training was designed for the typical conditions of residency training programs: student‐teacher contact time was limited to three 1‐hour sessions; the instructor (J.K.) was an internist hospitalist (trained and facile in the use of the program), not a cardiologist; and self‐paced study was Web‐based to allow access at all hours, at the hospital or at home. In the first session, at the beginning of the cardiology block, interns were introduced to the Web site and given a 1‐page homework assignment that corresponded to the Web‐based content (Table 2). During the second session, in the middle of the 4‐week block, a group discussion was held using the Web‐based program, in which the interns asked questions and reviewed their worksheet answers, and the program as needed, with the hospitalist. During the third session, at the end of the block, questions were reviewed, and the interns took the posttraining test.

| In preparation for the cardiology heart sounds module during the cardiology block at LBMMC, please answer the following questions and bring the completed questionnaire with you. The correct responses to these questions as well as the underlying mechanisms can be found in the Heart Sounds Tutorial ( |
|---|
| 1. Which cardiac chamber is farthest from the anterior chest wall? __________ |
| 2. Which cardiac chamber is closest to the left sternal border? ___________ |
| 3. Are the mitral and aortic valves ever closed at the same time? ____________ |
| 4. Why does inspiratory lung inflation delay the pulmonic second sound? ____________ |
| 5. Three or more murmurs of different origin can be heard in aortic regurgitation. What is their timing (within the cardiac cycle) and causation? ____________ |
| 6. How do you elicit Quincke's pulse? ____________ |
| 7. Is arterial pulse pressure greater in acute or chronic aortic regurgitation? ____________ |
| 8. How does the severity of aortic regurgitation correlate with the duration of the early diastolic murmur? ____________ |
| 9. What causes splitting of the first sound heard with the stethoscope diaphragm in a patient with aortic stenosis? ____________ |
| 10. What effect does severity have on the duration of the murmur of aortic stenosis? ____________ |
| 11. Is the murmur of aortic stenosis ever holosystolic? ____________ |
| 12. Is the duration of the murmur of acute mitral regurgitation shorter or longer than that of chronic mitral regurgitation? ____________ |
| 13. What causes the third heart sound (S3) in mitral regurgitation? ____________ |
| 14. Why is the jugular venous a‐wave often prominent in mitral stenosis? ____________ |
| 15. Which heart sound is loudest in mitral stenosis? Why? ____________ |
| 16. What causes the split sound heard in mitral stenosis? ____________ |
| 17. Where (on the precordium) would you hear the murmur of mitral stenosis? ____________ |
| 18. How do postural maneuvers affect the heart sounds and murmur in mitral prolapse? ____________ |
| 19. A 3‐phase friction rub can be confused with the 3‐murmur auditory complex in which valvar lesion? ____________ |
| 20. What are some causes of third heart sounds that do not imply poor ventricular function? ____________ |
| 21. Is a fourth heart sound usually best heard at the base (□Yes □No) or the apex (□Yes □No)? |

Evaluation

To evaluate what the R1 intervention group learned, we tested them at baseline (during internship orientation), after training (at the end of their cardiology rotation), and for retention (at the end of their internship year). To evaluate what the controls learned, we tested them at the end of their internship year. The evaluation included a brief survey and the previously validated CE Test.7, 28, 29 Test scores carried no academic consequences.