The psychiatric clinic of the future

Despite the tremendous advances in psychiatry in recent years, the current clinical practice of psychiatry continues to rely on data from intermittent assessments along with subjective and unquantifiable accounts from patients and caregivers. Furthermore, there continue to be significant diagnostic variations among practitioners. Fortunately, technology to address these issues appears to be on the horizon.

How might the psychiatric clinic of the future look? What changes could we envision? These 4 critical factors may soon bring about dynamic changes in the way we practice psychiatry:

- precision psychiatry

- digital psychiatry

- technology-enhanced psychotherapy

- electronic health record (EHR) reforms.

In this article, we review how advances in each of these areas might lead to improved care for our patients.

Precision psychiatry

Precision psychiatry takes into account each patient’s variability in genes, environment, and lifestyle to determine individualized treatment and prevention strategies. It relies on pharmacogenomic testing as the primary tool. Pharmacogenomics is the study of variability in drug response due to heredity.

Emerging data on the clinical utility and cost-effectiveness of pharmacogenomic testing are encouraging, but its routine use is not well supported by current evidence.2 One limit to using pharmacogenomic testing is that many genes simultaneously exert an effect on the structure and function of neurons and associated pathophysiology. According to the International Society of Psychiatric Genetics, no single genetic variant is sufficient to cause psychiatric disorders such as depression, bipolar disorder, substance dependence, or schizophrenia. This limits the possibility of using genetic tests to establish a diagnosis.3

In the future, better algorithms could promote more accurate pharmacogenomics profiles for individual patients, which could influence treatment.

Precision psychiatry could lead to:

- identification of novel targets for new medications

- pharmacogenetic profiling of the patient to predict disease susceptibility and medication response

- personalized therapy: the right drug at the right dose for the right patient

- improved efficacy and fewer adverse medication reactions.

Digital psychiatry

Integrating computer-based technology into psychiatric practice has given birth to a new frontier that could be called digital psychiatry. This might encompass the following:

- telepsychiatry

- social media with a mental health focus

- web-based applications/devices

- artificial intelligence (AI).

Telepsychiatry. Videoconferencing is the most widely used form of telepsychiatry. It provides patients with easier access to mental health treatment.4 Telepsychiatry has the potential to match patients and clinicians with similar cultural backgrounds, thus minimizing cultural gaps and misunderstandings. Most importantly, it is comparable to face-to-face interviews in terms of the reliability of assessment and treatment outcomes.5

Telepsychiatry might be particularly helpful for patients with restricted mobility, such as those who live in remote areas, nursing homes, or correctional facilities. In correctional settings, transferring prisoners is expensive and carries the risk of escape. In a small study (N = 86) conducted in Hong Kong, Cheng et al6 found that using videoconferencing to conduct clinical interviews of inmates was cost-efficient and scored high in terms of patient acceptability.

Social media. Social media could be a powerful platform for early detection of mental illness. Staying connected with patients on social media could allow psychiatrists to be more aware of their patients’ mood fluctuations, which might lead to more timely assessments. Physicians could be automatically notified about changes in their patients’ social media activity that indicate changes in mental state, which could prompt immediate intervention and treatment. On the other hand, such use of social media could blur professional boundaries. Psychiatrists also could use social media to promote awareness of mental health and educate the public on ways to improve or maintain their mental well-being.7

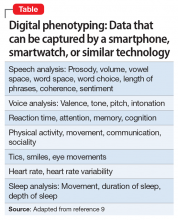

Web-based applications/devices. Real-time monitoring through applications or internet-based smart devices creates a new avenue for patients to receive personalized assessments, treatment, and intervention.8 Smartwatches with internet connectivity may offer a glimpse of the wearer’s sleep architecture and duration, thus providing real-time data on patients who have insomnia. We can now passively collect objective data from devices, such as smartphones and laptops, to phenotype an individual’s mood and mental state, a process called digital phenotyping. The Table9 lists examples of the types of mental health–related metrics that can be captured by smartphones, smartwatches, and similar technology. Information from these devices can be accumulated to create a database that can be used to predict symptoms.10 For example, the way people use a smartphone’s keyboard, including the latency between pressing the space bar and typing the next character, can be used to generate variables for analysis. This type of information is being studied for use in screening for depression and passively assessing mood in real time.11
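As a rough illustration of how keyboard use might be turned into variables, the sketch below derives a few timing features from a log of keystrokes. It is not taken from any of the cited studies: the event format (a time-ordered list of timestamp and key pairs), the function name `keystroke_features`, and the choice of summary statistics are all assumptions made for this example.

```python
from statistics import mean, median


def keystroke_features(events):
    """Summarize typing latencies from a time-ordered keystroke log.

    `events` is a hypothetical format: a list of (timestamp_ms, key) tuples.
    """
    all_gaps = []            # latency between every pair of consecutive keys
    space_to_char_gaps = []  # latency from a space to the next non-space key
    for (t_prev, k_prev), (t_next, k_next) in zip(events, events[1:]):
        gap = t_next - t_prev
        all_gaps.append(gap)
        if k_prev == " " and k_next != " ":
            space_to_char_gaps.append(gap)
    return {
        "n_keystrokes": len(events),
        "mean_gap_ms": mean(all_gaps) if all_gaps else None,
        "median_gap_ms": median(all_gaps) if all_gaps else None,
        "mean_space_to_char_ms": (
            mean(space_to_char_gaps) if space_to_char_gaps else None
        ),
    }


# Made-up sample log, for illustration only.
sample = [(0, "h"), (120, "i"), (300, " "), (700, "y"), (820, "o")]
features = keystroke_features(sample)
print(features)
```

Only the timing and the space/non-space distinction are used here, not the typed text itself, which is one way such passive monitoring could limit how much content it collects.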

Artificial intelligence, the development of computer systems able to perform tasks that normally require human intelligence, is being increasingly used in psychiatry. Some studies have suggested AI can be used to identify patients’ risk of suicide12-15 or psychosis.16,17 Kalanderian and Nasrallah18 reviewed several of these studies.

Other researchers have found clinical uses for machine learning, a subset of AI that uses methods to automatically detect patterns and make predictions based on those patterns. In one study, a machine learning analysis of functional MRI scans was able to identify 4 distinct subtypes of depression.19 In another study, a machine learning model was able to predict with 60% accuracy which patients with depression would respond to antidepressants.20

In the future, AI might be used to change mental health classification systems. Because many mental health disorders share similar symptom clusters, machine learning can help to identify associations between symptoms, behavior, brain function, and real-world function across different diagnoses, potentially affecting how we will classify mental disorders.21

Technology-enhanced psychotherapy

In the future, it might be common for psychotherapy to be provided by a computer, or “virtual therapist.” Several studies have evaluated the use of technology-enhanced psychotherapy.

Lucas et al22 investigated patients’ interactions with a virtual therapist. Participants were interviewed by an avatar named Ellie, whom they saw on a TV screen. Half of the participants were told Ellie was not human, and half were told Ellie was being controlled remotely by a human. Three psychologists who were blinded to group allocations analyzed transcripts of the interviews and video recordings of participants’ facial expressions to quantify the participants’ fear, sadness, and other emotional responses during the interviews, as well as their openness to the questions. Participants who believed Ellie was fully automated reported significantly lower fear of self-disclosure and impression management (attempts to control how others perceive them) than participants who were told that Ellie was operated by a human. Additionally, participants who believed they were interacting with a computer were more open during the interview.22

Researchers at the University of Southern California developed software that assessed 74 acoustic features, including pitch, volume, quality, shimmer, jitter, and prosody, to predict outcomes among patients receiving couples therapy. This software was able to predict marital discord at least as well as human therapists.23
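Two of the acoustic features named above, jitter and shimmer, can be illustrated with a short calculation. The sketch below uses one common "local" definition (the mean absolute difference between consecutive cycles divided by the mean value), applied to pitch periods for jitter and to peak amplitudes for shimmer. This is an assumption for illustration only and is not the method of the software described; the function name `local_perturbation` and the sample values are hypothetical.

```python
def local_perturbation(values):
    """Mean absolute difference between consecutive values, divided by the mean value."""
    if len(values) < 2:
        raise ValueError("need at least two cycles")
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return (sum(diffs) / len(diffs)) / (sum(values) / len(values))


# Made-up measurements from four consecutive voice cycles.
periods_ms = [10.0, 10.2, 9.8, 10.0]   # cycle durations -> jitter
amplitudes = [0.50, 0.52, 0.49, 0.51]  # peak amplitudes -> shimmer

jitter = local_perturbation(periods_ms)
shimmer = local_perturbation(amplitudes)
print(f"jitter={jitter:.4f}, shimmer={shimmer:.4f}")
```

Both measures are ratios, so they describe cycle-to-cycle instability in the voice independent of how high or loud the speaker's voice is overall.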

Many mental health apps purport to implement specific components of psychotherapy. Many of these apps focus on cognitive-behavioral therapy worksheets, mindfulness exercises, and/or mood tracking. The features provided by such apps emulate the tasks and intended outcomes of traditional psychotherapy, but in an entirely decentralized venue.24

Some have expressed concern that an increased use of virtual therapists powered by AI might lead to a dehumanization of psychiatry (Box25,26).

Box

Whether there are aspects of the psychiatric patient encounter that cannot be managed by a “virtual clinician” created by artificial intelligence (AI) remains to be determined. Some of the benefits of using AI in this manner may be difficult to anticipate, or may be specific to an individual’s relationship with his/her clinician.25

On the other hand, AI systems blur previously assumed boundaries between reality and fiction, and this could have complex effects on patients. Similar to therapeutic relationships with a human clinician, there is the risk of transference of emotions, thoughts, and feelings to a virtual therapist powered by AI. Unlike with a psychiatrist or therapist, however, there is no person on the other side of this transference. Whether virtual clinicians will be able to manage such transference remains to be seen.

In Deep Medicine,26 cardiologist Eric Topol, MD, emphasizes a crucial component of a patient encounter that AI is unlikely to be able to provide: empathy. Virtual therapists powered by AI will inherit the tasks best done by machines, leaving humans more time to do what they do best: providing empathy and being “present” for patients.

Electronic health record reforms

Although many clinicians find EHRs to be onerous and time-consuming, EHR technology is constantly improving, and EHRs have revolutionized documentation and order implementation. Several potential advances could improve clinical practice. For example, EHRs could incorporate a clinical decision support system that uses AI-based algorithms to assist psychiatrists with diagnosis, monitoring, and treatment.27 In the future, EHRs might have the ability to monitor and learn from errors and adverse events, and automatically design an algorithm to avoid them.28 They should be designed to better manage analysis of pharmacogenetic test results, which is challenging due to the amount and complexity of the data.29 Future EHRs should eliminate the non-intuitive and multi-click interfaces and cumbersome data searches of today’s EHRs.30

Technology brings new ethical considerations

Mental health interventions based on AI typically work with algorithms, and algorithms bring ethical issues. Mental health devices or systems that use AI could contain biases that have the potential to harm in unintended ways, such as a data-driven sexist or racist bias.31 This may require investing additional time to explain to patients (and their families) what an algorithm is and how it works in relation to the therapy provided.

Another concern is patient autonomy.32 For example, it would be ethically problematic if a patient were to assume that there was a human physician “at the other end” of a virtual therapist or other technology who is communicating or reviewing his/her messages. Similarly, an older adult or a patient with intellectual disabilities may not be able to understand advanced technology or what it does when it is installed in their home to monitor the patient’s activities. This would increase the risk of privacy violations, manipulation, or even coercion if the requirements for informed consent are not satisfied.

A flowchart for the future

Although current research and innovations typically target specific areas of psychiatry, these advances can be integrated by devising algorithms and protocols that will change the current practice of psychiatry. The Figure provides a glimpse of how the psychiatry clinic of the future might work. A maxim of management is that “the best way to predict the future is to create it.” However, the mere conception of a vision is not enough—working towards it is essential.

Bottom Line

With advances in technology, psychiatric practice will soon be radically different from what it is today. The expanded use of telepsychiatry, social media, artificial intelligence, and web-based applications/devices holds great promise for psychiatric assessment, diagnosis, and treatment, although certain ethical and privacy concerns need to be adequately addressed.

Related Resources

- National Institute of Mental Health. Technology and the future of mental health treatment. www.nimh.nih.gov/health/topics/technology-and-the-future-of-mental-health-treatment/index.shtml. Revised September 2019.

- Hays R, Farrell HM, Torous J. Mobile apps and mental health: using technology to quantify real-time clinical risk. Current Psychiatry. 2019;18(6):37-41.

- Torous J, Luo J, Chan SR. Mental health apps: what to tell patients. Current Psychiatry. 2018;17(3):21-25.

References

1. Pirmohamed M. Pharmacogenetics and pharmacogenomics. Br J Clin Pharmacol. 2001;52(4):345-347.

2. Benitez J, Cool CL, Scotti DJ. Use of combinatorial pharmacogenomic guidance in treating psychiatric disorders. Per Med. 2018;15(6):481-494.

3. Cannon TD. Candidate gene studies in the GWAS era: the MET proto-oncogene, neurocognition, and schizophrenia. Am J Psychiatry. 2010;167(4):369-372.

4. Greenwood J, Chamberlain C, Parker G. Evaluation of a rural telepsychiatry service. Australas Psychiatry. 2004;12(3):268-272.

5. Hubley S, Lynch SB, Schneck C, et al. Review of key telepsychiatry outcomes. World J Psychiatry. 2016;6(2):269-282.

6. Cheng KM, Siu BW, Yeung CC, et al. Telepsychiatry for stable Chinese psychiatric out-patients in custody in Hong Kong: a case-control pilot study. Hong Kong Med J. 2018;24(4):378-383.

7. Frankish K, Ryan C, Harris A. Psychiatry and online social media: potential, pitfalls and ethical guidelines for psychiatrists and trainees. Australas Psychiatry. 2012;20(3):181-187.

8. de la Torre Díez I, Alonso SG, Hamrioui S, et al. IoT-based services and applications for mental health in the literature. J Med Syst. 2019;43(1):4-9.

9. Topol E. Deep Medicine. New York, NY: Basic Books; 2019:168.

10. Adams RA, Huys QJM, Roiser JP. Computational Psychiatry: towards a mathematically informed understanding of mental illness. J Neurol Neurosurg Psychiatry. 2016;87(1):53-63.

11. Insel TR. Bending the curve for mental health: technology for a public health approach. Am J Public Health. 2019;109(suppl 3):S168-S170.

12. Just MA, Pan L, Cherkassky VL, et al. Machine learning of neural representations of suicide and emotion concepts identifies suicidal youth. Nat Hum Behav. 2017;1:911-919.

13. Pestian J, Nasrallah H, Matykiewicz P, et al. Suicide note classification using natural language processing: a content analysis. Biomed Inform Insights. 2010;2010(3):19-28.

14. Walsh CG, Ribeiro JD, Franklin JC. Predicting risk of suicide attempts over time through machine learning. Clinical Psychological Science. 2017;5(3):457-469.

15. Pestian JP, Sorter M, Connolly B, et al; STM Research Group. A machine learning approach to identifying the thought markers of suicidal subjects: a prospective multicenter trial. Suicide Life Threat Behav. 2017;47(1):112-121.

16. Corcoran CM, Carrillo F, Fernández-Slezak D, et al. Prediction of psychosis across protocols and risk cohorts using automated language analysis. World Psychiatry. 2018;17(1):67-75.

17. Bedi G, Carrillo F, Cecchi GA, et al. Automated analysis of free speech predicts psychosis onset in high-risk youths. NPJ Schizophr. 2015;1:15030. doi: 10.1038/npjschz.2015.30.

18. Kalanderian H, Nasrallah HA. Artificial intelligence in psychiatry. Current Psychiatry. 2019;18(8):33-38.

19. Drysdale AT, Grosenick L, Downar J, et al. Resting-state connectivity biomarkers define neurophysiological subtypes of depression. Nat Med. 2017;23(1):28-38.

20. Chekroud AM, Zotti RJ, Shehzad Z, et al. Cross-trial prediction of treatment outcome in depression: a machine learning approach. Lancet Psychiatry. 2016;3(3):243-250.

21. Grisanzio KA, Goldstein-Piekarski AN, Wang MY, et al. Transdiagnostic symptom clusters and associations with brain, behavior, and daily function in mood, anxiety, and trauma disorders. JAMA Psychiatry. 2018;75(2):201-209.

22. Lucas G, Gratch J, King A, et al. It’s only a computer: virtual humans increase willingness to disclose. Computers in Human Behavior. 2014;37:94-100.

23. Nasir M, Baucom BR, Georgiou P, et al. Predicting couple therapy outcomes based on speech acoustic features. PLoS One. 2017;12(9):e0185123. doi: 10.1371/journal.pone.0185123.

24. Huguet A, Rao S, McGrath PJ, et al. A systematic review of cognitive behavioral therapy and behavioral activation apps for depression. PLoS One. 2016;11(5):e0154248. doi: 10.1371/journal.pone.0154248.

25. Scholten MR, Kelders SM, Van Gemert-Pijnen JE. Self-guided web-based interventions: scoping review on user needs and the potential of embodied conversational agents to address them. J Med Internet Res. 2017;19(11):e383.

26. Topol E. Deep Medicine. New York, NY: Basic Books; 2019:283-310.

27. Abramson EL, McGinnis S, Edwards A, et al. Electronic health record adoption and health information exchange among hospitals in New York State. J Eval Clin Pract. 2012;18(6):1156-1162.

28. Meeks DW, Smith MW, Taylor L, et al. An analysis of electronic health record-related patient safety concerns. J Am Med Inform Assoc. 2014;21(6):1053-1059.

29. Kho AN, Rasmussen LV, Connolly JJ, et al. Practical challenges in integrating genomic data into the electronic health record. Genet Med. 2013;15(10):772-778.

30. Ornstein SM, Oates RB, Fox GN. The computer-based medical record: current status. J Fam Pract. 1992;35(5):556-565.

31. Corea F. Machine ethics and artificial moral agents. In: Applied artificial intelligence: where AI can be used in business. Basel, Switzerland: Springer; 2019:33-41.

32. Beauchamp T, Childress J. Principles of biomedical ethics. 7th ed. New York, NY: Oxford University Press; 2012:44.

Despite the tremendous advances in psychiatry in recent years, the current clinical practice of psychiatry continues to rely on data from intermittent assessments along with subjective and unquantifiable accounts from patients and caregivers. Furthermore, there continues to be significant diagnostic variations among practitioners. Fortunately, technology to address these issues appears to be on the horizon.

How might the psychiatric clinic of the future look? What changes could we envision? These 4 critical factors may soon bring about dynamic changes in the way we practice psychiatry:

- precision psychiatry

- digital psychiatry

- technology-enhanced psychotherapy

- electronic health record (EHR) reforms.

In this article, we review how advances in each of these areas might lead to improved care for our patients.

Precision psychiatry

Precision psychiatry takes into account each patient’s variability in genes, environment, and lifestyle to determine individualized treatment and prevention strategies. It relies on pharmacogenomic testing as the primary tool. Pharmacogenomics is the study of variability in drug response due to heredity.

Emerging data on the clinical utility and cost-effectiveness of pharmacogenomic testing are encouraging, but its routine use is not well supported by current evidence.2 One limit to using pharmacogenomic testing is that many genes simultaneously exert an effect on the structure and function of neurons and associated pathophysiology. According to the International Society of Psychiatric Genetics, no single genetic variant is sufficient to cause psychiatric disorders such as depression, bipolar disorder, substance dependence, or schizophrenia. This limits the possibility of using genetic tests to establish a diagnosis.3

In the future, better algorithms could promote more accurate pharmacogenomics profiles for individual patients, which could influence treatment.

Precision psychiatry could lead to:

- identification of novel targets for new medications

- pharmacogenetic profiling of the patient to predict disease susceptibility and medication response

- personalized therapy: the right drug at the right dose for the right patient.

- improved efficacy and fewer adverse medication reactions.

Continue to: Digital psychiatry

Digital psychiatry

Integrating computer-based technology into psychiatric practice has given birth to a new frontier that could be called digital psychiatry. This might encompass the following:

- telepsychiatry

- social media with a mental health focus

- web-based applications/devices

- artificial intelligence (AI).

Telepsychiatry. Videoconferencing is the most widely used form of telepsychiatry. It provides patients with easier access to mental health treatment.4 Telepsychiatry has the potential to match patients and clinicians with similar cultural backgrounds, thus minimizing cultural gaps and misunderstandings. Most importantly, it is comparable to face-to-face interviews in terms of the reliability of assessment and treatment outcomes.5

Telepsychiatry might be particularly helpful for patients with restricted mobility, such as those who live in remote areas, nursing homes, or correctional facilities. In correctional settings, transferring prisoners is expensive and carries the risk of escape. In a small study (N = 86) conducted in Hong Kong, Chen et al6 found that using videoconferencing to conduct clinical interviews of inmates was cost-efficient and scored high in terms of patient acceptability.

Social media. Social media could be a powerful platform for early detection of mental illness. Staying connected with patients on social media could allow psychiatrists to be more aware of their patient’s mood fluctuations, which might lead to more timely assessments. Physicians could be automatically notified about changes in their patients’ social media activity that indicate changes in mental state, which could solicit immediate intervention and treatment. On the other hand, such use of social media could blur professional boundaries. Psychiatrists also could use social media to promote awareness of mental health and educate the public on ways to improve or maintain their mental well-being.7

Web-based applications/devices. Real-time monitoring through applications or internet-based smart devices creates a new avenue for patients to receive personalized assessments, treatment, and intervention.8 Smartwatches with internet connectivity may offer a glimpse of the wearer’s sleep architecture and duration, thus providing real-time data on patients who have insomnia. We can now passively collect objective data from devices, such as smartphones and laptops, to phenotype an individual’s mood and mental state, a process called digital phenotyping. The Table9 lists examples of the types of mental health–related metrics that can be captured by smartphones, smartwatches, and similar technology. Information from these devices can be accumulated to create a database that can be used to predict symptoms.10 For example, the way people use a smartphone’s keyboard, including latency time between space and character types, can be used to generate variables for data. This type of information is being studied for use in screening depression and passively assessing mood in real time.11

Continue to: Artificial intelligence

Artificial intelligence—the development of computer systems able to perform tasks that normally require human intelligence—is being increasingly used in psychiatry. Some studies have suggested AI can be used to identify patients’ risk of suicide12-15 or psychosis.16,17Kalanderian and Nasrallah18 reviewed several of these studies in

Other researchers have found clinical uses for machine learning, a subset of AI that uses methods to automatically detect patterns and make predictions based on those patterns. In one study, a machine learning analysis of functional MRI scans was able to identify 4 distinct subtypes of depression.19 In another study, a machine learning model was able to predict with 60% accuracy which patients with depression would respond to antidepressants.20

In the future, AI might be used to change mental health classification systems. Because many mental health disorders share similar symptom clusters, machine learning can help to identify associations between symptoms, behavior, brain function, and real-world function across different diagnoses, potentially affecting how we will classify mental disorders.21

Technology-enhanced psychotherapy

In the future, it might be common for psychotherapy to be provided by a computer, or “virtual therapist.” Several studies have evaluated the use of technology-enhanced psychotherapy.

Lucas et al22 investigated patients’ interactions with a virtual therapist. Participants were interviewed by an avatar named Ellie, who they saw on a TV screen. Half of the participants were told Ellie was not human, and half were told Ellie was being controlled remotely by a human. Three psychologists who were blinded to group allocations analyzed transcripts of the interviews and video recordings of participants’ facial expressions to quantify the participants’ fear, sadness, and other emotional responses during the interviews, as well as their openness to the questions. Participants who believed Ellie was fully automated reported significantly lower fear of self-disclosure and impression management (attempts to control how others perceive them) than participants who were told that Ellie was operated by a human. Additionally, participants who believed they were interacting with a computer were more open during the interview.22

Continue to: Researchers at the University of Southern California...

Researchers at the University of Southern California developed software that assessed 74 acoustic features, including pitch, volume, quality, shimmer, jitter, and prosody, to predict outcomes among patients receiving couples therapy. This software was able to predict marital discord at least as well as human therapists.23

Many mental health apps purport to implement specific components of psychotherapy. Many of these apps focus on cognitive-behavioral therapy worksheets, mindfulness exercises, and/or mood tracking. The features provided by such apps emulate the tasks and intended outcomes of traditional psychotherapy, but in an entirely decentralized venue.24

Some have expressed concern that an increased use of virtual therapists powered by AI might lead to a dehumanization of psychiatry (Box25,26).

Box

Whether there are aspects of the psychiatric patient encounter that cannot be managed by a “virtual clinician” created by artificial intelligence (AI) remains to be determined. Some of the benefits of using AI in this manner may be difficult to anticipate, or may be specific to an individual’s relationship with his/her clinician.25

On the other hand, AI systems blur previously assumed boundaries between reality and fiction, and this could have complex effects on patients. Similar to therapeutic relationships with a human clinician, there is the risk of transference of emotions, thoughts, and feelings to a virtual therapist powered by AI. Unlike with a psychiatrist or therapist, however, there is no person on the other side of this transference. Whether virtual clinicians will be able to manage such transference remains to be seen.

In Deep Medicine,26 cardiologist Eric Topol, MD, emphasizes a crucial component of a patient encounter that AI will be unlikely able to provide: empathy. Virtual therapists powered by AI will inherit the tasks best done by machines, leaving humans more time to do what they do best—providing empathy and being “present” for patients.

Electronic health record reforms

Although many clinicians find EHRs to be onerous and time-consuming, EHR technology is constantly improving, and EHRs have revolutionized documentation and order implementation. Several potential advances could improve clinical practice. For example, EHRs could incorporate a clinical decision support system that uses AI-based algorithms to assist psychiatrists with diagnosis, monitoring, and treatment.27 In the future, EHRs might have the ability to monitor and learn from errors and adverse events, and automatically design an algorithm to avoid them.28 They should be designed to better manage analysis of pharmacogenetic test results, which is challenging due to the amount and complexity of the data.29 Future EHRs should eliminate the non-intuitive and multi-click interfaces and cumbersome data searches of today’s EHRs.30

Technology brings new ethical considerations

Mental health interventions based on AI typically work with algorithms, and algorithms bring ethical issues. Mental health devices or systems that use AI could contain biases that have the potential to harm in unintended ways, such as a data-driven sexist or racist bias.31 This may require investing additional time to explain to patients (and their families) what an algorithm is and how it works in relation to the therapy provided.

Continue to: Another concern is patient...

Another concern is patient autonomy.32 For example, it would be ethically problematic if a patient were to assume that there was a human physician “at the other end” of a virtual therapist or other technology who is communicating or reviewing his/her messages. Similarly, an older adult or a patient with intellectual disabilities may not be able to understand advanced technology or what it does when it is installed in their home to monitor the patient’s activities. This would increase the risk of privacy violations, manipulation, or even coercion if the requirements for informed consent are not satisfied.

A flowchart for the future

Although current research and innovations typically target specific areas of psychiatry, these advances can be integrated by devising algorithms and protocols that will change the current practice of psychiatry. The Figure provides a glimpse of how the psychiatry clinic of the future might work. A maxim of management is that “the best way to predict the future is to create it.” However, the mere conception of a vision is not enough—working towards it is essential.

Bottom Line

With advances in technology, psychiatric practice will soon be radically different from what it is today. The expanded use of telepsychiatry, social media, artificial intelligence, and web-based applications/devices holds great promise for psychiatric assessment, diagnosis, and treatment, although certain ethical and privacy concerns need to be adequately addressed.

Related Resources

- National Institute of Mental Health. Technology and the future of mental health treatment. www.nimh.nih.gov/health/topics/technology-and-the-future-of-mental-health-treatment/index.shtml. Revised September 2019.

- Hays R, Farrell HM, Touros J. Mobile apps and mental health: using technology to quantify real-time clinical risk. Current Psychiatry. 2019;18(6):37-41.

- Torous J, Luo J, Chan SR. Mental health apps: what to tell patients. Current Psychiatry. 2018;17(3):21-25.

1. Pirmohamed M. Pharmacogenetics and pharmacogenomics. Br J Clin Pharmacol. 2001;52(4):345-347.

2. Benitez J, Cool CL, Scotti DJ. Use of combinatorial pharmacogenomic guidance in treating psychiatric disorders. Per Med. 2018;15(6):481-494.

3. Cannon TD. Candidate gene studies in the GWAS era: the MET proto-oncogene, neurocognition, and schizophrenia. Am J Psychiatry. 2010;167(4):369-372.

4. Greenwood J, Chamberlain C, Parker G. Evaluation of a rural telepsychiatry service. Australas Psychiatry. 2004;12(3):268-272.

5. Hubley S, Lynch SB, Schneck C, et al. Review of key telepsychiatry outcomes. World J Psychiatry. 2016;6(2):269-282.

6. Cheng KM, Siu BW, Yeung CC, et al. Telepsychiatry for stable Chinese psychiatric out-patients in custody in Hong Kong: a case-control pilot study. Hong Kong Med J. 2018;24(4):378-383.

7. Frankish K, Ryan C, Harris A. Psychiatry and online social media: potential, pitfalls and ethical guidelines for psychiatrists and trainees. Australas Psychiatry. 2012;20(3):181-187.

8. de la Torre Díez I, Alonso SG, Hamrioui S, et al. IoT-based services and applications for mental health in the literature. J Med Syst. 2019;43(1):4-9.

9. Topol E. Deep Medicine. New York, NY: Basic Books; 2019:168.

10. Adams RA, Huys QJM, Roiser JP. Computational Psychiatry: towards a mathematically informed understanding of mental illness. J Neurol Neurosurg Psychiatry. 2016;87(1):53-63.

11. Insel TR. Bending the curve for mental health: technology for a public health approach. Am J Public Health. 2019;109(suppl 3):S168-S170.

12. Just MA, Pan L, Cherkassky VL, et al. Machine learning of neural representations of suicide and emotion concepts identifies suicidal youth. Nat Hum Behav. 2017;1:911-919.

13. Pestian J, Nasrallah H, Matykiewicz P, et al. Suicide note classification using natural language processing: a content analysis. Biomed Inform Insights. 2010;2010(3):19-28.

14. Walsh CG, Ribeiro JD, Franklin JC. Predicting risk of suicide attempts over time through machine learning. Clinical Psychological Science. 2017;5(3):457-469.

15. Pestian JP, Sorter M, Connolly B, et al; STM Research Group. A machine learning approach to identifying the thought markers of suicidal subjects: a prospective multicenter trial. Suicide Life Threat Behav. 2017;47(1):112-121.

16. Corcoran CM, Carrillo F, Fernández-Slezak D, et al. Prediction of psychosis across protocols and risk cohorts using automated language analysis. World Psychiatry. 2018;17(1):67-75.

17. Bedi G, Carrillo F, Cecchi GA, et al. Automated analysis of free speech predicts psychosis onset in high-risk youths. NPJ Schizophr. 2015;1:15030. doi: 10.1038/npjschz.2015.30.

18. Kalanderian H, Nasrallah HA. Artificial intelligence in psychiatry. Current Psychiatry. 2019;18(8):33-38.

19. Drysdale AT, Grosenick L, Downar J, et al. Resting-state connectivity biomarkers define neurophysiological subtypes of depression. Nat Med. 2017;23(1):28-38.

20. Chekroud AM, Zotti RJ, Shehzad Z, et al. Cross-trial prediction of treatment outcome in depression: a machine learning approach. Lancet Psychiatry. 2016;3(3):243-250.

21. Grisanzio KA, Goldstein-Piekarski AN, Wang MY, et al. Transdiagnostic symptom clusters and associations with brain, behavior, and daily function in mood, anxiety, and trauma disorders. JAMA Psychiatry. 2018;75(2):201-209.

22. Lucas G, Gratch J, King A, et al. It’s only a computer: virtual humans increase willingness to disclose. Computers in Human Behavior. 2014;37:94-100.

23. Nasir M, Baucom BR, Georgiou P, et al. Predicting couple therapy outcomes based on speech acoustic features. PLoS One. 2017;12(9):e0185123. doi: 10.1371/journal.pone.0185123.

24. Huguet A, Rao S, McGrath PJ, et al. A systematic review of cognitive behavioral therapy and behavioral activation apps for depression. PLoS One. 2016;11(5):e0154248. doi: 10.1371/journal.pone.0154248.

25. Scholten MR, Kelders SM, Van Gemert-Pijnen JE. Self-guided web-based interventions: scoping review on user needs and the potential of embodied conversational agents to address them. J Med Internet Res. 2017;19(11):e383.

26. Topol E. Deep Medicine. New York, NY: Basic Books; 2019:283-310.

27. Abramson EL, McGinnis S, Edwards A, et al. Electronic health record adoption and health information exchange among hospitals in New York State. J Eval Clin Pract. 2012;18(6):1156-1162.

28. Meeks DW, Smith MW, Taylor L, et al. An analysis of electronic health record-related patient safety concerns. J Am Med Inform Assoc. 2014;21(6):1053-1059.

29. Kho AN, Rasmussen LV, Connolly JJ, et al. Practical challenges in integrating genomic data into the electronic health record. Genet Med. 2013;15(10):772-778.

30. Ornstein SM, Oates RB, Fox GN. The computer-based medical record: current status. J Fam Pract. 1992;35(5):556-565.

31. Corea F. Machine ethics and artificial moral agents. In: Applied artificial intelligence: where AI can be used in business. Basel, Switzerland: Springer; 2019:33-41.

32. Beauchamp T, Childress J. Principles of biomedical ethics. 7th ed. New York, NY: Oxford University Press; 2012:44.
