
The early identification of clinical deterioration among adult hospitalized patients remains a challenge.1 Delayed identification is associated with increased morbidity and mortality, unplanned intensive care unit (ICU) admissions, prolonged hospitalization, and higher costs.2,3 Earlier detection of deterioration using predictive algorithms based on vital sign monitoring might prevent these negative outcomes.4 In this scoping review, we summarize current algorithms and the evidence for them.

Vital signs provide the backbone for detecting clinical deterioration. Early warning scores (EWS) and outreach protocols were developed to bring structure to the assessment of vital signs. Most EWS claim to predict clinical end points such as unplanned ICU admission up to 24 hours in advance.5,6 Reviews of EWS showed a positive trend toward reduced length of stay and mortality; however, no conclusions about general efficacy could be drawn because of case heterogeneity and methodologic shortcomings.4,7 Continuous automated vital sign monitoring of patients on the general ward can now be accomplished with wearable devices.8 The first reports on continuous monitoring showed earlier detection of deterioration but no improvement in clinical end points.4,9 Since then, several reports on continuous monitoring have shown positive effects but concluded that unprocessed monitoring data alone fall short of generating actionable alarms.4,10,11

Predictive algorithms, which often use artificial intelligence (AI), are increasingly employed to recognize complex patterns or abnormalities and to support predictions of events in large data sets.12,13 Especially when combined with continuous vital sign monitoring, predictive algorithms have the potential to expedite detection of clinical deterioration and improve patient outcomes. Predictive algorithms using vital signs in the ICU have shown promising results.14 Their impact on general wards, however, is unclear.

The aims of our scoping review were to explore the extent, range, and supporting evidence of predictive vital sign–based algorithms on the adult general ward; to describe the variety of these algorithms; and to categorize the effects, facilitators, and barriers of their implementation.15

MATERIALS AND METHODS

We performed a scoping review to summarize the current state of research. We used the five-step method of Levac and followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses Extension for Scoping Reviews (PRISMA-ScR) guidelines (Appendix 1).16,17

The PubMed, Embase, and CINAHL databases were searched for English-language articles published between January 1, 2010, and November 20, 2020. We developed the search queries with an experienced information scientist, using database-specific terms and strategies for input, clinical outcome, method, predictive capability, and population (Appendix 2). We also searched the reference lists of the selected articles and publications citing these articles.

Two researchers (RP and YE) screened all identified studies by title and abstract. The selected studies were then read in full and checked for eligibility against the following inclusion criteria: an automated, vital sign–based algorithm providing real-time prediction of clinical deterioration in an adult general ward population. When there were successive publications on the same algorithm and population, we selected the most recent study.

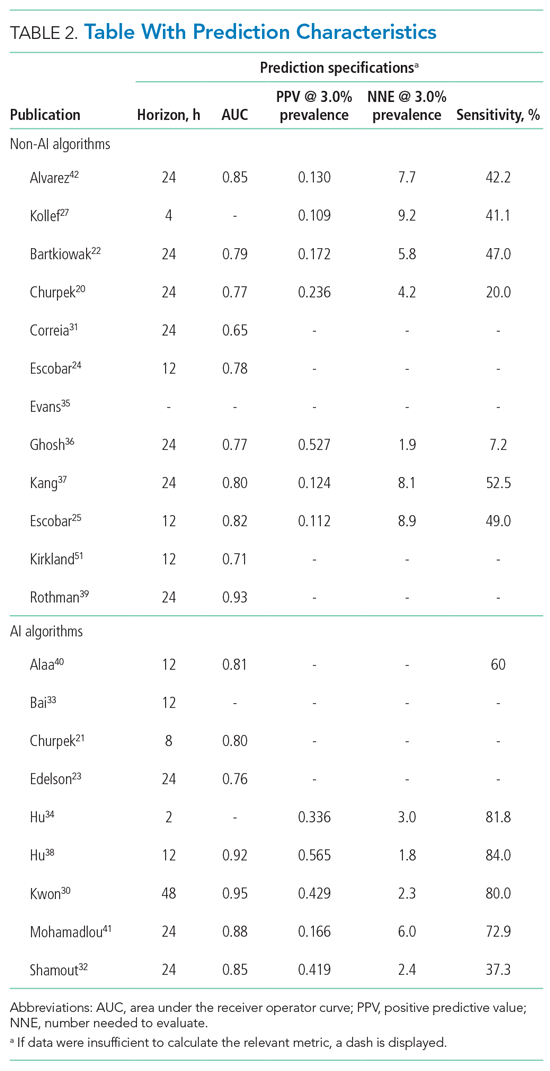

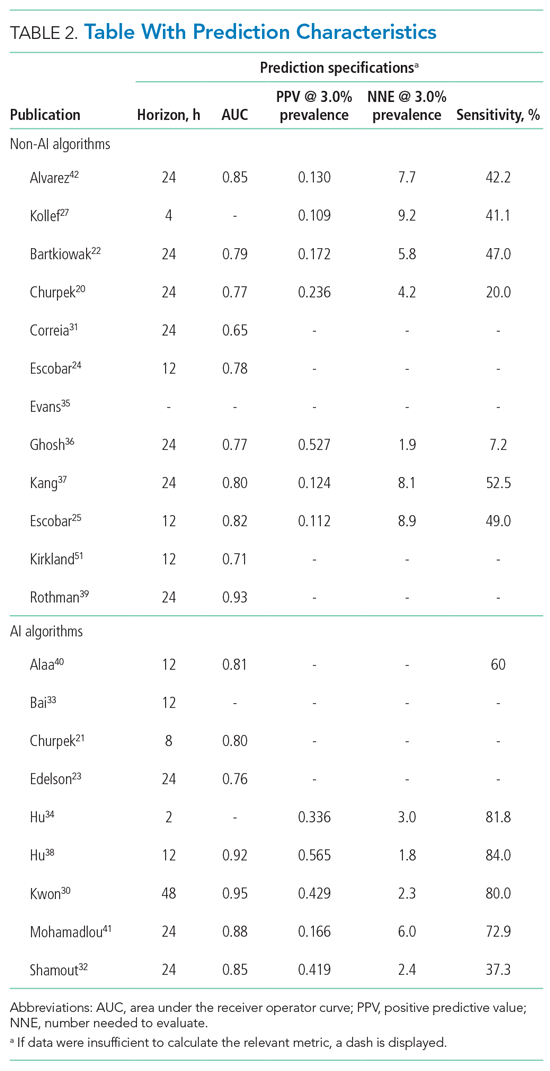

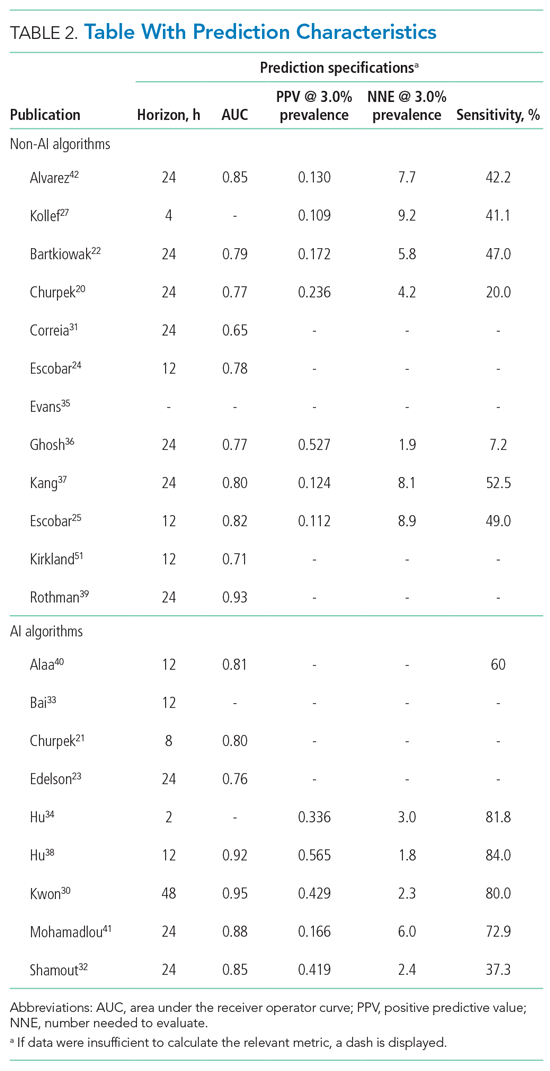

For screening and selection, we used the Rayyan QCRI online tool (Qatar Computing Research Institute) and EndNote X9 (Clarivate Analytics). We extracted information using a data extraction form and organized it into the following components: descriptive characteristics of the selected studies (Table 1); an input data table showing the number of admissions, intermittent or continuous measurement, vital signs measured, and laboratory results (Appendix Table 1); a table summarizing study designs and settings (Appendix Table 2); and a prediction performance table (Table 2). We report characteristics of the populations and algorithms, prediction specifications such as the area under the receiver operating characteristic curve (AUROC), and predictive values. Predictive values depend on prevalence, which may differ among populations. To compare the algorithms, we therefore calculated an indexed positive predictive value (PPV) and a number needed to evaluate (NNE) using a weighted average prevalence of clinical deterioration of 3.0%.
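The source does not specify how the indexed PPV was obtained. One plausible approach, assumed here, is Bayes' theorem: it re-expresses a study's reported sensitivity and specificity at the common prevalence of 3.0%, and the NNE is then the reciprocal of that PPV. The sketch below illustrates that assumption only. The function names and example inputs are hypothetical and are not taken from any included study.

```python
"""Hypothetical sketch: PPV and NNE re-expressed at a common prevalence.

Assumption: the indexed PPV is derived from sensitivity and specificity via
Bayes' theorem. The review does not state its exact procedure.
"""

REFERENCE_PREVALENCE = 0.03  # weighted average prevalence used in the review


def indexed_ppv(sensitivity: float, specificity: float,
                prevalence: float = REFERENCE_PREVALENCE) -> float:
    """Positive predictive value at the given prevalence (Bayes' theorem)."""
    if not 0.0 < prevalence < 1.0:
        raise ValueError("prevalence must lie strictly between 0 and 1")
    true_pos = sensitivity * prevalence                   # P(alarm and deterioration)
    false_pos = (1.0 - specificity) * (1.0 - prevalence)  # P(alarm and no deterioration)
    if true_pos + false_pos == 0.0:
        raise ValueError("model never alarms; PPV is undefined")
    return true_pos / (true_pos + false_pos)


def number_needed_to_evaluate(ppv: float) -> float:
    """NNE: flagged patients evaluated per true deterioration (1 / PPV)."""
    if ppv <= 0.0:
        raise ValueError("PPV must be positive")
    return 1.0 / ppv


if __name__ == "__main__":
    # Hypothetical operating point, not taken from any included study.
    ppv = indexed_ppv(sensitivity=0.60, specificity=0.95)
    print(f"indexed PPV = {ppv:.3f}, NNE = {number_needed_to_evaluate(ppv):.1f}")
```

With these made-up inputs, the sketch reports an indexed PPV of about 0.27 and an NNE of about 3.7, or roughly four flagged patients evaluated per true deterioration. Fixing one prevalence for every study makes such figures comparable across populations that deteriorate at different rates.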

We defined clinical deterioration by end points including rapid response team activation, cardiopulmonary resuscitation, transfer to an ICU, or death.

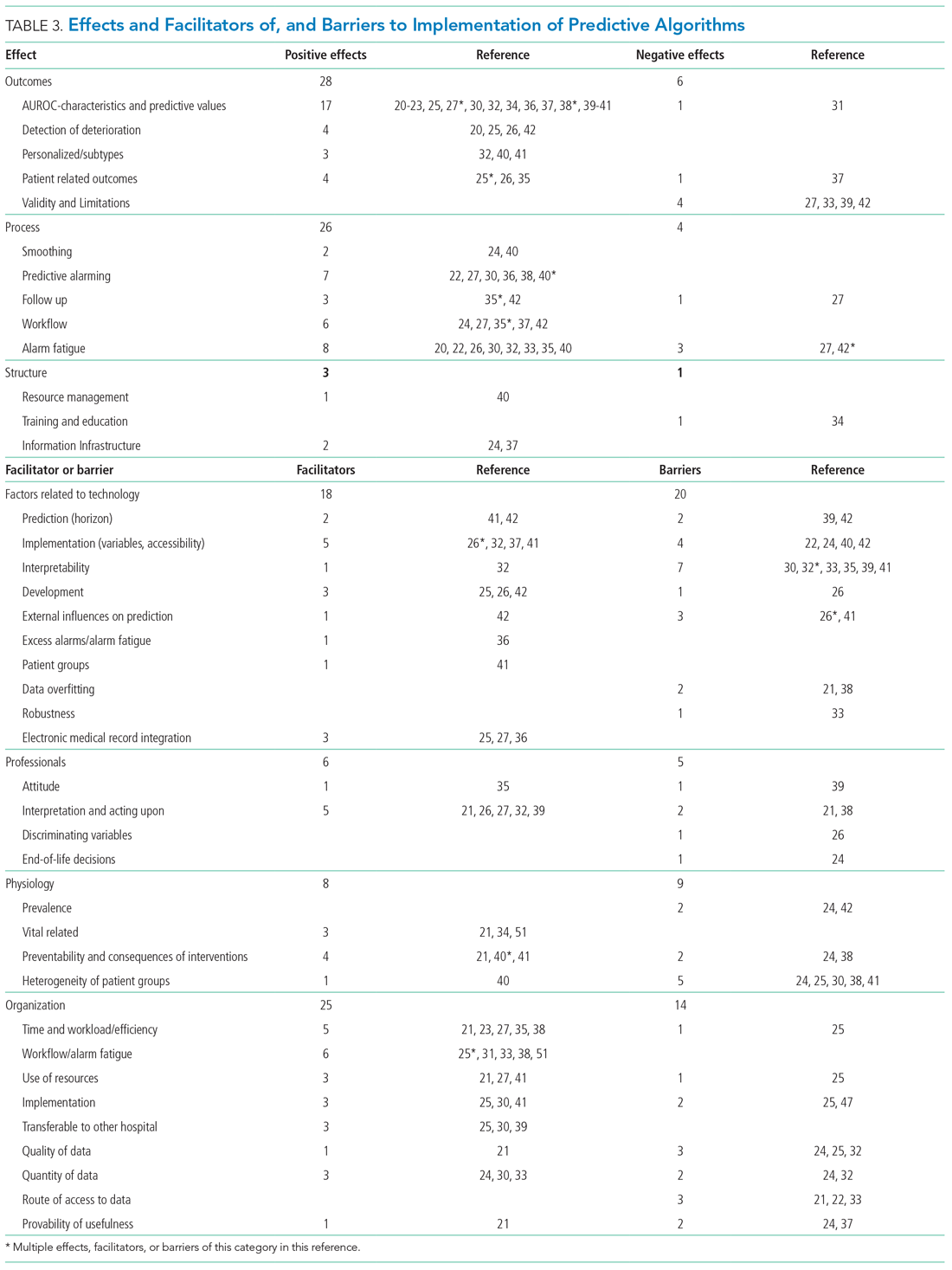

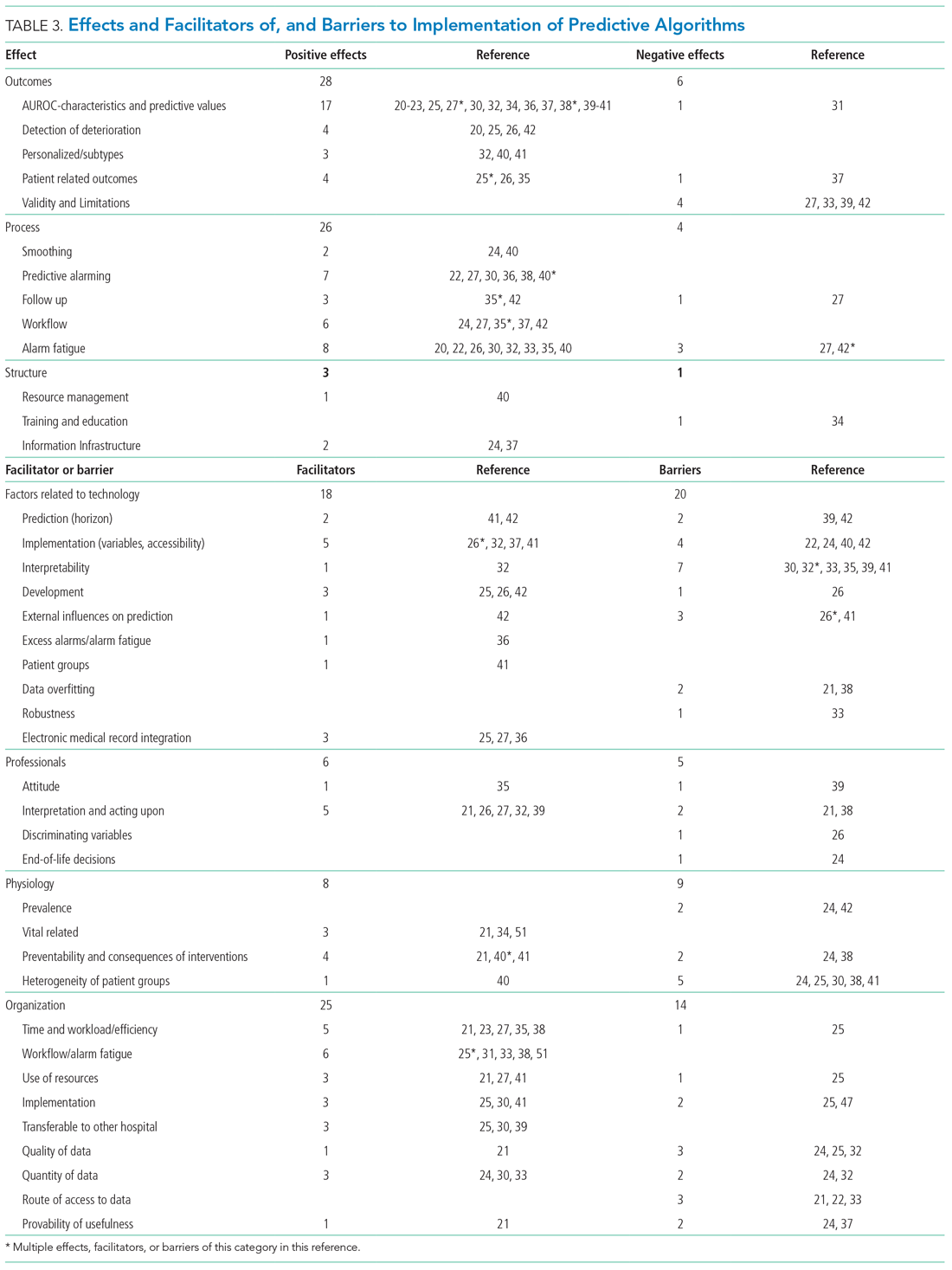

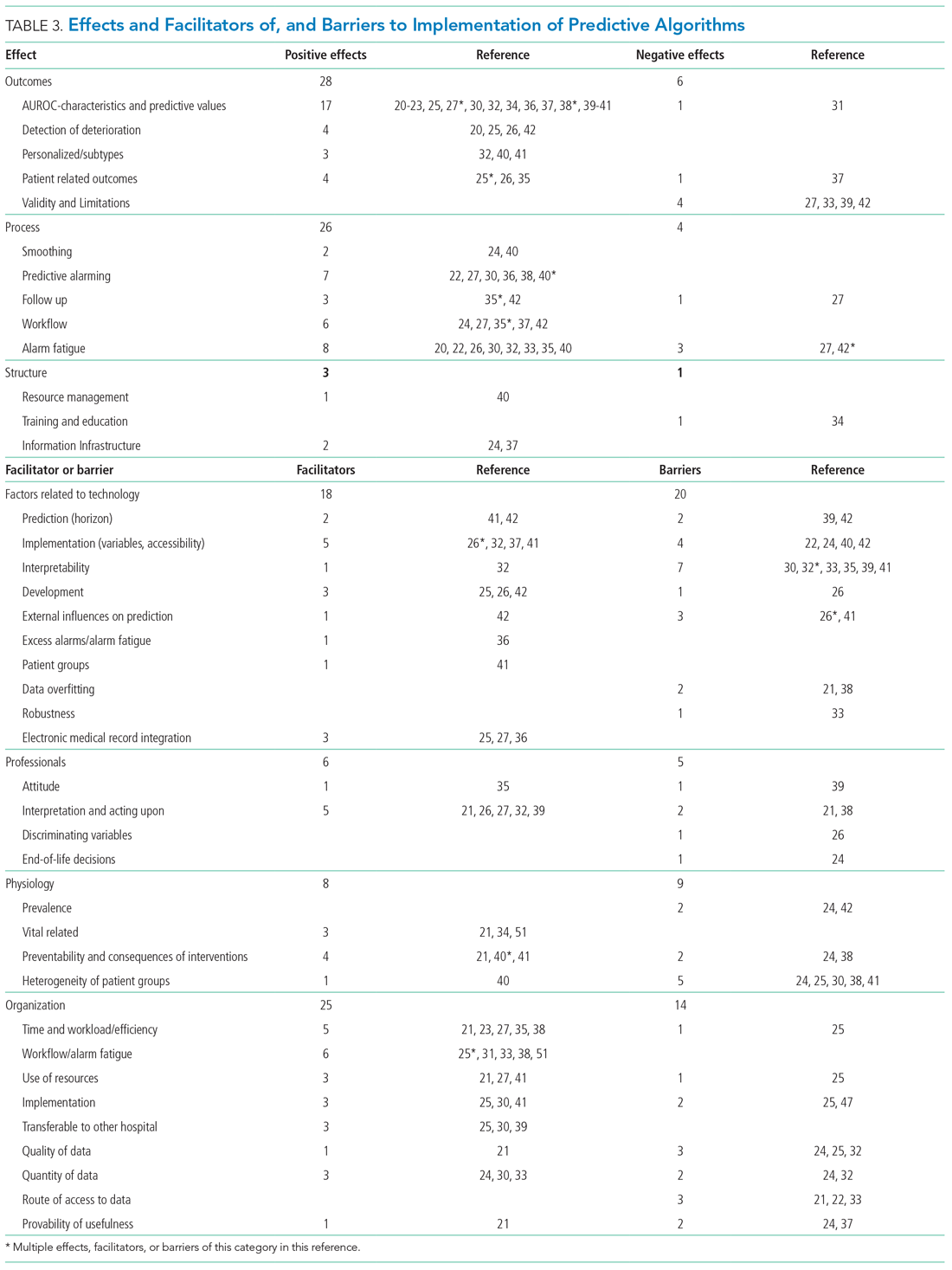

Effects, facilitators, and barriers were identified using ATLAS.ti 8 software (ATLAS.ti) and evaluated by three researchers (RP, MK, and THvdB). They were categorized using adapted versions of the frameworks of Gagnon et al18 for barriers and facilitators and of Donabedian19 for effects (Appendix 3).

The Gagnon et al framework was adapted by changing two of four domains—that is, “Individual” was changed to “Professional” and “Human” to “Physiology.” The domains of “Technology” and “Organization” remained unchanged. The Donabedian domains of “Outcome,” “Process,” and “Structure” also remained unchanged (Table 3).

When reporting characteristics and performance, we divided the studies into two groups: predictive algorithms with AI and those without. For the secondary aim of exploring implementation impact, we reported facilitators and barriers narratively, highlighting the most frequent and notable findings.

RESULTS

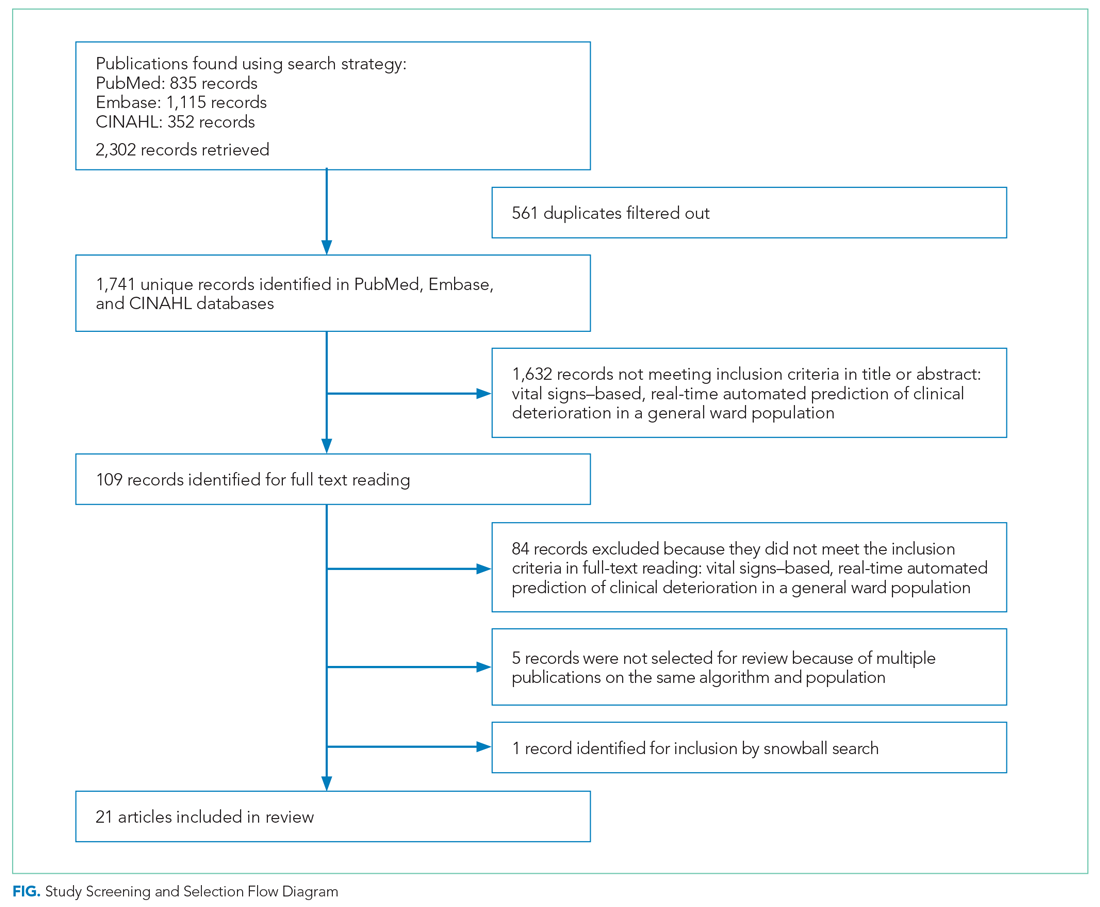

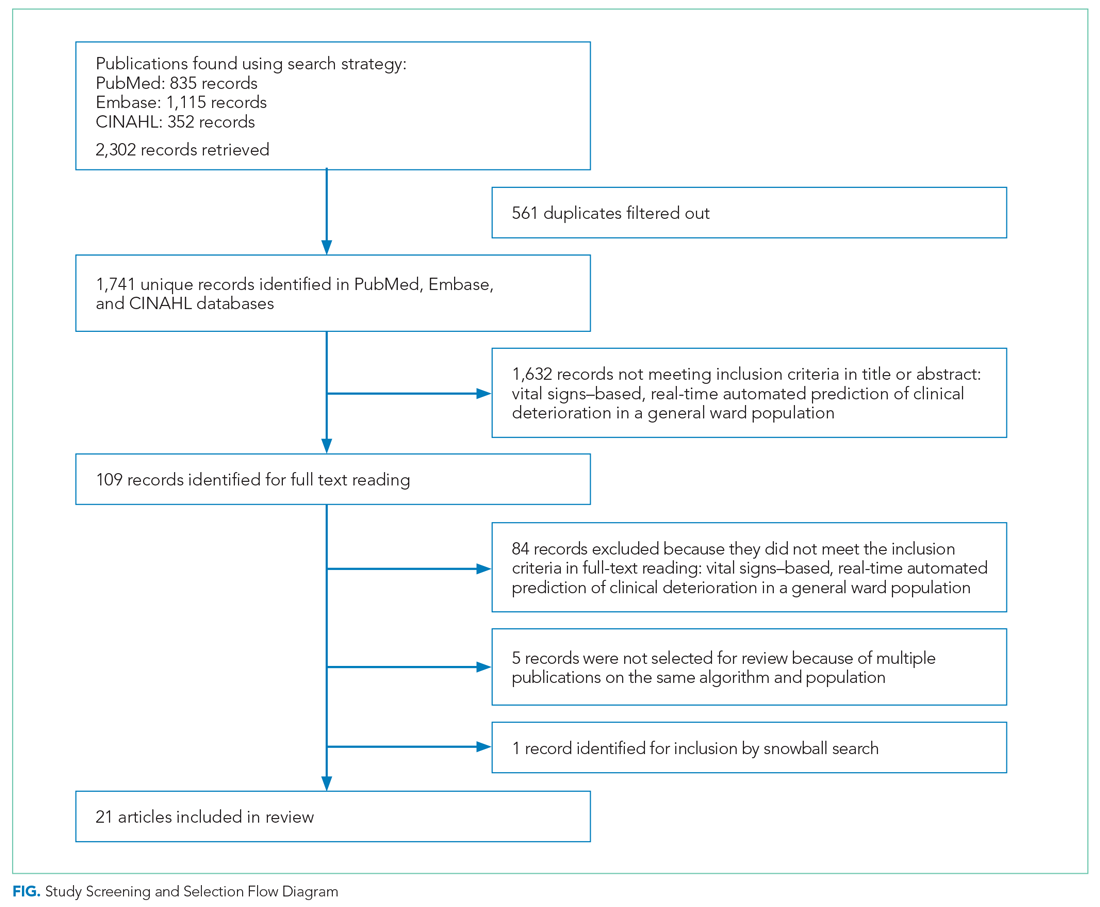

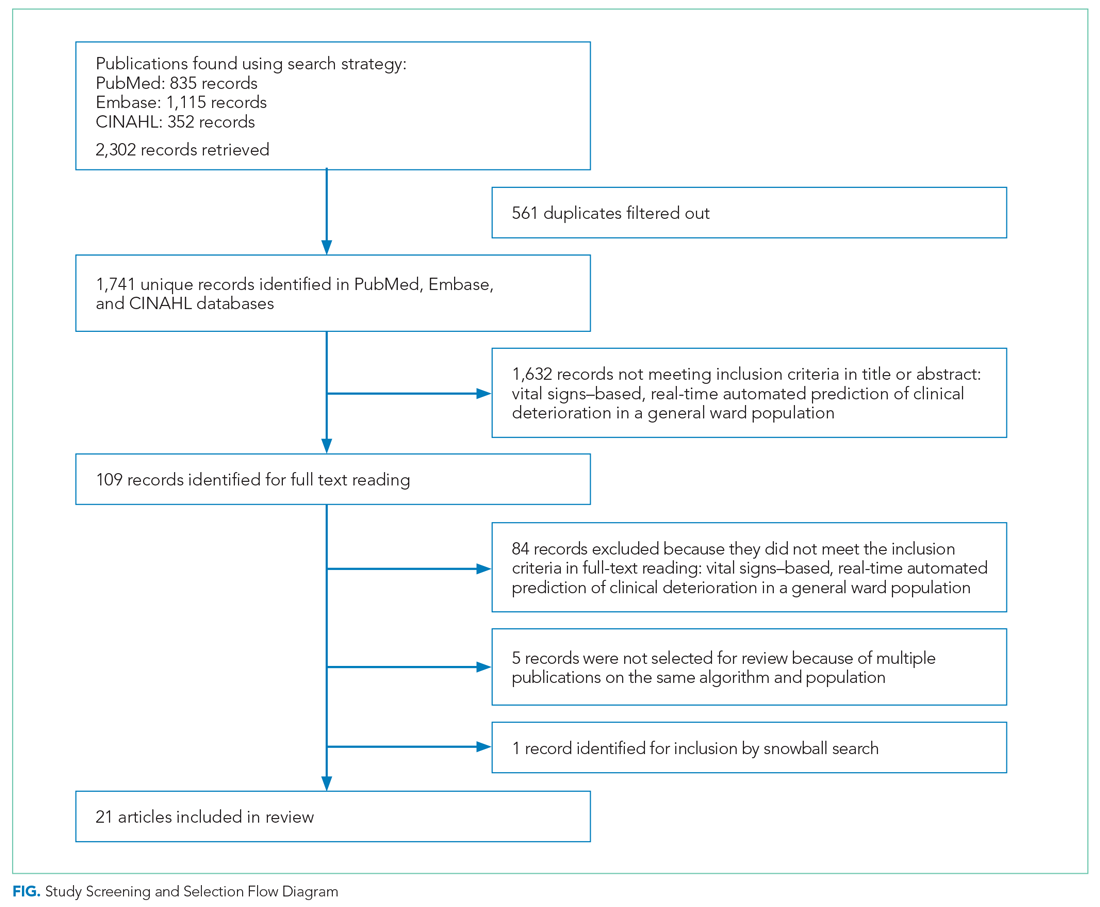

As shown in the Figure, we identified 1741 publications. Of these, 1632 did not meet the inclusion criteria, and the full texts of the remaining 109 were read. The publications by Churpek et al,20,21 Bartkowiak et al,22 Edelson et al,23 Escobar et al,24,25 and Kipnis et al26 reported on the same algorithms or databases but used substantially different approaches. Where there were multiple publications using the same algorithm and population, only the most recent was included, and the findings of the earlier publications were incorporated.20,21,27-29 This resulted in 21 papers included in this review.

Descriptive characteristics of the studies are summarized in Table 1. Nineteen of the publications were full papers and two were conference abstracts. Most of the studies (n = 18) were from the United States; there was one study from South Korea,30 one study from Portugal,31 and one study from the United Kingdom.32 In 15 of the studies, there was a strict focus on general or specific wards; 6 studies also included the ICU and/or emergency departments.

Two of the studies were clinical trials, 2 were prospective observational studies, and 17 were retrospective studies. Five studies reported on a predictive model that was active during admission. Of these, 3 reported that the model was clinically implemented and its predictions were used in the clinical workflow. None of the implemented models used AI.

All input variables are presented in Appendix Table 1.

The non-AI algorithm prediction horizons ranged from 4 to 24 hours, with a median of 24 hours (interquartile range [IQR], 12-24 hours). The AI algorithms ranged from 2 to 48 hours and had a median horizon of 14 hours (IQR, 12-24 hours).

We found three studies reporting patient outcomes. The most recent was a large multicenter implementation study by Escobar et al25 that included an extensive follow-up response to alerts; it reported significantly decreased 30-day mortality in the intervention cohort. A smaller randomized controlled trial reported no significant differences in patient outcomes with earlier warning alarms.27 A third study reported more appropriate rapid response team deployment and decreased mortality in a subgroup analysis.35

Effects, Facilitators, and Barriers

As shown in the Appendix Figure and detailed further in Table 3, the described effects were predominantly positive: 57 positive vs 11 negative effects. The positive effects fell mainly into the outcome and process domains.

All studies that compared their proposed model with an existing warning system (eg, EWS, National Early Warning Score [NEWS], or Modified Early Warning Score [MEWS]) reported superior performance, based on AUROC and reported predictive values. In 17 studies, the authors reported their model to be more useful than or superior to the EWS.20-23,26-28,34,36-41 Four studies reported real-time detection of deterioration before regular EWS,20,26,42 and three studies reported positive effects on patient-related outcomes.26,35 Four negative effects were noted regarding controllability, validity, and potential limitations.27,42

Of the 38 remarks in the Technology domain, difficulty with implementation in daily practice was a commonly cited barrier.22,24,40,42 Difficulties included creating real-time data feeds from the electronic medical record (EMR), although some successful examples were mentioned.25,27,36 Limited interpretability of AI was also considered a potential barrier.30,32,33,35,39,41 Some authors questioned the usefulness of long prediction horizons, because such predictions become decoupled from the patient's current clinical picture.39,42

Conservative attitudes toward new technologies and inadequate knowledge were mentioned as barriers.39 Authors repeatedly remarked on the difficulty of interpreting and responding to a predicted escalation, because the clinical pattern might not yet be recognizable at such an early stage. On the other hand, less invasive countermeasures were expected to be sufficient to avert further escalation at that stage. Earlier recognition of possible escalations also raised potential ethical questions, such as when to discuss palliative care.24

The heterogeneity of the general ward population and the relatively low prevalence of deterioration were mentioned as barriers.24,30,38,41 There were also concerns that not all escalations are preventable and that some patient outcomes may not be modifiable.24,38

Many investigators expected fewer false alarms and less associated alarm fatigue (reflected in higher PPVs). They also expected workflow to improve and workload to decrease.21,23,27,31,33,35,38,41 Although modern EMRs can store large amounts of patient data, some investigators felt that real-time access, data quality and validity, and data density need to improve before predictions can be considered valid.21,22,24,32,37

DISCUSSION

As the complexity and comorbidity of hospitalized adults grow, predicting clinical deterioration is becoming more important. With an ever-increasing amount of available

These studies share several important limitations. In a clinical setting, these models would function as screening tests. Almost all studies report an AUROC; however, sensitivity and PPV or NNE (defined as 1/PPV) may be more informative than AUROC when predicting low-frequency events with high potential clinical impact.44 Assessing the NNE is especially relevant because of its relation to alarm fatigue and clinician responsiveness.43 Alarm fatigue and inadequate response to alarms were repeatedly cited as potential barriers to the application of automated scores.
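A toy calculation shows how AUROC can stay reassuring while PPV and NNE worsen at low prevalence. The sketch below uses made-up risk scores, not data from any included study. It computes AUROC as the probability that a randomly chosen deteriorating patient scores higher than a randomly chosen stable patient. It then lowers prevalence from 50% to about 3% by replicating the stable patients. Replication is a simplifying assumption: it leaves both score distributions unchanged, so only prevalence varies. The alarm threshold of 0.5 is likewise hypothetical.

```python
"""Toy illustration: AUROC ignores prevalence, whereas PPV and NNE do not.

All scores are hypothetical. Lower prevalence is simulated by replicating the
stable (non-deteriorating) patients, which keeps both score distributions fixed.
"""


def auroc(pos_scores: list[float], neg_scores: list[float]) -> float:
    """P(random positive scores higher than random negative); ties count 0.5."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


def ppv_at_threshold(pos_scores: list[float], neg_scores: list[float],
                     threshold: float) -> float:
    """Share of alarms (score >= threshold) that are true deteriorations."""
    true_alarms = sum(s >= threshold for s in pos_scores)
    false_alarms = sum(s >= threshold for s in neg_scores)
    if true_alarms + false_alarms == 0:
        raise ValueError("no alarms at this threshold; PPV is undefined")
    return true_alarms / (true_alarms + false_alarms)


deteriorated = [0.9, 0.8, 0.7, 0.4]  # hypothetical scores of patients who deteriorated
stable = [0.6, 0.3, 0.2, 0.1]        # hypothetical scores of stable patients
stable_low_prev = stable * 32        # 4 of 132 patients deteriorate (about 3%)

THRESHOLD = 0.5  # hypothetical alarm threshold
for label, negatives in [("50% prevalence", stable), ("~3% prevalence", stable_low_prev)]:
    auc = auroc(deteriorated, negatives)
    ppv = ppv_at_threshold(deteriorated, negatives, THRESHOLD)
    print(f"{label}: AUROC = {auc:.3f}, PPV = {ppv:.3f}, NNE = {1 / ppv:.1f}")
```

In both scenarios, the AUROC of these toy scores is about 0.94. At the same threshold, PPV falls from 0.75 to roughly 0.09, and NNE rises from about 1.3 to about 11.7. Ranking ability is unchanged, but clinicians would evaluate almost nine times as many alarms per true deterioration.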

Although the results of our scoping review are promising, data on clinical outcomes using these algorithms are limited. Only three of the five algorithms that were active during admission were used to guide clinical decision-making.25,27,35 Kollef et al27 showed shorter hospitalizations, and Evans et al35 found decreased mortality rates in a multimorbid subgroup. Escobar et al25 found an overall and consistent decrease in mortality in a large, heterogeneous population of inpatients across 21 hospitals. The findings of Escobar et al provide strong evidence that predictive algorithms combined with structured follow-up on alarms can improve patient outcomes, but the authors acknowledge that not all facilities will have the resources to implement them.25 Dedicated round-the-clock follow-up of alarms has yet to be proven feasible for smaller institutions, and leaner solutions must be explored. The example set by Escobar et al25 should be replicated in various settings to establish its reproducibility and to substantiate the clinical impact of predictive models and structured follow-up.

According to expert opinion, high-frequency or continuous monitoring on low-acuity wards, together with AI algorithms that detect trends and patterns, will reduce failure-to-rescue rates.4,9,43 However, most studies in our review relied on periodic spot checks of vital signs, and none of the AI algorithms were implemented in clinical care (Appendix Table 1).

STRENGTHS AND LIMITATIONS

We performed a comprehensive review of the current literature using a clear and reproducible methodology to minimize the risk of missing relevant publications. The identified research comes mainly from large US centers and consists mostly of retrospective studies. Heterogeneity among inputs, end points, time horizons, and evaluation metrics makes comparisons challenging. Comments on facilitators, barriers, and effects were limited.

RECOMMENDATIONS FOR FUTURE RESEARCH

Artificial intelligence and continuous monitoring hold great promise for creating optimal predictive algorithms. Future studies should directly compare AI- and non-AI-based algorithms using continuous monitoring to determine predictive accuracy, feasibility, costs, and outcomes. Consensus on end point definitions, input variables, methodology, and reporting is needed to enhance the reproducibility, comparability, and generalizability of future research.

CONCLUSION

- van Galen LS, Struik PW, Driesen BEJM, et al. Delayed recognition of deterioration of patients in general wards is mostly caused by human related monitoring failures: a root cause analysis of unplanned ICU admissions. PLoS One. 2016;11(8):e0161393. https://doi.org/10.1371/journal.pone.0161393

- Mardini L, Lipes J, Jayaraman D. Adverse outcomes associated with delayed intensive care consultation in medical and surgical inpatients. J Crit Care. 2012;27(6):688-693. https://doi.org/10.1016/j.jcrc.2012.04.011

- Young MP, Gooder VJ, McBride K, James B, Fisher ES. Inpatient transfers to the intensive care unit: delays are associated with increased mortality and morbidity. J Gen Intern Med. 2003;18(2):77-83. https://doi.org/10.1046/j.1525-1497.2003.20441.x

- Khanna AK, Hoppe P, Saugel B. Automated continuous noninvasive ward monitoring: future directions and challenges. Crit Care. 2019;23(1):194. https://doi.org/10.1186/s13054-019-2485-7

- Ludikhuize J, Hamming A, de Jonge E, Fikkers BG. Rapid response systems in The Netherlands. Jt Comm J Qual Patient Saf. 2011;37(3):138-197. https://doi.org/10.1016/s1553-7250(11)37017-1

- Cuthbertson BH, Boroujerdi M, McKie L, Aucott L, Prescott G. Can physiological variables and early warning scoring systems allow early recognition of the deteriorating surgical patient? Crit Care Med. 2007;35(2):402-409. https://doi.org/10.1097/01.ccm.0000254826.10520.87

- Alam N, Hobbelink EL, van Tienhoven AJ, van de Ven PM, Jansma EP, Nanayakkara PWB. The impact of the use of the Early Warning Score (EWS) on patient outcomes: a systematic review. Resuscitation. 2014;85(5):587-594. https://doi.org/10.1016/j.resuscitation.2014.01.013

- Weenk M, Koeneman M, van de Belt TH, Engelen LJLPG, van Goor H, Bredie SJH. Wireless and continuous monitoring of vital signs in patients at the general ward. Resuscitation. 2019;136:47-53. https://doi.org/10.1016/j.resuscitation.2019.01.017

- Cardona-Morrell M, Prgomet M, Turner RM, Nicholson M, Hillman K. Effectiveness of continuous or intermittent vital signs monitoring in preventing adverse events on general wards: a systematic review and meta-analysis. Int J Clin Pract. 2016;70(10):806-824. https://doi.org/10.1111/ijcp.12846

- Brown H, Terrence J, Vasquez P, Bates DW, Zimlichman E. Continuous monitoring in an inpatient medical-surgical unit: a controlled clinical trial. Am J Med. 2014;127(3):226-232. https://doi.org/10.1016/j.amjmed.2013.12.004

- Mestrom E, De Bie A, van de Steeg M, Driessen M, Atallah L, Bezemer R. Implementation of an automated early warning scoring system in a surgical ward: practical use and effects on patient outcomes. PLoS One. 2019;14(5):e0213402. https://doi.org/10.1371/journal.pone.0213402

E8 Journal of Hospital Medicine® Published Online June 2021 An Official Publication of the Society of Hospital Medicine Peelen et al | Predicting Deterioration: A Scoping Review

- Jiang F, Jiang Y, Zhi H, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol. 2017;2(4):230-243. https://doi.org/10.1136/svn-2017-000101

- Iwashyna TJ, Liu V. What’s so different about big data? A primer for clinicians trained to think epidemiologically. Ann Am Thorac Soc. 2014;11(7):1130-1135. https://doi.org/10.1513/annalsats.201405-185as

- Jalali A, Bender D, Rehman M, Nadkarni V, Nataraj C. Advanced analytics for outcome prediction in intensive care units. Conf Proc IEEE Eng Med Biol Soc. 2016;2016:2520-2524. https://doi.org/10.1109/embc.2016.7591243

- Munn Z, Peters MDJ, Stern C, Tufanaru C, McArthur A, Aromataris E. Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Med Res Methodol. 2018;18(1):143. https://doi.org/10.1186/s12874-018-0611-x

- Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19-32. https://doi.org/10.1080/1364557032000119616

- Tricco AC, Lillie E, Zarin W, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467-473. https://doi.org/10.7326/m18-0850

- Gagnon MP, Desmartis M, Gagnon J, et al. Framework for user involvement in health technology assessment at the local level: views of health managers, user representatives, and clinicians. Int J Technol Assess Health Care. 2015;31(1-2):68-77. https://doi.org/10.1017/s0266462315000070

- Donabedian A. The quality of care. How can it be assessed? JAMA. 1988;260(12):1743-1748. https://doi.org/10.1001/jama.260.12.1743

- Churpek MM, Yuen TC, Winslow C, et al. Multicenter development and validation of a risk stratification tool for ward patients. Am J Respir Crit Care Med. 2014;190(6):649-655. https://doi.org/10.1164/rccm.201406-1022oc

- Churpek MM, Yuen TC, Winslow C, Meltzer DO, Kattan MW, Edelson DP. Multicenter comparison of machine learning methods and conventional regression for predicting clinical deterioration on the wards. Crit Care Med. 2016;44(2):368-374. https://doi.org/10.1097/ccm.0000000000001571

- Bartkowiak B, Snyder AM, Benjamin A, et al. Validating the electronic cardiac arrest risk triage (eCART) score for risk stratification of surgical inpatients in the postoperative setting: retrospective cohort study. Ann Surg. 2019;269(6):1059-1063. https://doi.org/10.1097/sla.0000000000002665

- Edelson DP, Carey K, Winslow CJ, Churpek MM. Less is more: detecting clinical deterioration in the hospital with machine learning using only age, heart rate and respiratory rate. Abstract presented at: American Thoracic Society International Conference; May 22, 2018; San Diego, California. Am J Respir Crit Care Med. 2018;197:A4444.

- Escobar GJ, LaGuardia JC, Turk BJ, Ragins A, Kipnis P, Draper D. Early detection of impending physiologic deterioration among patients who are not in intensive care: development of predictive models using data from an automated electronic medical record. J Hosp Med. 2012;7(5):388-395. https://doi.org/10.1002/jhm.1929

- Escobar GJ, Liu VX, Schuler A, Lawson B, Greene JD, Kipnis P. Automated identification of adults at risk for in-hospital clinical deterioration. N Engl J Med. 2020;383(20):1951-1960. https://doi.org/10.1056/nejmsa2001090

- Kipnis P, Turk BJ, Wulf DA, et al. Development and validation of an electronic medical record-based alert score for detection of inpatient deterioration outside the ICU. J Biomed Inform. 2016;64:10-19. https://doi.org/10.1016/j.jbi.2016.09.013

- Kollef MH, Chen Y, Heard K, et al. A randomized trial of real-time automated clinical deterioration alerts sent to a rapid response team. J Hosp Med. 2014;9(7):424-429. https://doi.org/10.1002/jhm.2193

- Hackmann G, Chen M, Chipara O, et al. Toward a two-tier clinical warning system for hospitalized patients. AMIA Annu Symp Proc. 2011;2011:511-519.

- Bailey TC, Chen Y, Mao Y, Lu C, Hackmann G, Micek ST. A trial of a real-time alert for clinical deterioration in patients hospitalized on general medical wards. J Hosp Med. 2013;8(5):236-242. https://doi.org/10.1002/jhm.2009

- Kwon JM, Lee Y, Lee Y, Lee S, Park J. An algorithm based on deep learning for predicting in-hospital cardiac arrest. J Am Heart Assoc. 2018;7(13):e008678. https://doi.org/10.1161/jaha.118.008678

- Correia S, Gomes A, Shahriari S, Almeida JP, Severo M, Azevedo A. Performance of the early warning system vital to predict unanticipated higher-level of care admission and in-hospital death of ward patients. Value Health. 2018;21(S3):S360. https://doi.org/10.1016/j.jval.2018.09.2152

- Shamout FE, Zhu T, Sharma P, Watkinson PJ, Clifton DA. Deep interpretable early warning system for the detection of clinical deterioration. IEEE J Biomed Health Inform. 2020;24(2):437-446. https://doi.org/10.1109/jbhi.2019.2937803

- Bai Y, Do DH, Harris PRE, et al. Integrating monitor alarms with laboratory test results to enhance patient deterioration prediction. J Biomed Inform. 2015;53:81-92. https://doi.org/10.1016/j.jbi.2014.09.006

- Hu X, Sapo M, Nenov V, et al. Predictive combinations of monitor alarms preceding in-hospital code blue events. J Biomed Inform. 2012;45(5):913-921. https://doi.org/10.1016/j.jbi.2012.03.001

- Evans RS, Kuttler KG, Simpson KJ, et al. Automated detection of physiologic deterioration in hospitalized patients. J Am Med Inform Assoc. 2015;22(2):350-360. https://doi.org/10.1136/amiajnl-2014-002816

- Ghosh E, Eshelman L, Yang L, Carlson E, Lord B. Early deterioration indicator: data-driven approach to detecting deterioration in general ward. Resuscitation. 2018;122:99-105. https://doi.org/10.1016/j.resuscitation.2017.10.026

- Kang MA, Churpek MM, Zadravecz FJ, Adhikari R, Twu NM, Edelson DP. Real-time risk prediction on the wards: a feasibility study. Crit Care Med. 2016;44(8):1468-1473. https://doi.org/10.1097/ccm.0000000000001716

- Hu SB, Wong DJL, Correa A, Li N, Deng JC. Prediction of clinical deterioration in hospitalized adult patients with hematologic malignancies using a neural network model. PLoS One. 2016;11(8):e0161401. https://doi.org/10.1371/journal.pone.0161401

- Rothman MJ, Rothman SI, Beals J 4th. Development and validation of a continuous measure of patient condition using the electronic medical record. J Biomed Inform. 2013;46(5):837-848. https://doi.org/10.1016/j.jbi.2013.06.011

- Alaa AM, Yoon J, Hu S, van der Schaar M. Personalized risk scoring for critical care prognosis using mixtures of Gaussian processes. IEEE Trans Biomed Eng. 2018;65(1):207-218. https://doi.org/10.1109/tbme.2017.2698602

- Mohamadlou H, Panchavati S, Calvert J, et al. Multicenter validation of a machine-learning algorithm for 48-h all-cause mortality prediction. Health Informatics J. 2020;26(3):1912-1925. https://doi.org/10.1177/1460458219894494

- Alvarez CA, Clark CA, Zhang S, et al. Predicting out of intensive care unit cardiopulmonary arrest or death using electronic medical record data. BMC Med Inform Decis Mak. 2013;13:28. https://doi.org/10.1186/1472-6947-13-28

- Vincent JL, Einav S, Pearse R, et al. Improving detection of patient deterioration in the general hospital ward environment. Eur J Anaesthesiol. 2018;35(5):325-333. https://doi.org/10.1097/eja.0000000000000798

- Romero-Brufau S, Huddleston JM, Escobar GJ, Liebow M. Why the C-statistic is not informative to evaluate early warning scores and what metrics to use. Crit Care. 2015;19(1):285. https://doi.org/10.1186/s13054-015-0999-1

- Weenk M, Bredie SJ, Koeneman M, Hesselink G, van Goor H, van de Belt TH. Continuous monitoring of the vital signs in the general ward using wearable devices: randomized controlled trial. J Med Internet Res. 2020;22(6):e15471. https://doi.org/10.2196/15471

- Wellner B, Grand J, Canzone E, et al. Predicting unplanned transfers to the intensive care unit: a machine learning approach leveraging diverse clinical elements. JMIR Med Inform. 2017;5(4):e45. https://doi.org/10.2196/medinform.8680

- Elliott M, Baird J. Pulse oximetry and the enduring neglect of respiratory rate assessment: a commentary on patient surveillance. Br J Nurs. 2019;28(19):1256-1259. https://doi.org/10.12968/bjon.2019.28.19.1256

- Blackwell JN, Keim-Malpass J, Clark MT, et al. Early detection of in-patient deterioration: one prediction model does not fit all. Crit Care Explor. 2020;2(5):e0116. https://doi.org/10.1097/cce.0000000000000116

- Johnson AEW, Pollard TJ, Shen L, et al. MIMIC-III, a freely accessible critical care database. Sci Data. 2016;3:160035. https://doi.org/10.1038/sdata.2016.35

- Bodenheimer T, Sinsky C. From triple to quadruple aim: care of the patient requires care of the provider. Ann Fam Med. 2014;12(6):573-576. https://doi.org/10.1370/afm.1713

- Kirkland LL, Malinchoc M, O’Byrne M, et al. A clinical deterioration prediction tool for internal medicine patients. Am J Med Qual. 2013;28(2):135-142. https://doi.org/10.1177/1062860612450459

The early identification of clinical deterioration among adult hospitalized patients remains a challenge.1 Delayed identification is associated with increased morbidity and mortality, unplanned intensive care unit (ICU) admissions, prolonged hospitalization, and higher costs.2,3 Earlier detection of deterioration using predictive algorithms of vital sign monitoring might avoid these negative outcomes.4 In this scoping review, we summarize current algorithms and their evidence.

Vital signs provide the backbone for detecting clinical deterioration. Early warning scores (EWS) and outreach protocols were developed to bring structure to the assessment of vital signs. Most EWS claim to predict clinical end points such as unplanned ICU admission up to 24 hours in advance.5,6 Reviews of EWS showed a positive trend toward reduced length of stay and mortality. However, conclusions about general efficacy could not be generated because of case heterogeneity and methodologic shortcomings.4,7 Continuous automated vital sign monitoring of patients on the general ward can now be accomplished with wearable devices.8 The first reports on continuous monitoring showed earlier detection of deterioration but not improved clinical end points.4,9 Since then, different reports on continuous monitoring have shown positive effects but concluded that unprocessed monitoring data per se falls short of generating actionable alarms.4,10,11

Predictive algorithms, which often use artificial intelligence (AI), are increasingly employed to recognize complex patterns or abnormalities and support predictions of events in big data sets.12,13 Especially when combined with continuous vital sign monitoring, predictive algorithms have the potential to expedite detection of clinical deterioration and improve patient outcomes. Predictive algorithms using vital signs in the ICU have shown promising results.14 The impact of predictive algorithms on the general wards, however, is unclear.

The aims of our scoping review were to explore the extent and range of and evidence for predictive vital signs–based algorithms on the adult general ward; to describe the variety of these algorithms; and to categorize effects, facilitators, and barriers of their implementation.15

MATERIALS AND METHODS

We performed a scoping review to create a summary of the current state of research. We used the five-step method of Levac and followed the Preferred Reporting Items for Systematic reviews and Meta-Analyses Extension for Scoping Reviews guidelines (Appendix 1).16,17

PubMed, Embase, and CINAHL databases were searched for English-language articles written between January 1, 2010, and November 20, 2020. We developed the search queries with an experienced information scientist, and we used database-specific terms and strategies for input, clinical outcome, method, predictive capability, and population (Appendix 2). Additionally, we searched the references of the selected articles, as well as publications citing these articles.

All studies identified were screened by title and abstract by two researchers (RP and YE). The selected studies were read in their entirety and checked for eligibility using the following inclusion criteria: automated algorithm; vital signs-based; real-time prediction; of clinical deterioration; in an adult, general ward population. In cases where there were successive publications with the same algorithm and population, we selected the most recent study.

For screening and selection, we used the Rayyan QCRI online tool (Qatar Computing Research Institute) and Endnote X9 (Clarivate Analytics). We extracted information using a data extraction form and organized it into descriptive characteristics of the selected studies (Table 1): an input data table showing number of admissions, intermittent or continuous measurements, vital signs measured, laboratory results (Appendix Table 1), a table summarizing study designs and settings (Appendix Table 2), and a prediction performance table (Table 2). We report characteristics of the populations and algorithms, prediction specifications such as area under the receiver operating curve (AUROC), and predictive values. Predictive values are affected by prevalence, which may differ among populations. To compare the algorithms, we calculated an indexed positive predictive value (PPV) and a number needed to evaluate (NNE) using a weighted average prevalence of clinical deterioration of 3.0%.

We defined clinical deterioration as end points, including rapid response team activation, cardiopulmonary resuscitation, transfer to an ICU, or death.

Effects, facilitators, and barriers were identified and categorized using ATLAS.ti 8 software (ATLAS.ti) and evaluated by three researchers (RP, MK, and THvdB). These were categorized using the adapted frameworks of Gagnon et al18 for the barriers and facilitators and of Donabedian19 for the effects (Appendix 3).

The Gagnon et al framework was adapted by changing two of four domains—that is, “Individual” was changed to “Professional” and “Human” to “Physiology.” The domains of “Technology” and “Organization” remained unchanged. The Donabedian domains of “Outcome,” “Process,” and “Structure” also remained unchanged (Table 3).

We divided the studies into two groups: studies on predictive algorithms with and without AI when reporting on characteristics and performance. For the secondary aim of exploring implementation impact, we reported facilitators and barriers in a narrative way, highlighting the most frequent and notable findings.

RESULTS

As shown in the Figure, we found 1741 publications, of which we read the full-text of 109. There were 1632 publications that did not meet the inclusion criteria. The publications by Churpek et al,20,21 Bartkiowak et al,22 Edelson et al,23 Escobar et al,24,25 and Kipnis et al26 reported on the same algorithms or databases but had significantly different approaches. For multiple publications using the same algorithm and population, the most recent was named with inclusion of the earlier findings.20,21,27-29 The resulting 21 papers are included in this review.

Descriptive characteristics of the studies are summarized in Table 1. Nineteen of the publications were full papers and two were conference abstracts. Most of the studies (n = 18) were from the United States; there was one study from South Korea,30 one study from Portugal,31 and one study from the United Kingdom.32 In 15 of the studies, there was a strict focus on general or specific wards; 6 studies also included the ICU and/or emergency departments.

Two of the studies were clinical trials, 2 were prospective observational studies, and 17 were retrospective studies. Five studies reported on a predictive model that was active during admission; of these, 3 reported that the model had been clinically implemented, with its predictions used in the clinical workflow. None of the implemented models used AI.

All input variables are presented in Appendix Table 1.

The non-AI algorithm prediction horizons ranged from 4 to 24 hours, with a median of 24 hours (interquartile range [IQR], 12-24 hours). The AI algorithms ranged from 2 to 48 hours and had a median horizon of 14 hours (IQR, 12-24 hours).
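To make the notion of a prediction horizon concrete, the following is a minimal sketch, not taken from any of the reviewed studies, of how a single prediction could be labeled against the composite end point used in this review: a prediction made at time t counts as positive if rapid response team activation, cardiopulmonary resuscitation, ICU transfer, or death occurs within the following h hours. The event names, the `Event` class, the `label_prediction` function, and the half-open window (events exactly at prediction time are excluded) are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Sequence

# Hypothetical labels for the composite end point: rapid response team
# activation, cardiopulmonary resuscitation, ICU transfer, or death.
DETERIORATION_EVENTS = {"rapid_response", "cpr", "icu_transfer", "death"}


@dataclass
class Event:
    kind: str       # event type; only DETERIORATION_EVENTS count as end points
    time: datetime  # when the event occurred


def label_prediction(pred_time: datetime, events: Sequence[Event], horizon_hours: float) -> bool:
    """Return True if an end point occurs in the window (pred_time, pred_time + horizon]."""
    window_end = pred_time + timedelta(hours=horizon_hours)
    return any(
        e.kind in DETERIORATION_EVENTS and pred_time < e.time <= window_end
        for e in events
    )


if __name__ == "__main__":
    t0 = datetime(2020, 1, 1, 8, 0)
    events = [
        Event("lab_draw", t0 + timedelta(hours=2)),       # not an end point
        Event("icu_transfer", t0 + timedelta(hours=14)),  # end point 14 h after prediction
    ]
    print(label_prediction(t0, events, horizon_hours=12))  # False: outside a 12-h horizon
    print(label_prediction(t0, events, horizon_hours=24))  # True: inside a 24-h horizon
```

Lengthening the horizon can only turn negative labels into positive ones, so the same model evaluated at 2 hours and at 48 hours is effectively solving different prediction tasks.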

We found three studies reporting patient outcomes. The most recent of these was a large multicenter implementation study by Escobar et al25 that included an extensive follow-up response. This study reported a significantly decreased 30-day mortality in the intervention cohort. A smaller randomized controlled trial reported no significant differences in patient outcomes with earlier warning alarms.27 A third study reported more appropriate rapid response team deployment and decreased mortality in a subgroup analysis.35

Effects, Facilitators, and Barriers

As shown in the Appendix Figure and further detailed in Table 3, the described effects were predominantly positive (57 positive vs 11 negative effects), and the positive effects fell mainly within the Outcome and Process domains.

All studies that compared their proposed model with an established warning system (eg, EWS, National Early Warning Score [NEWS], Modified Early Warning Score [MEWS]) reported superior performance, based on AUROC and reported predictive values. In 17 studies, the authors described their model as more useful than or superior to the EWS.20-23,26-28,34,36-41 Four studies reported real-time detection of deterioration before it was detected by regular EWS,20,26,42 and three studies reported positive effects on patient-related outcomes.26,35 Four negative effects concerned controllability, validity, and potential limitations.27,42
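Because most of these comparisons rest on AUROC, a minimal sketch of what that number measures may help. The function below computes AUROC in its Mann-Whitney form: the probability that a randomly chosen patient who deteriorated receives a higher score than a randomly chosen patient who did not. The function name `auroc` and the toy scores are illustrative assumptions, not data from any reviewed study; in practice a library routine such as scikit-learn's `roc_auc_score` would normally be used.

```python
from typing import Sequence


def auroc(scores_pos: Sequence[float], scores_neg: Sequence[float]) -> float:
    """AUROC as the probability that a randomly chosen positive case (deteriorated)
    scores higher than a randomly chosen negative case. Ties count as one half.
    Pairwise O(n*m) comparison: fine for illustration, slow for large data sets."""
    if not scores_pos or not scores_neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


if __name__ == "__main__":
    # Made-up toy scores, NOT results from any reviewed study.
    ews_pos, ews_neg = [5, 3, 6], [2, 3, 1, 4]                     # integer, EWS-like score
    model_pos, model_neg = [0.9, 0.7, 0.8], [0.2, 0.4, 0.1, 0.75]  # hypothetical model risk
    print(round(auroc(ews_pos, ews_neg), 3))      # 0.875
    print(round(auroc(model_pos, model_neg), 3))  # 0.917
```

Integer scores such as EWS produce many ties, which is why ties receive half credit here. This quantity depends only on how scores rank deteriorating against nondeteriorating patients, not on how common deterioration is.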

Of the 38 remarks in the Technology domain, difficulty implementing the models in daily practice was a commonly cited barrier.22,24,40,42 These difficulties included creating real-time data feeds from the electronic medical record (EMR), although some successful examples were described.25,27,36 The limited interpretability of AI was also considered a potential barrier.30,32,33,35,39,41 Some authors questioned the usefulness of long prediction horizons, because predictions made far in advance may be decoupled from the clinical picture at the bedside.39,42

Conservative attitudes toward new technologies and inadequate knowledge were mentioned as barriers.39 Several authors noted the difficulty of interpreting and responding to a predicted escalation, because the clinical pattern may not yet be recognizable at such an early stage. On the other hand, less invasive countermeasures were expected to be sufficient to avert further escalation at this early stage. Earlier recognition of possible escalations also raised ethical questions, such as when to discuss palliative care.24

The heterogeneity of the general ward population and the relatively low prevalence of deterioration were mentioned as barriers.24,30,38,41 There were also concerns that not all escalations are preventable and that some patient outcomes may not be modifiable.24,38

Many investigators expected their models to reduce false alarms and the associated alarm fatigue (reflected in higher PPVs), to improve workflow, and to decrease workload.21,23,27,31,33,35,38,41 Although modern EMRs can store large amounts of patient data, some investigators felt that real-time access, data quality and validity, and data density must improve to ensure valid predictions.21,22,24,32,37

DISCUSSION

As the complexity and comorbidity of hospitalized adults grow, predicting clinical deterioration is becoming more important. With an ever-increasing amount of available

These studies share several important limitations. In a clinical setting, these models would function as screening tests. Almost all studies reported an AUROC; however, sensitivity and PPV or NNE (defined as 1/PPV) may be more informative than AUROC when predicting low-frequency events with high potential clinical impact, because AUROC does not reflect prevalence, whereas PPV and NNE show how many alarms must be evaluated for each true deterioration.44 Assessing the NNE is especially relevant because of its relation to alarm fatigue and clinician responsiveness.43 Alarm fatigue and inadequate responses to alarms were repeatedly cited as potential barriers to the application of automated scores.
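Unlike AUROC, PPV depends on how common deterioration is, which is why this review indexed PPV and NNE to a common weighted average prevalence of 3.0%. The sketch below assumes one plausible way to do such indexing (an assumption; other procedures are possible): applying Bayes' theorem to a model's sensitivity and specificity at the chosen prevalence, then taking NNE = 1/PPV. The function names and the operating point (sensitivity 0.50, specificity 0.95) are hypothetical.

```python
def ppv_at_prevalence(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value from Bayes' theorem at a chosen prevalence."""
    for name, value in (("sensitivity", sensitivity),
                        ("specificity", specificity),
                        ("prevalence", prevalence)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1]")
    true_pos = sensitivity * prevalence                    # share of patients correctly flagged
    false_pos = (1.0 - specificity) * (1.0 - prevalence)   # share of patients falsely flagged
    if true_pos + false_pos == 0.0:
        raise ValueError("no alarms would be raised, so PPV is undefined")
    return true_pos / (true_pos + false_pos)


def nne(ppv: float) -> float:
    """Number needed to evaluate: alarms evaluated per true deterioration (1/PPV)."""
    if ppv <= 0.0:
        raise ValueError("NNE is undefined when PPV is 0")
    return 1.0 / ppv


if __name__ == "__main__":
    sens, spec = 0.50, 0.95  # hypothetical operating point, not from any reviewed study
    for prev in (0.03, 0.10):  # 3% mirrors the weighted average prevalence used in this review
        p = ppv_at_prevalence(sens, spec, prev)
        print(f"prevalence {prev:.0%}: PPV {p:.3f}, NNE {nne(p):.2f}")
    # prevalence 3%: PPV 0.236, NNE 4.23
    # prevalence 10%: PPV 0.526, NNE 1.90
```

With sensitivity and specificity held fixed, PPV falls from about 0.53 to about 0.24 when prevalence drops from 10% to 3%, so clinicians would evaluate roughly four alarms instead of two for each true deterioration; this is the link between NNE and alarm fatigue.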

Although the results of our scoping review are promising, data on the clinical outcomes of using these algorithms are limited. Of the five algorithms that were active during admission, only three were used to guide clinical decision-making.25,27,35 Kollef et al27 showed shorter hospitalizations, and Evans et al35 found decreased mortality rates in a multimorbid subgroup. Escobar et al25 found an overall and consistent decrease in mortality in a large, heterogeneous population of inpatients across 21 hospitals. While Escobar et al’s findings provide strong evidence that predictive algorithms and structured follow-up on alarms can improve patient outcomes, the authors acknowledge that not all facilities will have the resources to implement them.25 Dedicated round-the-clock follow-up of alarms has yet to be proven feasible for smaller institutions, and leaner solutions must be explored. The approach of Escobar et al25 should be replicated in other settings to establish its reproducibility and to substantiate the clinical impact of predictive models and structured follow-up.

According to expert opinion, high-frequency or continuous monitoring on low-acuity wards, combined with AI algorithms that detect trends and patterns, is expected to reduce failure-to-rescue rates.4,9,43 However, most studies in our review focused on periodic spot-checked vital signs, and none of the AI algorithms were implemented in clinical care (Appendix Table 1).

STRENGTHS AND LIMITATIONS

We performed a comprehensive review of the current literature, using a clear and reproducible methodology to minimize the risk of missing relevant publications. The identified research is mainly limited to large US centers and consists mostly of retrospective studies. Heterogeneity in inputs, end points, time horizons, and evaluation metrics makes comparisons challenging. In addition, the included studies offered few comments on facilitators, barriers, and effects.

RECOMMENDATIONS FOR FUTURE RESEARCH

Artificial intelligence and continuous monitoring hold great promise for developing better predictive algorithms. Future studies should directly compare AI- and non-AI-based algorithms using continuous monitoring to determine predictive accuracy, feasibility, costs, and outcomes. A consensus on end point definitions, input variables, methodology, and reporting is needed to enhance the reproducibility, comparability, and generalizability of future research.

CONCLUSION

REFERENCES

- van Galen LS, Struik PW, Driesen BEJM, et al. Delayed recognition of deterioration of patients in general wards is mostly caused by human related monitoring failures: a root cause analysis of unplanned ICU admissions. PLoS One. 2016;11(8):e0161393. https://doi.org/10.1371/journal.pone.0161393

- Mardini L, Lipes J, Jayaraman D. Adverse outcomes associated with delayed intensive care consultation in medical and surgical inpatients. J Crit Care. 2012;27(6):688-693. https://doi.org/10.1016/j.jcrc.2012.04.011

- Young MP, Gooder VJ, McBride K, James B, Fisher ES. Inpatient transfers to the intensive care unit: delays are associated with increased mortality and morbidity. J Gen Intern Med. 2003;18(2):77-83. https://doi.org/10.1046/j.1525-1497.2003.20441.x

- Khanna AK, Hoppe P, Saugel B. Automated continuous noninvasive ward monitoring: future directions and challenges. Crit Care. 2019;23(1):194. https://doi.org/10.1186/s13054-019-2485-7

- Ludikhuize J, Hamming A, de Jonge E, Fikkers BG. Rapid response systems in The Netherlands. Jt Comm J Qual Patient Saf. 2011;37(3):138-197. https://doi.org/10.1016/s1553-7250(11)37017-1

- Cuthbertson BH, Boroujerdi M, McKie L, Aucott L, Prescott G. Can physiological variables and early warning scoring systems allow early recognition of the deteriorating surgical patient? Crit Care Med. 2007;35(2):402-409. https://doi.org/10.1097/01.ccm.0000254826.10520.87

- Alam N, Hobbelink EL, van Tienhoven AJ, van de Ven PM, Jansma EP, Nanayakkara PWB. The impact of the use of the Early Warning Score (EWS) on patient outcomes: a systematic review. Resuscitation. 2014;85(5):587-594. https://doi.org/10.1016/j.resuscitation.2014.01.013

- Weenk M, Koeneman M, van de Belt TH, Engelen LJLPG, van Goor H, Bredie SJH. Wireless and continuous monitoring of vital signs in patients at the general ward. Resuscitation. 2019;136:47-53. https://doi.org/10.1016/j.resuscitation.2019.01.017

- Cardona-Morrell M, Prgomet M, Turner RM, Nicholson M, Hillman K. Effectiveness of continuous or intermittent vital signs monitoring in preventing adverse events on general wards: a systematic review and meta-analysis. Int J Clin Pract. 2016;70(10):806-824. https://doi.org/10.1111/ijcp.12846

- Brown H, Terrence J, Vasquez P, Bates DW, Zimlichman E. Continuous monitoring in an inpatient medical-surgical unit: a controlled clinical trial. Am J Med. 2014;127(3):226-232. https://doi.org/10.1016/j.amjmed.2013.12.004

- Mestrom E, De Bie A, van de Steeg M, Driessen M, Atallah L, Bezemer R. Implementation of an automated early warning scoring system in a surgical ward: practical use and effects on patient outcomes. PLoS One. 2019;14(5):e0213402. https://doi.org/10.1371/journal.pone.0213402

Journal of Hospital Medicine® Published Online June 2021. An Official Publication of the Society of Hospital Medicine. Peelen et al | Predicting Deterioration: A Scoping Review

- Jiang F, Jiang Y, Zhi H, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol. 2017;2(4):230-243. https://doi.org/10.1136/svn-2017-000101

- Iwashyna TJ, Liu V. What’s so different about big data? A primer for clinicians trained to think epidemiologically. Ann Am Thorac Soc. 2014;11(7):1130-1135. https://doi.org/10.1513/annalsats.201405-185as

- Jalali A, Bender D, Rehman M, Nadkarni V, Nataraj C. Advanced analytics for outcome prediction in intensive care units. Conf Proc IEEE Eng Med Biol Soc. 2016;2016:2520-2524. https://doi.org/10.1109/embc.2016.7591243

- Munn Z, Peters MDJ, Stern C, Tufanaru C, McArthur A, Aromataris E. Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Med Res Methodol. 2018;18(1):143. https://doi.org/10.1186/s12874-018-0611-x

- Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19-32. https://doi.org/10.1080/1364557032000119616

- Tricco AC, Lillie E, Zarin W, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467-473. https://doi.org/10.7326/m18-0850

- Gagnon MP, Desmartis M, Gagnon J, et al. Framework for user involvement in health technology assessment at the local level: views of health managers, user representatives, and clinicians. Int J Technol Assess Health Care. 2015;31(1-2):68-77. https://doi.org/10.1017/s0266462315000070

- Donabedian A. The quality of care. How can it be assessed? JAMA. 1988;260(12):1743-1748. https://doi.org/10.1001/jama.260.12.1743

- Churpek MM, Yuen TC, Winslow C, et al. Multicenter development and validation of a risk stratification tool for ward patients. Am J Respir Crit Care Med. 2014;190(6):649-655. https://doi.org/10.1164/rccm.201406-1022oc

- Churpek MM, Yuen TC, Winslow C, Meltzer DO, Kattan MW, Edelson DP. Multicenter comparison of machine learning methods and conventional regression for predicting clinical deterioration on the wards. Crit Care Med. 2016;44(2):368-374. https://doi.org/10.1097/ccm.0000000000001571

- Bartkowiak B, Snyder AM, Benjamin A, et al. Validating the electronic cardiac arrest risk triage (eCART) score for risk stratification of surgical inpatients in the postoperative setting: retrospective cohort study. Ann Surg. 2019;269(6):1059-1063. https://doi.org/10.1097/sla.0000000000002665

- Edelson DP, Carey K, Winslow CJ, Churpek MM. Less is more: detecting clinical deterioration in the hospital with machine learning using only age, heart rate and respiratory rate. Abstract presented at: American Thoracic Society International Conference; May 22, 2018; San Diego, California. Am J Respir Crit Care Med. 2018;197:A4444.

- Escobar GJ, LaGuardia JC, Turk BJ, Ragins A, Kipnis P, Draper D. Early detection of impending physiologic deterioration among patients who are not in intensive care: development of predictive models using data from an automated electronic medical record. J Hosp Med. 2012;7(5):388-395. https://doi.org/10.1002/jhm.1929

- Escobar GJ, Liu VX, Schuler A, Lawson B, Greene JD, Kipnis P. Automated identification of adults at risk for in-hospital clinical deterioration. N Engl J Med. 2020;383(20):1951-1960. https://doi.org/10.1056/nejmsa2001090

- Kipnis P, Turk BJ, Wulf DA, et al. Development and validation of an electronic medical record-based alert score for detection of inpatient deterioration outside the ICU. J Biomed Inform. 2016;64:10-19. https://doi.org/10.1016/j.jbi.2016.09.013

- Kollef MH, Chen Y, Heard K, et al. A randomized trial of real-time automated clinical deterioration alerts sent to a rapid response team. J Hosp Med. 2014;9(7):424-429. https://doi.org/10.1002/jhm.2193

- Hackmann G, Chen M, Chipara O, et al. Toward a two-tier clinical warning system for hospitalized patients. AMIA Annu Symp Proc. 2011;2011:511-519.

- Bailey TC, Chen Y, Mao Y, Lu C, Hackmann G, Micek ST. A trial of a real-time alert for clinical deterioration in patients hospitalized on general medical wards. J Hosp Med. 2013;8(5):236-242. https://doi.org/10.1002/jhm.2009

- Kwon JM, Lee Y, Lee Y, Lee S, Park J. An algorithm based on deep learning for predicting in-hospital cardiac arrest. J Am Heart Assoc. 2018;7(13):e008678. https://doi.org/10.1161/jaha.118.008678

- Correia S, Gomes A, Shahriari S, Almeida JP, Severo M, Azevedo A. Performance of the early warning system vital to predict unanticipated higher-level of care admission and in-hospital death of ward patients. Value Health. 2018;21(S3):S360. https://doi.org/10.1016/j.jval.2018.09.2152

- Shamout FE, Zhu T, Sharma P, Watkinson PJ, Clifton DA. Deep interpretable early warning system for the detection of clinical deterioration. IEEE J Biomed Health Inform. 2020;24(2):437-446. https://doi.org/10.1109/jbhi.2019.2937803

- Bai Y, Do DH, Harris PRE, et al. Integrating monitor alarms with laboratory test results to enhance patient deterioration prediction. J Biomed Inform. 2015;53:81-92. https://doi.org/10.1016/j.jbi.2014.09.006

- Hu X, Sapo M, Nenov V, et al. Predictive combinations of monitor alarms preceding in-hospital code blue events. J Biomed Inform. 2012;45(5):913-921. https://doi.org/10.1016/j.jbi.2012.03.001

- Evans RS, Kuttler KG, Simpson KJ, et al. Automated detection of physiologic deterioration in hospitalized patients. J Am Med Inform Assoc. 2015;22(2):350-360. https://doi.org/10.1136/amiajnl-2014-002816

- Ghosh E, Eshelman L, Yang L, Carlson E, Lord B. Early deterioration indicator: data-driven approach to detecting deterioration in general ward. Resuscitation. 2018;122:99-105. https://doi.org/10.1016/j.resuscitation.2017.10.026

- Kang MA, Churpek MM, Zadravecz FJ, Adhikari R, Twu NM, Edelson DP. Real-time risk prediction on the wards: a feasibility study. Crit Care Med. 2016;44(8):1468-1473. https://doi.org/10.1097/ccm.0000000000001716

- Hu SB, Wong DJL, Correa A, Li N, Deng JC. Prediction of clinical deterioration in hospitalized adult patients with hematologic malignancies using a neural network model. PLoS One. 2016;11(8):e0161401. https://doi.org/10.1371/journal.pone.0161401

- Rothman MJ, Rothman SI, Beals J 4th. Development and validation of a continuous measure of patient condition using the electronic medical record. J Biomed Inform. 2013;46(5):837-848. https://doi.org/10.1016/j.jbi.2013.06.011

- van Galen LS, Struik PW, Driesen BEJM, et al. Delayed recognition of deterioration of patients in general wards is mostly caused by human related monitoring failures: a root cause analysis of unplanned ICU admissions. PLoS One. 2016;11(8):e0161393. https://doi.org/10.1371/journal.pone.0161393

- Mardini L, Lipes J, Jayaraman D. Adverse outcomes associated with delayed intensive care consultation in medical and surgical inpatients. J Crit Care. 2012;27(6):688-693. https://doi.org/10.1016/j.jcrc.2012.04.011

- Young MP, Gooder VJ, McBride K, James B, Fisher ES. Inpatient transfers to the intensive care unit: delays are associated with increased mortality and morbidity. J Gen Intern Med. 2003;18(2):77-83. https://doi.org/10.1046/j.1525-1497.2003.20441.x

- Khanna AK, Hoppe P, Saugel B. Automated continuous noninvasive ward monitoring: future directions and challenges. Crit Care. 2019;23(1):194. https://doi.org/10.1186/s13054-019-2485-7

- Ludikhuize J, Hamming A, de Jonge E, Fikkers BG. Rapid response systems in The Netherlands. Jt Comm J Qual Patient Saf. 2011;37(3):138-197. https://doi.org/10.1016/s1553-7250(11)37017-1

- Cuthbertson BH, Boroujerdi M, McKie L, Aucott L, Prescott G. Can physiological variables and early warning scoring systems allow early recognition of the deteriorating surgical patient? Crit Care Med. 2007;35(2):402-409. https://doi.org/10.1097/01.ccm.0000254826.10520.87

- Alam N, Hobbelink EL, van Tienhoven AJ, van de Ven PM, Jansma EP, Nanayakkara PWB. The impact of the use of the Early Warning Score (EWS) on patient outcomes: a systematic review. Resuscitation. 2014;85(5):587-594. https://doi.org/10.1016/j.resuscitation.2014.01.013

- Weenk M, Koeneman M, van de Belt TH, Engelen LJLPG, van Goor H, Bredie SJH. Wireless and continuous monitoring of vital signs in patients at the general ward. Resuscitation. 2019;136:47-53. https://doi.org/10.1016/j.resuscitation.2019.01.017

- Cardona-Morrell M, Prgomet M, Turner RM, Nicholson M, Hillman K. Effectiveness of continuous or intermittent vital signs monitoring in preventing adverse events on general wards: a systematic review and meta-analysis. Int J Clin Pract. 2016;70(10):806-824. https://doi.org/10.1111/ijcp.12846

- Brown H, Terrence J, Vasquez P, Bates DW, Zimlichman E. Continuous monitoring in an inpatient medical-surgical unit: a controlled clinical trial. Am J Med. 2014;127(3):226-232. https://doi.org/10.1016/j.amjmed.2013.12.004

- Mestrom E, De Bie A, van de Steeg M, Driessen M, Atallah L, Bezemer R. Implementation of an automated early warning scoring system in a surgical ward: practical use and effects on patient outcomes. PLoS One. 2019;14(5):e0213402. https://doi.org/10.1371/journal.pone.0213402

Journal of Hospital Medicine® Published Online June 2021. An Official Publication of the Society of Hospital Medicine. Peelen et al | Predicting Deterioration: A Scoping Review

- Jiang F, Jiang Y, Zhi H, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol. 2017;2(4):230-243. https://doi.org/10.1136/svn-2017-000101

- Iwashyna TJ, Liu V. What’s so different about big data? A primer for clinicians trained to think epidemiologically. Ann Am Thorac Soc. 2014;11(7):1130-1135. https://doi.org/10.1513/annalsats.201405-185as

- Jalali A, Bender D, Rehman M, Nadkarni V, Nataraj C. Advanced analytics for outcome prediction in intensive care units. Conf Proc IEEE Eng Med Biol Soc. 2016;2016:2520-2524. https://doi.org/10.1109/embc.2016.7591243

- Munn Z, Peters MDJ, Stern C, Tufanaru C, McArthur A, Aromataris E. Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Med Res Methodol. 2018;18(1):143. https://doi.org/10.1186/s12874-018-0611-x

- Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19-32. https://doi.org/10.1080/1364557032000119616

- Tricco AC, Lillie E, Zarin W, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467-473. https://doi.org/10.7326/m18-0850

- Gagnon MP, Desmartis M, Gagnon J, et al. Framework for user involvement in health technology assessment at the local level: views of health managers, user representatives, and clinicians. Int J Technol Assess Health Care. 2015;31(1-2):68-77. https://doi.org/10.1017/s0266462315000070

- Donabedian A. The quality of care. How can it be assessed? JAMA. 1988;260(12):1743-1748. https://doi.org/10.1001/jama.260.12.1743

- Churpek MM, Yuen TC, Winslow C, et al. Multicenter development and validation of a risk stratification tool for ward patients. Am J Respir Crit Care Med. 2014;190(6):649-655. https://doi.org/10.1164/rccm.201406-1022oc

- Churpek MM, Yuen TC, Winslow C, Meltzer DO, Kattan MW, Edelson DP. Multicenter comparison of machine learning methods and conventional regression for predicting clinical deterioration on the wards. Crit Care Med. 2016;44(2):368-374. https://doi.org/10.1097/ccm.0000000000001571

- Bartkowiak B, Snyder AM, Benjamin A, et al. Validating the electronic cardiac arrest risk triage (eCART) score for risk stratification of surgical inpatients in the postoperative setting: retrospective cohort study. Ann Surg. 2019;269(6):1059-1063. https://doi.org/10.1097/sla.0000000000002665

- Edelson DP, Carey K, Winslow CJ, Churpek MM. Less is more: detecting clinical deterioration in the hospital with machine learning using only age, heart rate and respiratory rate. Abstract presented at: American Thoracic Society International Conference; May 22, 2018; San Diego, California. Am J Respir Crit Care Med. 2018;197:A4444.

- Escobar GJ, LaGuardia JC, Turk BJ, Ragins A, Kipnis P, Draper D. Early detection of impending physiologic deterioration among patients who are not in intensive care: development of predictive models using data from an automated electronic medical record. J Hosp Med. 2012;7(5):388-395. https://doi.org/10.1002/jhm.1929

- Escobar GJ, Liu VX, Schuler A, Lawson B, Greene JD, Kipnis P. Automated identification of adults at risk for in-hospital clinical deterioration. N Engl J Med. 2020;383(20):1951-1960. https://doi.org/10.1056/nejmsa2001090

- Kipnis P, Turk BJ, Wulf DA, et al. Development and validation of an electronic medical record-based alert score for detection of inpatient deterioration outside the ICU. J Biomed Inform. 2016;64:10-19. https://doi.org/10.1016/j.jbi.2016.09.013

- Kollef MH, Chen Y, Heard K, et al. A randomized trial of real-time automated clinical deterioration alerts sent to a rapid response team. J Hosp Med. 2014;9(7):424-429. https://doi.org/10.1002/jhm.2193

- Hackmann G, Chen M, Chipara O, et al. Toward a two-tier clinical warning system for hospitalized patients. AMIA Annu Symp Proc. 2011;2011:511-519.

- Bailey TC, Chen Y, Mao Y, Lu C, Hackmann G, Micek ST. A trial of a real-time alert for clinical deterioration in patients hospitalized on general medical wards. J Hosp Med. 2013;8(5):236-242. https://doi.org/10.1002/jhm.2009

- Kwon JM, Lee Y, Lee Y, Lee S, Park J. An algorithm based on deep learning for predicting in-hospital cardiac arrest. J Am Heart Assoc. 2018;7(13):e008678. https://doi.org/10.1161/jaha.118.008678

- Correia S, Gomes A, Shahriari S, Almeida JP, Severo M, Azevedo A. Performance of the early warning system vital to predict unanticipated higher-level of care admission and in-hospital death of ward patients. Value Health. 2018;21(S3):S360. https://doi.org/10.1016/j.jval.2018.09.2152

- Shamout FE, Zhu T, Sharma P, Watkinson PJ, Clifton DA. Deep interpretable early warning system for the detection of clinical deterioration. IEEE J Biomed Health Inform. 2020;24(2):437-446. https://doi.org/10.1109/jbhi.2019.2937803

- Bai Y, Do DH, Harris PRE, et al. Integrating monitor alarms with laboratory test results to enhance patient deterioration prediction. J Biomed Inform. 2015;53:81-92. https://doi.org/10.1016/j.jbi.2014.09.006

- Hu X, Sapo M, Nenov V, et al. Predictive combinations of monitor alarms preceding in-hospital code blue events. J Biomed Inform. 2012;45(5):913-921. https://doi.org/10.1016/j.jbi.2012.03.001

- Evans RS, Kuttler KG, Simpson KJ, et al. Automated detection of physiologic deterioration in hospitalized patients. J Am Med Inform Assoc. 2015;22(2):350-360. https://doi.org/10.1136/amiajnl-2014-002816

- Ghosh E, Eshelman L, Yang L, Carlson E, Lord B. Early deterioration indicator: data-driven approach to detecting deterioration in general ward. Resuscitation. 2018;122:99-105. https://doi.org/10.1016/j.resuscitation.2017.10.026

- Kang MA, Churpek MM, Zadravecz FJ, Adhikari R, Twu NM, Edelson DP. Real-time risk prediction on the wards: a feasibility study. Crit Care Med. 2016;44(8):1468-1473. https://doi.org/10.1097/ccm.0000000000001716

- Hu SB, Wong DJL, Correa A, Li N, Deng JC. Prediction of clinical deterioration in hospitalized adult patients with hematologic malignancies using a neural network model. PLoS One. 2016;11(8):e0161401. https://doi.org/10.1371/journal.pone.0161401

- Rothman MJ, Rothman SI, Beals J 4th. Development and validation of a continuous measure of patient condition using the electronic medical record. J Biomed Inform. 2013;46(5):837-848. https://doi.org/10.1016/j.jbi.2013.06.011

- Alaa AM, Yoon J, Hu S, van der Schaar M. Personalized risk scoring for critical care prognosis using mixtures of Gaussian processes. IEEE Trans Biomed Eng. 2018;65(1):207-218. https://doi.org/10.1109/tbme.2017.2698602

- Mohamadlou H, Panchavati S, Calvert J, et al. Multicenter validation of a machine-learning algorithm for 48-h all-cause mortality prediction. Health Informatics J. 2020;26(3):1912-1925. https://doi.org/10.1177/1460458219894494

- Alvarez CA, Clark CA, Zhang S, et al. Predicting out of intensive care unit cardiopulmonary arrest or death using electronic medical record data. BMC Med Inform Decis Mak. 2013;13:28. https://doi.org/10.1186/1472-6947-13-28

- Vincent JL, Einav S, Pearse R, et al. Improving detection of patient deterioration in the general hospital ward environment. Eur J Anaesthesiol. 2018;35(5):325-333. https://doi.org/10.1097/eja.0000000000000798

- Romero-Brufau S, Huddleston JM, Escobar GJ, Liebow M. Why the C-statistic is not informative to evaluate early warning scores and what metrics to use. Crit Care. 2015;19(1):285. https://doi.org/10.1186/s13054-015-0999-1

- Weenk M, Bredie SJ, Koeneman M, Hesselink G, van Goor H, van de Belt TH. Continuous monitoring of the vital signs in the general ward using wearable devices: randomized controlled trial. J Med Internet Res. 2020;22(6):e15471. https://doi.org/10.2196/15471

- Wellner B, Grand J, Canzone E, et al. Predicting unplanned transfers to the intensive care unit: a machine learning approach leveraging diverse clinical elements. JMIR Med Inform. 2017;5(4):e45. https://doi.org/10.2196/medinform.8680

- Elliott M, Baird J. Pulse oximetry and the enduring neglect of respiratory rate assessment: a commentary on patient surveillance. Br J Nurs. 2019;28(19):1256-1259. https://doi.org/10.12968/bjon.2019.28.19.1256

- Blackwell JN, Keim-Malpass J, Clark MT, et al. Early detection of in-patient deterioration: one prediction model does not fit all. Crit Care Explor. 2020;2(5):e0116. https://doi.org/10.1097/cce.0000000000000116

- Johnson AEW, Pollard TJ, Shen L, et al. MIMIC-III, a freely accessible critical care database. Sci Data. 2016;3:160035. https://doi.org/10.1038/sdata.2016.35

- Bodenheimer T, Sinsky C. From triple to quadruple aim: care of the patient requires care of the provider. Ann Fam Med. 2014;12(6):573-576. https://doi.org/10.1370/afm.1713

- Kirkland LL, Malinchoc M, O’Byrne M, et al. A clinical deterioration prediction tool for internal medicine patients. Am J Med Qual. 2013;28(2):135-142. https://doi.org/10.1177/1062860612450459