Inconsistent Code Status Documentation

For hospitalized patients, providers should ideally establish advance directives for cardiopulmonary resuscitation, commonly referred to as a patient's code status. Having an end‐of‐life plan is important and is associated with better quality of life for patients.[1, 2, 3, 4, 5] Advance directive discussions and documentation are key quality measures to improve end‐of‐life care for vulnerable elders.[6, 7, 8]

Clear and consistent code status documentation is a prerequisite to providing care that respects hospitalized patients' preferences. Code status documentation only occurs in a minority of hospitalized patients, ranging from 25% of patients on a general medical ward to 36% of patients on elderly‐care wards.[9] Even in high‐risk patients, such as patients with metastatic cancer, providers only documented code status 20% of the time.[10] Even when code status documentation occurs, the amount of detail regarding patient goals and values, prognosis, and treatment options is generally poor.[11, 12] There are also concerns about the accuracy of code status documentation.[13, 14, 15, 16, 17, 18, 19] For example, a recent study found that for patients who had discussed their code status during their hospitalization, only 30% had documentation of their preferences in their chart that accurately reflected what was discussed.[20]

Further complicating matters is the fact that providers document key patient information, such as a patient's code status, in multiple places (eg, progress notes, physician orders). As a result, an additional documentation problem of inconsistency can arise for 2 reasons. First, code status documentation can be inconsistent because of incomplete documentation. Incomplete documentation is primarily a problem in patients who do not want to be resuscitated (ie, do not resuscitate [DNR]), because the absence of code status documentation leads front‐line staff to assume that the patient wants to be resuscitated (ie, full code). Second, inconsistent documentation can occur because of conflicting documentation (eg, a patient has a different code status documented in 2 or more places).

Together, these documentation problems have the potential to lead healthcare providers to resuscitate patients who do not wish to be resuscitated, or for patients who wish to be resuscitated to have delays in their resuscitation efforts. This study will extend the knowledge from the previous literature by exploring how the complexity and redundancy of clinical documentation practices affect the quality of code status documentation. To our knowledge, there are no prior studies that focus specifically on the frequency and clinical relevance of inconsistent code status documentation for inpatients across multiple documentation sources.

METHODS

Study Context

This is a point‐prevalence study conducted at 3 academic medical centers (AMCs) affiliated with the University of Toronto. At all 3 AMCs, the majority of general internal medicine (GIM) patients are admitted to 1 of 4 clinical teaching units (CTUs). The physician team on each CTU consists of 1 attending staff, 1 senior resident (second or third year resident), 2 to 3 first‐year residents, and 2 to 3 medical students. CTUs typically care for between 15 and 25 patients. The research ethics boards at each of the AMCs approved this study.

Existing Code Status Documentation Processes

At all 3 AMCs, providers document patient code status in 5 different places: (1) progress notes (admission and daily progress notes in the paper chart), (2) physician orders (computerized orders at 1 site, paper orders at the other 2 sites), (3) electronic sign‐out lists (Web‐based tools used by residents to support patient handover), (4) nursing‐care plan (used by nurses to document care plans for their assigned patients), and (5) DNR sheet (a cover sheet placed at the front of the paper chart in patients who have a DNR order) (see Supporting Information, Appendix, in the online version of this article). None of these documentation sources link automatically to one another. Once a physician establishes a patient's code status, it should be documented in the progress notes. The same physician should also write the code status as a physician order and update the patient's code status in the Web‐based electronic sign‐out list. The nurse responsible for the patient transcribes the code status order in the nursing‐care plan. For DNR patients, nurses or physicians (depending on the AMC) also place the DNR sheet in the front of the chart.

At our 3 AMCs, in the event of a cardiac arrest, resident physicians and nurses are typically the first responders. To quickly determine a patient's code status, nurses and resident physicians look for the presence or absence of a DNR sheet. In addition, nurses rely on their nursing‐care plan and resident physicians rely on their electronic sign‐out list.

Eligibility Criteria and Sampling Strategy

Our study included GIM patients admitted to a CTU at 1 of 3 AMCs, and excluded admitted GIM patients who remained in the emergency department (due to differences in code status documentation processes). Data collection took place between September 2010 and September 2011 on days when the principal author (A.S.W.) was available to collect the data.

We collected data for all patients from a single GIM CTU on the same day to minimize the chance that a team updates or changes a patient's code status during data collection. We included each of the 4 CTUs at the 3 study sites once during the study period (ie, 12 full days of data collection).

Study Measures and Data Collection

One study author (A.S.W.) screened the 5 code status documentation sources listed above for each patient and recorded the documented code status as full code, DNR, or blank (if there was nothing entered) in a database. We also collected patient demographic data, admitting diagnosis, length of stay, admission to home ward (ie, the medicine ward affiliated with the CTU team that admitted the patient), free‐text code status documentation, transfer to the intensive care unit during the hospitalization, and whether the patient was receiving comfort measures, up to the time of data collection. Because the study investigators were not members of the team providing care to patients included in the study, we could not directly elicit the patient's actual code status.

The primary study outcome measures were the completeness and consistency of code status documentation across the 5 documentation sources. For completeness, we included data relating to 4 documentation sources only, excluding the DNR sheet because it is only relevant for DNR patients. We defined inconsistent code status documentation a priori as (1) the code status is conflicting in at least 2 documentation sources (eg, full code in 1 source and DNR in another) or (2) the code status is documented in 1 or more documentation sources and not documented in at least 1 documentation source (eg, full code in 1 source and blank in another).

We then subdivided code status documentation inconsistencies into nonclinically relevant and clinically relevant subcategories. For example, a nonclinically relevant inconsistency would be if a physician documented full code in the physician orders, but a nurse did not document anything in the nursing‐care plan, because most providers would assume a preference for resuscitation in the absence of code status documentation in the nursing‐care plan.

We defined clinically relevant inconsistencies as those that would reasonably lead healthcare providers referring to different documentation sources to respond differently in the event of a cardiac arrest (eg, the physician orders show DNR whereas the nursing‐care plan is blank; a provider who refers to the physician orders would not resuscitate the patient, but another provider who refers to the blank nursing‐care plan would resuscitate the patient).
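The definitions above can be illustrated with a small sketch. It is a deliberate simplification and not the study's procedure: in the study, 3 reviewers judged each observed permutation individually, whereas this sketch assumes that any DNR entry sitting alongside a blank or full code entry is clinically relevant, and that a mix of full code and blank entries is not. The source names, the value labels (`"full"`, `"dnr"`, `None` for blank), and the function `classify` are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical keys for the 4 sources in which either status can be recorded.
CORE_SOURCES = (
    "progress_notes",
    "physician_orders",
    "signout_list",
    "nursing_care_plan",
)


def classify(record: Dict[str, Optional[str]]) -> str:
    """Classify one patient's documentation under the simplified rule.

    Each source holds "full", "dnr", or None (blank). The DNR sheet is
    either present ("dnr") or absent (None), because it only exists for
    DNR patients.
    """
    core = [record.get(source) for source in CORE_SOURCES]
    has_dnr_sheet = record.get("dnr_sheet") == "dnr"

    # Nothing written anywhere: no documentation at all.
    if all(value is None for value in core) and not has_dnr_sheet:
        return "undocumented"

    # Assumption: once DNR appears anywhere, every source must agree,
    # otherwise two responders could act differently during an arrest.
    if has_dnr_sheet or "dnr" in core:
        fully_dnr = has_dnr_sheet and all(value == "dnr" for value in core)
        return "consistent" if fully_dnr else "clinically relevant inconsistency"

    # Only full code and blanks remain; blanks default to resuscitation.
    if all(value == "full" for value in core):
        return "consistent"
    return "nonclinically relevant inconsistency"


if __name__ == "__main__":
    example = {"physician_orders": "dnr", "nursing_care_plan": None}
    print(classify(example))
```

The example record mirrors the case described in the text: a DNR physician order with a blank nursing‐care plan.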

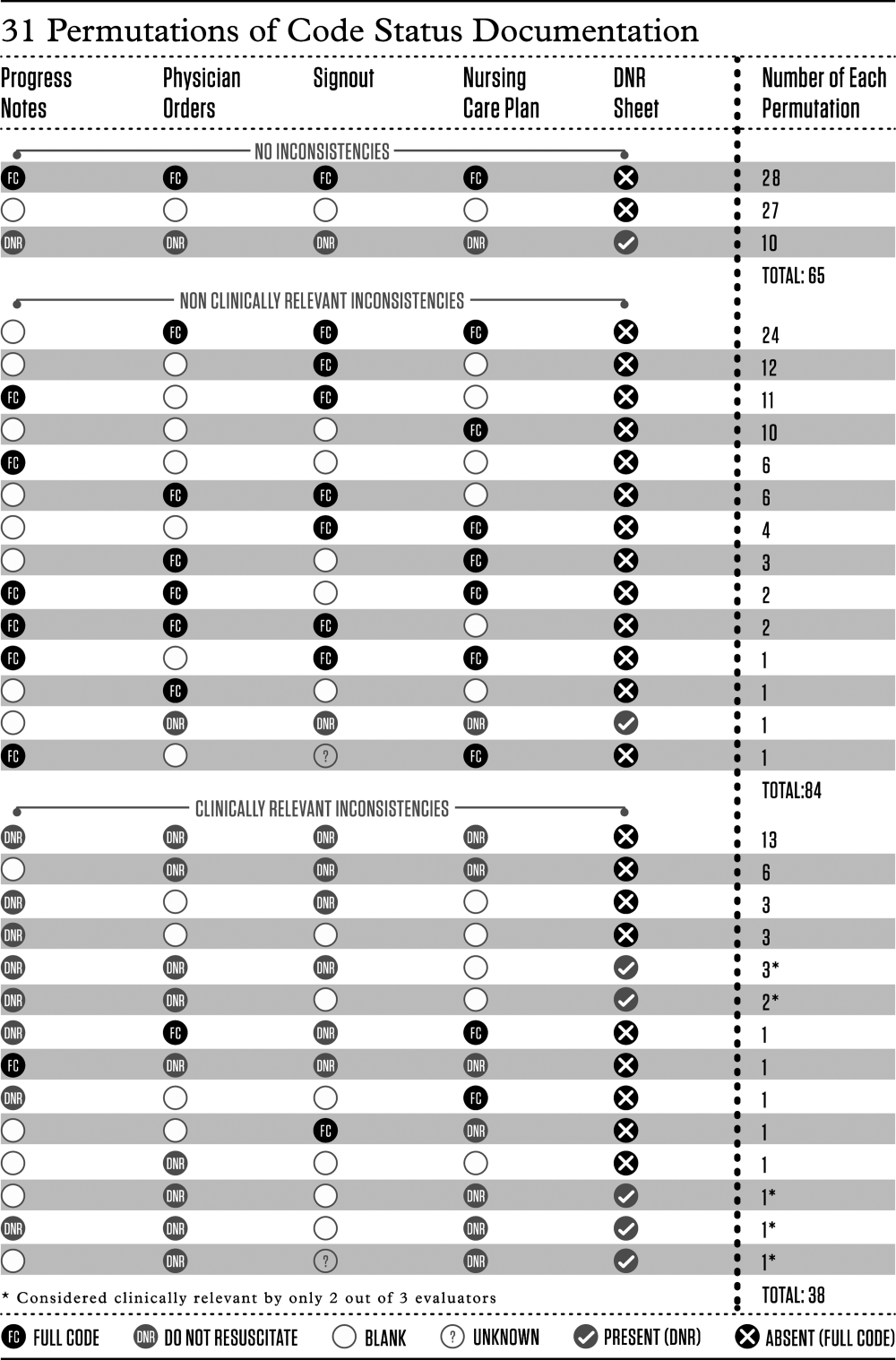

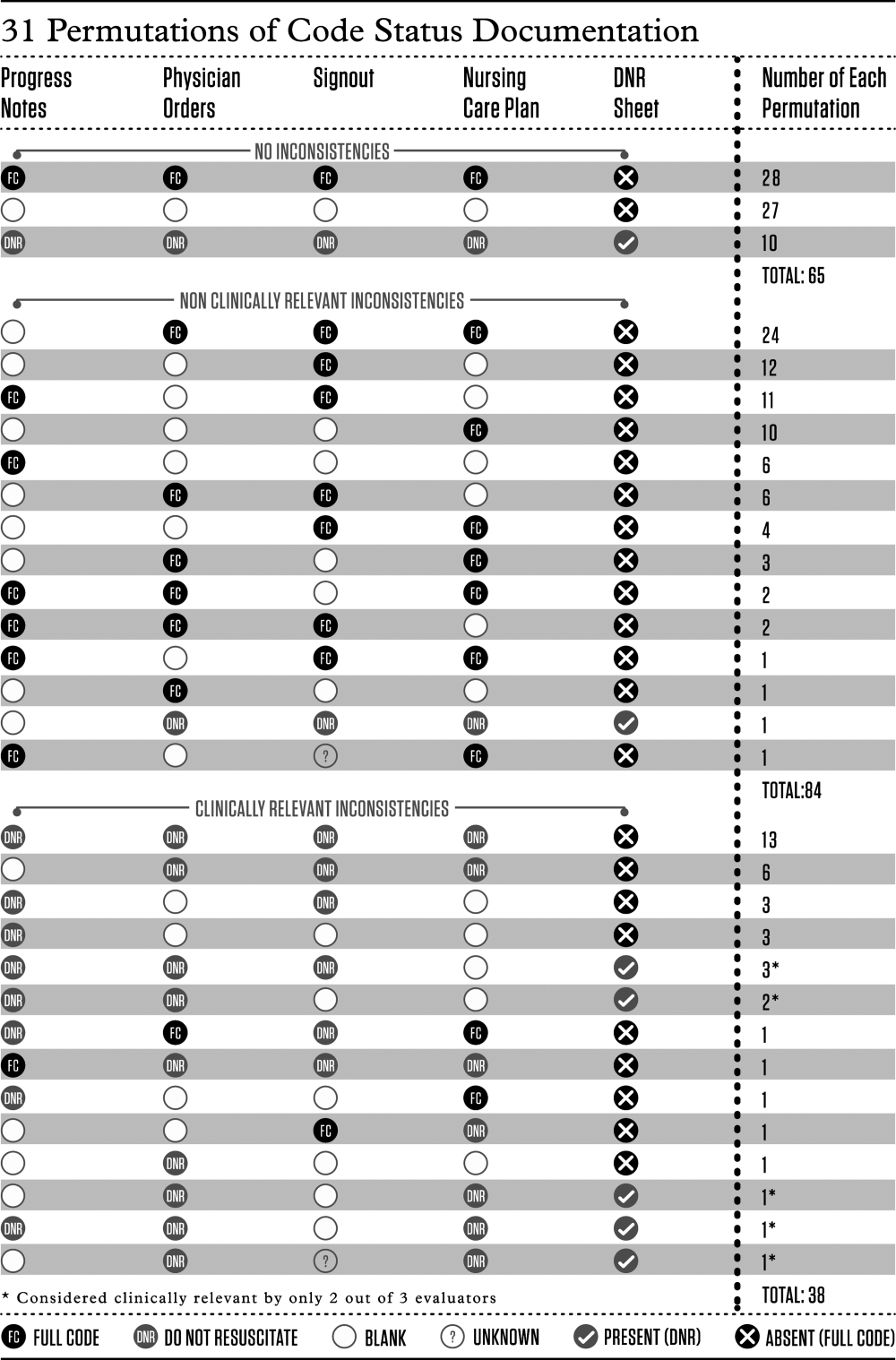

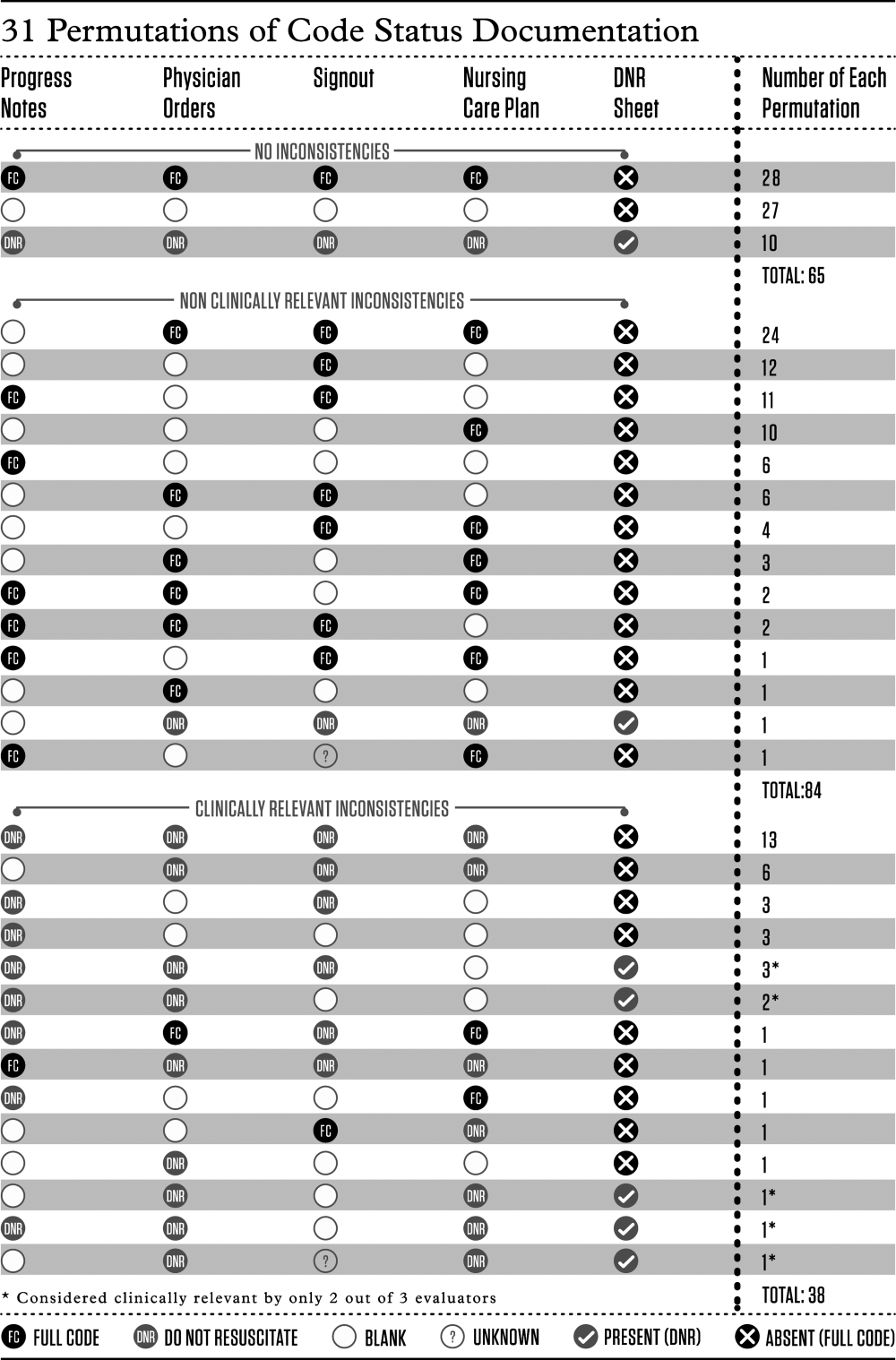

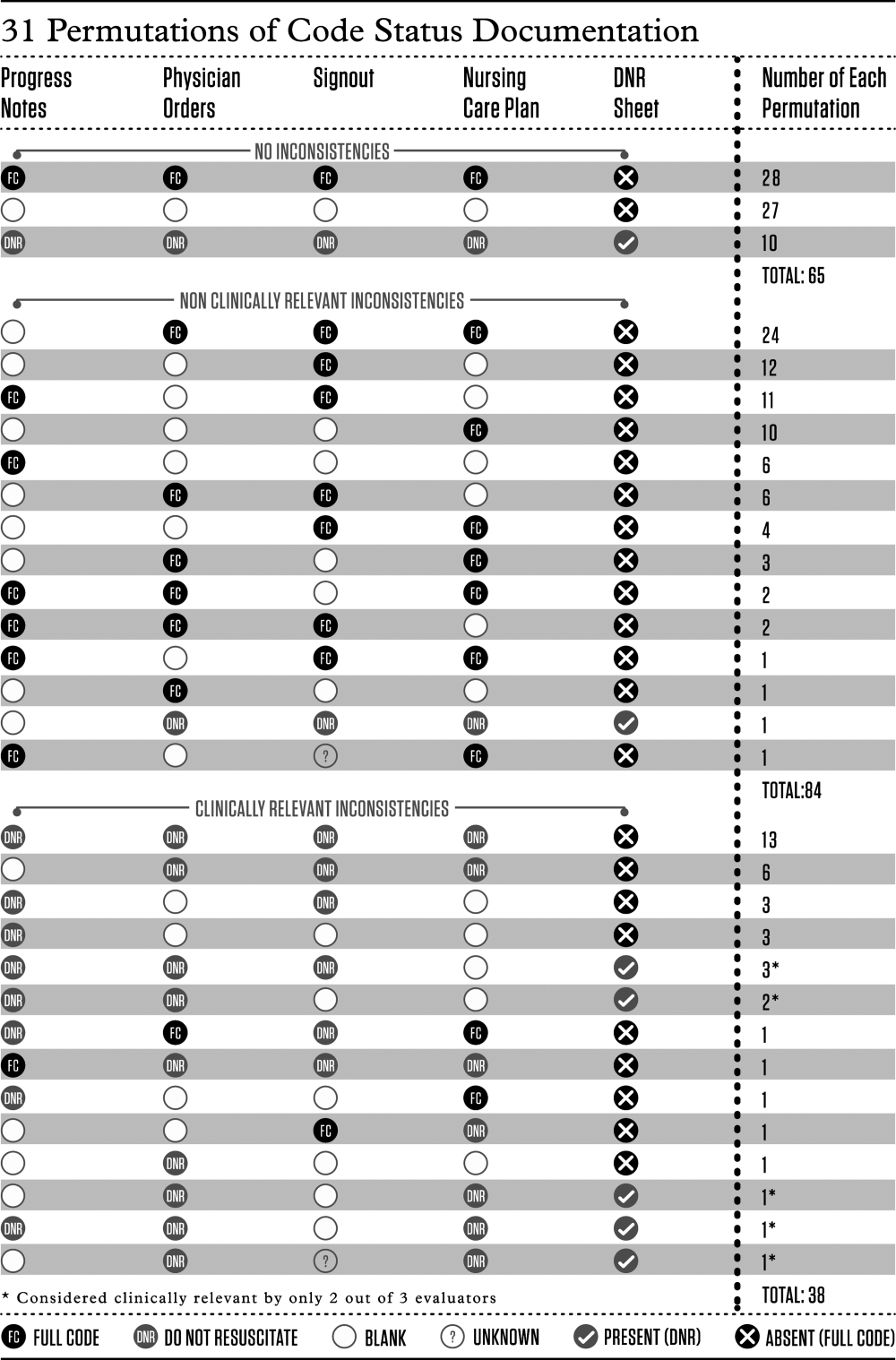

We determined the proportion of patients with inconsistent code status documentation by listing the 31 different permutations of code status documentation in our data (Figure 1). Using the prespecified definition of inconsistent code status documentation, 3 study authors (I.A.D., B.M.W., R.C.W.) independently determined whether each permutation met the criteria for inconsistent code status documentation, and judged the clinical relevance of each documentation inconsistency. We resolved disagreements by consensus.

Statistical Analysis

We calculated descriptive statistics for all variables, summarizing continuous measures using means and standard deviations, and categorical measures using counts, percentages, and their associated 95% confidence intervals. Logistic regression analyses adjusting for the correlation among observations taken from the same team were carried out. Each of the 4 variables of interest (patient age, length of stay, receiving comfort measures, free text code status documentation) was run in a bivariate model to obtain unadjusted estimates as well as the final multivariable model. All estimates were displayed as odds ratios (ORs) and their associated 95% confidence intervals (CIs). A P value <0.05 was used to denote statistical significance. We also carried out a kappa analysis to assess inter‐rater agreement when judging whether inconsistent documentation is clinically relevant. All analyses were carried out using SAS version 9.3 (SAS Institute, Cary, NC).

RESULTS

There were 194 patients potentially eligible for inclusion. Seven admitted GIM patients who had not been transferred from the emergency department were excluded, leaving 187 patients in the study. The mean patient age was 70 years; 83 (44%) were female. The median length of stay up to the time of data collection was 6 days, with the majority (156 [83%]) of patients admitted to their home ward. Ten (5%) patients were receiving comfort measures.

Completeness of Code Status Documentation

Thirty‐eight (20%; 95% CI, 14%‐26%) patients had complete and consistent code status documentation across all documentation sources, whereas 27 (14%; 95% CI, 9%‐19%) patients had no code status documented in any documentation source. By documentation source, providers documented code status in the progress notes for 89 patients (48%; 95% CI, 40%‐55%), the physician orders for 107 patients (57%; 95% CI, 50%‐64%), the nursing‐care plan for 110 patients (59%; 95% CI, 51%‐66%), and the electronic sign‐out list for 129 patients (69%; 95% CI, 62%‐76%).
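As a rough check on the intervals reported above, the following sketch computes a normal‐approximation (Wald) 95% confidence interval for a proportion. The article does not state which interval method was used, so the Wald formula is an assumption, and the function name `wald_ci` is hypothetical. For 38 of 187 patients it gives bounds close to the reported 14% to 26%.

```python
import math
from typing import Tuple


def wald_ci(count: int, total: int, z: float = 1.96) -> Tuple[float, float, float]:
    """Return (proportion, lower bound, upper bound) using the Wald formula."""
    p = count / total
    half_width = z * math.sqrt(p * (1 - p) / total)
    # Clamp to [0, 1] because the Wald interval can overshoot near the edges.
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


if __name__ == "__main__":
    # 38 of 187 patients had complete and consistent documentation.
    p, low, high = wald_ci(38, 187)
    print(f"{p:.1%} (95% CI, {low:.1%} to {high:.1%})")
```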

Consistency of Code Status Documentation

The remaining 122 patients (65%; 95% CI, 58%‐72%) had at least 1 code status documentation inconsistency. Of these, 38 patients (20%; 95% CI, 14%‐26%) had a clinically relevant code status documentation inconsistency. Code status documentation inconsistency differed by site; the 2 hospitals with paper‐based physician orders had fewer patients with complete and consistent code status documentation compared to the hospital where physician orders are electronic (15% vs 42%, respectively, P<0.001) (Table 1).

| Physician Code Status Order | Sites 1 & 2: Paper‐Based, N=108 | Site 3: Electronic, N=52 | P Value |
|---|---|---|---|
| Consistent code status documentation | 16 (15%) | 22 (42%) | |
| Inconsistent code status documentation, nonclinically relevant | 60 (56%) | 30 (58%) | <0.0001 |
| Inconsistent code status documentation, clinically relevant | 32 (30%) | 6 (12%) | |

The permutations of clinically relevant and nonclinically relevant inconsistencies are summarized in Figure 1. We achieved high inter‐rater reliability among the 3 independent reviewers with respect to rating the clinical relevance of documentation inconsistencies (κ=0.86 [95% CI, 0.76‐0.95]).
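The article reports a kappa statistic for 3 reviewers but does not say which variant was computed. One common choice for more than 2 raters is Fleiss' kappa, sketched below as an assumption rather than as the authors' method. The function name `fleiss_kappa` and the ratings in the usage example are hypothetical and are not study data.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for a table of rating counts.

    counts[i][j] is the number of raters who put item i in category j.
    Every row must sum to the same number of raters (at least 2).
    Undefined (division by zero) if all ratings fall in one category.
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    n_categories = len(counts[0])

    # Observed agreement: share of agreeing rater pairs, averaged over items.
    per_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    observed = sum(per_item) / n_items

    # Chance agreement from the overall share of ratings in each category.
    shares = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    expected = sum(s * s for s in shares)

    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical ratings: columns are (clinically relevant, not relevant),
    # one row per documentation permutation, 3 raters per row.
    ratings = [[3, 0], [3, 0], [0, 3], [2, 1], [0, 3]]
    print(round(fleiss_kappa(ratings), 2))
```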

To identify correlates of clinically relevant code status documentation inconsistencies, we included 4 variables of interest (patient age, length of stay, receiving comfort measures, and free text code status documentation) in a logistic regression analysis. Bivariate analyses demonstrated that increased age (OR=1.07 [95% CI, 1.05‐1.10] for every 1‐year increase in age, P<0.001) and receiving comfort measures (OR=10.98 [95% CI, 1.94‐62.12], P=0.007) were associated with a clinically relevant code status documentation inconsistency. Using these 4 variables in a multivariable analysis clustering for physician team, increased age (OR=1.07 [95% CI, 1.04‐1.10] for every 1‐year increase in age, P<0.0001) and receiving comfort measures (OR=9.39 [95% CI, 1.35‐65.19], P=0.02) remained as independent positive correlates of having a clinically relevant code status documentation inconsistency (Table 2).

| | Clinically Relevant Inconsistencies, N=38 | No Inconsistencies and Nonclinically Relevant Inconsistencies, N=149 | P Value |
|---|---|---|---|
| Age, y, mean (SD) | 83 (10) | 67 (19) | <0.0001* |
| Length of stay, d, median (IQR) | 6.5 (3‐10) | 6 (2‐19) | 0.39 |
| Receiving comfort measures, n (%) | 7 (18%) | 3 (2%) | <0.0001* |
| Free‐text code status documentation, n (%) | 18 (47%) | 58 (39%) | 0.34 |
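The unadjusted odds ratio for comfort measures can be recovered from the counts in the table above (7 of 38 patients vs 3 of 149 patients). The sketch below computes it with a simple Woolf (log‐based) 95% confidence interval. This interval is an assumption for illustration: it ignores the clustering by physician team that the reported analysis adjusted for, so it comes out narrower than the published 1.94 to 62.12. The function name `odds_ratio` is hypothetical.

```python
import math
from typing import Tuple


def odds_ratio(a: int, b: int, c: int, d: int, z: float = 1.96) -> Tuple[float, float, float]:
    """Odds ratio and Woolf confidence interval for a 2x2 table.

    a: exposed, outcome present      b: unexposed, outcome present
    c: exposed, outcome absent       d: unexposed, outcome absent
    All four cells must be nonzero.
    """
    estimate = (a * d) / (b * c)
    # Standard error of log(OR) under the Woolf approximation.
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    low = math.exp(math.log(estimate) - z * se_log)
    high = math.exp(math.log(estimate) + z * se_log)
    return estimate, low, high


if __name__ == "__main__":
    # Exposure: receiving comfort measures.
    # Outcome: clinically relevant inconsistency.
    # 7 of 38 with the outcome and 3 of 149 without it were exposed.
    estimate, low, high = odds_ratio(a=7, b=38 - 7, c=3, d=149 - 3)
    print(f"OR={estimate:.2f} (95% CI, {low:.2f} to {high:.2f})")
```

The point estimate (1022/93, about 10.99) agrees with the bivariate odds ratio reported in the text.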

DISCUSSION

We found that 2 out of 3 patients had at least 1 inconsistency in code status documentation, and that 1 in 5 patients had at least 1 clinically relevant code status documentation inconsistency. The majority of clinically relevant inconsistencies occurred because there was a DNR order written in some sources of code status documentation, and no orders in other documentation sources. However, there were 4 striking examples where DNR was written in some sources of code status documentation and full code was written in other documentation sources (Figure 1).

Older patients and patients receiving comfort measures were more likely to have a clinically relevant inconsistency in code status documentation. This is particularly concerning, because they are among the most vulnerable patients at highest risk for having an in‐hospital cardiac arrest.

Our study extends the findings of prior studies that identified gaps in completeness and accuracy of code status documentation and describes another important gap in the quality and consistency of code status documentation.[20] This becomes particularly important because efforts aimed at increasing documentation of patients' code status without ensuring consistency across documentation sources may still result in patients being resuscitated or not resuscitated inappropriately.

This issue of poorly integrated health records is relevant for many other aspects of patient care. For example, 1 study found significant discrepancies in patient medication allergy documentation across multiple health records.[21] This fragmentation of documentation of the same patient information in multiple health records requires attention and should be the focus of institutional quality improvement efforts.

There are several potential ways to improve the code status documentation process. First, the use of standard fields or standardized orders can increase the completeness and improve the clarity of code status documentation.[22, 23] For institutions with an electronic medical record, forcing functions may further increase code status documentation. One study found that the implementation of an electronic medical record increased code status documentation from 4% to 63%.[24] We found similarly that the site with electronic physician orders had higher rates of complete and consistent code status documentation.

A second approach is to minimize the number of different sources for code status documentation. Institutions should critically examine each place where providers could document code status and decide whether this information adds value, and create policies to restrict unnecessary duplicate documentation and ensure accurate documentation of code status in several key, centralized locations.[25] A third option would be to automatically synchronize all code status documentation sources.[25] This final approach requires a fully integrated electronic health record.

Our study has several limitations. Although we report a large number of code status documentation inconsistencies, we do not know how many of these lead to incorrect resuscitative measures, so the actual impact on patient care is unknown. Also, because we were focusing on inconsistencies among sources of code status documentation, and not on accurate documentation of a patient's code status, the patients' actual preferences were not elicited and are not known. Finally, we carried out our study in 3 AMCs with residents who rotate from 1 site to another. The transient nature of resident work may increase the likelihood of documentation inconsistencies, because trainees may be less aware of local processes. In addition, the way front‐line staff use clinical documentation sources to determine a patient's code status may differ at other institutions. Therefore, our estimate of clinical relevance may not be generalizable to other institutions with different front‐line processes or with healthcare teams that are more stable and aware of local documentation processes.

In summary, our study uncovered significant gaps in the quality of code status documentation that span 3 different AMCs. Having multiple, poorly integrated sources for code status documentation leads to a significant number of concerning inconsistencies that create opportunities for healthcare providers to inappropriately deliver or withhold resuscitative measures that conflict with patients' expressed wishes. Institutions need to be aware of this potential documentation hazard and take steps to minimize code status documentation inconsistencies. Even though cardiac arrests occur infrequently, if healthcare teams take inappropriate action because of these code status documentation inconsistencies, the consequences can be devastating.

Disclosure

Nothing to report.

REFERENCES

1. Advance directives for medical care--a case for greater use. N Engl J Med. 1991;324(13):889–895.
2. Canadian Researchers at the End of Life Network (CARENET). What matters most in end‐of‐life care: perceptions of seriously ill patients and their family members. CMAJ. 2006;174(5):627–633.
3. Advance directives and outcomes of surrogate decision making before death. N Engl J Med. 2010;362(13):1211–1218.
4. Associations between end‐of‐life discussions, patient mental health, medical care near death, and caregiver bereavement adjustment. JAMA. 2008;300(14):1665–1673.
5. The impact of advance care planning on end of life care in elderly patients: randomized controlled trial. BMJ. 2010;340:c1345.
6. Quality indicators for end‐of‐life care in vulnerable elders. Ann Intern Med. 2001;135(8):667–685.
7. Assessing care of vulnerable elders: methods for developing quality indicators. Ann Intern Med. 2001;135:647–652.
8. Perceptions by family members of the dying experience of older and seriously ill patients. Ann Intern Med. 1997;126(2):97–106.
9. Cardiopulmonary resuscitation: capacity, discussion and documentation. Q J Med. 2006;99(10):683–690.
10. Code status documentation in the outpatient electronic medical records of patients with metastatic cancer. J Gen Intern Med. 2009;25(2):150–153.
11. Factors associated with discussion of care plans and code status at the time of hospital admission: results from the multicenter hospitalist study. J Hosp Med. 2008;3(6):437–445.
12. Documentation quality of inpatient code status discussions. J Pain Symptom Manage. 2014;48(4):632–638.
13. The use of life‐sustaining treatments in hospitalized persons aged 80 and older. J Am Geriatr Soc. 2002;50(5):930–934.
14. The SUPPORT Principal Investigators. A controlled trial to improve care for seriously ill hospitalized patients: the Study to Understand Prognoses and Preferences for Outcomes and Risks of Treatments (SUPPORT). JAMA. 1995;274(20):1591–1598.
15. Some treatment‐withholding implications of no‐code orders in an academic hospital. Crit Care Med. 1984;12(10):879–881.
16. A prospective study of patients with DNR orders in a teaching hospital. Arch Intern Med. 1988;148(10):2193–2198.
17. Compliance with do‐not‐resuscitate orders for hospitalized patients transported to radiology departments. Ann Intern Med. 1998;129(10):801–805.
18. Identification of inpatient DNR status: a safety hazard begging for standardization. J Hosp Med. 2007;2(6):366–371.
19. Controlling death: the false promise of advance directives. Ann Intern Med. 2007;147(1):51–57.
20. Failure to engage hospitalized elderly patients and their families in advance care planning. JAMA Intern Med. 2013;173(9):778–787.
21. The recording of drug sensitivities for older people living in care homes. Br J Clin Pharmacol. 2010;69(5):553–557.
22. A comparison of methods to communicate treatment preferences in nursing facilities: traditional practices versus the physician orders for life‐sustaining treatment program. J Am Geriatr Soc. 2010;58(7):1241–1248.
23. Evaluation of a treatment limitation policy with a specific treatment‐limiting order page. Arch Intern Med. 1994;154(4):425–432.
24. An electronic medical record intervention increased nursing home advance directive orders and documentation. J Am Geriatr Soc. 2007;55(7):1001–1006.
25. Honouring patient's resuscitation wishes: a multiphased effort to improve identification and documentation. BMJ Qual Saf. 2013;22(1):85–92.

For hospitalized patients, providers should ideally establish advanced directives for cardiopulmonary resuscitation, commonly referred to as a patient's code status. Having an end‐of‐life plan is important and is associated with better quality of life for patients.[1, 2, 3, 4, 5] Advanced directive discussions and documentation are key quality measures to improve end‐of‐life care for vulnerable elders.[6, 7, 8]

Clear and consistent code status documentation is a prerequisite to providing care that respects hospitalized patients' preferences. Code status documentation only occurs in a minority of hospitalized patients, ranging from 25% of patients on a general medical ward to 36% of patients on elderly‐care wards.[9] Even in high‐risk patients, such as patients with metastatic cancer, providers only documented code status 20% of the time.[10] Even when code status documentation occurs, the amount of detail regarding patient goals and values, prognosis, and treatment options is generally poor.[11, 12] There are also concerns about the accuracy of code status documentation.[13, 14, 15, 16, 17, 18, 19] For example, a recent study found that for patients who had discussed their code status during their hospitalization, only 30% had documentation of their preferences in their chart that accurately reflected what was discussed.[20]

Further complicating matters is the fact that providers document key patient information, such as a patient's code status, in multiple places (eg, progress notes, physician orders). As a result, an additional documentation problem of inconsistency can arise for 2 reasons. First, code status documentation can be inconsistent because of incomplete documentation. Incomplete documentation is primarily a problem in patients who do not want to be resuscitated (ie, do not resuscitate [DNR]), because the absence of code status documentation leads front‐line staff to assume that the patient wants to be resuscitated (ie, full code). Second, inconsistent documentation can occur because of conflicting documentation (eg, a patient has a different code status documented in 2 or more places).

Together, these documentation problems have the potential to lead healthcare providers to resuscitate patients who do not wish to be resuscitated, or for patients who wish to be resuscitated to have delays in their resuscitation efforts. This study will extend the knowledge from the previous literature by exploring how the complexity and redundancy of clinical documentation practices affect the quality of code status documentation. To our knowledge, there are no prior studies that focus specifically on the frequency and clinical relevance of inconsistent code status documentation for inpatients across multiple documentation sources.

METHODS

Study Context

This is a point‐prevalence study conducted at 3 academic medical centers (AMCs) affiliated with the University of Toronto. At all 3 AMCs, the majority of general internal medicine (GIM) patients are admitted to 1 of 4 clinical teaching units (CTUs). The physician team on each CTU consists of 1 attending staff, 1 senior resident (second or third year resident), 2 to 3 first‐year residents, and 2 to 3 medical students. CTUs typically care for between 15 and 25 patients. The research ethics boards at each of the AMCs approved this study.

Existing Code Status Documentation Processes

At all 3 AMCs, providers document patient code status in 5 different places: (1) progress notes (admission and daily progress notes in the paper chart), (2) physician orders (computerized orders at 1 site, paper orders at the other 2 sites), (3) electronic sign‐out lists (Web‐based tools used by residents to support patient handover), (4) nursing‐care plan (used by nurses to document care plans for their assigned patients), and (5) DNR sheet (a cover sheet placed at the front of the paper chart in patients who have a DNR order) (see Supporting Information, Appendix, in the online version of this article). None of these documentation sources link automatically to one another. Once a physician establishes a patient's code status, it should be documented in the progress notes. The same physician should also write the code status as a physician order and update the patient's code status in the Web‐based electronic sign‐out list. The nurse responsible for the patient transcribes the code status order in the nursing‐care plan. For DNR patients, nurses or physicians (depending on the AMC) also place the DNR sheet in the front of the chart.

At our 3 AMCs, in the event of a cardiac arrest, resident physicians and nurses are typically the first responders. To quickly determine a patient's code status nurses and resident physicians look for the presence or absence of a DNR sheet. In addition, nurses rely on their nursing‐care plan and resident physicians rely on their electronic sign‐out list.

Eligibility Criteria and Sampling Strategy

Our study included GIM patients admitted to a CTU at 1 of 3 AMCs, and excluded admitted GIM patients who remained in the emergency department (due to differences in code status documentation processes). Data collection took place between September 2010 and September 2011 on days when the principal author (A.S.W.) was available to collect the data.

We collected data for all patients from a single GIM CTU on the same day to minimize the chance that a team updates or changes a patient's code status during data collection. We included each of the 4 CTUs at the 3 study sites once during the study period (ie, 12 full days of data collection).

Study Measures and Data Collection

One study author (A.S.W.) screened the 5 code status documentation sources listed above for each patient and recorded the documented code status as full code, DNR, or blank (if there was nothing entered) in a database. We also collected patient demographic data, admitting diagnosis, length of stay, admission to home ward (ie, the medicine ward affiliated with the CTU team that admitted the patient), free‐text code status documentation, transfer to the intensive care unit during their hospitalization, and whether the patient is receiving comfort measures, up to the time of data collection. Because the study investigators were not members of the team providing care to patients included in the study, we could not directly elicit the patient's actual code status.

The primary study outcome measures were the completeness and consistency of code status documentation across the 5 documentation sources. For completeness, we included data relating to 4 documentation sources only, excluding the DNR sheet because it is only relevant for DNR patients. We defined inconsistent code status documentation a priori as (1) the code status is conflicting in at least 2 documentation sources (eg, full code in 1 source and DNR in another) or (2) the code status is documented in 1 or more documentation source and not documented in at least 1 documentation source (eg, full code in 1 source and blank in another).

We then subdivided code status documentation inconsistencies into nonclinically relevant and clinically relevant subcategories. For example, a nonclinically relevant inconsistency would be if a physician documented full code in the physician orders, but a nurse did not document anything in the nursing‐care plan, because most providers would assume a preference for resuscitation in the absence of code status documentation in the nursing‐care plan.

We defined clinically relevant inconsistencies as those that would reasonably lead healthcare providers referring to different documentation sources to respond differently in the event of a cardiac arrest (eg, the physician orders show DNR whereas the nursing‐care plan is blanka provider who refers to the physician orders would not resuscitate the patient, but another provider who refers to the blank nursing‐care plan would resuscitate the patient).

We determined the proportion of patients with inconsistent code status documentation by listing the 31 different permutations of code status documentation in our data (Figure 1). Using the prespecified definition of inconsistent code status documentation, 3 study authors (I.A.D., B.M.W., R.C.W.) independently determined whether each permutation met the criteria for inconsistent code status documentation, and judged the clinical relevance of each documentation inconsistency. We resolved disagreements by consensus.

Statistical Analysis

We calculated descriptive statistics for all variables, summarizing continuous measures using means and standard deviations, and categorical measures using counts, percentages, and their associated 95% confidence intervals. Logistic regression analyses adjusting for the correlation among observations taken from the same team were carried out. Each of the 4 variables of interest (patient age, length of stay, receiving comfort measures, free text code status documentation) was run in a bivariate model to obtain unadjusted estimates as well as the final multivariable model. All estimates were displayed as odds ratios (ORs) and their associated 95% confidence intervals (CIs). A P value <0.05 was used to denote statistical significance. We also carried out a kappa analysis to assess inter‐rater agreement when judging whether inconsistent documentation is clinically relevant. All analyses were carried out using SAS version 9.3 (SAS Institute, Cary, NC).

RESULTS

There were 194 patients potentially eligible for inclusion. Seven admitted GIM patients who had not been transferred from the emergency department were excluded, leaving 187 patients in the study. The mean patient age was 70 years; 83 (44%) were female. The median length of stay up to the time of data collection was 6 days, with the majority (156 [83%]) of patients admitted to their home ward. Ten (5%) patients were receiving comfort measures.

Completeness of Code Status Documentation

Thirty‐eight (20%; 95% CI, 14%‐26%) patients had complete and consistent code status documentation across all documentation sources, whereas 27 (14%; 95% CI, 9%‐19%) patients had no code status documented in any documentation source. By documentation source, providers documented code status in the progress notes for 89 patients (48%; 95% CI, 40%‐55%), the physician orders for 107 patients (57%; 95% CI, 50%‐64%), the nursing‐care plan for 110 patients (59%; 95% CI, 51%‐66%), and the electronic sign‐out list for 129 patients (69%; 95% CI, 62%‐76%).

Consistency of Code Status Documentation

The remaining 122 patients (65%; 95% CI, 58%‐72%) had at least 1 code status documentation inconsistency. Of these, 38 patients (20%; 95% CI, 14%‐26%) had a clinically relevant code status documentation inconsistency. Code status documentation inconsistency differed by site; the 2 hospitals with paper‐based physician orders had fewer patients with complete and consistent code status documentation compared to the hospital where physician orders are electronic (15% vs 42%, respectively, P<0.001) (Table 1).

| Physician Code Status Order | Sites 1 & 2: Paper‐Based, N=108 | Site 3: Electronic, N=52 | P Value |
|---|---|---|---|
| Consistent code status documentation | 16 (15%) | 22 (42%) | |
| Inconsistent code status documentation, nonclinically relevant | 60 (56%) | 30 (58%) | <0.0001 |
| Inconsistent code status documentation, clinically relevant | 32 (30%) | 6 (12%) | |

The permutations of clinically relevant and nonclinically relevant inconsistencies are summarized in Figure 1. We achieved high inter‐rater reliability among the 3 independent reviewers with respect to rating the clinical relevance of documentation inconsistencies (κ=0.86 [95% CI, 0.76‐0.95]).
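For readers unfamiliar with kappa statistics, the sketch below shows one common way to measure agreement among 3 raters: Fleiss' kappa. The source does not say which kappa variant was computed, so the choice of Fleiss' kappa is an assumption, and the ratings in the example are hypothetical and are not study data.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for a table of per-item category counts.

    counts[i][j] is the number of raters who assigned item i to category j;
    every item must be rated by the same number of raters.
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("each item needs the same number of ratings")

    # Observed agreement: mean share of agreeing rater pairs per item.
    per_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    observed = sum(per_item) / n_items

    # Chance agreement from the overall category proportions.
    n_categories = len(counts[0])
    totals = [sum(row[j] for row in counts) for j in range(n_categories)]
    expected = sum((t / (n_items * n_raters)) ** 2 for t in totals)

    return (observed - expected) / (1 - expected)

# Hypothetical ratings (NOT study data): 4 permutations, 3 reviewers,
# columns = [clinically relevant, not clinically relevant].
example = [[3, 0], [0, 3], [2, 1], [3, 0]]
print(round(fleiss_kappa(example), 3))  # 0.625
```

Kappa corrects raw agreement for the agreement expected by chance, which is why 3 unanimous items out of 4 yields a value well below 0.75 in this toy example.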

To identify correlates of clinically relevant code status documentation inconsistencies, we included 4 variables of interest (patient age, length of stay, receiving comfort measures, and free‐text code status documentation) in a logistic regression analysis. Bivariate analyses demonstrated that increased age (OR=1.07 [95% CI, 1.05‐1.10] for every 1‐year increase in age, P<0.001) and receiving comfort measures (OR=10.98 [95% CI, 1.94‐62.12], P=0.007) were associated with a clinically relevant code status documentation inconsistency. In a multivariable analysis that included all 4 variables and accounted for clustering by physician team, increased age (OR=1.07 [95% CI, 1.04‐1.10] for every 1‐year increase in age, P<0.0001) and receiving comfort measures (OR=9.39 [95% CI, 1.35‐65.19], P=0.02) remained independent positive correlates of having a clinically relevant code status documentation inconsistency (Table 2).

| | Clinically Relevant Inconsistencies, N=38 | No Inconsistencies and Nonclinically Relevant Inconsistencies, N=149 | P Value |
|---|---|---|---|
| Age, y, mean (SD) | 83 (10) | 67 (19) | <0.0001* |
| Length of stay, d, median (IQR) | 6.5 (3‐10) | 6 (2‐19) | 0.39 |
| Receiving comfort measures, n (%) | 7 (18%) | 3 (2%) | <0.0001* |
| Free‐text code status documentation, n (%) | 18 (47%) | 58 (39%) | 0.34 |
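The unadjusted odds ratio for comfort measures can be approximately recovered from the counts in Table 2. The sketch below computes a crude odds ratio with a Woolf (log-scale) 95% confidence interval; the function name `odds_ratio_ci` and the use of the Woolf interval are assumptions, and the calculation ignores clustering by physician team.

```python
import math

def odds_ratio_ci(a, b, c, d, z=1.96):
    """Crude odds ratio and Woolf (log-scale) CI for a 2x2 table.

    a = exposed with outcome,   b = exposed without outcome,
    c = unexposed with outcome, d = unexposed without outcome.
    """
    odds_ratio = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log)
    upper = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, lower, upper

# Counts from Table 2. Exposure: receiving comfort measures.
# Outcome: clinically relevant inconsistency.
a = 7          # comfort measures, clinically relevant inconsistency
b = 3          # comfort measures, no clinically relevant inconsistency
c = 38 - 7     # no comfort measures, clinically relevant inconsistency
d = 149 - 3    # no comfort measures, no clinically relevant inconsistency

odds_ratio, lower, upper = odds_ratio_ci(a, b, c, d)
print(f"OR = {odds_ratio:.2f} (95% CI, {lower:.2f} to {upper:.2f})")
```

The point estimate (about 11) is close to the reported bivariate OR. The crude interval is narrower than the reported one, which is consistent with the reported analysis adjusting for correlation among patients cared for by the same team.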

DISCUSSION

We found that 2 out of 3 patients had at least 1 inconsistency in code status documentation, and that 1 in 5 patients had at least 1 clinically relevant code status documentation inconsistency. The majority of clinically relevant inconsistencies occurred because there was a DNR order written in some sources of code status documentation, and no orders in other documentation sources. However, there were 4 striking examples where DNR was written in some sources of code status documentation and full code was written in other documentation sources (Figure 1).

Older patients and patients receiving comfort measures were more likely to have a clinically relevant inconsistency in code status documentation. This is particularly concerning, because they are among the most vulnerable patients at highest risk for having an in‐hospital cardiac arrest.

Our study extends the findings of prior studies that identified gaps in completeness and accuracy of code status documentation and describes another important gap in the quality and consistency of code status documentation.[20] This becomes particularly important because efforts aimed at increasing documentation of patients' code status without ensuring consistency across documentation sources may still result in patients being resuscitated or not resuscitated inappropriately.

This issue of poorly integrated health records is relevant for many other aspects of patient care. For example, 1 study found significant discrepancies in patient medication allergy documentation across multiple health records.[21] This fragmentation of documentation of the same patient information in multiple health records requires attention and should be the focus of institutional quality improvement efforts.

There are several potential ways to improve the code status documentation process. First, the use of standard fields or standardized orders can increase the completeness and improve the clarity of code status documentation.[22, 23] For institutions with an electronic medical record, forcing functions may further increase code status documentation. One study found that the implementation of an electronic medical record increased code status documentation from 4% to 63%.[24] We found similarly that the site with electronic physician orders had higher rates of complete and consistent code status documentation.

A second approach is to minimize the number of different sources for code status documentation. Institutions should critically examine each place where providers could document code status and decide whether this information adds value, and create policies to restrict unnecessary duplicate documentation and ensure accurate documentation of code status in several key, centralized locations.[25] A third option would be to automatically synchronize all code status documentation sources.[25] This final approach requires a fully integrated electronic health record.

Our study has several limitations. Although we report a large number of code status documentation inconsistencies, we do not know how many of these led to incorrect resuscitative measures, so the actual impact on patient care is unknown. Also, because we focused on inconsistencies among sources of code status documentation, and not on accurate documentation of a patient's code status, the patients' actual preferences were not elicited and are not known. Finally, we carried out our study in 3 AMCs with residents who rotate from 1 site to another. The transient nature of resident work may increase the likelihood of documentation inconsistencies, because trainees may be less aware of local processes. In addition, the way front‐line staff use clinical documentation sources to determine a patient's code status may differ at other institutions. Therefore, our estimate of clinical relevance may not be generalizable to other institutions with different front‐line processes or with healthcare teams that are more stable and aware of local documentation processes.

In summary, our study uncovered significant gaps in the quality of code status documentation that span 3 different AMCs. Having multiple, poorly integrated sources for code status documentation leads to a significant number of concerning inconsistencies that create opportunities for healthcare providers to inappropriately deliver or withhold resuscitative measures that conflict with patients' expressed wishes. Institutions need to be aware of this potential documentation hazard and take steps to minimize code status documentation inconsistencies. Even though cardiac arrests occur infrequently, if healthcare teams take inappropriate action because of these code status documentation inconsistencies, the consequences can be devastating.

Disclosure

Nothing to report.

- , , , et al. Advance directives for medical care—a case for greater use. N Engl J Med. 1991;324(13):889–895.

- , , , et al; Canadian Researchers at the End of Life Network (CARENET). What matters most in end‐of‐life care: perceptions of seriously ill patients and their family members. CMAJ. 2006;174(5):627–633.

- , , . Advance directives and outcomes of surrogate decision making before death. N Engl J Med. 2010;362(13):1211–1218.

- , , , et al. Associations between end‐of‐life discussions, patient mental health, medical care near death, and caregiver bereavement adjustment. JAMA. 2008;300(14):1665–1673.

- , , , . The impact of advance care planning on end of life care in elderly patients: randomized controlled trial. BMJ. 2010;340:c1345.

- , . Quality indicators for end‐of‐life care in vulnerable elders. Ann Intern Med. 2001;135(8):667–685.

- , , , . Assessing care of vulnerable elders: methods for developing quality indicators. Ann Intern Med. 2001;135:647–652.

- , , , et al. Perceptions by family members of the dying experience of older and seriously ill patients. Ann Intern Med. 1997;126(2):97–106.

- , . Cardiopulmonary resuscitation: capacity, discussion and documentation. Q J Med. 2006;99(10):683–690.

- , , , et al. Code status documentation in the outpatient electronic medical records of patients with metastatic cancer. J Gen Intern Med. 2009;25(2):150–153.

- , , , et al. Factors associated with discussion of care plans and code status at the time of hospital admission: results from the multicenter hospitalist study. J Hosp Med. 2008;3(6):437–445.

- , , , . Documentation quality of inpatient code status discussions. J Pain Symptom Manage. 2014;48(4):632–638.

- , , , . The use of life‐sustaining treatments in hospitalized persons aged 80 and older. J Am Geriatr Soc. 2002;50(5):930–934.

- The SUPPORT Principal Investigators. A controlled trial to improve care for seriously ill hospitalized patients: the Study to Understand Prognoses and Preferences for Outcomes and Risks of Treatments (SUPPORT). JAMA. 1995;274(20):1591–1598.

- , , . Some treatment‐withholding implications of no‐code orders in an academic hospital. Crit Care Med. 1984;12(10):879–881.

- . A prospective study of patients with DNR orders in a teaching hospital. Arch Intern Med. 1998;148(10):2193–2198.

- , . Compliance with do‐not‐resuscitate orders for hospitalized patients transported to radiology department. Ann Intern Med. 1998;129(10):801–805.

- , . Identification of inpatient DNR status: a safety hazard begging for standardization. J Hosp Med. 2007;2(6):366–371.

- . Controlling death: the false promise of advance directives. Ann Intern Med. 2007;147(1):51–57.

- , , , et al. Failure to engage hospitalized elderly patients and their families in advance care planning. JAMA Intern Med. 2013;173(9):778–787.

- , , , et al. The recording of drug sensitivities for older people living in care homes. Br J Clin Pharmacol. 2010;69(5):553–557.

- , , , , , . A comparison of methods to communicate treatment preferences in nursing facilities: traditional practices versus the physician orders for life‐sustaining treatment program. J Am Geriatr Soc. 2010;58(7):1241–1248.

- , , , et al. Evaluation of a treatment limitation policy with a specific treatment‐limiting order page. Arch Intern Med. 1994;154(4):425–432.

- , , , et al. An electronic medical record intervention increased nursing home advance directive orders and documentation. J Am Geriatr Soc. 2007;55(7):1001–1006.

- , , , et al. Honouring patient's resuscitation wishes: a multiphased effort to improve identification and documentation. BMJ Quality Safety. 2013;22(1):85–92.

© 2015 Society of Hospital Medicine