
Variation in Printed Handoff Documents

Handoffs among hospital providers are highly error prone and can result in serious morbidity and mortality. Best practices for verbal handoffs have been described[1, 2, 3, 4] and include conducting verbal handoffs face to face, providing opportunities for questions, having the receiver perform a readback, as well as specific content recommendations including action items. Far less research has focused on best practices for printed handoff documents,[5, 6] despite the routine use of written handoff tools as a reference by on‐call physicians.[7, 8] Erroneous or outdated information on the written handoff can mislead on‐call providers, potentially leading to serious medical errors.

In their most basic form, printed handoff documents list patients for whom a provider is responsible. Typically, they also contain demographic information, reason for hospital admission, and a task list for each patient. They may also contain more detailed information on patient history, hospital course, and/or care plan, and may vary among specialties.[9] They come in various forms, ranging from index cards with handwritten notes, to word‐processor or spreadsheet documents, to printed documents that are autopopulated from the electronic health record (EHR).[2] Importantly, printed handoff documents supplement the verbal handoff by allowing receivers to follow along as patients are presented. The concurrent use of written and verbal handoffs may improve retention of clinical information as compared with either alone.[10, 11]

The Joint Commission requires an institutional approach to patient handoffs.[12] The requirements state that handoff communication solutions should take a standardized form, but they do not provide details regarding what data elements should be included in printed or verbal handoffs. Accreditation Council for Graduate Medical Education Common Program Requirements likewise require that residents must become competent in patient handoffs[13] but do not provide specific details or measurement tools. Absent widely accepted guidelines, decisions regarding which elements to include in printed handoff documents are currently made at an individual or institutional level.

The I‐PASS study is a federally funded multi‐institutional project that demonstrated a decrease in medical errors and preventable adverse events after implementation of a standardized resident handoff bundle.[14, 15] The I‐PASS Study Group developed a bundle of handoff interventions, beginning with a handoff and teamwork training program (based in part on TeamSTEPPS [Team Strategies and Tools to Enhance Performance and Patient Safety]),[16] a novel verbal mnemonic, I‐PASS (Illness Severity, Patient Summary, Action List, Situation Awareness and Contingency Planning, and Synthesis by Receiver),[17] and changes to the verbal handoff process, in addition to several other elements.

We hypothesized that developing a standardized printed handoff template would reinforce the handoff training and enhance the value of the verbal handoff process changes. Given the paucity of data on best printed handoff practices, however, we first conducted a needs assessment to identify which data elements were currently contained in printed handoffs across sites, and to allow an expert panel to make recommendations for best practices.

METHODS

I‐PASS Study sites included 9 pediatric residency programs at academic medical centers from across North America. Programs were identified through professional networks and invited to participate. The nonintensive care unit hospitalist services at these medical centers are primarily staffed by residents and medical students with attending supervision. At 1 site, nurse practitioners also participate in care. Additional details about study sites can be found in the study descriptions previously published.[14, 15] All sites received local institutional review board approval.

We began by inviting members of the I‐PASS Education Executive Committee (EEC)[14] to build a collective, comprehensive list of possible data elements for printed handoff documents. This committee included pediatric residency program directors, pediatric hospitalists, education researchers, health services researchers, and patient safety experts. We obtained sample handoff documents from pediatric hospitalist services at each of 9 institutions in the United States and Canada (with protected health information redacted). We reviewed these sample handoff documents to characterize their format and to determine what discrete data elements appeared in each site's printed handoff document. Presence or absence of each data element across sites was tabulated. We also queried sites to determine the feasibility of including elements that were not presently included.

Subsequently, I‐PASS site investigators led structured group interviews at participating sites to gather additional information about handoff practices at each site. These structured group interviews included diverse representation from residents, faculty, and residency program leadership, as well as hospitalists and medical students, to ensure the comprehensive acquisition of information regarding site‐specific characteristics. Each group provided answers to a standardized set of open‐ended questions that addressed current practices, handoff education, simulation use, team structure, and the nature of current written handoff tools, if applicable, at each site. One member of the structured group interview served as a scribe and created a document that summarized the content of the structured group interview meeting and answers to the standardized questions.

Consensus on Content

The initial data collection also included a multivote process[18] of the full I‐PASS EEC to help prioritize data elements. Committee members brainstormed a list of all possible data elements for a printed handoff document. Each member (n=14) was given 10 votes to distribute among the elements. Committee members could assign more than 1 vote to an element to emphasize its importance.
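The multivote tally described above can be sketched in a few lines. This is a minimal illustration, not the committee's actual tooling: the ballot contents and member labels are hypothetical, and the sketch assumes a member may spend at most 10 votes (the text does not say whether all 10 had to be used).

```python
from collections import Counter

VOTES_PER_MEMBER = 10  # from the text: each member was given 10 votes


def tally_multivote(ballots):
    """Sum votes per data element across members, highest total first.

    `ballots` maps a member label to a dict of {element: votes}.
    Assumption: a ballot may spend at most VOTES_PER_MEMBER votes.
    """
    totals = Counter()
    for member, ballot in ballots.items():
        if any(votes < 0 for votes in ballot.values()):
            raise ValueError(f"{member} cast a negative vote")
        spent = sum(ballot.values())
        if spent > VOTES_PER_MEMBER:
            raise ValueError(f"{member} cast {spent} votes, limit is {VOTES_PER_MEMBER}")
        totals.update(ballot)  # adds this member's votes to the running totals
    return totals.most_common()


# Hypothetical ballots for illustration only; not data from the study.
example_ballots = {
    "member_a": {"illness severity": 4, "action items": 3, "allergies": 3},
    "member_b": {"illness severity": 2, "patient summary": 5, "action items": 3},
}
ranking = tally_multivote(example_ballots)
```

Allowing more than 1 vote per element, as the committee did, lets the totals reflect strength of preference rather than a simple count of supporters.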

The results of this process as well as the current data elements included in each printed handoff tool were reviewed by a subgroup of the I‐PASS EEC. These expert panel members participated in a series of conference calls during which they tabulated categorical information, reviewed narrative comments, discussed existing evidence, and conducted simple content analysis to identify areas of concordance or discordance. Areas of discordance were discussed by the committee. Disagreements were resolved with group consensus with attention to published evidence or best practices, if available.

Elements were divided into those that were essential (unanimous consensus, no conflicting literature) and those that were recommended (majority supported inclusion of element, no conflicting literature). Ratings were assigned using the American College of Cardiology/American Heart Association framework for practice guidelines,[19] in which each element is assigned a classification (I=effective, II=conflicting evidence/opinion, III=not effective) and a level of evidence to support that classification (A=multiple large randomized controlled trials, B=single randomized trial, or nonrandomized studies, C=expert consensus).

The expert panel reached consensus, through active discussion, on a list of data elements that should be included in an ideal printed handoff document. Elements were chosen based on perceived importance, with attention to published best practices[1, 16] and the multivoting results. In making recommendations, consideration was given to whether data elements could be electronically imported into the printed handoff document from the EHR, or whether they would be entered manually. The risk that errors in manual data entry could lead to serious medical errors weighed heavily in the recommendations. The list of candidate elements was then reviewed by a larger group of investigators from the I‐PASS Education Executive Committee and Coordinating Council for additional input.

The panel asked site investigators from each participating hospital to gather data on the feasibility of redesigning the printed handoff at that hospital to include each recommended element. Site investigators reported whether each element was already included, possible to include but not included currently, or not currently possible to include within that site's printed handoff tool. Site investigators also reported how data elements were populated in their handoff documents, with options including: (1) autopopulated from administrative data (eg, pharmacy‐entered medication list, demographic data entered by admitting office), (2) autoimported from physicians' free‐text entries elsewhere in the EHR (eg, progress notes), (3) free text entered specifically for the printed handoff, or (4) not applicable (element cannot be included).
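The site feasibility reports described above map naturally onto a small record type. The following sketch is illustrative only: the class names, field names, and sample records are hypothetical, and the consistency rule (a "not applicable" source is used exactly when an element cannot be included) is an assumption inferred from option 4 in the text.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Feasibility(Enum):
    INCLUDED = "already included"
    POSSIBLE = "possible to include but not included currently"
    NOT_POSSIBLE = "not currently possible to include"


class Source(Enum):
    ADMINISTRATIVE = "autopopulated from administrative data"
    EHR_FREE_TEXT = "autoimported from physicians' free text elsewhere in the EHR"
    HANDOFF_FREE_TEXT = "free text entered specifically for the printed handoff"
    NOT_APPLICABLE = "element cannot be included"


@dataclass(frozen=True)
class SiteReport:
    site: str
    element: str
    feasibility: Feasibility
    source: Source

    def __post_init__(self):
        # Assumption: NOT_APPLICABLE is reported exactly when inclusion is not possible.
        cannot_include = self.feasibility is Feasibility.NOT_POSSIBLE
        if cannot_include != (self.source is Source.NOT_APPLICABLE):
            raise ValueError(f"inconsistent report for {self.element} at {self.site}")


def sources_for(reports, element):
    """Count how many sites report each data source for one element."""
    return Counter(r.source for r in reports if r.element == element)


# Hypothetical records for illustration only; not data from the study.
sample_reports = [
    SiteReport("site_1", "weight", Feasibility.INCLUDED, Source.ADMINISTRATIVE),
    SiteReport("site_2", "weight", Feasibility.INCLUDED, Source.HANDOFF_FREE_TEXT),
    SiteReport("site_3", "vital signs", Feasibility.NOT_POSSIBLE, Source.NOT_APPLICABLE),
]
weight_sources = sources_for(sample_reports, "weight")
```

Tabulating reports this way yields per-element counts of the kind summarized in the data source columns of Table 1.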

RESULTS

Nine programs (100%) provided data on the structure and contents of their printed handoff documents. We found wide variation in structure across the 9 sites. Three sites used a word‐processor‐based document that required manual entry of all data elements. The other 6 institutions had a direct link with the EHR to enable autopopulation of between 10 and 20 elements on the printed handoff document.

The content of written handoff documents, as well as the sources of data included in them (present or future), likewise varied substantially across sites (Table 1). Only 4 data elements (name, age, weight, and a list of medications) were universally included at all 9 sites. Among the 6 institutions that linked the printed handoff to the EHR, there was also substantial variation in which elements were autoimported. Only 7 elements were universally autoimported at these 6 sites: patient name, medical record number, room number, weight, date of birth, age, and date of admission. Two elements from the original brainstorming (emergency contact and primary language) were not presently included in any site's documents.

| Data Elements | Sites With Data Element Included at Initial Needs Assessment (Out of Nine Sites) | Data Source (Current or Anticipated): Autoimported* | Data Source (Current or Anticipated): Manually Entered | Data Source (Current or Anticipated): Not Applicable |
|---|---|---|---|---|
| Name | 9 | 6 | 3 | 0 |
| Medical record number | 8 | 6 | 3 | 0 |
| Room number | 8 | 6 | 3 | 0 |
| Allergies | 6 | 4 | 5 | 0 |
| Weight | 9 | 6 | 3 | 0 |
| Age | 9 | 6 | 3 | 0 |
| Date of birth | 6 | 6 | 3 | 0 |
| Admission date | 8 | 6 | 3 | 0 |
| Attending name | 5 | 4 | 5 | 0 |
| Team/service | 7 | 4 | 5 | 0 |
| Illness severity | 1 | 0 | 9 | 0 |
| Patient summary | 8 | 0 | 9 | 0 |
| Action items | 8 | 0 | 9 | 0 |
| Situation monitoring/contingency plan | 5 | 0 | 9 | 0 |
| Medication name | 9 | 4 | 5 | 0 |
| Medication name and dose/route/frequency | 4 | 4 | 5 | 0 |
| Code status | 2 | 2 | 7 | 0 |
| Labs | 6 | 5 | 4 | 0 |
| Access | 2 | 2 | 7 | 0 |
| Ins/outs | 2 | 4 | 4 | 1 |
| Primary language | 0 | 3 | 6 | 0 |
| Vital signs | 3 | 4 | 4 | 1 |
| Emergency contact | 0 | 2 | 7 | 0 |
| Primary care provider | 4 | 4 | 5 | 0 |

Nine institutions (100%) conducted structured group interviews, ranging in size from 4 to 27 individuals with a median of 5 participants. The documents containing information from each site were provided to the authors. The authors then tabulated categorical information, reviewed narrative comments to understand current institutional practices, and conducted simple content analysis to identify areas of concordance or discordance, particularly with respect to data elements and EHR usage. Based on the results of the printed handoff document review and structured group interviews, with additional perspectives provided by the I‐PASS EEC, the expert panel came to consensus on a list of 23 elements that should be included in printed handoff documents, including 15 essential data elements and 8 additional recommended elements (Table 2).

| Data Elements (Classification, Level of Evidence) |
|---|
| Essential elements |
| Patient identifiers |
| Patient name (class I, level of evidence C) |
| Medical record number (class I, level of evidence C) |
| Date of birth (class I, level of evidence C) |
| Hospital service identifiers |
| Attending name (class I, level of evidence C) |
| Team/service (class I, level of evidence C) |
| Room number (class I, level of evidence C) |
| Admission date (class I, level of evidence C) |
| Age (class I, level of evidence C) |
| Weight (class I, level of evidence C) |
| Illness severity (class I, level of evidence B)[20, 21] |
| Patient summary (class I, level of evidence B)[21, 22] |
| Action items (class I, level of evidence B)[21, 22] |
| Situation awareness/contingency planning (class I, level of evidence B)[21, 22] |
| Allergies (class I, level of evidence C) |
| Medications |
| Autopopulation of medications (class I, level of evidence B)[22, 23, 24] |
| Free‐text entry of medications (class IIa, level of evidence C) |
| Recommended elements |
| Primary language (class IIa, level of evidence C) |
| Emergency contact (class IIa, level of evidence C) |
| Primary care provider (class IIa, level of evidence C) |
| Code status (class IIb, level of evidence C) |
| Labs (class IIa, level of evidence C) |
| Access (class IIa, level of evidence C) |
| Ins/outs (class IIa, level of evidence C) |
| Vital signs (class IIa, level of evidence C) |

Evidence ratings[19] of these elements are included. Several elements are classified as I‐B (effective, nonrandomized studies) based on either studies of individual elements or more than 1 study of bundled elements from which the evidence could reasonably be extrapolated. These include illness severity,[20, 21] patient summary,[21, 22] action items[21, 22] (to‐do lists), situation awareness and contingency plan,[21, 22] and medications[22, 23, 24] with attention to importing from the EHR. Medications entered as free text were classified as IIa‐C because of the risk and potential significance of errors; in particular, there was concern that transcription errors, errors of omission, or errors of commission could lead to patient harm. The remaining essential elements are classified as I‐C (effective, expert consensus). Of note, date of birth was specifically included as a patient identifier, distinct from age, which was felt to be useful as a descriptor (often within a one‐liner or as part of the patient summary).

The 8 recommended elements were those for which agreement on inclusion was not unanimous, but which the majority of the panel felt should be included. These elements were classified as IIa‐C, with 1 exception. Code status generated significant controversy among the group. After extensive discussion of safety, supervision, educational, and pediatric‐specific considerations, all members of the group agreed to categorize it as a recommended element; it is classified as IIb‐C.
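For sites building or auditing a template, the panel's list can be encoded as a simple lookup. The sketch below transcribes the elements and ratings from Table 2; the `Element` type, the `missing_essential` helper, and the example field list are hypothetical names introduced for illustration. Medications are entered once, with the rating for the autopopulated form, so that the essential count matches the 15 reported in the text.

```python
from typing import NamedTuple


class Element(NamedTuple):
    name: str
    tier: str       # "essential" or "recommended"
    acc_class: str  # classification: "I", "IIa", or "IIb"
    evidence: str   # level of evidence: "B" or "C"


ELEMENTS = [
    Element("Patient name", "essential", "I", "C"),
    Element("Medical record number", "essential", "I", "C"),
    Element("Date of birth", "essential", "I", "C"),
    Element("Attending name", "essential", "I", "C"),
    Element("Team/service", "essential", "I", "C"),
    Element("Room number", "essential", "I", "C"),
    Element("Admission date", "essential", "I", "C"),
    Element("Age", "essential", "I", "C"),
    Element("Weight", "essential", "I", "C"),
    Element("Illness severity", "essential", "I", "B"),
    Element("Patient summary", "essential", "I", "B"),
    Element("Action items", "essential", "I", "B"),
    Element("Situation awareness/contingency planning", "essential", "I", "B"),
    Element("Allergies", "essential", "I", "C"),
    # Rating shown is for autopopulated lists; free-text entry is rated IIa-C.
    Element("Medications", "essential", "I", "B"),
    Element("Primary language", "recommended", "IIa", "C"),
    Element("Emergency contact", "recommended", "IIa", "C"),
    Element("Primary care provider", "recommended", "IIa", "C"),
    Element("Code status", "recommended", "IIb", "C"),
    Element("Labs", "recommended", "IIa", "C"),
    Element("Access", "recommended", "IIa", "C"),
    Element("Ins/outs", "recommended", "IIa", "C"),
    Element("Vital signs", "recommended", "IIa", "C"),
]


def missing_essential(template_fields):
    """Return essential element names absent from a template, in Table 2 order."""
    present = {field.lower() for field in template_fields}
    return [e.name for e in ELEMENTS
            if e.tier == "essential" and e.name.lower() not in present]


# Hypothetical site template for illustration only.
gaps = missing_essential(["Patient name", "Age", "Weight", "Medications"])
```

A check like this only matches on field names; it says nothing about whether a field is autoimported or actually completed by users.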

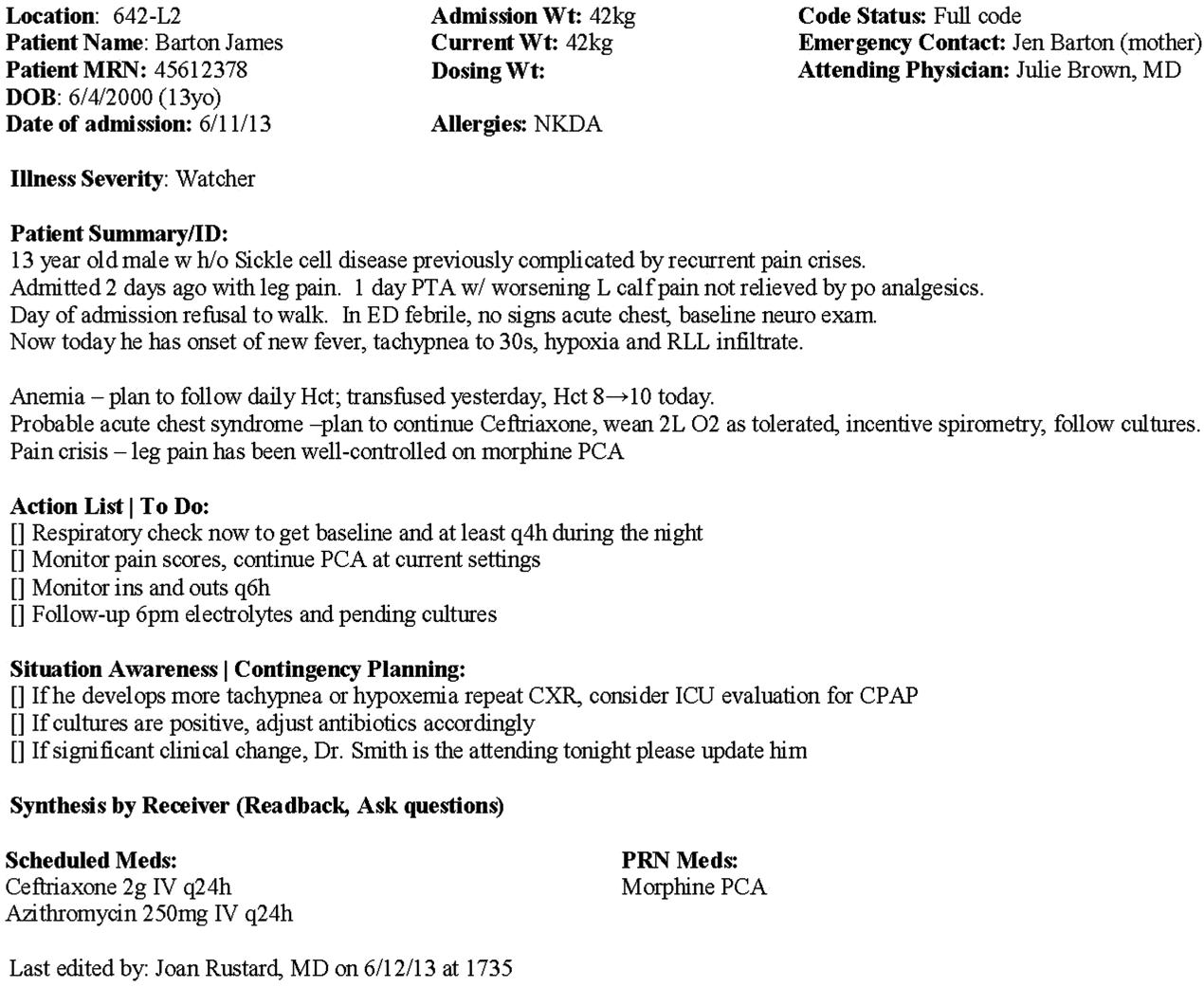

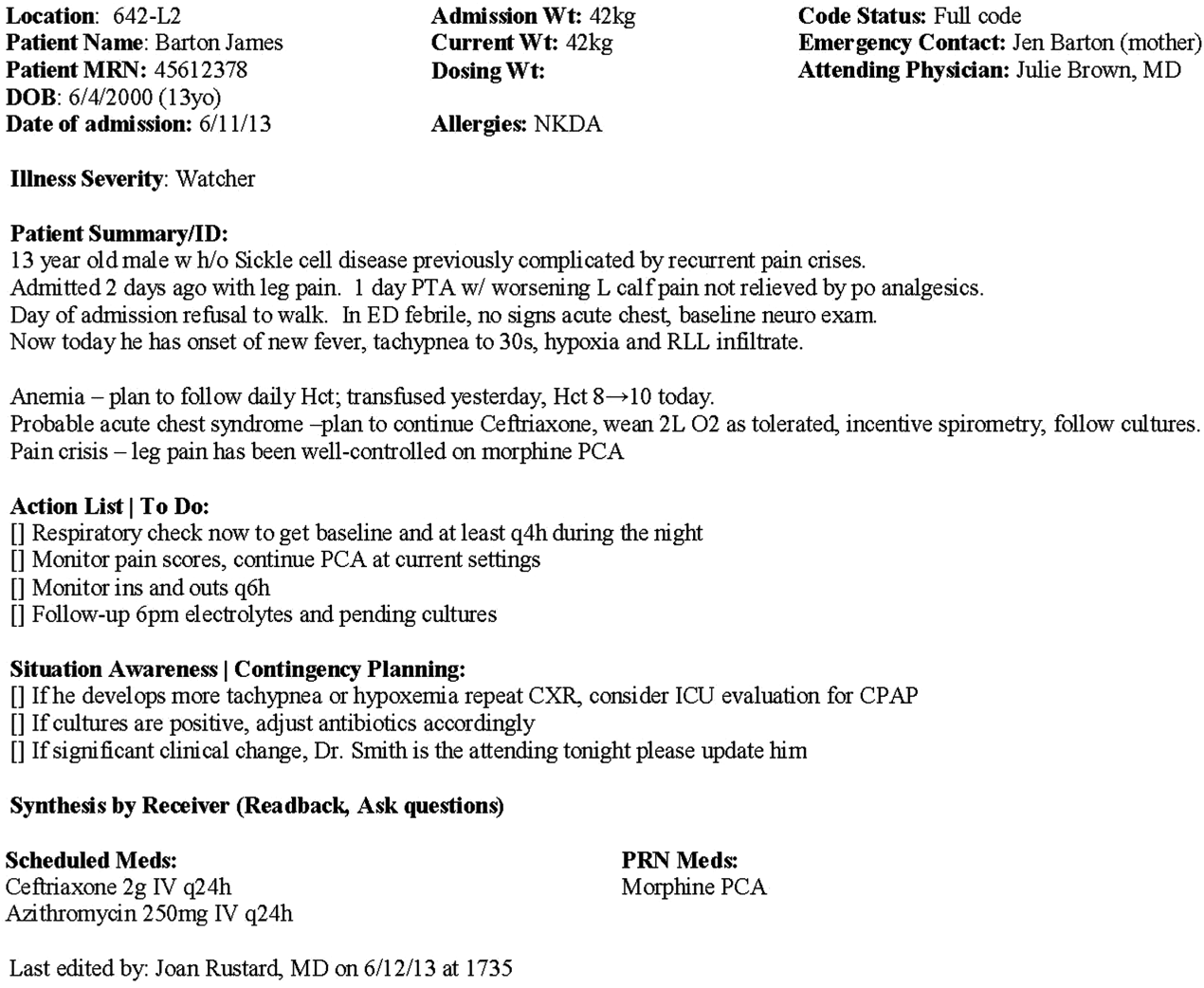

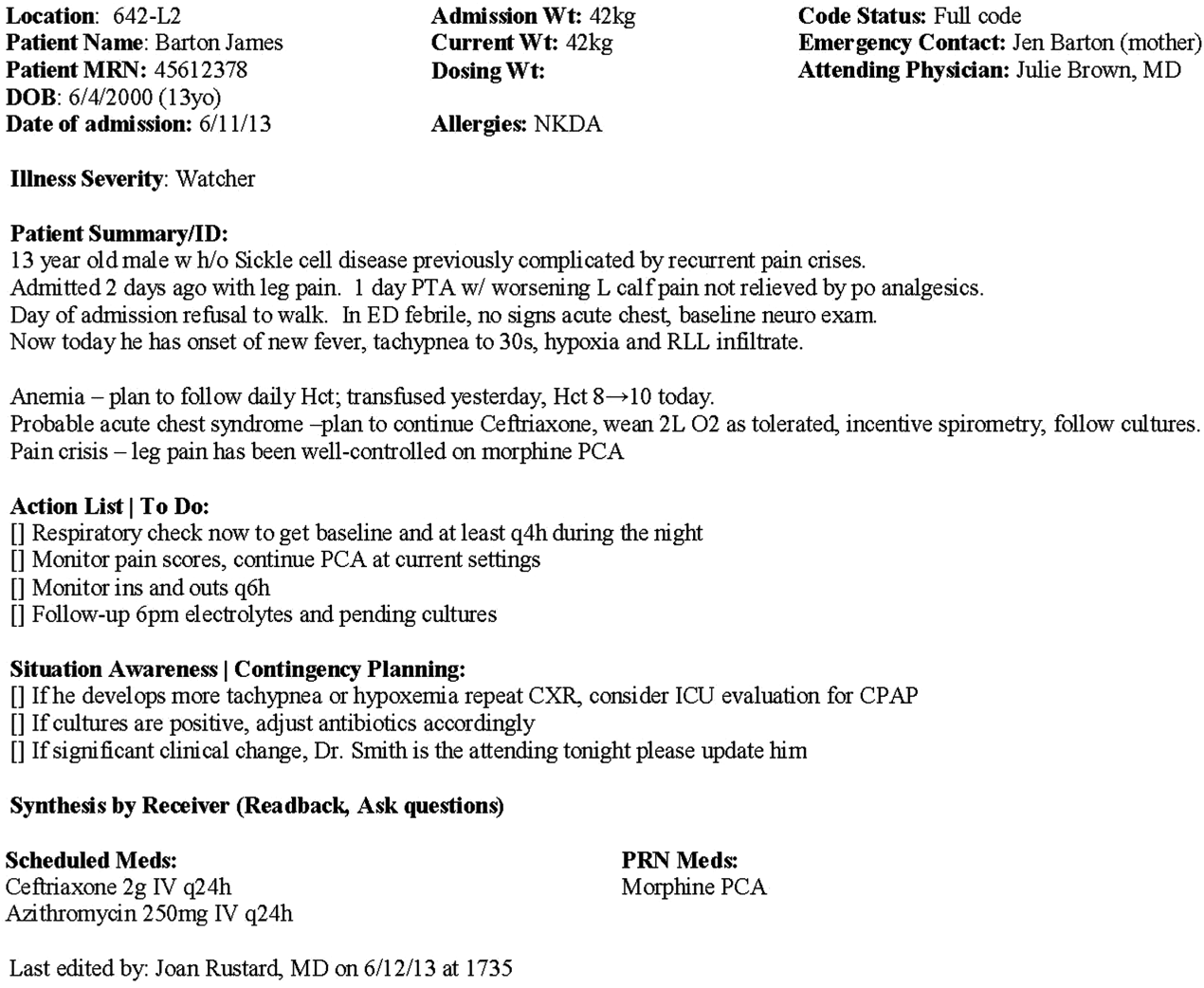

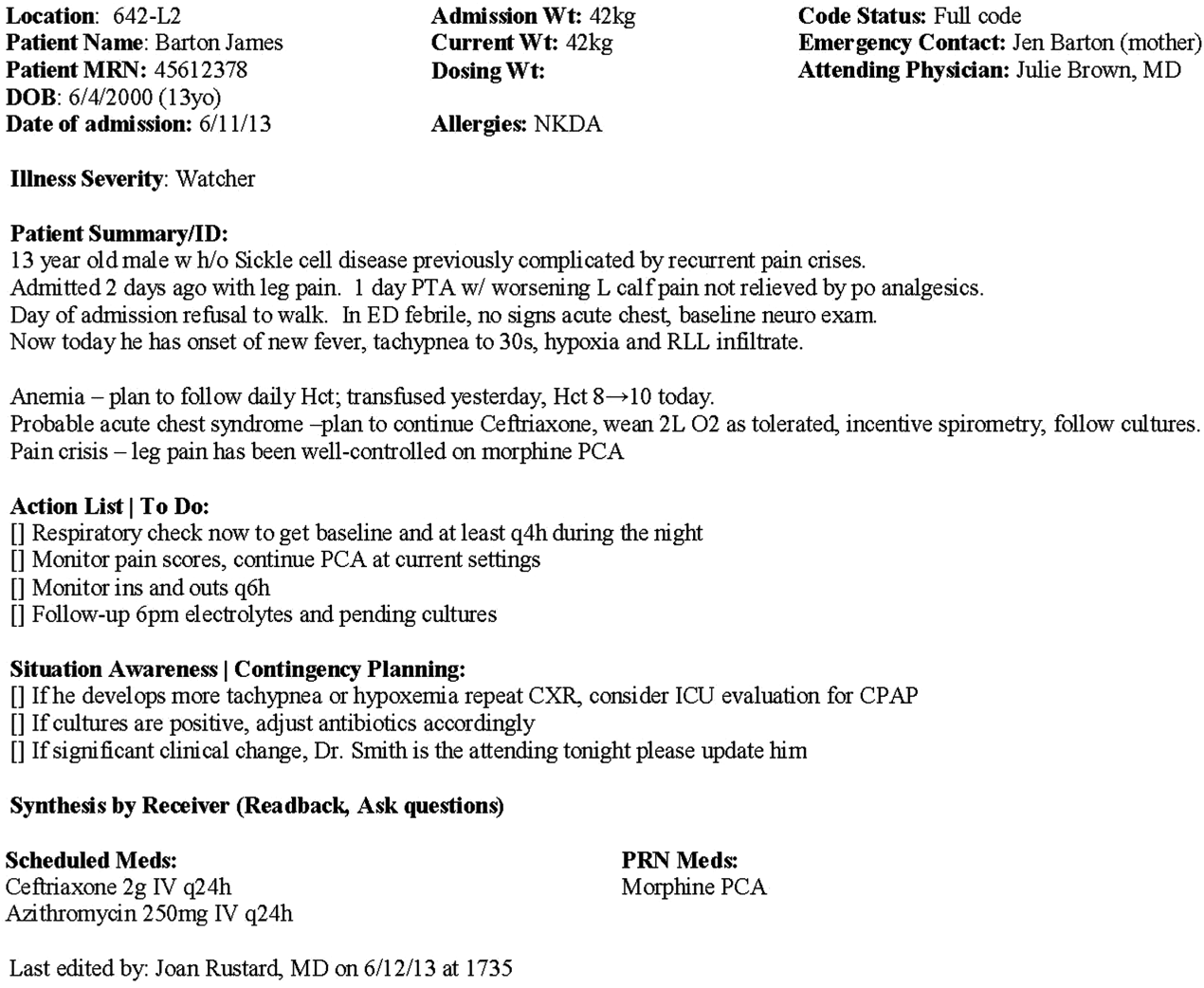

All members of the group agreed that data elements should be directly imported from the EHR whenever possible. Finally, members agreed that the elements that make up the I‐PASS mnemonic (illness severity, patient summary, action items, situation awareness/contingency planning) should be listed in that order whenever possible. A sample I‐PASS‐compliant printed handoff document is shown in Figure 1.

DISCUSSION

We identified substantial variability in the structure and content of printed handoff documents used by 9 pediatric hospitalist teaching services, reflective of a lack of standardization. We found that institutional printed handoff documents shared some demographic elements (eg, name, room, medical record number) but also varied in clinical content (eg, vital signs, lab tests, code status). Our expert panel developed a list of 15 essential and 8 recommended data elements for printed handoff documents. Although this is a large number of fields, the majority of the essential fields were already included by most sites, and many are basic demographic identifiers. Illness severity is the 1 essential field that was not routinely included; however, including this type of overview is consistently recommended[2, 4] and supported by evidence,[20, 21] and contributes to building a shared mental model.[16] We recommend the categories of stable/watcher/unstable.[17]

Several prior single‐center studies have found that introducing a printed handoff document can lead to improvements in workflow, communication, and patient safety. In an early study, Petersen et al.[25] showed an association between use of a computerized sign‐out program and reduced odds of preventable adverse events during periods of cross‐coverage. Wayne et al.[26] reported fewer perceived inaccuracies in handoff documents as well as improved clarity at the time of transfer, supporting the role for standardization. Van Eaton et al.[27] demonstrated rapid uptake and desirability of a computerized handoff document, which combined autoimportation of information from an EHR with resident‐entered patient details, reflecting the importance of both data sources. In addition, they demonstrated improvements in both the rounding and sign‐out processes.[28]

Two studies specifically reported the increased use of specific fields after implementation. Payne et al. implemented a Web‐based handoff tool and documented significant increases in the number of handoffs containing problem lists, medication lists, and code status, accompanied by perceived improvements in quality of handoffs and fewer near‐miss events.[24] Starmer et al. found that introduction of a resident handoff bundle that included a printed handoff tool led to reduction in medical errors and adverse events.[22] The study group using the tool populated 11 data elements more often after implementation, and introduction of this printed handoff tool in particular was associated with reductions in written handoff miscommunications. Neither of these studies included subanalysis to indicate which data elements may have been most important.

In contrast to previous single‐institution studies, our recommendations for a printed handoff template come from evaluations of tools and discussions with front line providers across 9 institutions. We had substantial overlap with data elements recommended by Van Eaton et al.[27] However, there were several areas in which we did not have overlap with published templates including weight, ins/outs, primary language, emergency contact information, or primary care provider. Other published handoff tools have been highly specialized (eg, for cardiac intensive care) or included many fewer data elements than our group felt were essential. These differences may reflect the unique aspects of caring for pediatric patients (eg, need for weights) and the absence of defined protocols for many pediatric conditions. In addition, the level of detail needed for contingency planning may vary between teaching and nonteaching services.

Resident physicians may provide valuable information in the development of standardized handoff documents. Clark et al.,[29] at Virginia Mason University, utilized resident‐driven continuous quality improvement processes including real‐time feedback to implement an electronic template. They found that engagement of both senior leaders and front‐line users was an important component of their success in uptake. Our study utilized residents as essential members of structured group interviews to ensure that front‐line users' needs were represented as recommendations for a printed handoff tool template were developed.

As previously described,[17] our study group had identified several key data elements that should be included in verbal handoffs: illness severity, a patient summary, a discrete action list, situation awareness/contingency planning, and a synthesis by receiver. With consideration of the multivoting results as well as known best practices,[1, 4, 12] the expert panel for this study agreed that each of these elements should also be highlighted in the printed template to ensure consistency between the printed document and the verbal handoff, and to have each reinforce the other. On the printed handoff tool, the final S in the I‐PASS mnemonic (synthesis by receiver) cannot be prepopulated, but considering the importance of this step,[16, 30, 31, 32] it should be printed as synthesis by receiver to serve as a text‐reminder to both givers and receivers.

The panel also felt, however, that the printed handoff document should provide additional background information not routinely included in a verbal handoff. It should serve as a reference tool both at the time of verbal handoff and throughout the day and night, and therefore should include more comprehensive information than is necessary or appropriate to convey during the verbal handoff. We identified 10 data elements that are essential in a printed handoff document in addition to the I‐PASS elements (Table 2).

Patient demographic data elements, as well as team assignments and attending physician, were uniformly supported for inclusion. The medication list was viewed as essential; however, the panel also recognized the potential for medical errors due to inaccuracies in the medication list. In particular, there was concern that including all fields of a medication order (drug, dose, route, frequency) would result in handoffs containing a high proportion of inaccurate information, particularly for complex patients whose medication regimens may vary over the course of hospitalization. Therefore, the panel agreed that if medication lists were entered manually, then only the medication name should be included as they did not wish to perpetuate inaccurate or potentially harmful information. If medication lists were autoimported from an EHR, then they should include drug name, dose, route, and frequency if possible.
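The panel's medication rule amounts to a small piece of display logic: show full order details only when the list is autoimported. The sketch below is one way to express that, assuming a hypothetical `MedicationEntry` record; the drug name and order details are placeholders, not clinical content.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MedicationEntry:
    name: str
    dose: Optional[str] = None
    route: Optional[str] = None
    frequency: Optional[str] = None
    autoimported: bool = False  # True when the entry comes directly from the EHR


def handoff_text(med):
    """Render a medication for the printed handoff.

    Manually entered medications show the name only, so that hand-typed
    dose, route, or frequency details cannot propagate errors.
    Autoimported medications show whichever order details are available.
    """
    if not med.autoimported:
        return med.name
    details = [d for d in (med.dose, med.route, med.frequency) if d]
    return " ".join([med.name, *details])


# Placeholder values for illustration only.
imported = MedicationEntry("drug_a", "10 mg", "PO", "q8h", autoimported=True)
manual = MedicationEntry("drug_a", "10 mg", "PO", "q8h", autoimported=False)
```

Here `handoff_text(imported)` yields the name with dose, route, and frequency, whereas `handoff_text(manual)` yields only the name even though details were typed in.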

In the I‐PASS study,[15] all institutions implemented printed handoff documents that included fields for the essential data elements. After implementation, there was a significant increase in completion of all essential fields. Although there is limited evidence to support any individual data element, increased usage of these elements was associated with the overall study finding of decreased rates of medical errors and preventable adverse events.

EHRs have the potential to help standardize printed handoff documents[5, 6, 33, 34, 35]; all participants in our study agreed that printed handoff documents should ideally be linked with the EHR and should autoimport data wherever appropriate. Manually populated (eg, word processor‐ or spreadsheet‐based) handoff tools have important limitations, particularly related to the potential for typographical errors as well as accidental omission of data fields, and lead to unnecessary duplication of work (eg, re‐entering data already included in a progress note) that can waste providers' time. It was also acknowledged that word processor‐ or spreadsheet‐based documents may have flexibility that is lacking in EHR‐based handoff documents. For example, formatting can more easily be adjusted to increase the number of patients per printed page. As technology advances, printed documents may be phased out in favor of EHR‐based on‐screen reports, which by their nature would be more accurate due to real‐time autoupdates.

In making recommendations about essential versus recommended items for inclusion in the printed handoff template, the only data element that generated controversy among our experts was code status. Some felt that it should be included as an essential element, whereas others did not. We believe that this was unique to our practice in pediatric hospital ward settings, as codes in most pediatric ward settings are rare. One concern with including code status for all patients was that residents might assume patients were full‐code without verifying, and the resulting inaccuracy might have severe implications. Alternatively, residents might feel obligated to have code discussions with all patients regardless of severity of illness, which may be inappropriate in a pediatric population. Several educators expressed concerns about trainees having unsupervised code‐status conversations with families of pediatric patients. Conversely, although codes are rare in pediatric ward settings, concerns were raised that not including code status could be problematic during these rare but critically important events. Other fields, such as weight, might have less relevance for an adult population in which emergency drug doses are standardized.

Limitations

Our study has several limitations. We only collected data from hospitalist services at pediatric sites. It is likely that providers in other specialties would have specific data elements they felt were essential (eg, postoperative day, code status). Our methodology was expert consensus based, driven by data collection from sites that were already participating in the I‐PASS study. Although the I‐PASS study demonstrated decreased rates of medical errors and preventable adverse events with inclusion of these data elements as part of a bundle, future research will be required to evaluate whether some of these items are more important than others in improving written communication and ultimately patient safety. In spite of these limitations, our work represents an important starting point for the development of standards for written handoff documents that should be used in patient handoffs, particularly those generated from EHRs.

CONCLUSIONS

In this article we describe the results of a needs assessment that informed expert consensus‐based recommendations for data elements to include in a printed handoff document. We recommend that pediatric programs include the elements identified as part of a standardized written handoff tool. Although many of these elements are also applicable to other specialties, future work should be conducted to adapt the printed handoff document elements described here for use in other specialties and settings. Future studies should work to validate the importance of these elements, studying the manner in which their inclusion affects the quality of written handoffs, and ultimately patient safety.

Acknowledgements

Members of the I‐PASS Study Education Executive Committee who contributed to this manuscript include: Boston Children's Hospital/Harvard Medical School (primary site) (Christopher P. Landrigan, MD, MPH, Elizabeth L. Noble, BA. Theodore C. Sectish, MD. Lisa L. Tse, BA). Cincinnati Children's Hospital Medical Center/University of Cincinnati College of Medicine (Jennifer K. O'Toole, MD, MEd). Doernbecher Children's Hospital/Oregon Health and Science University (Amy J. Starmer, MD, MPH). Hospital for Sick Children/University of Toronto (Zia Bismilla, MD. Maitreya Coffey, MD). Lucile Packard Children's Hospital/Stanford University (Lauren A. Destino, MD. Jennifer L. Everhart, MD. Shilpa J. Patel, MD [currently at Kapi'olani Children's Hospital/University of Hawai'i School of Medicine]). National Capital Consortium (Jennifer H. Hepps, MD. Joseph O. Lopreiato, MD, MPH. Clifton E. Yu, MD). Primary Children's Medical Center/University of Utah (James F. Bale, Jr., MD. Adam T. Stevenson, MD). St. Louis Children's Hospital/Washington University (F. Sessions Cole, MD). St. Christopher's Hospital for Children/Drexel University College of Medicine (Sharon Calaman, MD. Nancy D. Spector, MD). Benioff Children's Hospital/University of California San Francisco School of Medicine (Glenn Rosenbluth, MD. Daniel C. West, MD).

Additional I‐PASS Study Group members who contributed to this manuscript include April D. Allen, MPA, MA (Heller School for Social Policy and Management, Brandeis University, previously affiliated with Boston Children's Hospital), Madelyn D. Kahana, MD (The Children's Hospital at Montefiore/Albert Einstein College of Medicine, previously affiliated with Lucile Packard Children's Hospital/Stanford University), Robert S. McGregor, MD (Akron Children's Hospital/Northeast Ohio Medical University, previously affiliated with St. Christopher's Hospital for Children/Drexel University), and John S. Webster, MD, MBA, MS (Webster Healthcare Consulting Inc., formerly of the Department of Defense).

Members of the I‐PASS Study Group include individuals from the institutions listed below as follows: Boston Children's Hospital/Harvard Medical School (primary site): April D. Allen, MPA, MA (currently at Heller School for Social Policy and Management, Brandeis University), Angela M. Feraco, MD, Christopher P. Landrigan, MD, MPH, Elizabeth L. Noble, BA, Theodore C. Sectish, MD, Lisa L. Tse, BA. Brigham and Women's Hospital (data coordinating center): Anuj K. Dalal, MD, Carol A. Keohane, BSN, RN, Stuart Lipsitz, PhD, Jeffrey M. Rothschild, MD, MPH, Matt F. Wien, BS, Catherine S. Yoon, MS, Katherine R. Zigmont, BSN, RN. Cincinnati Children's Hospital Medical Center/University of Cincinnati College of Medicine: Javier Gonzalez del Rey, MD, MEd, Jennifer K. O'Toole, MD, MEd, Lauren G. Solan, MD. Doernbecher Children's Hospital/Oregon Health and Science University: Megan E. Aylor, MD, Amy J. Starmer, MD, MPH, Windy Stevenson, MD, Tamara Wagner, MD. Hospital for Sick Children/University of Toronto: Zia Bismilla, MD, Maitreya Coffey, MD, Sanjay Mahant, MD, MSc. Lucile Packard Children's Hospital/Stanford University: Rebecca L. Blankenburg, MD, MPH, Lauren A. Destino, MD, Jennifer L. Everhart, MD, Madelyn Kahana, MD, Shilpa J. Patel, MD (currently at Kapi'olani Children's Hospital/University of Hawaii School of Medicine). National Capital Consortium: Jennifer H. Hepps, MD, Joseph O. Lopreiato, MD, MPH, Clifton E. Yu, MD. Primary Children's Hospital/University of Utah: James F. Bale, Jr., MD, Jaime Blank Spackman, MSHS, CCRP, Rajendu Srivastava, MD, FRCP(C), MPH, Adam Stevenson, MD. St. Louis Children's Hospital/Washington University: Kevin Barton, MD, Kathleen Berchelmann, MD, F. Sessions Cole, MD, Christine Hrach, MD, Kyle S. Schultz, MD, Michael P. Turmelle, MD, Andrew J. White, MD. St. Christopher's Hospital for Children/Drexel University: Sharon Calaman, MD, Bronwyn D. Carlson, MD, Robert S. McGregor, MD (currently at Akron Children's Hospital/Northeast Ohio Medical University), Vahideh Nilforoshan, MD, Nancy D. Spector, MD. Benioff Children's Hospital/University of California San Francisco School of Medicine: Glenn Rosenbluth, MD, Daniel C. West, MD. Dorene Balmer, PhD, RD, Carol L. Carraccio, MD, MA, Laura Degnon, CAE, David McDonald, and Alan Schwartz, PhD serve the I‐PASS Study Group as part of the IIPE. Karen M. Wilson, MD, MPH serves the I‐PASS Study Group as part of the advisory board from the PRIS Executive Council. John Webster served the I‐PASS Study Group and Education Executive Committee as a representative from TeamSTEPPS.

Disclosures: The I‐PASS Study was primarily supported by the US Department of Health and Human Services, Office of the Assistant Secretary for Planning and Evaluation (1R18AE000029‐01). The opinions and conclusions expressed herein are solely those of the author(s) and should not be construed as representing the opinions or policy of any agency of the federal government. Developed with input from the Initiative for Innovation in Pediatric Education and the Pediatric Research in Inpatient Settings Network (supported by the Children's Hospital Association, the Academic Pediatric Association, the American Academy of Pediatrics, and the Society of Hospital Medicine). A.J.S. was supported by the Agency for Healthcare Research and Quality/Oregon Comparative Effectiveness Research K12 Program (1K12HS019456‐01). Additional funding for the I‐PASS Study was provided by the Medical Research Foundation of Oregon, Physician Services Incorporated Foundation (Ontario, Canada), and Pfizer (unrestricted medical education grant to N.D.S.). C.P.L. and A.J.S. were supported by the Oregon Comparative Effectiveness Research K12 Program (1K12HS019456 from the Agency for Healthcare Research and Quality). A.J.S. was also supported by the Medical Research Foundation of Oregon. The authors report no conflicts of interest.

References

1. Handoff strategies in settings with high consequences for failure: lessons for health care operations. Int J Qual Health Care. 2004;16(2):125–132.

2. Managing discontinuity in academic medical centers: strategies for a safe and effective resident sign‐out. J Hosp Med. 2006;1(4):257–266.

3. Development and implementation of an oral sign‐out skills curriculum. J Gen Intern Med. 2007;22(10):1470–1474.

4. Hospitalist handoffs: a systematic review and task force recommendations. J Hosp Med. 2009;4(7):433–440.

5. A systematic review of the literature on the evaluation of handoff tools: implications for research and practice. J Am Med Inform Assoc. 2014;21(1):154–162.

6. Review of computerized physician handoff tools for improving the quality of patient care. J Hosp Med. 2013;8(8):456–463.

7. Answering questions on call: pediatric resident physicians' use of handoffs and other resources. J Hosp Med. 2013;8(6):328–333.

8. Effectiveness of written hospitalist sign‐outs in answering overnight inquiries. J Hosp Med. 2013;8(11):609–614.

9. Sign‐out snapshot: cross‐sectional evaluation of written sign‐outs among specialties. BMJ Qual Saf. 2014;23(1):66–72.

10. An experimental comparison of handover methods. Ann R Coll Surg Engl. 2007;89(3):298–300.

11. Pilot study to show the loss of important data in nursing handover. Br J Nurs. 2005;14(20):1090–1093.

12. The Joint Commission. Hospital Accreditation Standards 2015: Joint Commission Resources; 2015:PC.02.02.01.

13. Accreditation Council for Graduate Medical Education. Common Program Requirements. 2013; http://acgme.org/acgmeweb/tabid/429/ProgramandInstitutionalAccreditation/CommonProgramRequirements.aspx. Accessed May 11, 2015.

14. Establishing a multisite education and research project requires leadership, expertise, collaboration, and an important aim. Pediatrics. 2010;126(4):619–622.

15. Changes in medical errors after implementation of a handoff program. N Engl J Med. 2014;371(19):1803–1812.

16. US Department of Health and Human Services. Agency for Healthcare Research and Quality. TeamSTEPPS website. Available at: http://teamstepps.ahrq.gov/. Accessed July 12, 2013.

17. I‐PASS, a mnemonic to standardize verbal handoffs. Pediatrics. 2012;129(2):201–204.

18. The Team Handbook. 3rd ed. Middleton, WI: Oriel STAT A MATRIX; 2010.

19. ACC/AHA Task Force on Practice Guidelines. Methodology Manual and Policies From the ACCF/AHA Task Force on Practice Guidelines. Available at: http://my.americanheart.org/idc/groups/ahamah‐public/@wcm/@sop/documents/downloadable/ucm_319826.pdf. Published June 2010. Accessed January 11, 2015.

20. Effect of illness severity and comorbidity on patient safety and adverse events. Am J Med Qual. 2012;27(1):48–57.

21. Consequences of inadequate sign‐out for patient care. Arch Intern Med. 2008;168(16):1755–1760.

22. Rates of medical errors and preventable adverse events among hospitalized children following implementation of a resident handoff bundle. JAMA. 2013;310(21):2262–2270.

23. Medication discrepancies in resident sign‐outs and their potential to harm. J Gen Intern Med. 2007;22(12):1751–1755.

24. Avoiding handover fumbles: a controlled trial of a structured handover tool versus traditional handover methods. BMJ Qual Saf. 2012;21(11):925–932.

25. Using a computerized sign‐out program to improve continuity of inpatient care and prevent adverse events. Jt Comm J Qual Improv. 1998;24(2):77–87.

26. Simple standardized patient handoff system that increases accuracy and completeness. J Surg Educ. 2008;65(6):476–485.

27. Organizing the transfer of patient care information: the development of a computerized resident sign‐out system. Surgery. 2004;136(1):5–13.

28. A randomized, controlled trial evaluating the impact of a computerized rounding and sign‐out system on continuity of care and resident work hours. J Am Coll Surg. 2005;200(4):538–545.

29. Template for success: using a resident‐designed sign‐out template in the handover of patient care. J Surg Educ. 2011;68(1):52–57.

30. Read‐back improves information transfer in simulated clinical crises. BMJ Qual Saf. 2014;23(12):989–993.

31. Interns overestimate the effectiveness of their hand‐off communication. Pediatrics. 2010;125(3):491–496.

32. Improving patient safety by repeating (read‐back) telephone reports of critical information. Am J Clin Pathol. 2004;121(6):801–803.

33. Content overlap in nurse and physician handoff artifacts and the potential role of electronic health records: a systematic review. J Biomed Inform. 2011;44(4):704–712.

34. Clinical summarization capabilities of commercially‐available and internally‐developed electronic health records. Appl Clin Inform. 2012;3(1):80–93.

35. An analysis and recommendations for multidisciplinary computerized handoff applications in hospitals. AMIA Annu Symp Proc. 2011;2011:588–597.

Members of the I‐PASS Study Group include individuals from the institutions listed below: Boston Children's Hospital/Harvard Medical School (primary site): April D. Allen, MPA, MA (currently at Heller School for Social Policy and Management, Brandeis University), Angela M. Feraco, MD, Christopher P. Landrigan, MD, MPH, Elizabeth L. Noble, BA, Theodore C. Sectish, MD, Lisa L. Tse, BA. Brigham and Women's Hospital (data coordinating center): Anuj K. Dalal, MD, Carol A. Keohane, BSN, RN, Stuart Lipsitz, PhD, Jeffrey M. Rothschild, MD, MPH, Matt F. Wien, BS, Catherine S. Yoon, MS, Katherine R. Zigmont, BSN, RN. Cincinnati Children's Hospital Medical Center/University of Cincinnati College of Medicine: Javier Gonzalez del Rey, MD, MEd, Jennifer K. O'Toole, MD, MEd, Lauren G. Solan, MD. Doernbecher Children's Hospital/Oregon Health and Science University: Megan E. Aylor, MD, Amy J. Starmer, MD, MPH, Windy Stevenson, MD, Tamara Wagner, MD. Hospital for Sick Children/University of Toronto: Zia Bismilla, MD, Maitreya Coffey, MD, Sanjay Mahant, MD, MSc. Lucile Packard Children's Hospital/Stanford University: Rebecca L. Blankenburg, MD, MPH, Lauren A. Destino, MD, Jennifer L. Everhart, MD, Madelyn Kahana, MD, Shilpa J. Patel, MD (currently at Kapi'olani Children's Hospital/University of Hawaii School of Medicine). National Capital Consortium: Jennifer H. Hepps, MD, Joseph O. Lopreiato, MD, MPH, Clifton E. Yu, MD. Primary Children's Hospital/University of Utah: James F. Bale, Jr., MD, Jaime Blank Spackman, MSHS, CCRP, Rajendu Srivastava, MD, FRCP(C), MPH, Adam Stevenson, MD. St. Louis Children's Hospital/Washington University: Kevin Barton, MD, Kathleen Berchelmann, MD, F. Sessions Cole, MD, Christine Hrach, MD, Kyle S. Schultz, MD, Michael P. Turmelle, MD, Andrew J. White, MD. St. Christopher's Hospital for Children/Drexel University: Sharon Calaman, MD, Bronwyn D. Carlson, MD, Robert S. McGregor, MD (currently at Akron Children's Hospital/Northeast Ohio Medical University), Vahideh Nilforoshan, MD, Nancy D. Spector, MD. Benioff Children's Hospital/University of California San Francisco School of Medicine: Glenn Rosenbluth, MD, Daniel C. West, MD. Dorene Balmer, PhD, RD, Carol L. Carraccio, MD, MA, Laura Degnon, CAE, David McDonald, and Alan Schwartz, PhD, serve the I‐PASS Study Group as part of the IIPE. Karen M. Wilson, MD, MPH, serves the I‐PASS Study Group as part of the advisory board from the PRIS Executive Council. John Webster served the I‐PASS Study Group and Education Executive Committee as a representative from TeamSTEPPS.

Disclosures: The I‐PASS Study was primarily supported by the US Department of Health and Human Services, Office of the Assistant Secretary for Planning and Evaluation (1R18AE000029‐01). The opinions and conclusions expressed herein are solely those of the author(s) and should not be construed as representing the opinions or policy of any agency of the federal government. The study was developed with input from the Initiative for Innovation in Pediatric Education and the Pediatric Research in Inpatient Settings Network (supported by the Children's Hospital Association, the Academic Pediatric Association, the American Academy of Pediatrics, and the Society of Hospital Medicine). A.J.S. was supported by the Agency for Healthcare Research and Quality/Oregon Comparative Effectiveness Research K12 Program (1K12HS019456‐01). Additional funding for the I‐PASS Study was provided by the Medical Research Foundation of Oregon, Physician Services Incorporated Foundation (Ontario, Canada), and Pfizer (unrestricted medical education grant to N.D.S.). C.P.L. and A.J.S. were supported by the Oregon Comparative Effectiveness Research K12 Program (1K12HS019456 from the Agency for Healthcare Research and Quality). A.J.S. was also supported by the Medical Research Foundation of Oregon. The authors report no conflicts of interest.

Handoffs among hospital providers are highly error prone and can result in serious morbidity and mortality. Best practices for verbal handoffs have been described[1, 2, 3, 4] and include conducting verbal handoffs face to face, providing opportunities for questions, having the receiver perform a readback, as well as specific content recommendations including action items. Far less research has focused on best practices for printed handoff documents,[5, 6] despite the routine use of written handoff tools as a reference by on‐call physicians.[7, 8] Erroneous or outdated information on the written handoff can mislead on‐call providers, potentially leading to serious medical errors.

In their most basic form, printed handoff documents list patients for whom a provider is responsible. Typically, they also contain demographic information, reason for hospital admission, and a task list for each patient. They may also contain more detailed information on patient history, hospital course, and/or care plan, and may vary among specialties.[9] They come in various forms, ranging from index cards with handwritten notes, to word‐processor or spreadsheet documents, to printed documents that are autopopulated from the electronic health record (EHR).[2] Importantly, printed handoff documents supplement the verbal handoff by allowing receivers to follow along as patients are presented. The concurrent use of written and verbal handoffs may improve retention of clinical information as compared with either alone.[10, 11]

The Joint Commission requires an institutional approach to patient handoffs.[12] The requirements state that handoff communication solutions should take a standardized form, but they do not provide details regarding what data elements should be included in printed or verbal handoffs. Accreditation Council for Graduate Medical Education Common Program Requirements likewise require that residents must become competent in patient handoffs[13] but do not provide specific details or measurement tools. Absent widely accepted guidelines, decisions regarding which elements to include in printed handoff documents are currently made at an individual or institutional level.

The I‐PASS study is a federally funded multi‐institutional project that demonstrated a decrease in medical errors and preventable adverse events after implementation of a standardized resident handoff bundle.[14, 15] The I‐PASS Study Group developed a bundle of handoff interventions that included a handoff and teamwork training program (based in part on TeamSTEPPS [Team Strategies and Tools to Enhance Performance and Patient Safety]),[16] a novel verbal mnemonic, I‐PASS (Illness Severity, Patient Summary, Action List, Situation Awareness and Contingency Planning, and Synthesis by Receiver),[17] and changes to the verbal handoff process, in addition to several other elements.

We hypothesized that developing a standardized printed handoff template would reinforce the handoff training and enhance the value of the verbal handoff process changes. Given the paucity of data on best printed handoff practices, however, we first conducted a needs assessment to identify which data elements were currently contained in printed handoffs across sites, and to allow an expert panel to make recommendations for best practices.

METHODS

I‐PASS Study sites included 9 pediatric residency programs at academic medical centers from across North America. Programs were identified through professional networks and invited to participate. The nonintensive care unit hospitalist services at these medical centers are primarily staffed by residents and medical students with attending supervision. At 1 site, nurse practitioners also participate in care. Additional details about study sites can be found in the study descriptions previously published.[14, 15] All sites received local institutional review board approval.

We began by inviting members of the I‐PASS Education Executive Committee (EEC)[14] to build a collective, comprehensive list of possible data elements for printed handoff documents. This committee included pediatric residency program directors, pediatric hospitalists, education researchers, health services researchers, and patient safety experts. We obtained sample handoff documents from pediatric hospitalist services at each of 9 institutions in the United States and Canada (with protected health information redacted). We reviewed these sample handoff documents to characterize their format and to determine what discrete data elements appeared in each site's printed handoff document. Presence or absence of each data element across sites was tabulated. We also queried sites to determine the feasibility of including elements that were not presently included.

Subsequently, I‐PASS site investigators led structured group interviews at participating sites to gather additional information about handoff practices at each site. These structured group interviews included diverse representation from residents, faculty, and residency program leadership, as well as hospitalists and medical students, to ensure the comprehensive acquisition of information regarding site‐specific characteristics. Each group provided answers to a standardized set of open‐ended questions that addressed current practices, handoff education, simulation use, team structure, and the nature of current written handoff tools, if applicable, at each site. One member of the structured group interview served as a scribe and created a document that summarized the content of the structured group interview meeting and answers to the standardized questions.

Consensus on Content

The initial data collection also included a multivote process[18] of the full I‐PASS EEC to help prioritize data elements. Committee members brainstormed a list of all possible data elements for a printed handoff document. Each member (n=14) was given 10 votes to distribute among the elements. Committee members could assign more than 1 vote to an element to emphasize its importance.
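The tallying step of a multivote like the one described above can be sketched in a few lines of Python. This is only an illustration, not the committee's actual procedure or tooling: the function name `tally_multivote`, the ballot structure, and the element names are hypothetical, and the sketch assumes a member may use up to (rather than exactly) 10 votes.

```python
from collections import Counter

# From the text: each committee member had 10 votes to distribute.
VOTES_PER_MEMBER = 10


def tally_multivote(ballots):
    """Sum the votes each data element received across all members.

    `ballots` maps a member identifier to a dict of {element: votes}.
    A member may place more than 1 vote on a single element.
    Assumption: a ballot may use at most VOTES_PER_MEMBER votes.
    """
    totals = Counter()
    for member, allocation in ballots.items():
        if any(votes < 0 for votes in allocation.values()):
            raise ValueError(f"{member} cast a negative number of votes")
        if sum(allocation.values()) > VOTES_PER_MEMBER:
            raise ValueError(f"{member} used more than {VOTES_PER_MEMBER} votes")
        totals.update(allocation)  # adds this member's votes to the running totals
    return totals


if __name__ == "__main__":
    # Hypothetical ballots with made-up element names, for illustration only.
    example_ballots = {
        "member_a": {"patient name": 1, "medications": 4, "action items": 5},
        "member_b": {"patient name": 2, "allergies": 3, "action items": 5},
    }
    for element, votes in tally_multivote(example_ballots).most_common():
        print(f"{element}: {votes}")
```

Ranking elements by total votes gives the prioritized list that the expert panel could then review alongside the elements already present in each site's handoff tool.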

The results of this process, as well as the current data elements included in each printed handoff tool, were reviewed by a subgroup of the I‐PASS EEC. These expert panel members participated in a series of conference calls during which they tabulated categorical information, reviewed narrative comments, discussed existing evidence, and conducted simple content analysis to identify areas of concordance or discordance. Areas of discordance were discussed by the committee. Disagreements were resolved by group consensus, with attention to published evidence or best practices, if available.

Elements were divided into those that were essential (unanimous consensus, no conflicting literature) and those that were recommended (majority supported inclusion of element, no conflicting literature). Ratings were assigned using the American College of Cardiology/American Heart Association framework for practice guidelines,[19] in which each element is assigned a classification (I=effective, II=conflicting evidence/opinion, III=not effective) and a level of evidence to support that classification (A=multiple large randomized controlled trials, B=single randomized trial, or nonrandomized studies, C=expert consensus).
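The essential/recommended distinction described above can be written as a simple decision rule. The sketch below is illustrative only: the panel reached its decisions through discussion rather than a formal count, so the function `classify_element`, its parameters, and the reading of "majority" as strictly more than half of the panel are all assumptions made for the example.

```python
def classify_element(supporters, panel_size, conflicting_literature):
    """Classify a candidate data element using the rule stated in the text.

    essential   -> unanimous support and no conflicting literature
    recommended -> majority support and no conflicting literature
    Anything else is returned as "not classified" (a label assumed here).
    """
    if panel_size <= 0 or not 0 <= supporters <= panel_size:
        raise ValueError("supporters must be between 0 and panel_size")
    if conflicting_literature:
        return "not classified"
    if supporters == panel_size:
        return "essential"
    if supporters > panel_size / 2:  # assumption: majority means more than half
        return "recommended"
    return "not classified"


if __name__ == "__main__":
    # Hypothetical panel of 5 members.
    print(classify_element(5, 5, conflicting_literature=False))
    print(classify_element(3, 5, conflicting_literature=False))
```

The ACC/AHA classification and level of evidence are assigned separately and are not modeled in this sketch.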

The expert panel reached consensus, through active discussion, on a list of data elements that should be included in an ideal printed handoff document. Elements were chosen based on perceived importance, with attention to published best practices[1, 16] and the multivoting results. In making recommendations, consideration was given to whether data elements could be electronically imported into the printed handoff document from the EHR, or whether they would be entered manually. The potential for manual data entry errors to cause serious medical errors was an important consideration in the recommendations. The list of candidate elements was then reviewed by a larger group of investigators from the I‐PASS Education Executive Committee and Coordinating Council for additional input.

The panel asked site investigators from each participating hospital to gather data on the feasibility of redesigning the printed handoff at that hospital to include each recommended element. Site investigators reported whether each element was already included, possible to include but not included currently, or not currently possible to include within that site's printed handoff tool. Site investigators also reported how data elements were populated in their handoff documents, with options including: (1) autopopulated from administrative data (eg, pharmacy‐entered medication list, demographic data entered by admitting office), (2) autoimported from physicians' free‐text entries elsewhere in the EHR (eg, progress notes), (3) free text entered specifically for the printed handoff, or (4) not applicable (element cannot be included).
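The response categories in this feasibility survey map naturally onto two enumerations, one for feasibility and one for how an element is populated. The following sketch shows one way such site reports could be recorded and tabulated. The class names, field names, and sample records are hypothetical and are not taken from the study's data collection instruments.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Feasibility(Enum):
    INCLUDED = "already included"
    POSSIBLE = "possible to include but not included currently"
    NOT_POSSIBLE = "not currently possible to include"


class Population(Enum):
    ADMINISTRATIVE = "autopopulated from administrative data"
    IMPORTED_FREE_TEXT = "autoimported from free text elsewhere in the EHR"
    HANDOFF_FREE_TEXT = "free text entered specifically for the handoff"
    NOT_APPLICABLE = "not applicable (element cannot be included)"


@dataclass(frozen=True)
class SiteReport:
    site: str
    element: str
    feasibility: Feasibility
    population: Population


def summarize_feasibility(reports):
    """Count, for each element, how many sites gave each feasibility answer."""
    summary = {}
    for report in reports:
        summary.setdefault(report.element, Counter())[report.feasibility] += 1
    return summary


if __name__ == "__main__":
    # Made-up records for two hypothetical sites.
    reports = [
        SiteReport("site_1", "allergies", Feasibility.INCLUDED, Population.ADMINISTRATIVE),
        SiteReport("site_2", "allergies", Feasibility.POSSIBLE, Population.HANDOFF_FREE_TEXT),
    ]
    for element, counts in summarize_feasibility(reports).items():
        print(element, {answer.name: n for answer, n in counts.items()})
```

Keeping the population method alongside the feasibility answer makes it straightforward to see which elements depend on manual free-text entry at a given site.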

RESULTS