
Quality Tools: Root Cause Analysis (RCA) and Failure Modes and Effects Analysis (FMEA)

When we speak of “quality” in health care, we generally think of mortality outcomes or regulatory requirements mandated by the JCAHO (Joint Commission on Accreditation of Healthcare Organizations). But how do these relate to and affect our everyday lives as hospitalists? At the 8th Annual Meeting of SHM, we presented a workshop on RCA and FMEA that took a practical approach. It illustrated how these two JCAHO-required methodologies can improve patient care, and also the work environment for hospitalists, by addressing the systemic issues that can compromise care.

The workshop starts by stepping into the life of a hospitalist and something we all fear: “Something bad happens. Then what?” Depending on the severity of the event, the options include peer review, notifying the Department Chief, calling the Risk Manager, calling your lawyer, or doing nothing. You’ve probably had many experiences when “something wasn’t quite right,” but often there is no obvious bad outcome or obvious solution, so we shrug our shoulders and say, “Oh well, we got lucky this time; no harm, no foul.” The problem is, there are recurring patterns to these types of events, and the same issues may affect the next patient, who may not be so lucky.

Defining “Something Bad”

These types of cases, whose outcomes range from no effect on the patient to death, may be approached in several different ways. The terms “near miss” and “close call” refer to an incident in which a mistake was made but caught in time, so no harm was done to the patient. An example is a physician’s error on a medication order that is caught and corrected by a pharmacist or nurse.

When adverse outcomes do occur, define their etiologies so that you can identify and address the underlying causes. Is the outcome an expected or unexpected complication of therapy? Was an error involved? In asking these questions, remember that you can have harm without error and error without harm. Error is defined as “failure of a planned action to be completed as intended or use of a wrong plan to achieve an aim; the accumulation of errors results in accidents” (Kohn et al). This definition points out that a bad outcome usually results from a chain of events rather than from a single individual or event. The purpose of defining etiologies is not to assign blame. It is to identify the underlying issues and surrounding circumstances that may have contributed to the adverse outcome.

Significant adverse events are called “sentinel events,” defined as an “unexpected occurrence involving death or serious physical or psychological injury, or the risk thereof. Serious injury specifically includes loss of limb or function” (JCAHO 1998).
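The definitions in this section can be read as a small decision procedure. The Python below is a minimal sketch that assumes an event can be reduced to a few yes/no questions. The `Event` fields, the `classify` function, and the label strings are hypothetical names chosen for illustration. They are not a JCAHO taxonomy or tool.

```python
from dataclasses import dataclass


@dataclass
class Event:
    # Hypothetical yes/no answers a reviewer might record about an event.
    error: bool                    # failed planned action, or wrong plan used (Kohn et al)
    intercepted: bool = False      # error caught before it reached the patient
    harm: bool = False             # patient was harmed
    unexpected: bool = False       # outcome was not an expected complication
    serious_outcome: bool = False  # death or serious injury, or the risk thereof


def classify(e: Event) -> list[str]:
    """Return the labels from the article's definitions that apply to an event."""
    labels: list[str] = []
    if e.error and e.intercepted:
        labels.append("near miss")           # mistake caught in time, no harm done
    elif e.error and not e.harm:
        labels.append("error without harm")
    elif e.error:
        labels.append("harm with error")
    elif e.harm:
        labels.append("harm without error")  # e.g., a complication of therapy
    if e.unexpected and e.serious_outcome:
        labels.append("sentinel event")
    return labels


print(classify(Event(error=True, intercepted=True)))
print(classify(Event(error=False, harm=True)))
print(classify(Event(error=True, harm=True, unexpected=True, serious_outcome=True)))
```

The sketch only sorts events into the categories named above. The clinical judgment about whether an outcome was expected still has to come from the reviewers.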

How We Approach Error

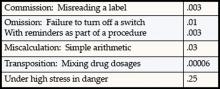

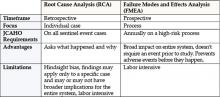

Unfortunately, as humans we are fallible and make errors quite reliably. Table 1 shows types of errors and their expected rates. For example, we make errors of omission with a probability of about 0.01 (1% of the time). The good news is that reminders or ticklers can reduce this rate to 0.003 (0.3%). When humans are under high stress in dangerous situations, however, research from the military indicates error rates of 25% (Salvendy 1997). In a complex ICU setting, researchers documented an average of 178 activities per patient per day, with an error rate of 0.95%. Despite this error rate of less than 1%, the 4-month observation period still yielded over 1000 errors, 29% of which were considered to have severe or potentially severe consequences (Donchin et al).
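The Donchin figures show why a sub-1% error rate still produces many errors. Below is a minimal sketch of the arithmetic. It assumes that each activity fails independently with the same probability, which is a simplification not made in the original study. The function names are hypothetical.

```python
def expected_errors(activities: int, error_rate: float) -> float:
    """Expected number of errors across a set of activities."""
    return activities * error_rate


def chance_of_any_error(activities: int, error_rate: float) -> float:
    """Probability that at least one activity goes wrong, assuming independence."""
    return 1 - (1 - error_rate) ** activities


# Donchin et al: about 178 activities per patient per day, error rate 0.95%
ACTIVITIES_PER_DAY = 178
ERROR_RATE = 0.0095

per_day = expected_errors(ACTIVITIES_PER_DAY, ERROR_RATE)
any_error = chance_of_any_error(ACTIVITIES_PER_DAY, ERROR_RATE)
print(f"Expected errors per patient per day: {per_day:.1f}")
print(f"Chance of at least one error per patient per day: {any_error:.0%}")
```

Under these assumptions, the sketch gives about 1.7 expected errors and roughly an 82% chance of at least one error per patient per day. This illustrates how small per-activity rates accumulate across a busy unit.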

The reality is that we err. Medical training instills the unrealistic expectation that we will be perfect in all our actions. That expectation perpetuates the blame cycle when the inevitable mistake occurs. It also keeps us from implementing solutions that prevent errors from occurring or catch them before they cause harm.

RCA and FMEA Help Us Create Solutions That Make a Difference

Briefly, Root Cause Analysis (RCA) is a retrospective investigation that JCAHO requires after a sentinel event: “Root cause analysis is a process for identifying the basic or causal factor(s) that underlies variation in performance, including the occurrence or possible occurrence of a sentinel event. A root cause is that most fundamental reason a problem―a situation where performance does not meet expectations―has occurred” (JCAHO 1998). An RCA looks back in time at an event and asks, “What happened?” Its utility lies in the fact that it asks not only what happened but also “Why did this happen?” rather than focusing on “Who is to blame?” Some hospitals use this methodology for cases that are not sentinel events. These investigations often uncover previously unrecognized system issues that negatively affect many departments, not just those involved in a particular case.

Failure Modes and Effects Analysis (FMEA) is a prospective investigation aimed at identifying vulnerabilities and preventing future failures. It looks forward and asks, “What could go wrong?” JCAHO also requires that an FMEA be performed yearly. These analyses focus on improving risky processes such as blood transfusion, chemotherapy, and the administration of other high-risk medications.

A clinical case clearly demonstrates the differences between RCA and FMEA. Imagine a 72-year-old woman admitted to your hospital with findings of an acute abdomen requiring surgery. She is a smoker with type 2 diabetes and an admission blood glucose of 465 mg/dL, but no evidence of DKA. She normally takes an oral hypoglycemic for her diabetes and an ACE inhibitor for high blood pressure, but no other medications. She is taken to the OR emergently, surgery seems to go well, and she is admitted to the ICU postoperatively. Her blood glucose subsequently ranges from 260 to 370 mg/dL and is “controlled” with sliding scale insulin. Within 18 hours of surgery she suffers an MI, and 4 days after surgery she develops a wound infection. She eventually dies from sepsis.

An RCA of this case might reveal causal factors such as the failure to give a beta-blocker preoperatively and the failure to use IV insulin to lower her blood glucose to the 80–110 mg/dL range. An RCA may identify the root cause of this adverse outcome, but it is limited by hindsight bias. It is also labor-intensive, and because it is an in-depth study of a single case, its findings may or may not apply broadly. However, RCAs do have the salutary effects of building teamwork and identifying needed changes. If carried out impartially without assigning blame, they can also foster a culture of patient safety.
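An RCA is a team investigation rather than an algorithm. Its core move, however, is asking “Why?” until no deeper cause is found, and that can be sketched as a walk through a cause map. The Python below is a hypothetical illustration. The `root_causes` function and the cause map are assumptions made for this example, populated only with the factors named in the case above. A real RCA would explore many more branches.

```python
def root_causes(outcome: str, causes: dict[str, list[str]]) -> list[str]:
    """Keep asking "why?" from an outcome; factors with no recorded cause are candidate root causes."""
    found: list[str] = []
    seen: set[str] = set()
    stack = [outcome]
    while stack:
        factor = stack.pop()
        if factor in seen:  # skip factors already explored (guards against loops)
            continue
        seen.add(factor)
        reasons = causes.get(factor, [])
        if reasons:
            stack.extend(reasons)  # ask "why?" about each contributing factor
        else:
            found.append(factor)   # no deeper answer recorded
    return found


# Hypothetical cause map built from the case discussed above.
case_causes = {
    "adverse outcome": ["postoperative MI", "wound infection"],
    "postoperative MI": ["no preoperative beta-blocker"],
    "wound infection": ["blood glucose 260-370"],
    "blood glucose 260-370": ["sliding scale instead of IV insulin"],
}

print(root_causes("adverse outcome", case_causes))
```

The result depends entirely on where the team stops asking “Why?” That is one reason hindsight bias and the search for system issues, rather than blame, matter so much in practice.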

FMEA takes a different approach and proactively aims to prevent failure. It is a systematic method of identifying and preventing product and process failures before they occur, and it does not require a specific case or adverse event. Instead, a high-risk process is chosen for study, and an interdisciplinary team asks, “What can go wrong with this process, and how can we prevent failures?” Returning to the case above, imagine that before it ever occurred, you, as a hospitalist concerned with patient safety, had decided to conduct an FMEA. The process studied might be controlling blood glucose in the ICU, or administering beta-blockers perioperatively to appropriate candidates.
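FMEA teams typically record their work in a worksheet. The worksheet lists each process step, how that step can fail, and the effect of the failure, and it tracks which failure modes still need a preventive design. Below is a minimal sketch in Python, assuming a simplified worksheet with no risk scoring. The `FailureMode` fields and the `open_items` helper are hypothetical, and the rows paraphrase the insulin-therapy failure modes discussed in the next paragraph.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    step: str             # process step under study
    failure: str          # what can go wrong at this step
    effect: str           # consequence for the patient
    prevention: str = ""  # fail-safe design; empty until the team assigns one


def open_items(worksheet: list[FailureMode]) -> list[FailureMode]:
    """Return failure modes that do not yet have a preventive design."""
    return [row for row in worksheet if not row.prevention.strip()]


# Hypothetical worksheet for intensive IV insulin therapy in the ICU.
insulin_fmea = [
    FailureMode("calculate insulin dose", "calculation or administration error",
                "10-fold difference in insulin dose"),
    FailureMode("prime IV tubing", "too little solution flushed through",
                "saline instead of insulin for hours, then hypoglycemia after up-titration"),
    FailureMode("titrate to 80-110 target", "glucose overshoots low", "hypoglycemia"),
]

for row in open_items(insulin_fmea):
    print(f"{row.step}: {row.failure} -> {row.effect}")
```

No case or adverse event is needed to fill in a worksheet like this. The team works from the process itself, which is the distinction drawn above.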

For example, using FMEA to study the process of intensive insulin therapy for tight glucose control in the ICU would identify potential barriers and failures that prevent successful implementation. A significant risk of tight glucose control in the 80–110 mg/dL range is hypoglycemia. Common pitfalls include administration and calculation errors that can produce 10-fold differences in insulin doses. Other details of administration, such as the type of IV tubing used and how it is primed, can greatly affect the amount of insulin delivered to the patient and thus the glucose level. If too little solution is flushed through to prime the tubing, the patient may receive saline rather than insulin for a few hours. Glucose levels are then higher than expected, and the insulin is titrated to higher doses. When insulin finally reaches the patient at the higher rate, the result can be an unexpectedly low glucose several hours later. After failure modes such as these are identified, a fail-safe system can be designed to make failures less likely.
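One fail-safe for the calculation errors described above is an independent recalculation of the pump rate before the infusion starts. The sketch below assumes a hypothetical infusion concentration expressed in units/mL and a hypothetical tolerance. The function names and numbers are illustrative only and are not dosing guidance. The example shows how a slipped decimal point appears as a 10-fold mismatch, which the check rejects.

```python
def rate_ml_per_h(dose_units_per_h: float, conc_units_per_ml: float) -> float:
    """Convert an ordered insulin dose (units/h) into a pump rate (mL/h)."""
    if dose_units_per_h <= 0 or conc_units_per_ml <= 0:
        raise ValueError("dose and concentration must be positive")
    return dose_units_per_h / conc_units_per_ml


def fold_difference(a: float, b: float) -> float:
    """How many times larger the bigger value is than the smaller one."""
    if a <= 0 or b <= 0:
        raise ValueError("rates must be positive")
    return max(a, b) / min(a, b)


def rate_matches_order(programmed_ml_per_h: float, dose_units_per_h: float,
                       conc_units_per_ml: float, tolerance_fold: float = 1.1) -> bool:
    """Independent double check: accept the pump setting only if it matches the order."""
    expected = rate_ml_per_h(dose_units_per_h, conc_units_per_ml)
    return fold_difference(programmed_ml_per_h, expected) <= tolerance_fold


# Hypothetical order: 2 units/h from a bag labeled 1 unit/mL, i.e. 2 mL/h.
print(rate_matches_order(2.0, dose_units_per_h=2.0, conc_units_per_ml=1.0))   # correct entry
print(rate_matches_order(20.0, dose_units_per_h=2.0, conc_units_per_ml=1.0))  # slipped decimal
```

The check compares ratios rather than absolute differences, so a 10-fold error is flagged the same way at any dose. A check like this addresses only calculation errors. The tubing-priming failure mode needs a different fail-safe.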

The advantages of FMEA include its focus on system design rather than on a single incident, as in RCA. Because FMEA focuses on systems and processes, the lessons learned and the changes implemented are likely to affect a larger number of patients.

Summary and Discussion

To summarize, RCA is retrospective and dissects a case, while FMEA is prospective and dissects a process. It is important to remember that, given the right set of circumstances, any physician can make a mistake. It makes sense to apply methodologies that probe surrounding circumstances and contributing factors. The knowledge gained can then prevent the same mistakes from happening to other individuals and can have a broader impact on health care systems.

Resources

- www.patientsafety.gov: VA National Center for Patient Safety. Excellent website with very helpful, practical tools.

- www.ihi.org: Institute for Healthcare Improvement website. Has a nice FMEA toolkit.

- www.jcaho.com: Joint Commission on Accreditation of Healthcare Organizations website. Has information on sentinel events and use of RCA.

Bibliography

- Kohn LT, Corrigan JM, Donaldson MS, eds. To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Press; 1999.

- Joint Commission on Accreditation of Healthcare Organizations. Sentinel Events: Evaluating Cause and Planning Improvement. 1998. Library of Congress catalog number 97-80531.

- Salvendy G, ed. Handbook of Human Factors and Ergonomics. New York: John Wiley & Sons; 1997:163.

- Donchin Y, Gopher D, Olin M, et al. A look into the nature and causes of human errors in the intensive care unit. Crit Care Med. 1995;23:294-300.

- McNutt R, Abrams R, Hasler S, et al. Determining medical error: three case reports. Eff Clin Pract. 2002;5:23-8.

- Senders JW. FMEA and RCA: the mantras of modern risk management. Qual Saf Health Care. 2004;13:249-50.

- Spath PL. Investigating Sentinel Events: How to Find and Resolve Root Causes. Forest Grove, OR: Brown Spath and Associates; 1997.

- Wald H, Shojania KG. Root cause analysis. In: Shojania KG, McDonald KM, Wachter RM, eds. Making Health Care Safer: A Critical Analysis of Patient Safety Practices. Evidence Report/Technology Assessment No. 43, AHRQ Publication No. 01-E058; July 2001. Available at http://www.ahrq.gov.

- Woodhouse S, Burney B, Coste K. To err is human: improving patient safety through failure mode and effect analysis. Clin Leadersh Manag Rev. 2004;18:32-6.
