
The Design and Evaluation of the Comprehensive Hospitalist Assessment and Mentorship with Portfolios (CHAMP) Ultrasound Program

Point-of-care ultrasound (POCUS) is a valuable tool to assist in the diagnosis and treatment of many common diseases.1-11 Its clinical use has increased over the years, primarily because of more portable, economical, high-quality devices and greater availability of training.12 POCUS improves procedural success and guides the diagnostic management of hospitalized patients.2,9-12 The literature describes the training of medical students,13,14 residents,15-21 and providers in emergency medicine22 and critical care,23,24 as well as focused cardiac training for hospitalists.25-27 However, no published literature describes a comprehensive longitudinal training program for hospitalists or addresses the retention of their skills.

This article reports on the hospital medicine department’s ultrasound training program at Regions Hospital, a large tertiary care medical center that is part of HealthPartners in Saint Paul, Minnesota. We describe the development and effectiveness of the Comprehensive Hospitalist Assessment and Mentorship with Portfolios (CHAMP) Ultrasound Program to support the development of POCUS training programs at other organizations.

The aim of the program was to build a comprehensive bedside ultrasound training paradigm for hospitalists. The primary objective of the study was to assess the program’s effect on skills over time. Secondary objectives were to assess self-reported confidence in the use of ultrasound and in several patient care domains (volume management, quality of physical exam, and ability to narrow the differential diagnosis). We hypothesized that skills retention would be higher among those who completed portfolios and/or attended monthly scanning sessions and that confidence would increase across all secondary outcome measures (see below).

MATERIALS AND METHODS

This was a retrospective descriptive report of hospitalists who entered the CHAMP Ultrasound Program. Study participants were providers from the 454-bed Regions Hospital in Saint Paul, Minnesota. The study was deemed exempt by the HealthPartners Institutional Review Board. Three discrete 3-day courses and two 1-day in-person courses held at the Regions Hospital Simulation Center (Saint Paul, Minnesota) were studied.

Program Description

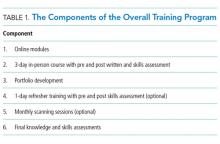

In 2014, a working group was formed in the hospital medicine department to support the hospital-wide POCUS committee, with a charter to provide standardized training that would allow providers to complete credentialing.28 The goal of the hospital medicine ultrasound program was to integrate the use of ultrasound by credentialed hospitalists into well-defined applications within the practice of hospital medicine. Two providers were selected to lead the effort and completed additional training through the American College of Chest Physicians (CHEST) Certificate of Completion Program.29 An overall director was designated, with the responsibilities delineated in supplementary Appendix 1. This director provided leadership on group practice, protocols, and equipment, creating the organizational framework for a successful training program. The hospital medicine training program had a 3-day in-person component built on the CHEST Critical Care Ultrasonography Program.24 The curriculum was adapted from the American College of Chest Physicians/Société de Réanimation de Langue Française Statement on Competence in Critical Care Ultrasonography.30 See Table 1 for the components of the training program.

Online Modules

3-Day In-Person Course with Assessments

The 3-day course provided 6 hours of didactics, 8 hours of image interpretation, and 9 hours of hands-on instruction (supplementary Appendix 4). Hospitalists first attended a large group didactic, followed by divided groups in image interpretation and hands-on scanning.24

Didactics were delivered in a room with a 2-screen setup: 1 screen was used to present the primary content and the other to display simultaneous scanning of a human model.

Image interpretation sessions were interactive small-group forums in which participants reviewed high-yield images relevant to the care of hospital medicine patients and received feedback. Approximately 45 videos with normal and abnormal findings were reviewed during each session.

The hands-on scanning component was accomplished with human models and a faculty-to-participant ratio between 1:2 and

Portfolios

Refresher Training: 1-Day In-Person Course with Assessments and Monthly Scanning Sessions (Optional)

Only hospitalists who had completed the 3-day course were eligible for the 1-day in-person refresher course (supplementary Appendix 5). The first half of the course involved scanning live human models, and the second half involved scanning hospitalized patients with pathology (pleural effusion, hydronephrosis, reduced left ventricular function, etc.). The course was offered at 3, 6, and 12 months after the initial 3-day course.

Monthly scanning sessions were held for 2 hours on the third Friday of each month and were also available before the 1-day refresher course. The first 90 minutes consisted of hands-on scanning of hospitalized patients under faculty supervision (faculty-to-participant ratio 1:2), and the last 30 minutes consisted of image interpretation.

Assessments

Knowledge and skills assessments were adapted from the CHEST model (supplementary Appendix 6).24 The same checklist-based hands-on skills assessment was administered before and after both the 3-day and the 1-day in-person courses (supplementary Appendix 7). A written knowledge assessment with case-based image interpretation was administered before and after the 3-day course (supplementary Appendix 6).

Measurement

Participant demographic and clinical information, including age, gender, specialty, years of experience, and the number and type of ultrasound procedures personally conducted or supervised in the past year, was collected from all participants at the initial 3-day course. For the skills assessment, a 20-item dichotomous checklist was developed, with each item scored as done correctly or as not done/done incorrectly. This same assessment was administered before and after both the 3-day and the 1-day courses. A 20-question image-based knowledge assessment was also developed and administered before and after the 3-day course only. The same 20-item checklist was used for the final skills examination; for the final knowledge examination, however, a new, more detailed 50-question examination was written and administered after the portfolio of images was complete. Self-reported measures were confidence in ultrasound use, confidence in volume management, quality of physical exam, and ability to narrow the differential diagnosis. Confidence in ultrasound use, confidence in volume management, and quality of physical exam were assessed with a questionnaire before and after both the 3-day and the 1-day courses. Participants rated confidence and quality on a 5-point scale, from 1 (least confident) to 5 (most confident).

Statistical Analysis

Demographics of the included hospitalists and the pre- and post-3-day course assessments, including knowledge score, skills score, confidence in ultrasound use, confidence in volume management, and quality of physical exam, were summarized. All assessment variables are presented as percentages: confidence scores as a percentage of the maximum Likert rating (eg, 4/5 was reported as 80%) and skills and written examination scores as the percentage of items correct. Data are reported as medians and interquartile ranges or as means and standard deviations, depending on the variable distribution. Differences between pre- and postvalues for the 3-day course variables were assessed with paired Wilcoxon signed rank tests at a 95% confidence level.
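As a minimal sketch of the scoring conventions described above, the Python functions below convert a 20-item dichotomous checklist to percent correct, convert a 1 to 5 rating to a percentage of the scale maximum (so 4/5 becomes 80%), and summarize scores as a median with interquartile range. The function names are hypothetical, and the quartile method (the default "exclusive" method of Python's `statistics.quantiles`) is an assumption; the article does not state which software or quartile definition was used.

```python
from statistics import median, quantiles


def checklist_percent(items: list[bool]) -> float:
    """Percent of checklist items scored as done correctly (hypothetical helper).

    True = done correctly; False = not done or done incorrectly.
    """
    if not items:
        raise ValueError("checklist must contain at least one item")
    return 100.0 * sum(items) / len(items)


def likert_percent(rating: int, scale_max: int = 5) -> float:
    """Express a 1..scale_max rating as a percentage of the scale maximum (4/5 -> 80%)."""
    if not 1 <= rating <= scale_max:
        raise ValueError(f"rating must be between 1 and {scale_max}")
    return 100.0 * rating / scale_max


def median_iqr(values: list[float]) -> tuple[float, float, float]:
    """Return (median, first quartile, third quartile); needs at least 2 values."""
    q1, _, q3 = quantiles(values, n=4)  # default method="exclusive" (an assumption)
    return median(values), q1, q3


# Hypothetical example: 15 of 20 checklist items done correctly, confidence rated 4/5.
print(checklist_percent([True] * 15 + [False] * 5))  # 75.0
print(likert_percent(4))  # 80.0
```

Note that under this convention the lowest possible rating (1/5) maps to 20%, not 0%, exactly as the 4/5 = 80% example implies.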

For the subset of hospitalists who also completed the 1-day course, the pre- and post-1-day course assessments, including skills score, confidence in ultrasound use, confidence in volume management, and quality of physical exam, were summarized. Differences between pre- and postvalues for the 1-day course variables were assessed with paired Wilcoxon signed rank tests at a 95% confidence level.
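The paired Wilcoxon signed rank test named above can be illustrated with a standard-library Python sketch. It drops zero differences, assigns average ranks to tied absolute differences, and uses the normal approximation with a tie correction to obtain a two-sided P value. These handling choices are assumptions: statistical packages may instead use an exact distribution for small samples, so results can differ slightly from the study's software. The function name and example scores are hypothetical.

```python
import math
from statistics import NormalDist


def wilcoxon_signed_rank(pre: list[float], post: list[float]) -> tuple[float, float, float]:
    """Two-sided paired Wilcoxon signed rank test (normal approximation).

    Returns (W+, z, p), where W+ is the sum of ranks of positive post - pre differences.
    Zero differences are dropped and tied absolute differences get average ranks.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post scores must be paired (same length)")
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank |differences| from smallest to largest, averaging ranks within ties.
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    tie_term = 0.0  # sum of (t^3 - t) over tie groups, used in the variance correction
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j + 2) / 2  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    expected = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - expected) / math.sqrt(variance)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return w_plus, z, p


# Hypothetical pre/post skills scores (percent correct) for 8 hospitalists.
pre = [45, 50, 55, 40, 60, 50, 35, 65]
post = [80, 85, 75, 70, 90, 80, 75, 85]
w_plus, z, p = wilcoxon_signed_rank(pre, post)
print(f"W+ = {w_plus}, z = {z:.2f}, two-sided P = {p:.4f}")
```

Because each participant serves as their own control, the test ranks within-person changes rather than comparing 2 independent groups, which is why it suits the pre/post design.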

Multiple linear regression was performed with the change in skills assessment score from the end of the 3-day course to the start of the 1-day course as the dependent variable. For this analysis, hospitalists were split into 2 age groups (30-39 and 40-49 years). The percent of monthly scanning sessions attended, age category, timing of the 1-day course, and percent of the portfolio completed were each assessed as possible predictors of the change in skills score by using simple linear regression with a P = .05 cutoff. The final model included the predictors that were significant in simple linear regression: the percent of the portfolio completed and the percent of monthly scanning sessions attended.
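The final 2-predictor model can be sketched as ordinary least squares solved through the normal equations. Dividing each percentage predictor by 10 makes each slope the expected change in the outcome per 10-percentage-point increase, which matches how the coefficients are reported in Results. This is a hypothetical illustration that returns point estimates only; P values for the screening and final models require standard errors, which a statistics package would normally supply. The function name and the noise-free example data are invented, and the generating coefficients are borrowed from Results only so that the recovered slopes are recognizable.

```python
def fit_ols(rows: list[list[float]], y: list[float]) -> list[float]:
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    Each row holds one participant's predictor values; an intercept column is
    prepended. Returns [intercept, slope_1, slope_2, ...].
    """
    X = [[1.0, *row] for row in rows]
    k = len(X[0])
    xtx = [[sum(x[i] * x[j] for x in X) for j in range(k)] for i in range(k)]
    xty = [sum(x[i] * yi for x, yi in zip(X, y)) for i in range(k)]

    # Gauss-Jordan elimination with partial pivoting on the augmented matrix [X'X | X'y].
    a = [row + [v] for row, v in zip(xtx, xty)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear; X'X is singular")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [rv - factor * cv for rv, cv in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


# Hypothetical, noise-free data generated from known coefficients.
attended_pct = [0, 50, 100, 25, 75]   # percent of monthly sessions attended
portfolio_pct = [0, 20, 40, 80, 100]  # percent of portfolio completed
change = [-20 + 3.7 * a / 10 + 2.5 * p / 10 for a, p in zip(attended_pct, portfolio_pct)]

# Scale predictors by 1/10 so each slope is "per 10 percentage points".
rows = [[a / 10, p / 10] for a, p in zip(attended_pct, portfolio_pct)]
intercept, per10_sessions, per10_portfolio = fit_ols(rows, change)
print(f"{per10_sessions:.2f} per 10 points of sessions, {per10_portfolio:.2f} per 10 points of portfolio")
```

With real, noisy data the recovered slopes would only approximate the true effects, and the screening step would compare each predictor's simple-regression P value with the .05 cutoff before fitting this joint model.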

RESULTS

Demographics

3-Day In-Person Course

For the 53 hospitalists who completed skills-based assessments, performance increased significantly after the 3-day course. Knowledge scores also increased significantly from preassessment to postassessment, as did self-reported confidence in ultrasound use, confidence in volume management, and quality of physical exam (Table 2).

Refresher Training: 1-Day In-Person Course

Because the refresher training was encouraged but not required, only 25 of the 53 hospitalists completed the 1-day course, and 23 of these had complete data. For the 23 hospitalists who completed skills-based assessments before and after the 1-day course, mean skills scores increased significantly (Table 2). Self-reported confidence in ultrasound use, confidence in volume management, and quality of physical exam also increased significantly from preassessment to postassessment (Table 2).

Monthly Scanning Sessions and Portfolio Development

Skills retention from the initial course to the refresher course, by portfolio completion and monthly scanning session attendance, is shown in Table 2. Multiple regression analysis showed that for every 10% increase in the percent of monthly sessions attended, the mean change in skills score was 3.7% higher (P = .017), and for every 10% increase in the percent of portfolio completed, it was 2.5% higher (P = .04). Both monthly scanning session attendance and portfolio completion were therefore significant predictors of skills retention over time.
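As a worked illustration of these coefficients, assume the fitted model is additive in the 2 predictors and read each "10% increase" as 10 percentage points. Writing a for the percent of monthly sessions attended and p for the percent of the portfolio completed, the predicted difference in mean skills-score change between 2 hospitalists who differ by Δa and Δp is given below, in the same units as the reported coefficients, with a hypothetical example of Δa = 50 and Δp = 40.

```latex
\Delta\hat{y} = 3.7\,\frac{\Delta a}{10} + 2.5\,\frac{\Delta p}{10}
\qquad\Longrightarrow\qquad
\Delta\hat{y}\,\Big|_{\Delta a = 50,\ \Delta p = 40} = 3.7(5) + 2.5(4) = 18.5 + 10.0 = 28.5
```

This is a linear extrapolation of point estimates and ignores the uncertainty around each coefficient.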

Final Assessments

DISCUSSION

This is the first description of a successful longitudinal training program with assessments in POCUS for hospital medicine providers that shows an increase in skill retention with the use of a follow-up course and bedside scanning.

The CHAMP Ultrasound Program was developed to provide hospital medicine clinicians with a specialty-focused, in-house training pathway in POCUS and to support sustained skills acquisition through regular opportunities for feedback and practice. Practice with regular expert feedback is critical to developing and maintaining POCUS skills.32,33 Arntfield34 described the utility of remote supervision with feedback for ultrasound training in critical care, which demonstrated varying learning curves in the submission of portfolio images.35,36 The CHAMP Ultrasound training program provided expert oversight, longitudinal supervision, and feedback for course participants. We employed mastery learning, setting minimum standards and allowing learners to practice until they met them.37-39

This unique program is made possible by the availability of expert-level faculty. Assessment scores improved after the initial 3-day course but decayed over time, most prominently among hospitalists who did not continue POCUS scanning after the initial course. Paradoxically, those who performed more ultrasounds in the year before the 3-day course had lower confidence ratings, likely because they were more aware of their limitations and opportunities for improvement. Refresher training to supplement the core 3-day course and portfolio development are the key additions that differentiate this training program. These additions, and the demonstrated success of the training, make this a durable pathway for other hospitalist programs. Many workshops and short courses in POCUS exist for medical students, residents, and practicing providers.40-43 However, without opportunities for longitudinal supervision and feedback, participants' skills decline. The 2 components of the refresher training (the 1-day in-person course and monthly scanning sessions) provide evidence of the value of mentored training.

In the initial program development, refresher training was encouraged but optional, and we intentionally tracked those who completed it and those who did not. Because the results showed significant skills retention among those attending some form of refresher training, we plan to make refresher training a program requirement. We recommend refresher training within 12 months of the initial introductory course. Because several hospitalists were unable to attend a full-day refresher course, monthly scanning sessions were offered as an alternative.

The main limitation of the study is that it was conducted in a single hospital system with available POCUS training mentors. This enabled longitudinal training but may limit reproducibility in other hospital systems. Another limitation is that participants did not complete pre- and postknowledge assessments for the refresher training components of the program, although they did for the initial 3-day course. Our pre- and postassessments have not been externally validated, although they are based on the previously validated CHEST ultrasound assessment tool.44

Finally, our CHAMP Ultrasound Program required a significant time commitment from both faculty and learners, and a relatively small percentage of hospitalists have completed the final assessments. The reasons are multifactorial and include program rigor, the desire of some hospitalists to learn the basics without pursuing further expertise, and the challenge of developing a skill set that requires dedicated practice over time. We have aimed to address these barriers by providing additional hands-on scanning opportunities, giving timely feedback on portfolios, and obtaining more ultrasound machines. We expect more hospitalists to complete the final assessments in the coming year, given the portfolio submissions to the shared online portal and the many hospitalists attending the monthly scanning sessions, the 1-day course, or both. We recognize that other institutions may need to adapt our program to suit their local environment.

CONCLUSION

A comprehensive longitudinal ultrasound training program including competency assessments significantly improved ultrasound acquisition skills among hospitalists. Those who attended monthly scanning sessions, participated in portfolio completion, and took a refresher course significantly retained and augmented their skills.

Acknowledgments

The authors would like to acknowledge Kelly Logue, Jason Robertson, MD, Jerome Siy, MD, Shauna Baer, and Jack Dressen for their support in the development and implementation of the POCUS program in hospital medicine.

Disclosure

The authors do not have any relevant financial disclosures to report.

1. Spevack R, Al Shukairi M, Jayaraman D, Dankoff J, Rudski L, Lipes J. Serial lung and IVC ultrasound in the assessment of congestive heart failure. Crit Ultrasound J. 2017;9:7-13. PubMed

2. Soni NJ, Franco R, Velez M, et al. Ultrasound in the diagnosis and management of pleural effusions. J Hosp Med. 2015 Dec;10(12):811-816. PubMed

3. Boyd JH, Sirounis D, Maizel J, Slama M. Echocardiography as a guide for fluid management. Crit Care. 2016;20(1):274-280. PubMed

4. Mantuani D, Frazee BW, Fahimi J, Nagdev A. Point-of-care multi-organ ultrasound improves diagnostic accuracy in adults presenting to the emergency department with acute dyspnea. West J Emerg Med. 2016;17(1):46-53. PubMed

5. Glockner E, Christ M, Geier F, et al. Accuracy of Point-of-Care B-Line Lung Ultrasound in Comparison to NT-ProBNP for Screening Acute Heart Failure. Ultrasound Int Open. 2016;2(3):E90-E92. PubMed

6. Bhagra A, Tierney DM, Sekiguchi H, Soni NJ. Point-of-Care Ultrasonography for Primary Care Physicians and General Internists. Mayo Clin Proc. 2016 Dec;91(12):1811-1827. PubMed

7. Crisp JG, Lovato LM, Jang TB. Compression ultrasonography of the lower extremity with portable vascular ultrasonography can accurately detect deep venous thrombosis in the emergency department. Ann Emerg Med. 2010;56(6):601-610. PubMed

8. Squire BT, Fox JC, Anderson C. ABSCESS: applied bedside sonography for convenient evaluation of superficial soft tissue infections. Acad Emerg Med. 2005;12(7):601-606. PubMed

9. Narasimhan M, Koenig SJ, Mayo PH. A Whole-Body Approach to Point of Care Ultrasound. Chest. 2016;150(4):772-776. PubMed

10. Copetti R, Soldati G, Copetti P. Chest sonography: a useful tool to differentiate acute cardiogenic pulmonary edema from acute respiratory distress syndrome. Cardiovasc Ultrasound. 2008;6:16-25. PubMed

11. Soni NJ, Arntfield R, Kory P. Point of Care Ultrasound. Philadelphia: Elsevier Saunders; 2015.

12. Moore CL, Copel JA. Point-of-Care Ultrasonography. N Engl J Med. 2011;364(8):749-757. PubMed

13. Rempell JS, Saldana F, DiSalvo D, et al. Pilot Point-of-Care Ultrasound Curriculum at Harvard Medical School: Early Experience. West J Emerg Med. 2016;17(6):734-740. doi:10.5811/westjem.2016.8.31387. PubMed

14. Heiberg J, Hansen LS, Wemmelund K, et al. Point-of-Care Clinical Ultrasound for Medical Students. Ultrasound Int Open. 2015;1(2):E58-E66. doi:10.1055/s-0035-1565173. PubMed

15. Razi R, Estrada JR, Doll J, Spencer KT. Bedside hand-carried ultrasound by internal medicine residents versus traditional clinical assessment for the identification of systolic dysfunction in patients admitted with decompensated heart failure. J Am Soc Echocardiogr. 2011;24(12):1319-1324. PubMed

16. Alexander JH, Peterson ED, Chen AY, Harding TM, Adams DB, Kisslo JA Jr. Feasibility of point-of-care echocardiography by internal medicine house staff. Am Heart J. 2004;147(3):476-481. PubMed

17. Hellmann DB, Whiting-O’Keefe Q, Shapiro EP, Martin LD, Martire C, Ziegelstein RC. The rate at which residents learn to use hand-held echocardiography at the bedside. Am J Med. 2005;118(9):1010-1018. PubMed

18. Kimura BJ, Amundson SA, Phan JN, Agan DL, Shaw DJ. Observations during development of an internal medicine residency training program in cardiovascular limited ultrasound examination. J Hosp Med. 2012;7(7):537-542. PubMed

19. Akhtar S, Theodoro D, Gaspari R, et al. Resident training in emergency ultrasound: consensus recommendations from the 2008 Council of Emergency Medicine Residency Directors Conference. Acad Emerg Med. 2009;16(s2):S32-S36. PubMed

20. Can emergency medicine residents detect acute deep venous thrombosis with a limited, two-site ultrasound examination? J Emerg Med. 2007;32(2):197-200. PubMed

21. Resident-performed compression ultrasonography for the detection of proximal deep vein thrombosis: fast and accurate. Acad Emerg Med. 2004;11(3):319-322. PubMed

22. Mandavia D, Aragona J, Chan L, et al. Ultrasound training for emergency physicians—a prospective study. Acad Emerg Med. 2000;7(9):1008-1014. PubMed

23. Koenig SJ, Narasimhan M, Mayo PH. Thoracic ultrasonography for the pulmonary specialist. Chest. 2011;140(5):1332-1341. doi:10.1378/chest.11-0348. PubMed

24. Greenstein YY, Littauer R, Narasimhan M, Mayo PH, Koenig SJ. Effectiveness of a Critical Care Ultrasonography Course. Chest. 2017;151(1):34-40. doi:10.1016/j.chest.2016.08.1465. PubMed

25. Martin LD, Howell EE, Ziegelstein RC, Martire C, Shapiro EP, Hellmann DB. Hospitalist performance of cardiac hand-carried ultrasound after focused training. Am J Med. 2007;120(11):1000-1004. PubMed

26. Martin LD, Howell EE, Ziegelstein RC, et al.

27. Lucas BP, Candotti C, Margeta B, et al. Diagnostic accuracy of hospitalist-performed hand-carried ultrasound echocardiography after a brief training program. J Hosp Med. 2009;4(6):340-349. PubMed

28.

29. Critical Care Ultrasonography Certificate of Completion Program. American College of Chest Physicians. http://www.chestnet.org/Education/Advanced-Clinical-Training/Certificate-of-Completion-Program/Critical-Care-Ultrasonography. Accessed March 30, 2017.

30. Mayo PH, Beaulieu Y, Doelken P, et al. American College of Chest Physicians/Société de Réanimation de Langue Française statement on competence in critical care ultrasonography. Chest. 2009;135(4):1050-1060. PubMed

31. Donlon TF, Angoff WH. The scholastic aptitude test. The College Board Admissions Testing Program; 1971:15-47.

32. Ericsson KA, Lehmann AC. Expert and exceptional performance: Evidence of maximal adaptation to task constraints. Annu Rev Psychol. 1996;47:273-305. PubMed

33. Ericsson KA, Krampe RT, Tesch-Römer C. The role of deliberate practice in the acquisition of expert performance. Psychol Rev. 1993;100(3):363-406.

34. Arntfield RT. The utility of remote supervision with feedback as a method to deliver high-volume critical care ultrasound training. J Crit Care. 2015;30(2):441.e1-e6. PubMed

35. Ma OJ, Gaddis G, Norvell JG, Subramanian S. How fast is the focused assessment with sonography for trauma examination learning curve? Emerg Med Australas. 2008;20(1):32-37. PubMed

36. Gaspari RJ, Dickman E, Blehar D. Learning curve of bedside ultrasound of the gallbladder. J Emerg Med. 2009;37(1):51-66. doi:10.1016/j.jemermed.2007.10.070. PubMed

37. Barsuk JH, McGaghie WC, Cohen ER, Balachandran JS, Wayne DB. Use of simulation-based mastery learning to improve quality of central venous catheter placement in a medical intensive care unit. J Hosp Med. 2009;4(7):397-403. PubMed

38. McGaghie WC, Issenberg SB, Cohen ER, Barsuk JH, Wayne DB. A critical review of simulation-based mastery learning with translational outcomes. Med Educ. 2014;48(4):375-385. PubMed

39. Guskey TR. The essential elements of mastery learning. J Classroom Interac. 1987;22:19-22.

40. Ultrasound Institute. Introduction to Primary Care Ultrasound. University of South Carolina School of Medicine. http://ultrasoundinstitute.med.sc.edu/UIcme.asp. Accessed October 24, 2017.

41. Society of Critical Care Medicine. Live Critical Care Ultrasound: Adult. http://www.sccm.org/Education-Center/Ultrasound/Pages/Fundamentals.aspx. Accessed October 24, 2017.

42. Castlefest Ultrasound Event. Castlefest 2018. http://castlefest2018.com/. Accessed October 24, 2017.

43. Office of Continuing Medical Education. Point of Care Ultrasound Workshop. UT Health San Antonio Joe R. & Teresa Lozano Long School of Medicine. http://cme.uthscsa.edu/ultrasound.asp. Accessed October 24, 2017.

44. Patrawalla P, Eisen LA, Shiloh A, et al. Development and Validation of an Assessment Tool for Competency in Critical Care Ultrasound. J Grad Med Educ. 2015;7(4):567-573. PubMed

Point-of-care ultrasound (POCUS) is a valuable tool to assist in the diagnosis and treatment of many common diseases.1-11 Its use has increased in clinical settings over the years, primarily because of more portable, economical, high-quality devices and training availability.12 POCUS improves procedural success and guides the diagnostic management of hospitalized patients.2,9-12 Literature details the training of medical students,13,14 residents,15-21 and providers in emergency medicine22 and critical care,23,24 as well as focused cardiac training with hospitalists.25-27 However, no literature exists describing a comprehensive longitudinal training program for hospitalists or skills retention.

This document details the hospital medicine department’s ultrasound training program from Regions Hospital, part of HealthPartners in Saint Paul, Minnesota, a large tertiary care medical center. We describe the development and effectiveness of the Comprehensive Hospitalist Assessment and Mentorship with Portfolios (CHAMP) Ultrasound Program. This approach is intended to support the development of POCUS training programs at other organizations.

The aim of the program was to build a comprehensive bedside ultrasound training paradigm for hospitalists. The primary objective of the study was to assess the program’s effect on skills over time. Secondary objectives were confidence ratings in the use of ultrasound and with various patient care realms (volume management, quality of physical exam, and ability to narrow the differential diagnosis). We hypothesized there would be higher retention of ultrasound skills in those who completed portfolios and/or monthly scanning sessions as well as increased confidence through all secondary outcome measures (see below).

MATERIALS AND METHODS

This was a retrospective descriptive report of hospitalists who entered the CHAMP Ultrasound Program. Study participants were providers from the 454-bed Regions Hospital in Saint Paul, Minnesota. The study was deemed exempt by the HealthPartners Institutional Review Board. Three discrete 3-day courses and two 1-day in-person courses held at the Regions Hospital Simulation Center (Saint Paul, Minnesota) were studied.

Program Description

In 2014, a working group was developed in the hospital medicine department to support the hospital-wide POCUS committee with a charter to provide standardized training for providers to complete credentialing.28 The goal of the hospital medicine ultrasound program was to establish the use of ultrasound by credentialed hospitalists into well-defined applications integrated into the practice of hospital medicine. Two providers were selected to lead the efforts and completed additional training through the American College of Chest Physicians (CHEST) Certificate of Completion Program.29 An overall director was designated with the responsibilities delineated in supplementary Appendix 1. This director provided leadership on group practice, protocols, and equipment, creating the organizational framework for success with the training program. The hospital medicine training program had a 3-day in-person component built off the CHEST Critical Care Ultrasonography Program.24 The curriculum was adapted from the American College of Chest Physicians/Société de Réanimation de Langue Française Statement on Competence in Critical Care Ultrasonography.30 See Table 1 for the components of the training program.

Online Modules

3-Day In-Person Course with Assessments

The 3-day course provided 6 hours of didactics, 8 hours of image interpretation, and 9 hours of hands-on instruction (supplementary Appendix 4). Hospitalists first attended a large group didactic, followed by divided groups in image interpretation and hands-on scanning.24

Didactics were provided in a room with a 2-screen set up. Providers used 1 screen to present primary content and the other for simultaneously scanning a human model.

Image interpretation sessions were interactive smaller group learning forums in which participants reviewed high-yield images related to the care of hospital medicine patients and received feedback. Approximately 45 videos with normal and abnormal findings were reviewed during each session.

The hands-on scanning component was accomplished with human models and a faculty-to-participant ratio between 1:2 and

Portfolios

Refresher Training: 1-Day In-Person Course with Assessments and Monthly Scanning Sessions (Optional)

Only hospitalists who completed the 3-day course were eligible to take the 1-day in-person refresher course (supplementary Appendix 5). The first half of the course incorporated scanning with live human models, while the second half of the course had scanning with hospitalized patients focusing on pathology (pleural effusion, hydronephrosis, reduced left ventricular function, etc.). The course was offered at 3, 6, and 12 months after the initial 3-day course.

Monthly scanning sessions occurred for 2 hours every third Friday and were also available prior to the 1-day refresher. The first 90 minutes had a hands-on scanning component with hospitalized patients with faculty supervision (1:2 ratio). The last 30 minutes had an image interpretation component.

Assessments

Knowledge and skills assessment were adapted from the CHEST model (supplementary Appendix 6).24 Before and after the 3-day and 1-day in-person courses, the same hands-on skills assessment with a checklist was provided (supplementary Appendix 7). Before and after the 3-day course, a written knowledge assessment with case-based image interpretation was provided (supplementary Appendix 6).

Measurement

Participant demographic and clinical information was collected at the initial 3-day course for all participants, including age, gender, specialty, years of experience, and number and type of ultrasound procedures personally conducted or supervised in the past year. For skills assessment, a 20-item dichotomous checklist was developed and scored as done correctly or not done/done incorrectly. This same assessment was provided both before and after each of the 3-day and 1-day courses. A 20-question image-based knowledge assessment was also developed and administered both before and after the 3-day course only. The same 20-item checklist was used for the final skills examination. However, a new more detailed 50-question examination was written for the final examination after the portfolio of images was complete. Self-reported measures were confidence in the use of ultrasound, volume management, quality of physical exam, and ability to narrow the differential diagnosis. Confidence in ultrasound use, confidence in volume management, and quality of physical exam were assessed by using a questionnaire both before and after the 3-day course and 1-day course. Participants rated confidence and quality on a 5-point scale, 1 being least confident and 5 being most confident.

Statistical Analysis

Demographics of the included hospitalist population and pre and post 3-day assessments, including knowledge score, skills score, confidence in ultrasound use, confidence in volume management, and quality of physical exam, were summarized. Values for all assessment variables are presented as percentages. Confidence scores were reported as a percentage of the Likert scale (eg, 4/5 was reported as 80%). Skills and written examinations were expressed as percentages of items correct. Data were reported as median and interquartile range or means and standard deviation based on variable distributions. Differences between pre- and postvalues for 3-day course variables were assessed by using 2-sample paired Wilcoxon signed rank tests with a 95% confidence level.

For the subset of hospitalists who also completed the 1-day course, pre and post 1-day course assessments, including skills score, confidence in ultrasound use, confidence in volume management, and quality of physical exam, were summarized. Differences between pre- and postvalues for 1-day assessment variables were assessed by using 2-sample paired Wilcoxon signed rank tests with a 95% confidence level.

Multiple linear regression was performed with the change in skills assessment score from postcompletion of the 3-day course to precompletion of the 1-day course as the dependent variable. Hospitalists were split into 2 age groups (30-39 and 40-49) for the purpose of this analysis. The percent of monthly scanning sessions attended, age category, timing of 1-day course, and percent portfolio were assessed as possible predictors of the skills score by using simple linear regression with a P = .05 cutoff. A final model was chosen based on predictors significant in simple linear regression and included the percent of the portfolio completed and attendance of monthly scanning sessions.

RESULTS

Demographics

3-Day In-Person Course

For the 53 hospitalists who completed skills-based assessments, performance increased significantly after the 3-day course. Knowledge scores also increased significantly from preassessment to postassessment. Self-reported ratings of confidence in ultrasound use, confidence in volume management, and quality of physical exam all increased significantly from preassessment to postassessment (Table 2).

Refresher Training: 1-Day In-Person Course

Because refresher training was encouraged but not required, only 25 of the 53 hospitalists completed the 1-day course, 23 of whom had complete data. For these 23 hospitalists, mean skills scores increased significantly from before to after the 1-day course (Table 2). Self-reported ratings of confidence in ultrasound use, confidence in volume management, and quality of physical exam also increased significantly from preassessment to postassessment (Table 2).

Monthly Scanning Sessions and Portfolio Development

Skills retention from the initial course to the refresher course, by portfolio completion and monthly scanning session attendance, is shown in Table 2. In the multiple regression analysis, each 10% increase in the percentage of monthly sessions attended was associated with a 3.7% increase in the mean change in skills score (P = .017), and each 10% increase in the percentage of the portfolio completed was associated with a 2.5% increase (P = .04). Both monthly scanning session attendance and portfolio completion were therefore significant predictors of skills retention over time.
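As a worked illustration of how the two reported coefficients combine, assume the final model is additive with no interaction term and read the reported 3.7% and 2.5% as percentage points of the skills score per 10-percentage-point increase in each predictor. For a hypothetical hospitalist who attended 30 percentage points more of the monthly sessions and completed 20 percentage points more of the portfolio than a peer, the model would predict a difference in the change in skills score of:

```latex
\Delta\hat{y} = 3.7 \times \frac{30}{10} + 2.5 \times \frac{20}{10} = 11.1 + 5.0 = 16.1 \ \text{percentage points}
```

Because the dependent variable is the change from the end of the 3-day course to the start of the 1-day course, a higher predicted value corresponds to less skills decay (or greater gain) over that interval. The example values are illustrative and are derived from the published coefficients rather than reported as an additional finding.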

Final Assessments

DISCUSSION

This is the first description of a successful longitudinal POCUS training program with assessments for hospital medicine providers, and it shows increased skills retention with a follow-up course and bedside scanning.

The CHAMP Ultrasound Program was developed to give hospital medicine clinicians a specialty-focused, in-house training pathway in POCUS and to support sustained skills acquisition through regular opportunities for practice and feedback. Practice with regular expert feedback is critical to developing and maintaining POCUS skills.32,33 Arntfield34 described the utility of remote supervision with feedback for critical care ultrasound training and demonstrated varying learning curves in the submission of portfolio images.35,36 The CHAMP Ultrasound training program provided expert oversight, longitudinal supervision, and feedback for course participants. It also used mastery learning, setting minimum standards and allowing learners to practice until they met them.37-39

This unique program is made possible by the availability of expert-level faculty. Assessment scores improved after the initial 3-day course but decayed over time, most prominently among hospitalists who did not continue POCUS scanning after the initial course. Notably, hospitalists who had performed more ultrasounds in the year before the 3-day course reported lower confidence ratings, likely reflecting greater awareness of their limitations and opportunities for improvement. The addition of refresher training and portfolio development to the core 3-day course differentiates this training program, and these additions, together with the demonstrated training outcomes, make it a durable pathway for other hospitalist programs. Many workshops and short courses in POCUS are available for medical students, residents, and practicing providers.40-43 However, without opportunities for longitudinal supervision and feedback, participants' skills have been noted to decline. The 2 components of the refresher training (the 1-day in-person course and the monthly scanning sessions) provide evidence of the value of mentored training.

In the initial program, refresher training was encouraged but optional, and we deliberately tracked hospitalists who completed refresher training and those who did not. Because those who attended some form of refresher training showed significant skills retention, we plan to make refresher training a program requirement. We recommend refresher training within 12 months of the initial introductory course. Because several hospitalists were unable to attend a full-day refresher course, monthly scanning sessions were offered as an alternative.

The main limitation of this study is that it was conducted in a single hospital system with available POCUS training mentors. This enabled longitudinal training but may make the program less reproducible in other hospital systems. Another limitation is that participants did not complete pre- and postcourse knowledge assessments for the refresher components of the program, although they did for the initial 3-day course. In addition, our pre- and postassessments have not been externally validated, although they are based on the previously validated CHEST ultrasound assessment tool.44

Finally, the CHAMP Ultrasound Program required a significant time commitment from both faculty and learners, and a relatively small percentage of hospitalists have completed the final assessments. The reasons are multifactorial, including the rigor of the program, the wish of some hospitalists to learn the basics without pursuing further expertise, and the challenge of developing a skillset that requires dedicated practice over time. We have aimed to address these barriers by providing additional hands-on scanning opportunities, giving timely feedback on portfolios, and obtaining more ultrasound machines. We expect more hospitalists to complete the final assessments in the coming year, as suggested by ongoing portfolio submissions to the shared online portal and by the many hospitalists choosing to attend the monthly scanning sessions, the 1-day course, or both. We recognize that other institutions may need to adapt our program to their local environment.

CONCLUSION

A comprehensive longitudinal ultrasound training program with competency assessments significantly improved ultrasound image acquisition skills among hospitalists. Hospitalists who attended monthly scanning sessions, worked toward portfolio completion, and took a refresher course significantly retained and augmented their skills.

Acknowledgments

The authors would like to acknowledge Kelly Logue, Jason Robertson, MD, Jerome Siy, MD, Shauna Baer, and Jack Dressen for their support in the development and implementation of the POCUS program in hospital medicine.

Disclosure

The authors do not have any relevant financial disclosures to report.

1. Spevack R, Al Shukairi M, Jayaraman D, Dankoff J, Rudski L, Lipes J. Serial lung and IVC ultrasound in the assessment of congestive heart failure. Crit Ultrasound J. 2017;9:7-13.

2. Soni NJ, Franco R, Velez M, et al. Ultrasound in the diagnosis and management of pleural effusions. J Hosp Med. 2015;10(12):811-816.

3. Boyd JH, Sirounis D, Maizel J, Slama M. Echocardiography as a guide for fluid management. Crit Care. 2016;20(1):274-280.

4. Mantuani D, Frazee BW, Fahimi J, Nagdev A. Point-of-care multi-organ ultrasound improves diagnostic accuracy in adults presenting to the emergency department with acute dyspnea. West J Emerg Med. 2016;17(1):46-53.

5. Glockner E, Christ M, Geier F, et al. Accuracy of Point-of-Care B-Line Lung Ultrasound in Comparison to NT-ProBNP for Screening Acute Heart Failure. Ultrasound Int Open. 2016;2(3):E90-E92.

6. Bhagra A, Tierney DM, Sekiguchi H, Soni NJ. Point-of-Care Ultrasonography for Primary Care Physicians and General Internists. Mayo Clin Proc. 2016;91(12):1811-1827.

7. Crisp JG, Lovato LM, Jang TB. Compression ultrasonography of the lower extremity with portable vascular ultrasonography can accurately detect deep venous thrombosis in the emergency department. Ann Emerg Med. 2010;56(6):601-610.

8. Squire BT, Fox JC, Anderson C. ABSCESS: Applied bedside sonography for convenient evaluation of superficial soft tissue infections. Acad Emerg Med. 2005;12(7):601-606.

9. Narasimhan M, Koenig SJ, Mayo PH. A Whole-Body Approach to Point of Care Ultrasound. Chest. 2016;150(4):772-776.

10. Copetti R, Soldati G, Copetti P. Chest sonography: a useful tool to differentiate acute cardiogenic pulmonary edema from acute respiratory distress syndrome. Cardiovasc Ultrasound. 2008;6:16-25.

11. Soni NJ, Arntfield R, Kory P. Point of Care Ultrasound. Philadelphia: Elsevier Saunders; 2015.

12. Moore CL, Copel JA. Point-of-Care Ultrasonography. N Engl J Med. 2011;364(8):749-757.

13. Rempell JS, Saldana F, DiSalvo D, et al. Pilot Point-of-Care Ultrasound Curriculum at Harvard Medical School: Early Experience. West J Emerg Med. 2016;17(6):734-740. doi:10.5811/westjem.2016.8.31387.

14. Heiberg J, Hansen LS, Wemmelund K, et al. Point-of-Care Clinical Ultrasound for Medical Students. Ultrasound Int Open. 2015;1(2):E58-E66. doi:10.1055/s-0035-1565173.

15. Razi R, Estrada JR, Doll J, Spencer KT. Bedside hand-carried ultrasound by internal medicine residents versus traditional clinical assessment for the identification of systolic dysfunction in patients admitted with decompensated heart failure. J Am Soc Echocardiogr. 2011;24(12):1319-1324.

16. Alexander JH, Peterson ED, Chen AY, Harding TM, Adams DB, Kisslo JA Jr. Feasibility of point-of-care echocardiography by internal medicine house staff. Am Heart J. 2004;147(3):476-481.

17. Hellmann DB, Whiting-O’Keefe Q, Shapiro EP, Martin LD, Martire C, Ziegelstein RC. The rate at which residents learn to use hand-held echocardiography at the bedside. Am J Med. 2005;118(9):1010-1018.

18. Kimura BJ, Amundson SA, Phan JN, Agan DL, Shaw DJ. Observations during development of an internal medicine residency training program in cardiovascular limited ultrasound examination. J Hosp Med. 2012;7(7):537-542.

19. Akhtar S, Theodoro D, Gaspari R, et al. Resident training in emergency ultrasound: consensus recommendations from the 2008 Council of Emergency Medicine Residency Directors Conference. Acad Emerg Med. 2009;16(s2):S32-S36.

20. Can emergency medicine residents detect acute deep venous thrombosis with a limited, two-site ultrasound examination? J Emerg Med. 2007;32(2):197-200.

21. Resident-performed compression ultrasonography for the detection of proximal deep vein thrombosis: fast and accurate. Acad Emerg Med. 2004;11(3):319-322.

22. Mandavia D, Aragona J, Chan L, et al. Ultrasound training for emergency physicians—a prospective study. Acad Emerg Med. 2000;7(9):1008-1014.

23. Koenig SJ, Narasimhan M, Mayo PH. Thoracic ultrasonography for the pulmonary specialist. Chest. 2011;140(5):1332-1341. doi:10.1378/chest.11-0348.

24. Greenstein YY, Littauer R, Narasimhan M, Mayo PH, Koenig SJ. Effectiveness of a Critical Care Ultrasonography Course. Chest. 2017;151(1):34-40. doi:10.1016/j.chest.2016.08.1465.

25. Martin LD, Howell EE, Ziegelstein RC, Martire C, Shapiro EP, Hellmann DB. Hospitalist performance of cardiac hand-carried ultrasound after focused training. Am J Med. 2007;120(11):1000-1004.

26. Martin LD, Howell EE, Ziegelstein RC, et al.

27. Lucas BP, Candotti C, Margeta B, et al. Diagnostic accuracy of hospitalist-performed hand-carried ultrasound echocardiography after a brief training program. J Hosp Med. 2009;4(6):340-349.

28.

29. Critical Care Ultrasonography Certificate of Completion Program. American College of Chest Physicians. http://www.chestnet.org/Education/Advanced-Clinical-Training/Certificate-of-Completion-Program/Critical-Care-Ultrasonography. Accessed March 30, 2017.

30. Mayo PH, Beaulieu Y, Doelken P, et al. American College of Chest Physicians/Société de Réanimation de Langue Française statement on competence in critical care ultrasonography. Chest. 2009;135(4):1050-1060.

31. Donlon TF, Angoff WH. The scholastic aptitude test. The College Board Admissions Testing Program; 1971:15-47.

32. Ericsson KA, Lehmann AC. Expert and exceptional performance: Evidence of maximal adaptation to task constraints. Annu Rev Psychol. 1996;47:273-305.

33. Ericsson KA, Krampe RT, Tesch-Römer C. The role of deliberate practice in the acquisition of expert performance. Psychol Rev. 1993;100(3):363-406.

34. Arntfield RT. The utility of remote supervision with feedback as a method to deliver high-volume critical care ultrasound training. J Crit Care. 2015;30(2):441.e1-e6.

35. Ma OJ, Gaddis G, Norvell JG, Subramanian S. How fast is the focused assessment with sonography for trauma examination learning curve? Emerg Med Australas. 2008;20(1):32-37.

36. Gaspari RJ, Dickman E, Blehar D. Learning curve of bedside ultrasound of the gallbladder. J Emerg Med. 2009;37(1):51-66. doi:10.1016/j.jemermed.2007.10.070.

37. Barsuk JH, McGaghie WC, Cohen ER, Balachandran JS, Wayne DB. Use of simulation-based mastery learning to improve quality of central venous catheter placement in a medical intensive care unit. J Hosp Med. 2009;4(7):397-403.

38. McGaghie WC, Issenberg SB, Cohen ER, Barsuk JH, Wayne DB. A critical review of simulation-based mastery learning with translational outcomes. Med Educ. 2014;48(4):375-385.

39. Guskey TR. The essential elements of mastery learning. J Classroom Interac. 1987;22:19-22.

40. Ultrasound Institute. Introduction to Primary Care Ultrasound. University of South Carolina School of Medicine. http://ultrasoundinstitute.med.sc.edu/UIcme.asp. Accessed October 24, 2017.

41. Society of Critical Care Medicine. Live Critical Care Ultrasound: Adult. http://www.sccm.org/Education-Center/Ultrasound/Pages/Fundamentals.aspx. Accessed October 24, 2017.

42. Castlefest Ultrasound Event. Castlefest 2018. http://castlefest2018.com/. Accessed October 24, 2017.

43. Office of Continuing Medical Education. Point of Care Ultrasound Workshop. UT Health San Antonio Joe R. & Teresa Lozano Long School of Medicine. http://cme.uthscsa.edu/ultrasound.asp. Accessed October 24, 2017.

44. Patrawalla P, Eisen LA, Shiloh A, et al. Development and Validation of an Assessment Tool for Competency in Critical Care Ultrasound. J Grad Med Educ. 2015;7(4):567-573.

1. Spevack R, Al Shukairi M, Jayaraman D, Dankoff J, Rudski L, Lipes J. Serial lung and IVC ultrasound in the assessment of congestive heart failure. Crit Ultrasound J. 2017;9:7-13. PubMed

2. Soni NJ, Franco R, Velez M, et al. Ultrasound in the diagnosis and management of pleural effusions. J Hosp Med. 2015 Dec;10(12):811-816. PubMed

3. Boyd JH, Sirounis D, Maizel J, Slama M. Echocardiography as a guide for fluid management. Crit Care. 2016;20(1):274-280. PubMed

4. Mantuani D, Frazee BW, Fahimi J, Nagdev A. Point-of-care multi-organ ultrasound improves diagnostic accuracy in adults presenting to the emergency department with acute dyspnea. West J Emerg Med. 2016;17(1):46-53. PubMed

5. Glockner E, Christ M, Geier F, et al. Accuracy of Point-of-Care B-Line Lung Ultrasound in Comparison to NT-ProBNP for Screening Acute Heart Failure. Ultrasound Int Open. 2016;2(3):E90-E92. PubMed

6. Bhagra A, Tierney DM, Sekiguchi H, Soni NH. Point-of-Care Ultrasonography for Primary Care Physicians and General Internists. Mayo Clin Proc. 2016 Dec;91(12):1811-1827. PubMed

7. Crisp JG, Lovato LM, Jang TB. Compression ultrasonography of the lower extremity with portable vascular ultrasonography can accurately detect deep venous thrombosis in the emergency department. Ann Emerg Med. 2010;56(6):601-610. PubMed

8. Squire BT, Fox JC, Anderson C. ABSCESS: Applied bedside sonography for convenient. Evaluation of superficial soft tissue infections. Acad Emerg Med. 2005;12(7):601-606. PubMed

9. Narasimhan M, Koenig SJ, Mayo PH. A Whole-Body Approach to Point of Care Ultrasound. Chest. 2016;150(4):772-776.

10. Copetti R, Soldati G, Copetti P. Chest sonography: a useful tool to differentiate acute cardiogenic pulmonary edema from acute respiratory distress syndrome. Cardiovasc Ultrasound. 2008;6:16-25.

11. Soni NJ, Arntfield R, Kory P. Point of Care Ultrasound. Philadelphia: Elsevier Saunders; 2015.

12. Moore CL, Copel JA. Point-of-Care Ultrasonography. N Engl J Med. 2011;364(8):749-757.

13. Rempell JS, Saldana F, DiSalvo D, et al. Pilot Point-of-Care Ultrasound Curriculum at Harvard Medical School: Early Experience. West J Emerg Med. 2016;17(6):734-740. doi:10.5811/westjem.2016.8.31387.

14. Heiberg J, Hansen LS, Wemmelund K, et al. Point-of-Care Clinical Ultrasound for Medical Students. Ultrasound Int Open. 2015;1(2):E58-E66. doi:10.1055/s-0035-1565173.

15. Razi R, Estrada JR, Doll J, Spencer KT. Bedside hand-carried ultrasound by internal medicine residents versus traditional clinical assessment for the identification of systolic dysfunction in patients admitted with decompensated heart failure. J Am Soc Echocardiogr. 2011;24(12):1319-1324.

16. Alexander JH, Peterson ED, Chen AY, Harding TM, Adams DB, Kisslo JA Jr. Feasibility of point-of-care echocardiography by internal medicine house staff. Am Heart J. 2004;147(3):476-481.

17. Hellmann DB, Whiting-O’Keefe Q, Shapiro EP, Martin LD, Martire C, Ziegelstein RC. The rate at which residents learn to use hand-held echocardiography at the bedside. Am J Med. 2005;118(9):1010-1018.

18. Kimura BJ, Amundson SA, Phan JN, Agan DL, Shaw DJ. Observations during development of an internal medicine residency training program in cardiovascular limited ultrasound examination. J Hosp Med. 2012;7(7):537-542.

19. Akhtar S, Theodoro D, Gaspari R, et al. Resident training in emergency ultrasound: consensus recommendations from the 2008 Council of Emergency Medicine Residency Directors Conference. Acad Emerg Med. 2009;16(s2):S32-S36.

20. Can emergency medicine residents detect acute deep venous thrombosis with a limited, two-site ultrasound examination? J Emerg Med. 2007;32(2):197-200.

21. Resident-performed compression ultrasonography for the detection of proximal deep vein thrombosis: fast and accurate. Acad Emerg Med. 2004;11(3):319-322.

22. Mandavia D, Aragona J, Chan L, et al. Ultrasound training for emergency physicians—a prospective study. Acad Emerg Med. 2000;7(9):1008-1014.

23. Koenig SJ, Narasimhan M, Mayo PH. Thoracic ultrasonography for the pulmonary specialist. Chest. 2011;140(5):1332-1341. doi:10.1378/chest.11-0348.

24. Greenstein YY, Littauer R, Narasimhan M, Mayo PH, Koenig SJ. Effectiveness of a Critical Care Ultrasonography Course. Chest. 2017;151(1):34-40. doi:10.1016/j.chest.2016.08.1465.

25. Martin LD, Howell EE, Ziegelstein RC, Martire C, Shapiro EP, Hellmann DB. Hospitalist performance of cardiac hand-carried ultrasound after focused training. Am J Med. 2007;120(11):1000-1004.

26. Martin LD, Howell EE, Ziegelstein RC, et al.

27. Lucas BP, Candotti C, Margeta B, et al. Diagnostic accuracy of hospitalist-performed hand-carried ultrasound echocardiography after a brief training program. J Hosp Med. 2009;4(6):340-349.

28.

29. Critical Care Ultrasonography Certificate of Completion Program. American College of Chest Physicians. http://www.chestnet.org/Education/Advanced-Clinical-Training/Certificate-of-Completion-Program/Critical-Care-Ultrasonography. Accessed March 30, 2017.

30. Mayo PH, Beaulieu Y, Doelken P, et al. American College of Chest Physicians/Société de Réanimation de Langue Française statement on competence in critical care ultrasonography. Chest. 2009;135(4):1050-1060.

31. Donlon TF, Angoff WH. The scholastic aptitude test. The College Board Admissions Testing Program; 1971:15-47.

32. Ericsson KA, Lehmann AC. Expert and exceptional performance: Evidence of maximal adaptation to task constraints. Annu Rev Psychol. 1996;47:273-305.

33. Ericsson KA, Krampe RT, Tesch-Römer C. The role of deliberate practice in the acquisition of expert performance. Psychol Rev. 1993;100(3):363-406.

34. Arntfield RT. The utility of remote supervision with feedback as a method to deliver high-volume critical care ultrasound training. J Crit Care. 2015;30(2):441.e1-e6.

35. Ma OJ, Gaddis G, Norvell JG, Subramanian S. How fast is the focused assessment with sonography for trauma examination learning curve? Emerg Med Australas. 2008;20(1):32-37.

36. Gaspari RJ, Dickman E, Blehar D. Learning curve of bedside ultrasound of the gallbladder. J Emerg Med. 2009;37(1):51-66. doi:10.1016/j.jemermed.2007.10.070.

37. Barsuk JH, McGaghie WC, Cohen ER, Balachandran JS, Wayne DB. Use of simulation-based mastery learning to improve quality of central venous catheter placement in a medical intensive care unit. J Hosp Med. 2009;4(7):397-403.

38. McGaghie WC, Issenberg SB, Cohen ER, Barsuk JH, Wayne DB. A critical review of simulation-based mastery learning with translational outcomes. Med Educ. 2014;48(4):375-385.

39. Guskey TR. The essential elements of mastery learning. J Classroom Interac. 1987;22:19-22.

40. Ultrasound Institute. Introduction to Primary Care Ultrasound. University of South Carolina School of Medicine. http://ultrasoundinstitute.med.sc.edu/UIcme.asp. Accessed October 24, 2017.

41. Society of Critical Care Medicine. Live Critical Care Ultrasound: Adult. http://www.sccm.org/Education-Center/Ultrasound/Pages/Fundamentals.aspx. Accessed October 24, 2017.

42. Castlefest Ultrasound Event. Castlefest 2018. http://castlefest2018.com/. Accessed October 24, 2017.

43. Office of Continuing Medical Education. Point of Care Ultrasound Workshop. UT Health San Antonio Joe R. & Teresa Lozano Long School of Medicine. http://cme.uthscsa.edu/ultrasound.asp. Accessed October 24, 2017.

44. Patrawalla P, Eisen LA, Shiloh A, et al. Development and Validation of an Assessment Tool for Competency in Critical Care Ultrasound. J Grad Med Educ. 2015;7(4):567-573.

© 2018 Society of Hospital Medicine

Turnover rate for hospitalist groups trending downward

According to the 2016 State of Hospital Medicine Report, which is based on 2015 data, the median physician turnover rate for hospital medicine groups (HMGs) serving adults only is 6.9%, lower than in prior surveys. Notably, turnover in 2010 was more than double the current rate (see Figure 1). This steady decline is intriguing and encouraging, since hospital medicine is well known for its high turnover compared with other specialties.

Results from State of Hospital Medicine surveys also reveal a consistent trend in the share of groups with no turnover. As expected, a lower turnover rate usually parallels a higher percentage of groups with no turnover. This year, 40.2% of hospitalist groups reported no physician turnover at all, continuing the upward trend from 2012 (36%) and 2014 (38.1%). One might speculate that these groups are not simply fortunate but rather work zealously to build a strong internal culture and proactively create a shared vision, values, accountability, and career goals.

Sources exploring why providers leave a practice, and advice on specific strategies to retain them, are abundant. To secure retention, employers, leaders, and administrators should at a minimum pay close attention to basic factors such as work schedules, workload, and compensation, and may even consider using national and regional data from the State of Hospital Medicine Report as benchmarks to remain attractive and competitive in the market. A low or zero turnover rate indicates workforce stability and program credibility, and it saves money: the overall estimated cost of turnover (losing a provider and hiring a replacement) ranges from $400,000 to $600,000 per provider.1

The turnover data further delineate differences based on academic status, Medicare Indirect Medical Education (IME) program status, and geographic region. For instance, academic groups consistently report a higher turnover rate than nonacademic groups, whose rates mirror the overall decreasing trend in physician turnover. Non-teaching hospitals also have a significantly higher percentage of groups with no turnover (42%, versus 24%-27% for teaching hospitals). Geographically, HMGs in the South and Midwest regions of the United States are the winners this year, with more than 50% of groups reporting no turnover at all.

The report also includes specific turnover data for nurse practitioners and physician assistants (NPs/PAs). Their turnover rate has increased slightly compared with previous surveys, with a corresponding drop in the percentage of groups reporting no NP/PA turnover. Yet the overall percentage of groups with no NP/PA turnover remains impressively high at 62%.

The turnover rates for HMGs serving both adults and children, and for groups serving children only, appear somewhat similar to those of groups serving adults only. However, because of insufficient numbers of respondents, we cannot reliably analyze the data, identify significant differences, or detect meaningful trends for these two groups.

The downward trend in hospitalist turnover found in SHM’s 2016 State of Hospital Medicine Report is reassuring, indicating higher retention and greater stability for many programs. Although some hospitalists continue to shop around, most leaders and employers of HMGs work tirelessly to strengthen their programs in hopes of minimizing turnover, and the promising data likely reflect that effort. Hopefully, this trend will continue when the next State of Hospital Medicine Report comes out.

Dr. Vuong is a hospitalist at HealthPartners Medical Group in St Paul, Minn., and an assistant professor of medicine at the University of Minnesota. He is a member of SHM’s Practice Analysis Committee.

Reference

1. Frenz D. The staggering costs of physician turnover. Today’s Hospitalist. 2016.
