Patient-Reported Outcome Measures: How Do Digital Tablets Stack Up to Paper Forms? A Randomized, Controlled Study

Over the past several decades, patient-reported outcomes (PROs) have become increasingly important in assessing the quality and effectiveness of medical and surgical care.1,2 The benefit lies in the ability of PROs to characterize the impact of medical interventions on symptoms, function, and other outcomes from the patient’s perspective. Consequently, clinical practices can improve patients’ objective findings (from radiographic and clinical examinations) as well as their preferences in a social-psychological context.2,3 As a patient’s satisfaction with a surgical intervention may not correlate with the surgeon’s objective assessment of outcome, PROs offer unique insight into the patient’s perceptions of well-being.4

Health-related quality-of-life assessments can be made with either general-health or disease-specific instruments. These instruments traditionally are administered with pen and paper—a data collection method with several limitations, chief being the need to manually transfer the data into an electronic medical record, a research database, or both. In addition, administering surveys on paper risks potential disqualification of partially or incorrectly completed surveys. With pen and paper, it is difficult to mandate that every question be answered accurately.

Currently, there is a potential role for electronic medical records and digital tablet devices in survey administration and data collection and storage. Theoretical advantages include direct input of survey data into databases (eliminating manual data entry and associated entry errors), improved accuracy and completion rates, and long-term storage not dependent on paper charts.5 To our knowledge, there have been no prospective studies of different orthopedic outcomes collection methods. Some studies have evaluated use of touch-based tablets in data collection. Dy and colleagues6 considered administration of the DASH (Disabilities of the Arm, Shoulder, and Hand) survey on an iPad tablet (Apple Computers) and retrospectively compared the tablet and paper completion rates. The tablet group’s rate (98%) was significantly higher than the paper group’s rate (76%). Aktas and colleagues7 reported a high completion rate for a tablet survey of palliative care outcomes (they did not compare modalities). A handful of other studies have found higher intraclass correlation and validation for digital data collection than for paper collection.7-14 The comparability of the data collected digitally vs on paper was the nidus for our decision to prospectively evaluate the ease and reliability of digital data collection.

We conducted a prospective, randomized study to compare the performance of tablet and paper versions of several general-health and musculoskeletal disease–specific questionnaires. We hypothesized the tablet and paper surveys would have similar completion rates and times.

Methods

This study was approved by our Institutional Review Board. Participants were recruited during their clinic visit to 3 subspecialty orthopedic services (upper extremity, spine, arthroplasty). The questionnaires included basic demographics questions and questions about tablet use (comfort level with computers, measured on a Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree), and ownership of a tablet or smartphone). Also included were European Quality of Life–5 Dimensions (EQ-5D, General Health), a disease questionnaire specific to 1 of the 3 subspecialty services, and a satisfaction survey. Patients were asked to complete the Oswestry Disability Index (ODI) for low-back pain, the Neck Disability Index (NDI) for neck pain, the Hip Disability and Osteoarthritis Outcomes Score (HOOS) for hip pain, the Knee Injury and Osteoarthritis Outcomes Score (KOOS) for knee pain, or the QuickDASH survey for upper extremity complaints (subspecialty-specific). After recruitment, a computer-generated randomization technique was used to randomly assign patients to either a paper or an electronic (iPad) data collection group.15 We included all surveys for which patients had sufficient completion time (no clinic staff interruptions) and excluded surveys marked incomplete (because of interruptions for clinic workflow efficiency). For direct input from tablets and for data storage, we used the Research Electronic Data Capture (REDCap) system hosted at our institution.16 Our staff registered patients as REDCap participants, assigned them to their disease-specific study arms, and gave them tablets to use to complete the surveys.
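The text does not state which computer-generated randomization technique was applied (simple, block, or stratified). As a minimal sketch only, assuming simple randomization with equal allocation, the assignment step might look like the following; the function name `assign_groups`, the patient identifiers, and the seed are hypothetical and are not taken from the study.

```python
import random


def assign_groups(patient_ids, seed=0):
    """Assign each patient to 'tablet' or 'paper' with equal probability.

    Simple (unrestricted) randomization is an assumption here; the study
    does not report the specific technique that was used.
    """
    rng = random.Random(seed)  # seeded so the allocation list is reproducible
    return {pid: rng.choice(("tablet", "paper")) for pid in patient_ids}


if __name__ == "__main__":
    # Hypothetical patient identifiers, for illustration only.
    allocation = assign_groups(range(1, 11), seed=42)
    for pid, group in allocation.items():
        print(pid, group)
```

Simple randomization does not guarantee equal group sizes; a block design would be one way to enforce balance if that were required.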

Patients who were randomly assigned to take the surveys on paper were given a packet that included the demographics survey, the EQ-5D, a disease-specific survey, and a satisfaction survey. Their responses were then manually entered by the investigators into the REDCap system.

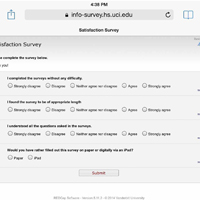

Patients who were randomly assigned to take the surveys on tablets used the REDCap survey feature, which allowed them to directly input their responses into the database (Figure).

Our primary outcome measure was survey completion rate. Secondary outcome measures were total time for completion, number of questions left unanswered on incomplete surveys, patient satisfaction with survey length (Likert scale, 1-5), ease of completion (Likert scale, 1-5), ability to comprehend questions (Likert scale, 1-5), and preference for the other survey modality (Appendix).

We used SPSS statistical software (IBM) to analyze our data, t test to compare continuous variables, χ2 test to compare categorical variables, and linear regression to test the relationship between number of questions and completion rate. Statistical significance was set at P < .05.
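The analyses were run in SPSS, and the per-group counts are not reported in the text. As an illustrative sketch only, a Pearson χ2 test comparing completion between two groups could be computed as below. The counts are hypothetical, the function name `chi_square_2x2` is ours, and no continuity correction is applied, so the result may differ from what a given SPSS procedure reports.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test (1 degree of freedom) for the 2x2 table

        [[a, b],
         [c, d]]

    where rows are groups and columns are outcomes (e.g., complete vs
    incomplete). Returns (chi2, p_value). No continuity correction.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be nonzero")
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # For 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


if __name__ == "__main__":
    # Hypothetical counts, NOT study data:
    # tablet: 40 complete, 10 incomplete; paper: 30 complete, 20 incomplete.
    chi2, p = chi_square_2x2(40, 10, 30, 20)
    print(f"chi2 = {chi2:.3f}, P = {p:.3f}, significant at .05: {p < 0.05}")
```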

Results

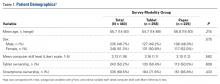

Of the 510 patients enrolled in the study, 483 completed the initial demographics questionnaire and were included in the analysis. Patients were excluded if they were unable to complete the initial demographics questionnaire because of clinic workflow (eg, immediate need to be seen by physician, need to transfer to radiology for imaging and not being able to revisit the survey). Mean age was 56 years (range, 14-93 years), and 51% of the respondents were female. Fifty percent owned tablets, 70% owned smartphones, and mean (SD) self-rating of computer skills was 3.13 (1.16) (Likert scale, 1-5). There were no significant demographic differences between the tablet and paper groups (Table 1).

For each disease-specific questionnaire, the instrument’s published instructions for calculating scores were followed; these scores were then compared in order to further characterize the groups. There were significant differences in scores on the EQ-5D descriptive questions, a pain visual analog scale (VAS), and the NDI. Mean EQ-5D score was 0.664 for the tablet group and 0.699 for the paper group (P = .041), mean pain VAS score was 62.5 for the tablet group and 71.6 for the paper group (P < .001), and mean NDI score was 42.8 for the tablet group and 32.4 for the paper group (P = .033).

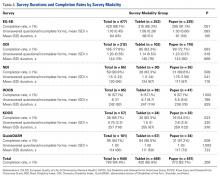

The overall completion rate for all questionnaires was 84.4%. The KOOS completion rate was 83.3% for the tablet group and 54.5% for the paper group (P = .023). Although it was not statistically significant, there was a trend toward higher rates of completing all disease-specific questionnaires in the tablet group relative to the paper group.

Satisfaction regarding the surveys and their modalities was similar between the groups.

Discussion

Electronic data entry has many advantages over traditional paper-based data collection and can be used with PRO surveys to measure response to treatment. Our study evaluated whether completion rates differed between surveys administered on digital tablets and those administered on traditional paper forms in a clinic setting. We selected general-health and disease-specific instruments commonly used to collect PROs from orthopedic patients. Our primary outcome measure was survey completion rate. Secondary outcome measures were total time for completion, number of questions left unanswered on incomplete surveys, patient satisfaction, and survey preferences.

In this study, our tablet and paper groups had similar overall survey completion rates, which suggests digital tablet-based data collection is noninferior to traditional pen-and-paper data collection with respect to patient response rate in the clinical setting. It is worth emphasizing that the tablet surveys were made to resemble and function as much as possible like the paper surveys. For example, patients were allowed to select multiple answers as well as advance without answering a question. Paper surveys were mimicked so we could study inherent differences in patient responsiveness without adding digital features to prevent patients from selecting multiple answers or skipping questions. We postulate that adding these digital features could have introduced a significant difference in patient responsiveness.

Time for survey completion was not significantly different between the tablet and paper groups, demonstrating that data can be digitally collected and the aforementioned advantages realized without significant delay or clinic workflow disruption. In the future, patients may be able to complete their forms digitally, on their own devices, before arriving for their clinic visits—resulting in improved clinic workflow and data collection efficiency.

Scores computed for the health-related quality-of-life questionnaires were not significantly different between the tablet and paper groups, except for EQ-5D and NDI. Although statistically significant, the 0.035 difference between the groups’ EQ-5D scores (0.664, 0.699) is not clinically significant. (Pickard and colleagues17 established that 0.06 is the clinically significant difference between EQ-5D scores in the United States.) If there were any clinical difference in the present study, our paper group patients appeared to be in better health than our tablet group patients.
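The comparison against the clinically significant difference can be written out explicitly. The sketch below uses only the values reported above (group means of 0.664 and 0.699, threshold of 0.06); the function name `meets_clinical_threshold` is hypothetical.

```python
def meets_clinical_threshold(mean_a, mean_b, threshold):
    """Return True if the absolute difference between two group means
    reaches the clinically significant difference."""
    return abs(mean_a - mean_b) >= threshold


if __name__ == "__main__":
    tablet_eq5d, paper_eq5d = 0.664, 0.699  # group means reported in the Results
    threshold = 0.06                        # clinically significant difference (reference 17)
    difference = abs(tablet_eq5d - paper_eq5d)
    print(f"difference = {difference:.3f}")
    print("clinically significant:",
          meets_clinical_threshold(tablet_eq5d, paper_eq5d, threshold))
```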

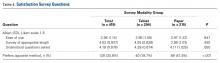

Patients’ motivation to complete surveys often plays a large role in meaningful rates of completion. On our subjective satisfaction survey, a larger percentage of patients reported they would prefer to use a tablet for future surveys (Table 4). This finding may be driven by the novelty or ease of using a popular device. Nevertheless, we think it is worthwhile to heed patient preferences, as they may point to more successful data collection and compliance.

Several other studies have compared electronic and paper data capture.6,7,9-14,18-22 Dy and colleagues6 reported on administering the DASH survey on an iPad tablet using REDCap in an outpatient setting. They found that the percentage of surveys that could be scored (<3 questions left unanswered) was significantly higher for their tablet group (98%) than their paper group (76%). The larger difference in survey completion rates in their study (vs ours) may be attributable to their use of DASH, which has more survey items (compared with QuickDASH, the instrument we used) and thus may be more sensitive to detecting differences, at the risk of increasing the burden on survey takers.23 Aktas and colleagues7 conducted a similar but smaller study of completion rates, completion times, and overall practicality of using digital tablets to collect PROs in a palliative care clinic (they did not compare tablet and paper modalities). Marsh and colleagues,12 who studied the agreement between data collected on electronic and paper versions of the WOMAC (Western Ontario and McMaster Universities) Osteoarthritis Index and the SF-12 (12-item Short Form Health Survey, Version 2) after total hip and total knee arthroplasty, found a high intraclass correlation coefficient between the 2 methods. Griffiths-Jones and colleagues11 also found a high degree of agreement between patient data collected on digital and paper surveys. In a similar study, Fanning and McAuley10 compared digital tablet and paper survey administration in an older population and found a higher percentage of preference for tablets, with ease of use and anxiety during survey completion correlating with preference. These findings mirror ours, even with our inclusion of patients in a broader age range.

Strengths of our study included its overall cohort size and the variety of measurement instruments used. In addition, we measured time for survey completion to assess the practicality of tablet-based data collection and refrained from using digital features that could have artificially improved the completion rate for this survey modality.

Our study had a few limitations. First, we recruited unequal numbers of patients from the different subspecialties—a result of each subspecialty having a different number of attending physicians and a different patient volume. Given randomization and use of similar patients across the study arms, however, this likely did not present any significant bias. Second, each patient completed a tablet survey or a paper survey but not both, and therefore we could not compare a patient’s performance on the 2 modalities. However, the burden of completing the same survey more than once likely would have lowered our participation rate and introduced additional biases we wanted to avoid. Third, despite our attempt to mimic the look of a paper survey, the tablet’s user interface presented several potential difficulties. For example, its small text and small answer buttons may have been limiting for patients with poor vision. These design features emphasize the importance of having a user interface that can be adapted to the individual, regardless of handicap. Indeed, adaptability is a potential strength of digital interfaces. For adaptability, an interface designer can use large, scalable text and add audio prompts and other features.

Our findings can be useful in evaluating patient responsiveness to surveys administered on digital tablets in an outpatient clinic setting. In this prospective, randomized study, we found that, for survey completion, use of a tablet device did not require more time than use of a paper form. In addition, the administration modalities had similar completion and error rates for a variety of orthopedic outcomes surveys. We did not activate digital features that would have given unfair advantage to the digital data collection modality. We also found a strong preference for use of technology in PRO data collection, and this may help improve collection rates. Last, though optimizing the flow of patients in our clinic was not a strict research metric, we prioritized making sure patients were not spending any more time completing these surveys than in the past. Given the potential benefits of digital surveys—immediate and accurate transfer of collected data into multiple databases, including the patient’s electronic medical record—our experience supports continuing validation of these instruments for potential wider use.

Am J Orthop. 2016;45(7):E451-E457. Copyright Frontline Medical Communications Inc. 2016. All rights reserved.

1. Howie L, Hirsch B, Locklear T, Abernethy AP. Assessing the value of patient-generated data to comparative effectiveness research. Health Aff (Millwood). 2014;33(7):1220-1228.

2. Higginson IJ, Carr AJ. Measuring quality of life: using quality of life measures in the clinical setting. BMJ. 2001;322(7297):1297-1300.

3. Revicki D, Hays RD, Cella D, Sloan J. Recommended methods for determining responsiveness and minimally important differences for patient-reported outcomes. J Clin Epidemiol. 2008;61(2):102-109.

4. Guyatt GH, Feeny DH, Patrick DL. Measuring health-related quality of life. Ann Intern Med. 1993;118(8):622-629.

5. Paudel D, Ahmed M, Pradhan A, Lal Dangol R. Successful use of tablet personal computers and wireless technologies for the 2011 Nepal Demographic and Health Survey. Glob Heal Sci Pract. 2013;1(2):277-284.

6. Dy CJ, Schmicker T, Tran Q, Chadwick B, Daluiski A. The use of a tablet computer to complete the DASH questionnaire. J Hand Surg Am. 2012;37(12):2589-2594.

7. Aktas A, Hullihen B, Shrotriya S, Thomas S, Walsh D, Estfan B. Connected health: cancer symptom and quality-of-life assessment using a tablet computer: a pilot study. Am J Hosp Palliat Care. 2015;32(2):189-197.

8. Basnov M, Kongsved SM, Bech P, Hjollund NH. Reliability of Short Form-36 in an internet- and a pen-and-paper version. Inform Health Soc Care. 2009;34(1):53-58.

9. Bellamy N, Wilson C, Hendrikz J, et al; EDC Study Group. Osteoarthritis Index delivered by mobile phone (m-WOMAC) is valid, reliable, and responsive. J Clin Epidemiol. 2011;64(2):182-190.

10. Fanning J, McAuley E. A comparison of tablet computer and paper-based questionnaires in healthy aging research. JMIR Res Protoc. 2014;3(3):e38.

11. Griffiths-Jones W, Norton MR, Fern ED, Williams DH. The equivalence of remote electronic and paper patient reported outcome (PRO) collection. J Arthroplasty. 2014;29(11):2136-2139.

12. Marsh JD, Bryant DM, Macdonald SJ, Naudie DD. Patients respond similarly to paper and electronic versions of the WOMAC and SF-12 following total joint arthroplasty. J Arthroplasty. 2014;29(4):670-673.

13. Olajos-Clow J, Minard J, Szpiro K, et al. Validation of an electronic version of the Mini Asthma Quality of Life Questionnaire. Respir Med. 2010;104(5):658-667.

14. Shervin N, Dorrwachter J, Bragdon CR, Shervin D, Zurakowski D, Malchau H. Comparison of paper and computer-based questionnaire modes for measuring health outcomes in patients undergoing total hip arthroplasty. J Bone Joint Surg Am. 2011;93(3):285-293.

15. Suresh K. An overview of randomization techniques: an unbiased assessment of outcome in clinical research. J Hum Reprod Sci. 2011;4(1):8-11.

16. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377-381.

17. Pickard AS, Neary MP, Cella D. Estimation of minimally important differences in EQ-5D utility and VAS scores in cancer. Health Qual Life Outcomes. 2007;5:70.

18. Abdel Messih M, Naylor JM, Descallar J, Manickam A, Mittal R, Harris IA. Mail versus telephone administration of the Oxford Knee and Hip Scores. J Arthroplasty. 2014;29(3):491-494.

19. Kongsved SM, Basnov M, Holm-Christensen K, Hjollund NH. Response rate and completeness of questionnaires: a randomized study of internet versus paper-and-pencil versions. J Med Internet Res. 2007;9(3):e25.

20. Theiler R, Bischoff-Ferrari HA, Good M, Bellamy N. Responsiveness of the electronic touch screen WOMAC 3.1 OA Index in a short term clinical trial with rofecoxib. Osteoarthritis Cartilage. 2004;12(11):912-916.

21. Ryan JM, Corry JR, Attewell R, Smithson MJ. A comparison of an electronic version of the SF-36 General Health Questionnaire to the standard paper version. Qual Life Res. 2002;11(1):19-26.

22. Wilson AS, Kitas GD, Carruthers DM, et al. Computerized information-gathering in specialist rheumatology clinics: an initial evaluation of an electronic version of the Short Form 36. Rheumatology. 2002;41(3):268-273.

23. Angst F, Goldhahn J, Drerup S, Flury M, Schwyzer HK, Simmen BR. How sharp is the short QuickDASH? A refined content and validity analysis of the Short Form of the Disabilities of the Shoulder, Arm and Hand questionnaire in the strata of symptoms and function and specific joint conditions. Qual Life Res. 2009;18(8):1043-1051.


Am J Orthop. 2016;45(7):E451-E457. Copyright Frontline Medical Communications Inc. 2016. All rights reserved.


1. Howie L, Hirsch B, Locklear T, Abernethy AP. Assessing the value of patient-generated data to comparative effectiveness research. Health Aff (Millwood). 2014;33(7):1220-1228.

2. Higginson IJ, Carr AJ. Measuring quality of life: using quality of life measures in the clinical setting. BMJ. 2001;322(7297):1297-1300.

3. Revicki D, Hays RD, Cella D, Sloan J. Recommended methods for determining responsiveness and minimally important differences for patient-reported outcomes. J Clin Epidemiol. 2008;61(2):102-109.

4. Guyatt GH, Feeny DH, Patrick DL. Measuring health-related quality of life. Ann Intern Med. 1993;118(8):622-629.

5. Paudel D, Ahmed M, Pradhan A, Lal Dangol R. Successful use of tablet personal computers and wireless technologies for the 2011 Nepal Demographic and Health Survey. Glob Health Sci Pract. 2013;1(2):277-284.

6. Dy CJ, Schmicker T, Tran Q, Chadwick B, Daluiski A. The use of a tablet computer to complete the DASH questionnaire. J Hand Surg Am. 2012;37(12):2589-2594.

7. Aktas A, Hullihen B, Shrotriya S, Thomas S, Walsh D, Estfan B. Connected health: cancer symptom and quality-of-life assessment using a tablet computer: a pilot study. Am J Hosp Palliat Care. 2015;32(2):189-197.

8. Basnov M, Kongsved SM, Bech P, Hjollund NH. Reliability of Short Form-36 in an internet- and a pen-and-paper version. Inform Health Soc Care. 2009;34(1):53-58.

9. Bellamy N, Wilson C, Hendrikz J, et al; EDC Study Group. Osteoarthritis Index delivered by mobile phone (m-WOMAC) is valid, reliable, and responsive. J Clin Epidemiol. 2011;64(2):182-190.

10. Fanning J, McAuley E. A comparison of tablet computer and paper-based questionnaires in healthy aging research. JMIR Res Protoc. 2014;3(3):e38.

11. Griffiths-Jones W, Norton MR, Fern ED, Williams DH. The equivalence of remote electronic and paper patient reported outcome (PRO) collection. J Arthroplasty. 2014;29(11):2136-2139.

12. Marsh JD, Bryant DM, Macdonald SJ, Naudie DD. Patients respond similarly to paper and electronic versions of the WOMAC and SF-12 following total joint arthroplasty. J Arthroplasty. 2014;29(4):670-673.

13. Olajos-Clow J, Minard J, Szpiro K, et al. Validation of an electronic version of the Mini Asthma Quality of Life Questionnaire. Respir Med. 2010;104(5):658-667.

14. Shervin N, Dorrwachter J, Bragdon CR, Shervin D, Zurakowski D, Malchau H. Comparison of paper and computer-based questionnaire modes for measuring health outcomes in patients undergoing total hip arthroplasty. J Bone Joint Surg Am. 2011;93(3):285-293.

15. Suresh K. An overview of randomization techniques: an unbiased assessment of outcome in clinical research. J Hum Reprod Sci. 2011;4(1):8-11.

16. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377-381.

17. Pickard AS, Neary MP, Cella D. Estimation of minimally important differences in EQ-5D utility and VAS scores in cancer. Health Qual Life Outcomes. 2007;5:70.

18. Abdel Messih M, Naylor JM, Descallar J, Manickam A, Mittal R, Harris IA. Mail versus telephone administration of the Oxford Knee and Hip Scores. J Arthroplasty. 2014;29(3):491-494.

19. Kongsved SM, Basnov M, Holm-Christensen K, Hjollund NH. Response rate and completeness of questionnaires: a randomized study of internet versus paper-and-pencil versions. J Med Internet Res. 2007;9(3):e25.

20. Theiler R, Bischoff-Ferrari HA, Good M, Bellamy N. Responsiveness of the electronic touch screen WOMAC 3.1 OA Index in a short term clinical trial with rofecoxib. Osteoarthritis Cartilage. 2004;12(11):912-916.

21. Ryan JM, Corry JR, Attewell R, Smithson MJ. A comparison of an electronic version of the SF-36 General Health Questionnaire to the standard paper version. Qual Life Res. 2002;11(1):19-26.

22. Wilson AS, Kitas GD, Carruthers DM, et al. Computerized information-gathering in specialist rheumatology clinics: an initial evaluation of an electronic version of the Short Form 36. Rheumatology. 2002;41(3):268-273.

23. Angst F, Goldhahn J, Drerup S, Flury M, Schwyzer HK, Simmen BR. How sharp is the short QuickDASH? A refined content and validity analysis of the Short Form of the Disabilities of the Shoulder, Arm and Hand questionnaire in the strata of symptoms and function and specific joint conditions. Qual Life Res. 2009;18(8):1043-1051.
