
Chest Radiograph Interpretation

The inclusion of clinical information in diagnostic testing may influence the interpretation of the clinical findings. Historical and clinical findings may focus the reader's attention on the relevant details, thereby improving the accuracy of the interpretation. However, such information may cause the reader to have preconceived notions about the results, biasing the overall interpretation.

The impact of clinical information on the interpretation of radiographic studies remains an issue of debate. Previous studies have found that clinical information improves the accuracy of radiographic interpretation for a broad range of diagnoses,[1, 2, 3, 4] whereas others do not show improvement.[5, 6, 7] Additionally, clinical information may serve as a distraction that leads to more false‐positive interpretations.[8] For this reason, many radiologists prefer to review radiographs without knowledge of the clinical scenario prompting the study to avoid focusing on the expected findings and potentially missing other important abnormalities.[9]

The chest radiograph (CXR) is the most commonly used diagnostic imaging modality. Nevertheless, poor agreement exists among radiologists in the interpretation of chest radiographs for the diagnosis of pneumonia in both adults and children.[10, 11, 12, 13, 14, 15] Recent studies have found a high degree of agreement among pediatric radiologists with implementation of the World Health Organization (WHO) criteria for standardized CXR interpretation for diagnosis of bacterial pneumonia in children.[16, 17, 18] In these studies, participants were blinded to the clinical presentation. Data investigating the impact of clinical history on CXR interpretation in the pediatric population are limited.[19]

We conducted this prospective case‐based study to evaluate the impact of clinical information on the reliability of radiographic diagnosis of pneumonia among children presenting to a pediatric emergency department (ED) with clinical suspicion of pneumonia.

METHODS

Study Subjects

Six board‐certified radiologists at 2 academic children's hospitals (Children's Hospital of Philadelphia [n = 3] and Boston Children's Hospital [n = 3]) interpreted the same 110 chest radiographs (100 original and 10 duplicates) on 2 separate occasions. Clinical information was withheld during the first interpretation. The intra‐ and inter‐rater reliability for the interpretation of these 110 radiographs without clinical information have been previously reported.[18] After a period of 6 months, the radiologists reviewed the radiographs with access to clinical information provided by the physician ordering the CXR. This clinical information included age, sex, clinical indication for obtaining the radiograph, relevant history, and physical examination findings. The radiologists did not have access to the patients' medical records. The radiologists varied with respect to the number of years practicing pediatric radiology (median, 8 years; range, 3–36 years).

Radiographs were selected from children who presented to the ED at Boston Children's Hospital with concern of pneumonia. We selected radiographs with a spectrum of respiratory disease processes encountered in a pediatric population. The final radiographs included 50 radiographs with a final reading in the medical record without suspicion for pneumonia and 50 radiographs with suspicion of pneumonia. In the latter group, 25 radiographs had a final reading suggestive of an alveolar infiltrate, and 25 radiographs had a final reading suggestive of an interstitial infiltrate. Ten duplicate radiographs were included.

Radiograph Interpretation

The radiologists interpreted both anterior‐posterior and lateral views for each subject. Digital Imaging and Communications in Medicine images were downloaded from a registry at Boston Children's Hospital, and were copied to DVDs that were provided to each radiologist. Standardized radiographic imaging software (eFilm Lite; Merge Healthcare, Chicago, Illinois) was used by each radiologist.

Each radiologist completed a study questionnaire for each radiograph (see Supporting Information, Appendix 1, in the online version of this article). The questionnaire utilized radiographic descriptors of primary endpoint pneumonia described by the WHO to standardize the radiographic diagnosis of pneumonia.[20, 21] No additional training was provided to the radiologists. The main outcome of interest was the presence or absence of an infiltrate. Among radiographs in which an infiltrate was identified, radiologists selected whether there was an alveolar infiltrate, interstitial infiltrate, or both. Alveolar infiltrate and interstitial infiltrate are defined on the study questionnaire (Appendix 1). A radiograph classified as having either an alveolar infiltrate or interstitial infiltrate (not atelectasis) was considered to have any infiltrate. Additional findings including air bronchograms, hilar adenopathy, pleural effusion, and location of abnormalities were also recorded.

Statistical Analysis

Inter‐rater reliability was assessed using the kappa statistic to determine the overall agreement among the 6 radiologists for each outcome (eg, presence or absence of alveolar infiltrate). The kappa statistic for more than 2 raters utilizes an analysis of variance approach.[22] To calculate 95% confidence intervals (CI) for kappa statistics with more than 2 raters, we employed a bootstrapping method with 1000 replications of samples equal in size to the study sample. Intra‐rater reliability was evaluated by examining the agreement within each radiologist upon review of 10 duplicate radiographs. We used the following benchmarks to classify the strength of agreement: poor (<0.0), slight (0–0.20), fair (0.21–0.40), moderate (0.41–0.60), substantial (0.61–0.80), almost perfect (0.81–1.0).[23] Negative kappa values represent agreement less than would be predicted by chance alone.[24, 25] To calculate the kappa, a value must be recorded in 3 of 4 of the following categories: negative to positive, positive to negative, concordant negative, and concordant positive reporting of pneumonia. If raters did not fulfill 3 categories, the kappa could not be calculated.
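The analysis described above can be illustrated with a minimal Python sketch. It is not the authors' code: Fleiss' kappa is swapped in as a common multi‐rater kappa in place of the analysis of variance estimator cited in the text, the bootstrap is assumed to resample radiographs with replacement and to use percentile limits, and all function names are hypothetical.

```python
import random

def fleiss_kappa(ratings):
    """Fleiss' kappa; ratings[i] holds one label per rater for radiograph i.

    Stand-in for the ANOVA-based multi-rater kappa cited in the text.
    Returns None when every rating falls in a single category (undefined).
    """
    n_subjects = len(ratings)
    n_raters = len(ratings[0])
    categories = sorted({label for row in ratings for label in row})
    # counts[i][j] = number of raters who put radiograph i in category j
    counts = [[row.count(c) for c in categories] for row in ratings]
    # Observed agreement, averaged over radiographs
    p_bar = sum(
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ) / n_subjects
    # Chance agreement from the pooled category proportions
    total = n_subjects * n_raters
    p_e = sum((sum(row[j] for row in counts) / total) ** 2
              for j in range(len(categories)))
    if 1.0 - p_e < 1e-12:
        return None
    return (p_bar - p_e) / (1.0 - p_e)

def bootstrap_ci(ratings, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI, resampling radiographs (an assumption)."""
    rng = random.Random(seed)
    n = len(ratings)
    estimates = []
    for _ in range(n_boot):
        sample = [ratings[rng.randrange(n)] for _ in range(n)]
        kappa = fleiss_kappa(sample)
        if kappa is not None:  # skip degenerate resamples
            estimates.append(kappa)
    estimates.sort()
    lower = estimates[int((alpha / 2) * len(estimates))]
    upper = estimates[int((1 - alpha / 2) * len(estimates)) - 1]
    return lower, upper

def strength_of_agreement(kappa):
    """Benchmarks quoted in the text (reference 23)."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"
```

For example, `strength_of_agreement(0.32)` returns `"fair"` and `strength_of_agreement(0.53)` returns `"moderate"`, matching the labels applied to the air bronchogram results.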

The intra‐rater concordance for identification of an alveolar infiltrate was calculated for each radiologist by comparing their reporting of alveolar infiltrate with and without clinical history for each of the 100 radiographs. Radiographs that an individual rater identified as no alveolar infiltrate when read without clinical history but subsequently identified as alveolar infiltrate with clinical history were categorized as negative to positive reporting of pneumonia with clinical history. Those that were identified as alveolar infiltrate but subsequently identified as no alveolar infiltrate were categorized as positive to negative reporting of pneumonia with clinical history. Those radiographs in which there was no change in identification of alveolar infiltrate with clinical information were categorized as concordant reporting of pneumonia.
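The categorization of each radiologist's paired readings can be sketched as follows. This is an illustrative example only, assuming each reading is recorded as a boolean (alveolar infiltrate present or absent); the function names are hypothetical and do not come from the study.

```python
from collections import Counter

def classify_change(without_history, with_history):
    """Label one radiograph's pair of readings by a single rater."""
    if without_history == with_history:
        return "concordant positive" if with_history else "concordant negative"
    return "negative to positive" if with_history else "positive to negative"

def concordance_table(reads_without, reads_with):
    """Count the four categories across one rater's paired readings."""
    if len(reads_without) != len(reads_with):
        raise ValueError("both readings must cover the same radiographs")
    return Counter(
        classify_change(before, after)
        for before, after in zip(reads_without, reads_with)
    )
```

The number of discordant interpretations for a rater is then the sum of the "negative to positive" and "positive to negative" counts.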

The study was approved by the institutional review boards at both children's hospitals.

RESULTS

Patient Sample

The radiographs were from patients ranging in age from 1 week to 19 years (median, 3.5 years; interquartile range, 1.6–6.0 years). Fifty (50%) patients were male.

Inter‐rater Reliability

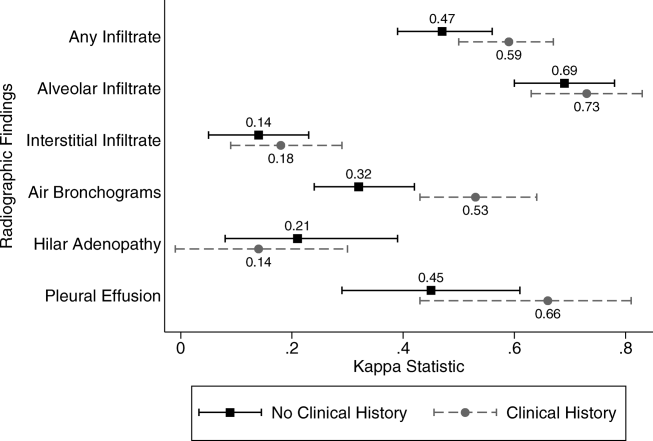

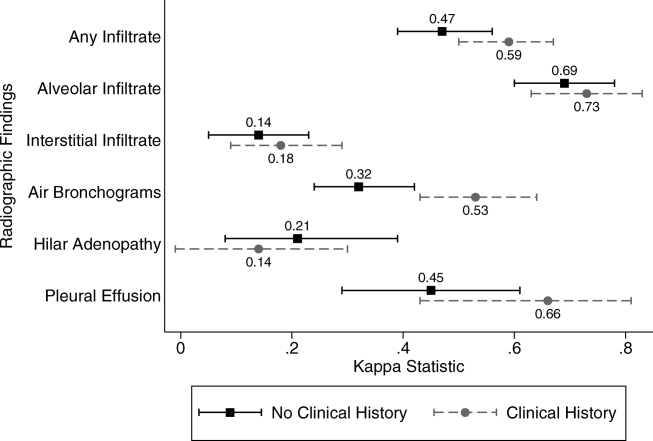

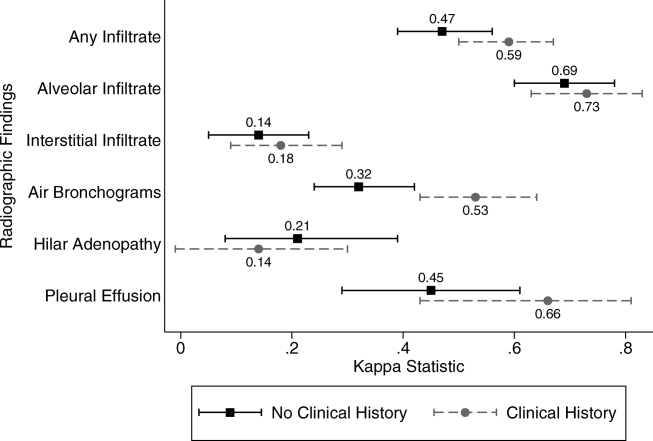

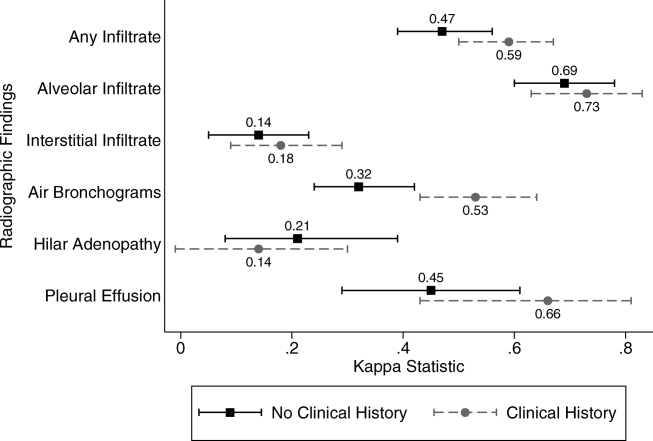

The kappa coefficients of inter‐rater reliability between the radiologists across the 6 clinical measures of interest with and without access to clinical history are plotted in Figure 1. Reliability improved from fair (k = 0.32, 95% CI: 0.24 to 0.42) to moderate (k = 0.53, 95% CI: 0.43 to 0.64) for identification of air bronchograms with the addition of clinical history. Although there was an increase in kappa values for identification of any infiltrate, alveolar infiltrate, interstitial infiltrate, and pleural effusion, and a decrease in the kappa value for identification of hilar adenopathy with the addition of clinical information, there was substantial overlap of the 95% CIs, suggesting that inclusion of clinical history did not result in a statistically significant change in the reliability of these findings.

Intra‐rater Reliability

The estimates of intra‐rater reliability for the interpretation of the 10 duplicate images with and without clinical history are shown in Table 1. The intra‐rater reliability in the identification of alveolar infiltrate remained substantial to almost perfect for each rater with and without access to clinical history. Rater 1 had a decrease in intra‐rater reliability from almost perfect (k = 1.0, 95% CI: 1.0 to 1.0) to fair (k = 0.21, 95% CI: −0.43 to 0.85) in the identification of interstitial infiltrate with the addition of clinical history. This rater also had a decrease in agreement from almost perfect (k = 1.0, 95% CI: 1.0 to 1.0) to fair (k = 0.4, 95% CI: −0.16 to 0.96) in the identification of any infiltrate.

| Finding and rater | Phase 1, no clinical history: kappa | Phase 1: 95% confidence interval | Phase 2, access to clinical history: kappa | Phase 2: 95% confidence interval |
|---|---|---|---|---|
| Any infiltrate | | | | |
| Rater 1 | 1.00 | 1.00 to 1.00 | 0.40 | −0.16 to 0.96 |
| Rater 2 | 0.60 | 0.10 to 1.00 | 0.58 | 0.07 to 1.00 |
| Rater 3 | 0.80 | 0.44 to 1.00 | 0.80 | 0.44 to 1.00 |
| Rater 4 | 1.00 | 1.00 to 1.00 | 0.78 | 0.39 to 1.00 |
| Rater 5 | N/Aᵃ | | −0.11 | −0.36 to 0.14 |
| Rater 6 | 1.00 | 1.00 to 1.00 | 1.00 | 1.00 to 1.00 |
| Alveolar infiltrate | | | | |
| Rater 1 | 1.00 | 1.00 to 1.00 | 1.00 | 1.00 to 1.00 |
| Rater 2 | 1.00 | 1.00 to 1.00 | 1.00 | 1.00 to 1.00 |
| Rater 3 | 1.00 | 1.00 to 1.00 | 1.00 | 1.00 to 1.00 |
| Rater 4 | 1.00 | 1.00 to 1.00 | 0.78 | 0.39 to 1.00 |
| Rater 5 | 0.78 | 0.39 to 1.00 | 1.00 | 1.00 to 1.00 |
| Rater 6 | 0.74 | 0.27 to 1.00 | 0.78 | 0.39 to 1.00 |
| Interstitial infiltrate | | | | |
| Rater 1 | 1.00 | 1.00 to 1.00 | 0.21 | −0.43 to 0.85 |
| Rater 2 | 0.21 | −0.43 to 0.85 | −0.11 | −0.36 to 0.14 |
| Rater 3 | 0.74 | 0.27 to 1.00 | 0.78 | 0.39 to 1.00 |
| Rater 4 | N/A | | N/A | |
| Rater 5 | 0.58 | 0.07 to 1.00 | 0.52 | 0.05 to 1.00 |
| Rater 6 | 0.62 | 0.5 to 1.00 | N/Aᵃ | |
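For readers who want to see how a per‐rater kappa on 10 duplicate radiographs behaves, the following is a minimal sketch using Cohen's kappa for two binary readings of the same films. It is an assumption that this form of kappa matches the study's calculation, and the function name is hypothetical. The sketch returns `None` when chance agreement equals 1 (every reading falls in one category), which is one way a kappa can be incalculable; it is not necessarily the exact rule behind the N/A entries in the table.

```python
def cohen_kappa(first_reads, second_reads):
    """Cohen's kappa for two binary readings of the same radiographs.

    Returns None when expected (chance) agreement is 1, in which case
    kappa is undefined.
    """
    if len(first_reads) != len(second_reads) or not first_reads:
        raise ValueError("readings must be non-empty and of equal length")
    n = len(first_reads)
    # Observed proportion of films read the same way both times
    p_observed = sum(a == b for a, b in zip(first_reads, second_reads)) / n
    # Proportion read as positive on each occasion
    p_first = sum(bool(a) for a in first_reads) / n
    p_second = sum(bool(b) for b in second_reads) / n
    # Agreement expected by chance from the marginal proportions
    p_expected = p_first * p_second + (1 - p_first) * (1 - p_second)
    if 1.0 - p_expected < 1e-12:
        return None
    return (p_observed - p_expected) / (1.0 - p_expected)
```

With only 10 duplicate films, a single changed reading moves the estimate considerably, which is consistent with the wide confidence intervals reported above.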

Intra‐rater Concordance

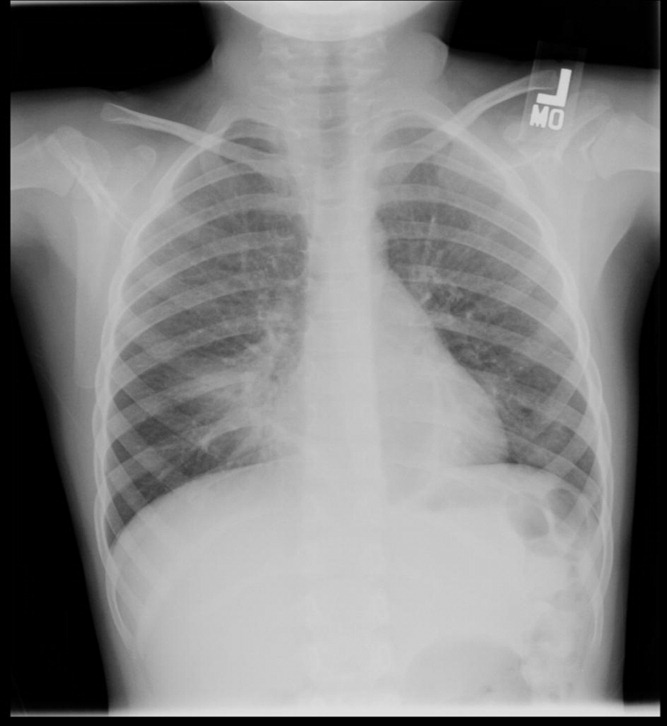

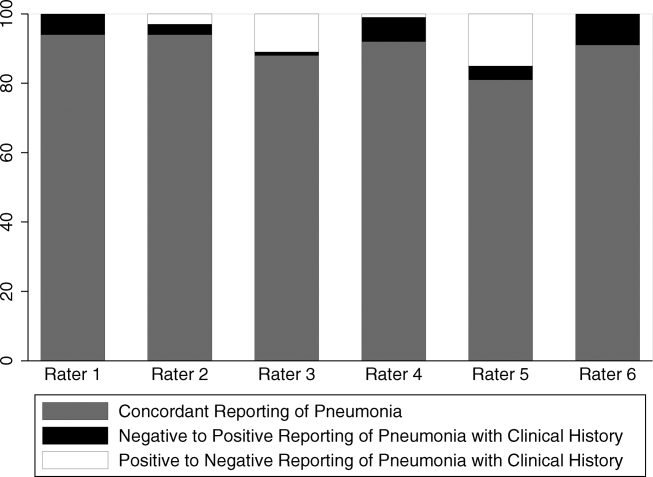

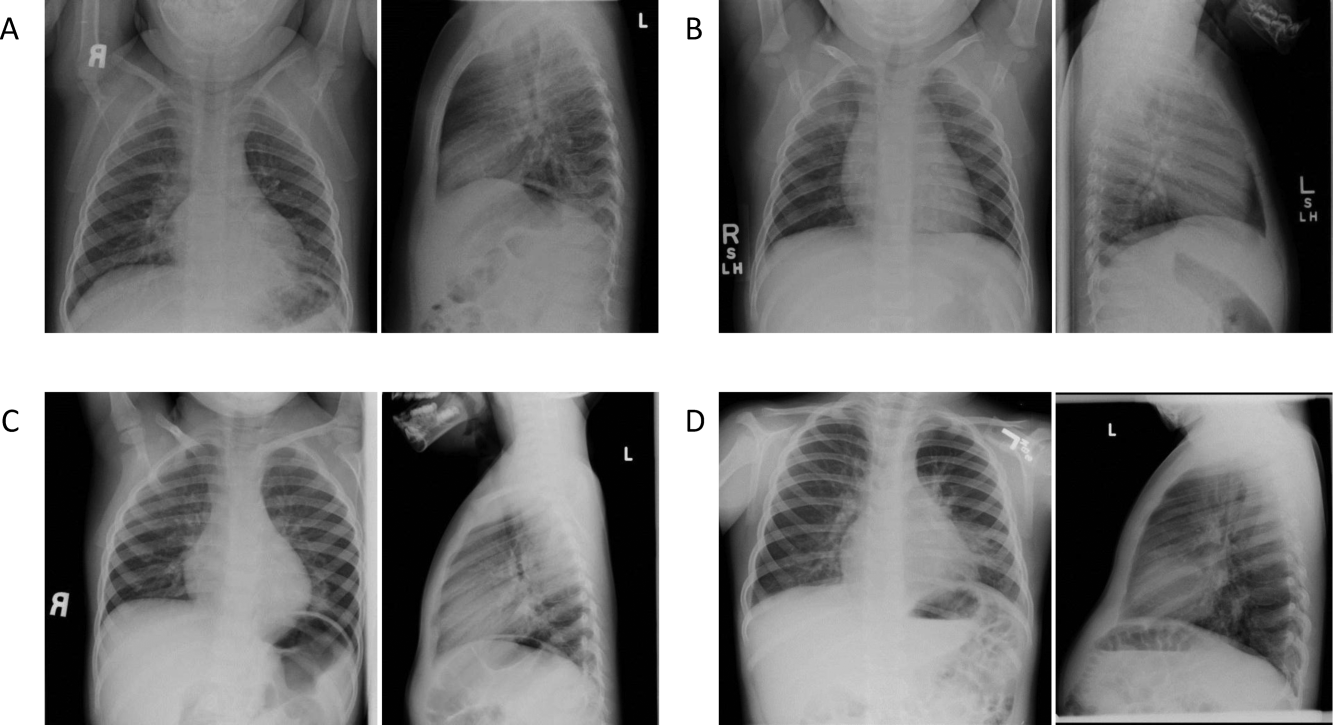

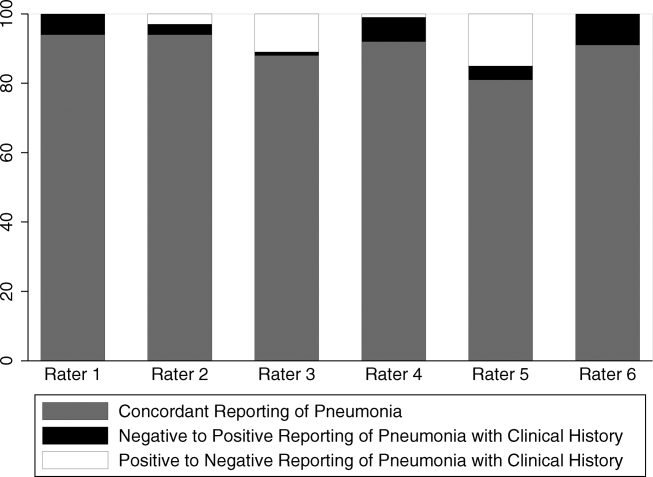

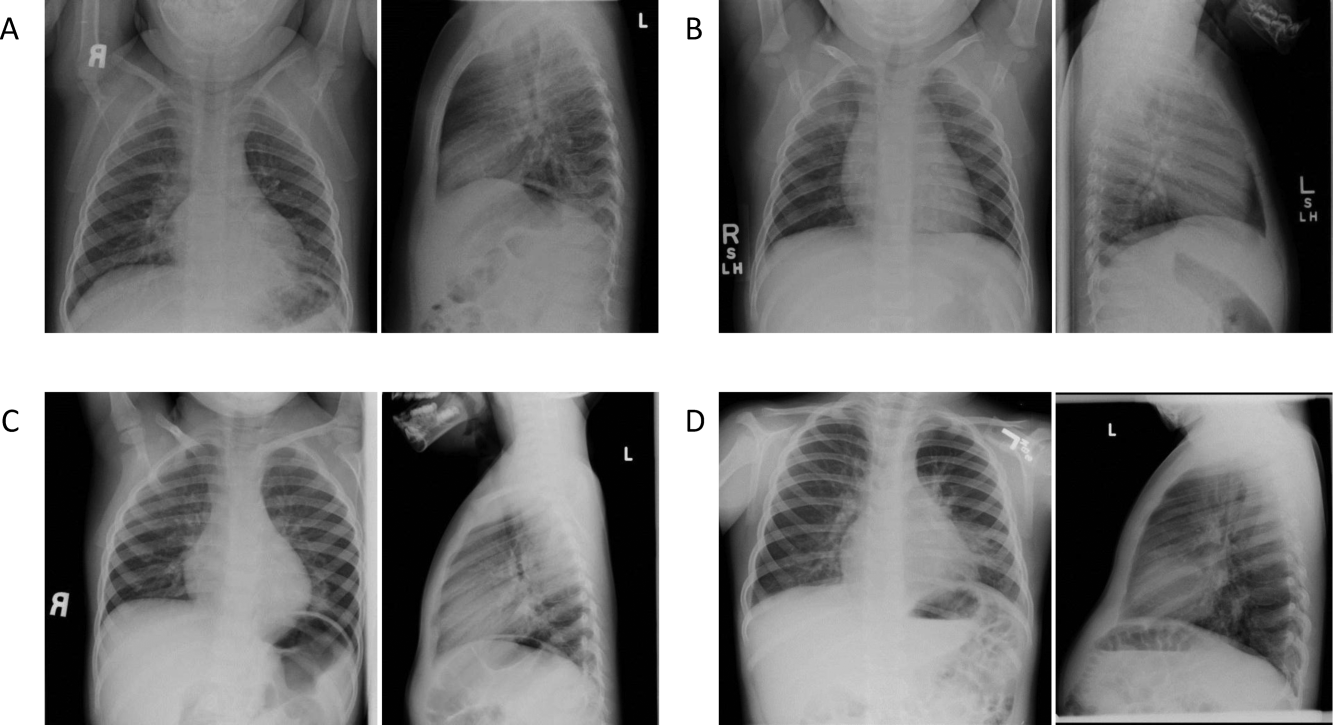

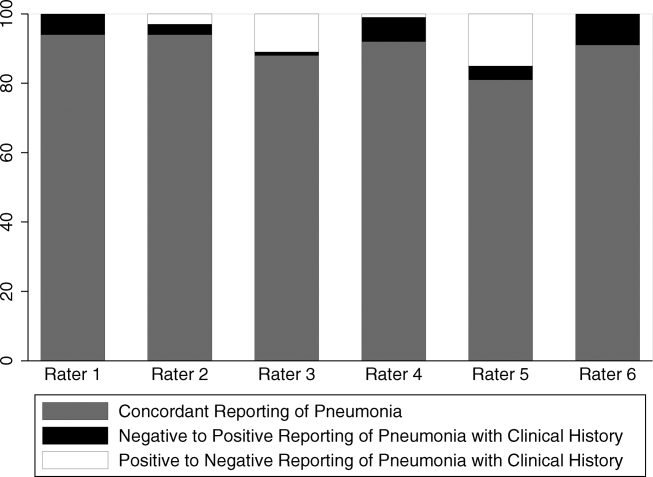

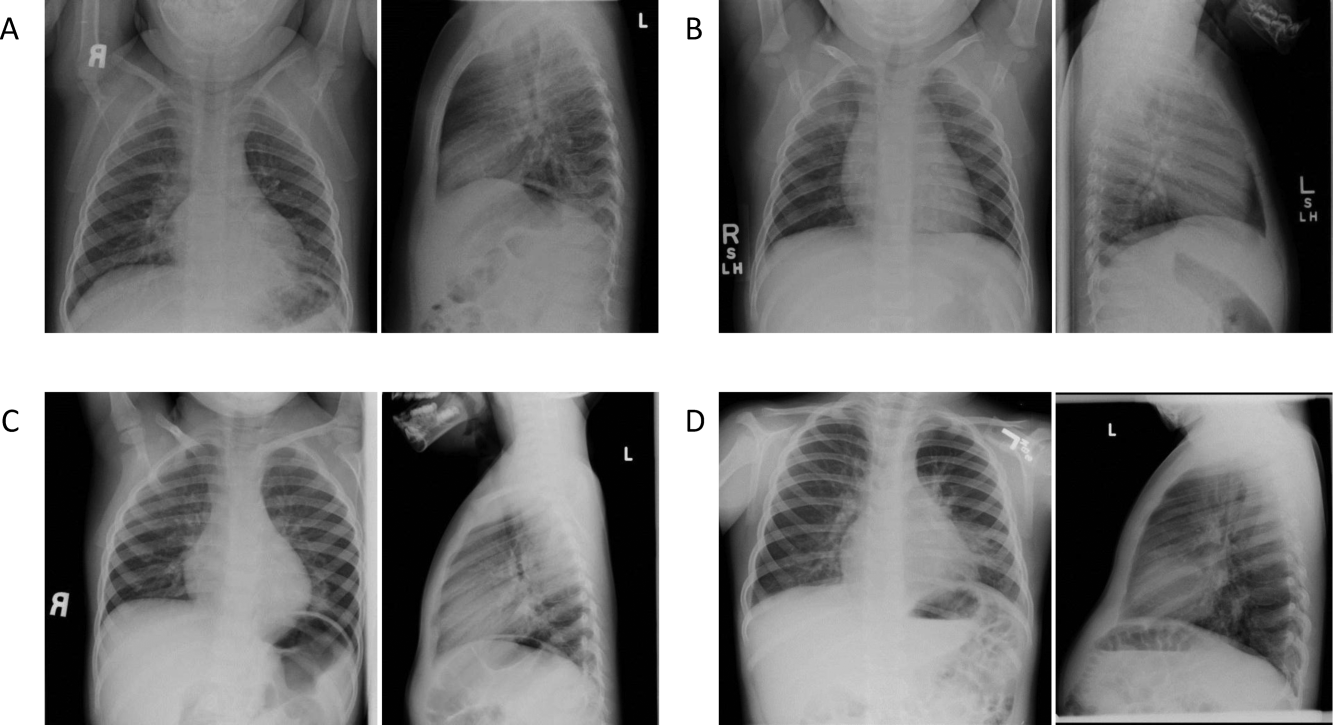

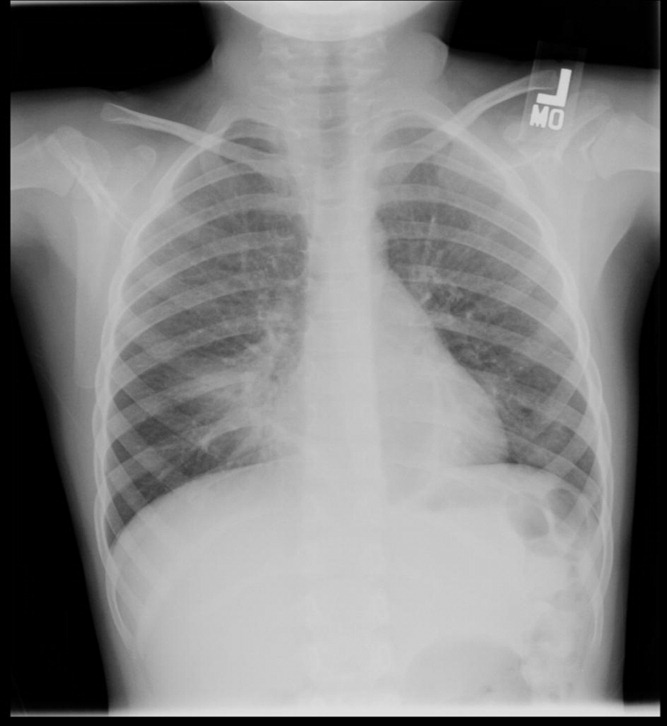

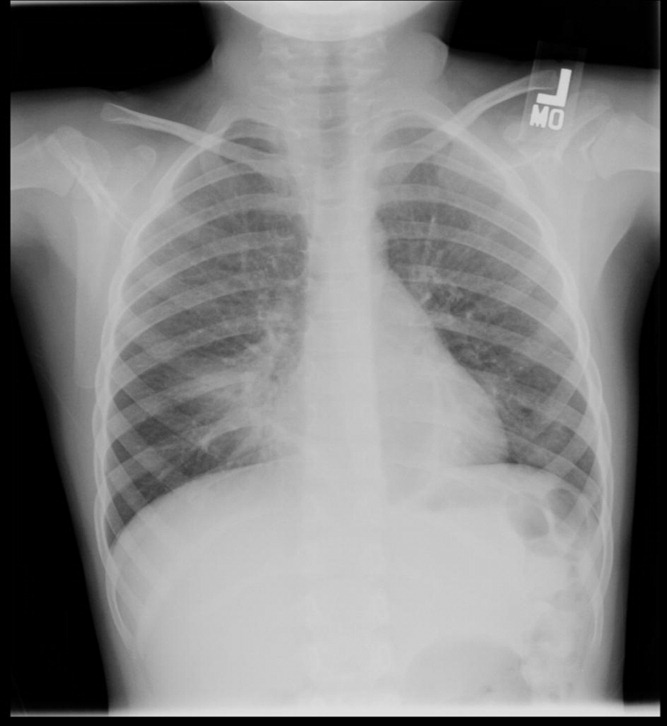

The intra‐rater concordance of the radiologists for the identification of alveolar infiltrate during the interpretation of the 100 chest radiographs with and without access to clinical history is shown in Figure 2. The availability of clinical information impacted physicians differently in the evaluation of alveolar infiltrates. Raters 1, 4, and 6 appeared more likely to identify an alveolar infiltrate with access to the clinical information, whereas raters 3 and 5 appeared less likely to identify an alveolar infiltrate. Of the 100 films that were interpreted with and without clinical information, the mean number of discordant interpretations per rater was 10, with values ranging from 6 to 19 for the individual raters. Radiographs in which more than 3 raters changed their interpretation regarding the presence of an alveolar infiltrate are shown in Figure 3. For Figure 3D, 4 radiologists changed their interpretation from no alveolar infiltrate to alveolar infiltrate, and 1 radiologist changed from alveolar infiltrate to no alveolar infiltrate with the addition of clinical history.

Comment

We investigated the impact of the availability of clinical information on the reliability of chest radiographic interpretation in the diagnosis of pneumonia. There was improved inter‐rater reliability in the identification of air bronchograms with the addition of clinical information; however, clinical history did not have a substantial impact on the inter‐rater reliability of other findings. The addition of clinical information did not alter the inter‐rater reliability in the identification of alveolar infiltrate. Clinical history affected individual raters differently in their interpretation of alveolar infiltrate, with 3 raters more likely to identify an alveolar infiltrate and 2 raters less likely to identify an alveolar infiltrate.

Most studies addressing the impact of clinical history on radiographic interpretation evaluated accuracy. In many of these studies, accuracy was defined as the raters' agreement with the final interpretation of each film as documented in the medical record or their agreement with the interpretation of the radiologists selecting the cases.[1, 2, 3, 5, 6, 7] Given the known inter‐rater variability in radiographic interpretation,[10, 11, 12, 13, 14, 15] accuracy of a radiologist's interpretation cannot be appropriately assessed through agreement with their peers. Because a true measure of accuracy in the radiographic diagnosis of pneumonia can only be determined through invasive testing, such as lung biopsy, reliability serves as a more appropriate measure of performance. Inclusion of clinical information in chest radiograph interpretation has been shown to improve reliability in the radiographic diagnosis of a broad range of conditions.[15]

The primary outcome in this study was the identification of an infiltrate. Previous studies have noted consistent identification of the radiographic features that are most suggestive of bacterial pneumonia, such as alveolar infiltrate, and less consistent identification of other radiographic findings, including interstitial infiltrate.[18, 26, 27] Among the radiologists in this study, the addition of clinical information did not have a meaningful impact on the reliability of either of these findings, as there was substantial inter‐rater agreement for the identification of alveolar infiltrate and only slight agreement for the identification of interstitial infiltrate, both with and without clinical history. Additionally, intra‐rater reliability for the identification of alveolar infiltrate remained substantial to almost perfect for all 6 raters with the addition of clinical information.

Clinical information impacted the raters differently in their pattern of alveolar infiltrate identification, suggesting that radiologists may differ in their approach to incorporating clinical history in the interpretation of chest radiographs. The inclusion of clinical information may impact a radiologist's perception, leading to improved identification of abnormalities; however, it may also guide their decision making about the relevance of previously identified abnormalities.[28] Some radiologists may use clinical information to support or suggest possible radiographic findings, whereas others may use the information to challenge potential findings. This study did not address the manner in which the individual raters utilized the clinical history. There were also several radiographs in which the clinical information resulted in a change in the identification of an alveolar infiltrate by 3 or more raters, with as many as 5 of 6 raters changing their interpretation for 1 particular radiograph. These changes in identification of an infiltrate suggest that unidentified aspects of a history may be likely to influence a rater's interpretation of a radiograph. Nevertheless, these changes did not result in improved reliability and it is not possible to determine if these changes resulted in improved accuracy in interpretation.

This study had several limitations. First, radiographs were purposefully selected to encompass a broad spectrum of radiographic findings. Thus, the prevalence of pneumonia and other abnormal findings was artificially higher than typically observed among a cohort of children for whom pneumonia is considered. Second, the radiologists recruited for this study all practice in an academic children's hospital setting. These factors may limit the generalizability of our findings. However, we would expect these results to be generalizable to pediatric radiologists from other academic institutions. Third, this study does not meet the criteria of a balanced study design as defined by Loy and Irwig.[19] A study was characterized as balanced if half of the radiographs were read with and half without clinical information in each of the 2 reading sessions. The proposed benefit of such a design is to control for possible changes in ability or reporting practices of the raters that may have occurred between study periods. The use of a standardized reporting tool likely minimized changes in reporting practices. Also, it is unlikely that the ability or reporting practices of an experienced radiologist would change over the study period. Fourth, the radiologists interpreted the films outside of their standard workflow and utilized a standardized reporting tool that focused on the presence or absence of pneumonia indicators. These factors may have increased the radiologists' suspicion for pneumonia even in the absence of clinical information. This may have biased the results toward finding no difference in the identification of pneumonia with the addition of detailed clinical history. 
Thus, the inclusion of clinical information in radiograph interpretation in clinical practice may have greater impact on the identification of these pneumonia indicators than was found in this study.[29] Finally, reliability does not imply accuracy, and it is unknown if changes in the identification of pneumonia indicators led to more accurate interpretation with respect to the clinical or pathologic diagnosis of pneumonia.

In conclusion, we observed high intra‐ and inter‐rater reliability among radiologists in the identification of an alveolar infiltrate, the radiographic finding most suggestive of bacterial pneumonia.[16, 17, 18, 30] The addition of clinical information did not have a substantial impact on the reliability of its identification.

1. Tentative diagnoses facilitate the detection of diverse lesions in chest radiographs. Invest Radiol. 1986;21(7):532–539.

2. Influence of clinical history upon detection of nodules and other lesions. Invest Radiol. 1988;23(1):48–55.

3. Influence of clinical history on perception of abnormalities in pediatric radiographs. Acad Radiol. 1994;1(3):217–223.

4. Impact of clinical history on film interpretation. Yonsei Med J. 1992;33(2):168–172.

5. The effect of clinical history on chest radiograph interpretations in a PACS environment. Invest Radiol. 1990;25(6):670–674.

6. Does knowledge of the clinical history affect the accuracy of chest radiograph interpretation? AJR Am J Roentgenol. 1990;154(4):709–712.

7. Detection of lung cancer on the chest radiograph: impact of previous films, clinical information, double reading, and dual reading. J Clin Epidemiol. 2001;54(11):1146–1150.

8. The effect of clinical bias on the interpretation of myelography and spinal computed tomography. Radiology. 1982;145(1):85–89.

9. A suggestion: look at the images first, before you read the history. Radiology. 2002;223(1):9–10.

10. Interobserver reliability of the chest radiograph in community‐acquired pneumonia. PORT Investigators. Chest. 1996;110(2):343–350.

11. Inter‐ and intra‐observer variability in the assessment of atelectasis and consolidation in neonatal chest radiographs. Pediatr Radiol. 1999;29(6):459–462.

12. Chest radiographs in the emergency department: is the radiologist really necessary? Postgrad Med J. 2003;79(930):214–217.

13. Inter‐observer variation in the interpretation of chest radiographs for pneumonia in community‐acquired lower respiratory tract infections. Clin Radiol. 2004;59(8):743–752.

14. Disagreement in the interpretation of chest radiographs among specialists and clinical outcomes of patients hospitalized with suspected pneumonia. Eur J Intern Med. 2006;17(1):43–47.

15. An assessment of inter‐observer agreement and accuracy when reporting plain radiographs. Clin Radiol. 1997;52(3):235–238.

16. Evaluation of the World Health Organization criteria for chest radiographs for pneumonia diagnosis in children. Eur J Pediatr. 2011;171(2):369–374.

17. Standardized interpretation of paediatric chest radiographs for the diagnosis of pneumonia in epidemiological studies. Bull World Health Organ. 2005;83(5):353–359.

18. Variability in the interpretation of chest radiographs for the diagnosis of pneumonia in children. J Hosp Med. 2012;7(4):294–298.

19. Accuracy of diagnostic tests read with and without clinical information: a systematic review. JAMA. 2004;292(13):1602–1609.

20. Standardization of interpretation of chest radiographs for the diagnosis of pneumonia in children. In: World Health Organization: Pneumonia Vaccine Trial Investigators' Group. Geneva: Department of Vaccine and Biologics; 2001.

21. Effectiveness of heptavalent pneumococcal conjugate vaccine in children younger than 5 years of age for prevention of pneumonia: updated analysis using World Health Organization standardized interpretation of chest radiographs. Pediatr Infect Dis J. 2006;25(9):779–781.

22. A one‐way components of variance model for categorical data. Biometrics. 1977;33:671–679.

23. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159–174.

24. Kappa statistic. CMAJ. 2005;173(1):16.

25. Clinical biostatistics. LIV. The biostatistics of concordance. Clin Pharmacol Ther. 1981;29(1):111–123.

26. Practice guidelines for the management of community‐acquired pneumonia in adults. Infectious Diseases Society of America. Clin Infect Dis. 2000;31(2):347–382.

27. Guidelines for the management of adults with community‐acquired pneumonia. Diagnosis, assessment of severity, antimicrobial therapy, and prevention. Am J Respir Crit Care Med. 2001;163(7):1730–1754.

28. Commentary does clinical history affect perception? Acad Radiol. 2006;13(3):402–403.

29. The effect of clinical history on chest radiograph interpretations in a PACS environment. Invest Radiol. 1991;26(5):512–514.

30. Comparison of radiological findings and microbial aetiology of childhood pneumonia. Acta Paediatr. 1993;82(4):360–363.

The inclusion of clinical information in diagnostic testing may influence the interpretation of the clinical findings. Historical and clinical findings may focus the reader's attention to the relevant details, thereby improving the accuracy of the interpretation. However, such information may cause the reader to have preconceived notions about the results, biasing the overall interpretation.

The impact of clinical information on the interpretation of radiographic studies remains an issue of debate. Previous studies have found that clinical information improves the accuracy of radiographic interpretation for a broad range of diagnoses,[1, 2, 3, 4] whereas others do not show improvement.[5, 6, 7] Additionally, clinical information may serve as a distraction that leads to more false‐positive interpretations.[8] For this reason, many radiologists prefer to review radiographs without knowledge of the clinical scenario prompting the study to avoid focusing on the expected findings and potentially missing other important abnormalities.[9]

The chest radiograph (CXR) is the most commonly used diagnostic imaging modality. Nevertheless, poor agreement exists among radiologists in the interpretation of chest radiographs for the diagnosis of pneumonia in both adults and children.[10, 11, 12, 13, 14, 15] Recent studies have found a high degree of agreement among pediatric radiologists with implementation of the World Health Organization (WHO) criteria for standardized CXR interpretation for diagnosis of bacterial pneumonia in children.[16, 17, 18] In these studies, participants were blinded to the clinical presentation. Data investigating the impact of clinical history on CXR interpretation in the pediatric population are limited.[19]

We conducted this prospective case‐based study to evaluate the impact of clinical information on the reliability of radiographic diagnosis of pneumonia among children presenting to a pediatric emergency department (ED) with clinical suspicion of pneumonia.

METHODS

Study Subjects

Six board‐certified radiologists at 2 academic children's hospitals (Children's Hospital of Philadelphia [n = 3] and Boston Children's Hospital [n = 3]) interpreted the same 110 chest radiographs (100 original and 10 duplicates) on 2 separate occasions. Clinical information was withheld during the first interpretation. The inter‐ and inter‐rater reliability for the interpretation of these 110 radiographs without clinical information have been previously reported.[18] After a period of 6 months, the radiologists reviewed the radiographs with access to clinical information provided by the physician ordering the CXR. This clinical information included age, sex, clinical indication for obtaining the radiograph, relevant history, and physical examination findings. The radiologists did not have access to the patients' medical records. The radiologists varied with respect to the number of years practicing pediatric radiology (median, 8 years; range, 336 years).

Radiographs were selected from children who presented to the ED at Boston Children's Hospital with concern of pneumonia. We selected radiographs with a spectrum of respiratory disease processes encountered in a pediatric population. The final radiographs included 50 radiographs with a final reading in the medical record without suspicion for pneumonia and 50 radiographs with suspicion of pneumonia. In the latter group, 25 radiographs had a final reading suggestive of an alveolar infiltrate, and 25 radiographs had a final reading suggestive of an interstitial infiltrate. Ten duplicate radiographs were included.

Radiograph Interpretation

The radiologists interpreted both anterior‐posterior and lateral views for each subject. Digital Imaging and Communications in Medicine images were downloaded from a registry at Boston Children's Hospital, and were copied to DVDs that were provided to each radiologist. Standardized radiographic imaging software (eFilm Lite; Merge Healthcare, Chicago, Illinois) was used by each radiologist.

Each radiologist completed a study questionnaire for each radiograph (see Supporting Information, Appendix 1, in the online version of this article). The questionnaire utilized radiographic descriptors of primary endpoint pneumonia described by the WHO to standardize the radiographic diagnosis of pneumonia.[20, 21] No additional training was provided to the radiologists. The main outcome of interest was the presence or absence of an infiltrate. Among radiographs in which an infiltrate was identified, radiologists selected whether there was an alveolar infiltrate, interstitial infiltrate, or both. Alveolar infiltrate and interstitial infiltrate are defined on the study questionnaire (Appendix 1). A radiograph classified as having either an alveolar infiltrate or interstitial infiltrate (not atelectasis) was considered to have any infiltrate. Additional findings including air bronchograms, hilar adenopathy, pleural effusion, and location of abnormalities were also recorded.

Statistical Analysis

Inter‐rater reliability was assessed using the kappa statistic to determine the overall agreement among the 6 radiologists for each outcome (eg, presence or absence of alveolar infiltrate). The kappa statistic for more than 2 raters utilizes an analysis of variance approach.[22] To calculate 95% confidence intervals (CI) for kappa statistics with more than 2 raters, we employed a bootstrapping method with 1000 replications of samples equal in size to the study sample. Intra‐rater reliability was evaluated by examining the agreement within each radiologist upon review of 10 duplicate radiographs. We used the following benchmarks to classify the strength of agreement: poor (<0.0), slight (00.20), fair (0.210.40), moderate (0.410.60), substantial (0.610.80), almost perfect (0.811.0).[23] Negative kappa values represent agreement less than would be predicted by chance alone.[24, 25] To calculate the kappa, a value must be recorded in 3 of 4 of the following categories: negative to positive, positive to negative, concordant negative, and concordant positive reporting of pneumonia. If raters did not fulfill 3 categories, the kappa could not be calculated.

The inter‐rater concordance for identification of an alveolar infiltrate was calculated for each radiologist by comparing their reporting of alveolar infiltrate with and without clinical history for each of the 100 radiographs. Radiographs that were identified by an individual rater as no alveolar infiltrate when read without clinical history, but those subsequently identified as alveolar infiltrate with clinical history were categorized as negative to positive reporting of pneumonia with clinical history. Those that were identified as alveolar infiltrate but subsequently identified as no alveolar infiltrate were categorized as positive to negative reporting of pneumonia with clinical history. Those radiographs in which there was no change in identification of alveolar infiltrate with clinical information were categorized as concordant reporting of pneumonia.

The study was approved by the institutional review boards at both children's hospitals.

RESULTS

Patient Sample

The radiographs were from patients ranging in age from 1 week to 19 years (median, 3.5 years; interquartile range, 1.66.0 years). Fifty (50%) patients were male.

Inter‐rater Reliability

The kappa coefficients of inter‐rater reliability between the radiologists across the 6 clinical measures of interest with and without access to clinical history are plotted in Figure 1. Reliability improved from fair (k = 0.32, 95% CI: 0.24 to 0.42) to moderate (k = 0.53, 95% CI: 0.43 to 0.64) for identification of air bronchograms with the addition of clinical history. Although there was an increase in kappa values for identification of any infiltrate, alveolar infiltrate, interstitial infiltrate, and pleural effusion, and a decrease in the kappa value for identification of hilar adenopathy with the addition of clinical information, there was substantial overlap of the 95% CIs, suggesting that inclusion of clinical history did not result in a statistically significant change in the reliability of these findings.

Intra‐rater Reliability

The estimates of inter‐rater reliability for the interpretation of the 10 duplicate images with and without clinical history are shown in Table 1. The inter‐rater reliability in the identification of alveolar infiltrate remained substantial to almost perfect for each rater with and without access to clinical history. Rater 1 had a decrease in inter‐rater reliability from almost perfect (k = 1.0, 95% CI: 1.0 to 1.0) to fair (k = 0.21, 95% CI: 0.43 to 0.85) in the identification of interstitial infiltrate with the addition of clinical history. This rater also had a decrease in agreement from almost perfect (k = 1.0, 95% CI: 1.0 to 1.0) to fair (k = 0.4, 95% CI: 0.16 to 0.96) in the identification of any infiltrate.

| Finding and rater | Phase 1: No Clinical History, Kappa | Phase 1: No Clinical History, 95% Confidence Interval | Phase 2: Access to Clinical History, Kappa | Phase 2: Access to Clinical History, 95% Confidence Interval |
|---|---|---|---|---|
| Any infiltrate | | | | |
| Rater 1 | 1.00 | 1.00 to 1.00 | 0.40 | −0.16 to 0.96 |
| Rater 2 | 0.60 | 0.10 to 1.00 | 0.58 | 0.07 to 1.00 |
| Rater 3 | 0.80 | 0.44 to 1.00 | 0.80 | 0.44 to 1.00 |
| Rater 4 | 1.00 | 1.00 to 1.00 | 0.78 | 0.39 to 1.00 |
| Rater 5 | N/Aᵃ | | −0.11 | −0.36 to 0.14 |
| Rater 6 | 1.00 | 1.00 to 1.00 | 1.00 | 1.00 to 1.00 |
| Alveolar infiltrate | | | | |
| Rater 1 | 1.00 | 1.00 to 1.00 | 1.00 | 1.00 to 1.00 |
| Rater 2 | 1.00 | 1.00 to 1.00 | 1.00 | 1.00 to 1.00 |
| Rater 3 | 1.00 | 1.00 to 1.00 | 1.00 | 1.00 to 1.00 |
| Rater 4 | 1.00 | 1.00 to 1.00 | 0.78 | 0.39 to 1.00 |
| Rater 5 | 0.78 | 0.39 to 1.00 | 1.00 | 1.00 to 1.00 |
| Rater 6 | 0.74 | 0.27 to 1.00 | 0.78 | 0.39 to 1.00 |
| Interstitial infiltrate | | | | |
| Rater 1 | 1.00 | 1.00 to 1.00 | 0.21 | −0.43 to 0.85 |
| Rater 2 | 0.21 | −0.43 to 0.85 | −0.11 | −0.36 to 0.14 |
| Rater 3 | 0.74 | 0.27 to 1.00 | 0.78 | 0.39 to 1.00 |
| Rater 4 | N/A | | N/A | |
| Rater 5 | 0.58 | 0.07 to 1.00 | 0.52 | 0.05 to 1.00 |
| Rater 6 | 0.62 | 0.5 to 1.00 | N/Aᵃ | |

Intra‐rater Concordance

The intra‐rater concordance of the radiologists for the identification of alveolar infiltrate during the interpretation of the 100 chest radiographs with and without access to clinical history is shown in Figure 2. The availability of clinical information impacted physicians differently in the evaluation of alveolar infiltrates. Raters 1, 4, and 6 appeared more likely to identify an alveolar infiltrate with access to the clinical information, whereas raters 3 and 5 appeared less likely to identify an alveolar infiltrate. Of the 100 films that were interpreted with and without clinical information, the mean number of discordant interpretations per rater was 10, with values ranging from 6 to 19 for the individual raters. Radiographs in which more than 3 raters changed their interpretation regarding the presence of an alveolar infiltrate are shown in Figure 3. For Figure 3D, 4 radiologists changed their interpretation from no alveolar infiltrate to alveolar infiltrate, and 1 radiologist changed from alveolar infiltrate to no alveolar infiltrate with the addition of clinical history.

Comment

We investigated the impact of the availability of clinical information on the reliability of chest radiographic interpretation in the diagnosis of pneumonia. There was improved inter‐rater reliability in the identification of air bronchograms with the addition of clinical information; however, clinical history did not have a substantial impact on the inter‐rater reliability of other findings. The addition of clinical information did not alter the inter‐rater reliability in the identification of alveolar infiltrate. Clinical history affected individual raters differently in their interpretation of alveolar infiltrate, with 3 raters more likely to identify an alveolar infiltrate and 2 raters less likely to identify an alveolar infiltrate.

Most studies addressing the impact of clinical history on radiographic interpretation evaluated accuracy. In many of these studies, accuracy was defined as the raters' agreement with the final interpretation of each film as documented in the medical record or their agreement with the interpretation of the radiologists selecting the cases.[1, 2, 3, 5, 6, 7] Given the known inter‐rater variability in radiographic interpretation,[10, 11, 12, 13, 14, 15] accuracy of a radiologist's interpretation cannot be appropriately assessed through agreement with their peers. Because a true measure of accuracy in the radiographic diagnosis of pneumonia can only be determined through invasive testing, such as lung biopsy, reliability serves as a more appropriate measure of performance. Inclusion of clinical information in chest radiograph interpretation has been shown to improve reliability in the radiographic diagnosis of a broad range of conditions.[15]

The primary outcome in this study was the identification of an infiltrate. Previous studies have noted consistent identification of the radiographic features that are most suggestive of bacterial pneumonia, such as alveolar infiltrate, and less consistent identification of other radiographic findings, including interstitial infiltrate.[18, 26, 27] Among the radiologists in this study, the addition of clinical information did not have a meaningful impact on the reliability of either of these findings, as there was substantial inter‐rater agreement for the identification of alveolar infiltrate and only slight agreement for the identification of interstitial infiltrate, both with and without clinical history. Additionally, intra‐rater reliability for the identification of alveolar infiltrate remained substantial to almost perfect for all 6 raters with the addition of clinical information.

Clinical information impacted the raters differently in their pattern of alveolar infiltrate identification, suggesting that radiologists may differ in their approach to incorporating clinical history in the interpretation of chest radiographs. The inclusion of clinical information may impact a radiologist's perception, leading to improved identification of abnormalities; however, it may also guide their decision making about the relevance of previously identified abnormalities.[28] Some radiologists may use clinical information to support or suggest possible radiographic findings, whereas others may use the information to challenge potential findings. This study did not address the manner in which the individual raters utilized the clinical history. There were also several radiographs in which the clinical information resulted in a change in the identification of an alveolar infiltrate by 3 or more raters, with as many as 5 of 6 raters changing their interpretation for 1 particular radiograph. These changes in identification of an infiltrate suggest that unidentified aspects of a history may influence a rater's interpretation of a radiograph. Nevertheless, these changes did not result in improved reliability, and it is not possible to determine whether they resulted in improved accuracy of interpretation.

This study had several limitations. First, radiographs were purposefully selected to encompass a broad spectrum of radiographic findings. Thus, the prevalence of pneumonia and other abnormal findings was artificially higher than typically observed among a cohort of children for whom pneumonia is considered. Second, the radiologists recruited for this study all practice in an academic children's hospital setting. These factors may limit the generalizability of our findings. However, we would expect these results to be generalizable to pediatric radiologists from other academic institutions. Third, this study does not meet the criteria of a balanced study design as defined by Loy and Irwig.[19] A study was characterized as balanced if half of the radiographs were read with and half without clinical information in each of the 2 reading sessions. The proposed benefit of such a design is to control for possible changes in ability or reporting practices of the raters that may have occurred between study periods. The use of a standardized reporting tool likely minimized changes in reporting practices. Also, it is unlikely that the ability or reporting practices of an experienced radiologist would change over the study period. Fourth, the radiologists interpreted the films outside of their standard workflow and utilized a standardized reporting tool that focused on the presence or absence of pneumonia indicators. These factors may have increased the radiologists' suspicion for pneumonia even in the absence of clinical information. This may have biased the results toward finding no difference in the identification of pneumonia with the addition of detailed clinical history. 
Thus, the inclusion of clinical information in radiograph interpretation in clinical practice may have greater impact on the identification of these pneumonia indicators than was found in this study.[29] Finally, reliability does not imply accuracy, and it is unknown if changes in the identification of pneumonia indicators led to more accurate interpretation with respect to the clinical or pathologic diagnosis of pneumonia.

In conclusion, we observed high intra‐ and inter‐rater reliability among radiologists in the identification of an alveolar infiltrate, the radiographic finding most suggestive of bacterial pneumonia.[16, 17, 18, 30] The addition of clinical information did not have a substantial impact on the reliability of its identification.

METHODS

Study Subjects

Six board‐certified radiologists at 2 academic children's hospitals (Children's Hospital of Philadelphia [n = 3] and Boston Children's Hospital [n = 3]) interpreted the same 110 chest radiographs (100 original and 10 duplicates) on 2 separate occasions. Clinical information was withheld during the first interpretation. The intra‐ and inter‐rater reliability for the interpretation of these 110 radiographs without clinical information have been previously reported.[18] After a period of 6 months, the radiologists reviewed the radiographs with access to clinical information provided by the physician ordering the CXR. This clinical information included age, sex, clinical indication for obtaining the radiograph, relevant history, and physical examination findings. The radiologists did not have access to the patients' medical records. The radiologists varied with respect to the number of years practicing pediatric radiology (median, 8 years; range, 3–36 years).

Radiographs were selected from children who presented to the ED at Boston Children's Hospital with concern of pneumonia. We selected radiographs with a spectrum of respiratory disease processes encountered in a pediatric population. The final radiographs included 50 radiographs with a final reading in the medical record without suspicion for pneumonia and 50 radiographs with suspicion of pneumonia. In the latter group, 25 radiographs had a final reading suggestive of an alveolar infiltrate, and 25 radiographs had a final reading suggestive of an interstitial infiltrate. Ten duplicate radiographs were included.

Radiograph Interpretation

The radiologists interpreted both anterior‐posterior and lateral views for each subject. Digital Imaging and Communications in Medicine images were downloaded from a registry at Boston Children's Hospital, and were copied to DVDs that were provided to each radiologist. Standardized radiographic imaging software (eFilm Lite; Merge Healthcare, Chicago, Illinois) was used by each radiologist.

Each radiologist completed a study questionnaire for each radiograph (see Supporting Information, Appendix 1, in the online version of this article). The questionnaire utilized radiographic descriptors of primary endpoint pneumonia described by the WHO to standardize the radiographic diagnosis of pneumonia.[20, 21] No additional training was provided to the radiologists. The main outcome of interest was the presence or absence of an infiltrate. Among radiographs in which an infiltrate was identified, radiologists selected whether there was an alveolar infiltrate, interstitial infiltrate, or both. Alveolar infiltrate and interstitial infiltrate are defined on the study questionnaire (Appendix 1). A radiograph classified as having either an alveolar infiltrate or interstitial infiltrate (not atelectasis) was considered to have any infiltrate. Additional findings including air bronchograms, hilar adenopathy, pleural effusion, and location of abnormalities were also recorded.

Statistical Analysis

Inter‐rater reliability was assessed using the kappa statistic to determine the overall agreement among the 6 radiologists for each outcome (eg, presence or absence of alveolar infiltrate). The kappa statistic for more than 2 raters utilizes an analysis of variance approach.[22] To calculate 95% confidence intervals (CI) for kappa statistics with more than 2 raters, we employed a bootstrapping method with 1000 replications of samples equal in size to the study sample. Intra‐rater reliability was evaluated by examining the agreement within each radiologist upon review of 10 duplicate radiographs. We used the following benchmarks to classify the strength of agreement: poor (<0.0), slight (0–0.20), fair (0.21–0.40), moderate (0.41–0.60), substantial (0.61–0.80), almost perfect (0.81–1.0).[23] Negative kappa values represent agreement less than would be predicted by chance alone.[24, 25] To calculate the kappa, a value must be recorded in 3 of 4 of the following categories: negative to positive, positive to negative, concordant negative, and concordant positive reporting of pneumonia. If raters did not fulfill 3 categories, the kappa could not be calculated.
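To make the multi-rater kappa and the bootstrap interval concrete, the sketch below computes an agreement coefficient for several raters and a percentile bootstrap 95% CI from 1000 resamples of the radiographs. It is an illustration under stated assumptions, not the study's code: it substitutes Fleiss' kappa for the analysis of variance approach cited above, uses the percentile method because the text does not say which bootstrap interval was used, and runs on made-up ratings (`toy`). The function names are hypothetical.

```python
import random


def fleiss_kappa(ratings):
    """Fleiss' kappa for binary calls (assumed stand-in for the cited ANOVA method).

    ratings: one row per radiograph, each row holding one 0/1 call per rater.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    pair_total = n_raters * (n_raters - 1)
    p_bar = 0.0
    positives = 0
    for row in ratings:
        pos = sum(row)
        neg = n_raters - pos
        # Share of rater pairs that agree on this radiograph.
        p_bar += (pos * (pos - 1) + neg * (neg - 1)) / pair_total
        positives += pos
    p_bar /= n_items
    # Chance agreement from the pooled proportion of positive calls.
    p_pos = positives / (n_items * n_raters)
    p_exp = p_pos ** 2 + (1 - p_pos) ** 2
    if p_exp == 1.0:
        return float("nan")  # every call identical: kappa is undefined
    return (p_bar - p_exp) / (1 - p_exp)


def bootstrap_ci(ratings, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI; each replicate resamples radiographs with replacement."""
    rng = random.Random(seed)
    n = len(ratings)
    stats = []
    for _ in range(n_boot):
        sample = [ratings[rng.randrange(n)] for _ in range(n)]
        k = fleiss_kappa(sample)
        if k == k:  # drop undefined (NaN) replicates
            stats.append(k)
    stats.sort()
    lower = stats[int((alpha / 2) * len(stats))]
    upper = stats[int((1 - alpha / 2) * len(stats)) - 1]
    return lower, upper


# Hypothetical data: 20 radiographs read by 6 raters (1 = finding present).
toy = [[1, 1, 1, 1, 1, 1], [0, 0, 0, 0, 0, 0],
       [1, 1, 1, 0, 1, 1], [0, 0, 1, 0, 0, 0]] * 5
kappa = fleiss_kappa(toy)
low, high = bootstrap_ci(toy)
print(round(kappa, 3))  # 0.667
print(low, high)
```

Resampling whole radiographs, rather than individual calls, keeps each film's set of 6 readings together, which matches the description of replications "equal in size to the study sample".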

The intra‐rater concordance for identification of an alveolar infiltrate was calculated for each radiologist by comparing their reporting of alveolar infiltrate with and without clinical history for each of the 100 radiographs. Radiographs that were identified by an individual rater as no alveolar infiltrate when read without clinical history but subsequently identified as alveolar infiltrate with clinical history were categorized as negative to positive reporting of pneumonia with clinical history. Those that were identified as alveolar infiltrate but subsequently identified as no alveolar infiltrate were categorized as positive to negative reporting of pneumonia with clinical history. Those radiographs in which there was no change in identification of alveolar infiltrate with clinical information were categorized as concordant reporting of pneumonia.
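The categorization described above can be sketched for a single rater as follows. This is an illustrative example on invented readings, not the study's code; the function names are hypothetical, and Cohen's kappa is assumed as the two-reading agreement statistic because the text does not name the variant used. The sketch returns `None` only when kappa is mathematically undefined, that is, when every pair of readings falls in the same concordant category.

```python
from collections import Counter


def categorize(without_history, with_history):
    """Count the change categories for one rater's paired 0/1 calls."""
    names = {(0, 1): "negative to positive",
             (1, 0): "positive to negative",
             (0, 0): "concordant negative",
             (1, 1): "concordant positive"}
    return Counter(names[pair] for pair in zip(without_history, with_history))


def cohen_kappa(counts):
    """Cohen's kappa from the four category counts, or None if undefined."""
    a = counts["concordant positive"]
    b = counts["positive to negative"]
    c = counts["negative to positive"]
    d = counts["concordant negative"]
    n = a + b + c + d
    p_obs = (a + d) / n
    # Chance agreement from each reading's marginal positive and negative rates.
    p_exp = ((a + b) * (a + c) + (c + d) * (b + d)) / n ** 2
    if p_exp == 1.0:
        return None
    return (p_obs - p_exp) / (1 - p_exp)


# Hypothetical readings of 10 radiographs by one rater (1 = alveolar infiltrate).
first_read = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]   # without clinical history
second_read = [1, 1, 0, 0, 0, 0, 1, 0, 1, 0]  # with clinical history

counts = categorize(first_read, second_read)
discordant = counts["negative to positive"] + counts["positive to negative"]
print(dict(counts))
print(discordant)                     # 2
print(round(cohen_kappa(counts), 2))  # 0.58
```

The discordant count corresponds to the per-rater number of changed interpretations, and the direction of the changes (negative to positive versus positive to negative) shows whether clinical history made a given rater more or less likely to report an infiltrate.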

The study was approved by the institutional review boards at both children's hospitals.

RESULTS

Patient Sample

The radiographs were from patients ranging in age from 1 week to 19 years (median, 3.5 years; interquartile range, 1.6–6.0 years). Fifty (50%) patients were male.

- , , , et al. Tentative diagnoses facilitate the detection of diverse lesions in chest radiographs. Invest Radiol. 1986;21(7):532–539.

- , , , . Influence of clinical history upon detection of nodules and other lesions. Invest Radiol. 1988;23(1):48–55.

- , , , . Influence of clinical history on perception of abnormalities in pediatric radiographs. Acad Radiol. 1994;1(3):217–223.

- , , , et al. Impact of clinical history on film interpretation. Yonsei Med J. 1992;33(2):168–172.

- , , , et al. The effect of clinical history on chest radiograph interpretations in a PACS environment. Invest Radiol. 1990;25(6):670–674.

- , , , et al. Does knowledge of the clinical history affect the accuracy of chest radiograph interpretation? AJR Am J Roentgenol. 1990;154(4):709–712.

- , , , . Detection of lung cancer on the chest radiograph: impact of previous films, clinical information, double reading, and dual reading. J Clin Epidemiol. 2001;54(11):1146–1150.

- , , , . The effect of clinical bias on the interpretation of myelography and spinal computed tomography. Radiology. 1982;145(1):85–89.

- . A suggestion: look at the images first, before you read the history. Radiology. 2002;223(1):9–10.

- , , , et al. Interobserver reliability of the chest radiograph in community‐acquired pneumonia. PORT Investigators. Chest. 1996;110(2):343–350.

- , , , , . Inter‐ and intra‐observer variability in the assessment of atelectasis and consolidation in neonatal chest radiographs. Pediatr Radiol. 1999;29(6):459–462.

- , , , , . Chest radiographs in the emergency department: is the radiologist really necessary? Postgrad Med J. 2003;79(930):214–217.

- , , , . Inter‐observer variation in the interpretation of chest radiographs for pneumonia in community‐acquired lower respiratory tract infections. Clin Radiol. 2004;59(8):743–752.

- , , , , , . Disagreement in the interpretation of chest radiographs among specialists and clinical outcomes of patients hospitalized with suspected pneumonia. Eur J Intern Med. 2006;17(1):43–47.

- , , . An assessment of inter‐observer agreement and accuracy when reporting plain radiographs. Clin Radiol. 1997;52(3):235–238.

- , , , et al. Evaluation of the World Health Organization criteria for chest radiographs for pneumonia diagnosis in children. Eur J Pediatr. 2011;171(2):369–374.

- , , , et al. Standardized interpretation of paediatric chest radiographs for the diagnosis of pneumonia in epidemiological studies. Bull World Health Organ. 2005;83(5):353–359.

- , , , et al. Variability in the interpretation of chest radiographs for the diagnosis of pneumonia in children. J Hosp Med. 2012;7(4):294–298.

- , . Accuracy of diagnostic tests read with and without clinical information: a systematic review. JAMA. 2004;292(13):1602–1609.

- Standardization of interpretation of chest radiographs for the diagnosis of pneumonia in children. In: World Health Organization: Pneumonia Vaccine Trial Investigators' Group. Geneva: Department of Vaccine and Biologics; 2001.

- , , , et al. Effectiveness of heptavalent pneumococcal conjugate vaccine in children younger than 5 years of age for prevention of pneumonia: updated analysis using World Health Organization standardized interpretation of chest radiographs. Pediatr Infect Dis J. 2006;25(9):779–781.

- , . A one‐way components of variance model for categorical data. Biometrics. 1977;33:671–679.

- , . The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159–174.

- , . Kappa statistic. CMAJ. 2005;173(1):16.

- , . Clinical biostatistics. LIV. The biostatistics of concordance. Clin Pharmacol Ther. 1981;29(1):111–123.

- , , , , , . Practice guidelines for the management of community‐acquired pneumonia in adults. Infectious Diseases Society of America. Clin Infect Dis. 2000;31(2):347–382.

- , , , et al. Guidelines for the management of adults with community‐acquired pneumonia. Diagnosis, assessment of severity, antimicrobial therapy, and prevention. Am J Respir Crit Care Med. 2001;163(7):1730–1754.

- , . Commentary does clinical history affect perception? Acad Radiol. 2006;13(3):402–403.

- , . The effect of clinical history on chest radiograph interpretations in a PACS environment. Invest Radiol. 1991;26(5):512–514.

- , , , . Comparison of radiological findings and microbial aetiology of childhood pneumonia. Acta Paediatr. 1993;82(4):360–363.

- , , , et al. Tentative diagnoses facilitate the detection of diverse lesions in chest radiographs. Invest Radiol. 1986;21(7):532–539.

- , , , . Influence of clinical history upon detection of nodules and other lesions. Invest Radiol. 1988;23(1):48–55.

- , , , . Influence of clinical history on perception of abnormalities in pediatric radiographs. Acad Radiol. 1994;1(3):217–223.

- , , , et al. Impact of clinical history on film interpretation. Yonsei Med J. 1992;33(2):168–172.

- , , , et al. The effect of clinical history on chest radiograph interpretations in a PACS environment. Invest Radiol. 1990;25(6):670–674.

- , , , et al. Does knowledge of the clinical history affect the accuracy of chest radiograph interpretation?AJR Am J Roentgenol. 1990;154(4):709–712.

- , , , . Detection of lung cancer on the chest radiograph: impact of previous films, clinical information, double reading, and dual reading. J Clin Epidemiol. 2001;54(11):1146–1150.

- , , , . The effect of clinical bias on the interpretation of myelography and spinal computed tomography. Radiology. 1982;145(1):85–89.

- . A suggestion: look at the images first, before you read the history. Radiology. 2002;223(1):9–10.

- , , , et al. Interobserver reliability of the chest radiograph in community‐acquired pneumonia. PORT Investigators. Chest. 1996;110(2):343–350.

- , , , , . Inter‐ and intra‐observer variability in the assessment of atelectasis and consolidation in neonatal chest radiographs. Pediatr Radiol. 1999;29(6):459–462.

- , , , , . Chest radiographs in the emergency department: is the radiologist really necessary?Postgrad Med J. 2003;79(930):214–217.

- , , , . Inter‐observer variation in the interpretation of chest radiographs for pneumonia in community‐acquired lower respiratory tract infections. Clin Radiol. 2004;59(8):743–752.

- , , , , , . Disagreement in the interpretation of chest radiographs among specialists and clinical outcomes of patients hospitalized with suspected pneumonia. Eur J Intern Med. 2006;17(1):43–47.

- , , . An assessment of inter‐observer agreement and accuracy when reporting plain radiographs. Clin Radiol. 1997;52(3):235–238.

- , , , et al. Evaluation of the World Health Organization criteria for chest radiographs for pneumonia diagnosis in children. Eur J Pediatr. 2011;171(2):369–374.

- , , , et al. Standardized interpretation of paediatric chest radiographs for the diagnosis of pneumonia in epidemiological studies. Bull World Health Organ. 2005;83(5):353–359.

- , , , et al. Variability in the interpretation of chest radiographs for the diagnosis of pneumonia in children. J Hosp Med. 2012;7(4):294–298.

- , . Accuracy of diagnostic tests read with and without clinical information: a systematic review. JAMA. 2004;292(13):1602–1609.

- Standardization of interpretation of chest radiographs for the diagnosis of pneumonia in children. In: World Health Organization: Pneumonia Vaccine Trial Investigators' Group. Geneva: Department of Vaccine and Biologics; 2001.

- , , , et al. Effectiveness of heptavalent pneumococcal conjugate vaccine in children younger than 5 years of age for prevention of pneumonia: updated analysis using World Health Organization standardized interpretation of chest radiographs. Pediatr Infect Dis J. 2006;25(9):779–781.

- , . A one‐way components of variance model for categorical data. Biometrics. 1977;33:671–679.

- , . The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159–174.

- , . Kappa statistic. CMAJ. 2005;173(1):16.

- , . Clinical biostatistics. LIV. The biostatistics of concordance. Clin Pharmacol Ther. 1981;29(1):111–123.

- , , , , , . Practice guidelines for the management of community‐acquired pneumonia in adults. Infectious Diseases Society of America. Clin Infect Dis. 2000;31(2):347–382.

- , , , et al. Guidelines for the management of adults with community‐acquired pneumonia. Diagnosis, assessment of severity, antimicrobial therapy, and prevention. Am J Respir Crit Care Med. 2001;163(7):1730–1754.

© 2012 Society of Hospital Medicine

Reliability of CXR for Pneumonia

The chest radiograph (CXR) is the most commonly used diagnostic imaging modality in children, and is considered to be the gold standard for the diagnosis of pneumonia. As such, physicians in developed countries rely on chest radiography to establish the diagnosis of pneumonia.[1, 2, 3] However, there are limited data investigating the reliability of this test for the diagnosis of pneumonia in children.[2, 4, 5, 6]

Prior investigations have noted poor overall agreement by emergency medicine, infectious diseases, and pulmonary medicine physicians, and even radiologists, in their interpretation of chest radiographs for the diagnosis of pneumonia.[2, 5, 7, 8, 9, 10] The World Health Organization (WHO) developed criteria to standardize CXR interpretation for the diagnosis of pneumonia in children for use in epidemiologic studies.[11] These standardized definitions of pneumonia have been formally evaluated by the WHO[6] and utilized in epidemiologic studies of vaccine efficacy,[12] but the overall reliability of these radiographic criteria has not been studied outside of these forums.

We conducted this prospective case‐based study to evaluate the reliability of the radiographic diagnosis of pneumonia among children presenting to a pediatric emergency department with clinical suspicion of pneumonia. We were primarily interested in assessing the overall reliability in CXR interpretation for the diagnosis of pneumonia, and identifying which radiographic features of pneumonia were consistently identified by radiologists.

MATERIALS AND METHODS

Study Subjects

We evaluated the reliability of CXR interpretation with respect to the diagnosis of pneumonia among radiologists. Six board‐certified radiologists at 2 academic children's hospitals (Children's Hospital of Philadelphia, Philadelphia, PA [n = 3] and Children's Hospital, Boston, Boston, MA [n = 3]) interpreted the same 110 chest radiographs in a blinded fashion. The radiologists varied with respect to the number of years practicing pediatric radiology (median 8 years, range 3‐36 years). Clinical information such as age, gender, clinical indication for obtaining the radiograph, history, and physical examination findings was not provided. Aside from the study form, which stated the WHO classification scheme for radiographic pneumonia, no other information or training was provided to the radiologists as part of this study.

Radiographs were selected among a population of children presenting to the emergency department at Children's Hospital, Boston, who had a radiograph obtained for concern of pneumonia. From this cohort, we selected children who had radiographs which encompassed the spectrum of respiratory disease processes encountered in a pediatric population. The radiographs selected for review included 50 radiographs with a final reading in the medical record without suspicion for pneumonia, and 50 radiographs in which the diagnosis of pneumonia could not be excluded. In the latter group, 25 radiographs had a final reading suggestive of an alveolar infiltrate, and 25 radiographs had a final reading suggestive of an interstitial infiltrate. Ten duplicate radiographs were included to permit assessment of intra‐rater reliability.

Radiograph Interpretation

Radiologists at both sites interpreted the identical 110 radiographs (both anteroposterior [AP] and lateral views for each subject). Digital Imaging and Communications in Medicine (DICOM) images were downloaded from a registry at Children's Hospital, Boston, and were copied to DVDs which were provided to each radiologist. Standardized radiographic imaging software (eFilm Lite [Mississauga, Canada]) was used by each radiologist to view and interpret the radiographs.

Each radiologist completed a study questionnaire for each radiograph interpreted (see Supporting Appendix A in the online version of this article). The questionnaire utilized radiographic descriptors of primary end‐point pneumonia described by the WHO, which were developed to standardize the radiographic diagnosis of pneumonia.[11, 12] The main outcome of interest was the presence or absence of an infiltrate. Among radiographs in which an infiltrate was identified, radiologists selected whether there was an alveolar infiltrate, interstitial infiltrate, or both. An alveolar infiltrate was defined as a dense or fluffy opacity that occupies a portion or whole of a lobe, or of the entire lung, that may or may not contain air bronchograms.[11, 12] An interstitial infiltrate was defined by a lacy pattern involving both lungs, featuring peribronchial thickening and multiple areas of atelectasis.[11, 12] It also included minor patchy infiltrates that were not of sufficient magnitude to constitute consolidation, and small areas of atelectasis that in children may be difficult to distinguish from consolidation. Among interstitial infiltrates, radiologists were asked to distinguish infiltrate from atelectasis. A radiograph classified as having either an alveolar infiltrate or interstitial infiltrate (not atelectasis) was considered to have any infiltrate. Additional findings including air bronchograms, hilar adenopathy, pleural effusion, and location of abnormalities were also recorded.
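The rule for deriving the "any infiltrate" outcome from the questionnaire answers can be written out as a minimal sketch. The `Reading` class and its field names are hypothetical, chosen only for illustration; the actual questionnaire layout is in the supporting appendix and is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One radiologist's answers for one radiograph (hypothetical field names)."""
    alveolar: bool = False          # alveolar infiltrate reported
    interstitial: bool = False      # interstitial pattern reported
    atelectasis_only: bool = False  # interstitial pattern judged to be atelectasis


def any_infiltrate(reading: Reading) -> bool:
    """Apply the stated rule: alveolar OR interstitial infiltrate (not atelectasis)."""
    interstitial_infiltrate = reading.interstitial and not reading.atelectasis_only
    return reading.alveolar or interstitial_infiltrate


if __name__ == "__main__":
    print(any_infiltrate(Reading(alveolar=True)))
    print(any_infiltrate(Reading(interstitial=True, atelectasis_only=True)))
```

Under this reading of the rule, an interstitial pattern attributed to atelectasis does not count toward "any infiltrate" unless an alveolar infiltrate is also present.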

Statistical Analysis

Inter‐rater reliability was assessed using the kappa statistic to determine the overall agreement between the 6 radiologists for each binary outcome (eg, presence or absence of alveolar infiltrate). To calculate 95% confidence intervals (CI) for kappa statistics with more than 2 raters, we employed a bootstrapping method with 1000 replications of samples equal in size to the study sample, using the kapci program as implemented by Stata software (version 10.1, StataCorp, College Station, TX). Also, intra‐rater reliability was evaluated by examining the agreement within each radiologist upon review of 10 duplicate radiographs that had been randomly inserted into the case‐mix. We used the benchmarks proposed by Landis and Koch to classify the strength of agreement measured by the kappa statistic, as follows: poor (<0.0); slight (0‐0.20); fair (0.21‐0.40); moderate (0.41‐0.60); substantial (0.61‐0.80); almost perfect (0.81‐1.0).[13]
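The analysis described above can be illustrated with a short Python sketch. It is not the Stata `kapci` code used in the study. It assumes a Fleiss-type multi-rater kappa and a simple percentile bootstrap interval, neither of which is specified in the text, and the function names are illustrative. Only the Landis and Koch cut-points are taken directly from the paragraph above.

```python
import random


def fleiss_kappa(ratings):
    """Multi-rater kappa for a binary outcome (Fleiss-type, an assumption).

    ratings: list of cases; each case is a list of 0/1 calls, one per rater.
    Returns None when every call is identical, because kappa is undefined.
    """
    n_cases = len(ratings)
    n_raters = len(ratings[0])
    counts = [(row.count(0), row.count(1)) for row in ratings]
    # Share of agreeing rater pairs within each case
    per_case = [
        (c0 * c0 + c1 * c1 - n_raters) / (n_raters * (n_raters - 1))
        for c0, c1 in counts
    ]
    p_obs = sum(per_case) / n_cases
    share_pos = sum(c1 for _, c1 in counts) / (n_cases * n_raters)
    p_exp = share_pos ** 2 + (1 - share_pos) ** 2  # agreement expected by chance
    if p_exp == 1.0:
        return None
    return (p_obs - p_exp) / (1 - p_exp)


def bootstrap_ci(ratings, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI: resample cases with replacement, same sample size."""
    rng = random.Random(seed)
    n = len(ratings)
    estimates = []
    for _ in range(n_boot):
        sample = [ratings[rng.randrange(n)] for _ in range(n)]
        kappa = fleiss_kappa(sample)
        if kappa is not None:  # skip degenerate resamples
            estimates.append(kappa)
    if not estimates:
        return None
    estimates.sort()
    lower = estimates[int((alpha / 2) * len(estimates))]
    upper = estimates[max(int((1 - alpha / 2) * len(estimates)) - 1, 0)]
    return lower, upper


def landis_koch(kappa):
    """Strength-of-agreement label using the benchmarks quoted in the text."""
    if kappa < 0.0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"
```

Resampling whole cases, rather than individual ratings, keeps each radiograph's 6 readings together, so the dependence among raters viewing the same image is preserved in every replicate.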

The study was approved by the institutional review boards at Children's Hospital, Boston and Children's Hospital of Philadelphia.

RESULTS

Patient Sample

The sample of 110 radiographs was obtained from 100 children presenting to the emergency department at Children's Hospital, Boston, with concern of pneumonia. These patients ranged in age from 1 week to 19 years (median, 3.5 years; interquartile range [IQR], 1.6‐6.0 years). Fifty (50%) of these patients were male. As stated above, the sample comprised 50 radiographs with a final reading in the medical record without suspicion for pneumonia, and 50 radiographs in which the diagnosis of pneumonia could not be excluded. The 10 duplicate radiographs encompassed a similar spectrum of findings.

Inter‐Rater Reliability

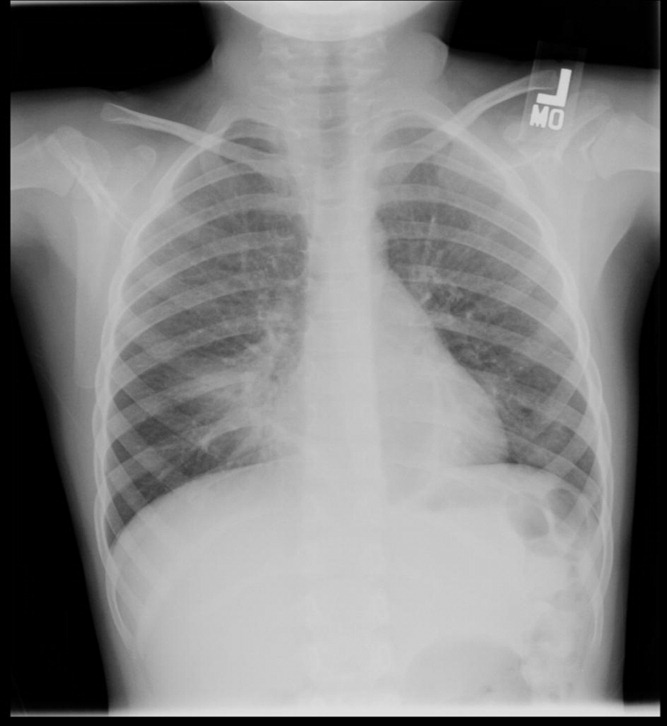

The kappa coefficients of inter‐rater reliability between the radiologists across the 6 clinical measures of interest are displayed in Table 1. As shown, the most reliable measure was that of alveolar infiltrate (Figure 1), which attained a substantial degree of agreement between the radiologists. Two other measures, any infiltrate and pleural effusion, attained moderate reliability, while bronchograms and hilar adenopathy were each classified as having fair reliability. However, interstitial infiltrate (Figure 2) was found to have the lowest kappa estimate, with a slight degree of reliability. When examining inter‐rater reliability among the radiologists separately from each institution, the pattern of results was similar.

| All Radiologists (n = 6) | Kappa | 95% Confidence Interval |
|---|---|---|
| Any infiltrate | 0.47 | 0.39, 0.56 |
| Alveolar infiltrate | 0.69 | 0.60, 0.78 |
| Interstitial infiltrate | 0.14 | 0.05, 0.23 |
| Air bronchograms | 0.32 | 0.24, 0.42 |
| Hilar adenopathy | 0.21 | 0.08, 0.39 |
| Pleural effusion | 0.45 | 0.29, 0.61 |

At least 4 of the 6 radiologists agreed on the presence or absence of an alveolar infiltrate for 95 of the 100 unique CXRs; all 6 radiologists agreed regarding the presence or absence of an alveolar infiltrate in 72 of the 100 unique CXRs. At least 4 of the 6 radiologists agreed on the presence or absence of any infiltrate and interstitial infiltrate 96% and 90% of the time, respectively. All 6 of the radiologists agreed on the presence or absence of any infiltrate and interstitial infiltrate 35% and 27% of the time, respectively.
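The agreement proportions reported above can be computed as in the sketch below. It assumes each radiograph's readings are stored as a list of 0/1 calls, one per radiologist; the function name is illustrative. For a binary outcome with 6 raters, "at least 4 of 6 agreed on presence or absence" fails only when the readers split 3 to 3.

```python
def agreement_share(ratings, min_agree):
    """Fraction of cases where at least `min_agree` raters made the same call."""
    hits = 0
    for row in ratings:
        positives = sum(row)
        # Size of the larger camp, whether it voted "present" or "absent"
        majority = max(positives, len(row) - positives)
        if majority >= min_agree:
            hits += 1
    return hits / len(ratings)


if __name__ == "__main__":
    # Toy data, not study data: 4 radiographs read by 6 raters
    toy = [
        [1, 1, 1, 1, 1, 1],
        [1, 1, 1, 1, 0, 0],
        [1, 1, 1, 0, 0, 0],
        [0, 0, 0, 0, 0, 0],
    ]
    print(agreement_share(toy, 4), agreement_share(toy, 6))
```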

Intra‐Rater Reliability

Estimates of intra‐rater reliability on the primary clinical outcomes (alveolar infiltrate, interstitial infiltrate, and any infiltrate) are found in Table 2. Across the 6 raters, the kappa estimates for alveolar infiltrate were all classified as substantial or almost perfect. The kappa estimates for interstitial infiltrate varied widely, ranging from fair to almost perfect, while for any infiltrate, reliability ranged from moderate to almost perfect.

| | Kappa | 95% Confidence Interval |
|---|---|---|
| Any infiltrate | | |
| Rater 1 | 1.00 | 1.00, 1.00 |
| Rater 2 | 0.60 | 0.10, 1.00 |
| Rater 3 | 0.80 | 0.44, 1.00 |
| Rater 4 | 1.00 | 1.00, 1.00 |
| Rater 5 | n/a* | |
| Rater 6 | 1.00 | 1.00, 1.00 |
| Alveolar infiltrate | | |
| Rater 1 | 1.00 | 1.00, 1.00 |
| Rater 2 | 1.00 | 1.00, 1.00 |
| Rater 3 | 1.00 | 1.00, 1.00 |
| Rater 4 | 1.00 | 1.00, 1.00 |
| Rater 5 | 0.78 | 0.39, 1.00 |
| Rater 6 | 0.74 | 0.27, 1.00 |
| Interstitial infiltrate | | |
| Rater 1 | 1.00 | 1.00, 1.00 |
| Rater 2 | 0.21 | −0.43, 0.85 |
| Rater 3 | 0.74 | 0.27, 1.00 |
| Rater 4 | n/a | |
| Rater 5 | 0.58 | 0.07, 1.00 |
| Rater 6 | 0.62 | 0.5, 1.00 |
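For intra-rater reliability, a two-reading kappa can be computed per radiologist from the first and second readings of the duplicate radiographs. The sketch below uses Cohen's kappa, which is an assumption; the text does not name the exact estimator. Kappa is undefined when chance agreement equals 1 (for example, a rater who made the same call on every duplicate at both readings), and the sketch returns `None` in that case. Whether this explains the n/a entries in the table is not stated in the text.

```python
def cohen_kappa(first, second):
    """Cohen's kappa for two sets of binary (0/1) readings of the same cases."""
    n = len(first)
    p_obs = sum(a == b for a, b in zip(first, second)) / n
    pos_first = sum(first) / n
    pos_second = sum(second) / n
    # Agreement expected by chance from each reading's marginal rates
    p_exp = pos_first * pos_second + (1 - pos_first) * (1 - pos_second)
    if p_exp == 1.0:
        return None  # undefined: no variation in either reading
    return (p_obs - p_exp) / (1 - p_exp)


if __name__ == "__main__":
    # Toy data, not study data: 10 duplicates, 2 discordant readings
    first_read = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
    second_read = [1, 1, 1, 1, 0, 0, 0, 0, 0, 1]
    print(cohen_kappa(first_read, second_read))
```

With only 10 duplicate radiographs per rater, a single discordant reading shifts the estimate substantially, which is consistent with the wide confidence intervals in the table.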

DISCUSSION

The chest radiograph serves as an integral component of the reference standard for the diagnosis of childhood pneumonia. Few prior studies have assessed the reliability of chest radiograph findings in children.[3, 5, 12, 14, 15] We found a high degree of agreement among radiologists for radiologic findings consistent with bacterial pneumonia when standardized interpretation criteria were applied. In this study, we identified radiographic features of pneumonia, such as alveolar infiltrate and pleural effusion, that were consistently identified by different radiologists reviewing the same radiograph and by the same radiologist reviewing the same radiograph. These data support the notion that radiographic features most suggestive of bacterial pneumonia are consistently identified by radiologists.[16, 17] There was less consistency in the identification of other radiographic findings, such as interstitial infiltrates, air bronchograms, and hilar lymphadenopathy.

Prior studies have found high levels of disagreement among radiologists in the interpretation of chest radiographs.[2, 3, 15, 18] Many of these prior studies emphasized variation in detection of radiographic findings that would not typically alter clinical management. We observed high intra‐rater and inter‐rater reliability among radiologists for the findings of alveolar infiltrate and pleural effusion. These are the radiographic findings most consistent with a bacterial etiologic agent for pneumonia.[19] Other studies have also found that the presence of an alveolar infiltrate is a reliable radiographic finding in children[18] and adults.[7, 9, 10] These findings support the use of the WHO definition of primary endpoint pneumonia for use in epidemiologic studies.[4, 6, 11]