Other Literature of Interest

1. Chimowitz MI, Lynn MJ, Howlett-Smith H, et al. Comparison of warfarin and aspirin for symptomatic intracranial arterial stenosis. N Engl J Med. 2005;352:1305-16.

This is the first prospective study comparing antithrombotic therapies for patients with atherosclerotic stenosis of major intracranial arteries. This multicenter, NINDS-sponsored, placebo-controlled, blinded study randomized 569 patients to aspirin (650 mg twice daily) or warfarin (initially 5 mg daily, titrated to achieve an INR of 2.0–3.0) and followed them for nearly 2 years. The study was terminated early over safety concerns about patients in the warfarin group. Baseline characteristics did not differ significantly between the 2 groups. Warfarin was not more effective than aspirin with respect to the primary endpoints of ischemic stroke, brain hemorrhage, or vascular death other than from stroke (as defined in the study protocol). However, rates of major cardiac events (myocardial infarction or sudden death) were significantly higher in the warfarin group, as were rates of major hemorrhage (defined as any intracranial or systemic hemorrhage requiring hospitalization, transfusion, or surgical intervention). The authors note the difficulty of maintaining the INR in the target range (achieved only 63.1% of the time during the maintenance period, an observation in line with other anticoagulation studies). In an accompanying editorial, Dr. Koroshetz of the stroke service at the Massachusetts General Hospital also observed that difficulties in achieving the therapeutic goal with warfarin could have affected the results. The authors also note that the dose of aspirin employed in this study is somewhat higher than in previous trials. Nevertheless, until other data emerge, this investigation’s results favor aspirin over warfarin for this high-risk condition.

2. Cornish PL, Knowles SR, Marchesano R, et al. Unintended medication discrepancies at the time of hospital admission. Arch Intern Med. 2005;165:424-9.

Of the various types of medical errors, medication errors are believed to be the most common. At the time of hospital admission, medication discrepancies may lead to unintended drug interactions, toxicity, or interruption of appropriate drug therapies. These investigators performed a prospective study to identify unintended medication discrepancies between the patient’s home medications and those ordered at the time of the patient’s admission and to evaluate the potential clinical significance of these discrepancies.

This study was conducted at a 1,000-bed tertiary care hospital in Canada on the general medicine teaching service. A member of the study team reviewed each medical record to ascertain the physician-recorded medication history, the nurse-recorded medication history, the admission medication orders, and demographic information. A comprehensive list of all of the patient’s prescription and nonprescription drugs was compiled by interviewing patients, families, and pharmacists, and by inspecting the bottles. A discrepancy was defined as any difference between this comprehensive list and the admission medication orders. Discrepancies were categorized as omission or addition of a medication, substitution of an agent within the same drug class, or a change in the dose, route, or frequency of administration of an agent. The medical team caring for the patient was then asked whether or not these discrepancies were intended. The team then reconciled any unintended discrepancies. These unintended discrepancies were further classified by 3 medical hospitalists according to their potential for harm into Class 1, 2, or 3, in increasing order of potential harm.

One hundred fifty-one patients were included in the analysis. A total of 140 errors occurred in 81 patients (54%). The overall error rate was 0.93 per patient. Of the errors, 46% consisted of omission of a regularly prescribed medication, 25% involved discrepant doses, 17.1% involved discrepant frequency, and 11.4% involved an incorrect drug. Breakdown of error severity resulted in designation of 61% as Class 1, 33% as Class 2, and 5.7% as Class 3. The interrater agreement was a kappa of 0.26. These discrepancies were not found to be associated with night or weekend admissions, high patient volume, or high numbers of medications.
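The headline figures in this summary follow directly from the reported counts. A minimal Python sketch of that arithmetic is shown here; the variable names are illustrative and do not come from the study.

```python
# Counts as reported in the summary above.
patients = 151             # patients included in the analysis
errors = 140               # unintended discrepancies (errors)
patients_with_error = 81   # patients with at least one error

# Overall error rate: errors divided by all analyzed patients.
errors_per_patient = errors / patients

# Share of analyzed patients who had at least one error.
share_with_error = patients_with_error / patients

print(f"{errors_per_patient:.2f} errors per patient")                   # 0.93
print(f"{share_with_error:.0%} of patients had at least one error")    # 54%
```

Note that the error rate is averaged over all 151 patients, including the 70 who had no unintended discrepancy.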

Real-time clinical correlation with the responsible physicians allowed distinction of intended from unintended discrepancies. This presumably improved the accuracy of the error rate measurement. This study confirmed the relatively high rate previously reported. Further study can focus on possible interventions to minimize these errors.

3. Liperoti R, Gambassi G, Lapane KL, et al. Conventional and atypical antipsychotics and the risk of hospitalization for ventricular arrhythmias or cardiac arrest. Arch Intern Med. 2005;165:696-701.

As the number of hospitalized elderly and demented patients increases, use of both typical and atypical antipsychotics has become prevalent. QT prolongation, ventricular arrhythmia, and cardiac arrest are more commonly associated with the older conventional antipsychotics than with newer atypical agents. This case-control study was conducted to estimate the effect of both conventional and atypical antipsychotic use on the risk of hospital admission for ventricular arrhythmia or cardiac arrest.

The patient population consisted of elderly nursing home residents in 6 US states. The investigators used the Systematic Assessment of Geriatric Drug Use via Epidemiology database, which contains data from the Minimum Data Set (MDS), a standardized data set required of all certified nursing homes in the United States. Case patients were selected by ICD-9 codes for cardiac arrest or ventricular arrhythmia. Control patients were selected via ICD-9 codes of 6 other common inpatient diagnoses. Antipsychotic exposure was determined from the most recent nursing home assessment prior to admission. Exposed patients were those who received atypical antipsychotics such as risperidone, olanzapine, quetiapine, and clozapine, and those who used conventional agents such as haloperidol and others. After control for potential confounders, users of conventional antipsychotics showed an 86% increase in the risk of hospitalization for ventricular arrhythmias or cardiac arrest (OR: 1.86) compared with nonusers. No increased risk was reported for users of atypical antipsychotics (OR: 0.87). When compared with atypical antipsychotic use, conventional antipsychotic use carried an OR of 2.13 for these cardiac outcomes. Compared with nonusers without cardiac disease, users of conventional antipsychotics with and without cardiac disease were 3.27 times and 2.05 times more likely, respectively, to be hospitalized for ventricular arrhythmias or cardiac arrest.
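The "86% increase" quoted in this summary is simply the odds ratio re-expressed as a percent change relative to the reference group. A small Python sketch of that conversion follows; the function name is hypothetical. Strictly speaking, an OR describes odds rather than risk, and the two are close only when the outcome is uncommon.

```python
def percent_change_in_odds(odds_ratio: float) -> float:
    """Express an odds ratio as a percent change in odds versus the reference group."""
    return round((odds_ratio - 1.0) * 100.0, 1)

# Odds ratios as reported in the summary above (reference group: nonusers).
print(percent_change_in_odds(1.86))  # conventional antipsychotics
print(percent_change_in_odds(0.87))  # atypical antipsychotics
```

An OR above 1 yields a positive percent change and an OR below 1 a negative one. The conversion says nothing about statistical significance, which depends on the confidence interval reported by the investigators.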

These results suggest that atypical antipsychotics may carry less cardiac risk than conventional agents. In an inpatient population with advancing age and increasing prevalence of dementia and cardiac disease, use of atypical antipsychotic agents may be safer than older, typical agents.

4. Mayer SA, Brun NC, Begtrup K, et al. Recombinant activated factor VII for acute intracerebral hemorrhage. N Engl J Med. 2005;352:777-85.

This placebo-controlled, double-blind, multicenter, industry-sponsored trial of early treatment of hemorrhagic stroke with recombinant activated factor VII (rFVIIa) at 3 escalating doses evaluated hematoma growth, mortality, and functional outcomes up to 90 days. The authors note the substantial mortality and high morbidity of this condition, which currently lacks definitive treatment. Patients who presented within 3 hours of symptom onset with intracerebral hemorrhage on CT and who met study criteria were randomized to receive either placebo or a single intravenous dose of 40, 80, or 160 mcg/kg of rFVIIa within 1 hour of baseline CT and no more than 4 hours after symptom onset. Follow-up CTs were obtained at 24 and 72 hours, and functional assessments were performed serially at frequent intervals throughout the study period. Three hundred ninety-nine patients were analyzed and were found to be similar in their baseline characteristics. Lesion volume was significantly less with treatment, in a dose-dependent fashion. Mortality at 3 months was significantly lower with treatment (18% vs. 29% with placebo), and all 4 of the global functional outcome scales utilized favored treatment, 3 of them (modified Rankin Scale for all doses, NIH Stroke Scale for all doses, and the Barthel Index at the 80 and 160 mcg/kg doses) to a statistically significant degree. However, the authors noted an increase in serious thromboembolic events in the treatment groups, with a statistically significant increase in the frequency of arterial thromboembolic events. These included myocardial ischemic events and cerebral infarction, and most occurred within 3 days of rFVIIa treatment. Of note, the majority of patients who suffered these events recovered from their complications, and the overall rates of fatal or disabling thromboembolic occurrences were similar between the treatment and placebo groups. This study offers new and exciting insights into potential therapy for this serious form of stroke, although safety concerns merit further study.

5. Siguret V, Gouin I, Debray M, et al. Initiation of warfarin therapy in elderly medical inpatients: a safe and accurate regimen. Am J Med. 2005;118:137-42.

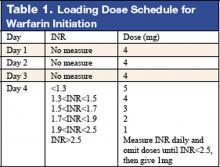

Warfarin therapy is widely used in geriatric populations. Over-anticoagulation sometimes occurs when warfarin therapy is initiated with standard loading and maintenance doses in the hospital setting. This is mainly due to decreased hepatic clearance and polypharmacy in the geriatric population. A recent study in France demonstrated a useful and simple low-dose regimen for starting warfarin therapy (target INR: 2.0–3.0) in the elderly without over-anticoagulation. The patients enrolled in this study were typical geriatric patients with multiple comorbid conditions. These patients also received concomitant medications known to potentiate the effect of warfarin. One hundred six consecutive inpatients (age ≥70, mean age of 85 years) were given a 4-mg induction dose of warfarin for 3 days, and INR levels were measured on the 4th day. From this point, the daily warfarin dose was adjusted according to an algorithm (see Table 1), and INR values were obtained every 2–3 days until actual maintenance doses were determined. The maintenance dose was defined as the amount of warfarin required to yield an INR in the 2.0–3.0 range on 2 consecutive samples obtained 48–72 hours apart in the absence of any dosage change for at least 4 days. Based on this algorithm, the predicted daily warfarin dose (3.1 ± 1.6 mg/day) correlated closely with the actual maintenance dose (3.2 ± 1.7 mg/day). The average time needed to achieve a therapeutic INR was 6.7 ± 3.3 days. None of the patients had an INR >4.0 during the induction period. This regimen also required fewer INR measurements.
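The maintenance-dose definition in this summary combines three conditions, which can be easier to follow when written out explicitly. The Python sketch below encodes only that definition; it does not reproduce the dose-adjustment algorithm of Table 1. The class and function names, and the choice to count the stable-dose interval in days up to the second sample, are assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class InrSample:
    hour: float  # hours since warfarin was started (hypothetical time axis)
    inr: float   # measured INR

def meets_maintenance_definition(prev: InrSample, curr: InrSample,
                                 days_since_dose_change: float) -> bool:
    """Check the three conditions stated in the summary's definition."""
    # 1. Both consecutive samples fall in the 2.0-3.0 target range.
    in_range = all(2.0 <= s.inr <= 3.0 for s in (prev, curr))
    # 2. The samples were obtained 48-72 hours apart.
    spaced_ok = 48 <= (curr.hour - prev.hour) <= 72
    # 3. No dosage change for at least 4 days (assumed: as of the second sample).
    stable = days_since_dose_change >= 4
    return in_range and spaced_ok and stable

# Hypothetical values, not patient data from the study.
first = InrSample(hour=144, inr=2.4)
second = InrSample(hour=192, inr=2.7)
print(meets_maintenance_definition(first, second, days_since_dose_change=4))  # True
```

If any one condition fails, for example a second INR of 3.2 or samples drawn only 24 hours apart, the function returns False.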

Intracranial hemorrhage and gastrointestinal bleeding are serious complications of over-anticoagulation. The majority of gastrointestinal bleeding episodes respond to withholding warfarin and reversing anticoagulation. However, intracranial hemorrhage frequently leads to devastating outcomes. A recent report suggested that an age over 85 and an INR of 3.5 or greater were associated with increased risk of intracranial hemorrhage. The warfarin algorithm proposed in this study provides a simple, safe, and effective tool to predict warfarin dosing in elderly hospitalized patients without over-anticoagulation. Although this regimen still needs to be validated in a larger patient population, it can be incorporated into computer-based dosing entry programs in the hospital setting to guide physicians in initiating warfarin therapy.

6. Wisnivesky JP, Henschke C, Balentine J, Willner C, Deloire AM, McGinn TG. Prospective validation of a prediction model for isolating inpatients with suspected pulmonary tuberculosis. Arch Intern Med. 2005;165:453-7.

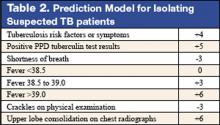

Whether to isolate a patient for suspected pulmonary tuberculosis (TB) is often a balancing act between clinical risk assessment and optimal hospital resource utilization. Practitioners need a relatively simple but sophisticated tool that they can use at the bedside to assess the likelihood of TB more precisely, for more efficient and effective triage.

These authors previously developed such a tool with a sensitivity of 98% and specificity of 46% (see Table 2 for details). This study was designed to validate this decision rule in a new set of patients. Patients were enrolled in 2 tertiary-care hospitals in the New York City area over a 21-month period. They were all admitted and isolated because of clinical suspicion for pulmonary TB, without use of the decision rule under study. Study team members collected demographic data, clinical risk factors, presenting symptoms and signs, and laboratory and radiographic findings. Chest x-ray findings were reviewed by investigators who were blinded to the other clinical and demographic information. The gold standard of diagnosis was at least 1 sputum culture that was positive for Mycobacterium tuberculosis.

A total of 516 patients were enrolled in this study. Of the 516, 19 (3.7%) were found to have culture-proven pulmonary TB. Univariate analyses showed that history of positive PPD, higher (98% vs. 95%) oxygen saturation, upper-lobe consolidation (not upper lobe cavity), and lymphadenopathy (hilar, mediastinal, or paratracheal) were all associated with the presence of pulmonary TB. Shortness of breath was associated with the absence of TB. A total score of 1 or higher in the prediction rule had a sensitivity of 95% for pulmonary TB, and a score of less than 1 had a specificity of 35%. The investigators estimated a prevalence of 3.7%, thereby yielding a positive predictive value of 9.6% but a negative predictive value of 99.7%. They estimated that 35% of the patients who were isolated would not have been isolated had this prediction rule been applied.

Though validated scientifically, this tool still has a false-negative rate of 5%. In a less endemic area, the absolute number of missed cases would be correspondingly lower and thus more acceptable from a public health perspective. This is one step closer to a balance of optimal bed utilization and reasoned clinical assessment.

1. Chimowitz MI, Lynn MJ, Howlett-Smith H, et al. Comparison of warfarin and aspirin for symptomatic intracranial arterial stenosis. N Engl J Med. 352:1305-16.

This is the first prospective study comparing antithrombotic therapies for patients with atherosclerotic stenosis of major intracranial arteries. This multicenter, NINDS-sponsored, placebo-controlled, blinded study randomized 569 patients to aspirin (650 mg twice daily) or warfarin (initially 5 mg daily, titrated to achieve an INR of 2.0–3.0) and followed them for nearly 2 years. The study was terminated early over safely concerns about patients in the warfarin group. Baseline characteristics between the 2 groups were not significantly different. Warfarin was not more effective than aspirin in its effect on the primary endpoints of ischemic stroke, brain hemorrhage, or vascular death other than from stroke (as defined in the study protocol). However, major cardiac events (myocardial infarction or sudden death) were significantly higher in the warfarin group, and major hemorrhage (defined as any intracranial or systemic hemorrhage requiring hospitalization, transfusion, or surgical intervention) was also significantly higher in the warfarin group. The authors note the difficulty maintaining the INR in the target range (achieved only 63.1 % of the time during the maintenance period, an observation in line with other anticoagulation studies). In an accompanying editorial, Dr. Koroshetz of the stroke service at the Massachusetts General Hospital also observed that difficulties in achieving the therapeutic goal with warfarin could have impacted the results. The authors also note that the dose of aspirin employed in this study is somewhat higher than in previous trials. Nevertheless, until other data emerge, this investigation’s results favor aspirin in preference to warfarin for this high-risk condition.

2. Cornish PL, Knowles, SR, Marchesano R, et al. Unintended medication discrepancies at the time of hospital admission Arch Intern Med. 2005;165:424-9

Of the various types of medical errors, medication errors are believed to be the most common. At the time of hospital admission, medication discrepancies may lead to unintended drug interactions, toxicity, or interruption of appropriate drug therapies. These investigators performed a prospective study to identify unintended medication discrepancies between the patient’s home medications and those ordered at the time of the patient’s admission and to evaluate the potential clinical significance of these discrepancies.

This study was conducted at a 1,000-bed tertiary care hospital in Canada on the general medicine teaching service. A member of the study team reviewed each medical record to ascertain the physician-recorded medication history, the nurse-recorded medication history, the admission medication orders, and demographic information. A comprehensive list of all of the patient’s prescription or nonprescription drugs was compiled by interviewing patients, families, and pharmacists, and by inspecting the bottles. A discrepancy was defined as any difference between this comprehensive list and the admission medication orders. These were categorized into omission or addition of a medication, substitution of an agent within the same drug class, and change in dose, route, and frequency of administration of an agent. The medical team caring for the patient was then asked whether or not these discrepancies were intended. The team then reconciled any unintended discrepancies. These unintended discrepancies were further classified according to their potential for harm by 3 medical hospitalists into Class 1, 2, 3, in increasing order of potential harm. One hundred fifty-one patients were included in the analysis. A total of 140 errors occurred in 81 patients (54%). The overall error rate was 0.93 per patient. Of the errors, 46% consisted of omission of a regularly prescribed medication, 25% involved discrepant doses, 17.1% involved discrepant frequency, and 11.4% were actually incorrect drugs. Breakdown of error severity resulted in designation of 61% as Class 1, 33% as Class 2, and 5.7% as Class 3. The interrater agreement was a kappa of 0.26. These discrepancies were not found to be associated with night or weekend admissions, high patient volume, or high numbers of medications.

Real-time clinical correlation with the responsible physicians allowed distinction of intended from unintended discrepancies. This presumably improved the accuracy of the error rate measurement. This study confirmed the relatively high rate previously reported. Further study can focus on possible intervention to minimize these errors.

3. Liperoti R, Gambassi G, Lapane KL, et al. Conventional and atypical antipsychotics and the risk of hospitalization for ventricular arrhythmias or cardiac arrest Arch Intern Med. 2005;165:696-701.

As the number of hospitalized elderly and demented patients increases, use of both typical and atypical antipsychotics has become prevalent. QT prolongation, ventricular arrhythmia, and cardiac arrest are more commonly associated with the older conventional antipsychotics than with newer atypical agents. This case-control study was conducted to estimate the effect of both conventional and atypical antipsychotics use on the risk of hospital admission for ventricular arrhythmia or cardiac arrest.

The patient population involved consisted of elderly nursing home residents in 6 US states. The investigators utilized Systematic Assessment of Geriatric Drug Use via Epidemiology database that contains data from minimum data set (MDS), a standardized data set required of all certified nursing homes in the United States. Case patients were selected by ICD-9 codes for cardiac arrest or ventricular arrhythmia. Control patients were selected via ICD-9 codes of 6 other common inpatient diagnoses. Antipsychotic exposure was determined by use of the most recent assessment in the nursing homes prior to admission. Exposed patients were those who received atypical antipsychotics such as risperidone, olanzapine, quetiapine, and clozapine, and those who used conventional agents such as haloperidol and others. After control for potential confounders, users of conventional antipsychotics showed an 86% increase in the risk of hospitalization for ventricular arrhythmias or cardiac arrest (OR: 1.86) compared with nonusers. No increased risk was reported for users of atypical antipsychotics. (OR: 0.87). When compared with atypical antipsychotic use, conventional antipsychotic use carries an OR of 2.13 for these cardiac outcomes. In patients using conventional antipsychotics, the presence and absence of cardiac diseases were 3.27 times and 2.05 times, respectively, more likely to be associated with hospitalization for ventricular arrhythmias and cardiac arrest, compared with nonusers without cardiac diseases.

These results suggest that atypical antipsychotics may carry less cardiac risk than conventional agents. In an inpatient population with advancing age and increasing prevalence of dementia and cardiac disease, use of atypical antipsychotic agents may be safer than older, typical agents.

4. Mayer SA, Brun NC, Begtrup K, et al. Recombinant activated factor VII for acute intracerebral hemorrhage. N Engl J Med. 352:777-85.

This placebo-controlled, double-blind, multicenter, industry-sponsored trial of early treatment of hemorrhagic stroke with rFVIIa at 3 escalating doses, evaluated stroke hematoma growth, mortality, and functional outcomes up to 90 days. The authors note the substantial mortality and high morbidity of this condition, which currently lacks definitive treatment. Patients within 3 hours of symptoms with intracerebral hemorrhage on CT and who met study criteria were randomized to receive either placebo or a single intravenous dose of 40, 80, or 160 mcg/kg of rFVIIa within 1 hour of baseline CT and no more than 4 hours after symptoms. Follow-up CTs at 24 and 72 hours were obtained and functional assessments were performed serially at frequent intervals throughout the study period. Three hundred ninety-nine patients were analyzed and were found similar in their baseline characteristics. Lesion volume was significantly less with treatment, in a dose-dependent fashion. Mortality at 3 months was significantly less (29% vs. 18%) with treatment, and all 4 of the global functional outcome scales utilized were favorable, 3 of them (modified Rankin Scale for all doses, NIH Stroke Scale for all doses, and the Barthel Index at the 80 and 160 mcg/kg doses) in a statistically significant fashion. However, the authors noted an increase in serious thromboembolic events in the treatment groups, with a statistically significant increased frequency of arterial thromboembolic events. These included myocardial ischemic events and cerebral infarction, and most occurred within 3 days of rFVIIa treatment. Of note, the majority of patients who suffered these events made recovery from their complications, and the overall rates of fatal or disabling thromboembolic occurrences between the treatment and placebo groups were similar. This study offers new and exciting insights into potential therapy for this serious form of stroke, although safety concerns merit further study.

5. Siguret V, Gouin I, Debray M, et al. Initiation of warfarin therapy in elderly medical inpatients: a safe and accurate regimen. Am J Med. 2005; 118:137-142.

Warfarin therapy is widely used in geriatric populations. Sometimes over-anticoagulation occurs when warfarin therapy is initiated based on standard loading and maintenance dose in the hospital setting. This is mainly due to decreased hepatic clearance and polypharmacy in the geriatric population. A recent study in France demonstrated a useful and simple low-dose regimen for starting warfarin therapy (target INR: 2.0–3.0) in the elderly without over-anticoagulation. The patients enrolled in this study were typical geriatric patients with multiple comorbid conditions. These patients also received concomitant medications known to potentiate the effect of warfarin. One hundred six consecutive inpatients (age %70, mean age of 85 years) were given a 4-mg induction dose of warfarin for 3 days, and INR levels were measured on the 4th day. From this point, the daily warfarin dose was adjusted according to an algorithm (see Table 1), and INR values were obtained every 2–3 days until actual maintenance doses were determined. The maintenance dose was defined as the amount of warfarin required to yield an INR in 2.0 to 3.0 range on 2 consecutive samples obtained 48–72 hours apart in the absence of any dosage change for at least 4 days. Based on this algorithm, the predicted daily warfarin dose (3.1 ± 1.6 mg/day) correlated closely with the actual maintenance dose (3.2 ± 1.7 mg/day). The average time needed to achieve a therapeutic INR was 6.7 ± 3.3 days. None of the patients had an INR >4.0 during the induction period. This regimen also required fewer INR measurements.

Intracranial hemorrhage and gastrointestinal bleeding are serious complications of over-anticoagulation. The majority of gastrointestinal bleeding episodes respond to withholding warfarin and reversing anticoagulation. However, intracranial hemorrhage frequently leads to devastating outcomes. A recent report suggested that an age over 85 and INR of 3.5 or greater were associated with increased risk of intracranial hemorrhage. The warfarin algorithm proposed in this study provides a simple, safe, and effective tool to predict warfarin dosing in elderly hospitalized patients without over-anticoagulation. Although this regimen still needs to be validated in a large patient population in the future, it can be incorporated into computer-based dosing entry programs in the hospital setting to guide physicians in initiating warfarin therapy.

6. Wisnivesky JP, Henschke C, Balentine J, Willner, C, Deloire AM, McGinn TG. Prospective validation of a prediction model for isolating inpatients with suspected pulmonary tuberculosis. Arch Intern Med. 2005;165:453-7.

Whether to isolate a patient for suspected pulmonary tuberculosis (TB) is often a balancing act between clinical risk assessment and optimal hospital resource utilitization. Practitioners need a relatively simple but sophisticated tool that they can use at the bedside to more precisely assess the likelihood of TB for more efficient and effective triage.

These authors previously developed such a tool with a sensitivity of 98% and specificity of 46%. (See Table 2 for details) This study was designed to validate this decision rule in a new set of patients. Patients were enrolled in 2 tertiary-care hospitals in New York City area over a 21-month period. They were all admitted and isolated because of clinical suspicion for pulmonary TB, not utilizing the decision rule under study. Study team members collected demographic, clinical risk factors, presenting symptoms, and signs, laboratory, and radiographic findings. Chest x-ray findings were reviewed by investigators who were blinded to the other clinical and demographical information. The gold standard of diagnosis was at least 1 sputum culture that was positive for Mycobacterium tuberculosis.

A total of 516 patients were enrolled in this study. Of the 516, 19 (3.7%) were found to have culture-proven pulmonary TB. Univariate analyses showed that history of positive PPD, higher (98% vs. 95%) oxygen saturation, upper-lobe consolidation (not upper lobe cavity), and lymphadenopathy (hilar, mediastinal, or paratracheal) were all associated with the presence of pulmonary TB. Shortness of breath was associated with the absence of TB. A total score of 1 or higher in the prediction rule had a sensitivity of 95% for pulmonary TB, and score of less than 1 had a specificity of 35%. The investigators estimated a prevalence of 3.7%, thereby yielding a positive predictive value of 9.6% but a negative predictive value of 99.7%. They estimated that 35% of patients isolated would not have been with this prediction rule.

Though validated scientifically, this tool still has a false-negative rate of 5%. In a less endemic area, the false-negative rate would be correspondingly lower and thus more acceptable from a public health perspective. This is one step closer to a balance of optimal bed utilization and reasoned clinical assessment.

1. Chimowitz MI, Lynn MJ, Howlett-Smith H, et al. Comparison of warfarin and aspirin for symptomatic intracranial arterial stenosis. N Engl J Med. 352:1305-16.

This is the first prospective study comparing antithrombotic therapies for patients with atherosclerotic stenosis of major intracranial arteries. This multicenter, NINDS-sponsored, placebo-controlled, blinded study randomized 569 patients to aspirin (650 mg twice daily) or warfarin (initially 5 mg daily, titrated to achieve an INR of 2.0–3.0) and followed them for nearly 2 years. The study was terminated early over safely concerns about patients in the warfarin group. Baseline characteristics between the 2 groups were not significantly different. Warfarin was not more effective than aspirin in its effect on the primary endpoints of ischemic stroke, brain hemorrhage, or vascular death other than from stroke (as defined in the study protocol). However, major cardiac events (myocardial infarction or sudden death) were significantly higher in the warfarin group, and major hemorrhage (defined as any intracranial or systemic hemorrhage requiring hospitalization, transfusion, or surgical intervention) was also significantly higher in the warfarin group. The authors note the difficulty maintaining the INR in the target range (achieved only 63.1 % of the time during the maintenance period, an observation in line with other anticoagulation studies). In an accompanying editorial, Dr. Koroshetz of the stroke service at the Massachusetts General Hospital also observed that difficulties in achieving the therapeutic goal with warfarin could have impacted the results. The authors also note that the dose of aspirin employed in this study is somewhat higher than in previous trials. Nevertheless, until other data emerge, this investigation’s results favor aspirin in preference to warfarin for this high-risk condition.

2. Cornish PL, Knowles, SR, Marchesano R, et al. Unintended medication discrepancies at the time of hospital admission Arch Intern Med. 2005;165:424-9

Of the various types of medical errors, medication errors are believed to be the most common. At the time of hospital admission, medication discrepancies may lead to unintended drug interactions, toxicity, or interruption of appropriate drug therapies. These investigators performed a prospective study to identify unintended medication discrepancies between the patient’s home medications and those ordered at the time of the patient’s admission and to evaluate the potential clinical significance of these discrepancies.

This study was conducted at a 1,000-bed tertiary care hospital in Canada on the general medicine teaching service. A member of the study team reviewed each medical record to ascertain the physician-recorded medication history, the nurse-recorded medication history, the admission medication orders, and demographic information. A comprehensive list of all of the patient’s prescription or nonprescription drugs was compiled by interviewing patients, families, and pharmacists, and by inspecting the bottles. A discrepancy was defined as any difference between this comprehensive list and the admission medication orders. These were categorized into omission or addition of a medication, substitution of an agent within the same drug class, and change in dose, route, and frequency of administration of an agent. The medical team caring for the patient was then asked whether or not these discrepancies were intended. The team then reconciled any unintended discrepancies. These unintended discrepancies were further classified according to their potential for harm by 3 medical hospitalists into Class 1, 2, 3, in increasing order of potential harm. One hundred fifty-one patients were included in the analysis. A total of 140 errors occurred in 81 patients (54%). The overall error rate was 0.93 per patient. Of the errors, 46% consisted of omission of a regularly prescribed medication, 25% involved discrepant doses, 17.1% involved discrepant frequency, and 11.4% were actually incorrect drugs. Breakdown of error severity resulted in designation of 61% as Class 1, 33% as Class 2, and 5.7% as Class 3. The interrater agreement was a kappa of 0.26. These discrepancies were not found to be associated with night or weekend admissions, high patient volume, or high numbers of medications.

Real-time clinical correlation with the responsible physicians allowed intended discrepancies to be distinguished from unintended ones, which presumably improved the accuracy of the error rate measurement. This study confirmed the relatively high rate previously reported. Further study can focus on possible interventions to minimize these errors.

3. Liperoti R, Gambassi G, Lapane KL, et al. Conventional and atypical antipsychotics and the risk of hospitalization for ventricular arrhythmias or cardiac arrest. Arch Intern Med. 2005;165:696-701.

As the number of hospitalized elderly and demented patients increases, use of both typical and atypical antipsychotics has become prevalent. QT prolongation, ventricular arrhythmia, and cardiac arrest are more commonly associated with the older conventional antipsychotics than with newer atypical agents. This case-control study was conducted to estimate the effect of both conventional and atypical antipsychotic use on the risk of hospital admission for ventricular arrhythmia or cardiac arrest.

The patient population consisted of elderly nursing home residents in 6 US states. The investigators used the Systematic Assessment of Geriatric Drug Use via Epidemiology database, which contains data from the Minimum Data Set (MDS), a standardized data set required of all certified nursing homes in the United States. Case patients were selected by ICD-9 codes for cardiac arrest or ventricular arrhythmia. Control patients were selected by ICD-9 codes for 6 other common inpatient diagnoses. Antipsychotic exposure was determined from the most recent nursing home assessment prior to admission. Exposed patients were those who received atypical antipsychotics such as risperidone, olanzapine, quetiapine, and clozapine, and those who used conventional agents such as haloperidol and others. After control for potential confounders, users of conventional antipsychotics showed an 86% increase in the risk of hospitalization for ventricular arrhythmias or cardiac arrest (OR: 1.86) compared with nonusers. No increased risk was reported for users of atypical antipsychotics (OR: 0.87). Compared with atypical antipsychotic use, conventional antipsychotic use carried an OR of 2.13 for these cardiac outcomes. Among users of conventional antipsychotics, those with and without cardiac disease were 3.27 times and 2.05 times more likely, respectively, to be hospitalized for ventricular arrhythmias or cardiac arrest than nonusers without cardiac disease.

These results suggest that atypical antipsychotics may carry less cardiac risk than conventional agents. In an inpatient population with advancing age and increasing prevalence of dementia and cardiac disease, use of atypical antipsychotic agents may be safer than older, typical agents.

4. Mayer SA, Brun NC, Begtrup K, et al. Recombinant activated factor VII for acute intracerebral hemorrhage. N Engl J Med. 2005;352:777-85.

This placebo-controlled, double-blind, multicenter, industry-sponsored trial of early treatment of hemorrhagic stroke with recombinant activated factor VII (rFVIIa) at 3 escalating doses evaluated hematoma growth, mortality, and functional outcomes up to 90 days. The authors note the substantial mortality and high morbidity of this condition, which currently lacks definitive treatment. Patients who presented within 3 hours of symptom onset with intracerebral hemorrhage on CT and who met study criteria were randomized to receive either placebo or a single intravenous dose of 40, 80, or 160 mcg/kg of rFVIIa within 1 hour of the baseline CT and no more than 4 hours after symptom onset. Follow-up CTs were obtained at 24 and 72 hours, and functional assessments were performed serially throughout the study period. Three hundred ninety-nine patients were analyzed and were found to be similar in their baseline characteristics. Lesion volume was significantly less with treatment, in a dose-dependent fashion. Mortality at 3 months was significantly lower with treatment (18% vs. 29% with placebo), and all 4 of the global functional outcome scales used favored treatment, 3 of them (the modified Rankin Scale for all doses, the NIH Stroke Scale for all doses, and the Barthel Index at the 80 and 160 mcg/kg doses) in a statistically significant fashion. However, the authors noted an increase in serious thromboembolic events in the treatment groups, with a statistically significant increase in the frequency of arterial thromboembolic events. These included myocardial ischemic events and cerebral infarction, and most occurred within 3 days of rFVIIa treatment. Of note, the majority of patients who suffered these events recovered from their complications, and the overall rates of fatal or disabling thromboembolic occurrences were similar in the treatment and placebo groups. This study offers new and exciting insights into potential therapy for this serious form of stroke, although safety concerns merit further study.

5. Siguret V, Gouin I, Debray M, et al. Initiation of warfarin therapy in elderly medical inpatients: a safe and accurate regimen. Am J Med. 2005;118:137-42.

Warfarin therapy is widely used in geriatric populations. Over-anticoagulation sometimes occurs when warfarin therapy is initiated in the hospital setting using standard loading and maintenance doses. This is mainly due to decreased hepatic clearance and polypharmacy in the geriatric population. A recent study in France demonstrated a useful and simple low-dose regimen for starting warfarin therapy (target INR: 2.0–3.0) in the elderly without over-anticoagulation. The patients enrolled in this study were typical geriatric patients with multiple comorbid conditions. These patients also received concomitant medications known to potentiate the effect of warfarin. One hundred six consecutive inpatients (age ≥70, mean age of 85 years) were given a 4-mg induction dose of warfarin for 3 days, and INR levels were measured on the 4th day. From this point, the daily warfarin dose was adjusted according to an algorithm (see Table 1), and INR values were obtained every 2–3 days until actual maintenance doses were determined. The maintenance dose was defined as the amount of warfarin required to yield an INR in the 2.0 to 3.0 range on 2 consecutive samples obtained 48–72 hours apart in the absence of any dosage change for at least 4 days. The daily warfarin dose predicted by this algorithm (3.1 ± 1.6 mg/day) correlated closely with the actual maintenance dose (3.2 ± 1.7 mg/day). The average time needed to achieve a therapeutic INR was 6.7 ± 3.3 days. None of the patients had an INR >4.0 during the induction period. This regimen also required fewer INR measurements.

Intracranial hemorrhage and gastrointestinal bleeding are serious complications of over-anticoagulation. The majority of gastrointestinal bleeding episodes respond to withholding warfarin and reversing anticoagulation. However, intracranial hemorrhage frequently leads to devastating outcomes. A recent report suggested that an age over 85 and INR of 3.5 or greater were associated with increased risk of intracranial hemorrhage. The warfarin algorithm proposed in this study provides a simple, safe, and effective tool to predict warfarin dosing in elderly hospitalized patients without over-anticoagulation. Although this regimen still needs to be validated in a large patient population in the future, it can be incorporated into computer-based dosing entry programs in the hospital setting to guide physicians in initiating warfarin therapy.

6. Wisnivesky JP, Henschke C, Balentine J, Willner C, Deloire AM, McGinn TG. Prospective validation of a prediction model for isolating inpatients with suspected pulmonary tuberculosis. Arch Intern Med. 2005;165:453-7.

Whether to isolate a patient for suspected pulmonary tuberculosis (TB) is often a balancing act between clinical risk assessment and optimal hospital resource utilization. Practitioners need a simple yet reliable bedside tool to assess the likelihood of TB more precisely and so triage patients more efficiently and effectively.

These authors previously developed such a tool, with a sensitivity of 98% and a specificity of 46% (see Table 2 for details). This study was designed to validate this decision rule in a new set of patients. Patients were enrolled at 2 tertiary-care hospitals in the New York City area over a 21-month period. All were admitted and isolated because of clinical suspicion for pulmonary TB, without use of the decision rule under study. Study team members collected data on demographics, clinical risk factors, presenting symptoms and signs, and laboratory and radiographic findings. Chest x-ray findings were reviewed by investigators who were blinded to the other clinical and demographic information. The gold standard for diagnosis was at least 1 sputum culture that was positive for Mycobacterium tuberculosis.

A total of 516 patients were enrolled in this study. Of the 516, 19 (3.7%) were found to have culture-proven pulmonary TB. Univariate analyses showed that a history of positive PPD, higher oxygen saturation (98% vs. 95%), upper-lobe consolidation (not upper-lobe cavity), and lymphadenopathy (hilar, mediastinal, or paratracheal) were all associated with the presence of pulmonary TB. Shortness of breath was associated with the absence of TB. A total score of 1 or higher in the prediction rule had a sensitivity of 95% for pulmonary TB, and a score of less than 1 had a specificity of 35%. Using an estimated prevalence of 3.7%, the investigators calculated a positive predictive value of 9.6% and a negative predictive value of 99.7%. They estimated that 35% of the patients who were isolated would not have been isolated had this prediction rule been applied.

Though validated scientifically, this tool still has a false-negative rate of 5%. In a less endemic area, the number of missed cases would be correspondingly lower and thus more acceptable from a public health perspective. This is one step closer to a balance of optimal bed utilization and reasoned clinical assessment.