To the Editor:

Prior studies have assessed the quality of text-based dermatology information on the Internet using traditional search engine queries.1 However, little is known about the sources, accuracy, and quality of online dermatology images retrieved through direct image searches. Previous work has shown that direct search engine image queries were largely accurate for 3 pediatric dermatology diagnosis searches: atopic dermatitis, lichen striatus, and subcutaneous fat necrosis.2 We assessed images obtained for common dermatologic conditions from a Google image search (GIS) compared with a traditional text-based Google web search (GWS).

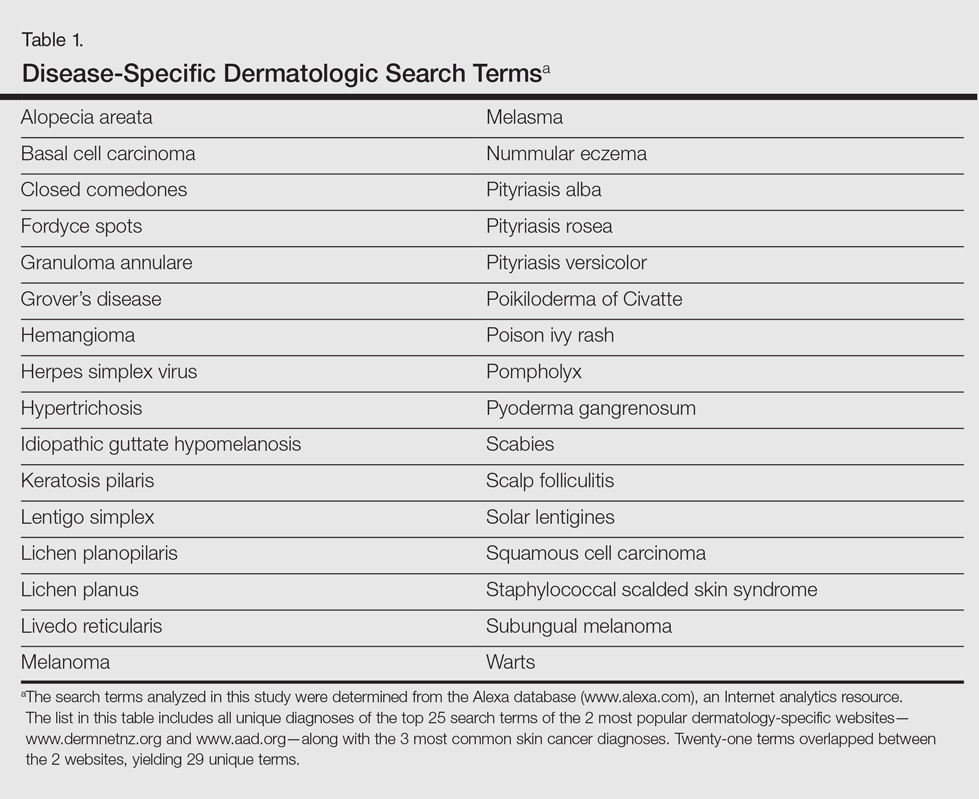

Image results for 32 unique dermatologic search terms were analyzed (Table 1). These search terms were selected using the results of a prior study that identified the most common dermatologic diagnoses that led users to the 2 most popular dermatology-specific websites worldwide: the American Academy of Dermatology (www.aad.org) and DermNet New Zealand (www.dermnetnz.org).3 The Alexa directory (www.alexa.com), a large publicly available Internet analytics resource, was used to determine the most common dermatology search terms that led a user to either www.dermnetnz.org or www.aad.org. In addition, searches for the 3 most common types of skin cancer (melanoma, squamous cell carcinoma, and basal cell carcinoma) were included. Each term was entered into a GIS and a GWS. The first 10 results, which represent 92% of the websites ultimately visited by users,4 were analyzed. The source, diagnostic accuracy, and Fitzpatrick skin type of the images were determined. Website sources were organized into 11 categories. All data collection occurred within a 1-week period in August 2015.

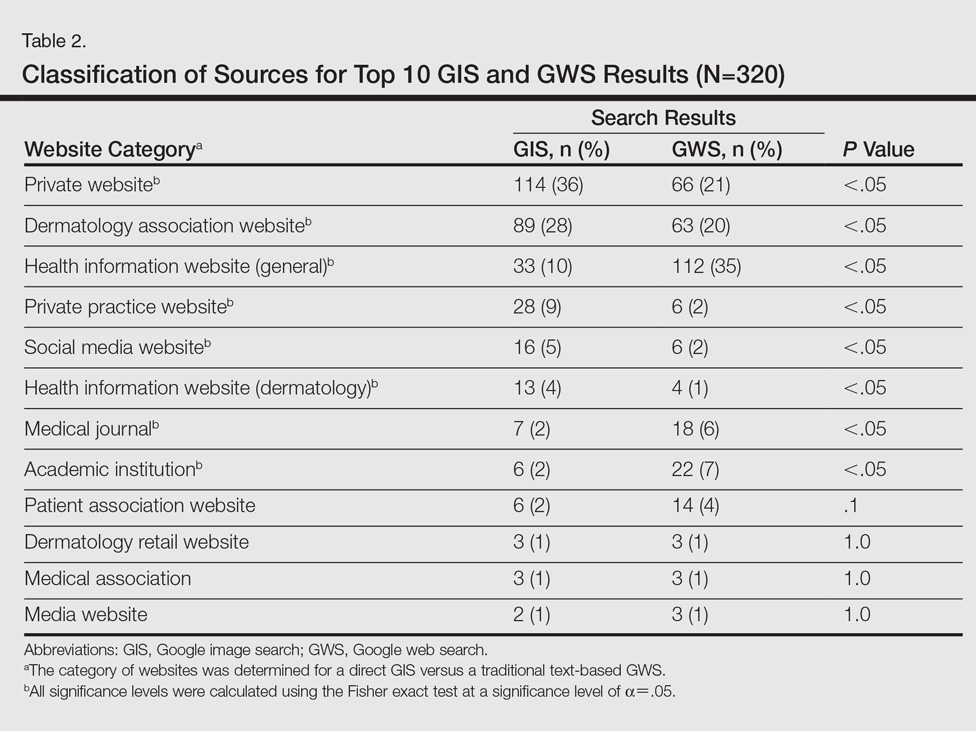

A total of 320 images were analyzed. In the GIS, private websites (36%), dermatology association websites (28%), and general health information websites (10%) were the 3 most common sources. In the GWS, health information websites (35%), private websites (21%), and dermatology association websites (20%) were the 3 most common sources (Table 2). The majority of images were of Fitzpatrick skin types I and II (89%), and nearly all images were diagnostically accurate (98%). There was no statistically significant difference in diagnostic accuracy between physician-associated websites (100% accuracy) and nonphysician-associated websites (98% accuracy; P=.25).
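For readers who wish to reproduce a comparison of accuracy between physician-associated and nonphysician-associated websites, the following is a minimal sketch rather than the authors' analysis. The letter does not state which statistical test was used, so the choice of a two-sided Fisher exact test is an assumption, and the counts in the example are hypothetical because only percentages are reported. The function name `fisher_exact_two_sided` is likewise illustrative.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact P value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total_tables = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / total_tables

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Add up every table that is no more likely than the observed one.
    return sum(
        p for p in map(prob, range(lowest, highest + 1))
        if p <= p_observed * (1 + 1e-9)
    )


# HYPOTHETICAL counts for illustration only; the letter reports percentages,
# not the underlying counts per website group.
physician_accurate, physician_inaccurate = 100, 0
nonphysician_accurate, nonphysician_inaccurate = 215, 5

p_value = fisher_exact_two_sided(
    physician_accurate, physician_inaccurate,
    nonphysician_accurate, nonphysician_inaccurate,
)
print(f"Two-sided P = {p_value:.3f}")
```

An exact test is a reasonable choice here because inaccurate images are rare, which leaves very small counts in some cells of the table.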

Our results showed high diagnostic accuracy among the top GIS results for common dermatology search terms. Diagnostic accuracy did not vary between websites that were physician associated versus those that were not. Our results are comparable to the reported accuracy of online dermatologic health information.1 In GIS results, the majority of images were provided by private websites, whereas the top websites in GWS results were health information websites.

Only 1% of images were of Fitzpatrick skin types VI and VII. Presentation of skin diseases is remarkably different based on the patient’s skin type.5 The shortage of readily accessible images of skin of color is in line with the lack of familiarity physicians and trainees have with dermatologic conditions in ethnic skin.6

Based on the results of this analysis, providers and patients searching for dermatologic conditions via a direct GIS should be cognizant of several considerations. Although our results showed that GIS was accurate, the searcher should note that image-based searches are not accompanied by relevant text that can help confirm relevance and accuracy. Image searches depend on textual tags added by the source website. Websites that represent dermatologic associations and academic centers can provide an additional layer of confidence for users. Patients and clinicians also should be aware that a patient’s Fitzpatrick skin type is a critical consideration when assessing the relevance of a GIS result. In conclusion, search results via GIS queries are accurate for the dermatologic diagnoses tested but may be lacking in skin of color variations, suggesting a potential unmet need based on our growing ethnic skin population.

1. Jensen JD, Dunnick CA, Arbuckle HA, et al. Dermatology information on the Internet: an appraisal by dermatologists and dermatology residents. J Am Acad Dermatol. 2010;63:1101-1103.

2. Cutrone M, Grimalt R. Dermatological image search engines on the Internet: do they work? J Eur Acad Dermatol Venereol. 2007;21:175-177.

3. Xu S, Nault A, Bhatia A. Search and engagement analysis of association websites representing dermatologists—implications and opportunities for web visibility and patient education: website rankings of dermatology associations. Pract Dermatol. In press.

4. comScore releases July 2015 U.S. desktop search engine rankings [press release]. Reston, VA: comScore, Inc; August 14, 2015. http://www.comscore.com/Insights/Market-Rankings/comScore-Releases-July-2015-U.S.-Desktop-Search-Engine-Rankings. Accessed October 18, 2016.

5. Kundu RV, Patterson S. Dermatologic conditions in skin of color: part I. special considerations for common skin disorders. Am Fam Physician. 2013;87:850-856.

6. Nijhawan RI, Jacob SE, Woolery-Lloyd H. Skin of color education in dermatology residency programs: does residency training reflect the changing demographics of the United States? J Am Acad Dermatol. 2008;59:615-618.

Practice Points

- Direct Google image searches largely deliver accurate results for common dermatological diagnoses.

- Greater effort should be made to include more publicly available images for dermatological diseases in darker skin types.