Skip that repeat DXA scan in these postmenopausal women

ILLUSTRATIVE CASE

A 70-year-old White woman with a history of type 2 diabetes and a normal

As many as 1 in 2 postmenopausal women are at risk for an osteoporosis-related fracture.2 Each year, about 2 million fragility fractures occur in the United States.2,3 The

Two prospective cohort studies determined that repeat BMD testing 4 to 8 years after baseline screening did not improve fracture risk prediction.5,6 Limitations of these studies included no analysis of high-risk subgroups, as well as failure to include many younger postmenopausal women in the studies.5,6 An additional longitudinal study that followed postmenopausal women for up to 15 years estimated that the interval for at least 10% of women to develop osteoporosis after initial screening was more than 15 years for women with normal BMD and about 5 years for those with moderate osteopenia.7

STUDY SUMMARY

The current study examined data from the

Study participants averaged 66 years of age, with a mean BMI of 29, and 23% were non-White. In addition, 97% had either normal BMD or osteopenia (T score ≥ −2.4). Participants were excluded from the study if they had been treated with bone-active medications other than vitamin D and calcium, reported a history of MOF (fracture of the hip, spine, radius, ulna, wrist, upper arm, or shoulder) at baseline or between BMD tests, missed follow-up visits after the Year 3 BMD scan, or had missing covariate data. Participants self-reported fractures on annual patient questionnaires, and hip fractures were confirmed through medical records.

During the mean follow-up period of 9 years after the second BMD test, 139 women (1.9%) had 1 or more hip fractures, and 732 women (9.9%) had 1 or more MOFs.

Area under the receiver operating characteristic curve (AU-ROC) values for baseline BMD screening and baseline plus 3-year BMD measurement were similar in their ability to discriminate between women who had a hip fracture or MOF and women who did not. AU-ROC values communicate the usefulness of a diagnostic or screening test. An AU-ROC value of 1 would be considered perfect (100% sensitive and 100% specific), whereas an AU-ROC of 0.5 suggests a test with no ability to discriminate at all. Values between 0.7 and 0.8 would be considered acceptable, and those between 0.8 and 0.9, excellent.
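One way to read an AU-ROC value is as the probability that a randomly chosen woman who went on to fracture was assigned a higher risk score than a randomly chosen woman who did not. The sketch below illustrates that interpretation only; the function name and the risk scores are hypothetical and are not data from the study. For BMD, a lower measurement implies higher risk, so the values here should be read as risk scores rather than raw BMD.

```python
def auc_from_scores(scores_with_event, scores_without_event):
    """Fraction of case/non-case pairs in which the case has the higher
    risk score; ties count as half a pair."""
    wins = 0.0
    for case in scores_with_event:
        for control in scores_without_event:
            if case > control:
                wins += 1.0
            elif case == control:
                wins += 0.5
    return wins / (len(scores_with_event) * len(scores_without_event))


# Hypothetical risk scores, invented purely for illustration.
perfect = auc_from_scores([0.9, 0.8, 0.7], [0.3, 0.2, 0.1])       # every case outranks every non-case
uninformative = auc_from_scores([0.5, 0.5], [0.5, 0.5])           # scores carry no information
partial = auc_from_scores([0.9, 0.6, 0.4], [0.7, 0.5, 0.3, 0.2])  # some overlap between groups

print(perfect, uninformative, partial)
```

In this toy data the three calls return 1.0, 0.5, and 0.75, matching the "perfect," "no discrimination," and "acceptable" ranges described above.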

The AU-ROCs in this study were 0.71 (95% CI, 0.67-0.75) for baseline total hip BMD, 0.61 (95% CI, 0.56-0.65) for change in total hip BMD between baseline and 3-year BMD scan, and 0.73 (95% CI, 0.69-0.77) for the combined baseline total hip BMD and change in total hip BMD. For femoral neck and lumbar spine BMD, AU-ROC values demonstrated comparable discrimination of hip fracture and MOF as those for total hip BMD. The AU-ROC values among age subgroups (< 65 years, 65-74 years, and ≥ 75 years) were also similar. Associations between change in bone density and fracture risk did not change when adjusted for factors such as BMI, race/ethnicity, diabetes, or baseline BMD.

WHAT’S NEW

Results can be applied to a wider range of patients

This study found that for postmenopausal women, a repeat BMD measurement obtained 3 years after the initial assessment did not improve risk discrimination for hip fracture or MOF beyond the baseline BMD value and should not be routinely performed. Additionally, evidence from this study allows this recommendation to apply to younger postmenopausal women and a variety of high-risk subgroups.

CAVEATS

Possible bias due to self-reporting of fractures

This study suggests that for women without a diagnosis of osteoporosis at initial screening, repeat testing is unlikely to affect future risk stratification. Repeat BMD testing should still be considered when the results are likely to influence clinical management.

However, an important consideration is that fractures were self-reported in this study, introducing a possible source of bias. Additionally, although this study supports forgoing repeat screening at a 3-year interval, there is still no agreed-upon determination of when (or if) to repeat BMD screening in women without osteoporosis.

A large subset of the study population was younger than 65 (44%), the age when family physicians typically recommend screening for osteoporosis. However, the age-adjusted AU-ROC values for fracture risk prediction were the same, and this should not invalidate the conclusions for the study population at large.

CHALLENGES TO IMPLEMENTATION

No challenges seen

We see no challenges in implementing this recommendation.

ACKNOWLEDGEMENT

The PURLs Surveillance System was supported in part by Grant Number UL1RR024999 from the National Center for Research Resources, a Clinical Translational Science Award to the University of Chicago. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Center for Research Resources or the National Institutes of Health.

1. Crandall CJ, Larson J, Wright NC, et al. Serial bone density measurement and incident fracture risk discrimination in postmenopausal women. JAMA Intern Med. 2020;180:1232-1240. doi: 10.1001/jamainternmed.2020.2986

2. US Preventive Services Task Force. Screening for osteoporosis: US Preventive Services Task Force recommendation statement. Ann Intern Med. 2011;154:356-364. doi: 10.7326/0003-4819-154-5-201103010-00307

3. Burge R, Dawson-Hughes B, Solomon DH, et al. Incidence and economic burden of osteoporosis-related fractures in the United States, 2005-2025. J Bone Miner Res. 2007;22:465-475. doi: 10.1359/jbmr.061113

4. US Preventive Services Task Force; Curry SJ, Krist AH, Owens DK, et al. Screening for osteoporosis to prevent fractures: US Preventive Services Task Force recommendation statement. JAMA. 2018;319:2521-2531. doi: 10.1001/jama.2018.7498

5. Hillier TA, Stone KL, Bauer DC, et al. Evaluating the value of repeat bone mineral density measurement and prediction of fractures in older women: the study of osteoporotic fractures. Arch Intern Med. 2007;167:155-160. doi: 10.1001/archinte.167.2.155

6. Berry SD, Samelson EJ, Pencina MJ, et al. Repeat bone mineral density screening and prediction of hip and major osteoporotic fracture. JAMA. 2013;310:1256-1262. doi: 10.1001/jama.2013.277817

7. Gourlay ML, Fine JP, Preisser JS, et al; Study of Osteoporotic Fractures Research Group. Bone-density testing interval and transition to osteoporosis in older women. N Engl J Med. 2012;366:225-233. doi: 10.1056/NEJMoa1107142

PRACTICE CHANGER

Do not routinely repeat bone density testing 3 years after initial screening in postmenopausal patients who do not have osteoporosis.

STRENGTH OF RECOMMENDATION

A: Based on several large, good-quality prospective cohort studies1

1. Crandall CJ, Larson J, Wright NC, et al. Serial bone density measurement and incident fracture risk discrimination in postmenopausal women. JAMA Intern Med. 2020;180:1232-1240. doi: 10.1001/jamainternmed.2020.2986

Validated scoring system identifies low-risk syncope patients

ILLUSTRATIVE CASE

A 30-year-old woman presented to the ED after she “passed out” while standing at a concert. She lost consciousness for 10 seconds. After she revived, her friends drove her to the ED. She is healthy, with no chronic medical conditions, no medication use, and no drug or alcohol use. Should she be admitted to the hospital for observation?

Syncope, a transient loss of consciousness followed by spontaneous complete recovery, accounts for 1% of ED visits.2 Approximately 10% of patients presenting to the ED will have a serious underlying condition identified, and among 3% to 5% of these patients with syncope, the serious condition will be identified only after they leave the ED.1 Most patients have a benign course, but more than half of all patients presenting to the ED with syncope will be hospitalized, costing $2.4 billion annually.2

Because of the high hospitalization rate of patients with syncope, a practical and accurate tool to risk-stratify patients is vital. Other tools, such as the San Francisco Syncope Rule, Short-Term Prognosis of Syncope, and Risk Stratification of Syncope in the Emergency Department, lack validation or are excessively complex, with extensive lab work or testing.3

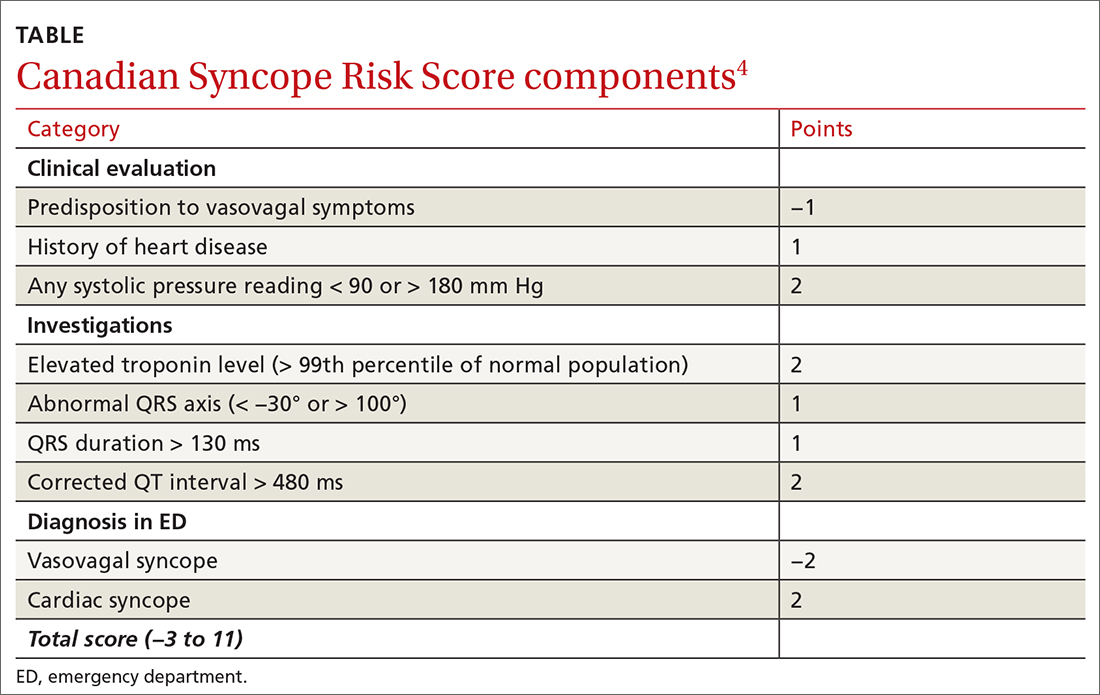

The CSRS was previously derived from a large, multisite consecutive cohort, and was internally validated and reported according to the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis guideline statement.4 Patients are assigned points based on clinical findings, test results, and the diagnosis given in the ED (TABLE4). The scoring system is used to stratify patients as very low (−3, −2), low (−1, 0), medium (1, 2, 3), high (4, 5), or very high (≥6) risk.4
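The risk bands above can be expressed as a simple lookup. This is a minimal sketch of the stratification step only, assuming the total CSRS has already been calculated from the point assignments in the table; the function name is hypothetical, and the code is illustrative rather than a clinical tool.

```python
def csrs_risk_category(total_score: int) -> str:
    """Map a total Canadian Syncope Risk Score to the risk band described
    in the text. Assumes the total was computed elsewhere."""
    if total_score <= -2:   # the text lists -3 and -2 as the very low band
        return "very low"
    if total_score <= 0:    # -1, 0
        return "low"
    if total_score <= 3:    # 1, 2, 3
        return "medium"
    if total_score <= 5:    # 4, 5
        return "high"
    return "very high"      # 6 or more


for score in (-3, 0, 2, 5, 6):
    print(score, csrs_risk_category(score))
```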

STUDY SUMMARY

Less than 1% of very low– and low-risk patients had serious 30-day outcomes

This multisite Canadian prospective validation cohort study enrolled patients age ≥ 16 years who presented to the ED within 24 hours of syncope. Both discharged and hospitalized patients were included.1

Patients were excluded if they had loss of consciousness for > 5 minutes, mental status changes at presentation, history of current or previous seizure, or head trauma

ED physicians confirmed patient eligibility, obtained verbal consent, and completed the data collection form. In addition, research assistants sought to identify eligible patients who were not previously enrolled by reviewing all ED visits during the study period.

To examine 30-day outcomes, researchers reviewed all available patient medical record

A total of 4131 patients made up the validation cohort. A serious condition was identified during the initial ED visit in 160 patients (3.9%), who were excluded from the study, and 152 patients (3.7%) were lost to follow-up. Of the 3819 patients included in the final analysis, troponin was not measured in 1566 patients (41%), and an electrocardiogram was not obtained in 114 patients (3%). A serious outcome within 30 days was experienced by 139 patients (3.6%; 95% CI, 3.1%-4.3%). There was good correlation to the model-predicted serious outcome probability of 3.2% (95% CI, 2.7%-3.8%).1

Three of 1631 (0.2%) patients classified as very low risk and 9 of 1254 (0.7%) low-risk patients experienced a serious outcome, and no patients died. In the group classified as medium risk, 55 of 687 (8%) patients experienced a serious outcome, and there was 1 death. In the high-risk group, 32 of 167 (19.2%) patients experienced a serious outcome, and there were 5 deaths. In the group classified as very high risk, 40 of 78 (51.3%) patients experienced a serious outcome, and there were 7 deaths. The CSRS was able to identify very low– or low-risk patients (score of −1 or better) with a sensitivity of 97.8% (95% CI, 93.8%-99.6%) and a specificity of 44.3% (95% CI, 42.7%-45.9%).1
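The per-stratum percentages can be rechecked directly from the counts in the paragraph above. The sketch below does that arithmetic and then computes sensitivity and specificity under one stated assumption: that only the very low-risk stratum is treated as test-negative. That cutoff is an inference from the numbers, not something the article states, and the variable names are illustrative. The stratum counts as reported sum to 3817, slightly fewer than the 3819 patients in the final analysis, so the denominators here use the stratum totals.

```python
# (stratum, patients, serious outcomes within 30 days) as reported in the text
strata = [
    ("very low", 1631, 3),
    ("low", 1254, 9),
    ("medium", 687, 55),
    ("high", 167, 32),
    ("very high", 78, 40),
]

# Serious-outcome rate per stratum, as a percentage rounded to 1 decimal place
rates = {name: round(100 * events / n, 1) for name, n, events in strata}

total_patients = sum(n for _, n, _ in strata)
total_events = sum(events for _, _, events in strata)

# Assumption: a very low-risk score counts as a negative test; all else positive.
_, negative_n, negative_events = strata[0]
sensitivity = (total_events - negative_events) / total_events
specificity = (negative_n - negative_events) / (total_patients - total_events)

print(rates)
print(round(100 * sensitivity, 1), round(100 * specificity, 1))
```

Under that assumption the calculation gives 97.8% sensitivity and 44.3% specificity, in line with the reported point estimates.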

WHAT’S NEW

This scoring system offers a validated method to risk-stratify ED patients

Previous recommendations from the American College of Cardiology/American Heart Association suggested determining disposition of ED patients by using clinical judgment based on a list of risk factors such as age, chronic conditions, and medications. However, there was no scoring system.3 This new scoring system allows physicians to send home very low– and low-risk patients with reassurance that the likelihood of a serious outcome is less than 1%. High-risk and very high–risk patients should be admitted to the hospital for further evaluation. Most moderate-risk patients (8% risk of serious outcome but 0.1% risk of death) can also be discharged after providers have a risk/benefit discussion, including precautions for signs of arrhythmia or need for urgent return to the hospital.

CAVEATS

The study does not translate to all clinical settings

Because this study was done in EDs, the scoring system cannot necessarily be applied to urgent care or outpatient settings. However, 41% of the patients in the study did not have troponin testing performed. Therefore, physicians could consider using the scoring system in settings where this lab test is not immediately available.

This scoring system was also only validated with adult patients presenting within 24 hours of their syncopal episode. It is unknown how it may predict the outcomes of patients who present > 24 hours after syncope.

CHALLENGES TO IMPLEMENTATION

Clinicians may not be aware of the CSRS scoring system

The main challenge to implementation is practitioner awareness of the CSRS scoring system and how to use it appropriately, as there are several different syncopal scoring systems that may already be in use. Additionally, depending on the electronic health record used, the CSRS scoring system may not be embedded. Using and documenting scores may also be a challenge.

ACKNOWLEDGEMENT

The PURLs Surveillance System was supported in part by Grant Number UL1RR024999 from the National Center for Research Resources, a Clinical Translational Science Award to the University of Chicago. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Center for Research Resources or the National Institutes of Health.

1. Thiruganasambandamoorthy V, Sivilotti MLA, Le Sage N, et al. Multicenter emergency department validation of the Canadian Syncope Risk Score. JAMA Intern Med. 2020;180:737-744. doi:10.1001/jamainternmed.2020.0288

2. Probst MA, Kanzaria HK, Gbedemah M, et al. National trends in resource utilization associated with ED visits for syncope. Am J Emerg Med. 2015;33:998-1001. doi:10.1016/j.ajem.2015.04.030

3. Shen WK, Sheldon RS, Benditt DG, et al. 2017 ACC/AHA/HRS guideline for the evaluation and management of patients with syncope: executive summary: a report of the American College of Cardiology/American Heart Association Task Force on Clinical Practice Guidelines and the Heart Rhythm Society. J Am Coll Cardiol. 2017;70:620-663. doi:10.1016/j.jacc.2017.03.002

4. Thiruganasambandamoorthy V, Kwong K, Wells GA, et al. Development of the Canadian Syncope Risk Score to predict serious adverse events after emergency department assessment of syncope. CMAJ. 2016;188:E289-E298. doi:10.1503/cmaj.151469

ILLUSTRATIVE CASE

A 30-year-old woman presented to the ED after she “passed out” while standing at a concert. She lost consciousness for 10 seconds. After she revived, her friends drove her to the ED. She is healthy, with no chronic medical conditions, no medication use, and no drug or alcohol use. Should she be admitted to the hospital for observation?

Syncope, a transient loss of consciousness followed by spontaneous complete recovery, accounts for 1% of ED visits.2 Approximately 10% of patients presenting to the ED will have a serious underlying condition identified and among 3% to 5% of these patients with syncope, the serious condition will be identified only after they leave the ED.1 Most patients have a benign course, but more than half of all patients presenting to the ED with syncope will be hospitalized, costing $2.4 billion annually.2

Because of the high hospitalization rate of patients with syncope, a practical and accurate tool to risk-stratify patients is vital. Other tools, such as the San Francisco Syncope Rule, Short-Term Prognosis of Syncope, and Risk Stratification of Syncope in the Emergency Department, lack validation or are excessively complex, with extensive lab work or testing.3

The CSRS was previously derived from a large, multisite consecutive cohort, and was internally validated and reported according to the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis guideline statement.4 Patients are assigned points based on clinical findings, test results, and the diagnosis given in the ED (TABLE4). The scoring system is used to stratify patients as very low (−3, −2), low (−1, 0), medium (1, 2, 3), high (4, 5), or very high (≥6) risk.4

STUDY SUMMARY

Less than 1% of very low– and low-risk patients had serious 30-day outcomes

This multisite Canadian prospective validation cohort study enrolled patients age ≥ 16 years who presented to the ED within 24 hours of syncope. Both discharged and hospitalized patients were included.1

Patients were excluded if they had loss of consciousness for > 5 minutes, mental status changes at presentation, history of current or previous seizure, or head trauma

ED physicians confirmed patient eligibility, obtained verbal consent, and completed the data collection form. In addition, research assistants sought to identify eligible patients who were not previously enrolled by reviewing all ED visits during the study period.

Continue to: To examine 30-day outcomes...

To examine 30-day outcomes, researchers reviewed all available patient medical record

A total of 4131 patients made up the validation cohort. A serious condition was identified during the initial ED visit in 160 patients (3.9%), who were excluded from the study, and 152 patients (3.7%) were lost to follow-up. Of the 3819 patients included in the final analysis, troponin was not measured in 1566 patients (41%), and an electrocardiogram was not obtained in 114 patients (3%). A serious outcome within 30 days was experienced by 139 patients (3.6%; 95% CI, 3.1%-4.3%). There was good correlation to the model-predicted serious outcome probability of 3.2% (95% CI, 2.7%-3.8%).1

Three of 1631 (0.2%) patients classified as very low risk and 9 of 1254 (0.7%) low-risk patients experienced a serious outcome, and no patients died. In the group classified as medium risk, 55 of 687 (8%) patients experienced a serious outcome, and there was 1 death. In the high-risk group, 32 of 167 (19.2%) patients experienced a serious outcome, and there were 5 deaths. In the group classified as very high risk, 40 of 78 (51.3%) patients experienced a serious outcome, and there were 7 deaths. The CSRS was able to identify very low– or low-risk patients (score of −1 or better) with a sensitivity of 97.8% (95% CI, 93.8%-99.6%) and a specificity of 44.3% (95% CI, 42.7%-45.9%).1

WHAT’S NEW

This scoring system offers a validated method to risk-stratify ED patients

Previous recommendations from the American College of Cardiology/American Heart Associationsuggested determining disposition of ED patients by using clinical judgment based on a list of risk factors such as age, chronic conditions, and medications. However, there was no scoring system.3 This new scoring system allows physicians to send home very low– and low-risk patients with reassurance that the likelihood of a serious outcome is less than 1%. High-risk and very high–risk patients should be admitted to the hospital for further evaluation. Most moderate-risk patients (8% risk of serious outcome but 0.1% risk of death) can also be discharged after providers have a risk/benefit discussion, including precautions for signs of arrhythmia or need for urgent return to the hospital.

CAVEATS

The study does not translate to all clinical settings

Because this study was done in EDs, the scoring system cannot necessarily be applied to urgent care or outpatient settings. However, 41% of the patients in the study did not have troponin testing performed. Therefore, physicians could consider using the scoring system in settings where this lab test is not immediately available.

This scoring system was also only validated with adult patients presenting within 24 hours of their syncopal episode. It is unknown how it may predict the outcomes of patients who present > 24 hours after syncope.

CHALLENGES TO IMPLEMENTATION

Clinicians may not be aware of the CSRS scoring system

The main challenge to implementation is practitioner awareness of the CSRS scoring system and how to use it appropriately, as there are several different syncopal scoring systems that may already be in use. Additionally, depending on the electronic health record used, the CSRS scoring system may not be embedded. Using and documenting scores may also be a challenge.

ACKNOWLEDGEMENT

The PURLs Surveillance System was supported in part by Grant Number UL1RR024999 from the National Center for Research Resources, a Clinical Translational Science Award to the University of Chicago. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Center for Research Resources or the National Institutes of Health.

1. Thiruganasambandamoorthy V, Sivilotti MLA, Le Sage N, et al. Multicenter emergency department validation of the Canadian Syncope Risk Score. JAMA Intern Med. 2020;180:737-744. doi:10.1001/jamainternmed.2020.0288

2. Probst MA, Kanzaria HK, Gbedemah M, et al. National trends in resource utilization associated with ED visits for syncope. Am J Emerg Med. 2015;33:998-1001. doi:10.1016/j.ajem.2015.04.030

3. Shen WK, Sheldon RS, Benditt DG, et al. 2017 ACC/AHA/HRS guideline for the evaluation and management of patients with syncope: executive summary: a report of the American College of Cardiology/American Heart Association Task Force on Clinical Practice Guidelines and the Heart Rhythm Society. J Am Coll Cardiol. 2017;70:620-663. doi:10.1016/j.jacc.2017.03.002

4. Thiruganasambandamoorthy V, Kwong K, Wells GA, et al. Development of the Canadian Syncope Risk Score to predict serious adverse events after emergency department assessment of syncope. CMAJ. 2016;188:E289-E298. doi:10.1503/cmaj.151469

PRACTICE CHANGER

Physicians should use the Canadian Syncope Risk Score (CSRS) to identify and send home very low– and low-risk patients from the emergency department (ED) after a syncopal episode.

STRENGTH OF RECOMMENDATION

A: Validated clinical decision rule based on a prospective cohort study1

Thiruganasambandamoorthy V, Sivilotti MLA, Le Sage N, et al. Multicenter emergency department validation of the Canadian Syncope Risk Score. JAMA Intern Med. 2020;180:737-744. doi:10.1001/jamainternmed.2020.0288

Monotherapy for nonvalvular A-fib with stable CAD?

ILLUSTRATIVE CASE

A 67-year-old man with a history of coronary artery stenting 7 years prior and nonvalvular atrial fibrillation (AF) that is well controlled with a beta-blocker comes in for a routine health maintenance visit. You note that the patient takes warfarin, metoprolol, and aspirin. The patient has not had any thrombotic or bleeding events in his lifetime. Does this patient need to take both warfarin and aspirin? Do the antithrombotic benefits of dual therapy outweigh the risk of bleeding?

Antiplatelet agents have long been recommended for secondary prevention of cardiovascular (CV) events in patients with ischemic heart disease (IHD). The goal is to reduce the risk of coronary artery thrombosis.2 Many patients with IHD also develop AF and are treated with oral anticoagulants (OACs) such as warfarin or direct oral anticoagulants (DOACs) to prevent thromboembolic events.

There has been a paucity of data to determine the risks and benefits of OAC monotherapy compared to OAC plus single antiplatelet therapy (SAPT). Given research that shows increased risks of bleeding and all-cause mortality when aspirin is used for primary prevention of CV disease,3,4 it is prudent to examine if the harms of aspirin outweigh its benefits for the secondary prevention of acute coronary events in patients already taking antithrombotic agents.

STUDY SUMMARY

Reduced bleeding risk, with no difference in major adverse cardiovascular events

This study by Lee and colleagues1 was a meta-analysis of 8855 patients with nonvalvular AF and stable coronary artery disease (CAD), from 6 studies comparing OAC monotherapy vs OAC plus SAPT. The meta-analysis involved 3 studies using patient registries, 2 cohort studies, and an open-label randomized trial that together spanned the period from 2002 to 2016. The longest study period was 9 years (1 study) and the shortest, 1 year (2 studies). Oral anticoagulation consisted of either vitamin K antagonist (VKA) therapy (the majority of the patients studied) or DOAC therapy (8.6% of the patients studied). SAPT was either aspirin or clopidogrel.

The primary outcome measure was major adverse CV events (MACE). Secondary outcome measures included major bleeding, stroke, all-cause mortality, and net adverse events. The definitions used by the studies for major bleeding were deemed “largely consistent” with the International Society on Thrombosis and Haemostasis major bleeding criteria, ie, fatal bleeding, symptomatic bleeding in a critical area or organ (intracranial, intraspinal, intraocular, retroperitoneal, intra-articular, pericardial, or intramuscular causing compartment syndrome), or a drop in hemoglobin (≥ 2 g/dL or requiring transfusion of ≥ 2 units of whole blood or red cells).5

There was no difference in MACE between the monotherapy and OAC plus SAPT groups (hazard ratio [HR] = 1.09; 95% CI, 0.92-1.29). Similarly, there were no differences in stroke and all-cause mortality between the groups. However, the OAC plus SAPT group had a significantly higher risk of major bleeding (HR = 1.61; 95% CI, 1.38-1.87) and net adverse events (HR = 1.21; 95% CI, 1.02-1.43) than the OAC monotherapy group.
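A quick way to read these hazard ratios is to check whether each 95% CI contains 1, the value indicating no difference between groups. The sketch below does that for the three results quoted above. The function names are illustrative, and the approximate z statistic assumes the intervals are symmetric on the log scale (a common convention, not something stated in this summary).

```python
import math

# (hazard ratio, lower 95% limit, upper 95% limit), OAC plus SAPT vs OAC monotherapy
results = {
    "MACE": (1.09, 0.92, 1.29),
    "major bleeding": (1.61, 1.38, 1.87),
    "net adverse events": (1.21, 1.02, 1.43),
}

def excludes_null(lower, upper, null=1.0):
    """True when the confidence interval lies entirely on one side of the null value."""
    return lower > null or upper < null

def approx_z(hr, lower, upper):
    """Approximate z statistic, ASSUMING a 95% CI symmetric on the log scale."""
    se = (math.log(upper) - math.log(lower)) / (2 * 1.96)
    return math.log(hr) / se

significant = {name: excludes_null(lo, hi) for name, (_, lo, hi) in results.items()}
z_scores = {name: approx_z(*values) for name, values in results.items()}

for name in results:
    print(f"{name}: CI excludes 1 = {significant[name]}, approx z = {z_scores[name]:.2f}")
```

The MACE interval spans 1, whereas the intervals for major bleeding and net adverse events lie entirely above it, matching the description of no difference in MACE but higher bleeding and net adverse event risk with added SAPT.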

This study’s limitations included its low percentage of patients taking a DOAC. Also, due to variations in methods of reporting CHA2DS2-VASc and HAS-BLED scores among the studies (for risk of stroke in patients with nonrheumatic AF and for risk of bleeding in AF patients taking anticoagulants), this meta-analysis could not determine if different outcomes might be found in patients with different CHA2DS2-VASc and HAS-BLED scores.

WHAT’S NEW

OAC monotherapy benefit for patients with nonvalvular AF

This study strongly suggests that there is a large subgroup of patients with stable CAD for whom SAPT should not be prescribed as a preventive medication: patients with nonvalvular AF who are receiving OAC therapy. This study concurs with the results of the 2019 AFIRE (Atrial Fibrillation and Ischemic Events with Rivaroxaban in Patients with Stable Coronary Artery Disease) trial in Japan, in which 2236 patients with stable IHD (coronary artery bypass grafting, stenting, or cardiac catheterization > 1 year earlier) were randomized to receive rivaroxaban either alone or with an antiplatelet agent. All-cause mortality and major bleeding were lower in the monotherapy group.6

This meta-analysis calls into question the baseline recommendation from the 2012 American College of Cardiology Foundation/American Heart Association (ACCF/AHA) guideline to prescribe aspirin indefinitely for patients with stable CAD unless there is a contraindication (oral anticoagulation is not listed as a contraindication).2 The 2020 ACC Expert Consensus Decision Pathway,7 published in February 2021, stated that for patients requiring long-term anticoagulation therapy who have completed 12 months of SAPT after percutaneous coronary intervention, anticoagulation therapy alone “could be used long-term”; however, the 2019 study by Lee was not listed among its references. Inclusion of the Lee study might have contributed to a stronger recommendation.

Also, the new guidelines include clinical situations in which dual therapy could still be continued: “… if perceived thrombotic risk is high (eg, prior myocardial infarction, complex lesions, presence of select traditional cardiovascular risk factors, or extensive [atherosclerotic cardiovascular disease]), and the patient is at low bleeding risk.” The guidelines state that in this situation, “… it is reasonable to continue SAPT beyond 12 months (in line with prior ACC/AHA recommendations).”7 However, the cited study compared dual therapy (dabigatran plus APT) to warfarin triple therapy. Single OAC therapy was not studied.8

CAVEATS

DOAC patient population was not well represented

The study had a low percentage of patients taking a DOAC. Also, because there were variations in how the studies reported CHA2DS2-VASc and HAS-BLED scores, this meta-analysis was unable to determine if different scores might have produced different outcomes. However, the studies involving registries had the advantage of looking at the data for this population over long periods of time and included a wide variety of patients, making the recommendation likely valid.

CHALLENGES TO IMPLEMENTATION

Primary care approach may not sync with specialist practice

We see no challenges to implementation except for potential differences between primary care physicians and specialists regarding the use of antiplatelet agents in this patient population.

ACKNOWLEDGEMENT

The PURLs Surveillance System was supported in part by Grant Number UL1RR024999 from the National Center for Research Resources, a Clinical Translational Science Award to the University of Chicago. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Center for Research Resources or the National Institutes of Health.

1. Lee SR, Rhee TM, Kang DY, et al. Meta-analysis of oral anticoagulant monotherapy as an antithrombotic strategy in patients with stable coronary artery disease and nonvalvular atrial fibrillation. Am J Cardiol. 2019;124:879-885. doi: 10.1016/j.amjcard.2019.05.072

2. Fihn SD, Gardin JM, Abrams J, et al; American College of Cardiology Foundation; American Heart Association Task Force on Practice Guidelines; American College of Physicians; American Association for Thoracic Surgery; Preventive Cardiovascular Nurses Association; Society for Cardiovascular Angiography and Interventions; Society of Thoracic Surgeons. 2012 ACCF/AHA/ACP/AATS/PCNA/SCAI/STS guideline for the diagnosis and management of patients with stable ischemic heart disease: a report of the American College of Cardiology Foundation/American Heart Association Task Force on Practice Guidelines, and the American College of Physicians, American Association for Thoracic Surgery, Preventive Cardiovascular Nurses Association, Society for Cardiovascular Angiography and Interventions, and Society of Thoracic Surgeons. J Am Coll Cardiol. 2012;60:e44-e164.

3. Whitlock EP, Burda BU, Williams SB, et al. Bleeding risks with aspirin use for primary prevention in adults: a systematic review for the U.S. Preventive Services Task Force. Ann Intern Med. 2016;164:826-835. doi: 10.7326/M15-2112

4. McNeil JJ, Nelson MR, Woods RL, et al; ASPREE Investigator Group. Effect of aspirin on all-cause mortality in the healthy elderly. N Engl J Med. 2018;379:1519-1528. doi: 10.1056/NEJMoa1803955

5. Schulman S, Kearon C; Subcommittee on Control of Anticoagulation of the Scientific and Standardization Committee of the International Society on Thrombosis and Haemostasis. Definition of major bleeding in clinical investigations of antihemostatic medicinal products in non-surgical patients. J Thromb Haemost. 2005;3:692-694. doi: 10.1111/j.1538-7836.2005.01204.x

6. Yasuda S, Kaikita K, Akao M, et al; AFIRE Investigators. Antithrombotic therapy for atrial fibrillation with stable coronary disease. N Engl J Med. 2019;381:1103-1113. doi: 10.1056/NEJMoa1904143

7. Kumbhani DJ, Cannon CP, Beavers CJ, et al. 2020 ACC expert consensus decision pathway for anticoagulant and antiplatelet therapy in patients with atrial fibrillation or venous thromboembolism undergoing percutaneous coronary intervention or with atherosclerotic cardiovascular disease: a report of the American College of Cardiology Solution Set Oversight Committee. J Am Coll Cardiol. 2021;77:629-658. doi: 10.1016/j.jacc.2020.09.011

8. Berry NC, Mauri L, Steg PG, et al. Effect of lesion complexity and clinical risk factors on the efficacy and safety of dabigatran dual therapy versus warfarin triple therapy in atrial fibrillation after percutaneous coronary intervention: a subgroup analysis from the REDUAL PCI trial. Circ Cardiovasc Interv. 2020;13:e008349. doi: 10.1161/CIRCINTERVENTIONS.119.008349

ILLUSTRATIVE CASE

A 67-year-old man with a history of coronary artery stenting 7 years prior and nonvalvular AF that is well controlled with a beta-blocker comes in for a routine health maintenance visit. You note that the patient takes warfarin, metoprolol, and aspirin. The patient has not had any thrombotic or bleeding events in his lifetime. Does this patient need to take both warfarin and aspirin? Do the antithrombotic benefits of dual therapy outweigh the risk of bleeding?

Antiplatelet agents have long been recommended for secondary prevention of cardiovascular (CV) events in patients with IHD. The goal is to reduce the risk of coronary artery thrombosis.2 Many patients with IHD also develop AF and are treated with OACs such as warfarin or direct oral anticoagulants (DOACs) to prevent thromboembolic events.

There has been a paucity of data to determine the risks and benefits of OAC monotherapy compared to OAC plus single antiplatelet therapy (SAPT). Given research that shows increased risks of bleeding and all-cause mortality when aspirin is used for primary prevention of CV disease,3,4 it is prudent to examine if the harms of aspirin outweigh its benefits for the secondary prevention of acute coronary events in patients already taking antithrombotic agents.

STUDY SUMMARY

Reduced bleeding risk, with no difference in major adverse cardiovascular events

This study by Lee and colleagues1 was a meta-analysis of 8855 patients with nonvalvular AF and stable coronary artery disease (CAD), from 6 trials comparing OAC monotherapy vs OAC plus SAPT. The meta-analysis involved 3 studies using patient registries, 2 cohort studies, and an open-label randomized trial that together spanned the period from 2002 to 2016. The longest study period was 9 years (1 study) and the shortest, 1 year (2 studies). Oral anticoagulation consisted of either vitamin K antagonist (VKA) therapy (the majority of the patients studied) or DOAC therapy (8.6% of the patients studied). SAPT was either aspirin or clopidogrel.

The primary outcome measure was major adverse CV events (MACE). Secondary outcome measures included major bleeding, stroke, all-cause mortality, and net adverse events. The definitions used by the studies for major bleeding were deemed “largely consistent” with the International Society on Thrombosis and Haemostasis major bleeding criteria, ie, fatal bleeding, symptomatic bleeding in a critical area or organ (intracranial, intraspinal, intraocular, retroperitoneal, intra-articular, pericardial, or intramuscular causing compartment syndrome), or a drop in hemoglobin (≥ 2 g/dL or requiring transfusion of ≥ 2 units of whole blood or red cells).5

There was no difference in MACE between the monotherapy and OAC plus SAPT groups (hazard ratio [HR] = 1.09; 95% CI, 0.92-1.29). Similarly, there were no differences in stroke and all-cause mortality between the groups. However, there was a significant association of higher risk of major bleeding (HR = 1.61; 95% CI, 1.38-1.87) and net adverse events (HR = 1.21; 95% CI, 1.02-1.43) in the OAC plus SAPT group compared with the OAC monotherapy group.

This study’s limitations included its low percentage of patients taking a DOAC. Also, due to variations in methods of reporting CHA2DS2-VASc and HAS-BLED scores among the studies (for risk of stroke in patients with nonrheumatic AF and for risk of bleeding in AF patients taking anticoagulants), this meta-analysis could not determine if different outcomes might be found in patients with different CHA2DS2-VASc and HAS-BLED scores.

Continue to: WHAT'S NEW

WHAT’S NEW

OAC monotherapy benefit for patients with nonvalvular AF

This study strongly suggests that there is a large subgroup of patients with stable CAD for whom SAPT should not be prescribed as a preventive medication: patients with nonvalvular AF who are receiving OAC therapy. This study concurs with the results of the 2019 AFIRE (Atrial Fibrillation and Ischemic Events with Rivaroxaban in Patients with Stable Coronary Artery Disease) trial in Japan, in which 2236 patients with stable IHD (coronary artery bypass grafting, stenting, or cardiac catheterization > 1 year earlier) were randomized to receive rivaroxaban either alone or with an antiplatelet agent. All-cause mortality and major bleeding were lower in the monotherapy group.6

This meta-analysis calls into question the baseline recommendation from the 2012 American College of Cardiology Foundation/American Heart Association (ACCF/AHA) guideline to prescribe aspirin indefinitely for patients with stable CAD unless there is a contraindication (oral anticoagulation is not listed as a contraindication).2 The 2020 ACC Expert Consensus Decision Pathway7 published in February 2021 stated that for patients requiring long-term anticoagulation therapy who have completed 12 months of SAPT after percutaneous coronary intervention, anticoagulation therapy alone “could be used long-term”; however, the 2019 study by Lee was not listed among their references. Inclusion of the Lee study might have contributed to a stronger recommendation.

Also, the new guidelines include clinical situations in which dual therapy could still be continued: “… if perceived thrombotic risk is high (eg, prior myocardial infarction, complex lesions, presence of select traditional cardiovascular risk factors, or extensive [atherosclerotic cardiovascular disease]), and the patient is at low bleeding risk.” The guidelines state that in this situation, “… it is reasonable to continue SAPT beyond 12 months (in line with prior ACC/AHA recommendations).”7 However, the cited study compared dual therapy (dabigatran plus APT) to warfarin triple therapy. Single OAC therapy was not studied.8

CAVEATS

DOAC patient populationwas not well represented

The study had a low percentage of patients taking a DOAC. Also, because there were variations in how the studies reported CHA2DS2-VASc and HAS-BLED scores, this meta-analysis was unable to determine if different scores might have produced different outcomes. However, the studies involving registries had the advantage of looking at the data for this population over long periods of time and included a wide variety of patients, making the recommendation likely valid.

CHALLENGES TO IMPLEMENTATION

Primary care approach may not sync with specialist practice

We see no challenges to implementation except for potential differences between primary care physicians and specialists regarding the use of antiplatelet agents in this patient population.

ACKNOWLEDGEMENT

The PURLs Surveillance System was supported in part by Grant Number UL1RR024999 from the National Center for Research Resources, a Clinical Translational Science Award to the University of Chicago. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Center for Research Resources or the National Institutes of Health.

ILLUSTRATIVE CASE

A 67-year-old man with a history of coronary artery stenting 7 years prior and nonvalvular AF that is well controlled with a beta-blocker comes in for a routine health maintenance visit. You note that the patient takes warfarin, metoprolol, and aspirin. The patient has not had any thrombotic or bleeding events in his lifetime. Does this patient need to take both warfarin and aspirin? Do the antithrombotic benefits of dual therapy outweigh the risk of bleeding?

Antiplatelet agents have long been recommended for secondary prevention of cardiovascular (CV) events in patients with IHD. The goal is to reduce the risk of coronary artery thrombosis.2 Many patients with IHD also develop AF and are treated with OACs such as warfarin or direct oral anticoagulants (DOACs) to prevent thromboembolic events.

There has been a paucity of data to determine the risks and benefits of OAC monotherapy compared to OAC plus single antiplatelet therapy (SAPT). Given research that shows increased risks of bleeding and all-cause mortality when aspirin is used for primary prevention of CV disease,3,4 it is prudent to examine if the harms of aspirin outweigh its benefits for the secondary prevention of acute coronary events in patients already taking antithrombotic agents.

STUDY SUMMARY

Reduced bleeding risk, with no difference in major adverse cardiovascular events

This study by Lee and colleagues1 was a meta-analysis of 8855 patients with nonvalvular AF and stable coronary artery disease (CAD), from 6 trials comparing OAC monotherapy vs OAC plus SAPT. The meta-analysis involved 3 studies using patient registries, 2 cohort studies, and an open-label randomized trial that together spanned the period from 2002 to 2016. The longest study period was 9 years (1 study) and the shortest, 1 year (2 studies). Oral anticoagulation consisted of either vitamin K antagonist (VKA) therapy (the majority of the patients studied) or DOAC therapy (8.6% of the patients studied). SAPT was either aspirin or clopidogrel.

The primary outcome measure was major adverse CV events (MACE). Secondary outcome measures included major bleeding, stroke, all-cause mortality, and net adverse events. The definitions used by the studies for major bleeding were deemed “largely consistent” with the International Society on Thrombosis and Haemostasis major bleeding criteria, ie, fatal bleeding, symptomatic bleeding in a critical area or organ (intracranial, intraspinal, intraocular, retroperitoneal, intra-articular, pericardial, or intramuscular causing compartment syndrome), or a drop in hemoglobin (≥ 2 g/dL or requiring transfusion of ≥ 2 units of whole blood or red cells).5

There was no difference in MACE between the monotherapy and OAC plus SAPT groups (hazard ratio [HR] = 1.09; 95% CI, 0.92-1.29). Similarly, there were no differences in stroke and all-cause mortality between the groups. However, there was a significant association of higher risk of major bleeding (HR = 1.61; 95% CI, 1.38-1.87) and net adverse events (HR = 1.21; 95% CI, 1.02-1.43) in the OAC plus SAPT group compared with the OAC monotherapy group.

This study’s limitations included its low percentage of patients taking a DOAC. Also, due to variations in methods of reporting CHA2DS2-VASc and HAS-BLED scores among the studies (for risk of stroke in patients with nonrheumatic AF and for risk of bleeding in AF patients taking anticoagulants), this meta-analysis could not determine if different outcomes might be found in patients with different CHA2DS2-VASc and HAS-BLED scores.

Continue to: WHAT'S NEW

WHAT’S NEW

OAC monotherapy benefit for patients with nonvalvular AF

This study strongly suggests that there is a large subgroup of patients with stable CAD for whom SAPT should not be prescribed as a preventive medication: patients with nonvalvular AF who are receiving OAC therapy. This study concurs with the results of the 2019 AFIRE (Atrial Fibrillation and Ischemic Events with Rivaroxaban in Patients with Stable Coronary Artery Disease) trial in Japan, in which 2236 patients with stable IHD (coronary artery bypass grafting, stenting, or cardiac catheterization > 1 year earlier) were randomized to receive rivaroxaban either alone or with an antiplatelet agent. All-cause mortality and major bleeding were lower in the monotherapy group.6

This meta-analysis calls into question the baseline recommendation from the 2012 American College of Cardiology Foundation/American Heart Association (ACCF/AHA) guideline to prescribe aspirin indefinitely for patients with stable CAD unless there is a contraindication (oral anticoagulation is not listed as a contraindication).2 The 2020 ACC Expert Consensus Decision Pathway7 published in February 2021 stated that for patients requiring long-term anticoagulation therapy who have completed 12 months of SAPT after percutaneous coronary intervention, anticoagulation therapy alone “could be used long-term”; however, the 2019 study by Lee was not listed among their references. Inclusion of the Lee study might have contributed to a stronger recommendation.

Also, the new guidelines include clinical situations in which dual therapy could still be continued: “… if perceived thrombotic risk is high (eg, prior myocardial infarction, complex lesions, presence of select traditional cardiovascular risk factors, or extensive [atherosclerotic cardiovascular disease]), and the patient is at low bleeding risk.” The guidelines state that in this situation, “… it is reasonable to continue SAPT beyond 12 months (in line with prior ACC/AHA recommendations).”7 However, the cited study compared dual therapy (dabigatran plus APT) to warfarin triple therapy. Single OAC therapy was not studied.8

CAVEATS

DOAC patient population was not well represented

The study had a low percentage of patients taking a DOAC. Also, because there were variations in how the studies reported CHA2DS2-VASc and HAS-BLED scores, this meta-analysis was unable to determine if different scores might have produced different outcomes. However, the studies involving registries had the advantage of looking at the data for this population over long periods of time and included a wide variety of patients, making the recommendation likely valid.

CHALLENGES TO IMPLEMENTATION

Primary care approach may not sync with specialist practice

We see no challenges to implementation except for potential differences between primary care physicians and specialists regarding the use of antiplatelet agents in this patient population.

ACKNOWLEDGEMENT

The PURLs Surveillance System was supported in part by Grant Number UL1RR024999 from the National Center for Research Resources, a Clinical Translational Science Award to the University of Chicago. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Center for Research Resources or the National Institutes of Health.

1. Lee SR, Rhee TM, Kang DY, et al. Meta-analysis of oral anticoagulant monotherapy as an antithrombotic strategy in patients with stable coronary artery disease and nonvalvular atrial fibrillation. Am J Cardiol. 2019;124:879-885. doi: 10.1016/j.amjcard.2019.05.072

2. Fihn SD, Gardin JM, Abrams J, et al; American College of Cardiology Foundation; American Heart Association Task Force on Practice Guidelines; American College of Physicians; American Association for Thoracic Surgery; Preventive Cardiovascular Nurses Association; Society for Cardiovascular Angiography and Interventions; Society of Thoracic Surgeons. 2012 ACCF/AHA/ACP/AATS/PCNA/SCAI/STS guideline for the diagnosis and management of patients with stable ischemic heart disease: a report of the American College of Cardiology Foundation/American Heart Association Task Force on Practice Guidelines, and the American College of Physicians, American Association for Thoracic Surgery, Preventive Cardiovascular Nurses Association, Society for Cardiovascular Angiography and Interventions, and Society of Thoracic Surgeons. J Am Coll Cardiol. 2012;60:e44-e164.

3. Whitlock EP, Burda BU, Williams SB, et al. Bleeding risks with aspirin use for primary prevention in adults: a systematic review for the U.S. Preventive Services Task Force. Ann Intern Med. 2016;164:826-835. doi: 10.7326/M15-2112

4. McNeil JJ, Nelson MR, Woods RL, et al; ASPREE Investigator Group. Effect of aspirin on all-cause mortality in the healthy elderly. N Engl J Med. 2018;379:1519-1528. doi: 10.1056/NEJMoa1803955

5. Schulman S, Kearon C; Subcommittee on Control of Anticoagulation of the Scientific and Standardization Committee of the International Society on Thrombosis and Haemostasis. Definition of major bleeding in clinical investigations of antihemostatic medicinal products in non-surgical patients. J Thromb Haemost. 2005;3:692-694. doi: 10.1111/j.1538-7836.2005.01204.x

6. Yasuda S, Kaikita K, Akao M, et al; AFIRE Investigators. Antithrombotic therapy for atrial fibrillation with stable coronary disease. N Engl J Med. 2019;381:1103-1113. doi: 10.1056/NEJMoa1904143

7. Kumbhani DJ, Cannon CP, Beavers CJ, et al. 2020 ACC expert consensus decision pathway for anticoagulant and antiplatelet therapy in patients with atrial fibrillation or venous thromboembolism undergoing percutaneous coronary intervention or with atherosclerotic cardiovascular disease: a report of the American College of Cardiology Solution Set Oversight Committee. J Am Coll Cardiol. 2021;77:629-658. doi: 10.1016/j.jacc.2020.09.011

8. Berry NC, Mauri L, Steg PG, et al. Effect of lesion complexity and clinical risk factors on the efficacy and safety of dabigatran dual therapy versus warfarin triple therapy in atrial fibrillation after percutaneous coronary intervention: a subgroup analysis from the REDUAL PCI trial. Circ Cardiovasc Interv. 2020;13:e008349. doi: 10.1161/CIRCINTERVENTIONS.119.008349

PRACTICE CHANGER

Recommend the use of a single oral anticoagulant (OAC) over combination therapy with an OAC and an antiplatelet agent for patients with nonvalvular atrial fibrillation (AF) and stable ischemic heart disease (IHD). Doing so may confer the same benefits with fewer risks.

STRENGTH OF RECOMMENDATION

A: Meta-analysis of 7 trials1

Lee SR, Rhee TM, Kang DY, et al. Meta-analysis of oral anticoagulant monotherapy as an antithrombotic strategy in patients with stable coronary artery disease and nonvalvular atrial fibrillation. Am J Cardiol. 2019;124:879-885. doi: 10.1016/j.amjcard.2019.05.072

Updated USPSTF screening guidelines may reduce lung cancer deaths

ILLUSTRATIVE CASE

A 50-year-old woman presents to your office for a well-woman exam. Her past medical history includes a 22-pack-year smoking history (she quit 5 years ago), well-controlled hypertension, and mild obesity. She has no family history of cancer, but she does have a family history of type 2 diabetes and heart disease. Besides age- and risk-appropriate laboratory tests, cervical cancer screening, breast cancer screening, and initial colon cancer screening, are there any other preventive services you would offer her?

Lung cancer is the second most common cancer in both men and women, and it is the leading cause of cancer death in the United States—regardless of gender. The American Cancer Society estimates that 235,760 people will be diagnosed with lung cancer and 131,880 people will die of the disease in 2021.2

In the 2015 National Cancer Institute report on the economic costs of cancer, direct and indirect costs of lung cancer totaled $21.1 billion annually. Lost productivity from lung cancer added another $36.1 billion in annual costs.3 The economic costs increased to $23.8 billion in 2020, with no data on lost productivity.4

Smoking tobacco is by far the primary risk factor for lung cancer, and it is estimated to account for 90% of all lung cancer cases. Compared with nonsmokers, the relative risk of lung cancer is approximately 20 times higher for smokers.5,6

Because the median age of lung cancer diagnosis is 70 years, increasing age is also considered a risk factor for lung cancer.2,7

Although lung cancer has a relatively poor prognosis—with an average 5-year survival rate of 20.5%—early-stage lung cancer is more amenable to treatment and has a better prognosis (as is true with many cancers).1

LDCT has a high sensitivity, as well as a reasonable specificity, for lung cancer detection. There is demonstrated benefit in screening patients who are at high risk for lung cancer.8-11 In 2013, the USPSTF recommended annual lung cancer screening (B recommendation) with LDCT in adults 55 to 80 years of age who have a 30-pack-year smoking history, and who currently smoke or quit within the past 15 years.1

STUDY SUMMARY

Broader eligibility for screening supports mortality benefit

This is an update to the 2013 clinical practice guideline on lung cancer screening. The USPSTF used 2 methods to provide the best possible evidence for the recommendations. The first method was a systematic review of the accuracy of screening for lung cancer with LDCT, evaluating both the benefits and harms of lung cancer screening. The systematic review examined various subgroups, the number and/or frequency of LDCT scans, and various approaches to reducing false-positive results. In addition to the systematic review, they used collaborative modeling studies to determine the optimal age for beginning and ending screening, the optimal screening interval, and the relative benefits and harms of various screening strategies. These modeling studies complemented the evidence review.

The review included 7 randomized controlled trials (RCTs), plus the modeling studies. Only the National Lung Screening Trial (NLST; N = 53,454) and the Nederlands-Leuvens Longkanker Screenings Onderzoek (NELSON) trial (N = 15,792) had adequate power to detect a mortality benefit from screening (NLST: relative risk reduction = 16%; 95% CI, 5%-25%; NELSON: incidence rate ratio = 0.75; 95% CI, 0.61-0.90) compared with no screening.

Data on screening intervals from the NLST and NELSON trials, as well as from the modeling studies, showed the greatest benefit with annual screening (statistics not shared). Evidence also showed that screening those with lighter smoking histories (< 30 pack-years) and at an earlier age (age 50) provided increased mortality benefit. No evidence was found for a benefit of screening past 80 years of age. The modeling studies concluded that the 2013 USPSTF screening program, using a starting age of 55 and a 30-pack-year smoking history, would reduce mortality by 9.8%; changing to a starting age of 50, a 20-pack-year smoking history, and annual screening increased the mortality benefit to 13%.1,11
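The two sets of thresholds compared above can be expressed as a simple eligibility check. The following Python sketch is illustrative only: the class and function names are hypothetical, and it assumes that the updated criteria keep the 2013 upper age limit of 80 years and the 15-year quit window, which this summary does not restate.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ScreeningCriteria:
    min_age: int
    max_age: int
    min_pack_years: float
    max_years_since_quit: int

# 2013 thresholds as stated in the text.
CRITERIA_2013 = ScreeningCriteria(min_age=55, max_age=80,
                                  min_pack_years=30, max_years_since_quit=15)

# Updated thresholds: starting age 50 and 20 pack-years come from the text.
# ASSUMPTION: upper age limit and quit window carried over from 2013.
CRITERIA_UPDATED = ScreeningCriteria(min_age=50, max_age=80,
                                     min_pack_years=20, max_years_since_quit=15)

def is_eligible(age: int, pack_years: float, years_since_quit: float,
                criteria: ScreeningCriteria) -> bool:
    """Check age and smoking history; use years_since_quit=0 for current smokers."""
    return (
        criteria.min_age <= age <= criteria.max_age
        and pack_years >= criteria.min_pack_years
        and years_since_quit <= criteria.max_years_since_quit
    )

# Patient from the illustrative case: age 50, 22 pack-years, quit 5 years ago.
case_2013 = is_eligible(50, 22, 5, CRITERIA_2013)
case_updated = is_eligible(50, 22, 5, CRITERIA_UPDATED)
print(f"2013 criteria: {case_2013}; updated criteria: {case_updated}")
```

Under these assumptions, the patient in the illustrative case does not meet the 2013 thresholds but does meet the updated ones. A check like this covers only age and smoking history; it is not a substitute for clinical judgment or shared decision-making.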

Comparison with computer-based risk prediction models from the Cancer Intervention and Surveillance Modeling Network (CISNET) revealed insufficient evidence at this time to show that prediction model–based screening offered any benefit beyond that of the age and smoking history risk factor model.

The incidence of false-positive results was > 25% in the NLST at baseline and at 1 year. Use of a classification system such as the Lung Imaging Reporting and Data System (Lung-RADS) could reduce that from 26.6% to 12.8%.2 Another potential harm from LDCT screening is radiation exposure. Evidence from several RCTs and cohort studies showed the exposure from 1 LDCT scan to be 0.65 to 2.36 mSv, whereas the annual background radiation in the United States is 2.4 mSv. The modeling studies estimated that there would be 1 death caused by LDCT for every 18.5 cancer deaths avoided.1,11
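To put these figures in proportion, the arithmetic can be worked through directly. The Python sketch below uses only the numbers quoted above; the variable names are illustrative, and the derived ratios are simple calculations rather than results reported by the USPSTF.

```python
# Figures are those quoted in the text; variable names are illustrative.
fp_rate_baseline = 26.6      # false-positive rate without Lung-RADS, in percent
fp_rate_lung_rads = 12.8     # false-positive rate with Lung-RADS, in percent

# Absolute reduction (percentage points) and relative reduction (fraction).
absolute_reduction = fp_rate_baseline - fp_rate_lung_rads
relative_reduction = absolute_reduction / fp_rate_baseline

# One LDCT scan as a share of the annual background exposure cited in the text.
ldct_dose_range_msv = (0.65, 2.36)
annual_background_msv = 2.4
dose_share = tuple(dose / annual_background_msv for dose in ldct_dose_range_msv)

print(f"Absolute reduction: {absolute_reduction:.1f} percentage points")
print(f"Relative reduction: {relative_reduction:.0%}")
print(f"One scan vs annual background: {dose_share[0]:.0%} to {dose_share[1]:.0%}")
```

In other words, the quoted Lung-RADS figures amount to roughly halving the false-positive rate, and a single scan delivers somewhere between about a quarter of and nearly all of the annual background dose cited here.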

WHAT’S NEW

Expanded age range, reduced pack-year history