
Primary Care Provider Preferences for Communication with Inpatient Teams: One Size Does Not Fit All

As the hospitalist’s role in medicine grows, the transition of care from inpatient to primary care providers (PCPs, including primary care physicians, nurse practitioners, or physician assistants) becomes increasingly important. Inadequate communication at this transition is associated with preventable adverse events leading to rehospitalization, disability, and death.1-4

Providing PCPs access to the inpatient electronic health record (EHR) may reduce the need for active communication. However, a recent survey of PCPs in the general internal medicine division of an academic hospital found a strong preference for additional communication with inpatient providers, despite a shared EHR.5

We examined communication preferences of general internal medicine PCPs at a different academic institution and extended our study to include community-based PCPs, both affiliated and unaffiliated with the institution.

METHODS

Between October 2015 and June 2016, we surveyed PCPs from 3 practice groups with institutional affiliation or proximity to The Johns Hopkins Hospital: all general internal medicine faculty with outpatient practices (“academic,” 2 practice sites, n = 35), all community-based PCPs affiliated with the health system (“community,” 36 practice sites, n = 220), and all PCPs from an unaffiliated managed care organization (“unaffiliated,” 5 practice sites ranging from 0.3 to 4 miles from The Johns Hopkins Hospital, n = 29).

All groups had work-sponsored e-mail services. At the time of the survey, both the academic and community groups used an EHR that allowed access to inpatient laboratory and radiology data and discharge summaries; the unaffiliated group used paper health records. The hospital faxes discharge summaries to all PCPs identified by patients.

The investigators and representatives from each practice group collaborated to develop 15 questions with mutually exclusive answers to evaluate PCP experiences with, and preferences for, communication with inpatient teams. The survey was constructed and administered on the Qualtrics online platform (Qualtrics, Provo, UT) and distributed via e-mail. The Johns Hopkins institutional review board reviewed the study and acknowledged it as a quality improvement activity.

The survey contained branching logic: only respondents who indicated a preference for communication received questions about the preferred mode of communication. Our analysis used the preferred mode of communication for initial contact from the inpatient team. χ² and Fisher’s exact tests were performed with JMP 12 software (SAS Institute Inc, Cary, NC).
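For readers unfamiliar with Fisher’s exact test, the sketch below shows the standard two-sided version for a 2×2 table (for example, two practice groups by "prefers telephone" vs "prefers another mode"). It is a minimal illustration using only the Python standard library, not the JMP procedure the study used, and the counts in the usage line are illustrative rather than taken from this survey.

```python
from fractions import Fraction
from math import comb


def table_probability(a: int, row1: int, col1: int, n: int) -> Fraction:
    """Hypergeometric probability of a 2x2 table whose top-left cell is `a`,
    given fixed margins (first-row total, first-column total, grand total)."""
    return Fraction(comb(col1, a) * comb(n - col1, row1 - a), comb(n, row1))


def fisher_exact_two_sided(table: list[list[int]]) -> Fraction:
    """Two-sided Fisher's exact test for a 2x2 table [[a, b], [c, d]].

    Sums the probabilities of every table with the same margins that is no
    more likely than the observed table (the usual two-sided definition).
    Exact fractions avoid floating-point ties in the comparison.
    """
    (a, b), (c, d) = table
    row1, col1, n = a + b, a + c, a + b + c + d
    observed = table_probability(a, row1, col1, n)
    lowest, highest = max(0, row1 + col1 - n), min(row1, col1)
    p_value = Fraction(0)
    for x in range(lowest, highest + 1):
        p = table_probability(x, row1, col1, n)
        if p <= observed:
            p_value += p
    return p_value


# Illustrative counts only (not survey data): rows are two groups,
# columns are "prefers telephone" vs "prefers another mode".
p = fisher_exact_two_sided([[1, 9], [11, 3]])
print(float(p))  # ≈ 0.00276
```

Exact tests like this are usually preferred over χ² when expected cell counts are small, which is the situation for the smaller academic and unaffiliated groups.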

RESULTS

Fourteen (40%) academic, 43 (20%) community, and 16 (55%) unaffiliated PCPs completed the survey, for a total of 73 responses from 284 distributed surveys (26%).
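The per-group and overall response rates follow directly from the counts reported in Methods and Results; a short sketch of the arithmetic:

```python
# (completed surveys, surveys distributed), as reported in the text
groups = {
    "academic": (14, 35),
    "community": (43, 220),
    "unaffiliated": (16, 29),
}


def response_rate(completed: int, distributed: int) -> float:
    """Percentage of distributed surveys that were completed."""
    return 100 * completed / distributed


for name, (done, sent) in groups.items():
    print(f"{name}: {response_rate(done, sent):.0f}%")

total_done = sum(done for done, _ in groups.values())
total_sent = sum(sent for _, sent in groups.values())
print(f"overall: {total_done}/{total_sent} = {response_rate(total_done, total_sent):.0f}%")
```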

Among the 73 responding PCPs, 31 (42%) reported receiving notification of admission during “every” or “almost every” hospitalization, with no significant variation across practice groups (P = 0.5).

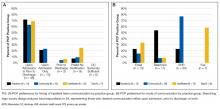

Across all groups, 64 PCPs (88%) preferred communication at 1 or more points during hospitalizations (panel A of Figure). “Both upon admission and prior to discharge” was the most frequently selected option, with no significant differences between practice groups (P = 0.2).

Preferred mode of communication, however, differed significantly between groups (panel B of Figure). The academic group had a greater preference for telephone (54%) than the community (8%; P < 0.001) and unaffiliated groups (8%; P < 0.001). The community group had a greater preference for EHR (77%) than the academic (23%; P = 0.002) and unaffiliated groups (0%; P < 0.001). The unaffiliated group had a greater preference for fax (58%) than the other 2 groups (both 0%; P < 0.001).

DISCUSSION

Our findings add to previous evidence of low rates of communication between inpatient providers and PCPs6 and a preference from PCPs for communication during hospitalizations despite shared EHRs.5 We extend previous work by demonstrating that PCP preferences for mode of communication vary by practice setting. Our findings lead us to hypothesize that identifying and incorporating PCP preferences may improve communication, though at the potential expense of standardization and efficiency.

There may be several reasons for the differing communication preferences observed. Most academic PCPs are located near the hospital or have admitting privileges there, and are not in clinic full time. Their preference for the telephone may thus result from interpersonal relationships born of proximity, greater availability for telephone calls, or reduced fluency with the EHR compared with full-time community clinicians.

The unaffiliated group’s preference for fax may reflect a desire for communication that integrates easily with paper charts and is least disruptive to workflow, or concerns about health information confidentiality in e-mails.

Our study’s generalizability is limited by a low response rate, although it is comparable to those of prior studies.7 The unaffiliated group was a convenience sample (accessed through acquaintance with the medical director); however, it had the highest response rate (55%).

In summary, we found low rates of communication between inpatient providers and PCPs, despite a strong preference from most PCPs for such communication during hospitalizations. PCPs’ preferred mode of communication differed based on practice setting. Addressing PCP communication preferences may be important to future care transition interventions.

Disclosure

The authors report no conflicts of interest.

1. Forster AJ, Murff HJ, Peterson JF, Gandhi TK, Bates DW. The incidence and severity of adverse events affecting patients after discharge from the hospital. Ann Intern Med. 2003;138(3):161-174.

2. Moore C, Wisnivesky J, Williams S, McGinn T. Medical errors related to discontinuity of care from an inpatient to an outpatient setting. J Gen Intern Med. 2003;18(8):646-651.

3. van Walraven C, Mamdani M, Fang J, Austin PC. Continuity of care and patient outcomes after hospital discharge. J Gen Intern Med. 2004;19(6):624-631.

4. Snow V, Beck D, Budnitz T, et al. Transitions of Care Consensus policy statement: American College of Physicians, Society of General Internal Medicine, Society of Hospital Medicine, American Geriatrics Society, American College of Emergency Physicians, and Society for Academic Emergency Medicine. J Hosp Med. 2009;4(6):364-370.

5. Sheu L, Fung K, Mourad M, Ranji S, Wu E. We need to talk: Primary care provider communication at discharge in the era of a shared electronic medical record. J Hosp Med. 2015;10(5):307-310.

6. Kripalani S, LeFevre F, Phillips CO, Williams MV, Basaviah P, Baker DW. Deficits in communication and information transfer between hospital-based and primary care physicians. JAMA. 2007;297(8):831-841.

7. Pantilat SZ, Lindenauer PK, Katz PP, Wachter RM. Primary care physician attitudes regarding communication with hospitalists. Am J Med. 2001;111(9B):15-20.

© 2017 Society of Hospital Medicine

sberry8@jhmi.edu

Impact of Displaying Inpatient Pharmaceutical Costs at the Time of Order Entry: Lessons From a Tertiary Care Center

In response to rising healthcare costs in the United States, broad efforts are underway to identify and reduce waste in the health system.1,2 A recent systematic review found that many physicians inaccurately estimate the cost of medications.3 Raising prescribers’ awareness of medication costs is one potential way to promote high-value care.

Some evidence suggests that cost transparency may help prescribers understand how their orders drive costs. In a previous study at the Johns Hopkins Hospital, fee data for diagnostic laboratory tests were displayed to providers.4 During a 6-month intervention period, test ordering decreased by 8.6% (95% confidence interval [CI], –8.99% to –8.19%) when costs were displayed, vs a 5.6% increase (95% CI, 4.90% to 6.39%) when costs were not displayed (P < 0.001). In contrast, a similar study of the impact of cost transparency on inpatient imaging utilization found no significant effect of cost display.5 These findings suggest that cost transparency may work in some areas of care but not in others. A systematic review of price-display interventions for imaging, laboratory studies, and medications identified 10 studies that demonstrated a statistically significant decrease in expenditures without an effect on patient safety.6

Informing prescribers of institution-specific medication costs within and between drug classes may enable the selection of less expensive, therapeutically equivalent drugs. Prior studies investigating the effect of medication cost display were conducted in a variety of patient care settings, including ambulatory clinics,7 urgent care centers,8 and operating rooms,9,10 with some yielding positive results in terms of ordering and cost11,12 and others having no impact.13,14 Currently, there is little evidence specifically addressing the effect of cost display for medications in the inpatient setting.

As part of an institutional initiative to control pharmaceutical expenditures, informational messaging for several high-cost drugs was initiated at our tertiary care hospital in April 2015. The goal of our study was to assess the effect of these medication cost messages on ordering practices. We hypothesized that the display of inpatient pharmaceutical costs at the time of order entry would result in a reduction in ordering.

METHODS

Setting, Intervention, and Participants

As part of an effort to educate prescribers about the high cost of medications, the Johns Hopkins Hospital Pharmacy and Therapeutics Committee selected 9 intravenous (IV) medications as targets for drug cost messaging. The committee intended a rapid, low-cost, proof-of-concept quality-improvement project, not prospective research. Pharmacy representatives and clinicians from relevant clinical areas participated in preimplementation discussions to identify medications that were subjectively felt to be overused at our institution and whose use was potentially modifiable through provider education. Selection considered a variety of factors; targets included medications ordered infrequently but with a significant cost per dose (eg, eculizumab and ribavirin), frequently ordered medications with less expensive substitutes (eg, linezolid and voriconazole), and high-cost medications without direct therapeutic alternatives (eg, calcitonin). From April 10, 2015, to October 5, 2015, the computerized provider order entry (cPOE) system, Sunrise Clinical Manager (Allscripts Corporation, Chicago, IL), displayed the cost of the targeted medications. Seven of the medication alerts also included a reasonable therapeutic alternative and its cost. No restrictions were placed on ordering; prescribers could choose the high-cost medications at their discretion.

Although this initiative was not designed as a research project, we felt it was important to formally evaluate the impact of the drug cost messaging effort to inform future quality-improvement interventions. Each medication was compared with its preintervention baseline utilization dating back to January 1, 2013. For the 7 medications with alternatives offered, we also analyzed use of the suggested alternative during these time periods.

Data Sources and Measurement

We used data from the pharmacy order verification system and the cPOE database. Data were collected over 143 weeks, from January 1, 2013, to October 5, 2015, to allow for a baseline period (January 1, 2013, to April 9, 2015) and an intervention period (April 10, 2015, to October 5, 2015). Data elements extracted for each admission included drug characteristics (dosage form, route, cost, strength, name, and quantity), patient characteristics (race, gender, and age), clinical setting (facility location, inpatient or outpatient), and billing information (provider name, doses dispensed from pharmacy, order number, revenue or procedure code, record number, date of service, and unique billing number). From these elements, we generated 8 variables for our analyses: week, month, period identifier, drug name, dosage form, weekly orders, weekly patient days, and number of weekly orders per 10,000 patient days. All drug costs, including those used in cost calculations, are reported as average wholesale price (AWP), referred to in this manuscript as medication cost. Although the actual acquisition cost and the price charged to the patient may vary with several factors, including manufacturer and payer, we chose AWP as a generalizable estimate of the hospital’s cost of acquiring the drug.

Variables

“Week” and “month” were defined as the week and month of our study, respectively. The “period identifier” was a binary variable that distinguished the preintervention and intervention periods. “Weekly orders” was defined as the total number of new orders placed per week for each drug included in our study; for example, if a patient received 2 discrete, new orders for a medication in a given week, both counted toward “weekly orders.” “Patient days,” defined as the total number of patients treated at our facility on a given day, was summed for each week of our study to yield “weekly patient days.” To derive the “number of weekly orders per 10,000 patient days,” we divided weekly orders by weekly patient days and multiplied the result by 10,000.
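The variable definitions above can be sketched as a small pipeline: assign each order date to a study week, flag the period, count orders per week, and normalize by weekly patient days. This is a minimal sketch, not the study’s code; it assumes study weeks are consecutive 7-day blocks starting January 1, 2013 (the text does not state how weeks were aligned), and the order dates and patient-day totals are hypothetical.

```python
from collections import Counter
from datetime import date

STUDY_START = date(2013, 1, 1)          # first day of the baseline period
INTERVENTION_START = date(2015, 4, 10)  # first day cost messages were displayed


def study_week(d: date) -> int:
    """Study week of a date; assumes week 1 is the 7 days starting STUDY_START."""
    return (d - STUDY_START).days // 7 + 1


def period_identifier(d: date) -> int:
    """Binary period identifier: 0 = baseline, 1 = intervention."""
    return int(d >= INTERVENTION_START)


def orders_per_10k_patient_days(weekly_orders: int, weekly_patient_days: int) -> float:
    """Normalize a week's new-order count by that week's patient days."""
    return weekly_orders / weekly_patient_days * 10_000


# Hypothetical order dates and weekly patient-day totals, for illustration only.
order_dates = [date(2013, 1, 2), date(2013, 1, 5), date(2013, 1, 9)]
weekly_orders = Counter(study_week(d) for d in order_dates)
weekly_patient_days = {1: 6_000, 2: 5_800}

for week in sorted(weekly_patient_days):
    rate = orders_per_10k_patient_days(weekly_orders[week], weekly_patient_days[week])
    print(week, weekly_orders[week], round(rate, 2))
```

Normalizing by patient days keeps a busy week (high census) from looking like an increase in ordering behavior.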

Statistical Analysis

We used segmented regression, a form of interrupted time series analysis with a quasi-experimental design, to determine the immediate and sustained effects of the drug cost messages on the rate of medication ordering.15-17 The model allowed the use of comparison groups (the alternative medications described above) to strengthen internal validity.

In time series data, outcomes may not be independent over time: autocorrelation of the error terms arises when outcomes at time points closer together are more similar than outcomes at time points further apart. Failure to account for autocorrelation may lead to underestimated standard errors. Autocorrelation, assessed with the Durbin-Watson statistic, was significant in our data, so we used Prais-Winsten estimation to adjust the error term ($\varepsilon_t$) in our models.
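The two steps named above can be sketched as follows: the Durbin-Watson statistic computed from regression residuals, a lag-1 estimate of the autocorrelation ρ, and the Prais-Winsten transformation of the data given ρ. This is a minimal illustration, not the Stata routine the study used (Stata provides Prais-Winsten estimation through its `prais` command); a full estimator would refit ordinary least squares on the transformed data and typically iterate until ρ converges.

```python
import math


def durbin_watson(residuals: list[float]) -> float:
    """Sum of squared successive residual differences over the residual sum of squares.

    Values near 2 suggest no first-order autocorrelation; values well below 2
    suggest positive autocorrelation, and values above 2 negative autocorrelation.
    """
    num = sum((e1 - e0) ** 2 for e0, e1 in zip(residuals, residuals[1:]))
    den = sum(e ** 2 for e in residuals)
    return num / den


def estimate_rho(residuals: list[float]) -> float:
    """Lag-1 autocorrelation of the residuals (one common estimator of rho)."""
    num = sum(e0 * e1 for e0, e1 in zip(residuals, residuals[1:]))
    den = sum(e ** 2 for e in residuals[:-1])
    return num / den


def prais_winsten_transform(y: list[float], X: list[list[float]], rho: float):
    """Quasi-difference y and every column of X (including the intercept column).

    The first observation is kept and rescaled by sqrt(1 - rho**2); keeping it is
    what distinguishes Prais-Winsten from Cochrane-Orcutt, which drops it.
    """
    scale = math.sqrt(1 - rho ** 2)
    y_star = [scale * y[0]] + [y[t] - rho * y[t - 1] for t in range(1, len(y))]
    X_star = [[scale * v for v in X[0]]] + [
        [x_t - rho * x_prev for x_t, x_prev in zip(X[t], X[t - 1])]
        for t in range(1, len(X))
    ]
    return y_star, X_star


# Hypothetical residuals that drift slowly, as in positively autocorrelated data.
residuals = [0.5, 0.4, 0.6, 0.3, -0.2, -0.4, -0.5, -0.1]
print(durbin_watson(residuals), estimate_rho(residuals))
```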

Two segmented linear regression models were used to estimate trends in ordering before and after the intervention. The presence or absence of a comparator drug determined which model was to be used. When only single medications were under study, as in the case of eculizumab and calcitonin, our regression model was as follows:

$$Y_t = \beta_0 + \beta_1\,\text{Time}_t + \beta_2\,\text{Intervention}_t + \beta_3\,\text{Post-Intervention Time}_t + \varepsilon_t$$

In our single-drug model, $Y_t$ denoted the number of orders per 10,000 patient days at week $t$. $\text{Time}_t$ was a continuous variable that indicated the number of weeks before or after the start of the intervention (April 10, 2015) and ranged from –116 to 27 weeks. $\text{Intervention}_t$ was an indicator coded 0 before and 1 after the start of the intervention. $\text{Post-Intervention Time}_t$ was a continuous variable that denoted the number of weeks since the start of the intervention and was coded as 0 for all time periods before the intervention. $\beta_0$ was the estimated baseline number of orders per 10,000 patient days (because $\text{Time}_t$ is centered on the intervention date, this is the preintervention trend evaluated at $\text{Time}_t = 0$); $\beta_1$ was the trend in orders per 10,000 patient days per week during the preintervention period; $\beta_2$ was the estimated change in the number of orders per 10,000 patient days immediately after the intervention; $\beta_3$ was the difference between the preintervention and postintervention slopes; and $\varepsilon_t$ was the error term, which represents autocorrelation and random variability in the data.
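To make the coefficient interpretation concrete, the sketch below builds one design-matrix row of the single-drug model and compares the fitted value with the counterfactual (the preintervention trend extended forward). It assumes that week 0 is the first intervention week and counts as 0 weeks since the intervention began, which is one common convention; the coefficient values are hypothetical, not estimates from this study.

```python
def single_drug_row(time_t: int) -> list[float]:
    """Design-matrix row [1, Time_t, Intervention_t, Post-Intervention Time_t].

    Assumed coding: time_t is weeks relative to the intervention, 0 is the first
    intervention week, Intervention_t is 1 from that week on, and
    Post-Intervention Time_t is 0 before the intervention and time_t after.
    """
    intervention = 1 if time_t >= 0 else 0
    post_time = time_t if time_t >= 0 else 0
    return [1.0, float(time_t), float(intervention), float(post_time)]


def predict(beta: list[float], row: list[float]) -> float:
    """Fitted orders per 10,000 patient days: sum of beta_k * x_k."""
    return sum(b * x for b, x in zip(beta, row))


def counterfactual(beta: list[float], time_t: int) -> float:
    """Preintervention trend extended past the intervention: beta_0 + beta_1 * Time_t."""
    return beta[0] + beta[1] * time_t


# Hypothetical coefficients: level at Time_t = 0, preintervention slope,
# immediate level change (beta_2), and change in slope (beta_3).
beta = [20.0, 0.05, -3.0, -0.2]
for week in (0, 4):
    effect = predict(beta, single_drug_row(week)) - counterfactual(beta, week)
    print(week, round(effect, 2))
```

At week 0 the gap equals $\beta_2$; at week $k$ after the intervention it equals $\beta_2 + \beta_3 k$, which is why $\beta_2$ captures the immediate effect and $\beta_3$ the sustained one.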

As mentioned previously, alternative dosage forms of 7 medications included in our study were used as comparison groups. In these instances (when multiple drugs were included in our analyses), the following regression model was applied:

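$$
\begin{aligned}
Y_t = {} & \beta_0 + \beta_1\,\text{Time}_t + \beta_2\,\text{Intervention}_t + \beta_3\,\text{Post-Intervention Time}_t + \beta_4\,\text{Cohort} \\
& + \beta_5\,(\text{Cohort})(\text{Time}_t) + \beta_6\,(\text{Cohort})(\text{Intervention}_t) + \beta_7\,(\text{Cohort})(\text{Post-Intervention Time}_t) + \varepsilon_t
\end{aligned}
$$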

Here, 4 coefficients ($\beta_4$ through $\beta_7$) were added to describe an additional cohort of orders. Cohort, a binary indicator variable, was coded 1 for the treatment group and 0 for the comparison group, so $\beta_0$ through $\beta_3$ described the comparison group and $\beta_4$ through $\beta_7$ described differences between the treatment and comparison groups. $\beta_4$ was the difference in the number of baseline orders per 10,000 patient days between the treatment and comparison groups; $\beta_5$ was the difference between the preintervention ordering trends of the 2 groups; $\beta_6$ was the difference in the immediate change in the number of orders per 10,000 patient days following the intervention; and $\beta_7$ was the difference between the 2 groups in the change in slope from the preintervention to the postintervention period.
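The comparison-group model can be sketched the same way: each row repeats the single-drug terms and then multiplies them by Cohort, so the treatment group’s own level and slope changes are sums of comparison-group and difference coefficients. This sketch assumes Cohort is coded 1 for the treatment group and 0 for the comparison group, and that week 0 is the first intervention week; the coefficient values in the usage line are hypothetical.

```python
def two_group_row(time_t: int, cohort: int) -> list[float]:
    """Row [1, Time, Intervention, PostTime, Cohort, Cohort*Time,
    Cohort*Intervention, Cohort*PostTime] for the comparison-group model.

    Assumed coding: cohort = 1 for the treatment group, 0 for the comparison group;
    time_t is weeks relative to the intervention, with 0 the first intervention week.
    """
    intervention = 1 if time_t >= 0 else 0
    post_time = max(time_t, 0)
    base = [1.0, float(time_t), float(intervention), float(post_time)]
    # Multiplying the base terms by cohort yields Cohort and its 3 interactions.
    return base + [cohort * x for x in base]


def implied_changes(beta: list[float]) -> dict[str, float]:
    """Level and slope changes implied for each group by beta_0 ... beta_7."""
    return {
        "comparison_level_change": beta[2],
        "comparison_slope_change": beta[3],
        "treatment_level_change": beta[2] + beta[6],
        "treatment_slope_change": beta[3] + beta[7],
    }


# Hypothetical coefficients beta_0 ... beta_7, for illustration only.
print(implied_changes([10, 0.1, -1, 0, 2, 0, -4, -0.5]))
```

Under this coding, a significant $\beta_6$ or $\beta_7$ indicates that the treatment group changed differently from its comparison group after the intervention.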

The number of orders per week was recorded for each medication, which allowed a large number of data points to be included in our analyses and yielded more accurate and stable estimates in our regression models. A total of 143 data points were collected for each study group: 116 before and 27 after the intervention.

All analyses were conducted using Stata version 13.1 (StataCorp LP, College Station, TX).

RESULTS

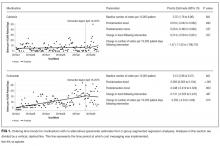

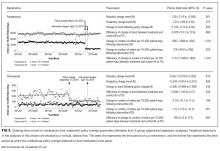

Initial results for the 9 IV medications were examined (Table). Following the implementation of cost messaging, no significant changes in order frequency or trend were observed for IV formulations of eculizumab, calcitonin, levetiracetam, linezolid, mycophenolate, ribavirin, voriconazole, and levothyroxine (Figures 1 and 2). However, orders for oral ribavirin, the comparison group for the IV form, decreased significantly (–16.3 orders per 10,000 patient days; P = .004; 95% CI, –27.2 to –5.31) (Figure 2).

DISCUSSION

Our results suggest that the passive strategy of displaying cost alone was not effective in altering prescriber ordering patterns for the selected medications. This may reflect a lack of awareness of the direct financial impact on patients or of the importance of cost in medical decision-making, or a perception that alternatives were lacking or that the recommended alternatives were unsuitable. These results may prove valuable to hospital and pharmacy leadership as they develop strategies to curb medication expense.

Changes observed in IV pantoprazole ordering are instructive. Because of a national shortage, IV pantoprazole was restricted according to defined criteria for IV use and required pharmacy approval before dispensing. This restriction was instituted independently of our study and led to a 73% decrease from usage rates before the policy was implemented (Figure 3). The restriction did not apply to oral pantoprazole, and no significant change in ordering of the oral formulation was noted during the evaluated period (Figure 3).

The dramatic effect of policy changes, as observed with pantoprazole and voriconazole, suggests that a more active strategy may have a greater impact on inpatient medication ordering. It also highlights several potential sources of confounding that may introduce bias into cost-transparency studies.

This study has multiple limitations. First, as with all observational designs, causal inferences cannot be drawn with certainty from our results. Although we compared medications with their preintervention baselines, the data could have been affected by longitudinal or seasonal trends in medication ordering, driven, for example, by seasonal variability in disease prevalence, changes in resistance patterns, and the annual turnover of house staff in an academic medical center. Although some of the medications in this analysis appear to show seasonal prescribing patterns, we believe the linear regressions adequately capture the overall trends in prescribing. Nonstationarity, or trends in the mean and variance of the outcome that are unrelated to the intervention, may bias the interpretation of our findings. However, we believe the parameters included in our models, namely the immediate change in the intercept following the intervention and the change in the trend of the prescribing rate from the pre- to the postintervention period, provide substantial protection against misinterpretation. Our models are limited to the extent that these parameters do not account for nonstationarity. Additionally, we did not collect data on dosing frequency or duration of treatment, which depend on factors that are not readily quantified, such as indication, clinical rationale, or patient response. Thus, we were not able to evaluate the impact of the intervention on these factors.

Although intended to enhance internal validity, comparison groups were also subject to external influence. For example, we observed a significant, short-lived rise in ordering of oral ribavirin (a comparison medication) during the preintervention baseline period that appeared to be independent of our intervention and may reflect the unaccounted-for longitudinal variability described above.

Finally, the clinical indication and setting may be important. Previous studies of price display at the same hospital showed a reduction in laboratory ordering but no change in imaging.18,19 One might speculate that providers view ordering fewer laboratory tests as eliminating waste rather than as choosing a less expensive option to accomplish the same diagnostic task. Therapeutics may be more similar to radiology tests, because patients presumably need the treatment and prescribers often cannot simply forgo the order without a concerted effort to reevaluate the treatment plan. Moreover, in a tertiary care teaching center such as ours, most orders are entered by a junior clinician, often at the behest of a more senior colleague. Results may differ in an environment in which the ordering prescriber has more autonomy, or for orders driven by a junior practitioner rather than an attending (such as daily laboratories). Institutions that directly incentivize prescribers to practice cost-conscious care may also see different results from similar interventions.

We conclude that, in the case of medication cost messaging, a strategy of displaying cost information alone was insufficient to affect prescriber ordering behavior. Coupling cost transparency with educational interventions and active stewardship to impact clinical practice is worthy of further study.

Disclosures: The authors state that there were no external sponsors for this work. The Johns Hopkins Hospital and University “funded” this work by paying the salaries of the authors. The author team maintained independence and made all decisions regarding the study design, data collection, data analysis, interpretation of results, writing of the research report, and decision to submit it for publication. Dr. Shermock had full access to all the study data and takes responsibility for the integrity of the data and accuracy of the data analysis.

1. Berwick DM, Hackbarth AD. Eliminating waste in US health care. JAMA. 2012;307(14):1513-1516.

2. PricewaterhouseCoopers’ Health Research Institute. The Price of Excess: Identifying Waste in Healthcare Spending. http://www.pwc.com/us/en/healthcare/publications/the-price-of-excess.html. Accessed June 17, 2015.

3. Allan GM, Lexchin J, Wiebe N. Physician awareness of drug cost: a systematic review. PLoS Med. 2007;4(9):e283.

4. Feldman LS, Shihab HM, Thiemann D, et al. Impact of providing fee data on laboratory test ordering: a controlled clinical trial. JAMA Intern Med. 2013;173(10):903-908.

5. Durand DJ, Feldman LS, Lewin JS, Brotman DJ. Provider cost transparency alone has no impact on inpatient imaging utilization. J Am Coll Radiol. 2013;10(2):108-113.

6. Silvestri MT, Bongiovanni TR, Glover JG, Gross CP. Impact of price display on provider ordering: A systematic review. J Hosp Med. 2016;11(1):65-76.

7. Ornstein SM, MacFarlane LL, Jenkins RG, Pan Q, Wager KA. Medication cost information in a computer-based patient record system. Impact on prescribing in a family medicine clinical practice. Arch Fam Med. 1999;8(2):118-121.

8. Guterman JJ, Chernof BA, Mares B, Gross-Schulman SG, Gan PG, Thomas D. Modifying provider behavior: A low-tech approach to pharmaceutical ordering. J Gen Intern Med. 2002;17(10):792-796.

9. McNitt JD, Bode ET, Nelson RE. Long-term pharmaceutical cost reduction using a data management system. Anesth Analg. 1998;87(4):837-842.

10. Horrow JC, Rosenberg H. Price stickers do not alter drug usage. Can J Anaesth. 1994;41(11):1047-1052.

11. Guterman JJ, Chernof BA, Mares B, Gross-Schulman SG, Gan PG, Thomas D. Modifying provider behavior: A low-tech approach to pharmaceutical ordering. J Gen Intern Med. 2002;17(10):792-796.

12. McNitt JD, Bode ET, Nelson RE. Long-term pharmaceutical cost reduction using a data management system. Anesth Analg. 1998;87(4):837-842.

13. Ornstein SM, MacFarlane LL, Jenkins RG, Pan Q, Wager KA. Medication cost information in a computer-based patient record system. Impact on prescribing in a family medicine clinical practice. Arch Fam Med. 1999;8(2):118-121.

14. Horrow JC, Rosenberg H. Price stickers do not alter drug usage. Can J Anaesth. 1994;41(11):1047-1052.

15. Jandoc R, Burden AM, Mamdani M, Levesque LE, Cadarette SM. Interrupted time series analysis in drug utilization research is increasing: Systematic review and recommendations. J Clin Epidemiol. 2015;68(8):950-956.

16. Linden A. Conducting interrupted time-series analysis for single- and multiple-group comparisons. Stata J. 2015;15(2):480-500.

17. Linden A, Adams JL. Applying a propensity score-based weighting model to interrupted time series data: improving causal inference in programme evaluation. J Eval Clin Pract. 2011;17(6):1231-1238.

18. Feldman LS, Shihab HM, Thiemann D, et al. Impact of providing fee data on laboratory test ordering: a controlled clinical trial. JAMA Intern Med. 2013;173(10):903-908.

19. Durand DJ, Feldman LS, Lewin JS, Brotman DJ. Provider cost transparency alone has no impact on inpatient imaging utilization. J Am Coll Radiol. 2013;10(2):108-113.

Because of rising healthcare costs in the United States, broad efforts are underway to identify and reduce waste in the health system.1,2 A recent systematic review showed that many physicians inaccurately estimate the cost of medications.3 Raising awareness of medication costs among prescribers is one potential way to promote high-value care.

Some evidence suggests that cost transparency may help prescribers understand how medication orders drive costs. In a previous study at the Johns Hopkins Hospital, fee data for diagnostic laboratory tests were displayed to providers.4 During a 6-month intervention period, test ordering decreased by 8.6% (95% confidence interval [CI], –8.99% to –8.19%) when costs were displayed vs increased by 5.6% (95% CI, 4.90% to 6.39%) when costs were not displayed (P < .001). Conversely, a similar study of the impact of cost transparency on inpatient imaging utilization did not demonstrate a significant influence of cost display.5 This suggests that cost transparency may work in some areas of care but not in others. A systematic review of price-display interventions for imaging, laboratory studies, and medications reported 10 studies that demonstrated a statistically significant decrease in expenditures without an effect on patient safety.6

Informing prescribers of institution-specific medication costs within and between drug classes may enable the selection of less expensive, therapeutically equivalent drugs. Prior studies investigating the effect of medication cost display were conducted in a variety of patient care settings, including ambulatory clinics,7 urgent care centers,8 and operating rooms,9,10 with some yielding positive results in terms of ordering and cost11,12 and others having no impact.13,14 Currently, there is little evidence specifically addressing the effect of cost display for medications in the inpatient setting.

As part of an institutional initiative to control pharmaceutical expenditures, informational messaging for several high-cost drugs was initiated at our tertiary care hospital in April 2015. The goal of our study was to assess the effect of these medication cost messages on ordering practices. We hypothesized that the display of inpatient pharmaceutical costs at the time of order entry would result in a reduction in ordering.

METHODS

Setting, Intervention, and Participants

As part of an effort to educate prescribers about the high cost of medications, the Johns Hopkins Hospital Pharmacy and Therapeutics Committee selected 9 intravenous (IV) medications as targets for drug cost messaging. The committee intended a rapid, low-cost, proof-of-concept quality-improvement project, not prospective research. Representatives from the pharmacy and clinicians from relevant clinical areas participated in preimplementation discussions to help identify medications that were subjectively felt to be overused at our institution and potentially modifiable through provider education. Selection weighed a variety of factors, and targets included infrequently ordered medications with a high cost per dose (eg, eculizumab and ribavirin), frequently ordered medications with less expensive substitutes (eg, linezolid and voriconazole), and high-cost medications without direct therapeutic alternatives (eg, calcitonin). From April 10, 2015, to October 5, 2015, the computerized provider order entry (cPOE) system, Sunrise Clinical Manager (Allscripts Corporation, Chicago, IL), displayed the cost of the targeted medications. Seven of the medication alerts also included a reasonable therapeutic alternative and its cost. No restrictions were placed on ordering; prescribers could choose the high-cost medications at their discretion.

Although this initiative was not designed as a research project, we felt it was important to formally evaluate the impact of the drug cost messaging to inform future quality-improvement interventions. Each medication was compared with its preintervention baseline utilization dating back to January 1, 2013. For the 7 medications with alternatives offered, we also analyzed use of the suggested alternative during these time periods.

Data Sources and Measurement

Our study used data obtained from the pharmacy order verification system and the cPOE database. Data were collected over 143 weeks, from January 1, 2013, to October 5, 2015, to allow for a baseline period (January 1, 2013, to April 9, 2015) and an intervention period (April 10, 2015, to October 5, 2015). Data elements extracted for each admission included drug characteristics (dosage form, route, cost, strength, name, and quantity), patient characteristics (race, gender, and age), clinical setting (facility location, inpatient or outpatient), and billing information (provider name, doses dispensed from pharmacy, order number, revenue or procedure code, record number, date of service, and unique billing number). From these elements, we generated 8 analysis variables: week, month, period identifier, drug name, dosage form, weekly orders, weekly patient days, and number of weekly orders per 10,000 patient days. Average wholesale price (AWP), referred to in this manuscript as medication cost, was used for all reported drug costs and cost calculations. Although the actual acquisition cost and the price charged to the patient may vary with several factors, including manufacturer and payer, we chose AWP as a generalizable estimate of the hospital’s cost of acquiring the drug.

Variables

“Week” and “month” were defined as the week and month of the study, respectively. The “period identifier” was a binary variable distinguishing the periods before and after the intervention. “Weekly orders” was defined as the total number of new orders placed per week for each study drug. For example, if a patient received 2 discrete new orders for a medication in a given week, both counted toward “weekly orders.” “Patient days,” the number of patients treated at our facility on each day, was summed for each week of the study to yield “weekly patient days.” To derive the “number of weekly orders per 10,000 patient days,” we divided weekly orders by weekly patient days and multiplied the result by 10,000.
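The counting and normalization rules for these variables can be sketched in Python. This is a minimal illustration under stated assumptions: the order-log format (one `(week, drug)` tuple per discrete new order), the drug labels `drug_a` and `drug_b`, the daily census figures, and the function names are all hypothetical and do not come from the study's data or Stata workflow.

```python
def weekly_patient_days(daily_census: list[int]) -> int:
    """Sum the daily inpatient counts for one week into weekly patient days."""
    return sum(daily_census)


def count_new_orders(order_log: list[tuple[int, str]], drug: str) -> dict[int, int]:
    """Count discrete new orders per week for one drug.

    Each (week, drug) tuple is one new order, so a patient with 2 new orders
    for the same drug in one week adds 2 to that week's count.
    """
    counts: dict[int, int] = {}
    for week, name in order_log:
        if name == drug:
            counts[week] = counts.get(week, 0) + 1
    return counts


def orders_per_10k_patient_days(weekly_orders: int, patient_days: int) -> float:
    """Weekly orders divided by weekly patient days, multiplied by 10,000."""
    if patient_days <= 0:
        raise ValueError("patient_days must be positive")
    return weekly_orders / patient_days * 10_000


if __name__ == "__main__":
    # Hypothetical order log and daily census; not study data.
    log = [(1, "drug_a"), (1, "drug_a"), (1, "drug_b"), (2, "drug_a")]
    census = {
        1: [930, 925, 940, 915, 920, 935, 935],
        2: [900, 890, 895, 880, 900, 890, 895],
    }
    for week, n in sorted(count_new_orders(log, "drug_a").items()):
        days = weekly_patient_days(census[week])
        print(week, n, days, round(orders_per_10k_patient_days(n, days), 2))
```

Counting each discrete order rather than each patient mirrors the rule stated above, and normalizing by patient days keeps weeks with different census levels comparable.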

Statistical Analysis

Segmented regression, a form of interrupted time series analysis, is a quasi-experimental design that was used to determine the immediate and sustained effects of the drug cost messages on the rate of medication ordering.15-17 The model enabled the use of comparison groups (alternative medications, as described above) to enhance internal validity.

In time series data, outcomes may not be independent over time. Autocorrelation of the error terms arises when outcomes at time points closer together are more similar than outcomes at time points further apart, and failing to account for it may lead to underestimated standard errors. The Durbin-Watson statistic indicated significant autocorrelation in our data. To adjust for this, we used Prais-Winsten estimation, which models the error term ($\varepsilon_t$) in our models as a first-order autoregressive process.
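The autocorrelation check and correction described above can be sketched in plain Python. This is an illustrative sketch, not the study's Stata code: it computes the Durbin-Watson statistic, d = Σ(e_t − e_(t−1))² / Σe_t², on ordinary least squares (OLS) residuals (values near 2 suggest no first-order autocorrelation; values toward 0 suggest positive autocorrelation), then applies an iterated Prais-Winsten correction for AR(1) errors. The helper names, the iteration limit, the lag-1 estimator of ρ, and the simulated series are assumptions chosen for illustration.

```python
import math
import random


def ols(X, y):
    """OLS coefficients from the normal equations (X'X)b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting."""
    k = len(X[0])
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        piv = max(range(col, k), key=lambda p: abs(A[p][col]))
        A[col], A[piv] = A[piv], A[col]
        for row in range(k):
            if row != col:
                f = A[row][col] / A[col][col]
                A[row] = [a - f * c for a, c in zip(A[row], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


def residuals(X, y, b):
    """Observed minus fitted values."""
    return [yi - sum(bj * xj for bj, xj in zip(b, row)) for row, yi in zip(X, y)]


def durbin_watson(e):
    """Durbin-Watson d: about 2 with no lag-1 autocorrelation, toward 0 with positive."""
    return sum((e[t] - e[t - 1]) ** 2 for t in range(1, len(e))) / sum(v * v for v in e)


def lag1_rho(e):
    """AR(1) coefficient from regressing e_t on e_(t-1) without an intercept."""
    den = sum(e[t - 1] ** 2 for t in range(1, len(e)))
    return 0.0 if den == 0 else sum(e[t] * e[t - 1] for t in range(1, len(e))) / den


def prais_winsten(X, y, max_iter=50, tol=1e-8):
    """Iterated Prais-Winsten FGLS for AR(1) errors; returns (coefficients, rho)."""
    b, rho = ols(X, y), 0.0
    for _ in range(max_iter):
        new_rho = lag1_rho(residuals(X, y, b))  # residuals on the original scale
        if abs(new_rho) >= 1:
            raise ValueError("estimated rho must lie strictly between -1 and 1")
        s = math.sqrt(1 - new_rho ** 2)
        # Quasi-difference rows 2..n; keep row 1, rescaled by sqrt(1 - rho^2).
        Xs = [[s * v for v in X[0]]] + [
            [X[t][j] - new_rho * X[t - 1][j] for j in range(len(X[0]))]
            for t in range(1, len(X))]
        ys = [s * y[0]] + [y[t] - new_rho * y[t - 1] for t in range(1, len(y))]
        b = ols(Xs, ys)
        done = abs(new_rho - rho) < tol
        rho = new_rho
        if done:
            break
    return b, rho


if __name__ == "__main__":
    # Simulated weekly series with a linear trend and AR(1) noise (rho = 0.6).
    rng = random.Random(42)
    u, X, y = 0.0, [], []
    for t in range(200):
        u = 0.6 * u + rng.gauss(0, 0.1)
        X.append([1.0, float(t)])
        y.append(5.0 + 0.5 * t + u)
    e = residuals(X, y, ols(X, y))
    print("Durbin-Watson on OLS residuals:", round(durbin_watson(e), 2))
    b, rho = prais_winsten(X, y)
    print("Prais-Winsten slope:", round(b[1], 3), "rho:", round(rho, 2))
```

Unlike Cochrane-Orcutt, which drops the first observation, Prais-Winsten keeps it by scaling it with √(1 − ρ²). In practice, Stata's `prais` command provides this estimator directly.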

Two segmented linear regression models were used to estimate trends in ordering before and after the intervention; the presence or absence of a comparator drug determined which model was used. For medications analyzed without a comparator, such as eculizumab and calcitonin, the regression model was as follows:

$$Y_t = \beta_0 + \beta_1\,\text{Time}_t + \beta_2\,\text{Intervention}_t + \beta_3\,\text{Post-Intervention Time}_t + \varepsilon_t$$

In the single-drug model, $Y_t$ denoted the number of orders per 10,000 patient days in week $t$. $\text{Time}_t$ was a continuous variable indicating the number of weeks before or after the start of the intervention (April 10, 2015) and ranged from –116 to 27 weeks. $\text{Intervention}_t$ was a binary indicator of whether week $t$ fell before (0) or after (1) the start of the intervention. $\text{Post-Intervention Time}_t$ was a continuous variable denoting the number of weeks since the start of the intervention and was coded as 0 for all weeks before the intervention. β0 was the estimated baseline number of orders per 10,000 patient days at the beginning of the study; β1 was the weekly trend in orders per 10,000 patient days during the preintervention period; β2 estimated the change in the number of orders per 10,000 patient days immediately after the intervention; β3 was the difference between the preintervention and postintervention slopes; and $\varepsilon_t$ was the error term, representing autocorrelation and random variability in the data.
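To make these coefficient definitions concrete, here is a minimal Python sketch of one design-matrix row of the single-drug model and the fitted values it implies. It assumes Time_t = 0 in the first intervention week, Intervention_t = 1 from that week onward, and Post-Intervention Time_t equal to Time_t after the intervention (0 before). The function names and coefficient values are hypothetical, for illustration only, and are not study estimates.

```python
def single_group_row(time_t: int) -> list[float]:
    """Design-matrix row [1, Time_t, Intervention_t, Post-Intervention Time_t].

    Assumes Time_t is centered so that the first intervention week is 0.
    """
    intervention = 1.0 if time_t >= 0 else 0.0  # 0 before, 1 from the intervention onward
    post_time = float(max(time_t, 0))           # weeks since the intervention began; 0 before
    return [1.0, float(time_t), intervention, post_time]


def fitted(betas: list[float], time_t: int) -> float:
    """Model-implied orders per 10,000 patient days in week time_t (error term omitted)."""
    return sum(b * x for b, x in zip(betas, single_group_row(time_t)))


def counterfactual(betas: list[float], time_t: int) -> float:
    """Preintervention trend projected forward: beta0 + beta1 * Time_t."""
    return betas[0] + betas[1] * time_t


# Hypothetical beta0..beta3 for illustration only; not study estimates.
betas = [20.0, 0.05, -3.0, -0.10]

print("immediate change, week 0:", fitted(betas, 0) - counterfactual(betas, 0))  # equals beta2
print("postintervention slope:", round(fitted(betas, 11) - fitted(betas, 10), 4))  # beta1 + beta3
print("preintervention slope:", round(fitted(betas, -1) - fitted(betas, -2), 4))  # beta1
```

Because shifting Time by a constant is absorbed by the intercept, the choice of time origin changes only the interpretation of β0, not of β1-β3.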

As mentioned previously, alternative dosage forms of 7 medications included in our study were utilized as comparison groups. In these instances (when multiple drugs were included in our analyses), the following regression model was applied:

Y t = ( β 0 ) + ( β 1 )(Time t ) + ( β 2 )(Intervention t ) + ( β 3 )(Post-Intervention Time t ) + ( β 4 )(Cohort) + ( β 5 )(Cohort)(Time t ) + ( β 6 )(Cohort)(Intervention t ) + ( β 7 )(Cohort)(Post-Intervention Time t ) + ( ε t )

Here, 3 coefficients were added (β4-β7) to describe an additional cohort of orders. Cohort, a binary indicator variable, held a value of either 0 or 1 when the model was used to describe the treatment or comparison group, respectively. The coefficients β4-β7 described the treatment group, and β0-β3 described the comparison group. β4 was the difference in the number of baseline orders per 10,000 patient days between treatment and comparison groups; Β5 represented the difference between the estimated ordering trends of treatment and comparison groups; and Β6 indicated the difference in immediate changes in the number of orders per 10,000 patient days in the 2 groups following the intervention.

The number of orders per week was recorded for each medicine, which enabled a large number of data points to be included in our analyses. This allowed for more accurate and stable estimates to be made in our regression model. A total of 143 data points were collected for each study group, 116 before and 27 following each intervention.

All analyses were conducted by using STATA version 13.1 (StataCorp LP, College Station, TX).

RESULTS

Initial results pertaining to 9 IV medications were examined (Table). Following the implementation of cost messaging, no significant changes were observed in order frequency or trend for IV formulations of eculizumab, calcitonin, levetiracetam, linezolid, mycophenolate, ribavirin, voriconazole, and levothyroxine (Figures 1 and 2). However, a significant decrease in the number of oral ribavirin orders (Figure 2), the control group for the IV form, was observed (–16.3 orders per 10,000 patient days; P = .004; 95% CI, –27.2 to –5.31).

DISCUSSION

Our results suggest that the passive strategy of displaying cost alone was not effective in altering prescriber ordering patterns for the selected medications. This may be due to a lack of awareness regarding direct financial impact on the patient, importance of costs in medical decision-making, or a perceived lack of alternatives or suitability of recommended alternatives. These results may prove valuable to hospital and pharmacy leadership as they develop strategies to curb medication expense.

Changes observed in IV pantoprazole ordering are instructive. Due to a national shortage, the IV form of this medication underwent a restriction, which required approval by the pharmacy prior to dispensing. This restriction was instituted independently of our study and led to a 73% decrease from usage rates prior to policy implementation (Figure 3). Ordering was restricted according to defined criteria for IV use. The restriction did not apply to oral pantoprazole, and no significant change in ordering of the oral formulation was noted during the evaluated period (Figure 3).

The dramatic effect of policy changes, as observed with pantoprazole and voriconazole, suggests that a more active strategy may have a greater impact on prescriber behavior when it comes to medication ordering in the inpatient setting. It also highlights several potential sources of confounding that may introduce bias to cost-transparency studies.

This study has multiple limitations. First, as with all observational study designs, causation cannot be drawn with certainty from our results. While we were able to compare medications to their preintervention baselines, the data could have been impacted by longitudinal or seasonal trends in medication ordering, which may have been impacted by seasonal variability in disease prevalence, changes in resistance patterns, and annual cycling of house staff in an academic medical center. While there appear to be potential seasonal patterns regarding prescribing patterns for some of the medications included in this analysis, we also believe the linear regressions capture the overall trends in prescribing adequately. Nonstationarity, or trends in the mean and variance of the outcome that are not related to the intervention, may introduce bias in the interpretation of our findings. However, we believe the parameters included in our models, namely the immediate change in the intercept following the intervention and the change in the trend of the rate of prescribing over time from pre- to postintervention, provide substantial protections from faulty interpretation. Our models are limited to the extent that these parameters do not account for nonstationarity. Additionally, we did not collect data on dosing frequency or duration of treatment, which would have been dependent on factors that are not readily quantified, such as indication, clinical rationale, or patient response. Thus, we were not able to evaluate the impact of the intervention on these factors.

Although intended to enhance internal validity, comparison groups were also subject to external influence. For example, we observed a significant, short-lived rise in oral ribavirin (a control medication) ordering during the preintervention baseline period that appeared to be independent of our intervention and may speak to the unaccounted-for longitudinal variability detailed above.

Finally, the clinical indication and setting may be important. Previous studies performed at the same hospital with price displays showed a reduction in laboratory ordering but no change in imaging.18,19 One might speculate that ordering fewer laboratory tests is viewed by providers as eliminating waste rather than choosing a less expensive option to accomplish the same diagnostic task at hand. Therapeutics may be more similar to radiology tests, because patients presumably need the treatment and often do not have the option of simply not ordering without a concerted effort to reevaluate the treatment plan. Additionally, in a tertiary care teaching center such as ours, a junior clinician, oftentimes at the behest of a more senior colleague, enters most orders. In an environment in which the ordering prescriber has more autonomy or when the order is driven by a junior practitioner rather than an attending (such as daily laboratories), results may be different. Additionally, institutions that incentivize prescribers directly to practice cost-conscious care may experience different results from similar interventions.

We conclude that, in the case of medication cost messaging, a strategy of displaying cost information alone was insufficient to affect prescriber ordering behavior. Coupling cost transparency with educational interventions and active stewardship to impact clinical practice is worthy of further study.

Disclosures: The authors state that there were no external sponsors for this work. The Johns Hopkins Hospital and University “funded” this work by paying the salaries of the authors. The author team maintained independence and made all decisions regarding the study design, data collection, data analysis, interpretation of results, writing of the research report, and decision to submit it for publication. Dr. Shermock had full access to all the study data and takes responsibility for the integrity of the data and accuracy of the data analysis.

Secondary to rising healthcare costs in the United States, broad efforts are underway to identify and reduce waste in the health system.1,2 A recent systematic review exhibited that many physicians inaccurately estimate the cost of medications.3 Raising awareness of medication costs among prescribers is one potential way to promote high-value care.

Some evidence suggests that cost transparency may help prescribers understand how medication orders drive costs. In a previous study carried out at the Johns Hopkins Hospital, fee data were displayed to providers for diagnostic laboratory tests.4 An 8.6% decrease (95% confidence interval [CI], –8.99% to –8.19%) in test ordering was observed when costs were displayed vs a 5.6% increase (95% CI, 4.90% to 6.39%) in ordering when costs were not displayed during a 6-month intervention period (P < 0.001). Conversely, a similar study that investigated the impact of cost transparency on inpatient imaging utilization did not demonstrate a significant influence of cost display.5 This suggests that cost transparency may work in some areas of care but not in others. A systematic review that investigated price-display interventions for imaging, laboratory studies, and medications reported 10 studies that demonstrated a statistically significant decrease in expenditures without an effect on patient safety.6

Informing prescribers of institution-specific medication costs within and between drug classes may enable the selection of less expensive, therapeutically equivalent drugs. Prior studies investigating the effect of medication cost display were conducted in a variety of patient care settings, including ambulatory clinics,7 urgent care centers,8 and operating rooms,9,10 with some yielding positive results in terms of ordering and cost11,12 and others having no impact.13,14 Currently, there is little evidence specifically addressing the effect of cost display for medications in the inpatient setting.

As part of an institutional initiative to control pharmaceutical expenditures, informational messaging for several high-cost drugs was initiated at our tertiary care hospital in April 2015. The goal of our study was to assess the effect of these medication cost messages on ordering practices. We hypothesized that the display of inpatient pharmaceutical costs at the time of order entry would result in a reduction in ordering.

METHODS

Setting, Intervention, and Participants

As part of an effort to educate prescribers about the high cost of medications, 9 intravenous (IV) medications were selected by the Johns Hopkins Hospital Pharmacy and Therapeutics Committee as targets for drug cost messaging. The intention of the committee was to implement a rapid, low-cost, proof-of-concept, quality-improvement project that was not designed as prospective research. Representatives from the pharmacy and clinicians from relevant clinical areas participated in preimplementation discussions to help identify medications that were subjectively felt to be overused at our institution and potentially modifiable through provider education. The criteria for selecting drug targets included a variety of factors, such as medications infrequently ordered but representing a significant cost per dose (eg, eculizumab and ribavirin), frequently ordered medications with less expensive substitutes (eg, linezolid and voriconazole), and high-cost medications without direct therapeutic alternatives (eg, calcitonin). From April 10, 2015, to October 5, 2015, the computerized Provider Order Entry System (cPOE), Sunrise Clinical Manager (Allscripts Corporation, Chicago, IL), displayed the cost for targeted medications. Seven of the medication alerts also included a reasonable therapeutic alternative and its cost. There were no restrictions placed on ordering; prescribers were able to choose the high-cost medications at their discretion.

Despite the fact that this initiative was not designed as a research project, we felt it was important to formally evaluate the impact of the drug cost messaging effort to inform future quality-improvement interventions. Each medication was compared to its preintervention baseline utilization dating back to January 1, 2013. For the 7 medications with alternatives offered, we also analyzed use of the suggested alternative during these time periods.

Data Sources and Measurement

Our study utilized data obtained from the pharmacy order verification system and the cPOE database. Data were collected over a period of 143 weeks from January 1, 2013, to October 5, 2015, to allow for a baseline period (January 1, 2013, to April 9, 2015) and an intervention period (April 10, 2015, to October 5, 2015). Data elements extracted included drug characteristics (dosage form, route, cost, strength, name, and quantity), patient characteristics (race, gender, and age), clinical setting (facility location, inpatient or outpatient), and billing information (provider name, doses dispensed from pharmacy, order number, revenue or procedure code, record number, date of service, and unique billing number) for each admission. Using these elements, we generated the following 8 variables to use in our analyses: week, month, period identifier, drug name, dosage form, weekly orders, weekly patient days, and number of weekly orders per 10,000 patient days. Average wholesale price (AWP), referred to as medication cost in this manuscript, was used to report all drug costs in all associated cost calculations. While the actual cost of acquisition and price charged to the patient may vary based on several factors, including manufacturer and payer, we chose to use AWP as a generalizable estimate of the cost of acquisition of the drug for the hospital.

Variables

“Week” and “month” were defined as the week and month of our study, respectively. The “period identifier” was a binary variable that identified the time period before and after the intervention. “Weekly orders” was defined as the total number of new orders placed per week for each specified drug included in our study. For example, if a patient received 2 discrete, new orders for a medication in a given week, 2 orders would be counted toward the “weekly orders” variable. “Patient days,” defined as the total number of patients treated at our facility, was summated for each week of our study to yield “weekly patient days.” To derive the “number of weekly orders per 10,000 patient days,” we divided weekly orders by weekly patient days and multiplied the resultant figure by 10,000.

Statistical Analysis

Segmented regression, a form of interrupted time series analysis, is a quasi-experimental design that was used to determine the immediate and sustained effects of the drug cost messages on the rate of medication ordering.15-17 The model enabled the use of comparison groups (alternative medications, as described above) to enhance internal validity.

In time series data, outcomes may not be independent over time. Autocorrelation of the error terms can arise when outcomes are more similar at time points closer together than outcomes at time points further apart. Failure to account for autocorrelation of the error terms may lead to underestimated standard errors. The presence of autocorrelation, assessed by calculating the Durbin-Watson statistic, was significant among our data. To adjust for this, we employed a Prais-Winsten estimation to adjust the error term (εt) calculated in our models.

Two segmented linear regression models were used to estimate ordering trends before and after the intervention; which model was used depended on whether a comparator drug was available. When a single medication was studied without a comparator, as with eculizumab and calcitonin, our regression model was as follows:

$$Y_t = \beta_0 + \beta_1\,\text{Time}_t + \beta_2\,\text{Intervention}_t + \beta_3\,\text{Post-Intervention Time}_t + \varepsilon_t$$

In our single-drug model, Y_t denoted the number of orders per 10,000 patient days in week t. Time_t was a continuous variable indicating the number of weeks before or after the start of the study intervention (April 10, 2015); it ranged from –116 to 27 weeks. Intervention_t was an indicator variable coded 0 for weeks before the intervention and 1 for weeks after it. Post-Intervention Time_t was a continuous variable denoting the number of weeks since the start of the intervention, coded as 0 for all weeks before the intervention. β0 was the estimated baseline number of orders per 10,000 patient days at the beginning of the study; β1 was the weekly trend in orders per 10,000 patient days during the preintervention period; β2 was the estimated change in the number of orders per 10,000 patient days immediately after the intervention; β3 was the difference between the preintervention and postintervention slopes; and ε_t was the error term, representing autocorrelation and random variability in the data.
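The coding of the single-drug model can be made concrete with a short sketch that builds one design-matrix row per study week and evaluates the fitted line for a set of made-up coefficients. As an assumption for illustration, Time counts weeks from the start of the study (0 to 142) and Post-Intervention Time is 0 in the first intervention week. Centering Time at the intervention, as the reported range of –116 to 27 suggests, would change only the meaning of β0 (the level at the intervention rather than at study start), not β1-β3.

```python
PRE_WEEKS = 116   # baseline weeks reported in the text
POST_WEEKS = 27   # intervention weeks reported in the text


def single_group_design(pre=PRE_WEEKS, post=POST_WEEKS):
    """One row [1, Time, Intervention, PostInterventionTime] per study week (assumed coding)."""
    rows = []
    for t in range(pre + post):
        intervention = 1 if t >= pre else 0         # 0 before the intervention, 1 from its first week on
        post_time = t - pre if intervention else 0  # weeks since the intervention began; 0 beforehand
        rows.append([1, t, intervention, post_time])
    return rows


def fitted(row, beta):
    """Y_t = b0 + b1*Time + b2*Intervention + b3*PostTime, with the error term omitted."""
    return sum(b * x for b, x in zip(beta, row))


# Made-up coefficients, chosen only to show what each term does.
beta = (20.0, 0.25, -4.0, -0.5)
rows = single_group_design()
counterfactual = beta[0] + beta[1] * PRE_WEEKS  # preintervention line extended to the first intervention week
immediate_change = fitted(rows[PRE_WEEKS], beta) - counterfactual                 # equals b2
post_slope = fitted(rows[PRE_WEEKS + 1], beta) - fitted(rows[PRE_WEEKS], beta)    # equals b1 + b3
print(immediate_change, post_slope)
```

Reading the output against the coefficients shows why β2 is interpreted as the immediate level change and β1 + β3 as the postintervention slope.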

As mentioned previously, alternative dosage forms of 7 of the medications in our study served as comparison groups. In these instances (when multiple drugs were included in an analysis), the following regression model was applied:

$$Y_t = \beta_0 + \beta_1\,\text{Time}_t + \beta_2\,\text{Intervention}_t + \beta_3\,\text{Post-Intervention Time}_t + \beta_4\,\text{Cohort} + \beta_5\,(\text{Cohort})(\text{Time}_t) + \beta_6\,(\text{Cohort})(\text{Intervention}_t) + \beta_7\,(\text{Cohort})(\text{Post-Intervention Time}_t) + \varepsilon_t$$

Here, 4 coefficients (β4-β7) were added to describe an additional cohort of orders. Cohort, a binary indicator variable, was coded 1 for the treatment group and 0 for the comparison group. Thus, β0-β3 described the comparison group, and β4-β7 described how the treatment group differed from it: β4 was the difference in the baseline number of orders per 10,000 patient days between the treatment and comparison groups; β5 was the difference between the preintervention ordering trends of the 2 groups; β6 was the difference between the 2 groups in the immediate change in orders per 10,000 patient days following the intervention; and β7 was the difference between the 2 groups in the change in slope from the preintervention to the postintervention period.
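For the comparison-group model, a short sketch can stack the comparison and treatment series and add the 4 Cohort interaction columns. It assumes Cohort = 1 for the study (treatment) drug and 0 for its comparison dosage form, the convention under which β0-β3 describe the comparison group; the time coding (weeks 0 to 142, Post-Intervention Time starting at 0) and the coefficient values are illustrative assumptions. Adding each base coefficient to its Cohort counterpart recovers the treatment group's own intercept, preintervention slope, immediate change, and slope change.

```python
def two_group_design(pre=116, post=27):
    """Stacked rows [1, T, I, P, Z, Z*T, Z*I, Z*P]; Z = Cohort (assumed 1 = treatment, 0 = comparison)."""
    rows = []
    for cohort in (0, 1):
        for t in range(pre + post):
            i = 1 if t >= pre else 0  # Intervention_t
            p = t - pre if i else 0   # Post-Intervention Time_t
            rows.append([1, t, i, p, cohort, cohort * t, cohort * i, cohort * p])
    return rows


def group_parameters(beta, cohort):
    """(baseline level, preintervention slope, immediate change, change in slope) for one cohort."""
    b0, b1, b2, b3, b4, b5, b6, b7 = beta
    return (b0 + cohort * b4, b1 + cohort * b5, b2 + cohort * b6, b3 + cohort * b7)


def fitted(row, beta):
    """Linear predictor for one design row, error term omitted."""
    return sum(b * x for b, x in zip(beta, row))


beta = (1, 2, 3, 4, 10, 20, 30, 40)  # arbitrary integers, chosen so the arithmetic is exact
rows = two_group_design()
treated_row = rows[143 + 120]        # treatment cohort, study week 120 (Post-Intervention Time = 4)
g0, g1, g2, g3 = group_parameters(beta, cohort=1)
# The full 8-term model and the treatment group's own 4-term line give the same value.
print(fitted(treated_row, beta), g0 + g1 * 120 + g2 * 1 + g3 * 4)
```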

Because the number of orders was recorded weekly for each medication, a large number of data points could be included in our analyses, which allowed more accurate and stable estimates in our regression models. A total of 143 weekly data points were collected for each study group: 116 before and 27 after the intervention.

All analyses were conducted using Stata version 13.1 (StataCorp LP, College Station, TX).

RESULTS

Initial results for the 9 IV medications examined are shown in the Table. Following the implementation of cost messaging, no significant changes in order frequency or trend were observed for the IV formulations of eculizumab, calcitonin, levetiracetam, linezolid, mycophenolate, ribavirin, voriconazole, and levothyroxine (Figures 1 and 2). However, a significant decrease was observed in the number of orders for oral ribavirin (Figure 2), which served as the comparison group for IV ribavirin (–16.3 orders per 10,000 patient days; P = .004; 95% CI, –27.2 to –5.31).
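As a rough consistency check on the reported oral ribavirin estimate, the standard error and a two-sided P value can be backed out of the 95% CI. This sketch assumes a symmetric, normal-theory interval (estimate ± 1.96 × SE); that is an assumption for illustration, since the regression output may have used t-based intervals, and the reported bounds are rounded.

```python
from statistics import NormalDist


def p_from_ci(estimate, lower, upper, level=0.95):
    """Approximate SE, z, and two-sided P from a symmetric normal-theory confidence interval."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    se = (upper - lower) / (2 * z_crit)           # interval width / (2 * critical value)
    z = estimate / se
    p = 2 * (1 - std_normal.cdf(abs(z)))          # two-sided P value
    return se, z, p


# Oral ribavirin: -16.3 orders per 10,000 patient days; 95% CI, -27.2 to -5.31
se, z, p = p_from_ci(-16.3, -27.2, -5.31)
print(f"SE = {se:.2f}, z = {z:.2f}, P = {p:.4f}")
```

Under these assumptions the implied P value lands close to the reported P = .004, so the estimate, interval, and P value appear mutually consistent.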

DISCUSSION

Our results suggest that the passive strategy of displaying cost alone was not effective in altering prescriber ordering patterns for the selected medications. This may reflect a lack of awareness of the direct financial impact on the patient or of the importance of cost in medical decision-making, or a perceived lack of alternatives or of suitable recommended alternatives. These results may prove valuable to hospital and pharmacy leadership as they develop strategies to curb medication expense.

Changes observed in IV pantoprazole ordering are instructive. Because of a national shortage, the IV form of this medication was restricted, requiring pharmacy approval before dispensing. This restriction was instituted independently of our study and led to a 73% decrease from preimplementation usage rates (Figure 3). Ordering was restricted according to defined criteria for IV use. The restriction did not apply to oral pantoprazole, and no significant change in ordering of the oral formulation was noted during the evaluation period (Figure 3).

The dramatic effect of policy changes, as observed with pantoprazole and voriconazole, suggests that a more active strategy may have a greater impact on prescriber behavior when it comes to medication ordering in the inpatient setting. It also highlights several potential sources of confounding that may introduce bias to cost-transparency studies.

This study has multiple limitations. First, as with all observational study designs, causation cannot be established with certainty from our results. Although we compared each medication with its preintervention baseline, the data could have been affected by longitudinal or seasonal trends in medication ordering, driven by seasonal variability in disease prevalence, changes in resistance patterns, and the annual turnover of house staff in an academic medical center. Although some of the medications in this analysis appear to show seasonal prescribing patterns, we believe the linear regressions adequately capture the overall prescribing trends. Nonstationarity, that is, trends in the mean and variance of the outcome that are unrelated to the intervention, may bias the interpretation of our findings. However, we believe the parameters included in our models, namely the immediate change in the intercept following the intervention and the change in the trend of the prescribing rate from the pre- to postintervention period, provide substantial protection against faulty interpretation; our models are limited to the extent that these parameters do not account for nonstationarity. Additionally, we did not collect data on dosing frequency or duration of treatment, which depend on factors that are not readily quantified, such as indication, clinical rationale, and patient response. Thus, we were not able to evaluate the impact of the intervention on these factors.

Although intended to enhance internal validity, comparison groups were also subject to external influence. For example, we observed a significant, short-lived rise in oral ribavirin (a control medication) ordering during the preintervention baseline period that appeared to be independent of our intervention and may speak to the unaccounted-for longitudinal variability detailed above.

Finally, the clinical indication and setting may be important. Previous studies of price displays performed at the same hospital showed a reduction in laboratory ordering but no change in imaging.18,19 One might speculate that providers view ordering fewer laboratory tests as eliminating waste, rather than as choosing a less expensive option to accomplish the same diagnostic task. Therapeutics may be more similar to radiology tests, because patients presumably need the treatment, and simply not ordering is often not an option without a concerted effort to reevaluate the treatment plan. Additionally, in a tertiary care teaching center such as ours, most orders are entered by a junior clinician, often at the behest of a more senior colleague. Results may differ in an environment in which the ordering prescriber has more autonomy, or when the order is driven by a junior practitioner rather than an attending (such as daily laboratories). Likewise, institutions that directly incentivize prescribers to practice cost-conscious care may see different results from similar interventions.

We conclude that, in the case of medication cost messaging, a strategy of displaying cost information alone was insufficient to affect prescriber ordering behavior. Coupling cost transparency with educational interventions and active stewardship to impact clinical practice is worthy of further study.

Disclosures: The authors state that there were no external sponsors for this work. The Johns Hopkins Hospital and University “funded” this work by paying the salaries of the authors. The author team maintained independence and made all decisions regarding the study design, data collection, data analysis, interpretation of results, writing of the research report, and decision to submit it for publication. Dr. Shermock had full access to all the study data and takes responsibility for the integrity of the data and accuracy of the data analysis.

1. Berwick DM, Hackbarth AD. Eliminating waste in US health care. JAMA. 2012;307(14):1513-1516.

2. PricewaterhouseCoopers’ Health Research Institute. The Price of Excess: Identifying Waste in Healthcare Spending. http://www.pwc.com/us/en/healthcare/publications/the-price-of-excess.html. Accessed June 17, 2015.

3. Allan GM, Lexchin J, Wiebe N. Physician awareness of drug cost: a systematic review. PLoS Med. 2007;4(9):e283.

4. Feldman LS, Shihab HM, Thiemann D, et al. Impact of providing fee data on laboratory test ordering: a controlled clinical trial. JAMA Intern Med. 2013;173(10):903-908.

5. Durand DJ, Feldman LS, Lewin JS, Brotman DJ. Provider cost transparency alone has no impact on inpatient imaging utilization. J Am Coll Radiol. 2013;10(2):108-113.

6. Silvestri MT, Bongiovanni TR, Glover JG, Gross CP. Impact of price display on provider ordering: a systematic review. J Hosp Med. 2016;11(1):65-76.

7. Ornstein SM, MacFarlane LL, Jenkins RG, Pan Q, Wager KA. Medication cost information in a computer-based patient record system. Impact on prescribing in a family medicine clinical practice. Arch Fam Med. 1999;8(2):118-121.

8. Guterman JJ, Chernof BA, Mares B, Gross-Schulman SG, Gan PG, Thomas D. Modifying provider behavior: a low-tech approach to pharmaceutical ordering. J Gen Intern Med. 2002;17(10):792-796.

9. McNitt JD, Bode ET, Nelson RE. Long-term pharmaceutical cost reduction using a data management system. Anesth Analg. 1998;87(4):837-842.

10. Horrow JC, Rosenberg H. Price stickers do not alter drug usage. Can J Anaesth. 1994;41(11):1047-1052.

11. Guterman JJ, Chernof BA, Mares B, Gross-Schulman SG, Gan PG, Thomas D. Modifying provider behavior: a low-tech approach to pharmaceutical ordering. J Gen Intern Med. 2002;17(10):792-796.

12. McNitt JD, Bode ET, Nelson RE. Long-term pharmaceutical cost reduction using a data management system. Anesth Analg. 1998;87(4):837-842.

13. Ornstein SM, MacFarlane LL, Jenkins RG, Pan Q, Wager KA. Medication cost information in a computer-based patient record system. Impact on prescribing in a family medicine clinical practice. Arch Fam Med. 1999;8(2):118-121.

14. Horrow JC, Rosenberg H. Price stickers do not alter drug usage. Can J Anaesth. 1994;41(11):1047-1052.

15. Jandoc R, Burden AM, Mamdani M, Levesque LE, Cadarette SM. Interrupted time series analysis in drug utilization research is increasing: systematic review and recommendations. J Clin Epidemiol. 2015;68(8):950-956.

16. Linden A. Conducting interrupted time-series analysis for single- and multiple-group comparisons. Stata J. 2015;15(2):480-500.

17. Linden A, Adams JL. Applying a propensity score-based weighting model to interrupted time series data: improving causal inference in programme evaluation. J Eval Clin Pract. 2011;17(6):1231-1238.

18. Feldman LS, Shihab HM, Thiemann D, et al. Impact of providing fee data on laboratory test ordering: a controlled clinical trial. JAMA Intern Med. 2013;173(10):903-908.

19. Durand DJ, Feldman LS, Lewin JS, Brotman DJ. Provider cost transparency alone has no impact on inpatient imaging utilization. J Am Coll Radiol. 2013;10(2):108-113.
