
Attending Workload, Teaching, and Safety

Teaching attending physicians must balance clinical workload and resident education simultaneously while supervising inpatient services. The workload of teaching attendings has been increasing due to many factors. As patient complexity has increased, length of stay has decreased, creating higher turnover and higher acuity of hospitalized patients.[1, 2, 3, 4, 5] The rising burden of clinical documentation has increased demands on inpatient attending physicians' time.[6] Additionally, resident duty hour restrictions have shifted the responsibility for patient care to the teaching attending.[7] These factors contribute to the perception of unsafe workloads among attending physicians[8] and could impact the ability to teach well.

Teaching effectiveness is an important facet of the graduate medical education (GME) learning environment.[9] Residents perceive that education suffers when their own workload increases,[10, 11, 12, 13, 14] and higher on‐call workload is associated with lower likelihood of participation in educational activities.[15] More contact between resident trainees and supervisory staff may improve the clinical value of inpatient rotations.[16] Program directors have expressed concern about the educational ramifications of work compression.[17, 18, 19, 20] Higher workload for attending physicians can negatively impact patient safety and quality of care,[21, 22] and perception of higher attending workload is associated with less time for teaching.[23] However, the impact of objective measures of attending physician workload on educational outcomes has not been explored. When attending physicians are responsible for increasingly complex clinical care in addition to resident education, teaching effectiveness may suffer. With growing emphasis on the educational environment's effect on healthcare quality and safety,[24] it is imperative to consider the influence of attending workload on patient care and resident education.

The combination of increasing clinical demands, fewer hours in‐house for residents, and less time for teaching has the potential to decrease attending physician teaching effectiveness. In this study, we aimed to evaluate relationships among objective measures of attending physician workload, resident perception of teaching effectiveness, and patient outcomes. We hypothesized that higher workload for attending physicians would be associated with lower ratings of teaching effectiveness and poorer outcomes for patients.

METHODS

We performed a retrospective study of attending physicians who supervised inpatient internal medicine teaching services at Mayo Clinic–Rochester from July 2005 through June 2011 (6 full academic years). The team structure for each service was 1 attending physician, 1 senior resident, and 3 interns. Senior residents were on call every fourth night, and interns were on call every sixth night. Up to 2 admissions per service were received during the daytime short call, and up to 5 admissions per service were received during the overnight long call. Attending physicians included all supervising physicians in appointment categories of attending/consultant, senior associate consultant, and chief medical resident at the Mayo Clinic. Maximum continuous on‐call time for residents during the study period was restricted to 30 hours. The timeframe of this study was chosen to minimize variability in resident work schedules; effective July 1, 2011, duty hours for postgraduate year 1 residents were further restricted to a maximum of 16 hours in duration.[25]

Measures of Attending Physician Workload

To measure attending physician workload, we examined mean service census as reported at midnight, mean patient length of stay, mean number of daily admissions, and mean number of daily discharges. We also calculated mean daily outpatient relative value units (RVUs) generated as a measure of outpatient workload while the attending was supervising the inpatient service. Similar measures of workload have been used in previous research.[26] Attending physicians in this study functioned as hospitalists during their time supervising the teaching services; that is, they were not routinely assigned to any outpatient responsibilities. The only way for an outpatient RVU to be generated during their time supervising the hospital service was for the attending physician to specifically request to see an outpatient in the clinic. Attending physicians only supervised 1 teaching service at a time and had no concurrent nonteaching service obligations. Admissions were received on a rotating basis. Because patient illness severity may impact workload, we also examined mean expected mortality (per 1000 patients) for all patients on the attending physicians' hospital services.[27]

The above workload variables were measured in the specific timeframe that corresponded to the number of days an attending physician was supervising a particular team; for example, mean census was the mean number of patients on the attending physician's hospital service during his or her time supervising that resident team.
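As a rough illustration of how such per‐rotation means could be computed, the sketch below assumes a hypothetical daily record for each service day. The class name `ServiceDay`, its fields, and the sample numbers are illustrative assumptions, not the study's actual data structure or data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ServiceDay:
    """One calendar day on a teaching service (hypothetical record layout)."""
    census_midnight: int  # patients on the service at midnight
    admissions: int       # new admissions that day
    discharges: int       # discharges that day


def rotation_workload(days):
    """Average daily counts over the days one attending supervised one team."""
    if not days:
        raise ValueError("a rotation must contain at least one day")
    return {
        "mean_census": mean(d.census_midnight for d in days),
        "mean_daily_admissions": mean(d.admissions for d in days),
        "mean_daily_discharges": mean(d.discharges for d in days),
    }


# Made-up four-day rotation, for illustration only
rotation = [
    ServiceDay(8, 2, 1),
    ServiceDay(9, 3, 2),
    ServiceDay(10, 2, 3),
    ServiceDay(9, 1, 2),
]
summary = rotation_workload(rotation)
```

Restricting the averages to the days of a single attending and team pairing is what ties each workload value to the evaluations and outcomes from that same period.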

Teaching Effectiveness Outcome Measures

Teaching effectiveness was measured using residents' evaluations of their attending physicians with a 5‐point scale (1 = needs improvement, 3 = average, 5 = top 10% of attending physicians) that has been previously validated in similar contexts.[28, 29, 30, 31, 32] The evaluation questions are shown in Supporting Information, Appendix A, in the online version of this article.

Patient Outcome Measures

Patient outcomes included applicable patient safety indicators (PSIs) as defined by the Agency for Healthcare Research and Quality[33] (see Supporting Information, Appendix B, in the online version of this article), patient transfers to the intensive care unit (ICU), calls to the rapid response team/cardiopulmonary resuscitation team, and patient deaths. Each indicator and event was summarized as occurred or did not occur at the service‐team level. For example, for a particular attending–resident team, the occurrence of each of these events at any point during the time they worked together was recorded as occurred (1) or did not occur (0). Similar measures of patient outcomes have been used in previous research.[32]
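The occurred/did not occur coding can be sketched as follows. This is a minimal illustration that assumes events arrive as a list of labels per attending–resident team; the function name and the label strings are hypothetical.

```python
def team_outcome_flags(
    events,
    outcome_types=("psi", "icu_transfer", "cpr_rrt_call", "death"),
):
    """Collapse one team's event labels into 0/1 flags, one per outcome type.

    Repeated events of the same type still yield a single 1, which mirrors
    the "at least one occurrence" coding described in the text.
    """
    seen = set(events)
    return {outcome: int(outcome in seen) for outcome in outcome_types}


# Made-up example: two ICU transfers and one PSI during a rotation
flags = team_outcome_flags(["icu_transfer", "icu_transfer", "psi"])
```

One consequence of this coding is that the models estimate the odds of any event during a rotation, not the number of events.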

Statistical Analysis

Mixed linear models with variance components covariance structure (including random effects to account for repeated ratings by residents and of faculty) were fit using restricted maximum likelihood to examine associations of attending workload and demographics with teaching scores. Generalized linear regression models, estimated via generalized estimating equations, were used to examine associations of attending workload and demographics with patient outcomes. Due to the binary nature of the outcomes, the binomial distribution and logit link function were used, producing odds ratios (ORs) for covariates akin to those found in standard logistic regression. Multivariate models were used to adjust for physician demographics including age, gender, teaching appointment (consultant, senior associate consultant/temporary clinical appointment, or chief medical resident) and academic rank (professor, associate professor, assistant professor, instructor/none).
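In generic notation, the two model families described above might be written as shown below. The symbols are illustrative and not taken from the article: $i$ indexes residents, $j$ attending physicians, $t$ attending–resident teams, and $\mathbf{x}$ collects the workload measures and demographic covariates.

```latex
% Mixed linear model for the teaching score given by resident i to attending j,
% with random effects for resident and attending (variance components structure)
y_{ij} = \beta_0 + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta} + u_i + v_j + \varepsilon_{ij},
\qquad u_i \sim N(0,\sigma_u^2),\quad v_j \sim N(0,\sigma_v^2),\quad
\varepsilon_{ij} \sim N(0,\sigma^2)

% Logit-link model for a binary team-level outcome; exponentiating a
% coefficient gives the odds ratio for a 1-unit increase in that covariate
\operatorname{logit}\,\Pr(Y_t = 1) = \gamma_0 + \mathbf{x}_t^{\top}\boldsymbol{\gamma},
\qquad \mathrm{OR}_k = e^{\gamma_k}
```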

To account for multiple comparisons, a significance level of P < 0.01 was used. All analyses were performed using SAS statistical software (version 9.3; SAS Institute Inc., Cary, NC). This study was deemed minimal risk after review by the Mayo Clinic Institutional Review Board.

RESULTS

Over the 6‐year study period, 107 attending physicians supervised internal medicine teaching services. Twenty‐three percent of teaching attending physicians were female. Mean attending age was 42.6 years. Attendings supervised a given service for between 2 and 19 days (mean [standard deviation] = 10.1 [4.1] days). There were 542 internal medicine residents on these teaching services who completed at least 1 teaching evaluation. A total of 69,386 teaching evaluation items were submitted by these residents during the study period.

In a multivariate analysis adjusted for faculty demographics and workload measures, teaching evaluation scores were significantly higher for attending physicians who had an academic rank of professor when compared to attendings who were assistant professors (β = −0.12, P = 0.007) or instructors/no academic rank (β = −0.23, P < 0.0001). The number of days an attending physician spent with the team showed a positive association with teaching evaluations (β = +0.015, P < 0.0001).

Associations between measures of attending physician workload and teaching evaluation scores are shown in Table 1. Mean midnight census and mean number of daily discharges were associated with lower teaching evaluation scores (both β = −0.026, P < 0.0001). Mean number of daily admissions was associated with higher teaching scores (β = +0.021, P = 0.001). The mean expected mortality among hospitalized patients on the services supervised by teaching attendings and the outpatient RVUs generated by these attendings during the time they were supervising the hospital service showed no association with teaching scores. The average number of RVUs generated during an attending's entire time supervising hospital service was <1.

| Attending Physician Workload Measure | Mean (SD) | Multivariate Analysis*: β | SE | 99% CI | P |
|---|---|---|---|---|---|
| Midnight census | 8.86 (1.8) | −0.026 | 0.002 | (−0.03, −0.02) | <0.0001 |
| Length of stay, d | 6.91 (3.0) | +0.006 | 0.001 | (0.002, 0.009) | <0.0001 |
| Expected mortality (per 1,000 patients) | 51.94 (27.4) | −0.0001 | 0.0001 | (−0.0004, 0.0001) | 0.19 |
| Daily admissions | 2.23 (0.54) | +0.021 | 0.006 | (0.004, 0.037) | 0.001 |
| Daily discharges | 2.13 (0.56) | −0.026 | 0.006 | (−0.041, −0.010) | <0.0001 |
| Daily outpatient relative value units | 0.69 (1.2) | +0.004 | 0.003 | (−0.002, 0.011) | 0.10 |
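The 99% intervals in Table 1 appear consistent with ordinary Wald intervals (estimate ± z × SE). The sketch below is an assumption about how such an interval can be reproduced, not the authors' SAS procedure. It uses the midnight census row and takes the coefficient as negative, in line with the text's description of lower scores at higher census. Because the published estimate and SE are rounded, agreement is approximate.

```python
from statistics import NormalDist


def wald_ci(estimate, se, level=0.99):
    """Two-sided Wald confidence interval: estimate +/- z * SE."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 2.576 for a 99% interval
    return estimate - z * se, estimate + z * se


# Midnight census: beta = -0.026, SE = 0.002 (values as tabulated)
lo, hi = wald_ci(-0.026, 0.002)
```

Rounded to two decimals, `lo` and `hi` match the tabulated interval for midnight census.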

Table 2 shows relationships between attending physician workload and patient outcomes for the patients on hospital services supervised by 107 attending physicians during the study period. Patient outcome data showed positive associations between measures of higher workload and PSIs. Specifically, for each 1‐patient increase in the average number of daily admissions to the attending and resident services, the cohort of patients under the team's care was 1.8 times more likely to include at least 1 patient with a PSI event (OR = 1.81, 99% confidence interval [CI]: 1.21, 2.71, P = 0.0001). Likewise, for each 1‐day increase in average length of stay, the cohort of patients under the team's care was 1.16 times more likely to have at least 1 patient with a PSI (OR = 1.16, 99% CI: 1.07, 1.26, P < 0.0001). As anticipated, mean expected mortality was associated with actual mortality, cardiopulmonary resuscitation/rapid response team calls, and ICU transfers. There were no associations between patient outcomes and workload measures of midnight census and outpatient RVUs.

Patient Outcomes, Multivariate Analysis*: Patient Safety Indicators (PSI), n = 513; Deaths, n = 352; CPR/RRT Calls, n = 409; ICU Transfers, n = 737

| Workload measures | PSI OR | PSI SE | PSI P | PSI 99% CI | Deaths OR | Deaths SE | Deaths P | Deaths 99% CI | CPR/RRT OR | CPR/RRT SE | CPR/RRT P | CPR/RRT 99% CI | ICU OR | ICU SE | ICU P | ICU 99% CI |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Midnight census | 1.10 | 0.05 | 0.04 | (0.98, 1.24) | 0.91 | 0.04 | 0.03 | (0.81, 1.02) | 0.95 | 0.04 | 0.16 | (0.86, 1.05) | 1.06 | 0.04 | 0.16 | (0.96, 1.17) |
| Length of stay | 1.16 | 0.04 | <0.0001 | (1.07, 1.26) | 1.03 | 0.03 | 0.39 | (0.95, 1.12) | 0.99 | 0.03 | 0.63 | (0.92, 1.05) | 1.10 | 0.03 | 0.0001 | (1.03, 1.18) |
| Expected mortality (per 1,000 patients) | 1.00 | 0.003 | 0.24 | (0.99, 1.01) | 1.01 | 0.00 | 0.002 | (1.00, 1.02) | 1.02 | 0.00 | <0.0001 | (1.01, 1.02) | 1.01 | 0.00 | 0.003 | (1.00, 1.01) |
| Daily admissions | 1.81 | 0.28 | 0.0001 | (1.21, 2.71) | 0.78 | 0.14 | 0.16 | (0.49, 1.24) | 1.11 | 0.20 | 0.57 | (0.69, 1.77) | 1.34 | 0.24 | 0.09 | (0.85, 2.11) |
| Daily discharges | 1.06 | 0.13 | 0.61 | (0.78, 1.45) | 2.36 | 0.38 | <0.0001 | (1.56, 3.57) | 0.94 | 0.16 | 0.70 | (0.60, 1.46) | 1.09 | 0.16 | 0.53 | (0.75, 1.60) |
| Daily outpatient relative value units | 0.81 | 0.07 | 0.01 | (0.65, 1.00) | 1.02 | 0.04 | 0.56 | (0.92, 1.13) | 1.05 | 0.04 | 0.23 | (0.95, 1.17) | 0.92 | 0.06 | 0.23 | (0.77, 1.09) |
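Confidence intervals for odds ratios are usually built on the log scale and then exponentiated, which is why they are asymmetric around the OR. The sketch below assumes that convention (the article does not state it) and works through the reported association between daily admissions and PSIs (OR = 1.81, 99% CI: 1.21, 2.71). The function names are illustrative.

```python
import math
from statistics import NormalDist


def log_scale_se(lower, upper, level=0.99):
    """Back out the standard error of log(OR) from a Wald interval's bounds."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return (math.log(upper) - math.log(lower)) / (2 * z)


def or_ci(odds_ratio, se_log, level=0.99):
    """Wald interval for an odds ratio, computed on the log scale."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    log_or = math.log(odds_ratio)
    return math.exp(log_or - z * se_log), math.exp(log_or + z * se_log)


se_log = log_scale_se(1.21, 2.71)   # SE of log(OR) implied by the reported CI
lower, upper = or_ci(1.81, se_log)  # interval rebuilt around the reported OR
se_or = 1.81 * se_log               # delta-method SE on the OR scale
```

The rebuilt interval lands within rounding error of the reported bounds, and `se_or` rounds to the tabulated SE of 0.28. That agreement suggests, but does not confirm, that the SE columns are on the OR scale.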

DISCUSSION

This study of internal medicine attending physician workload and resident education demonstrates that higher workload among attending physicians is associated with slightly lower teaching evaluation scores from residents as well as increased risks to patient safety.

The prior literature examining relationships between workload and teaching effectiveness is largely survey‐based and reliant upon physicians' self‐reported perceptions of workload.[10, 13, 23] The present study strengthens this evidence by using multiple objective measures of workload, objective measures of patient safety, and a large sample of teaching evaluations.

An interesting finding in this study was that the number of patient dismissals per day was associated with a significant decrease in teaching scores, whereas the number of admissions per day was associated with increased teaching scores. These findings may seem contradictory, because the number of admissions and discharges both measure physician workload. However, a likely explanation for this apparent inconsistency is that on internal medicine inpatient teaching services, much of the teaching of residents occurs at the time of a patient admission as residents are presenting cases to the attending physician, exploring differential diagnoses, and discussing management plans. By contrast, a patient dismissal tends to consist mainly of patient interaction, paperwork, and phone calls by the resident with less input required from the attending physician. Our findings suggest that although patient admissions remain a rich opportunity for resident education, patient dismissals may increase workload without improving teaching evaluations. As the inpatient hospital environment evolves, exploring options for nonphysician providers to assist with or complete patient dismissals may have a beneficial effect on resident education.[34] In addition, exploring more efficient teaching strategies may be beneficial in the fast‐paced inpatient learning milieu.[35]

There was a statistically significant positive association between the number of days an attending physician spent with the team and teaching evaluations. Although prior work has examined advantages and disadvantages of various resident schedules,[36, 37, 38] our results suggest scheduling models that emphasize continuity of the teaching attending and residents may be preferred to enhance teaching effectiveness. Further study would help elucidate potential implications of this finding for the scheduling of supervisory attendings to optimize education.

In this analysis, patient outcome measures were largely independent of attending physician workload, with the exception of PSIs. PSIs have been associated with longer stays in the hospital,[39, 40] which is consistent with our findings. However, mean daily admissions were also associated with PSIs. It could be expected that the more patients on a hospital service, the more PSIs will result. However, there was not a significant association between midnight census and PSIs when other variables were accounted for. Because new patient admissions are time consuming and contribute to the workload of both residents and attending physicians, it is possible that safety of the service's hospitalized patients is compromised when the team is putting time and effort toward new patients. Previous research has shown variability in PSI trends with changes in the workload environment.[41] Further studies are needed to fully explore relationships between admission volume and PSIs on teaching services.

It is worthwhile to note that attending physicians have specific responsibilities of supervision and documentation for new admissions. Although it could be argued that new admissions raise the workload for the entire team, and the higher team workload may impact teaching evaluations, previous research has demonstrated that resident burnout and well‐being, which are influenced by workload, do not impact residents' assessments of teachers.[42] In addition, metrics that could arguably be more apt to measure the workload of the team as a whole (eg, team census) did not show a significant association with patient outcomes.

This study has important limitations. First, the cohort of attending physicians, residents, and patients was from a large single institution and may not be generalizable to all settings. Second, most attending physicians in this sample were experienced teachers, so consequences of increased workload may have been managed effectively without a major impact on resident education in some cases. Third, the magnitude of change in teaching effectiveness, although statistically significant, was small and might call into question the educational significance of these findings. Fourth, although resident satisfaction does not influence teaching scores, it is possible that residents' perception of their own workload may have impacted teaching evaluations. Finally, data collection was intentionally closed at the end of the 2011 academic year because accreditation standards for resident duty hours changed again at that time.[43] Thus, these data may not directly reflect the evolving hospital learning environment but serve as a useful benchmark for future studies of workload and teaching effectiveness in the inpatient setting. Once hospitals have had sufficient time and experience with the new duty hour standards, additional studies exploring relationships between workload, teaching effectiveness, and patient outcomes may be warranted.

Limitations notwithstanding, this study shows that attending physician workload may adversely impact teaching and patient safety on internal medicine hospital services. Ongoing efforts by residency programs to optimize the learning environment should include strategies to manage the workload of supervising attendings.

Disclosures

This publication was made possible in part by Clinical and Translational Science Award grant number UL1 TR000135 from the National Center for Advancing Translational Sciences, a component of the National Institutes of Health (NIH). Its contents are solely the responsibility of the authors and do not necessarily represent the official views of NIH. The authors also acknowledge support from the Mayo Clinic Department of Medicine Write‐up and Publish grant. In addition, this study was supported in part by the Mayo Clinic Internal Medicine Residency Office of Education Innovations as part of the Accreditation Council for Graduate Medical Education Educational Innovations Project. The information contained in this article was based in part on the performance package data maintained by the University HealthSystem Consortium. Copyright 2015 UHC. All rights reserved.

References

1. The future of residents' education in internal medicine. Am J Med. 2004;116(9):648–650.
2. Redesigning residency education in internal medicine: a position paper from the Association of Program Directors in Internal Medicine. Ann Intern Med. 2006;144(12):920–926.
3. Residency training in the modern era: the pipe dream of less time to learn more, care better, and be more professional. Arch Intern Med. 2005;165(22):2561–2562.
4. Trends in Hospitalizations Among Medicare Survivors of Aortic Valve Replacement in the United States From 1999 to 2010. Ann Thorac Surg. 2015;99(2):509–517.
5. Restructuring an inpatient resident service to improve outcomes for residents, students, and patients. Acad Med. 2011;86(12):1500–1507.
6. Clinical documentation in the 21st century: executive summary of a policy position paper from the American College of Physicians. Ann Intern Med. 2015;162(4):301–303.
7. Effect of ACGME duty hours on attending physician teaching and satisfaction. Arch Intern Med. 2008;168(11):1226–1228.
8. Identifying potential predictors of a safe attending physician workload: a survey of hospitalists. J Hosp Med. 2013;8(11):644–646.
9. The clinical learning environment: the foundation of graduate medical education. JAMA. 2013;309(16):1687–1688.
10. Better rested, but more stressed? Evidence of the effects of resident work hour restrictions. Acad Pediatr. 2012;12(4):335–343.
11. Multifaceted longitudinal study of surgical resident education, quality of life, and patient care before and after July 2011. J Surg Educ. 2013;70(6):769–776.
12. Impact of the new 16‐hour duty period on pediatric interns' neonatal education. Clin Pediatr (Phila). 2014;53(1):51–59.
13. Relationship between resident workload and self‐perceived learning on inpatient medicine wards: a longitudinal study. BMC Med Educ. 2006;6:35.
14. Perceptions of educational experience and inpatient workload among pediatric residents. Hosp Pediatr. 2013;3(3):276–284.
15. Association of workload of on‐call medical interns with on‐call sleep duration, shift duration, and participation in educational activities. JAMA. 2008;300(10):1146–1153.
16. Effects of increased overnight supervision on resident education, decision‐making, and autonomy. J Hosp Med. 2012;7(8):606–610.
17. Approval and perceived impact of duty hour regulations: survey of pediatric program directors. Pediatrics. 2013;132(5):819–824.
18. Anticipated consequences of the 2011 duty hours standards: views of internal medicine and surgery program directors. Acad Med. 2012;87(7):895–903.
19. Training on the clock: family medicine residency directors' responses to resident duty hours reform. Acad Med. 2006;81(12):1032–1037.
20. Duty hour recommendations and implications for meeting the ACGME core competencies: views of residency directors. Mayo Clin Proc. 2011;86(3):185–191.
21. Does surgeon workload per day affect outcomes after pulmonary lobectomies? Ann Thorac Surg. 2012;94(3):966–973.
22. Impact of attending physician workload on patient care: a survey of hospitalists. JAMA Intern Med. 2013;173(5):375–377.
23. No time for teaching? Inpatient attending physicians' workload and teaching before and after the implementation of the 2003 duty hours regulations. Acad Med. 2013;88(9):1293–1298.
24. Accreditation Council for Graduate Medical Education. Clinical Learning Environment Review (CLER) Program. Available at: http://www.acgme.org/acgmeweb/tabid/436/ProgramandInstitutionalAccreditation/NextAccreditationSystem/ClinicalLearningEnvironmentReviewProgram.aspx. Accessed April 27, 2015.
25. Accreditation Council for Graduate Medical Education. Frequently Asked Questions: ACGME common duty hour requirements. Available at: https://www.acgme.org/acgmeweb/Portals/0/PDFs/dh‐faqs 2011.pdf. Accessed April 27, 2015.
26. Effect of hospitalist workload on the quality and efficiency of care. JAMA Intern Med. 2014;174(5):786–793.
27. University HealthSystem Consortium. UHC clinical database/resource manager for Mayo Clinic. Available at: http://www.uhc.edu. Data accessed August 25, 2011.
28. The interpersonal, cognitive and efficiency domains of clinical teaching: construct validity of a multi‐dimensional scale. Med Educ. 2005;39(12):1221–1229.
29. Factor instability of clinical teaching assessment scores among general internists and cardiologists. Med Educ. 2006;40(12):1209–1216.
30. Determining reliability of clinical assessment scores in real time. Teach Learn Med. 2009;21(3):188–194.
31. Behaviors of highly professional resident physicians. JAMA. 2008;300(11):1326–1333.
32. Service census caps and unit‐based admissions: resident workload, conference attendance, duty hour compliance, and patient safety. Mayo Clin Proc. 2012;87(4):320–327.
33. Agency for Healthcare Research and Quality. Patient safety indicators technical specifications updates. Version 5.0, March 2015. Available at: http://www.qualityindicators.ahrq.gov/Modules/PSI_TechSpec.aspx. Accessed May 29, 2015.
34. The impact of nonphysician clinicians: do they improve the quality and cost‐effectiveness of health care services? Med Care Res Rev. 2009;66(6 suppl):36S–89S.
35. Maximizing teaching on the wards: review and application of the One‐Minute Preceptor and SNAPPS models. J Hosp Med. 2015;10(2):125–130.
36. Resident perceptions of the educational value of night float rotations. Teach Learn Med. 2010;22(3):196–201.
37. An evaluation of internal medicine residency continuity clinic redesign to a 50/50 outpatient‐inpatient model. J Gen Intern Med. 2013;28(8):1014–1019.
38. Revisiting the rotating call schedule in less than 80 hours per week. J Surg Educ. 2009;66(6):357–360.
39. Excess length of stay, charges, and mortality attributable to medical injuries during hospitalization. JAMA. 2003;290(14):1868–1874.
40. Agency for Healthcare Research and Quality patient safety indicators and mortality in surgical patients. Am Surg. 2014;80(8):801–804.
41. Patient safety in the era of the 80‐hour workweek. J Surg Educ. 2014;71(4):551–559.
42. Impact of resident well‐being and empathy on assessments of faculty physicians. J Gen Intern Med. 2010;25(1):52–56.
43. Stress management training for surgeons‐a randomized, controlled, intervention study. Ann Surg. 2011;253(3):488–494.

Teaching attending physicians must balance clinical workload and resident education simultaneously while supervising inpatient services. The workload of teaching attendings has been increasing due to many factors. As patient complexity has increased, length of stay has decreased, creating higher turnover and higher acuity of hospitalized patients.[1, 2, 3, 4, 5] The rising burden of clinical documentation has increased demands on inpatient attending physicians' time.[6] Additionally, resident duty hour restrictions have shifted the responsibility for patient care to the teaching attending.[7] These factors contribute to the perception of unsafe workloads among attending physicians[8] and could impact the ability to teach well.

Teaching effectiveness is an important facet of the graduate medical education (GME) learning environment.[9] Residents perceive that education suffers when their own workload increases,[10, 11, 12, 13, 14] and higher on‐call workload is associated with lower likelihood of participation in educational activities.[15] More contact between resident trainees and supervisory staff may improve the clinical value of inpatient rotations.[16] Program directors have expressed concern about the educational ramifications of work compression.[17, 18, 19, 20] Higher workload for attending physicians can negatively impact patient safety and quality of care,[21, 22] and perception of higher attending workload is associated with less time for teaching.[23] However, the impact of objective measures of attending physician workload on educational outcomes has not been explored. When attending physicians are responsible for increasingly complex clinical care in addition to resident education, teaching effectiveness may suffer. With growing emphasis on the educational environment's effect on healthcare quality and safety,[24] it is imperative to consider the influence of attending workload on patient care and resident education.

The combination of increasing clinical demands, fewer hours in‐house for residents, and less time for teaching has the potential to decrease attending physician teaching effectiveness. In this study, we aimed to evaluate relationships among objective measures of attending physician workload, resident perception of teaching effectiveness, and patient outcomes. We hypothesized that higher workload for attending physicians would be associated with lower ratings of teaching effectiveness and poorer outcomes for patients.

METHODS

We performed a retrospective study of attending physicians who supervised inpatient internal medicine teaching services at Mayo ClinicRochester from July 2005 through June 2011 (6 full academic years). The team structure for each service was 1 attending physician, 1 senior resident, and 3 interns. Senior residents were on call every fourth night, and interns were on call every sixth night. Up to 2 admissions per service were received during the daytime short call, and up to 5 admissions per service were received during the overnight long call. Attending physicians included all supervising physicians in appointment categories of attending/consultant, senior associate consultant, and chief medical resident at the Mayo Clinic. Maximum continuous on‐call time for residents during the study period was restricted to 30 hours continuously. The timeframe of this study was chosen to minimize variability in resident work schedules; effective July 1, 2011, duty hours for postgraduate year 1 residents were further restricted to a maximum of 16 hours in duration.[25]

Measures of Attending Physician Workload

To measure attending physician workload, we examined mean service census as reported at midnight, mean patient length of stay, mean number of daily admissions, and mean number of daily discharges. We also calculated mean daily outpatient relative value units (RVUs) generated as a measure of outpatient workload while the attending was supervising the inpatient service. Similar measures of workload have been used in previous research.[26] Attending physicians in this study functioned as hospitalists during their time supervising the teaching services; that is, they were not routinely assigned to any outpatient responsibilities. The only way for an outpatient RVU to be generated during their time supervising the hospital service was for the attending physician to specifically request to see an outpatient in the clinic. Attending physicians only supervised 1 teaching service at a time and had no concurrent nonteaching service obligations. Admissions were received on a rotating basis. Because patient illness severity may impact workload, we also examined mean expected mortality (per 1000 patients) for all patients on the attending physicians' hospital services.[27]

The above workload variables were measured in the specific timeframe that corresponded to the number of days an attending physician was supervising a particular team; for example, mean census was the mean number of patients on the attending physician's hospital service during his or her time supervising that resident team.

Teaching Effectiveness Outcome Measures

Teaching effectiveness was measured using residents' evaluations of their attending physicians with a 5‐point scale (1 = needs improvement, 3 = average, 5 = top 10% of attending physicians) that has been previously validated in similar contexts.[28, 29, 30, 31, 32] The evaluation questions are shown in Supporting Information, Appendix A, in the online version of this article.

Patient Outcome Measures

Patient outcomes included applicable patient safety indicators (PSIs) as defined by the Agency for Healthcare Research and Quality[33] (see Supporting Information, Appendix B, in the online version of this article), patient transfers to the intensive care unit (ICU), calls to the rapid response team/cardiopulmonary resuscitation team, and patient deaths. Each indicator and event was summarized as occurred or did not occur at the service‐team level. For example, for a particular attendingresident team, the occurrence of each of these events at any point during the time they worked together was recorded as occurred (1) or did not occur (0). Similar measures of patient outcomes have been used in previous research.[32]

Statistical Analysis

Mixed linear models with variance components covariance structure (including random effects to account for repeated ratings by residents and of faculty) were fit using restricted maximum likelihood to examine associations of attending workload and demographics with teaching scores. Generalized linear regression models, estimated via generalized estimating equations, were used to examine associations of attending workload and demographics with patient outcomes. Due to the binary nature of the outcomes, the binomial distribution and logit link function were used, producing odds ratios (ORs) for covariates akin to those found in standard logistic regression. Multivariate models were used to adjust for physician demographics including age, gender, teaching appointment (consultant, senior associate consultant/temporary clinical appointment, or chief medical resident) and academic rank (professor, associate professor, assistant professor, instructor/none).
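In symbols, the two model families might be written as below. This is a sketch under assumed notation: the indices, the random-effect terms $u_i$ and $v_j$, and the covariate vectors $\mathbf{x}$ are introduced here for illustration, and the exact specification fit in SAS is not given in the text.

```latex
% Teaching scores: linear mixed model with random effects for resident i and attending j;
% k indexes the evaluation item submitted for that pairing.
y_{ijk} = \beta_0 + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta} + u_i + v_j + \varepsilon_{ijk},
\qquad u_i \sim N(0,\sigma_u^2),\quad v_j \sim N(0,\sigma_v^2),\quad \varepsilon_{ijk} \sim N(0,\sigma^2)

% Patient outcomes: binomial model with logit link for team t, estimated by GEE.
\operatorname{logit}\Pr(Y_t = 1) = \log\frac{\Pr(Y_t = 1)}{1 - \Pr(Y_t = 1)}
  = \gamma_0 + \mathbf{x}_t^{\top}\boldsymbol{\gamma},
\qquad \mathrm{OR}_m = e^{\gamma_m}
```

Under the logit link, exponentiating a coefficient gives the multiplicative change in the odds of the outcome per 1‐unit increase in that covariate, which is why the results are reported as ORs.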

To account for multiple comparisons, a significance level of P < 0.01 was used. All analyses were performed using SAS statistical software (version 9.3; SAS Institute Inc., Cary, NC). This study was deemed minimal risk after review by the Mayo Clinic Institutional Review Board.

RESULTS

Over the 6‐year study period, 107 attending physicians supervised internal medicine teaching services. Twenty‐three percent of teaching attending physicians were female. Mean attending age was 42.6 years. Attendings supervised a given service for between 2 and 19 days (mean [standard deviation] = 10.1 [4.1] days). There were 542 internal medicine residents on these teaching services who completed at least 1 teaching evaluation. A total of 69,386 teaching evaluation items were submitted by these residents during the study period.

In a multivariate analysis adjusted for faculty demographics and workload measures, teaching evaluation scores were significantly higher for attending physicians who had an academic rank of professor when compared to attendings who were assistant professors (β = −0.12, P = 0.007), or instructors/no academic rank (β = −0.23, P < 0.0001). The number of days an attending physician spent with the team showed a positive association with teaching evaluations (β = +0.015, P < 0.0001).

Associations between measures of attending physician workload and teaching evaluation scores are shown in Table 1. Mean midnight census and mean number of daily discharges were associated with lower teaching evaluation scores (both β = −0.026, P < 0.0001). Mean number of daily admissions was associated with higher teaching scores (β = +0.021, P = 0.001). The mean expected mortality among hospitalized patients on the services supervised by teaching attendings and the outpatient RVUs generated by these attendings during the time they were supervising the hospital service showed no association with teaching scores. The average number of RVUs generated during an attending's entire time supervising hospital service was <1.

| Attending Physician Workload Measure | Mean (SD) | β (Multivariate Analysis*) | SE | 99% CI | P |
|---|---|---|---|---|---|
| Midnight census | 8.86 (1.8) | −0.026 | 0.002 | (−0.03, −0.02) | <0.0001 |
| Length of stay, d | 6.91 (3.0) | +0.006 | 0.001 | (0.002, 0.009) | <0.0001 |
| Expected mortality (per 1,000 patients) | 51.94 (27.4) | −0.0001 | 0.0001 | (−0.0004, 0.0001) | 0.19 |
| Daily admissions | 2.23 (0.54) | +0.021 | 0.006 | (0.004, 0.037) | 0.001 |
| Daily discharges | 2.13 (0.56) | −0.026 | 0.006 | (−0.041, −0.010) | <0.0001 |
| Daily outpatient relative value units | 0.69 (1.2) | +0.004 | 0.003 | (−0.002, 0.011) | 0.10 |
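The link between the P < 0.01 threshold and the 99% intervals in Table 1 can be sketched with a normal-approximation (Wald) interval. This is an illustration only: the published intervals come from the fitted mixed model and from unrounded estimates, so the values computed here from the rounded table entries will not match them exactly.

```python
from statistics import NormalDist

ALPHA = 0.01  # significance level stated in the Statistical Analysis section


def wald_interval(estimate, std_error, alpha=ALPHA):
    """Normal-approximation (Wald) confidence interval: estimate +/- z * SE."""
    z = NormalDist().inv_cdf(1 - alpha / 2)  # about 2.576 for a 99% interval
    return estimate - z * std_error, estimate + z * std_error


def excludes_zero(interval):
    """True when the interval lies entirely above or entirely below zero."""
    low, high = interval
    return low > 0 or high < 0


# Rounded coefficient and SE as printed in Table 1.
admissions = wald_interval(0.021, 0.006)
outpatient_rvu = wald_interval(0.004, 0.003)
```

With these inputs the daily admissions interval excludes zero while the outpatient RVU interval does not, which agrees with the reported P values of 0.001 and 0.10.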

Table 2 shows relationships between attending physician workload and patient outcomes for the patients on hospital services supervised by 107 attending physicians during the study period. Patient outcome data showed positive associations between measures of higher workload and PSIs. Specifically, for each 1‐patient increase in the average number of daily admissions to the attending and resident services, the odds that the cohort of patients under the team's care included at least 1 patient with a PSI event were 1.8 times higher (OR = 1.81, 99% confidence interval [CI]: 1.21, 2.71, P = 0.0001). Likewise, for each 1‐day increase in average length of stay, the odds that the cohort included at least 1 patient with a PSI were 1.16 times higher (OR = 1.16, 99% CI: 1.07, 1.26, P < 0.0001). As anticipated, mean expected mortality was associated with actual mortality, cardiopulmonary resuscitation/rapid response team calls, and ICU transfers. No significant associations were found between patient outcomes and the workload measures of midnight census and outpatient RVUs.

Patient Outcomes, Multivariate Analysis*

| Workload measures | Patient Safety Indicators, n = 513 | | | | Deaths, n = 352 | | | | CPR/RRT Calls, n = 409 | | | | ICU Transfers, n = 737 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | OR | SE | P | 99% CI | OR | SE | P | 99% CI | OR | SE | P | 99% CI | OR | SE | P | 99% CI |
| Midnight census | 1.10 | 0.05 | 0.04 | (0.98, 1.24) | 0.91 | 0.04 | 0.03 | (0.81, 1.02) | 0.95 | 0.04 | 0.16 | (0.86, 1.05) | 1.06 | 0.04 | 0.16 | (0.96, 1.17) |
| Length of stay | 1.16 | 0.04 | <0.0001 | (1.07, 1.26) | 1.03 | 0.03 | 0.39 | (0.95, 1.12) | 0.99 | 0.03 | 0.63 | (0.92, 1.05) | 1.10 | 0.03 | 0.0001 | (1.03, 1.18) |
| Expected mortality (per 1,000 patients) | 1.00 | 0.003 | 0.24 | (0.99, 1.01) | 1.01 | 0.00 | 0.002 | (1.00, 1.02) | 1.02 | 0.00 | <0.0001 | (1.01, 1.02) | 1.01 | 0.00 | 0.003 | (1.00, 1.01) |
| Daily admissions | 1.81 | 0.28 | 0.0001 | (1.21, 2.71) | 0.78 | 0.14 | 0.16 | (0.49, 1.24) | 1.11 | 0.20 | 0.57 | (0.69, 1.77) | 1.34 | 0.24 | 0.09 | (0.85, 2.11) |
| Daily discharges | 1.06 | 0.13 | 0.61 | (0.78, 1.45) | 2.36 | 0.38 | <0.0001 | (1.56, 3.57) | 0.94 | 0.16 | 0.70 | (0.60, 1.46) | 1.09 | 0.16 | 0.53 | (0.75, 1.60) |
| Daily outpatient relative value units | 0.81 | 0.07 | 0.01 | (0.65, 1.00) | 1.02 | 0.04 | 0.56 | (0.92, 1.13) | 1.05 | 0.04 | 0.23 | (0.95, 1.17) | 0.92 | 0.06 | 0.23 | (0.77, 1.09) |
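Odds ratio intervals such as those in Table 2 are asymmetric because they are built on the log scale and then exponentiated. The sketch below shows the arithmetic for the daily admissions and PSI entry. It assumes, without confirmation from the text, that the SE column is reported on the OR scale, and converts it to the log scale with the delta method; the function name is hypothetical.

```python
import math
from statistics import NormalDist


def or_interval_from_or_scale_se(odds_ratio, se_or, alpha=0.01):
    """Approximate CI for an odds ratio when the SE is given on the OR scale.

    Assumption (delta method): SE(log OR) is about SE(OR) / OR. The interval
    is formed on the log scale and exponentiated back to the OR scale.
    """
    z = NormalDist().inv_cdf(1 - alpha / 2)
    log_or = math.log(odds_ratio)
    se_log = se_or / odds_ratio
    return math.exp(log_or - z * se_log), math.exp(log_or + z * se_log)


# Daily admissions vs. patient safety indicators, as printed in Table 2.
low, high = or_interval_from_or_scale_se(1.81, 0.28)

# Under the logit model, a 2-admission increase multiplies the odds by OR squared.
two_admission_or = 1.81 ** 2
```

Under that assumption the computed bounds land close to the published interval of (1.21, 2.71), with small differences attributable to rounding of the table entries.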

DISCUSSION

This study of internal medicine attending physician workload and resident education demonstrates that higher workload among attending physicians is associated with slightly lower teaching evaluation scores from residents as well as increased risks to patient safety.

The prior literature examining relationships between workload and teaching effectiveness is largely survey‐based and reliant upon physicians' self‐reported perceptions of workload.[10, 13, 23] The present study strengthens this evidence by using multiple objective measures of workload, objective measures of patient safety, and a large sample of teaching evaluations.

An interesting finding in this study was that the number of patient dismissals (discharges) per day was associated with a significant decrease in teaching scores, whereas the number of admissions per day was associated with increased teaching scores. These findings may seem contradictory, because the number of admissions and discharges both measure physician workload. However, a likely explanation for this apparent inconsistency is that on internal medicine inpatient teaching services, much of the teaching of residents occurs at the time of a patient admission as residents are presenting cases to the attending physician, exploring differential diagnoses, and discussing management plans. By contrast, a patient dismissal tends to consist mainly of patient interaction, paperwork, and phone calls by the resident with less input required from the attending physician. Our findings suggest that although patient admissions remain a rich opportunity for resident education, patient dismissals may increase workload without improving teaching evaluations. As the inpatient hospital environment evolves, exploring options for nonphysician providers to assist with or complete patient dismissals may have a beneficial effect on resident education.[34] In addition, exploring more efficient teaching strategies may be beneficial in the fast‐paced inpatient learning milieu.[35]

There was a statistically significant positive association between the number of days an attending physician spent with the team and teaching evaluations. Although prior work has examined advantages and disadvantages of various resident schedules,[36, 37, 38] our results suggest scheduling models that emphasize continuity of the teaching attending and residents may be preferred to enhance teaching effectiveness. Further study would help elucidate potential implications of this finding for the scheduling of supervisory attendings to optimize education.

In this analysis, patient outcome measures were largely independent of attending physician workload, with the exception of PSIs. PSIs have been associated with longer stays in the hospital,[39, 40] which is consistent with our findings. However, mean daily admissions were also associated with PSIs. It could be expected that the more patients on a hospital service, the more PSIs will result. However, there was not a significant association between midnight census and PSIs when other variables were accounted for. Because new patient admissions are time consuming and contribute to the workload of both residents and attending physicians, it is possible that safety of the service's hospitalized patients is compromised when the team is putting time and effort toward new patients. Previous research has shown variability in PSI trends with changes in the workload environment.[41] Further studies are needed to fully explore relationships between admission volume and PSIs on teaching services.

It is worthwhile to note that attending physicians have specific responsibilities of supervision and documentation for new admissions. Although it could be argued that new admissions raise the workload for the entire team, and the higher team workload may impact teaching evaluations, previous research has demonstrated that resident burnout and well‐being, which are influenced by workload, do not impact residents' assessments of teachers.[42] In addition, metrics that could arguably be more apt to measure the workload of the team as a whole (eg, team census) did not show a significant association with patient outcomes.

This study has important limitations. First, the cohort of attending physicians, residents, and patients was from a large single institution and may not be generalizable to all settings. Second, most attending physicians in this sample were experienced teachers, so consequences of increased workload may have been managed effectively without a major impact on resident education in some cases. Third, the magnitude of change in teaching effectiveness, although statistically significant, was small and might call into question the educational significance of these findings. Fourth, although resident satisfaction does not influence teaching scores, it is possible that residents' perception of their own workload may have impacted teaching evaluations. Finally, data collection was intentionally closed at the end of the 2011 academic year because accreditation standards for resident duty hours changed again at that time.[43] Thus, these data may not directly reflect the evolving hospital learning environment but serve as a useful benchmark for future studies of workload and teaching effectiveness in the inpatient setting. Once hospitals have had sufficient time and experience with the new duty hour standards, additional studies exploring relationships between workload, teaching effectiveness, and patient outcomes may be warranted.

Limitations notwithstanding, this study shows that attending physician workload may adversely impact teaching and patient safety on internal medicine hospital services. Ongoing efforts by residency programs to optimize the learning environment should include strategies to manage the workload of supervising attendings.

Disclosures

This publication was made possible in part by Clinical and Translational Science Award grant number UL1 TR000135 from the National Center for Advancing Translational Sciences, a component of the National Institutes of Health (NIH). Its contents are solely the responsibility of the authors and do not necessarily represent the official views of NIH. The authors also acknowledge support from the Mayo Clinic Department of Medicine Write‐up and Publish grant. In addition, this study was supported in part by the Mayo Clinic Internal Medicine Residency Office of Education Innovations as part of the Accreditation Council for Graduate Medical Education Educational Innovations Project. The information contained in this article was based in part on the performance package data maintained by the University HealthSystem Consortium. Copyright 2015 UHC. All rights reserved.

- The future of residents' education in internal medicine. Am J Med. 2004;116(9):648–650.

- Redesigning residency education in internal medicine: a position paper from the Association of Program Directors in Internal Medicine. Ann Intern Med. 2006;144(12):920–926.

- Residency training in the modern era: the pipe dream of less time to learn more, care better, and be more professional. Arch Intern Med. 2005;165(22):2561–2562.

- Trends in Hospitalizations Among Medicare Survivors of Aortic Valve Replacement in the United States From 1999 to 2010. Ann Thorac Surg. 2015;99(2):509–517.

- Restructuring an inpatient resident service to improve outcomes for residents, students, and patients. Acad Med. 2011;86(12):1500–1507.

- Clinical documentation in the 21st century: executive summary of a policy position paper from the American College of Physicians. Ann Intern Med. 2015;162(4):301–303.

- Effect of ACGME duty hours on attending physician teaching and satisfaction. Arch Intern Med. 2008;168(11):1226–1228.

- Identifying potential predictors of a safe attending physician workload: a survey of hospitalists. J Hosp Med. 2013;8(11):644–646.

- The clinical learning environment: the foundation of graduate medical education. JAMA. 2013;309(16):1687–1688.

- Better rested, but more stressed? Evidence of the effects of resident work hour restrictions. Acad Pediatr. 2012;12(4):335–343.

- Multifaceted longitudinal study of surgical resident education, quality of life, and patient care before and after July 2011. J Surg Educ. 2013;70(6):769–776.

- Impact of the new 16‐hour duty period on pediatric interns' neonatal education. Clin Pediatr (Phila). 2014;53(1):51–59.

- Relationship between resident workload and self‐perceived learning on inpatient medicine wards: a longitudinal study. BMC Med Educ. 2006;6:35.

- Perceptions of educational experience and inpatient workload among pediatric residents. Hosp Pediatr. 2013;3(3):276–284.

- Association of workload of on‐call medical interns with on‐call sleep duration, shift duration, and participation in educational activities. JAMA. 2008;300(10):1146–1153.

- Effects of increased overnight supervision on resident education, decision‐making, and autonomy. J Hosp Med. 2012;7(8):606–610.

- Approval and perceived impact of duty hour regulations: survey of pediatric program directors. Pediatrics. 2013;132(5):819–824.

- Anticipated consequences of the 2011 duty hours standards: views of internal medicine and surgery program directors. Acad Med. 2012;87(7):895–903.

- Training on the clock: family medicine residency directors' responses to resident duty hours reform. Acad Med. 2006;81(12):1032–1037.

- Duty hour recommendations and implications for meeting the ACGME core competencies: views of residency directors. Mayo Clin Proc. 2011;86(3):185–191.

- Does surgeon workload per day affect outcomes after pulmonary lobectomies? Ann Thorac Surg. 2012;94(3):966–973.

- Impact of attending physician workload on patient care: a survey of hospitalists. JAMA Intern Med. 2013;173(5):375–377.

- No time for teaching? Inpatient attending physicians' workload and teaching before and after the implementation of the 2003 duty hours regulations. Acad Med. 2013;88(9):1293–1298.

- Accreditation Council for Graduate Medical Education. Clinical Learning Environment Review (CLER) Program. Available at: http://www.acgme.org/acgmeweb/tabid/436/ProgramandInstitutionalAccreditation/NextAccreditationSystem/ClinicalLearningEnvironmentReviewProgram.aspx. Accessed April 27, 2015.

- Accreditation Council for Graduate Medical Education. Frequently Asked Questions: ACGME common duty hour requirements. Available at: https://www.acgme.org/acgmeweb/Portals/0/PDFs/dh‐faqs 2011.pdf. Accessed April 27, 2015.

- Effect of hospitalist workload on the quality and efficiency of care. JAMA Intern Med. 2014;174(5):786–793.

- University HealthSystem Consortium. UHC clinical database/resource manager for Mayo Clinic. Available at: http://www.uhc.edu. Data accessed August 25, 2011.

- The interpersonal, cognitive and efficiency domains of clinical teaching: construct validity of a multi‐dimensional scale. Med Educ. 2005;39(12):1221–1229.

- Factor instability of clinical teaching assessment scores among general internists and cardiologists. Med Educ. 2006;40(12):1209–1216.

- Determining reliability of clinical assessment scores in real time. Teach Learn Med. 2009;21(3):188–194.

- Behaviors of highly professional resident physicians. JAMA. 2008;300(11):1326–1333.

- Service census caps and unit‐based admissions: resident workload, conference attendance, duty hour compliance, and patient safety. Mayo Clin Proc. 2012;87(4):320–327.

- Agency for Healthcare Research and Quality. Patient safety indicators technical specifications updates—Version 5.0, March 2015. Available at: http://www.qualityindicators.ahrq.gov/Modules/PSI_TechSpec.aspx. Accessed May 29, 2015.

- The impact of nonphysician clinicians: do they improve the quality and cost‐effectiveness of health care services? Med Care Res Rev. 2009;66(6 suppl):36S–89S.

- Maximizing teaching on the wards: review and application of the One‐Minute Preceptor and SNAPPS models. J Hosp Med. 2015;10(2):125–130.

- Resident perceptions of the educational value of night float rotations. Teach Learn Med. 2010;22(3):196–201.

- An evaluation of internal medicine residency continuity clinic redesign to a 50/50 outpatient‐inpatient model. J Gen Intern Med. 2013;28(8):1014–1019.

- Revisiting the rotating call schedule in less than 80 hours per week. J Surg Educ. 2009;66(6):357–360.

- Excess length of stay, charges, and mortality attributable to medical injuries during hospitalization. JAMA. 2003;290(14):1868–1874.

- Agency for Healthcare Research and Quality patient safety indicators and mortality in surgical patients. Am Surg. 2014;80(8):801–804.

- Patient safety in the era of the 80‐hour workweek. J Surg Educ. 2014;71(4):551–559.

- Impact of resident well‐being and empathy on assessments of faculty physicians. J Gen Intern Med. 2010;25(1):52–56.

- Stress management training for surgeons‐a randomized, controlled, intervention study. Ann Surg. 2011;253(3):488–494.

© 2016 Society of Hospital Medicine

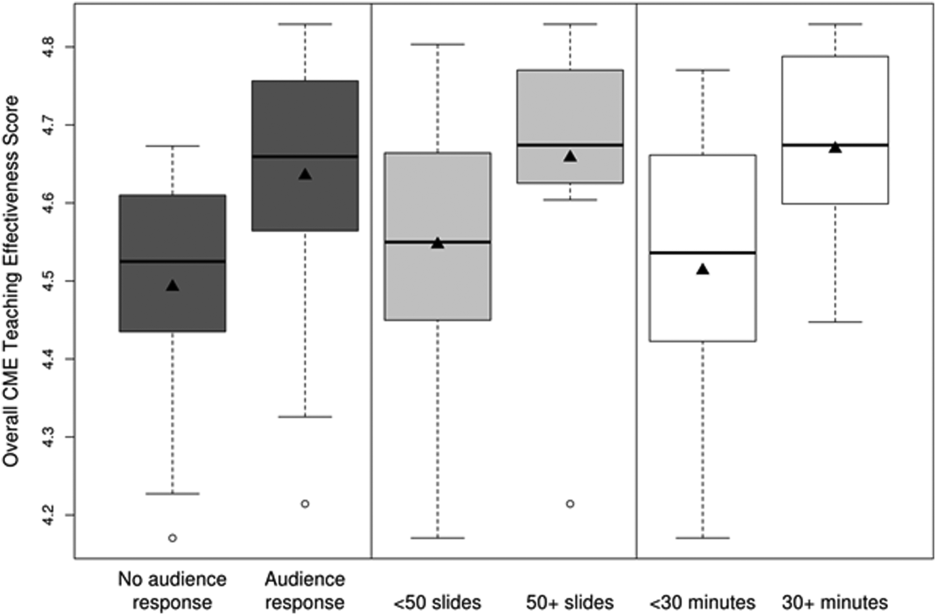

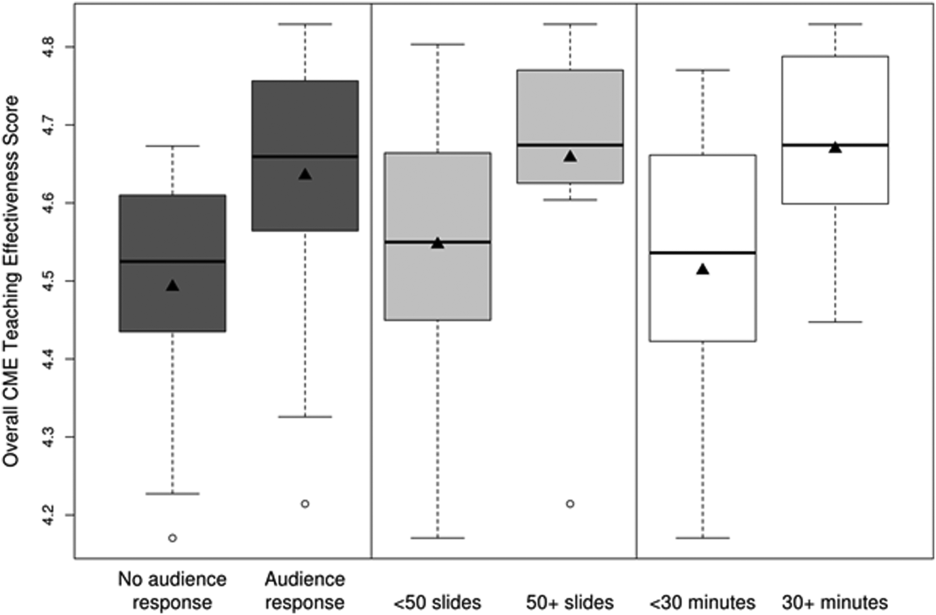

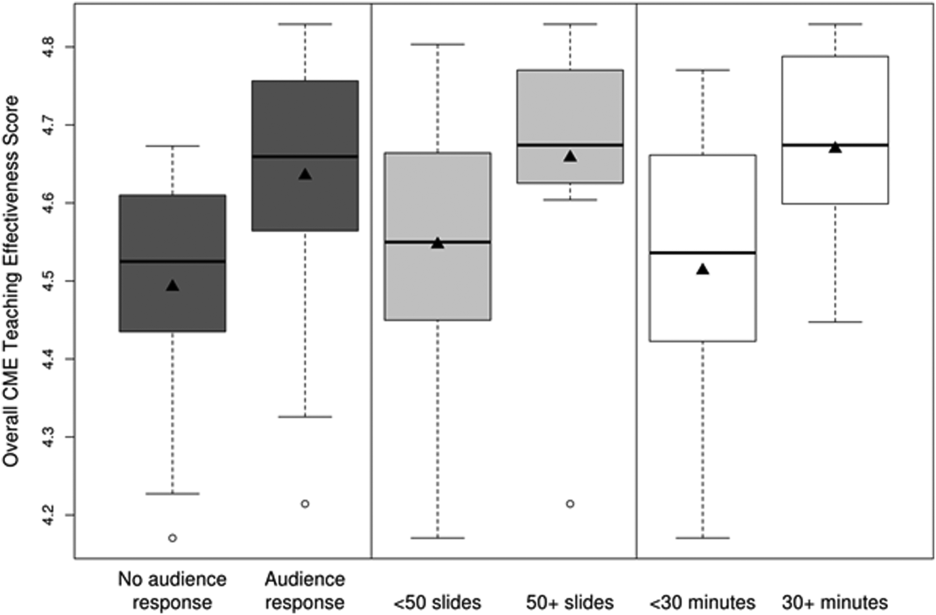

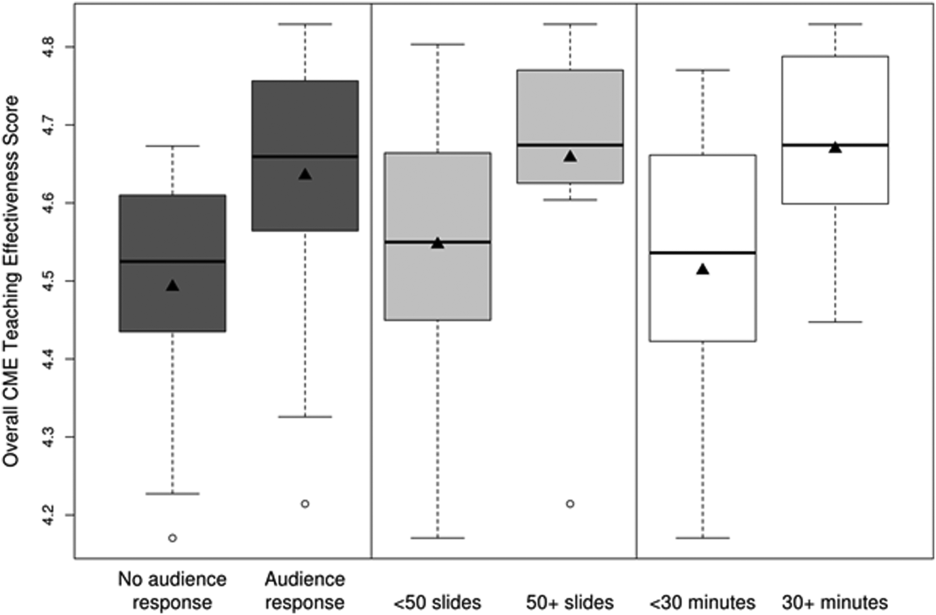

Teaching Effectiveness in HM

Hospital medicine (HM), the fastest growing medical specialty in the United States, includes more than 40,000 healthcare providers.[1] Hospitalists include practitioners from a variety of medical specialties, including internal medicine and pediatrics, and professional backgrounds such as physicians, nurse practitioners, and physician assistants.[2, 3] Originally defined as specialists of inpatient medicine, hospitalists must diagnose and manage a wide variety of clinical conditions, coordinate transitions of care, provide perioperative management to surgical patients, and contribute to quality improvement and hospital administration.[4, 5]