Chronicles of Cancer: A history of mammography, part 2

The push and pull of social forces

Science and technology emerge from and are shaped by social forces outside the laboratory and clinic. This is an essential fact of most new medical technology. In the Chronicles of Cancer series, part 1 of the story of mammography focused on the technological determinants of its development and use. Part 2 will focus on some of the social forces that shaped the development of mammography.

“Few medical issues have been as controversial – or as political, at least in the United States – as the role of mammographic screening for breast cancer,” according to Donald A. Berry, PhD, a biostatistician at the University of Texas MD Anderson Cancer Center, Houston.1

In fact, technology aside, the history of mammography has been and remains rife with controversy on the one side and vigorous promotion on the other, all enmeshed within the War on Cancer, corporate and professional interests, and the women’s rights movement’s growing issues with what was seen as a patriarchal medical establishment.

Today the issue of conflicts of interest is paramount in any discussion of new medical developments, from the early preclinical stages to ultimate deployment. Then, as now, professional and advocacy societies had a profound influence on government and social decision-making. But in that earlier, more trusting era, buoyed by the amazing changes that technology was bringing to everyday life and by an unshakable belief in “progress,” science and the medical community held far more effective sway over the beliefs and behavior of the general population.

Women’s health observed

Although the main focus of the women’s movement with regard to breast cancer was a struggle against the common practice of routine radical mastectomies and a push toward breast-conserving surgeries, the issue of preventive care and screening with regard to women’s health was also a major concern.

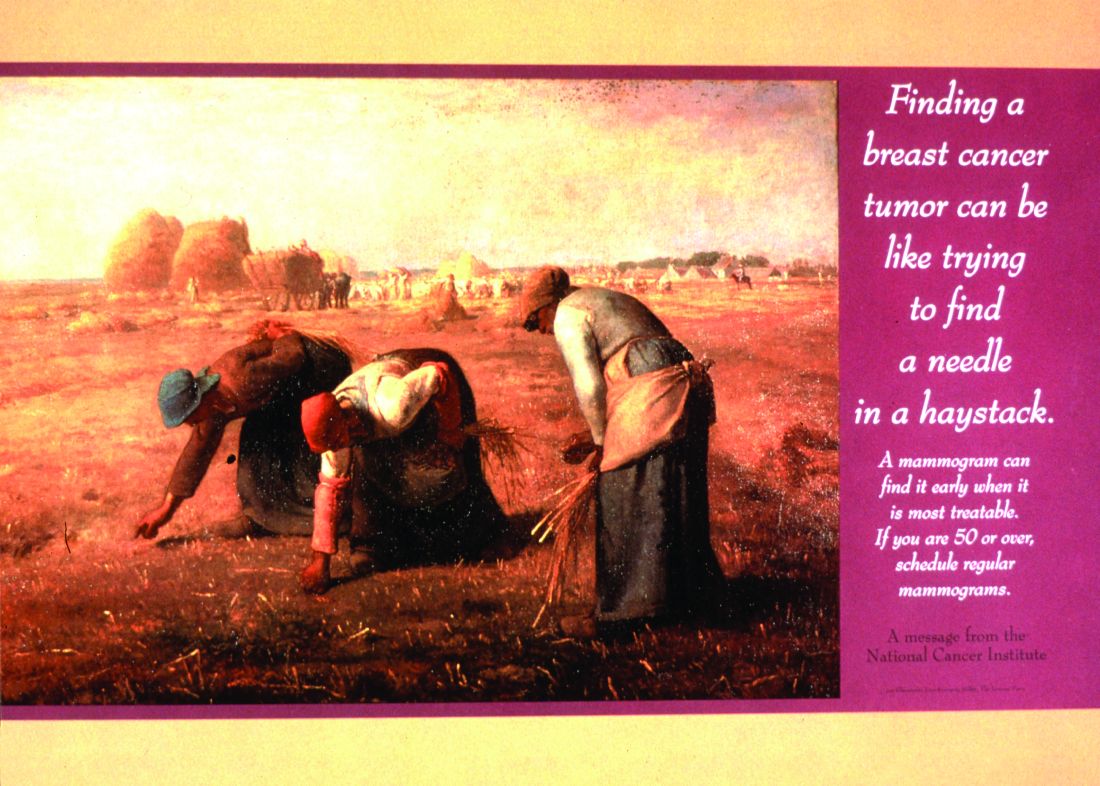

Regarding mammography, early enthusiasm in the medical community and among the general public was profound. In 1969, Robert Egan described how mammography had a “certain magic appeal.” The patient, he continued, “feels something special is being done for her.” Women whose cancers had been discovered on a mammogram praised radiologists as heroes who had saved their lives.2

In that era, however, beyond the confines of the doctor’s office, mammography and breast cancer remained topics not discussed among the public at large, despite efforts by the American Cancer Society to change this.

ACS weighs in

Various groups had been promoting the benefits of breast self-examination since the 1930s, and in 1947, the American Cancer Society launched an awareness campaign, “Look for a Lump or Thickening in the Breast,” instructing women to perform a monthly breast self-exam. Between self-examination and clinical breast examinations in physicians’ offices, the ACS believed that smaller and more treatable breast cancers could be discovered.

In 1972, the ACS, working with the National Cancer Institute (NCI), inaugurated the Breast Cancer Detection Demonstration Project (BCDDP), which planned to screen over a quarter of a million American women for breast cancer. The initiative was a direct outgrowth of the National Cancer Act of 1971,3 the key legislation of the War on Cancer, promoted by President Richard Nixon in his State of the Union address in 1971. The act greatly expanded the authority and funding of the National Cancer Institute, which had been established in 1937.

Arthur I. Holleb, MD, ACS senior vice president for medical affairs and research, announced that, “[T]he time has come for the American Cancer Society to mount a massive program on mammography just as we did with the Pap test,”2 according to Barron Lerner, MD, whose book “The Breast Cancer Wars” provides a history of the long-term controversies involved.4

The Pap test, widely promulgated in the 1950s and 1960s, had produced a decline in mortality from cervical cancer.

Regardless of the lack of data on effectiveness at earlier ages, the ACS chose to include women as young as 35 in the BCDDP in order “to inculcate them with ‘good health habits’ ” and “to make our screenee want to return periodically and to want to act as a missionary to bring other women into the screening process.”2

Celebrity status matters

All of the elements of a social revolution in the use of mammography were in place in the late 1960s, but the final triggers to raise social consciousness were the cases of several high-profile female celebrities. In 1973, beloved former child star Shirley Temple Black revealed her breast cancer diagnosis and mastectomy in an era when public discussion of cancer – especially breast cancer – was rare.4

But it wasn’t until 1974 that public awareness and media coverage exploded, sparked by the impact of First Lady Betty Ford’s outspokenness on her own experience of breast cancer. “In obituaries prior to the 1950s and 1960s, women who died from breast cancer were often listed as dying from ‘a prolonged disease’ or ‘a woman’s disease,’ ” according to Tasha Dubriwny, PhD, now an associate professor of communication and women’s and gender studies at Texas A&M University, College Station, when interviewed by the American Association for Cancer Research.5 Betty Ford openly addressed her breast cancer diagnosis and treatment and became a prominent advocate for early screening, transforming the landscape of breast cancer awareness. And although Betty Ford’s diagnosis was based on clinical examination rather than mammography, its boost to overall screening was indisputable.

“Within weeks [after Betty Ford’s announcement] thousands of women who had been reluctant to examine their breasts inundated cancer screening centers,” according to a 1987 article in the New York Times.6 Among these women was Happy Rockefeller, the wife of Vice President Nelson A. Rockefeller. Happy Rockefeller also found upon screening that she had breast cancer and, alongside Betty Ford, became another icon for breast cancer screening.

“Ford’s lesson for other women was straightforward: Get a mammogram, which she had not done. The American Cancer Society and National Cancer Institute had recently mounted a demonstration project to promote the detection of breast cancer as early as possible, when it was presumed to be more curable. The degree to which women embraced Ford’s message became clear through the famous ‘Betty Ford blip.’ So many women got breast examinations and mammograms for the first time after Ford’s announcement that the actual incidence of breast cancer in the United States went up by 15 percent.”4

In a 1975 address to the American Cancer Society, Betty Ford said: “One day I appeared to be fine and the next day I was in the hospital for a mastectomy. It made me realize how many women in the country could be in the same situation. That realization made me decide to discuss my breast cancer operation openly, because I thought of all the lives in jeopardy. My experience and frank discussion of breast cancer did prompt many women to learn about self-examination, regular checkups, and such detection techniques as mammography. These are so important. I just cannot stress enough how necessary it is for women to take an active interest in their own health and body.”7

ACS guidelines evolve

It wasn’t until 1976 that the ACS issued its first major guidelines for mammography screening. The ACS suggested mammograms may be called for in women aged 35-39 if there was a personal history of breast cancer, and between ages 40 and 49 if their mother or sisters had a history of breast cancer. Women aged 50 years and older could have yearly screening. Thereafter, the use of mammography was encouraged more and more with each new set of recommendations.8

Between 1980 and 1982, these guidelines expanded to advising a baseline mammogram for women aged 35-39 years; that women consult with their physician between ages 40 and 49; and that women over 50 have a yearly mammogram.

Between 1983 and 1991, the recommendations were for a baseline mammogram for women aged 35-39 years; a mammogram every 1-2 years for women aged 40-49; and yearly mammograms for women aged 50 and up. The baseline mammogram recommendation was dropped in 1992.

Between 1997 and 2015, the recommendations were strengthened: yearly mammograms were now advised for women aged 40-49 years, as they continued to be for all women aged 50 years and older.

In October 2015, the ACS changed its recommendation to say that women aged 40-44 years should have the choice of initiating mammogram screening, and that the risks and benefits of doing so should be discussed with their physicians. Women aged 45 years and older were still recommended for yearly mammogram screening. That recommendation stands today.

Controversies arise over risk/benefit

The technology was not, however, universally embraced. “By the late 1970s, mammography had diffused much more widely but had become a source of tremendous controversy. On the one hand, advocates of the technology enthusiastically touted its ability to detect smaller, more curable cancers. On the other hand, critics asked whether breast x-rays, particularly for women aged 50 and younger, actually caused more harm than benefit.”2

In addition, meta-analyses of the nine major screening trials conducted between 1965 and 1991 indicated that the reduced breast cancer mortality with screening was dependent on age. In particular, the results for women aged 40-49 years and 50-59 years showed only borderline statistical significance, and they varied depending on how cases were accrued in individual trials.

“Assuming that differences actually exist, the absolute breast cancer mortality reduction per 10,000 women screened for 10 years ranged from 3 for age 39-49 years; 5-8 for age 50-59 years; and 12-21 for age 60-69 years,” according to a review by the U.S. Preventive Services Task Force.9

The estimates for the group aged 70-74 years were limited by low numbers of events in trials that had smaller numbers of women in this age group.
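The absolute reductions quoted above can be restated as the approximate number of women who would need to be screened for 10 years to avert one breast cancer death. The following is a minimal Python sketch of that arithmetic, assuming the simple reciprocal of the quoted absolute reduction is an adequate approximation. The function and variable names are illustrative only and do not come from the Task Force review.

```python
from typing import Tuple

# Breast cancer deaths averted per 10,000 women screened for 10 years,
# as (low, high) ranges taken from the quotation in the text.
DEATHS_AVERTED_PER_10000 = {
    "39-49": (3, 3),
    "50-59": (5, 8),
    "60-69": (12, 21),
}

PER = 10_000  # denominator used in the quoted figures


def number_needed_to_screen(deaths_averted: float, per: int = PER) -> float:
    """Women screened for 10 years per breast cancer death averted."""
    if deaths_averted <= 0:
        raise ValueError("deaths_averted must be positive")
    return per / deaths_averted


def nns_range(low: float, high: float) -> Tuple[int, int]:
    """Return (fewest, most) women screened per death averted, rounded.

    The larger reduction gives the smaller number needed to screen.
    """
    return (
        round(number_needed_to_screen(high)),
        round(number_needed_to_screen(low)),
    )


if __name__ == "__main__":
    for age, (low, high) in DEATHS_AVERTED_PER_10000.items():
        fewest, most = nns_range(low, high)
        print(f"age {age}: about {fewest} to {most} women screened per death averted")
```

Under this approximation, the quoted figures correspond to roughly 3,333 women screened per death averted at ages 39-49 years, 1,250 to 2,000 at ages 50-59 years, and 476 to 833 at ages 60-69 years.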

Age has continued to be a major factor in determining the cost/benefit of routine mammography screening, with the American College of Physicians stating in its 2019 guidelines, “The potential harms outweigh the benefits in most women aged 40 to 49 years,” and adding, “In average-risk women aged 75 years or older or in women with a life expectancy of 10 years or less, clinicians should discontinue screening for breast cancer.”10

A Cochrane Report from 2013 was equally critical: “If we assume that screening reduces breast cancer mortality by 15% after 13 years of follow-up and that overdiagnosis and overtreatment is at 30%, it means that for every 2,000 women invited for screening throughout 10 years, one will avoid dying of breast cancer and 10 healthy women, who would not have been diagnosed if there had not been screening, will be treated unnecessarily. Furthermore, more than 200 women will experience important psychological distress including anxiety and uncertainty for years because of false positive findings.”11

Conflicting voices exist

These reports advising a more nuanced evaluation of the benefits of mammography, however, were received with skepticism from doctors committed to the vision of breast cancer screening and convinced by anecdotal evidence in their own practices.

These reports were also in direct contradiction to recommendations made in 1997 by the National Cancer Institute, which recommended screening mammograms every 3 years for women aged 40-49 years at average risk of breast cancer.

Such scientific vacillation has contributed to a love/hate relationship with mammography in the mainstream media, fueling new controversies with regard to breast cancer screening, sometimes as much driven by public suspicion and political advocacy as by scientific evolution.

Vocal opponents of universal mammography screening arose throughout the years, and even the cases of Betty Ford and Happy Rockefeller have been called into question as iconic demonstrations of the effectiveness of screening. And although not directly linked to the issue of screening, the rebellion against the routine use of radical mastectomies, a technique pioneered by Halsted in 1894 and in continuing use into the modern era, sparked outrage in women’s rights activists who saw it as evidence of a patriarchal medical establishment making arbitrary decisions concerning women’s bodies. For example, feminist and breast cancer activist Rose Kushner argued against the unnecessary disfigurement of women’s bodies and urged the use and development of less drastic techniques, including partial mastectomies and lumpectomies as viable choices. And these choices were increasingly supported by the medical community as safe and effective alternatives for many patients.12

A 2015 paper in the Journal of the Royal Society of Medicine was bluntly titled “Mammography screening is harmful and should be abandoned.”13 According to the author, who was the editor of the 2013 Cochrane Report, “I believe that if screening had been a drug, it would have been withdrawn from the market long ago.” And the popular press has not been shy about weighing in on the controversy, driven, in part, by the lack of consensus and continually changing guidelines, with major publications such as U.S. News and World Report, the Washington Post, and others addressing the issue over the years. And even public advocacy groups such as the Susan G. Komen organization14 are supporting the more modern professional guidelines in taking a more nuanced approach to the discussion of risks and benefits for individual women.

In 2014, the Swiss Medical Board, a nationally appointed body, recommended that new mammography screening programs should not be instituted in that country and that limits be placed on current programs because of the imbalance between risks and benefits of mammography screening.15 And a study done in Australia in 2020 agreed, stating, “Using data of 30% overdiagnosis of women aged 50 to 69 years in the NSW [New South Wales] BreastScreen program in 2012, we calculated an Australian ratio of harm of overdiagnosis to benefit (breast cancer deaths avoided) of 15:1 and recommended stopping the invitation to screening.”16

Conclusion

If nothing else, the history of mammography shows that the interconnection of social factors with the rise of a medical technology can have profound impacts on patient care. Technology developed by men for women became a touchstone of resentment in a world ever more aware of sex and gender biases in everything from the conduct of clinical trials to the care (or lack thereof) of women with heart disease. Tied for so many years to a radically disfiguring and drastic form of surgery that affected what many felt to be a hallmark and representation of womanhood,1,17 mammography also carried the weight of both the real and imaginary fears of radiation exposure.

Well into its development, the technology still found itself under intense public scrutiny, and was enmeshed in a continual media circus, with ping-ponging discussions of risk/benefit in the scientific literature fueling complaints by many of the dominance of a patriarchal medical community over women’s bodies.

With guidelines for mammography still evolving, questions still remaining, and new technologies such as digital imaging falling short in their hoped-for promise, the story remains unfinished, and the future still uncertain. One thing remains clear, however: In the right circumstances, with the right patient population, and properly executed, mammography has saved lives when tied to effective, early treatment, whatever its flaws and failings. This truth goes hand in hand with another reality: It may have also contributed to considerable unanticipated harm through overdiagnosis and overtreatment.

Overall, the history of mammography is a cautionary tale for the entire medical community and for the development of new medical technologies. The push-pull of the demand for progress to save lives and the slowness and often inconclusiveness of scientific studies that validate new technologies create gray areas, where social determinants and professional interests vie in an information vacuum for control of the narrative of risks vs. benefits.

The story of mammography is not yet concluded, and may never be, especially given the unlikelihood of conducting the massive randomized clinical trials that would be needed to settle the issue. It is more likely to remain controversial, at least until the technology of mammography becomes obsolete, replaced by something new and different, which will likely start the push-pull cycle all over again.

And regardless of the risks and benefits of mammography screening, the question of how breast cancer is treated once it has been identified is perhaps of even greater import.

References

1. Berry DA. The Breast. 2013;22[Supplement 2]:S73-S76.

2. Lerner BH. “To See Today With the Eyes of Tomorrow: A History of Screening Mammography.” Background paper for the Institute of Medicine report Mammography and Beyond: Developing Technologies for the Early Detection of Breast Cancer. 2001.

3. NCI website. The National Cancer Act of 1971. www.cancer.gov/about-nci/overview/history/national-cancer-act-1971.

4. Lerner BH. The Huffington Post, Sep. 26, 2014.

5. Wu C. Cancer Today. 2012;2(3): Sep. 27.

6. The New York Times. Oct. 17, 1987.

7. Ford B. Remarks to the American Cancer Society. 1975.

8. The American Cancer Society website. History of ACS Recommendations for the Early Detection of Cancer in People Without Symptoms.

9. Nelson HD et al. Screening for Breast Cancer: A Systematic Review to Update the 2009 U.S. Preventive Services Task Force Recommendation. 2016; Evidence Syntheses, No. 124; pp.29-49.

10. Qaseem A et al. Annals of Internal Medicine. 2019;170(8):547-60.

11. Gotzsche PC et al. Cochrane Report 2013.

12. Lerner BH. West J Med. May 2001;174(5):362-5.

13. Gotzsche PC. J R Soc Med. 2015;108(9):341-5.

14. Susan G. Komen website. Weighing the Benefits and Risks of Mammography.

15. Biller-Andorno N et al. N Engl J Med. 2014;370:1965-7.

16. Burton R et al. JAMA Netw Open. 2020;3(6):e208249.

17. Webb C et al. Plast Surg. 2019;27(1):49-53.

Mark Lesney is the editor of Hematology News and the managing editor of MDedge.com/IDPractioner. He has a PhD in plant virology and a PhD in the history of science, with a focus on the history of biotechnology and medicine. He has worked as a writer/editor for the American Chemical Society, and has served as an adjunct assistant professor in the department of biochemistry and molecular & cellular biology at Georgetown University, Washington.

The push and pull of social forces

The push and pull of social forces

Science and technology emerge from and are shaped by social forces outside the laboratory and clinic. This is an essential fact of most new medical technology. In the Chronicles of Cancer series, part 1 of the story of mammography focused on the technological determinants of its development and use. Part 2 will focus on some of the social forces that shaped the development of mammography.

“Few medical issues have been as controversial – or as political, at least in the United States – as the role of mammographic screening for breast cancer,” according to Donald A. Berry, PhD, a biostatistician at the University of Texas MD Anderson Cancer Center, Houston.1

In fact, technology aside, the history of mammography has been and remains rife with controversy on the one side and vigorous promotion on the other, all enmeshed within the War on Cancer, corporate and professional interests, and the women’s rights movement’s growing issues with what was seen as a patriarchal medical establishment.

Today the issue of conflicts of interest are paramount in any discussion of new medical developments, from the early preclinical stages to ultimate deployment. Then, as now, professional and advocacy societies had a profound influence on government and social decision-making, but in that earlier, more trusting era, buoyed by the amazing changes that technology was bringing to everyday life and an unshakable commitment to and belief in “progress,” science and the medical community held a far more effective sway over the beliefs and behavior of the general population.

Women’s health observed

Although the main focus of the women’s movement with regard to breast cancer was a struggle against the common practice of routine radical mastectomies and a push toward breast-conserving surgeries, the issue of preventive care and screening with regard to women’s health was also a major concern.

Regarding mammography, early enthusiasm in the medical community and among the general public was profound. In 1969, Robert Egan described how mammography had a “certain magic appeal.” The patient, he continued, “feels something special is being done for her.” Women whose cancers had been discovered on a mammogram praised radiologists as heroes who had saved their lives.2

In that era, however, beyond the confines of the doctor’s office, mammography and breast cancer remained topics not discussed among the public at large, despite efforts by the American Cancer Society to change this.

ACS weighs in

Various groups had been promoting the benefits of breast self-examination since the 1930s, and in 1947, the American Cancer Society launched an awareness campaign, “Look for a Lump or Thickening in the Breast,” instructing women to perform a monthly breast self-exam. Between self-examination and clinical breast examinations in physicians’ offices, the ACS believed that smaller and more treatable breast cancers could be discovered.

In 1972, the ACS, working with the National Cancer Institute (NCI), inaugurated the Breast Cancer Detection Demonstration Project (BCDDP), which planned to screen over a quarter of a million American women for breast cancer. The initiative was a direct outgrowth of the National Cancer Act of 1971,3 the key legislation of the War on Cancer, promoted by President Richard Nixon in his State of the Union address in 1971 and responsible for the creation of the National Cancer Institute.

Arthur I. Holleb, MD, ACS senior vice president for medical affairs and research, announced that, “[T]he time has come for the American Cancer Society to mount a massive program on mammography just as we did with the Pap test,”2 according to Barron Lerner, MD, whose book “The Breast Cancer Wars” provides a history of the long-term controversies involved.4

The Pap test, widely promulgated in the 1950s and 1960s, had produced a decline in mortality from cervical cancer.

Regardless of the lack of data on effectiveness at earlier ages, the ACS chose to include women as young as 35 in the BCDDP in order “to inculcate them with ‘good health habits’ ” and “to make our screenee want to return periodically and to want to act as a missionary to bring other women into the screening process.”2

Celebrity status matters

All of the elements of a social revolution in the use of mammography were in place in the late 1960s, but the final triggers to raise social consciousness were the cases of several high-profile female celebrities. In 1973, beloved former child star Shirley Temple Black revealed her breast cancer diagnosis and mastectomy in an era when public discussion of cancer – especially breast cancer – was rare.4

But it wasn’t until 1974 that public awareness and media coverage exploded, sparked by the impact of First Lady Betty Ford’s outspokenness on her own experience of breast cancer. “In obituaries prior to the 1950s and 1960s, women who died from breast cancer were often listed as dying from ‘a prolonged disease’ or ‘a woman’s disease,’ ” according to Tasha Dubriwny, PhD, now an associate professor of communication and women’s and gender studies at Texas A&M University, College Station, when interviewed by the American Association for Cancer Research.5Betty Ford openly addressed her breast cancer diagnosis and treatment and became a prominent advocate for early screening, transforming the landscape of breast cancer awareness. And although Betty Ford’s diagnosis was based on clinical examination rather than mammography, its boost to overall screening was indisputable.

“Within weeks [after Betty Ford’s announcement] thousands of women who had been reluctant to examine their breasts inundated cancer screening centers,” according to a 1987 article in the New York Times.6 Among these women was Happy Rockefeller, the wife of Vice President Nelson A. Rockefeller. Happy Rockefeller also found that she had breast cancer upon screening, and with Betty Ford would become another icon thereafter for breast cancer screening.

“Ford’s lesson for other women was straightforward: Get a mammogram, which she had not done. The American Cancer Society and National Cancer Institute had recently mounted a demonstration project to promote the detection of breast cancer as early as possible, when it was presumed to be more curable. The degree to which women embraced Ford’s message became clear through the famous ‘Betty Ford blip.’ So many women got breast examinations and mammograms for the first time after Ford’s announcement that the actual incidence of breast cancer in the United States went up by 15 percent.”4

In a 1975 address to the American Cancer Society, Betty Ford said: “One day I appeared to be fine and the next day I was in the hospital for a mastectomy. It made me realize how many women in the country could be in the same situation. That realization made me decide to discuss my breast cancer operation openly, because I thought of all the lives in jeopardy. My experience and frank discussion of breast cancer did prompt many women to learn about self-examination, regular checkups, and such detection techniques as mammography. These are so important. I just cannot stress enough how necessary it is for women to take an active interest in their own health and body.”7

ACS guidelines evolve

It wasn’t until 1976 that the ACS issued its first major guidelines for mammography screening. The ACS suggested mammograms may be called for in women aged 35-39 if there was a personal history of breast cancer, and between ages 40 and 49 if their mother or sisters had a history of breast cancer. Women aged 50 years and older could have yearly screening. Thereafter, the use of mammography was encouraged more and more with each new set of recommendations.8

Between 1980 and 1982, these guidelines expanded to advising a baseline mammogram for women aged 35-39 years; that women consult with their physician between ages 40 and 49; and that women over 50 have a yearly mammogram.

Between 1983 and 1991, the recommendations were for a baseline mammogram for women aged 35-39 years; a mammogram every 1-2 years for women aged 40-49; and yearly mammograms for women aged 50 and up. The baseline mammogram recommendation was dropped in 1992.

Between 1997 and 2015, the stakes were upped, and women aged 40-49 years were now recommended to have yearly mammograms, as were still all women aged 50 years and older.

In October 2015, the ACS changed their recommendation to say that women aged 40-44 years should have the choice of initiating mammogram screening, and that the risks and benefits of doing so should be discussed with their physicians. Women aged 45 years and older were still recommended for yearly mammogram screening. That recommendation stands today.

Controversies arise over risk/benefit

The technology was not, however, universally embraced. “By the late 1970s, mammography had diffused much more widely but had become a source of tremendous controversy. On the one hand, advocates of the technology enthusiastically touted its ability to detect smaller, more curable cancers. On the other hand, critics asked whether breast x-rays, particularly for women aged 50 and younger, actually caused more harm than benefit.”2

In addition, meta-analyses of the nine major screening trials conducted between 1965 and 1991 indicated that the reduced breast cancer mortality with screening was dependent on age. In particular, the results for women aged 40-49 years and 50-59 years showed only borderline statistical significance, and they varied depending on how cases were accrued in individual trials.

“Assuming that differences actually exist, the absolute breast cancer mortality reduction per 10,000 women screened for 10 years ranged from 3 for age 39-49 years; 5-8 for age 50-59 years; and 12-21 for age 60=69 years,” according to a review by the U.S. Preventive Services Task Force.9

The estimates for the group aged 70-74 years were limited by low numbers of events in trials that had smaller numbers of women in this age group.

Age has continued to be a major factor in determining the cost/benefit of routine mammography screening, with the American College of Physicians stating in its 2019 guidelines, “The potential harms outweigh the benefits in most women aged 40 to 49 years,” and adding, “In average-risk women aged 75 years or older or in women with a life expectancy of 10 years or less, clinicians should discontinue screening for breast cancer.”10

A Cochrane Report from 2013 was equally critical: “If we assume that screening reduces breast cancer mortality by 15% after 13 years of follow-up and that overdiagnosis and overtreatment is at 30%, it means that for every 2,000 women invited for screening throughout 10 years, one will avoid dying of breast cancer and 10 healthy women, who would not have been diagnosed if there had not been screening, will be treated unnecessarily. Furthermore, more than 200 women will experience important psychological distress including anxiety and uncertainty for years because of false positive findings.”11

Conflicting voices exist

These reports advising a more nuanced evaluation of the benefits of mammography, however, were received with skepticism from doctors committed to the vision of breast cancer screening and convinced by anecdotal evidence in their own practices.

These reports were also in direct contradiction to recommendations made in 1997 by the National Cancer Institute, which recommended screening mammograms every 3 years for women aged 40-49 years at average risk of breast cancer.

Such scientific vacillation has contributed to a love/hate relationship with mammography in the mainstream media, fueling new controversies with regard to breast cancer screening, sometimes as much driven by public suspicion and political advocacy as by scientific evolution.

Vocal opponents of universal mammography screening arose throughout the years, and even the cases of Betty Ford and Happy Rockefeller have been called into question as iconic demonstrations of the effectiveness of screening. And although not directly linked to the issue of screening, the rebellion against the routine use of radical mastectomies, a technique pioneered by Halsted in 1894 and in continuing use into the modern era, sparked outrage in women’s rights activists who saw it as evidence of a patriarchal medical establishment making arbitrary decisions concerning women’s bodies. For example, feminist and breast cancer activist Rose Kushner argued against the unnecessary disfigurement of women’s bodies and urged the use and development of less drastic techniques, including partial mastectomies and lumpectomies as viable choices. And these choices were increasingly supported by the medical community as safe and effective alternatives for many patients.12

A 2015 paper in the Journal of the Royal Society of Medicine was bluntly titled “Mammography screening is harmful and should be abandoned.”13 According to the author, who was the editor of the 2013 Cochrane Report, “I believe that if screening had been a drug, it would have been withdrawn from the market long ago.” And the popular press has not been shy at weighing in on the controversy, driven, in part, by the lack of consensus and continually changing guidelines, with major publications such as U.S. News and World Report, the Washington Post, and others addressing the issue over the years. And even public advocacy groups such as the Susan G. Komen organization14 are supporting the more modern professional guidelines in taking a more nuanced approach to the discussion of risks and benefits for individual women.

In 2014, the Swiss Medical Board, a nationally appointed body, recommended that new mammography screening programs should not be instituted in that country and that limits be placed on current programs because of the imbalance between risks and benefits of mammography screening.15 And a study done in Australia in 2020 agreed, stating, “Using data of 30% overdiagnosis of women aged 50 to 69 years in the NSW [New South Wales] BreastScreen program in 2012, we calculated an Australian ratio of harm of overdiagnosis to benefit (breast cancer deaths avoided) of 15:1 and recommended stopping the invitation to screening.”16

Conclusion

If nothing else, the history of mammography shows that the interconnection of social factors with the rise of a medical technology can have profound impacts on patient care. Technology developed by men for women became a touchstone of resentment in a world ever more aware of sex and gender biases in everything from the conduct of clinical trials to the care (or lack thereof) of women with heart disease. Tied for so many years to a radically disfiguring and drastic form of surgery that affected what many felt to be a hallmark and representation of womanhood,1,17 mammography also carried the weight of both the real and imaginary fears of radiation exposure.

Well into its development, the technology still found itself under intense public scrutiny, and was enmeshed in a continual media circus, with ping-ponging discussions of risk/benefit in the scientific literature fueling complaints by many of the dominance of a patriarchal medical community over women’s bodies.

With guidelines for mammography still evolving, questions still remaining, and new technologies such as digital imaging falling short in their hoped-for promise, the story remains unfinished, and the future still uncertain. One thing remains clear, however: In the right circumstances, with the right patient population, and properly executed, mammography has saved lives when tied to effective, early treatment, whatever its flaws and failings. This truth goes hand in hand with another reality: It may have also contributed to considerable unanticipated harm through overdiagnosis and overtreatment.

Overall, the history of mammography is a cautionary tale for the entire medical community and for the development of new medical technologies. The push-pull between the demand for progress to save lives and the slowness and frequent inconclusiveness of the scientific studies that validate new technologies creates gray areas, where social determinants and professional interests vie in an information vacuum for control of the narrative of risks vs. benefits.

The story of mammography is not yet concluded, and may never be, especially given the unlikelihood of conducting the massive randomized clinical trials that would be needed to settle the issue. It is more likely to remain controversial, at least until the technology of mammography becomes obsolete, replaced by something new and different, which will likely start the push-pull cycle all over again.

And regardless of the risks and benefits of mammography screening, the issue of treatment once breast cancer is identified is perhaps of even greater import.

References

1. Berry DA. The Breast. 2013;22[Supplement 2]:S73-S76.

2. Lerner BH. “To See Today With the Eyes of Tomorrow: A History of Screening Mammography.” Background paper for the Institute of Medicine report Mammography and Beyond: Developing Technologies for the Early Detection of Breast Cancer. 2001.

3. NCI website. The National Cancer Act of 1971. www.cancer.gov/about-nci/overview/history/national-cancer-act-1971.

4. Lerner BH. The Huffington Post, Sep. 26, 2014.

5. Wu C. Cancer Today. 2012;2(3): Sep. 27.

6. The New York Times. Oct. 17, 1987.

7. Ford B. Remarks to the American Cancer Society. 1975.

8. The American Cancer Society website. History of ACS Recommendations for the Early Detection of Cancer in People Without Symptoms.

9. Nelson HD et al. Screening for Breast Cancer: A Systematic Review to Update the 2009 U.S. Preventive Services Task Force Recommendation. 2016; Evidence Syntheses, No. 124; pp.29-49.

10. Qaseem A et al. Annals of Internal Medicine. 2019;170(8):547-60.

11. Gotzsche PC et al. Cochrane Report 2013.

12. Lerner BH. West J Med. May 2001;174(5):362-5.

13. Gotzsche PC. J R Soc Med. 2015;108(9):341-5.

14. Susan G. Komen website. Weighing the Benefits and Risks of Mammography.

15. Biller-Andorno N et al. N Engl J Med. 2014;370:1965-7.

16. Burton R et al. JAMA Netw Open. 2020;3(6):e208249.

17. Webb C et al. Plast Surg. 2019;27(1):49-53.

Mark Lesney is the editor of Hematology News and the managing editor of MDedge.com/IDPractioner. He has a PhD in plant virology and a PhD in the history of science, with a focus on the history of biotechnology and medicine. He has worked as a writer/editor for the American Chemical Society, and has served as an adjunct assistant professor in the department of biochemistry and molecular & cellular biology at Georgetown University, Washington.

Chronicles of cancer: A history of mammography, part 1

Technological imperatives

The history of mammography provides a powerful example of the connection between social factors and the rise of a medical technology. It is also an object lesson in the profound difficulties that the medical community faces when trying to evaluate and embrace new discoveries in such a complex area as cancer diagnosis and treatment, especially when tied to issues of sex-based bias and gender identity. Given its profound ties to women’s lives and women’s bodies, mammography holds a unique place in the history of cancer. Part 1 will examine the technological imperatives driving mammography forward, and part 2 will address the social factors that promoted and inhibited the developing technology.

All that glitters

Innovations in technology have contributed so greatly to the progress of medical science in saving and improving patients’ lives that the lure of new technology and the desire to see it succeed and to embrace it has become profound.

In a debate on the adoption of new technologies, Michael Rosen, MD, a surgeon at the Cleveland Clinic, Ohio, pointed out the inherent risks in the life cycle of medical technology: “The stages of surgical innovation have been well described as moving from the generation of a hypothesis with an early promising report to being accepted conclusively as a new standard without formal testing. As the life cycle continues and comparative effectiveness data begin to emerge slowly through appropriately designed trials, the procedure or device is often ultimately abandoned.”1

The history of mammography bears out this grim warning in example after example as an object lesson, revealing not only the difficulties involved in the development of new medical technologies, but also the profound problems involved in validating the effectiveness and appropriateness of a new technology from its inception to the present.

A modern failure?

In fact, one of the more modern developments in mammography technology – digital imaging – has recently been called into question with regard to its effectiveness in saving lives, even as the technology continues to spread throughout the medical community.

A recent meta-analysis has shown that there is little or no improvement in outcomes of breast cancer screening when using digital analysis and screening mammograms vs. traditional film recording.

The meta-analysis assessed 24 studies with a combined total of 16,583,743 screening examinations (10,968,843 film and 5,614,900 digital). The study found that the difference in cancer detection rate using digital rather than film screening showed an increase of only 0.51 detections per 1,000 screens.
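To put these figures in perspective, the arithmetic can be checked with a short sketch. It uses only the numbers quoted above; the variable and function names are illustrative, and the conversion to "screens per additional detection" is a simple reciprocal that assumes the pooled difference applies uniformly across screening programs, which the meta-analysis itself may not claim.

```python
# Figures quoted in the article's summary of the meta-analysis.
FILM_SCREENS = 10_968_843
DIGITAL_SCREENS = 5_614_900
REPORTED_TOTAL = 16_583_743
EXTRA_DETECTIONS_PER_1000 = 0.51  # digital vs. film, per 1,000 screens


def screens_per_extra_detection(extra_per_1000: float) -> float:
    """Average number of screens behind one additional detection.

    Assumption: the pooled rate difference is treated as uniform.
    """
    return 1000 / extra_per_1000


total_screens = FILM_SCREENS + DIGITAL_SCREENS
digital_share = DIGITAL_SCREENS / total_screens
screens_needed = screens_per_extra_detection(EXTRA_DETECTIONS_PER_1000)

print(f"Total screens: {total_screens:,}")
print(f"Digital share of screens: {digital_share:.1%}")
print(f"Screens per additional detection: {screens_needed:,.0f}")
```

Under these assumptions, the film and digital counts sum to the reported total, about one-third of the examinations were digital, and roughly 1,961 digital screens correspond to one additional cancer detected compared with film.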

The researchers concluded “that while digital mammography is beneficial for medical facilities due to easier storage and handling of images, these results suggest the transition from film to digital mammography has not resulted in health benefits for screened women.”2

In fact, the researchers added that “This analysis reinforces the need to carefully evaluate effects of future changes in technology, such as tomosynthesis, to ensure new technology leads to improved health outcomes and beyond technical gains.”2

None of the nine main randomized clinical trials that were used to determine the effectiveness of mammography screening from the 1960s to the 1990s used digital or 3-D digital mammography (digital breast tomosynthesis or DBT). The earliest trial used direct-exposure film mammography and the others relied upon screen-film mammography.3 And yet the assumptions of the validity of the new digital technologies were predicated on the generalized acceptance of the validity of screening derived from these studies, and a corollary assumption that any technological improvement in the quality of the image must inherently be an improvement of the overall results of screening.

The failure of new technologies to meet expectations is a sobering corrective to the high hopes of researchers, practitioners, and patient groups alike, and is perhaps destined to contribute more to the parallel history of controversy and distrust concerning the risk/benefits of mammography that has been a media and scientific mainstay.

Too often the history of medical technology has found disappointment at the end of the road for new discoveries. But although the disappointing results of digital screening might be considered a failure in the progress of mammography, it is likely just another pause on the road of this technology, the history of which has been rocky from the start.

The need for a new way of looking

The rationale behind the original and continuing development of mammography is a simple one, common to all cancer screening methods – the belief that the earlier the detection of a cancer, the more likely it is to be treated effectively with the therapeutic regimens at hand. While there is some controversy regarding the cost-benefit ratio of screening, especially when therapies for breast cancer are not perfect and vary widely in expense and availability globally, the driving belief has been that mammography provides an outcomes benefit in allowing early surgical and chemoradiation therapy with a curative intent.

There were two main driving forces behind the early development of mammography. The first was the highly lethal nature of breast cancer, especially when it was caught too late and had spread too far to benefit from surgery, the only therapeutic option available at the time. The second was the severity of that surgical treatment and the distressing number of women who faced the radical mastectomy procedure pioneered by physicians William Stewart Halsted (1852-1922) at Johns Hopkins University, Baltimore, and Willy Meyer (1858-1932) in New York.

In 1894, in an era when the development of anesthetics and antisepsis made ever more difficult surgical procedures possible without inevitably killing the patient, both men separately published their results of a highly extensive operation that consisted of removal of the breast, chest muscles, and axillary lymph nodes.

As long as there was no presurgical method of determining the extent of a breast cancer’s spread, much less an ability to visually distinguish malignant from benign growths, this “better safe than sorry” approach became the default for an increasing number of surgeons, and the drastic solution of radical mastectomy was applied ever more universally.

But in 1895, with the discovery of x-rays, medical science recognized a nearly miraculous technology for visualizing the inside of the body, and radioactive materials were also routinely used in medical therapies, by both legitimate practitioners and hucksters.

However, in the very early days, the users of x-rays were unaware that large radiation doses could have serious biological effects and had no way of determining radiation field strength and accumulating dosage.

In fact, early calibration of x-ray tubes was based on the amount of skin reddening (erythema) produced when the operator placed a hand directly in the x-ray beam.

It was in this environment that, within only a few decades, the new x-rays, especially with the development of improvements in mammography imaging, were able in many cases to identify smaller, more curable breast cancers. This eventually allowed surgeons to develop and use less extensive operations than the highly disfiguring radical mastectomy that was simultaneously dreaded for its invasiveness and embraced for its life-saving potential.4

Pioneering era

The technological history of mammography was thus driven by the quest for better imaging and reproducibility in order to further the hopes of curative surgical approaches.

In 1913, the German surgeon Albert Salomon (1883-1976) was the first to detect breast cancer using x-rays, but its clinical use was not established, as the images published in his “Beiträge zur Pathologie und Klinik der Mammakarzinome (Contributions to the pathology and clinic of breast cancers)” were photographs of postsurgical breast specimens that illustrated the anatomy and spread of breast cancer tumors but were not adapted to presurgical screening.

After Salomon’s work was published in 1913, there was no new mammography literature published until 1927, when German surgeon Otto Kleinschmidt (1880-1948) published a report describing the world’s first authentic mammography, which he attributed to his mentor, the plastic surgeon Erwin Payr (1871-1946).5

This was followed soon after in 1930 by the work of radiologist Stafford L. Warren (1896-1981), of the University of Rochester (N.Y.), who published a paper on the use of standard roentgenograms for the in vivo preoperative assessment of breast malignancies. His technique involved the use of a stereoscopic system with a grid mechanism and intensifying screens to amplify the image. Breast compression was not involved in his mammogram technique. “Dr. Warren claimed to be correct 92% of the time when using this technique to predict malignancy.”5

His study of 119 women with a histopathologic diagnosis (61 benign and 58 malignant) demonstrated the feasibility of the technique for routine use and “created a surge of interest.”6

But the technology of the time proved difficult to use and the results difficult to reproduce from laboratory to laboratory, and the technique ultimately did not gain wide acceptance. Among Warren’s other claims to fame, he was a participant in the Manhattan Project and was a member of the teams sent to assess radiation damage in Hiroshima and Nagasaki after the dropping of the atomic bombs.

And in fact, future developments in mammography and all other x-ray screening techniques included attempts to minimize radiation exposure; such attempts were driven, in part, by the tragic impact of atomic bomb radiation and the medical studies carried out on the survivors.

An image more deadly than the disease

Further improvements in mammography technique occurred through the 1930s and 1940s, including better visualization of the mammary ducts based upon the pioneering studies of Emil Ries, MD, in Chicago, who, along with Nymphus Frederick Hicken, MD (1900-1998), reported on the use of contrast mammography (also known as ductography or galactography). On a side note, Dr. Hicken was responsible for introducing the terms mammogram and mammography in 1937.

Problems with ductography, which involved the injection of a radiographically opaque contrast agent into the nipple, occurred when the early contrast agents, such as oil-based lipiodol, proved to be toxic and capable of causing abscesses.7 These problems led to the development of other agents, and among the most popular at the time was one that would prove deadly to many.

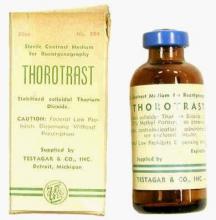

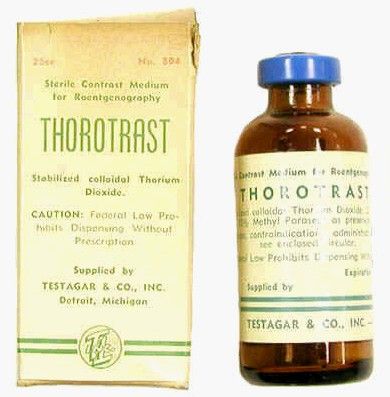

Thorotrast, first used in 1928, was widely embraced because of its lack of immediately noticeable side effects and the high-quality contrast it provided. Thorotrast was a suspension of radioactive thorium dioxide particles, which gained popularity for use as a radiological imaging agent from the 1930s to 1950s throughout the world, being used in an estimated 2-10 million radiographic exams, primarily for neurosurgery.

In the 1920s and 1930s, world governments had begun to recognize the dangers of radiation exposure, especially among workers, but Thorotrast was a unique case because, unbeknownst to most practitioners at the time, thorium dioxide was retained in the body for the lifetime of the patient, with 70% deposited in the liver, 20% in the spleen, and the remainder in the bony medulla and in the peripheral lymph nodes.

Nineteen years after the first use of Thorotrast, the first case of a human malignant tumor attributed to exposure to the agent was reported. “Besides the liver neoplasm cases, aplastic anemia, leukemia and an impressive incidence of chromosome aberrations were registered in exposed individuals.”8

Despite its widespread adoption elsewhere, especially in Japan, Thorotrast never became popular in the United States, in part because the American Medical Association issued warnings in 1932 and 1937 to restrict its use.9

There was a shift to the use of iodinated hydrophilic molecules as contrast agents for conventional x-ray, computed tomography, and fluoroscopy procedures.9 However, it was discovered that these agents, too, have their own risks and dangerous side effects. They can cause severe adverse effects, including allergies, cardiovascular diseases, and nephrotoxicity in some patients.

Slow adoption and limited results

Between 1930 and 1950, Dr. Warren, Jacob Gershon-Cohen, MD (1899-1971) of Philadelphia, and radiologist Raul Leborgne of Uruguay “spread the gospel of mammography as an adjunct to physical examination for the diagnosis of breast cancer.”4 Dr. Leborgne also developed the breast compression technique to produce better quality images and lower the radiation exposure needed, and described the differences that could be visualized between benign and malignant microcalcifications.

But despite the introduction of improvements such as double-emulsion film and breast compression to produce higher-quality images, “mammographic films often remained dark and hazy. Moreover, the new techniques, while improving the images, were not easily reproduced by other investigators and clinicians,” and therefore were still not widely adopted.4

Little noticeable effect of mammography

Although the technology existed and had its popularizers, mammography had little impact on an epidemiological level.

There was no major change in the mean maximum breast cancer tumor diameter and node positivity rate detected over the 20 years from 1929 to 1948.10 However, starting in the late 1940s, the American Cancer Society began public education campaigns and early detection education, and thereafter, there was a 3% decline in mean maximum tumor diameter every 10 years until 1968.

“We have interpreted this as the effect of public education and professional education about early detection through television, print media, and professional publications that began in 1947 because no other event was known to occur that would affect cancer detection beginning in the late 1940s.”10

However, the early detection methods at the time were self-examination and clinical examination for lumps, with mammography remaining a relatively limited tool until its general acceptance broadened a few decades later.

Robert Egan, “Father of Mammography,” et al.

The broad acceptance of mammography as a screening tool and its impacts on a broad population level resulted in large part from the work of Robert L. Egan, MD (1921-2001) in the late 1950s and 1960s.

Dr. Egan’s work was inspired in 1956 by a presentation by a visiting fellow, Jean Pierre Batiani, who brought a mammogram clearly showing a breast cancer from his institution, the Curie Foundation in Paris. The image had been made using very low kilovoltage, high tube currents, and fine-grain film.

Dr. Egan, then a resident in radiology, was given the task by the head of his department of reproducing the results.

In 1959, Dr. Egan, then at the University of Texas MD Anderson Cancer Center, Houston, published a technique for mammography that combined high milliamperage and low voltage with a fine-grain intensifying screen and single-emulsion films, thereby decreasing the radiation exposure significantly from previous x-ray techniques and improving the visualization and reproducibility of screening.

By 1960, Dr. Egan reported on 1,000 mammography cases at MD Anderson, demonstrating the ability of proper screening to detect unsuspected cancers and to limit mastectomies on benign masses. Of 245 breast cancers ultimately confirmed by biopsy, 238 were discovered by mammography, 19 of which were in women whose physical examinations had revealed no breast pathology. One of the cancers was only 8 mm in diameter when sectioned at biopsy.

Dr. Egan’s findings prompted an investigation by the Cancer Control Program (CCP) of the U.S. Public Health Service and led to a study jointly conducted by the National Cancer Institute, MD Anderson Hospital, and the CCP, which involved 24 institutions and 1,500 patients.

“The results showed a 21% false-negative rate and a 79% true-positive rate for screening studies using Egan’s technique. This was a milestone for women’s imaging in the United States. Screening mammography was off to a tentative start.”5

“Egan was the man who developed a smooth-riding automobile compared to a Model T. He put mammography on the map and made it an intelligible, reproducible study. In short, he was the father of modern mammography,” according to his professor, mentor, and fellow mammography pioneer Gerald Dodd, MD (Emory School of Medicine website biography).

In 1964, Dr. Egan published his definitive book, “Mammography,” and in 1965 he hosted a 30-minute audiovisual presentation describing his technique in detail.11

The use of mammography was further powered by improved methods of preoperative needle localization, pioneered by Richard H. Gold, MD, in 1963 at Jefferson Medical College, Philadelphia, which eased obtaining a tissue diagnosis for any suspicious lesions detected in the mammogram. Dr. Gold performed needle localization of nonpalpable, mammographically visible lesions before biopsy, which allowed surgical resection of a smaller volume of breast tissue than was possible before.

Throughout the era, there were also incremental improvements in mammography machines and an increase in the number of commercial manufacturers.

Xeroradiography, an imaging technique adapted from xerographic photocopying, was seen as a major improvement over direct film imaging, and the technology became popular throughout the 1970s based on the research of John N. Wolfe, MD (1923-1993), who worked closely with the Xerox Corporation to improve the breast imaging process.6 However, this technology had all the same problems associated with running an office copying machine, including paper jams and toner issues, and the worst aspect was the high dose of radiation required. For this reason, it would quickly be superseded by the use of screen-film mammography, which eventually completely replaced the use of both xeromammography and direct-exposure film mammography.

The march of mammography

A series of nine randomized clinical trials (RCTs) between the 1960s and 1990s formed the foundation of the clinical use of mammography. These studies enrolled more than 600,000 women in the United States, Canada, the United Kingdom, and Sweden. The nine main RCTs of breast cancer screening were the Health Insurance Plan of Greater New York (HIP) trial, the Edinburgh trial, the Canadian National Breast Screening Study, the Canadian National Breast Screening Study 2, the United Kingdom Age trial, the Stockholm trial, the Malmö Mammographic Screening Trial, the Gothenburg trial, and the Swedish Two-County Study.3

These trials incorporated improvements in the technology as it developed, as seen in the fact that the earliest, the HIP trial, used direct-exposure film mammography and the other trials used screen-film mammography.3

Meta-analyses of the nine major screening trials indicated that reduced breast cancer mortality with screening was dependent on age. In particular, the results for women aged 40-49 years and 50-59 years showed only borderline statistical significance, and they varied depending on how cases were accrued in individual trials. “Assuming that differences actually exist, the absolute breast cancer mortality reduction per 10,000 women screened for 10 years ranged from 3 for age 39-49 years; 5-8 for age 50-59 years; and 12-21 for age 60-69 years.”3 In addition, the estimates for women aged 70-74 years were limited by low numbers of events in trials that had smaller numbers of women in this age group.

However, at the time, the studies had a profound influence on increasing the popularity and spread of mammography.

As mammographies became more common, standardization became an important issue and a Mammography Accreditation Program began in 1987. Originally a voluntary program, it became mandatory with the Mammography Quality Standards Act of 1992, which required all U.S. mammography facilities to become accredited and certified.

In 1986, the American College of Radiology proposed its Breast Imaging Reporting and Data System (BI-RADS) initiative to enable standardized reporting of mammography; the first report was released in 1993.

BI-RADS is now on its fifth edition and has addressed the use of mammography, breast ultrasonography, and breast magnetic resonance imaging, developing standardized auditing approaches for all three techniques of breast cancer imaging.6

The digital era and beyond

With the dawn of the 21st century, the era of digital breast cancer screening began.

The screen-film mammography (SFM) technique employed throughout the 1980s and 1990s had significant advantages over earlier x-ray films in producing more vivid images of dense breast tissues. The next technology, digital mammography, was introduced in the late 20th century, and the first system was approved by the U.S. FDA in 2000.

One of the key benefits touted for digital mammograms is the fact that the radiologist can manipulate the contrast of the images, which allows for masses to be identified that might otherwise not be visible on standard film.

However, the recent meta-analysis discussed in the introduction calls such benefits into question, and a new controversy is likely to ensue on the question of the effectiveness of digital mammography on overall clinical outcomes.

But the technology continues to evolve.

“There has been a continuous and substantial technical development from SFM to full-field digital mammography and very recently also the introduction of digital breast tomosynthesis (DBT). This technical evolution calls for new evidence regarding the performance of screening using new mammography technologies, and the evidence needed to translate new technologies into screening practice,” according to an updated assessment by the U.S. Preventive Services Task Force.12