Opinion

Is Being ‘Manly’ a Threat to a Man’s Health?

- Author:

- F. Perry Wilson, MD, MSCE

We have empirical evidence that men downplay their medical symptoms — and that manlier men downplay them even more.

News

Why Cardiac Biomarkers Don’t Help Predict Heart Disease

- Author:

- F. Perry Wilson, MD, MSCE

Physician discusses recent study findings that cardiac biomarkers are unlikely to help risk-stratify patients.

Opinion

It Would Be Nice if Olive Oil Really Did Prevent Dementia

- Author:

- F. Perry Wilson, MD, MSCE

Does olive oil prevent dementia? Let’s weigh the evidence.

Opinion

Intermittent Fasting + HIIT: Fitness Fad or Fix?

- Author:

- F. Perry Wilson, MD, MSCE

Any lifestyle change is hard, but with persistence the changes become habits and, eventually, those habits do become pleasurable.

News

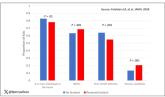

Are Women Better Doctors Than Men?

- Author:

- F. Perry Wilson, MD, MSCE

Study finds female hospitalists provided better care, defined as lower 30-day mortality, than male hospitalists.

News

‘Difficult Patient’: Stigmatizing Words and Medical Error

- Author:

- F. Perry Wilson, MD, MSCE

Dr. F. Perry Wilson comments on the potential for stigmatizing language in medical records to lead to missed diagnoses and poor outcomes.

Opinion

A Banned Chemical That Is Still Causing Cancer

- Author:

- F. Perry Wilson, MD, MSCE

This carcinogen ‘is still around: in our soil, in our food, and in our blood.’

Opinion

Vitamin D Supplements May Be a Double-Edged Sword

- Author:

- F. Perry Wilson, MD, MSCE

I can tell you that for your “average woman,” vitamin D supplementation likely has no effect on mortality.

Opinion

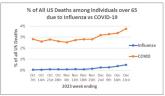

COVID-19 Is a Very Weird Virus

- Author:

- F. Perry Wilson, MD, MSCE

COVID may cause immune system dysfunction that puts patients at risk for autoimmunity.

News

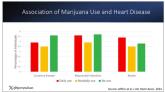

It Sure Looks Like Cannabis Is Bad for the Heart, Doesn’t It?

- Author:

- F. Perry Wilson, MD, MSCE

Dr. Wilson discusses recent research on cannabis and heart disease.

News

Bivalent Vaccines Protect Even Children Who’ve Had COVID

- Author:

- F. Perry Wilson, MD, MSCE

Doctor discusses the progression of understanding of COVID-19 and current vaccines.

News

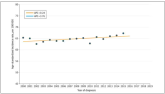

More Young Women Being Diagnosed With Breast Cancer Than Ever Before

- Author:

- F. Perry Wilson, MD, MSCE

Rates of breast cancer are up, including among young women, according to a study.

News

Even Intentional Weight Loss Linked With Cancer

- Author:

- F. Perry Wilson, MD, MSCE

Significant weight loss, even when intentional, can be indicative of cancer.

News

Testosterone Replacement May Cause ... Fracture?

- Author:

- F. Perry Wilson, MD, MSCE

Testosterone replacement linked to a possible increase in fractures.

News

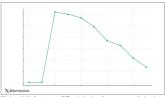

Yes, Patients Are Getting More Complicated

- Author:

- F. Perry Wilson, MD, MSCE

The average hospitalized patient is older, more likely to have kidney disease or diabetes, is on more medications, and has spent more time in the...