
Teaching Physical Examination to Medical Students on Inpatient Medicine Teams: A Prospective, Mixed-Methods Descriptive Study

1 Medical College of Wisconsin Affiliated Hospitals, Milwaukee, Wisconsin. At the time of this study, Dr. Bergl was with the Division of General Internal Medicine, Medical College of Wisconsin, Milwaukee, Wisconsin. 2 Medical College of Wisconsin, Milwaukee, Wisconsin.

Physical examination (PE) is a core clinical skill in undergraduate medical education.1 Although the optimal approach to teaching clinical skills is debated, robust preclinical curricula should generally be followed by iterative skill development during clinical rotations.2,3

The internal medicine rotation is a critical time to enhance PE skills. Diagnostic decision making and PE are highly prioritized competencies for the internal medicine clerkship,4 and students are likely to use many core examination skills1,2 during this rotation. Bedside teaching of PE on the internal medicine service also gives students an opportunity to receive feedback based on direct observation,5 a sine qua non of competency-based assessment.

Unfortunately, current internal medicine training environments limit opportunities for workplace-based instruction in PE. Recent studies suggest that less time is being spent on bedside patient care and teaching, with computer-based “indirect patient care” dominating much of the clinical workday on internal medicine services.6-8 However, the literature does not delineate how often medical students enhance their PE skills during clinical rotations, nor does it describe how the educational environment may influence PE teaching.

We aimed to describe the content and context of PE instruction within the workflow of the internal medicine clerkship. Specifically, we sought to explore the strategies physician team members used to teach PE to students. We also sought to describe factors in the inpatient learning environment that might explain why PE instruction occurs infrequently.

METHODS

We conducted a prospective mixed-methods study using time-motion analysis, checklists on clinical teaching, and daily open-ended observations written by a trained observer from June through August 2015 at a single academic medical center. Subjects were recruited from internal medicine teaching teams and could opt out. Teaching teams had 2 formats: (1) a traditional team with an attending physician (hospitalist or general internist), a senior resident, 2 interns, a fourth-year medical student, and 2 third-year students or (2) a hospitalist team in which a third-year student worked directly with a hospitalist and an advanced practitioner. The proposal was submitted to the Medical College of Wisconsin Institutional Review Board and deemed exempt from further review.

All observations were carried out by a single investigator (A.T.), who was a second-year medical student at the time. To train this observer and to pilot the data collection instruments, our lead investigator (P.B.) directly supervised our observer on 4 separate occasions, totaling over 12 hours of mentored co-observation. Immediately after each training session, both investigators (A.T. and P.B.) debriefed to compare notes, to review checklists on recorded observations, and to discuss areas of uncertainty. During the training period, formal metrics of agreement (eg, kappa coefficients) were not gathered, as data collection instruments were still being refined.
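Formal agreement statistics were not collected during observer training, so the study reports no kappa coefficient. For readers unfamiliar with the statistic named above, the following is a minimal sketch of Cohen's kappa for two co-observers coding the same encounters on a yes/no checklist item. The function name `cohens_kappa` and the ten ratings are hypothetical and illustrative only; they are not study data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who assigned categorical codes to the same items."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty lists of equal length")
    n = len(rater_a)
    # Observed agreement: share of items both raters coded identically.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal frequency for each code.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if p_expected == 1:
        # Degenerate case: both raters used one and the same code throughout.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical co-observer ratings for "student's PE was directly observed"
# across 10 encounters (illustrative only, not study data).
coder_a = ["yes", "no", "no", "yes", "no", "no", "no", "yes", "no", "no"]
coder_b = ["yes", "no", "no", "no", "no", "no", "no", "yes", "no", "yes"]
kappa = cohens_kappa(coder_a, coder_b)
print(f"kappa = {kappa:.2f}")
```

In this made-up example the raters agree on 8 of 10 encounters, but because both mark most encounters "no", chance agreement is already 0.58, and kappa falls to about 0.52. This is why raw percent agreement alone can overstate observer reliability.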

Observation periods were centered on third-year medical students and their interactions with patients and members of the teaching team. Observed activities included pre-rounding, teaching rounds with the attending physician, and new patient admissions during call days. Observations generally occurred between the hours of 7 AM and 6 PM, and we limited periods of observation to 3 consecutive hours to minimize observer fatigue. Observation periods were selected to maximize the number of subjects and teams observed, to adequately capture pre-rounding and new admissions activities, and to account for variations in rounding styles throughout the call cycle. Teams were excluded if a member of the study team was an attending physician on the clinical team or if any member of the patient care team had opted out of the study.

Data were collected on paper checklists that listed idealized bedside PE teaching activities. These activities were identified through a review of the relevant literature9,10 and were further informed by our senior investigator’s own experience with faculty development in this area11 and by team members’ attendance at bedside teaching workshops. At the end of each day, our observer also wrote brief observations summarizing factors that affected bedside teaching of PE. Checklist data were transferred to an Excel file (Microsoft), and written observations were imported into NVivo 10 (QSR International, Melbourne, Australia) for coding and analysis.

Checklist data were analyzed using simple descriptive statistics. We compared time spent on various types of rounding using ANOVA, and we used a two-tailed Student t-test to compare the amount of time students spent examining patients on pre-rounds versus new admissions. To assess differences in the frequency of PE teaching activities by location, we used chi-squared tests. Statistical analysis was performed using the built-in statistical functions of Microsoft Excel. A P value of <.05 was used as the cut-off for significance.

We analyzed the written observations using conventional qualitative content analysis. Two investigators (A.T. and P.B.) reviewed the written comments and used open coding to devise a preliminary inductive coding scheme. Codes were refined iteratively, and a schema of categories and nodes was outlined in a codebook that was periodically reviewed by the entire research team. The coding investigators met regularly to ensure consistency in coding, and a third team member remained available to reconcile significant disagreements in code definitions.

RESULTS

Eighty-one subjects participated in the study: 21 were attending physicians, 12 residents, 21 interns, 11 senior medical students, and 26 junior medical students. We observed 16 distinct inpatient teaching teams and 329 unique patient-related events (discussions and/or patient-clinician encounters), most of which were observed during attending rounds (269/329, or 82%). There were 123 encounters at the bedside, averaging 7 minutes each; 43 encounters in the hallway, averaging 8 minutes each; and 163 encounters in a workroom, averaging 7 minutes per patient discussion. We also observed 28 student-patient encounters during pre-round activities and 30 student-patient encounters during new admissions.

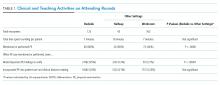

Teaching and Direct Observation

During 28 pre-rounding encounters, students usually examined the patient (26 of 28 instances, 93%) but were observed doing so only 4 times (4 of 26 instances, 15%). During 30 new patient admissions, students examined 27 patients (90%) and had their PE observed 6 times (6 of 27 instances, 22%). The frequency of these activities did not differ significantly between pre-rounds and new admissions (P > .05, chi-squared).
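The paper does not report the underlying 2 × 2 tables or whether a continuity correction was applied. The sketch below assumes each comparison was a Pearson chi-square test (without Yates correction) on counts taken from the paragraph above: examinations observed versus not observed, and patients examined versus not examined, on pre-rounds versus new admissions. The helper `chi_square_2x2` is hypothetical and uses only the Python standard library; for 1 degree of freedom, the upper-tail P value equals `erfc(sqrt(statistic / 2))`.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the 2x2 table
        [[a, b],
         [c, d]]
    Returns (statistic, upper-tail P value with 1 degree of freedom)."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be positive")
    # Shortcut formula for a 2x2 table: n(ad - bc)^2 / (product of margins).
    stat = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # A chi-square variable with 1 df is a squared standard normal, so
    # P(X >= stat) = erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Rows: pre-rounds, new admissions. Columns: PE observed, PE not observed.
observed = chi_square_2x2(4, 26 - 4, 6, 27 - 6)
# Rows: pre-rounds, new admissions. Columns: patient examined, not examined.
examined = chi_square_2x2(26, 28 - 26, 27, 30 - 27)
print(f"observation: chi2={observed[0]:.3f}, P={observed[1]:.2f}")
print(f"examination: chi2={examined[0]:.3f}, P={examined[1]:.2f}")
```

Under these assumptions both comparisons give P well above .05, consistent with the text. Several expected cell counts are below 5 (for example, about 4.9 expected observed examinations on pre-rounds), so Fisher's exact test would be a reasonable cross-check for tables this small.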

Observations on Teaching Strategies

In the written observations, we categorized the methods used to teach PE. Bedside teaching of PE most often involved teachers simply describing or discussing physical findings (42 mentions in observations) or verifying a student’s reported findings (15 mentions). Teachers also used bedside teaching to contextualize findings (13 mentions), such as relating the quality of bowel sounds to the patient’s constipation or discussing expected pupillary light reflexes in a neurologically intact patient. Less commonly, attending physicians narrated the steps of their PE technique (9 mentions). Students were infrequently encouraged to practice a specific PE skill again (7 mentions) or allowed to re-examine the patient and reconsider their initial interpretations (5 mentions).

DISCUSSION

This observational study of clinical teaching on internal medicine teaching services demonstrates that PE teaching is most likely to occur during bedside rounding. However, even in bedside encounters, most PE instruction is limited to physician team members pointing out significant findings. Although physical findings were mentioned for the majority of patients seen on rounds, attending physicians infrequently verified students’ or residents’ findings, demonstrated technique, or incorporated PE into clinical decision making. We witnessed an alarming dearth of direct observation of students and almost no real-time feedback as students performed PE. Thus, students rarely had opportunities to engage in higher-order learning activities related to PE on the internal medicine rotation.

We posit that the learning environment influenced PE instruction on the internal medicine rotation. To optimize inpatient teaching of PE, attending physicians need to consider the factors we identified in Table 2. Such teaching may be most effective with a smaller group of participants and without distractions from technology. Time constraints are a major perceived barrier to bedside teaching of PE, and our data support this concern: teams spent an average of only 7 minutes on each bedside encounter. However, many of the real-time PE teaching strategies we observed, such as validating learners’ findings or examining patients as a team, fit naturally into clinical routines and generally require no extra thought or preparation.

One of the key strengths of our study is the use of direct observation of students and their teachers. This study is unique in its exclusive focus on PE and in its description of factors affecting PE teaching activities on an internal medicine service. This observational, descriptive study also has obvious limitations. It was conducted at a single institution during a limited time period. Moreover, the study period (June through August), which was chosen based on our observer’s availability, included the transition to a new academic year (July 1, 2015), when medical students and residents were still becoming acclimated to their new roles. Additionally, the data were collected by a single researcher, and observer bias may have affected the qualitative analysis of the daily written observations.

In conclusion, this study highlights the infrequency of applied PE skills in the daily clinical and educational workflow of internal medicine teaching teams. These findings may reflect a more widespread problem in clinical education, and replication of our findings at other teaching centers could galvanize faculty development around bedside PE teaching.

Disclosures

Dr. Bergl has nothing to disclose. Ms. Taylor reports grant support from the Cohen Endowment for Medical Student Research at the Medical College of Wisconsin during the conduct of the study. Mrs. Klumb, Ms. Quirk, Dr. Muntz, and Dr. Fletcher have nothing to disclose.

Funding

This work was funded in part by the Cohen Endowment for Medical Student Research at the Medical College of Wisconsin.

1. Corbett E, Berkow R, Bernstein L, et al; on behalf of the AAMC Task Force on the Preclerkship Clinical Skills Education of Medical Students. Recommendations for clinical skills curricula for undergraduate medical education. Achieving excellence in basic clinical method through clinical skills education: The medical school clinical skills curriculum. Association of American Medical Colleges; 2008. https://www.aamc.org/download/130608/data/clinicalskills_oct09.qxd.pdf.pdf. Accessed July 12, 2017.

2. Gowda D, Blatt B, Fink MJ, Kosowicz LY, Baecker A, Silvestri RC. A core physical exam for medical students: Results of a national survey. Acad Med. 2014;89(3):436-442.

3. Uchida T, Farnan JM, Schwartz JE, Heiman HL. Teaching the physical examination: A longitudinal strategy for tomorrow’s physicians. Acad Med. 2014;89(3):373-375.

4. Fazio S, De Fer T, Goroll A. Core Medicine Clerkship Curriculum Guide: A resource for teachers and learners. Clerkship Directors in Internal Medicine and Society of General Internal Medicine; 2006. http://www.im.org/d/do/2285/. Accessed July 12, 2017.

5. Gonzalo J, Heist B, Duffy B, et al. Content and timing of feedback and reflection: A multi-center qualitative study of experienced bedside teachers. BMC Med Educ. 2014;14:212. doi:10.1186/1472-6920-14-212.

6. Stickrath C, Noble M, Prochazka A, et al. Attending rounds in the current era: What is and is not happening. JAMA Intern Med. 2013;173(12):1084-1089.

7. Block L, Habicht R, Wu AW, et al. In the wake of the 2003 and 2011 duty hours regulations, how do internal medicine interns spend their time? J Gen Intern Med. 2013;28(8):1042-1047.

8. Wenger N, Méan M, Castioni J, Marques-Vidal P, Waeber G, Garnier A. Allocation of internal medicine resident time in a Swiss Hospital: A time and motion study of day and evening shifts. Ann Intern Med. 2017;166(8):579-586.

9. Ramani S. Twelve tips for excellent physical examination teaching. Med Teach. 2008;30(9-10):851-856.

10. Gonzalo JD, Heist BS, Duffy BL, et al. The art of bedside rounds: A multi-center qualitative study of strategies used by experienced bedside teachers. J Gen Intern Med. 2013;28(3):412-420.

11. Janicik RW, Fletcher KE. Teaching at the bedside: A new model. Med Teach. 2003;25(2):127-130.

1Medical College of Wisconsin Affiliated Hospitals, Milwaukee, Wisconsin. At the time of this study, Dr. Bergl was with the Division of General Internal Medicine, Medical College of Wisconsin, Milwaukee, Wisconsin. 2Medical College of Wisconsin, Milwaukee, Wisconsin.Physical examination (PE) is a core clinical skill in undergraduate medical education.1 Although the optimal approach to teaching clinical skills is debated, robust preclinical curricula should generally be followed by iterative skill development during clinical rotations.2,3

The internal medicine rotation represents a critical time to enhance PE skills. Diagnostic decision making and PE are highly prioritized competencies for the internal medicine clerkship,4 and students will likely utilize many core examination skills1,2 during this time. Bedside teaching of PE during the internal medicine service also provides an opportunity for students to receive feedback based on direct observation,5 a sine qua non of competency-based assessment.

Unfortunately, current internal medicine training environments limit opportunities for workplace-based instruction in PE. Recent studies suggest diminishing time spent on bedside patient care and teaching, with computer-based “indirect patient care” dominating much of the clinical workday of internal medicine services.6-8 However, the literature does not delineate how often medical students are enhancing their PE skills during clinical rotations or describe how the educational environment may influence PE teaching.

We aimed to describe the content and context of PE instruction during the internal medicine clerkship workflow. Specifically, we sought to explore what strategies physician team members used to teach PE to students. We also sought to describe factors in the inpatient learning environment that might explain why physical examination (PE) instruction occurs infrequently.

METHODS

We conducted a prospective mixed-methods study using time motion analysis, checklists on clinical teaching, and daily open-ended observations written by a trained observer from June through August 2015 at a single academic medical center. Subjects were recruited from internal medicine teaching teams and were allowed to opt out. Teaching teams had 2 formats: (1) traditional team with an attending physician (hospitalist or general internist), a senior resident, 2 interns, a fourth-year medical student, and 2 third-year students or (2) hospitalist team in which a third-year student works directly with a hospitalist and advanced practitioner. The proposal was submitted to the Medical College of Wisconsin Institutional Review Board and deemed exempt from further review.

All observations were carried out by a single investigator (A.T.), who was a second-year medical student at the time. To train this observer and to pilot the data collection instruments, our lead investigator (P.B.) directly supervised our observer on 4 separate occasions, totaling over 12 hours of mentored co-observation. Immediately after each training session, both investigators (A.T. and P.B.) debriefed to compare notes, to review checklists on recorded observations, and to discuss areas of uncertainty. During the training period, formal metrics of agreement (eg, kappa coefficients) were not gathered, as data collection instruments were still being refined.

Observation periods were centered on third-year medical students and their interactions with patients and members of the teaching team. Observed activities included pre-rounding, teaching rounds with the attending physician, and new patient admissions during call days. Observations generally occurred between the hours of 7 AM and 6 PM, and we limited periods of observation to 3 consecutive hours to minimize observer fatigue. Observation periods were selected to maximize the number of subjects and teams observed, to adequately capture pre-rounding and new admissions activities, and to account for variations in rounding styles throughout the call cycle. Teams were excluded if a member of the study team was an attending physician on the clinical team or if any member of the patient care team had opted out of the study.

Data were collected on paper checklists that included idealized bedside teaching activities around PE. Teaching activities were identified through a review of relevant literature9,10 and were further informed by our senior investigator’s own experience with faculty development in this area11 and team members’ attendance at bedside teaching workshops. At the end of each day, our observer also wrote brief observations that summarized factors affecting bedside teaching of PE. Checklist data were transferred to an Excel file (Microsoft), and written observations were imported into NVivo 10 (QRS International, Melbourne, Australia) for coding and analysis.

Checklist data were analyzed using simple descriptive statistics. We compared time spent on various types of rounding using ANOVA, and we used a Student two-tailed t-test to compare the amount of time students spent examining patients on pre-rounds versus new admissions. To ascertain differences in the frequency of PE teaching activities by location, we used chi-squared tests. Statistical analysis was performed using embedded statistics functions in Microsoft Excel. A P value of <.05 was used as the cut-off for significance.

We analyzed the written observations using conventional qualitative content analysis. Two investigators (A.T. and P.B.) reviewed the written comments and used open coding to devise a preliminary inductive coding scheme. Codes were refined iteratively, and a schema of categories and nodes was outlined in a codebook that was periodically reviewed by the entire research team. The coding investigators met regularly to ensure consistency in coding, and a third team member remained available to reconcile significant disagreements in code definitions.

RESULTS

Eighty-one subjects participated in the study: 21 were attending physicians, 12 residents, 21 interns, 11 senior medical students, and 26 junior medical students. We observed 16 distinct inpatient teaching teams and 329 unique patient-related events (discussions and/or patient-clinician encounters), with most events being observed during attending rounds (269/329, or 82%). There were 123 encounters at the bedside, averaging 7 minutes; 43 encounters occurred in the hallway, averaging 8 minutes each; and 163 encounters occurred in a workroom and averaged 7 minutes per patient discussion. We also observed 28 student-patient encounters during pre-round activities and 30 student-patient encounters during new admissions.

Teaching and Direct Observation

During 28 pre-rounding encounters, students usually examined the patient (26 out of 28 instances, 93%) but were observed only 4 times doing so (out of 26 instances, or 15%). During 30 new patient admissions, students examined 27 patients (90%) and had their PE observed 6 times (out of 27 instances, or 22%). There were no significant differences in frequency of these activities (P > .05, chi-squared) between pre-rounds or new admissions.

Observations on Teaching Strategies

In the written observations, we categorized various methods being used to teach PE. Bedside teaching of PE most often involved teachers simply describing or discussing physical findings (42 mentions in observations) or verifying a student’s reported findings (15 mentions). Teachers were also observed to use bedside teaching to contextualize findings (13 mentions), such as relating the quality of bowel sounds to the patient’s constipation or to discuss expected pupillary light reflexes in a neurologically intact patient. Less commonly, attending physicians narrated steps in their PE technique (9 mentions). Students were infrequently encouraged to practice a specific PE skill again (7 mentions) or allowed to re-examine and reconsider their initial interpretations (5 mentions).

DISCUSSION

This observational study of clinical teaching on internal medicine teaching services demonstrates that PE teaching is most likely to occur during bedside rounding. However, even in bedside encounters, most PE instruction is limited to physician team members pointing out significant findings. Although physical findings were mentioned for the majority of patients seen on rounds, attending physicians infrequently verified students’ or residents’ findings, demonstrated technique, or incorporated PE into clinical decision making. We witnessed an alarming dearth of direct observation of students and almost no real-time feedback in performing and teaching PE. Thus, students rarely had opportunities to engage in higher-order learning activities related to PE on the internal medicine rotation.

We posit that the learning environment influenced PE instruction on the internal medicine rotation. To optimize inpatient teaching of PE, attending physicians need to consider the factors we identified in Table 2. Such teaching may be effective with a more limited number of participants and without distraction from technology. Time constraints are one of the major perceived barriers to bedside teaching of PE, and our data support this concern, as teams spent an average of only 7 minutes on each bedside encounter. However, many of the strategies observed to be used in real-time PE instruction, such as validating the learners’ findings or examining patients as a team, naturally fit into clinical routines and generally do not require extra thought or preparation.

One of the key strengths of our study is the use of direct observation of students and their teachers. This study is unique in its exclusive focus on PE and its description of factors affecting PE teaching activities on an internal medicine service. This observational, descriptive study also has obvious limitations. The study was conducted at a single institution during a limited time period. Moreover, the study period June through August, which was chosen based on our observer’s availability, includes the transition to a new academic year (July 1, 2015) when medical students and residents were becoming acclimated to their new roles. Additionally, the data were collected by a single researcher, and observer bias may affect the results of qualitative analysis of journal entries.

In conclusion, this study highlights the infrequency of applied PE skills in the daily clinical and educational workflow of internal medicine teaching teams. These findings may reflect a more widespread problem in clinical education, and replication of our findings at other teaching centers could galvanize faculty development around bedside PE teaching.

Disclosures

Dr. Bergl has nothing to disclose. Ms. Taylor reports grant support from the Cohen Endowment for Medical Student Research at the Medical College of Wisconsin during the conduct of the study. Mrs. Klumb, Ms. Quirk, Dr. Muntz, and Dr. Fletcher have nothing to disclose.

Funding

This work was funded in part by the Cohen Endowment for Medical Student Research at the Medical College of Wisconsin.

1Medical College of Wisconsin Affiliated Hospitals, Milwaukee, Wisconsin. At the time of this study, Dr. Bergl was with the Division of General Internal Medicine, Medical College of Wisconsin, Milwaukee, Wisconsin. 2Medical College of Wisconsin, Milwaukee, Wisconsin.Physical examination (PE) is a core clinical skill in undergraduate medical education.1 Although the optimal approach to teaching clinical skills is debated, robust preclinical curricula should generally be followed by iterative skill development during clinical rotations.2,3

The internal medicine rotation represents a critical time to enhance PE skills. Diagnostic decision making and PE are highly prioritized competencies for the internal medicine clerkship,4 and students will likely utilize many core examination skills1,2 during this time. Bedside teaching of PE during the internal medicine service also provides an opportunity for students to receive feedback based on direct observation,5 a sine qua non of competency-based assessment.

Unfortunately, current internal medicine training environments limit opportunities for workplace-based instruction in PE. Recent studies suggest diminishing time spent on bedside patient care and teaching, with computer-based “indirect patient care” dominating much of the clinical workday of internal medicine services.6-8 However, the literature does not delineate how often medical students are enhancing their PE skills during clinical rotations or describe how the educational environment may influence PE teaching.

We aimed to describe the content and context of PE instruction during the internal medicine clerkship workflow. Specifically, we sought to explore what strategies physician team members used to teach PE to students. We also sought to describe factors in the inpatient learning environment that might explain why physical examination (PE) instruction occurs infrequently.

METHODS

We conducted a prospective mixed-methods study using time motion analysis, checklists on clinical teaching, and daily open-ended observations written by a trained observer from June through August 2015 at a single academic medical center. Subjects were recruited from internal medicine teaching teams and were allowed to opt out. Teaching teams had 2 formats: (1) traditional team with an attending physician (hospitalist or general internist), a senior resident, 2 interns, a fourth-year medical student, and 2 third-year students or (2) hospitalist team in which a third-year student works directly with a hospitalist and advanced practitioner. The proposal was submitted to the Medical College of Wisconsin Institutional Review Board and deemed exempt from further review.

All observations were carried out by a single investigator (A.T.), who was a second-year medical student at the time. To train this observer and to pilot the data collection instruments, our lead investigator (P.B.) directly supervised our observer on 4 separate occasions, totaling over 12 hours of mentored co-observation. Immediately after each training session, both investigators (A.T. and P.B.) debriefed to compare notes, to review checklists on recorded observations, and to discuss areas of uncertainty. During the training period, formal metrics of agreement (eg, kappa coefficients) were not gathered, as data collection instruments were still being refined.

Observation periods were centered on third-year medical students and their interactions with patients and members of the teaching team. Observed activities included pre-rounding, teaching rounds with the attending physician, and new patient admissions during call days. Observations generally occurred between the hours of 7 AM and 6 PM, and we limited periods of observation to 3 consecutive hours to minimize observer fatigue. Observation periods were selected to maximize the number of subjects and teams observed, to adequately capture pre-rounding and new admissions activities, and to account for variations in rounding styles throughout the call cycle. Teams were excluded if a member of the study team was an attending physician on the clinical team or if any member of the patient care team had opted out of the study.

Data were collected on paper checklists that included idealized bedside teaching activities around PE. Teaching activities were identified through a review of relevant literature9,10 and were further informed by our senior investigator’s own experience with faculty development in this area11 and team members’ attendance at bedside teaching workshops. At the end of each day, our observer also wrote brief observations that summarized factors affecting bedside teaching of PE. Checklist data were transferred to an Excel file (Microsoft), and written observations were imported into NVivo 10 (QRS International, Melbourne, Australia) for coding and analysis.

Checklist data were analyzed using simple descriptive statistics. We compared time spent on various types of rounding using ANOVA, and we used a Student two-tailed t-test to compare the amount of time students spent examining patients on pre-rounds versus new admissions. To ascertain differences in the frequency of PE teaching activities by location, we used chi-squared tests. Statistical analysis was performed using embedded statistics functions in Microsoft Excel. A P value of <.05 was used as the cut-off for significance.

We analyzed the written observations using conventional qualitative content analysis. Two investigators (A.T. and P.B.) reviewed the written comments and used open coding to devise a preliminary inductive coding scheme. Codes were refined iteratively, and a schema of categories and nodes was outlined in a codebook that was periodically reviewed by the entire research team. The coding investigators met regularly to ensure consistency in coding, and a third team member remained available to reconcile significant disagreements in code definitions.

RESULTS

Eighty-one subjects participated in the study: 21 were attending physicians, 12 residents, 21 interns, 11 senior medical students, and 26 junior medical students. We observed 16 distinct inpatient teaching teams and 329 unique patient-related events (discussions and/or patient-clinician encounters), with most events being observed during attending rounds (269/329, or 82%). There were 123 encounters at the bedside, averaging 7 minutes; 43 encounters occurred in the hallway, averaging 8 minutes each; and 163 encounters occurred in a workroom and averaged 7 minutes per patient discussion. We also observed 28 student-patient encounters during pre-round activities and 30 student-patient encounters during new admissions.

Teaching and Direct Observation

During 28 pre-rounding encounters, students usually examined the patient (26 out of 28 instances, 93%) but were observed only 4 times doing so (out of 26 instances, or 15%). During 30 new patient admissions, students examined 27 patients (90%) and had their PE observed 6 times (out of 27 instances, or 22%). There were no significant differences in frequency of these activities (P > .05, chi-squared) between pre-rounds or new admissions.

Observations on Teaching Strategies

In the written observations, we categorized various methods being used to teach PE. Bedside teaching of PE most often involved teachers simply describing or discussing physical findings (42 mentions in observations) or verifying a student’s reported findings (15 mentions). Teachers were also observed to use bedside teaching to contextualize findings (13 mentions), such as relating the quality of bowel sounds to the patient’s constipation or to discuss expected pupillary light reflexes in a neurologically intact patient. Less commonly, attending physicians narrated steps in their PE technique (9 mentions). Students were infrequently encouraged to practice a specific PE skill again (7 mentions) or allowed to re-examine and reconsider their initial interpretations (5 mentions).

DISCUSSION

This observational study of clinical teaching on internal medicine teaching services demonstrates that PE teaching is most likely to occur during bedside rounding. However, even in bedside encounters, most PE instruction is limited to physician team members pointing out significant findings. Although physical findings were mentioned for the majority of patients seen on rounds, attending physicians infrequently verified students' or residents' findings, demonstrated technique, or incorporated PE into clinical decision making. We witnessed an alarming dearth of direct observation of students and almost no real-time feedback on either PE performance or PE teaching. Thus, students rarely had opportunities to engage in higher-order learning activities related to PE on the internal medicine rotation.

We posit that the learning environment influenced PE instruction on the internal medicine rotation. To optimize inpatient teaching of PE, attending physicians need to consider the factors we identified in Table 2. Such teaching may be effective with fewer participants and without distraction from technology. Time constraints are a major perceived barrier to bedside teaching of PE, and our data support this concern: teams spent an average of only 7 minutes on each bedside encounter. However, many of the strategies we observed in real-time PE instruction, such as validating learners' findings or examining patients as a team, fit naturally into clinical routines and generally require no extra thought or preparation.

One of the key strengths of our study is the use of direct observation of students and their teachers. This study is unique in its exclusive focus on PE and its description of factors affecting PE teaching activities on an internal medicine service. This observational, descriptive study also has obvious limitations. The study was conducted at a single institution during a limited time period. Moreover, the study period (June through August), which was chosen based on our observer's availability, included the transition to a new academic year (July 1, 2015), when medical students and residents were becoming acclimated to their new roles. Additionally, the data were collected by a single researcher, and observer bias may have affected the qualitative analysis of journal entries.

In conclusion, this study highlights the infrequency of applied PE skills in the daily clinical and educational workflow of internal medicine teaching teams. These findings may reflect a more widespread problem in clinical education, and replication of our findings at other teaching centers could galvanize faculty development around bedside PE teaching.

Disclosures

Dr. Bergl has nothing to disclose. Ms. Taylor reports grant support from the Cohen Endowment for Medical Student Research at the Medical College of Wisconsin during the conduct of the study. Mrs. Klumb, Ms. Quirk, Dr. Muntz, and Dr. Fletcher have nothing to disclose.

Funding

This work was funded in part by the Cohen Endowment for Medical Student Research at the Medical College of Wisconsin.

1. Corbett E, Berkow R, Bernstein L, et al, on behalf of the AAMC Task Force on the Preclerkship Clinical Skills Education of Medical Students. Recommendations for clinical skills curricula for undergraduate medical education. Achieving excellence in basic clinical method through clinical skills education: the medical school clinical skills curriculum. Association of American Medical Colleges; 2008. https://www.aamc.org/download/130608/data/clinicalskills_oct09.qxd.pdf.pdf. Accessed July 12, 2017.

2. Gowda D, Blatt B, Fink MJ, Kosowicz LY, Baecker A, Silvestri RC. A core physical exam for medical students: results of a national survey. Acad Med. 2014;89(3):436-442.

3. Uchida T, Farnan JM, Schwartz JE, Heiman HL. Teaching the physical examination: a longitudinal strategy for tomorrow's physicians. Acad Med. 2014;89(3):373-375.

4. Fazio S, De Fer T, Goroll A. Core Medicine Clerkship Curriculum Guide: a resource for teachers and learners. Clerkship Directors in Internal Medicine and Society of General Internal Medicine; 2006. http://www.im.org/d/do/2285/. Accessed July 12, 2017.

5. Gonzalo J, Heist B, Duffy B, et al. Content and timing of feedback and reflection: a multi-center qualitative study of experienced bedside teachers. BMC Med Educ. 2014;14:212. doi:10.1186/1472-6920-14-212.

6. Stickrath C, Noble M, Prochazka A, et al. Attending rounds in the current era: what is and is not happening. JAMA Intern Med. 2013;173(12):1084-1089.

7. Block L, Habicht R, Wu AW, et al. In the wake of the 2003 and 2011 duty hours regulations, how do internal medicine interns spend their time? J Gen Intern Med. 2013;28(8):1042-1047.

8. Wenger N, Méan M, Castioni J, Marques-Vidal P, Waeber G, Garnier A. Allocation of internal medicine resident time in a Swiss hospital: a time and motion study of day and evening shifts. Ann Intern Med. 2017;166(8):579-586.

9. Ramani S. Twelve tips for excellent physical examination teaching. Med Teach. 2008;30(9-10):851-856.

10. Gonzalo JD, Heist BS, Duffy BL, et al. The art of bedside rounds: a multi-center qualitative study of strategies used by experienced bedside teachers. J Gen Intern Med. 2013;28(3):412-420.

11. Janicik RW, Fletcher KE. Teaching at the bedside: a new model. Med Teach. 2003;25(2):127-130.

© 2018 Society of Hospital Medicine

Intrateam Coverage and Handoffs

We have traditionally viewed continuity of care with a particular intern as important for high‐quality inpatient care, but this continuity is difficult to achieve. As we move to a model of team rather than individual continuity, information transfers between team members become critical.

When discontinuity between the primary team and a cross‐covering team occurs, this informational continuity is managed through formal handoffs.[1] Accordingly, there has been ample research on handoffs between different teams,[2, 3, 4, 5] but little has been published to date on handoffs between members of the same team. Therefore, we set out (1) to learn how interns view intrateam handoffs and (2) to identify intern‐perceived problems with intrateam handoffs.

MATERIALS AND METHODS

This was a cross‐sectional survey study conducted at a 500‐bed academic medical center affiliated with a large internal medicine residency program. The survey was developed by the study team and reviewed for content and clarity by our chief residents and by 2 nationally known medical educators outside our institution. Study participants were internal medicine interns. Interns in this program rotate through 3 hospitals and complete 7 to 8 ward months. The call schedules differ at each site (see Supporting Information, Appendix A, in the online version of this article). Opportunities for 1 intern to provide intrateam coverage for another include clinics (1/week), days off (1/week), some overnight periods, and occasional educational conferences. When possible, daily attending rounds include the entire team, but because of clinics, conferences, and days off, the entire team is rarely present. Bedside rounds are done at the attending's discretion. The survey (see Supporting Information, Appendix B, in the online version of this article) included questions about situations in which the respondent was covering his or her cointern's patients (a cointern was defined as another intern on the respondent's inpatient ward team). We also asked about situations in which a cointern was covering the respondent's patients. For those questions, we defined a majority as >60%. We distributed this anonymous survey on 2 dates (January 2012 and March 2012) during regularly scheduled conferences. We mainly report descriptive findings. We also compared the percentage of participants reporting problems when covering cointerns' patients with the percentage reporting problems when cointerns covered their (the participants') patients, using the χ2 test with significance set at P<0.05. This study was designated as exempt by the institutional review board.

RESULTS

Thirty‐four of the 44 interns present at the conferences completed the survey (response rate, 77%). There were 46 interns in the program, including categorical, medicine‐pediatrics, and preliminary interns. The mean age was 28 years (standard deviation, 2.8). Two‐thirds of respondents were female, and 65% were categorical interns.

Difference Between Intra‐ and Interteam Handoffs

Eighty‐eight percent felt that a handoff to a cointern was different from a handoff to an overnight cross‐cover intern. Many interns said they assumed their cointerns had at least some knowledge of their patients and therefore put less time and detail into their handoffs. When covering for their cointern, 47% reported feeling the same amount of responsibility as for their own patients, whereas 38% reported feeling much or somewhat less responsible for their cointerns' patients, and the remaining 15% felt somewhat or much more responsible.

Knowledge of Cointern's Patients

Most interns (65%) reported at least 3 days during their most recent inpatient ward month on which they covered a cointern's patient who had not been formally handed off to them. Forty‐five percent of respondents reported seldom or never receiving a written sign‐out on their cointern's patients.

Respondents were asked to think about times before they had covered their cointern's patients. Sixty‐eight percent of respondents reported knowing the number 1 problem for the majority of their cointern's patients. Twenty‐four percent reported having ever actually seen the majority of their cointern's patients. Only 3% of respondents said they had ever examined the majority of their cointern's patients prior to providing coverage.

Perceived Problems With Intrateam Coverage

While covering a cointern's patients, nearly half of respondents reported missing changes in patients' exams (42%) and forgetting to order labs or imaging (42%). More than half (61%) reported unexpected family meetings or phone calls. Some problems were reported more often when a cointern had covered for the respondent, most notably forgetting to order labs or imaging (70% vs 42%) and missed labs (33% vs 18%) (Table 1). Seventy‐nine percent felt that patient care was at least sometimes delayed because of incomplete knowledge resulting from intrateam coverage.

| What Problems Have You Noticed? | While Respondent Covers a Cointern's Patient | After Respondent's Patients Were Covered by Cointern |
|---|---|---|
| Missed labs | 18% | 33% |
| Missed consult recommendations | 21% | 30% |
| Missed exam changes | 42% | 27% |
| Forgot to follow‐up imaging | 27% | 30% |
| Forgot to order labs or imaging | 42%ᵃ | 70%ᵃ |
| Failure to adjust meds | 27% | 27% |
| Unexpected family meeting/phone calls | 61%ᵃ | 30%ᵃ |
| Did not understand the plan from cointern's notes | 45% | 27% |

DISCUSSION

In our program, interns commonly cover for each other. This intrateam coverage frequently occurs without a formal handoff, and interns do not always know key information about their cointern's patients. Interns reported frequent problems with intrateam coverage, such as missed lab results, missed consult recommendations, and missed changes in the physical exam. These missed items could lead to delayed diagnosis and delayed treatment. Similar problems have been identified in interteam handoffs.[6, 7] Even in optimized interteam handoffs, receivers fail to identify the most important piece of information for about 60% of patients,[8] and our results mirror this finding.

The finding that fewer than a quarter of respondents had ever seen the majority of their cointerns' patients is concerning. This likely arises from several inter‐related factors: reduced duty hours for housestaff, schedules built to accommodate those reduced hours (eg, overlapping rather than simultaneous shifts), and some attendings' choice not to take the entire team to see every patient. In institutions where team bedside rounds are the norm, this finding will be less applicable, but others across the country have noticed this trend[9, 10] and have tried to counteract it.[11] This situation has both patient care and educational implications. The main patient care implication is that other team members may be less able to seamlessly assume care when the primary intern is away or busy. Intrateam coverage then becomes much more like traditional cross‐coverage of another team's patients, in which there is no expectation that the covering physician has ever seen the patients for whom he or she is assuming care. The main educational implication is that interns who do not see their cointerns' patients see only about half the patients they otherwise could. Learning medicine is experiential, and limiting opportunities to see and examine patients is unwise in this era of reduced time spent in the hospital.

One limitation of this study is that it was conducted in a single program; other sites will need to assess their own practices with respect to intrateam handoffs. Another limitation is that it was a cross‐sectional survey subject to recall bias, and interviews might have yielded more detailed information. We also did not quantify how often labs, consult recommendations, and physical examination changes were missed during intrateam coverage. Finally, we did not independently verify the problems identified by the interns.

Some possible strategies to address this issue include (1) treating intrateam handoffs like interteam handoffs by implementing a formal system, (2) better utilizing senior residents/faculty when interns are covering for each other, (3) using bedside attending rounds to increase the exposure of all team members to the team's patients, (4) block scheduling to avoid absences due to clinics,[12] and (5) better communication and teamwork training to increase team awareness of all patients.[13]

Disclosures

There was no external funding for this work. However, this material is the result of work supported with resources and the use of facilities at the Clement J. Zablocki VA Medical Center, Milwaukee, WI. This work was presented as a poster at the national Society of Hospital Medicine meeting in National Harbor, Maryland, in May 2013. The authors have no conflicts of interest to report.

1. Residents' and attending physicians' handoffs: a systematic review of the literature. Acad Med. 2009;84(12):1775–1787.

2. Standardized sign‐out reduces intern perception of medical errors on the general internal medicine ward. Teach Learn Med. 2009;21(2):121–126.

3. Faculty member review and feedback using a sign‐out checklist: improving intern written sign‐out. Acad Med. 2012;87(8):1125–1131.

4. Using a computerized sign‐out program to improve continuity of inpatient care and prevent adverse events. Jt Comm J Qual Improv. 1998;24(2):77–87.

5. Transfers of patient care between house staff on internal medicine wards. Arch Intern Med. 2006;166:1173–1177.

6. Communication failures in patient sign‐out and suggestions for improvement: a critical incident analysis. Qual Saf Health Care. 2005;14(6):401–407.

7. Consequences of inadequate sign‐out for patient care. Arch Intern Med. 2008;168(16):1755–1760.

8. Interns overestimate the effectiveness of their hand‐off communication. Pediatrics. 2010;125(3):491–496.

9. Culture shock—patient as icon, icon as patient. N Engl J Med. 2008;359(26):2748–2751.

10. Attending rounds and bedside case presentations: medical student and medicine resident experiences and attitudes. Teach Learn Med. 2009;21(2):105–110.

11. The return of bedside rounds: an educational intervention. J Gen Intern Med. 2010;25(8):792–798.

12. The ambulatory long‐block: an Accreditation Council for Graduate Medical Education (ACGME) Educational Innovations Project (EIP). J Gen Intern Med. 2008;23(7):921–926.

13. AHRQ. TeamSTEPPS: National Implementation. Available at: http://teamstepps.ahrq.gov/. Accessed June 19, 2014.

We have traditionally viewed continuity of care with a particular intern as important for high‐quality inpatient care, but this continuity is difficult to achieve. As we move to a model of team rather than individual continuity, information transfers between team members become critical.

When discontinuity between the primary team and a cross‐covering team occurs, this informational continuity is managed through formal handoffs.[1] Accordingly, there has been ample research on handoffs between different teams,[2, 3, 4, 5] but there has been little published literature to date to describe handoffs between members of the same team. Therefore, we set out (1) to learn how interns view intrateam handoffs and (2) to identify intern‐perceived problems with intrateam handoffs.

MATERIALS AND METHODS

This was a cross‐sectional survey study done at a 500‐bed academic medical center affiliated with a large internal medicine residency program. The survey was developed by the study team and reviewed for content and clarity by our chief residents and by 2 nationally known medical educators outside our institution. Study participants were internal medicine interns. Interns in this program rotate through 3 hospitals and do 7 to 8 ward months. The call schedules are different at each site (see Supporting Information, Appendix A, in the online version of this article). Opportunities for intrateam coverage of 1 intern by another include clinics (1/week), days off (1/week), some overnight periods, and occasional educational conferences. When possible, daily attending rounds include the entire team, but due to clinics, conferences, and days off, it is rare that the entire team is present. Bedside rounds are done at the discretion of the attending. The survey (see Supporting Information, Appendix B, in the online version of this article) included questions regarding situations when the respondent was covering his or her cointern's patients (cointern was defined as another intern on the respondent's same inpatient ward team). We also asked about situations when a cointern was covering the respondent's patients. For those questions, we considered answers of >60% to be a majority. We distributed this anonymous survey on 2 dates (January 2012 and March 2012) during regularly scheduled conferences. We mainly report descriptive findings. We also compared the percentage of study participants reporting problems when covering cointerns' patients to the percentage of study participants reporting problems when cointerns covered their (study participants') patients using 2, with significance set at P<0.05. This study was designated as exempt by the institutional review board.

RESULTS

Thirty‐four interns completed the survey out of a total of 44 interns present at the conferences (response rate=77%). There were 46 interns in the program, including categorical, medicine‐pediatrics, and preliminary interns. The mean age was 28 (standard deviation 2.8). Two‐thirds of respondents were female, and 65% were categorical.

Difference Between Intra‐ and Interteam Handoffs

Eighty‐eight percent felt that a handoff to a cointern was different than a handoff to an overnight cross‐cover intern; many interns said they assumed their cointerns had at least some knowledge of their patients, and therefore put less time and detail into their handoffs. When covering for their cointern, 47% reported feeling the same amount of responsibility as for their own patients, whereas 38% of interns reported feeling much or somewhat less responsible for their cointerns' patients and the remainder (15%) felt somewhat or much more responsible.

Knowledge of Cointern's Patients

Most (65%) interns reported at least 3 days in their last inpatient ward month when they covered a cointern's patient that had not been formally handed off to them. Forty‐five percent of respondents reported seldom or never receiving a written sign‐out on their cointern's patients.

Respondents were asked to think about times before they had covered their cointern's patients. Sixty‐eight percent of respondents reported knowing the number 1 problem for the majority of their cointern's patients. Twenty‐four percent reported having ever actually seen the majority of their cointern's patients. Only 3% of respondents said they had ever examined the majority of their cointern's patients prior to providing coverage.

Perceived Problems With Intrateam Coverage

While covering a cointern's patients, nearly half reported missing changes in patients' exams and forgetting to order labs or imaging. More than half reported unexpected family meetings or phone calls. In contrast, respondents noted more problems when their cointern had covered for them (Table 1). Seventy‐nine percent felt that patient care was at least sometimes delayed because of incomplete knowledge due to intrateam coverage.

| What Problems Have You Noticed | ||

|---|---|---|

| While Respondent Covers a Cointern's Patient? | After Respondent's Patients Were Covered by Cointern? | |

| ||

| Missed labs | 18% | 33% |

| Missed consult recommendations | 21% | 30% |

| Missed exam changes | 42% | 27% |

| Forgot to follow‐up imaging | 27% | 30% |

| Forgot to order labs or imaging | 42%a | 70%a |

| Failure to adjust meds | 27% | 27% |

| Unexpected family meeting/phone calls | 61%a | 30%a |

| Did not understand the plan from cointern's notes | 45% | 27% |

DISCUSSION

In our program, interns commonly cover for each other. This intrateam coverage frequently occurs without a formal handoff, and interns do not always know key information about their cointern's patients. Interns reported frequent problems with intrateam coverage such as missed lab results, consult recommendations, and changes in the physical exam. These missed items could result in delayed diagnoses and delayed treatment. These problems have been identified in interteam handoffs as well.[6, 7] Even in optimized interteam handoffs, receivers fail to identify the most important piece of information about 60% of the patients,[8] and our results mirror this finding.

The finding that fewer than a quarter of the respondents have ever seen the majority of their cointerns' patients is certainly of concern. This likely arises from several inter‐related factors: reduced hours for housestaff, schedules built to accommodate the reduced hours (eg, overlapping rather than simultaneous shifts), and the choice of some attendings to not take the entire team around to see every patient. In institutions where bedside rounds as a team are the norm, this finding will be less applicable, but others across the country have noticed this trend[9, 10] and have tried to counteract it.[11] This situation has both patient care and educational implications. The main patient care implication is that the other team members may be less able to seamlessly assume care when the primary intern is away or busy. Therefore, intrateam coverage becomes much more like traditional cross‐coverage of another team's patients, during which there is no expectation that the covering person will have ever seen the patients for whom they are assuming care. The main educational implication of not seeing the cointerns' patients is that the interns are seeing only half the patients that they could otherwise see. Learning medicine is experiential, and limiting opportunities for seeing and examining patients is unwise in this era of reduced time spent in the hospital.

Limitations of this study include being conducted in a single program. It will be important for other sites to assess their own practices with respect to intrateam handoffs. Another limitation is that it was a cross‐sectional survey subject to recall bias. We may have obtained more detailed information if we had conducted interviews. We also did not quantify the frequency of missed labs, consult recommendations, and physical examination changes that occurred during intrateam coverage. Finally, we did not independently verify the problems identified by the interns.

Some possible strategies to address this issue include (1) treating intrateam handoffs like interteam handoffs by implementing a formal system, (2) better utilizing senior residents/faculty when interns are covering for each other, (3) using bedside attending rounds to increase the exposure of all team members to the team's patients, (4) block scheduling to avoid absences due to clinics,[12] and (5) better communication and teamwork training to increase team awareness of all patients.[13]

Disclosures

Disclosures: There was no external funding for this work. However, this material is the result of work supported with resources and the use of facilities at the Clement J. Zablocki VA Medical Center, Milwaukee, WI. This work was presented in poster format at the national Society of Hospital Medicine meeting in National Harbor, Maryland in May 2013. The authors have no conflicts of interest to report.

We have traditionally viewed continuity of care with a particular intern as important for high‐quality inpatient care, but this continuity is difficult to achieve. As we move to a model of team rather than individual continuity, information transfers between team members become critical.

When discontinuity between the primary team and a cross‐covering team occurs, this informational continuity is managed through formal handoffs.[1] Accordingly, there has been ample research on handoffs between different teams,[2, 3, 4, 5] but there has been little published literature to date to describe handoffs between members of the same team. Therefore, we set out (1) to learn how interns view intrateam handoffs and (2) to identify intern‐perceived problems with intrateam handoffs.

MATERIALS AND METHODS

This was a cross‐sectional survey study done at a 500‐bed academic medical center affiliated with a large internal medicine residency program. The survey was developed by the study team and reviewed for content and clarity by our chief residents and by 2 nationally known medical educators outside our institution. Study participants were internal medicine interns. Interns in this program rotate through 3 hospitals and do 7 to 8 ward months. The call schedules are different at each site (see Supporting Information, Appendix A, in the online version of this article). Opportunities for intrateam coverage of 1 intern by another include clinics (1/week), days off (1/week), some overnight periods, and occasional educational conferences. When possible, daily attending rounds include the entire team, but due to clinics, conferences, and days off, it is rare that the entire team is present. Bedside rounds are done at the discretion of the attending. The survey (see Supporting Information, Appendix B, in the online version of this article) included questions regarding situations when the respondent was covering his or her cointern's patients (cointern was defined as another intern on the respondent's same inpatient ward team). We also asked about situations when a cointern was covering the respondent's patients. For those questions, we considered answers of >60% to be a majority. We distributed this anonymous survey on 2 dates (January 2012 and March 2012) during regularly scheduled conferences. We mainly report descriptive findings. We also compared the percentage of study participants reporting problems when covering cointerns' patients to the percentage of study participants reporting problems when cointerns covered their (study participants') patients using 2, with significance set at P<0.05. This study was designated as exempt by the institutional review board.

RESULTS

Thirty‐four interns completed the survey out of a total of 44 interns present at the conferences (response rate=77%). There were 46 interns in the program, including categorical, medicine‐pediatrics, and preliminary interns. The mean age was 28 (standard deviation 2.8). Two‐thirds of respondents were female, and 65% were categorical.

Difference Between Intra‐ and Interteam Handoffs

Eighty‐eight percent felt that a handoff to a cointern was different than a handoff to an overnight cross‐cover intern; many interns said they assumed their cointerns had at least some knowledge of their patients, and therefore put less time and detail into their handoffs. When covering for their cointern, 47% reported feeling the same amount of responsibility as for their own patients, whereas 38% of interns reported feeling much or somewhat less responsible for their cointerns' patients and the remainder (15%) felt somewhat or much more responsible.

Knowledge of Cointern's Patients

Most (65%) interns reported at least 3 days in their last inpatient ward month when they covered a cointern's patient that had not been formally handed off to them. Forty‐five percent of respondents reported seldom or never receiving a written sign‐out on their cointern's patients.

Respondents were asked to think about times before they had covered their cointern's patients. Sixty‐eight percent of respondents reported knowing the number 1 problem for the majority of their cointern's patients. Twenty‐four percent reported having ever actually seen the majority of their cointern's patients. Only 3% of respondents said they had ever examined the majority of their cointern's patients prior to providing coverage.

Perceived Problems With Intrateam Coverage

While covering a cointern's patients, nearly half of respondents reported missing changes in patients' exams (42%) and forgetting to order labs or imaging (42%), and more than half (61%) reported unexpected family meetings or phone calls. The pattern differed when their cointern had covered for them: respondents more often reported that labs or imaging had not been ordered (70%) and less often reported unexpected family meetings or phone calls (30%) (Table 1). Seventy‐nine percent felt that patient care was at least sometimes delayed because of incomplete knowledge due to intrateam coverage.

Table 1. Problems Reported During Intrateam Coverage

| What Problems Have You Noticed? | While Respondent Covers a Cointern's Patient | After Respondent's Patients Were Covered by Cointern |
|---|---|---|
| Missed labs | 18% | 33% |
| Missed consult recommendations | 21% | 30% |
| Missed exam changes | 42% | 27% |
| Forgot to follow up on imaging | 27% | 30% |
| Forgot to order labs or imaging | 42%a | 70%a |
| Failure to adjust meds | 27% | 27% |
| Unexpected family meeting/phone calls | 61%a | 30%a |
| Did not understand the plan from cointern's notes | 45% | 27% |

a Difference significant at P<0.05 (χ2 test).

DISCUSSION

In our program, interns commonly cover for each other. This intrateam coverage frequently occurs without a formal handoff, and interns do not always know key information about their cointern's patients. Interns reported frequent problems with intrateam coverage such as missed lab results, consult recommendations, and changes in the physical exam. These missed items could result in delayed diagnoses and delayed treatment. These problems have been identified in interteam handoffs as well.[6, 7] Even in optimized interteam handoffs, receivers fail to identify the most important piece of information about 60% of the patients,[8] and our results mirror this finding.

The finding that fewer than a quarter of respondents had ever seen the majority of their cointerns' patients is concerning. It likely arises from several interrelated factors: reduced hours for housestaff, schedules built to accommodate the reduced hours (eg, overlapping rather than simultaneous shifts), and the choice of some attendings not to take the entire team around to see every patient. In institutions where bedside rounds as a team are the norm, this finding will be less applicable, but others across the country have noticed this trend[9, 10] and have tried to counteract it.[11] This situation has both patient care and educational implications. The main patient care implication is that other team members may be less able to seamlessly assume care when the primary intern is away or busy. Intrateam coverage therefore becomes much more like traditional cross‐coverage of another team's patients, in which there is no expectation that the covering person has ever seen the patients for whom they are assuming care. The main educational implication is that interns who do not see their cointerns' patients see only half the patients they otherwise could. Learning medicine is experiential, and limiting opportunities to see and examine patients is unwise in this era of reduced time spent in the hospital.

One limitation of this study is that it was conducted in a single program; it will be important for other sites to assess their own practices with respect to intrateam handoffs. Another limitation is that it was a cross‐sectional survey subject to recall bias; interviews might have yielded more detailed information. We also did not quantify how often missed labs, consult recommendations, and physical examination changes occurred during intrateam coverage. Finally, we did not independently verify the problems identified by the interns.

Some possible strategies to address this issue include (1) treating intrateam handoffs like interteam handoffs by implementing a formal system, (2) better utilizing senior residents/faculty when interns are covering for each other, (3) using bedside attending rounds to increase the exposure of all team members to the team's patients, (4) block scheduling to avoid absences due to clinics,[12] and (5) better communication and teamwork training to increase team awareness of all patients.[13]

Disclosures

There was no external funding for this work. However, this material is the result of work supported with resources and the use of facilities at the Clement J. Zablocki VA Medical Center, Milwaukee, WI. This work was presented as a poster at the Society of Hospital Medicine national meeting in National Harbor, Maryland, in May 2013. The authors have no conflicts of interest to report.

1. Residents' and attending physicians' handoffs: a systematic review of the literature. Acad Med. 2009;84(12):1775–1787.
2. Standardized sign‐out reduces intern perception of medical errors on the general internal medicine ward. Teach Learn Med. 2009;21(2):121–126.
3. Faculty member review and feedback using a sign‐out checklist: improving intern written sign‐out. Acad Med. 2012;87(8):1125–1131.
4. Using a computerized sign‐out program to improve continuity of inpatient care and prevent adverse events. Jt Comm J Qual Improv. 1998;24(2):77–87.
5. Transfers of patient care between house staff on internal medicine wards. Arch Intern Med. 2006;166:1173–1177.
6. Communication failures in patient sign‐out and suggestions for improvement: a critical incident analysis. Qual Saf Health Care. 2005;14(6):401–407.
7. Consequences of inadequate sign‐out for patient care. Arch Intern Med. 2008;168(16):1755–1760.
8. Interns overestimate the effectiveness of their hand‐off communication. Pediatrics. 2010;125(3):491–496.
9. Culture shock—patient as icon, icon as patient. N Engl J Med. 2008;359(26):2748–2751.
10. Attending rounds and bedside case presentations: medical student and medicine resident experiences and attitudes. Teach Learn Med. 2009;21(2):105–110.
11. The return of bedside rounds: an educational intervention. J Gen Intern Med. 2010;25(8):792–798.
12. The ambulatory long‐block: an Accreditation Council for Graduate Medical Education (ACGME) Educational Innovations Project (EIP). J Gen Intern Med. 2008;23(7):921–926.
13. AHRQ. TeamSTEPPS: National Implementation. Available at: http://teamstepps.ahrq.gov/. Accessed June 19, 2014.

Localizing General Medical Teams

Localizing inpatient general medical teams to nursing units has high intuitive validity for improving physician productivity, hospital efficiency, and patient outcomes. Motion, or the movement of personnel between tasks (so prominent when teams are not localized), is 1 of the 7 wastes in lean thinking.1 In a time‐motion study in which hospitalists cared for patients on up to 5 different wards, O'Leary et al2 reported that hospitalists spent large parts of their workdays on indirect patient care (69%), paging (13%), and travel (3%). Localization could increase the time available for direct patient care, decrease time spent on (and interruptions due to) paging, and decrease travel time, all of which could increase productivity.

O'Leary et al3 also reported that localizing medical inpatients improved communication between nurses and physicians: after localization, nurses and physicians were more often able to identify each other and reported more communication, specifically face‐to‐face communication, with each other. This improved communication, together with effective multidisciplinary rounds, could lead to safer care4 and better outcomes.

Further investigations of the effect of localization are limited. Roy et al5 compared the outcomes of patients localized to 2 inpatient pods medically staffed by hospitalists and physician assistants (PAs) with those of patients cared for by geographically dispersed, but structurally different, house staff teams. They found significantly lower costs, a slight but nonsignificant increase in length of stay, and no difference in mortality or readmissions, but it is impossible to tease out the effect of localization from the effect of team composition. In a before‐and‐after study, Findlay et al6 reported decreased mortality and complication rates among clinically homogeneous surgical patients (proximal hip fractures) cared for by junior trainee physicians localized to a unit, but their experience cannot be extrapolated to the much more diverse general medical population.

In our hospital, each general medical team could admit patients dispersed over 14 different units. An internal group, commissioned to evaluate our hospitalist practice, recommended reducing this dispersal to improve physician productivity, hospital efficiency, and outcomes of care. We therefore conducted a project to evaluate the impact of localizing general medical inpatient teams to a single nursing unit.

METHODS

Setting

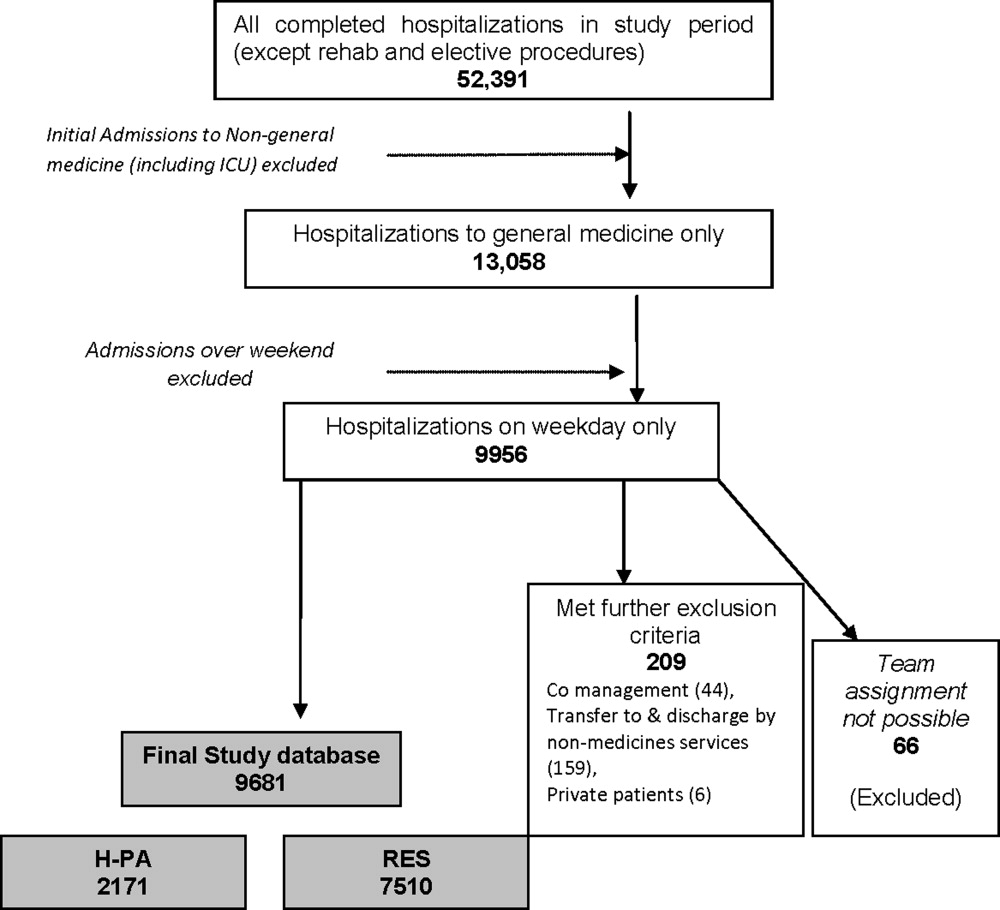

We conducted our project at a 490‐bed urban academic medical center in the Midwestern United States. Of its 10 general medical teams, 6 were traditional resident‐based teams and 4 consisted of a hospitalist paired with a PA (H‐PA teams). We focused our study on the 4 H‐PA teams. The hospitalists could be assigned to any H‐PA team and staffed a team for 2 weeks at a time (including weekends). The PAs were always assigned to the same team but took weekends off. An in‐house hospitalist provided overnight cross‐coverage for the H‐PA teams. Before our intervention, these teams could admit patients to any of the 14 nursing units at our hospital. They admitted patients from 7 AM to 3 PM and also assumed care of patients admitted overnight after the resident teams had reached their admission limits (overflow). A Faculty Admitting Medical Officer (AMO) balanced the existing workload of the teams against the number and complexity of incoming patients to decide team assignment for the patients. The AMO was given guidelines (soft caps) to limit admissions to H‐PA teams to 5 per team per day (3 on a weekend) and to keep the total census of each H‐PA team at no more than 16 patients.

Intervention

From April 1, 2010, through July 15, 2010, we localized the patients of 2 of our 4 H‐PA teams to a single 32‐bed nursing unit. The patients of the other 2 H‐PA teams remained dispersed throughout the hospital.

Transition

April 1, 2010, was a scheduled switch day for the hospitalists on the H‐PA teams. We took advantage of this switch day and reassigned all patients cared for by H‐PA teams on our localized unit to the 2 localized teams. Similarly, all patients on nonlocalized units cared for by H‐PA teams were reassigned to the 2 nonlocalized teams. Patients cared for by resident teams on the localized unit who were expected to be discharged soon stayed there until discharge; those with an anticipated longer stay were transferred to a nonlocalized unit.

Patient Assignment

The 4 H‐PA teams continued to accept patients between 7 AM and 3 PM, as well as overflow patients. Patients with sickle cell crises were admitted exclusively to the nonlocalized teams, as they were cared for on a specialized nursing unit. No other patient characteristic was used to decide team assignment.

The AMO balanced the existing workload of the teams against the number and complexity of incoming patients to decide team assignment for the patients, but if these factors were equivocal, the AMO was now asked to preferentially admit to the localized teams. The admission soft cap for the H‐PA teams remained the same (5 on weekdays and 3 on weekends). The soft cap on the total census of 16 patients for the nonlocalized teams remained, but we imposed hard caps on the total census for the localized teams. These hard caps were 16 for each localized team for the month of April (to fill a 32‐bed unit), then decreased to 12 for the month of May, as informal feedback from the teams suggested a need to decrease workload, and then rebalanced to 14 for the remaining study period.
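The assignment rules above (soft admission caps for all H‐PA teams, a soft census cap for the nonlocalized teams, month‐specific hard census caps for the localized teams, exclusion of sickle cell crises from the localized teams, and a preference for localized teams when workload is otherwise equivocal) can be summarized as a filter‐and‐order step. This is a hypothetical sketch, not the AMO's actual tool: the `Team` fields, the function and constant names, treating Saturday and Sunday as the weekend, and the choice to flag rather than enforce soft caps are all assumptions. The AMO's judgment about workload and patient complexity is not modeled; the ordering only applies the tie‐break toward localized teams.

```python
from dataclasses import dataclass
from datetime import date

# Caps as described in the text; the constant names are hypothetical.
SOFT_ADMISSION_CAP_WEEKDAY = 5
SOFT_ADMISSION_CAP_WEEKEND = 3
SOFT_CENSUS_CAP_NONLOCALIZED = 16


@dataclass
class Team:
    name: str
    localized: bool
    census: int            # patients currently on the team
    admissions_today: int  # new admissions already assigned today


def localized_hard_cap(day: date) -> int:
    """Hard census cap per localized team during the April 1 to July 15, 2010 study period."""
    if day.month == 4:
        return 16  # April: 2 teams x 16 patients fills the 32-bed unit
    if day.month == 5:
        return 12  # May: lowered after informal feedback about workload
    return 14      # remaining study period (June 1 to July 15)


def rank_candidate_teams(
    teams: list[Team], day: date, sickle_cell_crisis: bool = False
) -> list[tuple[Team, bool]]:
    """Return (team, over_soft_cap) pairs for teams that may accept an admission.

    Hard caps and the sickle cell rule exclude a team outright; soft caps are
    only flagged, because the text describes them as guidelines. Teams under
    their soft caps come first, and within each group localized teams come first.
    """
    weekend = day.weekday() >= 5  # Saturday or Sunday (assumed definition)
    admission_cap = SOFT_ADMISSION_CAP_WEEKEND if weekend else SOFT_ADMISSION_CAP_WEEKDAY
    candidates = []
    for team in teams:
        if team.localized and sickle_cell_crisis:
            continue  # sickle cell crises went only to nonlocalized teams
        if team.localized and team.census >= localized_hard_cap(day):
            continue  # localized hard census cap reached
        over_soft_cap = team.admissions_today >= admission_cap or (
            not team.localized and team.census >= SOFT_CENSUS_CAP_NONLOCALIZED
        )
        candidates.append((team, over_soft_cap))
    # False sorts before True: under-cap teams first, then localized before nonlocalized.
    candidates.sort(key=lambda pair: (pair[1], not pair[0].localized))
    return candidates
```

Separating hard exclusions from soft flags mirrors the distinction the text draws between the hard census caps on the localized teams and the soft caps that remained guidelines for the AMO.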

Evaluation

Clinical Outcomes

Using both concurrent and historical controls, we evaluated the impact of localization on the following clinical outcome measures: length of stay (LOS), charges, and 30‐day readmission rates.
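The section names LOS and 30‐day readmission rate as outcomes but breaks off before defining them. As a minimal sketch, assuming LOS is measured from the admission timestamp to the discharge timestamp and that a 30‐day readmission is the same patient's next admission within 30 days of a discharge, the two calculations could look like the following. The record layout, the function names, and the choice to treat every stay as an index stay are hypothetical; charges are not modeled.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# A stay is (patient_id, admit_time, discharge_time); this layout is hypothetical.
Stay = tuple[str, datetime, datetime]


def length_of_stay_days(admit: datetime, discharge: datetime) -> float:
    """LOS in fractional days from admission to discharge timestamp."""
    return (discharge - admit).total_seconds() / 86400


def readmission_rate_30d(stays: list[Stay]) -> float:
    """Share of discharges followed by the same patient's next admission within 30 days.

    Every stay is treated as an index stay (an assumption); a patient's last
    stay in the data set can only count as not readmitted.
    """
    by_patient: dict[str, list[tuple[datetime, datetime]]] = defaultdict(list)
    for patient_id, admit, discharge in stays:
        by_patient[patient_id].append((admit, discharge))

    index_stays = 0
    readmitted = 0
    window = timedelta(days=30)
    for episodes in by_patient.values():
        episodes.sort()  # chronological by admission time
        for i, (_, discharge) in enumerate(episodes):
            index_stays += 1
            if i + 1 < len(episodes) and episodes[i + 1][0] - discharge <= window:
                readmitted += 1
    return readmitted / index_stays if index_stays else 0.0
```

Real analyses often exclude planned readmissions, transfers, or deaths from the index population; those refinements are left out here because the source does not specify them.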

Inclusion Criteria