Is it Time to Re-Examine the Physical Exam?

“Am I supposed to have such a hard time feeling the kidneys?” “I think I’m doing it wrong,” echoed another classmate. The frustration of these first-year students, who were already overwhelmed by the three pages of physical exam techniques that they were responsible for, became increasingly visible as they palpated the abdomens of their standardized patients. Then, they asked the dreaded question: “How often do you do this on real patients?”

When we teach first-year medical students the physical exam, these students are already aware that they have never observed physicians perform these maneuvers in their own medical care. “How come I’ve never seen my doctor do this before?” is a question we are often asked. We as faculty struggle with demonstrating and defending techniques that we hardly ever use given their variable utility in daily clinical practice. However, students are told that they must be familiar with the various “tools” in the repertoire, and they are led to believe that these skills will be a fundamental part of their future practice as physicians. Of course, when they begin their clerkships, the truth is revealed: the currency on the wards revolves around the computer. The experienced and passionate clinicians who may astonish them with the bedside exam are the exception rather than the rule.

In this issue of the Journal of Hospital Medicine, Bergl et al.1 found that when medical students rotated on their internal medicine clerkship, patients were rarely examined during attending rounds and were examined even less often when these rounds were not at the bedside. Although the students themselves consistently incorporated the physical exam into patient assessments and presentations, neither their findings nor those of the residents were ever validated by the attending physician or by others. Notably, the physical exam did not influence clinical decision making as much as one might expect.

These findings should not come as a surprise. The current generation of residents and junior attendings is more accustomed to emphasizing labs, imaging studies, pathology reports, and other data within the electronic health record (EHR) and to formulating initial plans before having met the patient.2 Physicians become uneasy when asked to decide without the reassurance of daily lab results, as if the information in the EHR were fundamental to patient care. Caring for the “iPatient” often trumps revisiting and reexamining the real patient.3 Medical teams are also bombarded with increasing demands for their attention and time and are pushed to expedite patient discharges while constantly responding to documentation queries in the EHR. Emphasis on patient throughput, quality metrics, and multidisciplinary communication is essential to provide effective patient care but often feels at odds with opportunities for bedside teaching.

Although discussions on these obstacles have increased in recent years, time-motion studies spanning decades and even preceding the duty-hours era have consistently shown that physicians reserve little time for physical examination and direct patient care.4 In other words, the challenges in bringing physicians to the bedside might have less to do with environmental barriers than we think.

Much of what we teach about physical diagnosis is imperfect,5 and the routine annual exam might well be eliminated given its low yield.6 Nevertheless, we cannot discount the importance of the physical exam in fostering the bond between the patient and the healthcare provider, particularly in patients with acute illnesses, and in making the interaction meaningful to the practitioner.

Many of us can easily recall embarrassing examples of obvious physical exam findings that were critical and overlooked with consequences – the missed incarcerated hernia in a patient labeled with gastritis and vomiting, or the patient with chest pain who had to undergo catheterization because the shingles rash was missed. The confidence in normal findings that might save a patient from unnecessary lab tests, imaging, or consultation is often not discussed. The burden is on us to retire maneuvers that have outlived their usefulness and to demonstrate to students the hazards and consequences of poor examination skills. We must also further what we know and understand about the physical exam as Osler, Laennec, and others before us once did. Point-of-care ultrasound is only one example of how innovation can bring trainees to the bedside, excite learners, engage patients, and affect care in a meaningful way while enhancing the nonultrasound-based skills of practitioners.7

It is promising that the students in this study consistently examined their patients each day. As future physicians, they can be very enthusiastic learners eager to apply the physical exam skills they have recently acquired during their early years of training. However, this excitement can taper off if not actively encouraged and reinforced, especially if role models are unintentionally sending the message that the physical exam does not matter or emphasizing exam maneuvers that do not serve a meaningful purpose. New technology will hopefully help us develop novel exam skills. If we can advance what we can diagnose at the bedside, students will remain motivated to improve and learn exam skills that truly affect patient-care decisions. After all, one day, they too will serve as role models for the next generation of physicians and hopefully will be the ones taking care of us at the bedside.

Disclosures

The authors declare no conflicts of interest.

1. Bergl PA, Taylor AC, Klumb J, et al. Teaching physical examination to medical students on inpatient medicine teams: A prospective mixed-methods descriptive study. J Hosp Med. 2018;13:399-402.

2. Chi J, Verghese A. Clinical education and the electronic health record: the flipped patient. JAMA. 2014;312(22):2331-2332. DOI: 10.1001/jama.2014.12820.

3. Verghese A. Culture shock—patient as icon, icon as patient. N Engl J Med. 2008;359(26):2748-2751. DOI: 10.1056/NEJMp0807461.

4. Czernik Z, Lin CT. A piece of my mind. Time at the bedside (Computing). JAMA. 2016;315(22):2399-2400. DOI: 10.1001/jama.2016.1722.

5. Jauhar S. The demise of the physical exam. N Engl J Med. 2006;354(6):548-551. DOI: 10.1056/NEJMp068013.

6. Mehrotra A, Prochazka A. Improving value in health care--against the annual physical. N Engl J Med. 2015;373(16):1485-1487. DOI: 10.1056/NEJMp1507485.

7. Kugler J. Price and the evolution of the physical examination. JAMA Cardiol. 2018. DOI: 10.1001/jamacardio.2018.0002. [Epub ahead of print]

©2018 Society of Hospital Medicine

Shared Decision-Making During Inpatient Rounds: Opportunities for Improvement in Patient Engagement and Communication

The ethos of medicine has shifted from paternalistic, physician-driven care to patient autonomy and engagement, in which the physician shares information and advises.1-3 Although there are ethical, legal, and practical reasons to respect patient preferences,1-4 patient engagement also fosters quality and safety5 and may improve clinical outcomes.5-8 Patients whose preferences are respected are more likely to trust their doctor, feel empowered, and adhere to treatments.9

Providers may partner with patients through shared decision-making (SDM).10,11 Several SDM models describe the process of providers and patients balancing evidence, preferences, and context to arrive at a clinical decision.12-15 The National Academy of Medicine and the American Academy of Pediatrics have called for more SDM,16,17 including when clinical evidence is limited,2 equally beneficial options exist,18 clinical stakes are high,19 and even with deferential patients.20 Despite its value, SDM does not reliably occur,21,22 and SDM training is often unavailable.4 Clinical decision tools, patient education aids, and various training interventions have shown promising, although inconsistent, results.23,24

Little is known about SDM in inpatient settings where unique patient, clinician, and environmental factors may influence SDM. This study describes the quality and possible predictors of inpatient SDM during attending rounds in 4 academic training settings. Although SDM may occur anytime during a hospitalization, attending rounds present a valuable opportunity for SDM observation given their centrality to inpatient care and teaching.25,26 Because attending physicians bear ultimate responsibility for patient management, we examined whether SDM performance varies among attendings within each service. In addition, we tested the hypothesis that service-level, team-level, and patient-level features explain variation in SDM quality more than individual attending physicians. Finally, we compared peer-observer perspectives of SDM behaviors with patient and/or guardian perspectives.

METHODS

Study Design and Setting

This cross-sectional, observational study examined the diversity of SDM practice within and between 4 inpatient services during attending rounds, including the internal medicine and pediatrics services at Stanford University and the University of California, San Francisco (UCSF). Both institutions provide quaternary care to diverse patient populations with approximately half enrolled in Medicare and/or Medicaid.

One institution had 42 internal medicine (Med-1) and 15 pediatric hospitalists (Peds-1) compared to 8 internal medicine (Med-2) and 12 pediatric hospitalists (Peds-2) at the second location. Both pediatric services used family-centered rounds that included discussions between the patients’ families and the whole team. One medicine service used a similar rounding model that did not necessarily involve the patients’ families. In contrast, the smaller medicine service typically began rounds by discussing all patients in a conference room and then visiting select patients afterwards.

From August 2014 to November 2014, peer observers gathered data on team SDM behaviors during attending rounds. After the rounding team departed, nonphysician interviewers surveyed consenting patients’ (or guardians’) views of the SDM experience, yielding paired evaluations for a subset of SDM encounters. Institutional review board approval was obtained from Stanford University and UCSF.

Participants and Inclusion Criteria

Attending physicians were hospitalists who supervised rounds at least 1 month per year, and did not include those conducting the study. All provided verbal assent to be observed on 3 days within a 7-day period. While team composition varied as needed (eg, to include the nurse, pharmacist, interpreter, etc), we restricted study observations to those teams with an attending and at least one learner (eg, resident, intern, medical student) to capture the influence of attending physicians in their training role. Because services vary in number of attendings on staff, rounds assigned per attending, and patients per round, it was not possible to enroll equal sample sizes per service in the study.

Nonintensive care unit patients who were deemed medically stable by the team were eligible for peer observation and participation in a subsequent patient interview once during the study period. Pediatric patients were invited for an interview if they were between 13 and 21 years old and had the option of having a parent or guardian present; if the pediatric patients were less than 13 years old or were not interested in being interviewed, then their parents or guardians were invited to be interviewed. Interpreters were present on rounds; thus, non-English-speaking participants could be included in the peer observations but could not participate in patient interviews because interpreters were not available during afternoons for study purposes. Consent was obtained from all participating patients and/or guardians.

Data Collection

Round and Patient Characteristics

Peer observers recorded rounding, team, and patient characteristics using a standardized form. Rounding data included date, attending name, duration of rounds, and patient census. Patient level data included the decision(s) discussed, the seniority of the clinician leading the discussion, team composition, minutes spent discussing the patient (both with the patient and/or guardian and total time), hospitalization week, and patient’s primary language. Additional patient data obtained from electronic health records included age, gender, race, ethnicity, date of admission, and admitting diagnosis.

SDM Measures

Peer-observed SDM behaviors were quantified per patient encounter using the 9-item Rochester Participatory Decision-Making Scale (RPAD), with credit given for SDM behaviors exhibited by anyone on the rounding team (team-level metric).27 Each item was scored on a 3-point scale (0 = absent, 0.5 = partial, and 1 = present) for a maximum of 9 points, with higher scores indicating higher-quality SDM (Peer-RPAD Score). We created semistructured patient interview guides by adapting each RPAD item into layperson language (Patient-RPAD Score) and adding open-ended questions to assess the patient experience.
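The scoring rule above can be illustrated with a minimal sketch. It assumes only what the text states (9 items, each scored 0, 0.5, or 1, summed to a maximum of 9); the function name `rpad_total` and the example ratings are hypothetical and are not taken from the study.

```python
from typing import Sequence

# Allowed item scores per the text: 0 = absent, 0.5 = partial, 1 = present.
VALID_ITEM_SCORES = {0.0, 0.5, 1.0}
N_ITEMS = 9  # the RPAD has 9 items


def rpad_total(item_scores: Sequence[float]) -> float:
    """Sum the 9 RPAD item scores into a 0-9 summary score (hypothetical helper)."""
    if len(item_scores) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item scores, got {len(item_scores)}")
    for score in item_scores:
        if score not in VALID_ITEM_SCORES:
            raise ValueError(f"invalid item score: {score!r}")
    return float(sum(item_scores))


# Made-up ratings for one encounter, for illustration only.
example_encounter = [1, 1, 0.5, 0, 0.5, 1, 0.5, 0, 0]
print(rpad_total(example_encounter))  # 4.5
```

The same function would apply to either rater type, since the Peer-RPAD and Patient-RPAD scores are described as sharing the same item structure.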

Peer-Observer Training

Eight peer-observers (7 hospitalists and 1 palliative care physician) were trained to perform RPAD ratings using videos of patient encounters. Initially, raters viewed videos together and discussed ratings for each RPAD item. The observers incorporated behavioral anchors and clinical examples into the development of an RPAD rating guide, which they subsequently used to independently score 4 videos from an online medical communication library.28 These scores were discussed to resolve any differences before 4 additional videos were independently viewed, scored, and compared. Interrater reliability was achieved when the standard deviation of summed SDM scores across raters was less than 1 for all 4 videos.
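The reliability criterion described above (standard deviation of summed scores across raters below 1 for every video) might be checked as in the following sketch. The text does not say whether a sample or population standard deviation was used; the sample version (`statistics.stdev`) is assumed here, and the function name and scores are hypothetical.

```python
from statistics import stdev
from typing import Dict, List


def raters_agree(summed_scores_by_video: Dict[str, List[float]],
                 threshold: float = 1.0) -> bool:
    """Return True if, for every video, the SD of raters' summed scores is < threshold.

    Assumption: sample standard deviation; the source does not specify which form.
    """
    return all(stdev(scores) < threshold
               for scores in summed_scores_by_video.values())


# Hypothetical summed RPAD scores from four raters on two videos.
close_ratings = {"video_a": [4.0, 4.5, 5.0, 4.5], "video_b": [6.0, 6.5, 6.0, 6.5]}
spread_ratings = {"video_a": [4.0, 4.5, 5.0, 4.5], "video_b": [2.0, 5.0, 3.0, 6.0]}

print(raters_agree(close_ratings))   # True
print(raters_agree(spread_ratings))  # False
```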

Patient Interviewers

Interviewers were English-speaking volunteers without formal medical training. They were educated in hospital etiquette by a physician and in administering patient interviews through peer-to-peer role playing and an observation and feedback interview with at least 1 patient.

Data Analysis

The analysis set included every unique patient with whom a medical decision was made by an eligible clinical team. To account for the nested study design (patient-level scores within rounds, rounds within attending, and attendings within service), we used mixed-effects models to estimate mean (summary or item) RPAD score by levels of fixed covariate(s). The models included random effects accounting for attending-level and round-level correlations among scores via variance components, and allowing the attending-level random effect to differ by service. Analyses were performed using SAS version 9.4 (SAS Institute Inc, Cary, NC). We used descriptive statistics to summarize round- and patient-level characteristics.
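One way to write down a model of this kind is sketched below. The notation is an assumption introduced for illustration (the source gives no equations): $Y_{sjki}$ is the RPAD score for patient $i$ seen on round $k$ by attending $j$ in service $s$, $\mathbf{x}_{sjki}$ holds the fixed covariates, and the attending-level variance carries a service subscript to reflect that it was allowed to differ by service.

```latex
\begin{aligned}
Y_{sjki} &= \mu + \mathbf{x}_{sjki}^{\top}\boldsymbol{\beta} + a_{j(s)} + r_{k(j)} + \varepsilon_{sjki},\\
a_{j(s)} &\sim \mathcal{N}\!\left(0,\ \sigma^{2}_{A,s}\right), \qquad
r_{k(j)} \sim \mathcal{N}\!\left(0,\ \sigma^{2}_{R}\right), \qquad
\varepsilon_{sjki} \sim \mathcal{N}\!\left(0,\ \sigma^{2}_{E}\right).
\end{aligned}
```

Under this sketch, testing whether attending-level variation differs by service amounts to comparing the model above with one in which $\sigma^{2}_{A,s}$ is constrained to a common value across services.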

SDM Variation by Attending and Service

Box plots were used to summarize raw patient-level Peer-RPAD scores by service and attending. By using the methods described above, we estimated the mean score overall and by service. In both models, we examined the statistical significance of service-specific variation in attending-level random effects by using a likelihood-ratio test (LRT) to compare models.

SDM Variation by Round and Patient Characteristics

We used the models described above to identify covariates associated with Peer-RPAD scores. We fit univariate models separately for each covariate, then fit 2 multivariable models, including (1) all covariates and (2) all effects significant in either model at P ≤ .20 according to F tests. For uniformity of presentation, we express continuous covariates categorically; however, we report P values based on continuous versions. Means generated by the multivariable models were calculated at the mean values of all other covariates in the model.

Patient-Level RPAD Data

A subsample of patients completed semistructured interviews with analogous RPAD questions. To identify possible selection bias in the full sample, we summarized response rates by service and patient language and modeled Peer-RPAD scores by interview response status. Among responders, we estimated the mean Peer-RPAD and Patient-RPAD scores and their paired differences and correlations, testing for non-zero correlations via the Spearman rank test.
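The paired comparison described above can be sketched with the standard library alone. The code computes a mean paired difference and a Spearman rank correlation (Pearson correlation of average ranks, which handles ties); it does not compute the P value that the rank test would report, and it is not the SAS procedure the authors used. All names and data are hypothetical.

```python
from statistics import mean
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; undefined (division by zero) if either input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation as the Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def mean_paired_difference(patient: Sequence[float], peer: Sequence[float]) -> float:
    """Mean of (patient score - peer score) over paired encounters."""
    return mean(p - q for p, q in zip(patient, peer))


# Made-up paired summary scores for five encounters.
patient_scores = [7.5, 8.0, 6.5, 9.0, 7.0]
peer_scores = [4.0, 3.5, 5.0, 4.5, 3.0]
print(mean_paired_difference(patient_scores, peer_scores))
print(spearman_rho(patient_scores, peer_scores))
```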

RESULTS

All Patient Encounters

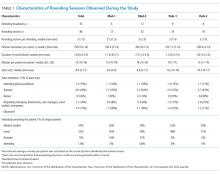

The median duration of rounding sessions was 1.8 hours, median patient census was 9, and median patient encounter was 13 minutes. The duration of rounds and minutes per patient were longest at Med-2 and shortest at Peds-1. See Table 1 for other team characteristics.

Peer Evaluations of SDM Encounters

Characteristics of Patients

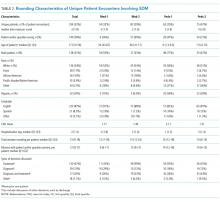

Teams spent a median of 13 minutes per SDM encounter, which was not higher than the round median. SDM topics discussed included 47% treatment, 15% diagnostic, 30% both treatment and diagnostic, and 7% other.

Variation in SDM Quality Among Attending Physicians

Overall Peer-RPAD Scores were normally distributed. After adjusting for the nested study design, the overall mean (standard error) score was 4.16 (0.11). Score variability among attendings differed significantly by service (LRT P = .0067). For example, raw scores were lower and more variable among attending physicians at Med-2 than among attendings in other services (see Appendix Figure in Supporting Information). However, when service was included in the model as a fixed effect, mean scores varied significantly, from 3.0 at Med-2 to 4.7 at Med-1 (P < .0001), but the random variation among attendings no longer differed significantly by service (P = .13). This finding supports the hypothesis that service-level influences are stronger than influences of individual attending physicians, that is, that variation between services exceeded variation among attendings within service.

Aspects of SDM That Are More Prevalent on Rounds

Rounds and Patient Characteristics Associated With Peer-RPAD Scores

Patient-RPAD Results: Dissimilar Perspectives of Patients and/or Guardians and Physician Observers

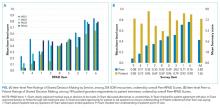

Among responders, mean Patient-RPAD scores were 6.8 to 7.1 for medicine services and 7.6 to 7.8 for pediatric services (P = .01). The overall mean Patient-RPAD score, 7.46, was significantly greater than the paired Peer-RPAD score by 3.5 (P = .011); however, correlations were not statistically significantly different from 0 (by service, each P > .12).

To understand drivers of the differences between Peer-RPAD and Patient-RPAD scores, we analyzed findings by item. Each mean patient-item score exceeded its peer counterpart (P ≤ .01; panel B of Figure). Peer-item scores fell below 33% on 2 items (Items 9 and 4) and only exceeded 67% on 2 items (Items 1 and 6), whereas patient-item scores ranged from 60% (Item 8) to 97% (Item 7). Three paired differences exceeded 50% (Items 9, 4, and 7) and 3 were below 20% (Items 6, 8 and 1), underlying the lack of correlation between peer and patient scores.

DISCUSSION

In this multisite study of SDM during inpatient attending rounds, SDM quality, specific SDM behaviors, and factors contributing to SDM were identified. Our study found an adjusted overall Peer-RPAD Score of 4.4 out of 9, and found the following 3 SDM elements most needing improvement according to trained peer observers: (1) “Checking understanding of the patient’s perspective”, (2) “Examining barriers to follow-through with the treatment plan”, and (3) “Asking if the patient has questions.” Areas of strength included explaining the clinical issue or nature of the decision and matching medical language to the patient’s level of understanding, with each rated highly by both peer-observers and patients. Broadly speaking, physicians were skillful in delivering information to patients but failed to solicit input from patients. Characteristics associated with increased SDM in the multivariate analysis included the following: service, patient gender, timing of rounds during patient’s hospital stay, and amount of time rounding with each patient.

Patients similarly found that physicians could improve their abilities to elicit information from patients and families; the 3 lowest patient-rated SDM elements were (1) asking open-ended questions, (2) discussing alternatives or uncertainties, and (3) discussing barriers to treatment plan follow-through. Overall, patients and guardians perceived the quantity and quality of SDM on rounds more favorably than peer observers did, which is consistent with other studies of patient perceptions of communication.29-31 It is possible that patient ratings are more influenced by demand characteristics, fear of negatively impacting their patient-provider relationships, and conflation of overall satisfaction with quality of communication.32 This difference in patient perception of SDM is worthy of further study.

Prior work has revealed that SDM may occur infrequently during inpatient rounds.11 This study further elucidates the specific SDM behaviors used and applies univariate and multivariate modeling to explore possible contributing factors. The strengths and weaknesses found were similar at all 4 services, and the influence of the service was more important than variability across attendings. This study’s findings are similar to those of Shields et al.,33 whose findings in a geographically different outpatient setting 10 years earlier suggest global and enduring challenges to SDM. To our knowledge, this is the first published study to characterize inpatient SDM behaviors, and it may serve as the basis for future interventions.

Although the item-level components were ranked similarly across services, on average the summary Peer-RPAD score was lowest at Med-2, where we observed high variability within and between attendings, and was highest at Med-1, where variability was low. Med-2 carried the highest caseload and held the longest rounds, while Med-1 carried the lowest caseload, suggesting that modifiable burdens may hamper SDM performance. Prior studies suggest that patients are often selected based on teaching opportunities, immediate medical need and being newly admitted.34 The high scores at Med-1 may reflect that service’s prediscussion of patients during card-flipping rounds or their selection of which patients to round on as a team. Consistent with prior studies29,35 of SDM and the family-centered rounding model, which includes the involvement of nurses, respiratory therapists, pharmacists, case managers, social workers, and interpreters on rounds, both pediatrics services showed higher SDM scores.

In contrast to prior studies,34,36 team size and number of learners did not affect SDM performance, nor did decision type. Despite teams having up to 17 members, 8 learners, and 14 complex patients, SDM scores did not vary significantly by team. Nonetheless, trends were in the directions expected: Scores tended to decrease as the team size or the percentage of trainees grew, and increased with the seniority of the presenting physician. Interestingly, SDM performance decreased with round-average minutes per patient, which may be measuring ongoing intensity across cases that leads to exhaustion. Statistically significant patient factors for increased SDM included longer duration of patient encounters, second week of hospital stay, and female patient gender. Although we anticipated that the high number of decisions made early in hospitalization would facilitate higher SDM scores, the higher scores in the second week suggest that continuity and stronger patient-provider relationships may enhance SDM.36 We report service-specific team and patient characteristics, in addition to SDM findings, in anticipation that some readers will identify with 1 service more than others.

This study has several important limitations. First, our peer observers were not blinded and primarily observed encounters at their own site. To minimize bias, observers periodically rated videos to recalibrate RPAD scoring. Second, additional SDM conversations with a patient and/or guardian may have occurred outside of rounds and were not captured, and poor patient recall may have affected Patient-RPAD scores despite interviewer prompts and timeliness of interviews within 12 hours of rounds. Third, there might have been a selection bias for the 1 service that selected a smaller number of patients to see, compared with the 3 other services that performed bedside rounds on all patients. It is possible that attending physicians selected patients who were deemed most able to have SDM conversations, thus affecting RPAD scores on that service. Fourth, study services had fewer patients on average than other academic hospitals (median 9, range 3-14), which might limit the generalizability of our findings. Last, as in any observational study, there is always the possibility of the Hawthorne effect. However, neither teams nor patients knew the study objectives.

Nevertheless, important findings emerged through the use of RPAD Scores to evaluate inpatient SDM practices. In particular, we found that to increase SDM quality in inpatient settings, practitioners should (1) check their understanding of the patient’s perspective, (2) examine barriers to follow-through with the treatment plan, and (3) ask if the patient has questions. Variation among services remained very influential after adjusting for team and patient characteristics, which suggests that “climate” or service culture should be targeted by an intervention, rather than individual attendings or subgroups defined by team or patient characteristics. Notably, team size, number of learners, patient census, and type of decision being made did not affect SDM performance, suggesting that even large, busy services can perform SDM if properly trained.

Acknowledgments

The authors thank the patients, families, pediatric and internal medicine residents, and hospitalists at Stanford School of Medicine and University of California, San Francisco School of Medicine for their participation in this study. We would also like to thank the student volunteers who collected patient perspectives on the encounters.

Disclosure

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article. The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This study was supported by an NIH/NCCIH grant R25 AT006573.

1. Braddock CH. The emerging importance and relevance of shared decision making to clinical practice. Med Decis Mak. 2010;30(5 Suppl):5S-7S. doi:10.1177/0272989X10381344.

2. Braddock CH. Supporting shared decision making when clinical evidence is low. Med Care Res Rev MCRR. 2013;70(1 Suppl):129S-140S. doi:10.1177/1077558712460280.

3. Elwyn G, Tilburt J, Montori V. The ethical imperative for shared decision-making. Eur J Pers Centered Healthc. 2013;1(1):129-131. doi:10.5750/ejpch.v1i1.645.

4. Stiggelbout AM, Pieterse AH, De Haes JCJM. Shared decision making: Concepts, evidence, and practice. Patient Educ Couns. 2015;98(10):1172-1179. doi:10.1016/j.pec.2015.06.022.

5. Stacey D, Légaré F, Col NF, et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev. 2014;(10):CD001431. doi:10.1002/14651858.CD001431.pub4.

6. Wilson SR, Strub P, Buist AS, et al. Shared treatment decision making improves adherence and outcomes in poorly controlled asthma. Am J Respir Crit Care Med. 2010;181(6):566-577. doi:10.1164/rccm.200906-0907OC.

7. Parchman ML, Zeber JE, Palmer RF. Participatory decision making, patient activation, medication adherence, and intermediate clinical outcomes in type 2 diabetes: a STARNet study. Ann Fam Med. 2010;8(5):410-417. doi:10.1370/afm.1161.

8. Weiner SJ, Schwartz A, Sharma G, et al. Patient-centered decision making and health care outcomes: an observational study. Ann Intern Med. 2013;158(8):573-579. doi:10.7326/0003-4819-158-8-201304160-00001.

9. Butterworth JE, Campbell JL. Older patients and their GPs: shared decision making in enhancing trust. Br J Gen Pract. 2014;64(628):e709-e718. doi:10.3399/bjgp14X682297.

10. Barry MJ, Edgman-Levitan S. Shared decision making--pinnacle of patient-centered care. N Engl J Med. 2012;366(9):780-781. doi:10.1056/NEJMp1109283.

11. Satterfield JM, Bereknyei S, Hilton JF, et al. The prevalence of social and behavioral topics and related educational opportunities during attending rounds. Acad Med J Assoc Am Med Coll. 2014;89(11):1548-1557. doi:10.1097/ACM.0000000000000483.

12. Charles C, Gafni A, Whelan T. Shared decision-making in the medical encounter: what does it mean? (or it takes at least two to tango). Soc Sci Med. 1997;44(5):681-692.

13. Elwyn G, Frosch D, Thomson R, et al. Shared decision making: a model for clinical practice. J Gen Intern Med. 2012;27(10):1361-1367. doi:10.1007/s11606-012-2077-6.

14. Légaré F, St-Jacques S, Gagnon S, et al. Prenatal screening for Down syndrome: a survey of willingness in women and family physicians to engage in shared decision-making. Prenat Diagn. 2011;31(4):319-326. doi:10.1002/pd.2624.

15. Satterfield JM, Spring B, Brownson RC, et al. Toward a Transdisciplinary Model of Evidence-Based Practice. Milbank Q. 2009;87(2):368-390.

16. National Academy of Medicine. Crossing the quality chasm: a new health system for the 21st century. https://www.nationalacademies.org/hmd/~/media/Files/Report%20Files/2001/Crossing-the-Quality-Chasm/Quality%20Chasm%202001%20%20report%20brief.pdf. Accessed on November 30, 2016.

17. Adams RC, Levy SE, Council on Children with Disabilities. Shared Decision-Making and Children with Disabilities: Pathways to Consensus. Pediatrics. 2017;139(6):1-9.

18. Müller-Engelmann M, Keller H, Donner-Banzhoff N, Krones T. Shared decision making in medicine: The influence of situational treatment factors. Patient Educ Couns. 2011;82(2):240-246. doi:10.1016/j.pec.2010.04.028.

19. Whitney SN. A New Model of Medical Decisions: Exploring the Limits of Shared Decision Making. Med Decis Making. 2003;23(4):275-280. doi:10.1177/0272989X03256006.

20. Kehl KL, Landrum MB, Arora NK, et al. Association of Actual and Preferred Decision Roles With Patient-Reported Quality of Care: Shared Decision Making in Cancer Care. JAMA Oncol. 2015;1(1):50-58. doi:10.1001/jamaoncol.2014.112.

21. Couët N, Desroches S, Robitaille H, et al. Assessments of the extent to which health-care providers involve patients in decision making: a systematic review of studies using the OPTION instrument. Health Expect Int J Public Particip Health Care Health Policy. 2015;18(4):542-561. doi:10.1111/hex.12054.

22. Fowler FJ, Gerstein BS, Barry MJ. How patient centered are medical decisions?: Results of a national survey. JAMA Intern Med. 2013;173(13):1215-1221. doi:10.1001/jamainternmed.2013.6172.

23. Légaré F, Stacey D, Turcotte S, et al. Interventions for improving the adoption of shared decision making by healthcare professionals. Cochrane Database Syst Rev. 2014;(9):CD006732. doi:10.1002/14651858.CD006732.pub3.

24. Stacey D, Bennett CL, Barry MJ, et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev. 2011;(10):CD001431. doi:10.1002/14651858.CD001431.pub3.

25. Di Francesco L, Pistoria MJ, Auerbach AD, Nardino RJ, Holmboe ES. Internal medicine training in the inpatient setting. A review of published educational interventions. J Gen Intern Med. 2005;20(12):1173-1180. doi:10.1111/j.1525-1497.2005.00250.x.

26. Janicik RW, Fletcher KE. Teaching at the bedside: a new model. Med Teach. 2003;25(2):127-130.

27. Shields CG, Franks P, Fiscella K, Meldrum S, Epstein RM. Rochester Participatory Decision-Making Scale (RPAD): reliability and validity. Ann Fam Med. 2005;3(5):436-442. doi:10.1370/afm.305.

28. DocCom - enhancing competence in healthcare communication. https://webcampus.drexelmed.edu/doccom/user/. Accessed on November 30, 2016.

29. Bailey SM, Hendricks-Muñoz KD, Mally P. Parental influence on clinical management during neonatal intensive care: a survey of US neonatologists. J Matern Fetal Neonatal Med. 2013;26(12):1239-1244. doi:10.3109/14767058.2013.776531.

30. Janz NK, Wren PA, Copeland LA, Lowery JC, Goldfarb SL, Wilkins EG. Patient-physician concordance: preferences, perceptions, and factors influencing the breast cancer surgical decision. J Clin Oncol. 2004;22(15):3091-3098. doi:10.1200/JCO.2004.09.069.

31. Schoenborn NL, Cayea D, McNabney M, Ray A, Boyd C. Prognosis communication with older patients with multimorbidity: Assessment after an educational intervention. Gerontol Geriatr Educ. 2016;38(4):471-481. doi:10.1080/02701960.2015.1115983.

32. Lipkin M. Shared decision making. JAMA Intern Med. 2013;173(13):1204-1205. doi:10.1001/jamainternmed.2013.6248.

33. Gonzalo JD, Heist BS, Duffy BL, et al. The art of bedside rounds: a multi-center qualitative study of strategies used by experienced bedside teachers. J Gen Intern Med. 2013;28(3):412-420. doi:10.1007/s11606-012-2259-2.

34. Rosen P, Stenger E, Bochkoris M, Hannon MJ, Kwoh CK. Family-centered multidisciplinary rounds enhance the team approach in pediatrics. Pediatrics. 2009;123(4):e603-e608. doi:10.1542/peds.2008-2238.

35. Harrison R, Allen E. Teaching internal medicine residents in the new era. Inpatient attending with duty-hour regulations. J Gen Intern Med. 2006;21(5):447-452. doi:10.1111/j.1525-1497.2006.00425.x.

36. Smith SK, Dixon A, Trevena L, Nutbeam D, McCaffery KJ. Exploring patient involvement in healthcare decision making across different education and functional health literacy groups. Soc Sci Med 1982. 2009;69(12):1805-1812. doi:10.1016/j.socscimed.2009.09.056.

The ethos of medicine has shifted from paternalistic, physician-driven care to patient autonomy and engagement, in which the physician shares information and advises.1-3 Although there are ethical, legal, and practical reasons to respect patient preferences,1-4 patient engagement also fosters quality and safety5 and may improve clinical outcomes.5-8 Patients whose preferences are respected are more likely to trust their doctor, feel empowered, and adhere to treatments.9

Providers may partner with patients through shared decision-making (SDM).10,11 Several SDM models describe the process of providers and patients balancing evidence, preferences, and context to arrive at a clinical decision.12-15 The National Academy of Medicine and the American Academy of Pediatrics have called for more SDM,16,17 including when clinical evidence is limited,2 equally beneficial options exist,18 clinical stakes are high,19 and even with deferential patients.20 Despite its value, SDM does not reliably occur21,22 and SDM training is often unavailable.4 Clinical decision tools, patient education aids, and various training interventions have shown promising, although inconsistent, results.23,24

Little is known about SDM in inpatient settings where unique patient, clinician, and environmental factors may influence SDM. This study describes the quality and possible predictors of inpatient SDM during attending rounds in 4 academic training settings. Although SDM may occur anytime during a hospitalization, attending rounds present a valuable opportunity for SDM observation given their centrality to inpatient care and teaching.25,26 Because attending physicians bear ultimate responsibility for patient management, we examined whether SDM performance varies among attendings within each service. In addition, we tested the hypothesis that service-level, team-level, and patient-level features explain variation in SDM quality more than individual attending physicians. Finally, we compared peer-observer perspectives of SDM behaviors with patient and/or guardian perspectives.

METHODS

Study Design and Setting

This cross-sectional, observational study examined the diversity of SDM practice within and between 4 inpatient services during attending rounds, including the internal medicine and pediatrics services at Stanford University and the University of California, San Francisco (UCSF). Both institutions provide quaternary care to diverse patient populations with approximately half enrolled in Medicare and/or Medicaid.

One institution had 42 internal medicine (Med-1) and 15 pediatric hospitalists (Peds-1) compared to 8 internal medicine (Med-2) and 12 pediatric hospitalists (Peds-2) at the second location. Both pediatric services used family-centered rounds that included discussions between the patients’ families and the whole team. One medicine service used a similar rounding model that did not necessarily involve the patients’ families. In contrast, the smaller medicine service typically began rounds by discussing all patients in a conference room and then visiting select patients afterwards.

From August 2014 to November 2014, peer observers gathered data on team SDM behaviors during attending rounds. After the rounding team departed, nonphysician interviewers surveyed consenting patients’ (or guardians’) views of the SDM experience, yielding paired evaluations for a subset of SDM encounters. Institutional review board approval was obtained from Stanford University and UCSF.

Participants and Inclusion Criteria

Attending physicians were hospitalists who supervised rounds at least 1 month per year, and did not include those conducting the study. All provided verbal assent to be observed on 3 days within a 7-day period. While team composition varied as needed (eg, to include the nurse, pharmacist, or interpreter), we restricted study observations to those teams with an attending and at least 1 learner (eg, resident, intern, medical student) to capture the influence of attending physicians in their training role. Because services vary in number of attendings on staff, rounds assigned per attending, and patients per round, it was not possible to enroll equal sample sizes per service in the study.

Nonintensive care unit patients who were deemed medically stable by the team were eligible for peer observation and participation in a subsequent patient interview once during the study period. Pediatric patients were invited for an interview if they were between 13 and 21 years old and had the option of having a parent or guardian present; if the pediatric patients were less than 13 years old or they were not interested in being interviewed, then their parents or guardians were invited to be interviewed. Interpreters were on rounds, and thus, non-English-speaking participants were able to participate in the peer observations, but could not participate in patient interviews because interpreters were not available during afternoons for study purposes. Consent was obtained from all participating patients and/or guardians.

Data Collection

Round and Patient Characteristics

Peer observers recorded rounding, team, and patient characteristics using a standardized form. Rounding data included date, attending name, duration of rounds, and patient census. Patient-level data included the decision(s) discussed, the seniority of the clinician leading the discussion, team composition, minutes spent discussing the patient (both with the patient and/or guardian and total time), hospitalization week, and patient’s primary language. Additional patient data obtained from electronic health records included age, gender, race, ethnicity, date of admission, and admitting diagnosis.

SDM Measures

Peer-observed SDM behaviors were quantified per patient encounter using the 9-item Rochester Participatory Decision-Making Scale (RPAD), with credit given for SDM behaviors exhibited by anyone on the rounding team (team-level metric).27 Each item was scored on a 3-point scale (0 = absent, 0.5 = partial, and 1 = present) for a maximum of 9 points, with higher scores indicating higher-quality SDM (Peer-RPAD Score). We created semistructured patient interview guides by adapting each RPAD item into layperson language (Patient-RPAD Score) and adding open-ended questions to assess the patient experience.
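The scoring arithmetic described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names and the example ratings are hypothetical and are not taken from the study materials. The second helper expresses each item as a percentage of its 1-point maximum, which is one plausible way to obtain item-level percentages such as those reported in the Results.

```python
# Illustrative sketch only; names and example data are hypothetical.
ALLOWED_RATINGS = (0.0, 0.5, 1.0)  # absent, partial, present
N_ITEMS = 9                        # the RPAD has 9 items


def rpad_score(item_ratings):
    """Sum the 9 item ratings of one encounter into a 0-9 summary score."""
    if len(item_ratings) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item ratings, got {len(item_ratings)}")
    for rating in item_ratings:
        if rating not in ALLOWED_RATINGS:
            raise ValueError(f"invalid rating: {rating!r}")
    return float(sum(item_ratings))


def item_percentages(encounters):
    """Mean rating per item across encounters, as a percentage of the 1-point maximum."""
    n = len(encounters)
    return [100.0 * sum(enc[i] for enc in encounters) / n for i in range(N_ITEMS)]


# Made-up ratings for a single encounter (not study data).
example = [1, 1, 0.5, 0, 0.5, 1, 0.5, 0, 0]
print(rpad_score(example))  # 4.5
```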

Peer-Observer Training

Eight peer-observers (7 hospitalists and 1 palliative care physician) were trained to perform RPAD ratings using videos of patient encounters. Initially, raters viewed videos together and discussed ratings for each RPAD item. The observers incorporated behavioral anchors and clinical examples into the development of an RPAD rating guide, which they subsequently used to independently score 4 videos from an online medical communication library.28 These scores were discussed to resolve any differences before 4 additional videos were independently viewed, scored, and compared. Interrater reliability was achieved when the standard deviation of summed SDM scores across raters was less than 1 for all 4 videos.
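The calibration criterion above (a standard deviation of summed scores below 1 on every video) could be checked as follows. This is a minimal sketch, not the study's procedure: the text does not say whether the sample or population standard deviation was used, so the sample version (`statistics.stdev`) is an assumption, and the function name, video labels, and scores are all made up for illustration.

```python
from statistics import stdev

# Illustrative sketch only; names and scores are hypothetical.
def calibration_reached(scores_by_video, threshold=1.0):
    """True if the SD of raters' summed scores is below threshold for every video.

    Assumption: sample standard deviation (n - 1 denominator).
    """
    return all(stdev(scores) < threshold for scores in scores_by_video.values())


# Made-up summed RPAD scores from 8 raters on 2 videos (not study data).
agreeing = {
    "video_a": [4.0, 4.5, 5.0, 4.5, 4.0, 5.0, 4.5, 4.5],
    "video_b": [6.0, 6.5, 6.0, 6.5, 6.0, 6.5, 6.0, 6.5],
}
disagreeing = dict(agreeing, video_c=[2.0, 6.0, 4.0, 5.0, 3.0, 7.0, 4.5, 4.5])

print(calibration_reached(agreeing))     # True
print(calibration_reached(disagreeing))  # False
```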

Patient Interviewers

Interviewers were English-speaking volunteers without formal medical training. They were educated in hospital etiquette by a physician and in administering patient interviews through peer-to-peer role playing and an observation and feedback interview with at least 1 patient.

Data Analysis

The analysis set included every unique patient with whom a medical decision was made by an eligible clinical team. To account for the nested study design (patient-level scores within rounds, rounds within attending, and attendings within service), we used mixed-effects models to estimate mean (summary or item) RPAD score by levels of fixed covariate(s). The models included random effects accounting for attending-level and round-level correlations among scores via variance components, and allowing the attending-level random effect to differ by service. Analyses were performed using SAS version 9.4 (SAS Institute Inc, Cary, NC). We used descriptive statistics to summarize round- and patient-level characteristics.

SDM Variation by Attending and Service

Box plots were used to summarize raw patient-level Peer-RPAD scores by service and attending. By using the methods described above, we estimated the mean score overall and by service. In both models, we examined the statistical significance of service-specific variation in attending-level random effects by using a likelihood-ratio test (LRT) to compare models.
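For readers unfamiliar with the LRT, the sketch below shows the generic calculation: twice the difference in log-likelihood between two nested models is referred to a chi-square distribution. Several things here are assumptions and not taken from the study: the log-likelihood values are invented, the 3 degrees of freedom correspond to one reading of the comparison (4 service-specific attending-level variances versus 1 common variance), and a plain chi-square reference distribution is used even though tests of variance components can call for a boundary-adjusted reference. The analysis itself was run in SAS; this Python code is only illustrative.

```python
import math

# Illustrative sketch only; not the SAS procedure used in the study.
def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df >= 1."""
    if x <= 0:
        return 1.0
    z = x / 2.0
    # Closed-form starting points for the regularized upper incomplete gamma
    # function Q(a, z): Q(1, z) = exp(-z) and Q(1/2, z) = erfc(sqrt(z)).
    if df % 2 == 0:
        a, q = 1.0, math.exp(-z)
    else:
        a, q = 0.5, math.erfc(math.sqrt(z))
    # Recurrence: Q(a + 1, z) = Q(a, z) + z**a * exp(-z) / Gamma(a + 1).
    while a < df / 2.0:
        q += z ** a * math.exp(-z) / math.gamma(a + 1.0)
        a += 1.0
    return q


def likelihood_ratio_test(loglik_reduced, loglik_full, df):
    """Return the LRT statistic and its chi-square P value."""
    statistic = 2.0 * (loglik_full - loglik_reduced)
    return statistic, chi2_sf(statistic, df)


# Hypothetical log-likelihoods (not study data); df = 3 is an assumption.
statistic, p_value = likelihood_ratio_test(-512.3, -506.2, df=3)
print(f"LRT statistic = {statistic:.2f}, P = {p_value:.4f}")
```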

SDM Variation by Round and Patient Characteristics

We used the models described above to identify covariates associated with Peer-RPAD scores. We fit univariate models separately for each covariate, then fit 2 multivariable models, including (1) all covariates and (2) all effects significant in either model at P ≤ .20 according to F tests. For uniformity of presentation, we express continuous covariates categorically; however, we report P values based on continuous versions. Means generated by the multivariable models were calculated at the mean values of all other covariates in the model.

Patient-Level RPAD Data

A subsample of patients completed semistructured interviews with analogous RPAD questions. To identify possible selection bias in the full sample, we summarized response rates by service and patient language and modeled Peer-RPAD scores by interview response status. Among responders, we estimated the mean Peer-RPAD and Patient-RPAD scores and their paired differences and correlations, testing for non-zero correlations via the Spearman rank test.
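The paired comparison described above involves two quantities: the mean paired difference between Patient-RPAD and Peer-RPAD scores, and the Spearman rank correlation between them. The following is a minimal standard-library sketch with made-up scores; the function names are hypothetical, ties receive average ranks (a common convention, assumed here), and the significance test of the correlation is omitted.

```python
from statistics import mean

# Illustrative sketch only; names and data are hypothetical.
def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1  # average of positions i..j, 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; undefined (division by zero) if either input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def mean_paired_difference(patient_scores, peer_scores):
    """Mean of (patient - peer) over paired encounters."""
    return mean(p - q for p, q in zip(patient_scores, peer_scores))


# Made-up paired scores (not study data).
patient = [7.5, 8.0, 6.5, 9.0, 7.0]
peer = [4.0, 3.5, 5.0, 4.5, 3.0]
print(mean_paired_difference(patient, peer))
print(spearman_rho(patient, peer))
```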

RESULTS

All Patient Encounters

The median duration of rounding sessions was 1.8 hours, median patient census was 9, and median patient encounter was 13 minutes. The duration of rounds and minutes per patient were longest at Med-2 and shortest at Peds-1. See Table 1 for other team characteristics.

Peer Evaluations of SDM Encounters

Characteristics of Patients

Teams spent a median of 13 minutes per SDM encounter, which was not higher than the round median. SDM topics discussed included 47% treatment, 15% diagnostic, 30% both treatment and diagnostic, and 7% other.

Variation in SDM Quality Among Attending Physicians

Overall Peer-RPAD Scores were normally distributed. After adjusting for the nested study design, the overall mean (standard error) score was 4.16 (0.11). Score variability among attendings differed significantly by service (LRT P = .0067). For example, raw scores were lower and more variable among attending physicians at Med-2 than other among attendings in other services (see Appendix Figure in Supporting Information). However, when service was included in the model as a fixed effect, mean scores varied significantly, from 3.0 at Med-2 to 4.7 at Med-1 (P < .0001), but the random variation among attendings no longer differed significantly by service (P = .13). This finding supports the hypothesis that service-level influences are stronger than influences of individual attending physicians, that is, that variation between services exceeded variation among attendings within service.

Aspects of SDM That Are More Prevalent on Rounds

Rounds and Patient Characteristics Associated With Peer-RPAD Scores

Patient-RPAD Results: Dissimilar Perspectives of Patients and/or Guardians and Physician Observers

Among responders, mean Patient-RPAD scores were 6.8 to 7.1 for medicine services and 7.6 to 7.8 for pediatric services (P = .01). The overall mean Patient-RPAD score, 7.46, was significantly greater than the paired Peer-RPAD score by 3.5 (P = .011); however, correlations were not statistically significantly different from 0 (by service, each P > .12).

To understand drivers of the differences between Peer-RPAD and Patient-RPAD scores, we analyzed findings by item. Each mean patient-item score exceeded its peer counterpart (P ≤ .01; panel B of Figure). Peer-item scores fell below 33% on 2 items (Items 9 and 4) and only exceeded 67% on 2 items (Items 1 and 6), whereas patient-item scores ranged from 60% (Item 8) to 97% (Item 7). Three paired differences exceeded 50% (Items 9, 4, and 7) and 3 were below 20% (Items 6, 8 and 1), underlying the lack of correlation between peer and patient scores.

DISCUSSION

In this multisite study of SDM during inpatient attending rounds, SDM quality, specific SDM behaviors, and factors contributing to SDM were identified. Our study found an adjusted overall Peer-RPAD Score of 4.4 out of 9, and found the following 3 SDM elements most needing improvement according to trained peer observers: (1) “Checking understanding of the patient’s perspective”, (2) “Examining barriers to follow-through with the treatment plan”, and (3) “Asking if the patient has questions.” Areas of strength included explaining the clinical issue or nature of the decision and matching medical language to the patient’s level of understanding, with each rated highly by both peer-observers and patients. Broadly speaking, physicians were skillful in delivering information to patients but failed to solicit input from patients. Characteristics associated with increased SDM in the multivariate analysis included the following: service, patient gender, timing of rounds during patient’s hospital stay, and amount of time rounding with each patient.

Patients similarly found that physicians could improve their abilities to elicit information from patients and families, noting the 3 lowest patient-rated SDM elements were as follows: (1) asking open-ended questions, (2) discussing alternatives or uncertainties, and (3) discussing barriers to treatment plan follow through. Overall, patients and guardians perceived the quantity and quality of SDM on rounds more favorably than peer observers, which is consistent with other studies of patient perceptions of communication. 29-31 It is possible that patient ratings are more influenced by demand characteristics, fear of negatively impacting their patient-provider relationships, and conflation of overall satisfaction with quality of communication.32 This difference in patient perception of SDM is worthy of further study.

Prior work has revealed that SDM may occur infrequently during inpatient rounds.11 This study further elucidates specific SDM behaviors used along with univariate and multivariate modeling to explore possible contributing factors. The strengths and weaknesses found were similar at all 4 services and the influence of the service was more important than variability across attendings. This study’s findings are similar to a study by Shields et al.,33 in which the findings in a geographically different outpatient setting 10 years earlier suggesting global and enduring challenges to SDM. To our knowledge, this is the first published study to characterize inpatient SDM behaviors and may serve as the basis for future interventions.

Although the item-level components were ranked similarly across services, on average the summary Peer-RPAD score was lowest at Med-2, where we observed high variability within and between attendings, and was highest at Med-1, where variability was low. Med-2 carried the highest caseload and held the longest rounds, while Med-1 carried the lowest caseload, suggesting that modifiable burdens may hamper SDM performance. Prior studies suggest that patients are often selected based on teaching opportunities, immediate medical need and being newly admitted.34 The high scores at Med-1 may reflect that service’s prediscussion of patients during card-flipping rounds or their selection of which patients to round on as a team. Consistent with prior studies29,35 of SDM and the family-centered rounding model, which includes the involvement of nurses, respiratory therapists, pharmacists, case managers, social workers, and interpreters on rounds, both pediatrics services showed higher SDM scores.

In contrast to prior studies,34,36 team size and number of learners did not affect SDM performance, nor did decision type. Despite teams having up to 17 members, 8 learners, and 14 complex patients, SDM scores did not vary significantly by team. Nonetheless, trends were in the directions expected: Scores tended to decrease as the team size or the percentage of trainees grew, and increased with the seniority of the presenting physician. Interestingly, SDM performance decreased with round-average minutes per patient, which may be measuring on-going intensity across cases that leads to exhaustion. Statistically significant patient factors for increased SDM included longer duration of patient encounters, second week of hospital stay, and female patient gender. Although we anticipated that the high number of decisions made early in hospitalization would facilitate higher SDM scores, continuity and stronger patient-provider relationships may enhance SDM.36 We report service-specific team and patient characteristics, in addition to SDM findings in anticipation that some readers will identify with 1 service more than others.

This study has several important limitations. First, our peer observers were not blinded and primarily observed encounters at their own site. To minimize bias, observers periodically rated videos to recalibrate RPAD scoring. Second, additional SDM conversations with a patient and/or guardian may have occurred outside of rounds and were not captured, and poor patient recall may have affected Patient-RPAD scores despite interviewer prompts and timeliness of interviews within 12 hours of rounds. Third, there might have been a selection bias for the one service that selected a smaller number of patients to see, compared with the three other services that performed bedside rounds on all patients. It is possible that attending physicians selected patients who were deemed most able to have SDM conversations, thus affecting RPAD scores on that service. Fourth, study services had fewer patients on average than other academic hospitals (median 9, range 3-14), which might limit the generalizability of our findings. Last, as in any observational study, there is always the possibility of the Hawthorne effect. However, neither teams nor patients knew the study objectives.

Nevertheless, important findings emerged through the use of RPAD Scores to evaluate inpatient SDM practices. In particular, we found that to increase SDM quality in inpatient settings, practitioners should (1) check their understanding of the patient’s perspective, (2) examine barriers to follow-through with the treatment plan, and (3) ask if the patient has questions. Variation among services remained very influential after adjusting for team and patient characteristics, which suggests that “climate” or service culture should be targeted by an intervention, rather than individual attendings or subgroups defined by team or patient characteristics. Notably, team size, number of learners, patient census, and type of decision being made did not affect SDM performance, suggesting that even large, busy services can perform SDM if properly trained.

Acknowledgments

The authors thank the patients, families, pediatric and internal medicine residents, and hospitalists at Stanford School of Medicine and University of California, San Francisco School of Medicine for their participation in this study. We would also like to thank the student volunteers who collected patient perspectives on the encounters.

Disclosure

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article. The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This study was supported by an NIH/NCCIH grant R25 AT006573.

The ethos of medicine has shifted from paternalistic, physician-driven care to patient autonomy and engagement, in which the physician shares information and advises.1-3 Although there are ethical, legal, and practical reasons to respect patient preferences,1-4 patient engagement also fosters quality and safety5 and may improve clinical outcomes.5-8 Patients whose preferences are respected are more likely to trust their doctor, feel empowered, and adhere to treatments.9

Providers may partner with patients through shared decision-making (SDM).10,11 Several SDM models describe the process of providers and patients balancing evidence, preferences, and context to arrive at a clinical decision.12-15 The National Academy of Medicine and the American Academy of Pediatrics have called for more SDM,16,17 including when clinical evidence is limited,2 equally beneficial options exist,18 clinical stakes are high,19 and even with deferential patients.20 Despite its value, SDM does not reliably occur21,22 and SDM training is often unavailable.4 Clinical decision tools, patient education aids, and various training interventions have shown promising, although inconsistent, results.23,24

Little is known about SDM in inpatient settings where unique patient, clinician, and environmental factors may influence SDM. This study describes the quality and possible predictors of inpatient SDM during attending rounds in 4 academic training settings. Although SDM may occur anytime during a hospitalization, attending rounds present a valuable opportunity for SDM observation given their centrality to inpatient care and teaching.25,26 Because attending physicians bear ultimate responsibility for patient management, we examined whether SDM performance varies among attendings within each service. In addition, we tested the hypothesis that service-level, team-level, and patient-level features explain variation in SDM quality more than individual attending physicians. Finally, we compared peer-observer perspectives of SDM behaviors with patient and/or guardian perspectives.

METHODS

Study Design and Setting

This cross-sectional, observational study examined the diversity of SDM practice within and between 4 inpatient services during attending rounds, including the internal medicine and pediatrics services at Stanford University and the University of California, San Francisco (UCSF). Both institutions provide quaternary care to diverse patient populations with approximately half enrolled in Medicare and/or Medicaid.

One institution had 42 internal medicine (Med-1) and 15 pediatric hospitalists (Peds-1) compared to 8 internal medicine (Med-2) and 12 pediatric hospitalists (Peds-2) at the second location. Both pediatric services used family-centered rounds that included discussions between the patients’ families and the whole team. One medicine service used a similar rounding model that did not necessarily involve the patients’ families. In contrast, the smaller medicine service typically began rounds by discussing all patients in a conference room and then visiting select patients afterwards.

From August 2014 to November 2014, peer observers gathered data on team SDM behaviors during attending rounds. After the rounding team departed, nonphysician interviewers surveyed consenting patients’ (or guardians’) views of the SDM experience, yielding paired evaluations for a subset of SDM encounters. Institutional review board approval was obtained from Stanford University and UCSF.

Participants and Inclusion Criteria

Attending physicians were hospitalists who supervised rounds at least 1 month per year, and did not include those conducting the study. All provided verbal assent to be observed on 3 days within a 7-day period. While team composition varied as needed (eg, to include the nurse, pharmacist, or interpreter), we restricted study observations to those teams with an attending and at least 1 learner (eg, resident, intern, medical student) to capture the influence of attending physicians in their training role. Because services vary in number of attendings on staff, rounds assigned per attending, and patients per round, it was not possible to enroll equal sample sizes per service in the study.

Nonintensive care unit patients who were deemed medically stable by the team were eligible for peer observation and participation in a subsequent patient interview once during the study period. Pediatric patients were invited for an interview if they were between 13 and 21 years old and had the option of having a parent or guardian present; if the pediatric patients were less than 13 years old or they were not interested in being interviewed, then their parents or guardians were invited to be interviewed. Interpreters were on rounds, and thus, non-English participants were able to participate in the peer observations, but could not participate in patient interviews because interpreters were not available during afternoons for study purposes. Consent was obtained from all participating patients and/or guardians.

Data Collection

Round and Patient Characteristics

Peer observers recorded rounding, team, and patient characteristics using a standardized form. Rounding data included date, attending name, duration of rounds, and patient census. Patient level data included the decision(s) discussed, the seniority of the clinician leading the discussion, team composition, minutes spent discussing the patient (both with the patient and/or guardian and total time), hospitalization week, and patient’s primary language. Additional patient data obtained from electronic health records included age, gender, race, ethnicity, date of admission, and admitting diagnosis.

SDM Measures

Peer-observed SDM behaviors were quantified per patient encounter using the 9-item Rochester Participatory Decision-Making Scale (RPAD), with credit given for SDM behaviors exhibited by anyone on the rounding team (team-level metric).27 Each item was scored on a 3-point scale (0 = absent, 0.5 = partial, and 1 = present) for a maximum of 9 points, with higher scores indicating higher-quality SDM (Peer-RPAD Score). We created semistructured patient interview guides by adapting each RPAD item into layperson language (Patient-RPAD Score) and adding open-ended questions to assess the patient experience.
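
As an illustration of the scoring rule described above, the following minimal sketch sums 9 item ratings into a summary score. The function name, the validation checks, and the example ratings are hypothetical and are not taken from the study materials.

```python
from typing import Sequence

# Item ratings as described in the text: 0 = absent, 0.5 = partial, 1 = present.
ALLOWED_ITEM_SCORES = {0.0, 0.5, 1.0}
N_ITEMS = 9  # the RPAD has 9 items, so the maximum summary score is 9


def rpad_summary_score(item_scores: Sequence[float]) -> float:
    """Return the summed RPAD score for one patient encounter.

    Hypothetical helper: raises ValueError if the number of items or
    any item rating does not match the scale described in the text.
    """
    if len(item_scores) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item scores, got {len(item_scores)}")
    for score in item_scores:
        if score not in ALLOWED_ITEM_SCORES:
            raise ValueError(f"invalid item score: {score!r}")
    return float(sum(item_scores))


# Made-up ratings for a single encounter (not study data).
example_encounter = [1, 0.5, 0, 1, 0.5, 0.5, 0, 1, 0]
print(rpad_summary_score(example_encounter))
```

Because credit is given for behaviors shown by anyone on the rounding team, each list of ratings in this sketch would describe the team's behavior during one encounter rather than one clinician's.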

Peer-Observer Training

Eight peer-observers (7 hospitalists and 1 palliative care physician) were trained to perform RPAD ratings using videos of patient encounters. Initially, raters viewed videos together and discussed ratings for each RPAD item. The observers incorporated behavioral anchors and clinical examples into the development of an RPAD rating guide, which they subsequently used to independently score 4 videos from an online medical communication library.28 These scores were discussed to resolve any differences before 4 additional videos were independently viewed, scored, and compared. Interrater reliability was achieved when the standard deviation of summed SDM scores across raters was less than 1 for all 4 videos.
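
The reliability criterion above can be sketched as a simple check. This example assumes the sample standard deviation (n - 1 denominator), which the text does not specify; the function name and all scores are hypothetical.

```python
from statistics import stdev
from typing import Dict, List


def reliability_reached(scores_by_video: Dict[str, List[float]],
                        threshold: float = 1.0) -> bool:
    """Return True if, for every video, the standard deviation of the
    summed SDM scores across raters is below the threshold.

    Assumption: sample standard deviation; the source does not say
    whether the sample or population formula was used.
    """
    return all(stdev(rater_scores) < threshold
               for rater_scores in scores_by_video.values())


# Made-up summed scores from 8 raters for 2 videos (not study data).
calibrated = {
    "video_a": [4.0, 4.5, 5.0, 4.5, 4.0, 5.0, 4.5, 4.5],
    "video_b": [6.0, 6.5, 6.0, 5.5, 6.0, 6.5, 6.0, 6.0],
}
discordant = {
    "video_a": [4.0, 4.5, 5.0, 4.5, 4.0, 5.0, 4.5, 4.5],
    "video_c": [2.0, 5.0, 3.5, 6.0, 4.0, 2.5, 5.5, 3.0],
}

print(reliability_reached(calibrated))
print(reliability_reached(discordant))
```

Requiring the threshold to hold for every video, rather than on average, means that a single video with widely scattered ratings is enough to fail the check.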

Patient Interviewers

Interviewers were English-speaking volunteers without formal medical training. They were educated in hospital etiquette by a physician and in administering patient interviews through peer-to-peer role playing and an observation and feedback interview with at least 1 patient.

Data Analysis

The analysis set included every unique patient with whom a medical decision was made by an eligible clinical team. To account for the nested study design (patient-level scores within rounds, rounds within attending, and attendings within service), we used mixed-effects models to estimate mean (summary or item) RPAD score by levels of fixed covariate(s). The models included random effects accounting for attending-level and round-level correlations among scores via variance components, and allowing the attending-level random effect to differ by service. Analyses were performed using SAS version 9.4 (SAS Institute Inc, Cary, NC). We used descriptive statistics to summarize round- and patient-level characteristics.

SDM Variation by Attending and Service

Box plots were used to summarize raw patient-level Peer-RPAD scores by service and attending. By using the methods described above, we estimated the mean score overall and by service. In both models, we examined the statistical significance of service-specific variation in attending-level random effects by using a likelihood-ratio test (LRT) to compare models.

SDM Variation by Round and Patient Characteristics

We used the models described above to identify covariates associated with Peer-RPAD scores. We fit univariate models separately for each covariate, then fit 2 multivariable models, including (1) all covariates and (2) all effects significant in either model at P ≤ .20 according to F tests. For uniformity of presentation, we express continuous covariates categorically; however, we report P values based on continuous versions. Means generated by the multivariable models were calculated at the mean values of all other covariates in the model.

Patient-Level RPAD Data

A subsample of patients completed semistructured interviews with analogous RPAD questions. To identify possible selection bias in the full sample, we summarized response rates by service and patient language and modeled Peer-RPAD scores by interview response status. Among responders, we estimated the mean Peer-RPAD and Patient-RPAD scores and their paired differences and correlations, testing for non-zero correlations via the Spearman rank test.
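
The paired comparison described above can be illustrated with a minimal sketch of the mean paired difference and the Spearman rank correlation, computed as the Pearson correlation of average ranks. The analyses in this study were run in SAS; the Python helpers and the paired scores below are hypothetical and serve only to show the calculation.

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Assign 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation: Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / sqrt(var_x * var_y)


def mean_paired_difference(x: Sequence[float], y: Sequence[float]) -> float:
    """Mean of the within-pair differences (x minus y)."""
    return sum(a - b for a, b in zip(x, y)) / len(x)


# Made-up paired scores for 6 encounters (not study data).
peer_scores = [3.0, 4.5, 5.0, 2.5, 6.0, 4.0]
patient_scores = [6.5, 7.0, 6.0, 7.5, 8.0, 6.5]

print(mean_paired_difference(patient_scores, peer_scores))
print(spearman_rho(peer_scores, patient_scores))
```

A rank-based correlation is a natural fit here because RPAD summary scores are bounded and move in half-point steps, so ties are common and linearity cannot be assumed.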

RESULTS

All Patient Encounters

The median duration of rounding sessions was 1.8 hours, median patient census was 9, and median patient encounter lasted 13 minutes. The duration of rounds and minutes per patient were longest at Med-2 and shortest at Peds-1. See Table 1 for other team characteristics.

Peer Evaluations of SDM Encounters

Characteristics of Patients

Teams spent a median of 13 minutes per SDM encounter, which was not higher than the round median. SDM topics discussed included 47% treatment, 15% diagnostic, 30% both treatment and diagnostic, and 7% other.

Variation in SDM Quality Among Attending Physicians

Overall Peer-RPAD Scores were normally distributed. After adjusting for the nested study design, the overall mean (standard error) score was 4.16 (0.11). Score variability among attendings differed significantly by service (LRT P = .0067). For example, raw scores were lower and more variable among attending physicians at Med-2 than among attendings in other services (see Appendix Figure in Supporting Information). However, when service was included in the model as a fixed effect, mean scores varied significantly, from 3.0 at Med-2 to 4.7 at Med-1 (P < .0001), but the random variation among attendings no longer differed significantly by service (P = .13). This finding supports the hypothesis that service-level influences are stronger than influences of individual attending physicians, that is, that variation between services exceeded variation among attendings within service.

Aspects of SDM That Are More Prevalent on Rounds

Rounds and Patient Characteristics Associated With Peer-RPAD Scores

Patient-RPAD Results: Dissimilar Perspectives of Patients and/or Guardians and Physician Observers