Health care–specific (eg, Healthgrades, Zocdoc, Vitals, WebMD) and general consumer websites (eg, Google, Yelp) are popular platforms for patients to find physicians, schedule appointments, and review their experiences with physicians. Patients find ratings on these websites more trustworthy than standardized surveys distributed by hospitals, but many physicians do not trust the reviews on these sites. For example, in a survey of both physicians (n=828) and patients (n=494), 36% of physicians trusted online reviews compared with 57% of patients.1 The objective of this study was to determine whether health care–specific or general consumer websites more accurately reflect overall patient sentiment. This knowledge can help physicians who are seeking to improve the patient experience understand which websites have more accurate and trustworthy reviews.

Methods

A list of dermatologists from the 10 most and 10 least dermatologist-dense areas in the United States was compiled to examine different physician populations.2 Equal numbers of male and female dermatologists were randomly selected from the most dense areas. All physicians from the least dense areas were included because of the limited sample size. Ratings were collected from the websites most likely to appear on the first page of a Google search for a physician's name, as these are most likely to be seen by patients. Descriptive statistics were generated for physician ratings (on a scale of 1–5): mean, median, SD, minimum, maximum, and interquartile range. Spearman correlation coefficients were generated to examine the strength of association between ratings from each pair of websites. P<.05 was considered statistically significant, and analyses were performed in R version 3.6.2 for Windows (The R Foundation).
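As an illustration of the statistical approach described above, the following is a minimal Python sketch, not the R code used in the study. It computes the descriptive statistics for one website and the Spearman correlation (Pearson correlation of tie-averaged ranks) for each website pair. The site names (`site_a`, `site_b`, `site_c`) and all ratings are hypothetical placeholders, and dropping physicians who lack a rating on either site of a pair is an assumption rather than a documented step. P values are not computed here.

```python
from itertools import combinations
from statistics import mean, median, quantiles, stdev


def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


def spearman_pairwise(x, y):
    """Assumption: skip physicians with no rating (None) on either site."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    xs, ys = zip(*pairs)
    return spearman(list(xs), list(ys))


def describe(ratings):
    """Descriptive statistics for one site's ratings (1-5 scale)."""
    q1, _, q3 = quantiles(ratings, n=4, method="inclusive")
    return {
        "mean": mean(ratings),
        "median": median(ratings),
        "sd": stdev(ratings),  # sample standard deviation
        "min": min(ratings),
        "max": max(ratings),
        "iqr": (q1, q3),
    }


# Hypothetical ratings: one entry per physician, None = no rating on that site.
ratings = {
    "site_a": [4.5, 3.0, 5.0, 4.0, 2.5, 4.8],
    "site_b": [4.4, 3.2, 4.9, 4.1, 2.0, 4.7],
    "site_c": [3.0, 4.5, 2.0, None, 5.0, 3.5],
}

for site, values in ratings.items():
    print(site, describe([v for v in values if v is not None]))

for first, second in combinations(ratings, 2):
    rho = spearman_pairwise(ratings[first], ratings[second])
    print(f"{first} vs {second}: rho = {rho:.3f}")
```

Spearman correlation compares rank order rather than raw scores, which suits bounded 1–5 star ratings that tend to cluster near the top of the scale.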

Results

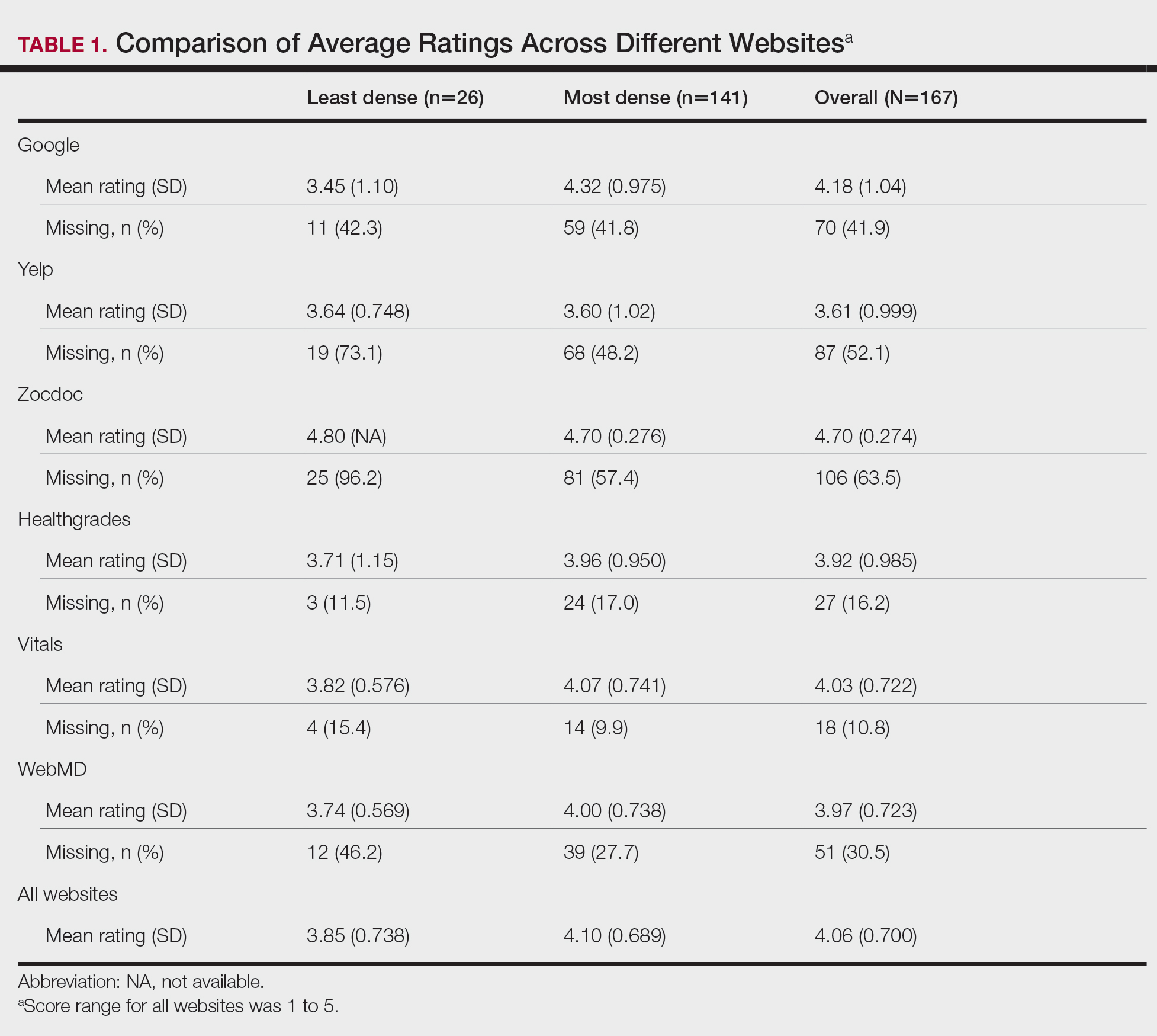

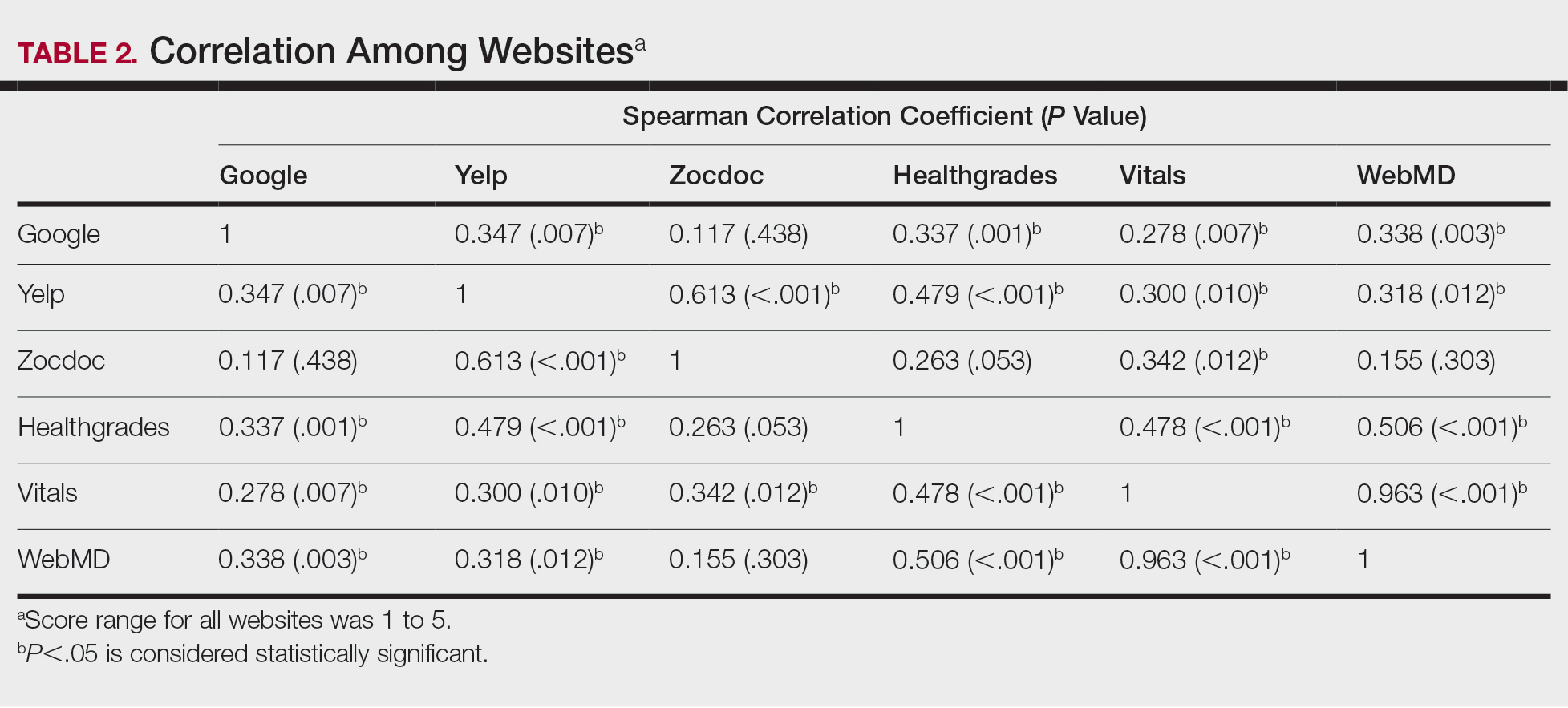

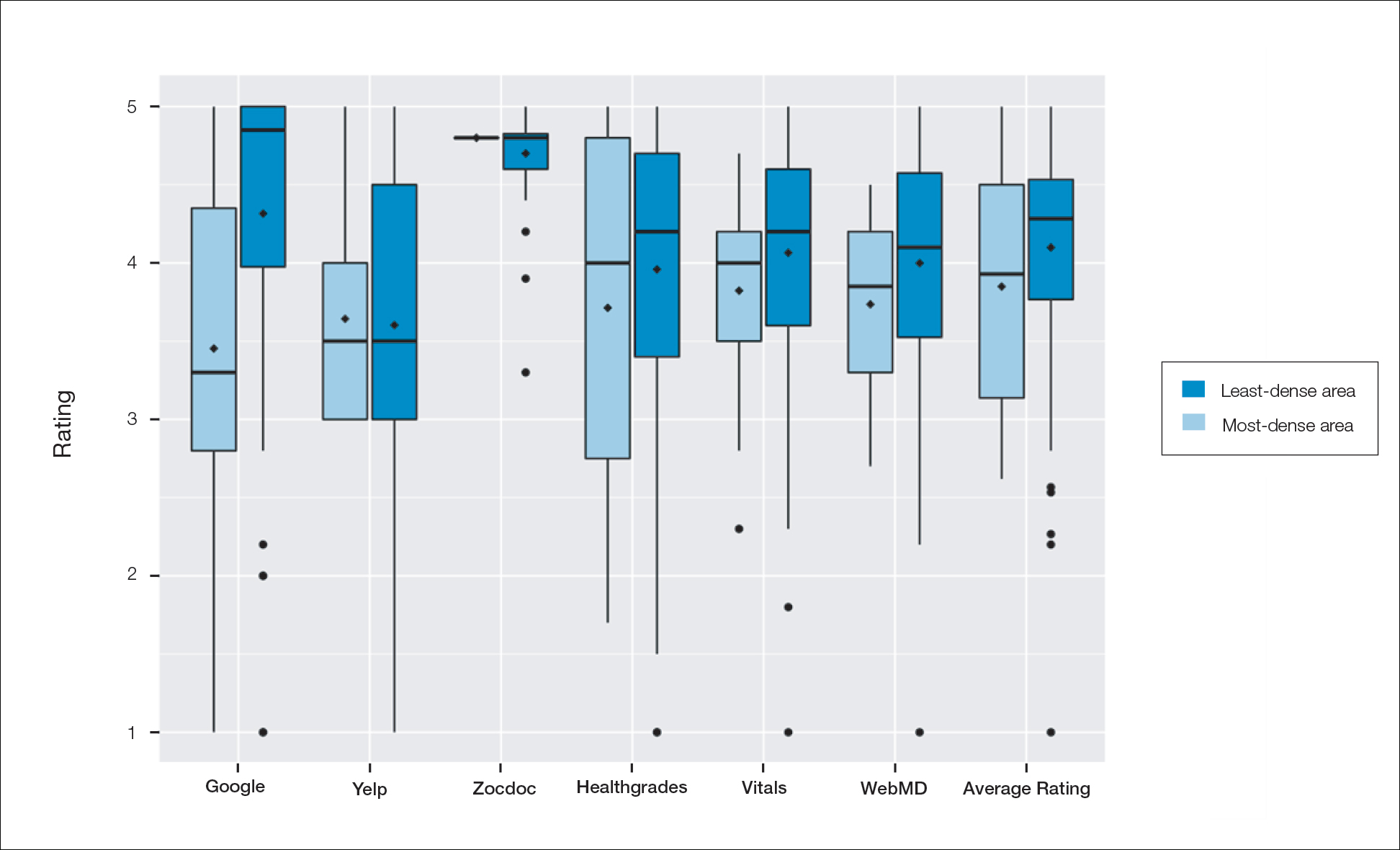

A total of 167 representative physicians were included in this analysis: 141 from the most dense areas and 26 from the least dense areas. The lowest average ratings for the entire sample and for the most dermatologist-dense areas were found on Yelp (3.61 and 3.60, respectively), and the lowest average rating in the least dermatologist-dense areas was found on Google (3.45) (Table 1). Correlation coefficients were lowest between Zocdoc and Healthgrades (0.263) and highest between Vitals and WebMD (0.963) (Table 2). Average ratings on the health care–specific sites were closer to the overall average (4.06) than those on the general consumer sites (eFigure).

Comment

Although dermatologist ratings on each site had a broad range, we found that patients typically reported negative interactions on general consumer websites rather than on health care–specific websites. When comparing the ratings of the same group of dermatologists across different sites, ratings on health care–specific sites had a higher degree of correlation: physician ratings were more similar between 2 health care–specific sites and less similar between a health care–specific site and a general consumer site. This pattern was consistent in both dermatologist-dense and dermatologist-poor areas, despite patients having varying levels of access to dermatologic care and medical resources and potentially different regional preferences for consumer websites. Taken together, these findings imply that health care–specific websites more consistently reflect overall patient sentiment.

Although one 2016 study comparing reviews of dermatology practices on Zocdoc and Yelp also demonstrated lower average ratings on Yelp,3 our study suggests that this trend is not isolated to these 2 sites but can be seen when comparing many health care–specific sites vs general consumer sites.

Our study compared ratings of dermatologists among popular websites to determine which sites are most representative of patient attitudes toward physicians. These findings are important because online reviews reflect the entire patient experience, not just the patient-physician interaction, which may explain why physician scores on standardized questionnaires, such as Press Ganey surveys, do not correlate well with their online reviews.4 In a study comparing 98 physicians with negative online ratings with 82 physicians in similar departments with positive ratings, there was no significant difference in scores on patient-physician interaction questions on the Press Ganey survey.5 However, physicians who received negative online reviews scored lower on Press Ganey questions related to nonphysician interactions (eg, office cleanliness, interactions with staff).

The current study was subject to several limitations. Our analysis included all physicians in our random selection without accounting for those physicians with a greater online presence who might be more cognizant of these ratings and try to manipulate them through a reputation-management company or public relations consultant.

Conclusion

Our study suggests that consumer websites are not primarily used by disgruntled patients wishing to express grievances; instead, on average, most physicians received positive reviews. Furthermore, ratings on health care–specific websites show a higher degree of concordance with one another and may more accurately reflect overall patient attitudes toward physicians than ratings on general consumer sites. Reviews from health care–specific sites may be more helpful than those from general consumer websites in allowing physicians to understand patient sentiment and improve patient experiences.