Training—Investigators completed several training steps throughout the study to ensure a shared understanding of each step and to reduce subjectivity. Before screening began, 2 investigators (C.H. and A.L.) completed the Introduction to Systematic Review and Meta-Analysis course offered by Johns Hopkins University.18 They also completed 2 days of online and in-person training on the definition and interpretation of the 9 most severe types of spin in abstracts of systematic reviews, as defined by Yavchitz et al.9 Finally, they were trained to use A MeaSurement Tool to Assess systematic Reviews (AMSTAR-2) to appraise the methodological quality of each systematic review. Our protocol outlines all training modules used.

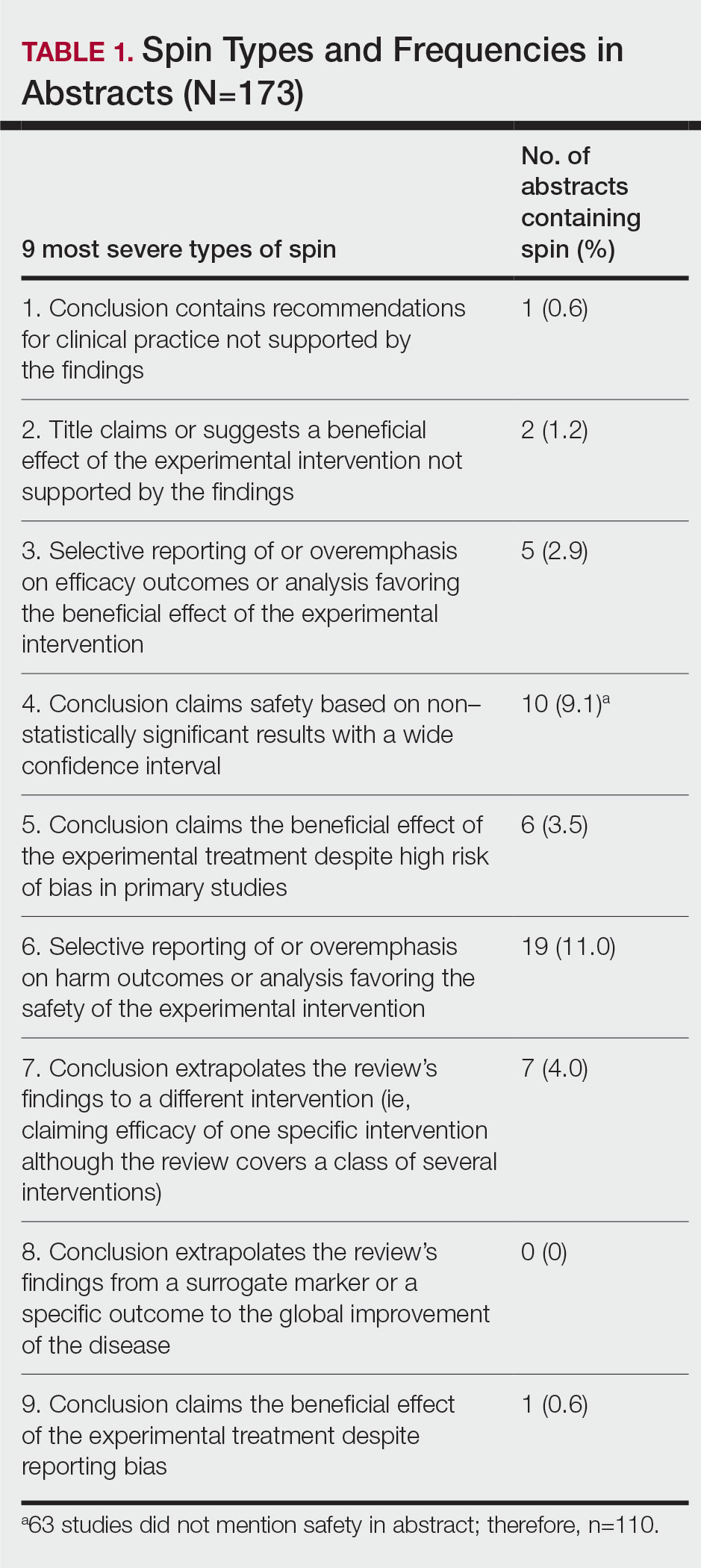

Data Extraction—The investigators (C.H. and A.L.) analyzed the included abstracts for the 9 most severe types of spin (Table 1). Data were extracted in a masked, duplicate fashion using a Google form. AMSTAR-2 was used to assess the methodological quality of each systematic review. AMSTAR-2 is a 16-item appraisal checklist for systematic reviews and meta-analyses; its overall confidence ratings range from critically low to high, depending on the methodological quality of the review. Across studies, the interrater reliability of AMSTAR-2 ratings has been moderate to high. Its construct validity coefficients have been high against both the original AMSTAR instrument (r=0.91) and the Risk of Bias in Systematic Reviews instrument (r=0.84).19
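AMSTAR-2 does not produce a numeric score; its developers instead describe an overall confidence rating derived from the number of critical flaws and non-critical weaknesses. The minimal sketch below encodes that published mapping. It assumes the appraiser has already decided which items count as critical and has tallied flaws and weaknesses (the function name and its count-based inputs are illustrative assumptions). It also ignores the developers' note that many non-critical weaknesses may justify downgrading a moderate rating to low.

```python
def amstar2_overall_confidence(critical_flaws: int, noncritical_weaknesses: int) -> str:
    """Map tallies of critical flaws and non-critical weaknesses to an
    AMSTAR-2 overall confidence rating (hypothetical helper)."""
    if critical_flaws < 0 or noncritical_weaknesses < 0:
        raise ValueError("counts must be non-negative")
    if critical_flaws > 1:
        return "critically low"  # more than 1 critical flaw
    if critical_flaws == 1:
        return "low"             # exactly 1 critical flaw
    if noncritical_weaknesses > 1:
        return "moderate"        # no critical flaws, >1 non-critical weakness
    return "high"                # no critical flaws, 0 or 1 non-critical weakness


for flaws, weaknesses in [(0, 0), (0, 1), (0, 3), (1, 0), (2, 5)]:
    print(flaws, weaknesses, amstar2_overall_confidence(flaws, weaknesses))
```

Because the rating depends only on counts, two reviews with very different individual item answers can receive the same overall rating.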

During data extraction from each included systematic review, the following additional items were obtained: (1) the date the review was received by the journal; (2) intervention type (ie, pharmacologic, nonpharmacologic, surgery, light therapy, mixed); (3) the funding source(s) for each systematic review (ie, industry, private, public, none, not mentioned, hospital, a combination of funding not including industry, a combination of funding including industry, other); (4) whether the journal submission guidelines suggested adherence to PRISMA guidelines; (5) whether the review discussed adherence to PRISMA14 or PRISMA for Abstracts20 (PRISMA-A); (6) the publishing journal’s 5-year impact factor; and (7) the country of the systematic review’s origin. When data extraction was complete, the investigators (C.H. and A.L.) were unmasked and met to resolve any disagreements by discussion. One of 2 authors (R.O. or M.V.) served as arbiter if C.H. and A.L. could not reach agreement.
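In masked duplicate extraction, each investigator fills in the same fields independently, and only fields whose values differ need discussion after unmasking. The sketch below shows that comparison step for one review. The field names and record values are hypothetical and illustrative only; they do not reflect the study's Google form or data.

```python
from typing import Any


def find_disagreements(extractor_a: dict[str, Any],
                       extractor_b: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return every field whose value differs between two independent extractions.

    A field present in only one record is reported with None for the other extractor.
    """
    fields = sorted(set(extractor_a) | set(extractor_b))
    return {
        field: (extractor_a.get(field), extractor_b.get(field))
        for field in fields
        if extractor_a.get(field) != extractor_b.get(field)
    }


# Hypothetical records for one review; field names are illustrative only.
record_a = {"intervention_type": "pharmacologic", "funding": "industry",
            "mentions_prisma": True, "country": "USA"}
record_b = {"intervention_type": "pharmacologic", "funding": "none",
            "mentions_prisma": True, "country": "USA"}

for field, (a, b) in find_disagreements(record_a, record_b).items():
    print(f"{field}: extractor A={a!r}, extractor B={b!r} -> resolve by discussion")
```

Fields that still disagree after discussion would then go to the arbiter.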

Statistical Analysis—We calculated frequencies and percentages to identify the most common types of spin in systematic reviews and meta-analyses. As stated in our protocol, one author (M.H.) prespecified a possible binary logistic regression and performed a power analysis to determine the required sample size. Our final sample of 173 reviews did not provide enough power for the multivariable logistic regression; therefore, we calculated unadjusted odds ratios to assess relationships between the presence of spin in abstracts and the various study characteristics. We used Stata 16.1 for all analyses, and all analytic decisions are documented in our protocol.
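The analyses themselves were run in Stata 16.1. As an independent illustration of what an unadjusted odds ratio measures, the Python sketch below computes one from a 2 × 2 table of a binary study characteristic against spin presence. It adds a Woolf (log-scale Wald) 95% CI and applies the Haldane-Anscombe correction (adding 0.5 to every cell) when a cell is zero. These method choices and the counts are assumptions for illustration, not study data. Stata commands may use other CI methods, so results need not match Stata output exactly.

```python
import math


def unadjusted_odds_ratio(a: int, b: int, c: int, d: int,
                          z: float = 1.96) -> tuple[float, float, float]:
    """Odds ratio and Woolf 95% CI for the 2 x 2 table

                          spin   no spin
        exposed             a       b
        not exposed         c       d

    If any cell is zero, 0.5 is added to every cell (Haldane-Anscombe correction).
    """
    if min(a, b, c, d) < 0:
        raise ValueError("cell counts must be non-negative")
    if 0 in (a, b, c, d):
        a, b, c, d = (x + 0.5 for x in (a, b, c, d))
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # SE of ln(OR)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts for illustration only (not the study's data).
or_, lo, hi = unadjusted_odds_ratio(a=10, b=30, c=27, d=106)
print(f"OR = {or_:.2f} (95% CI, {lo:.2f}-{hi:.2f})")
```

An interval that includes 1 corresponds to no statistically significant association at the .05 level.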

Results

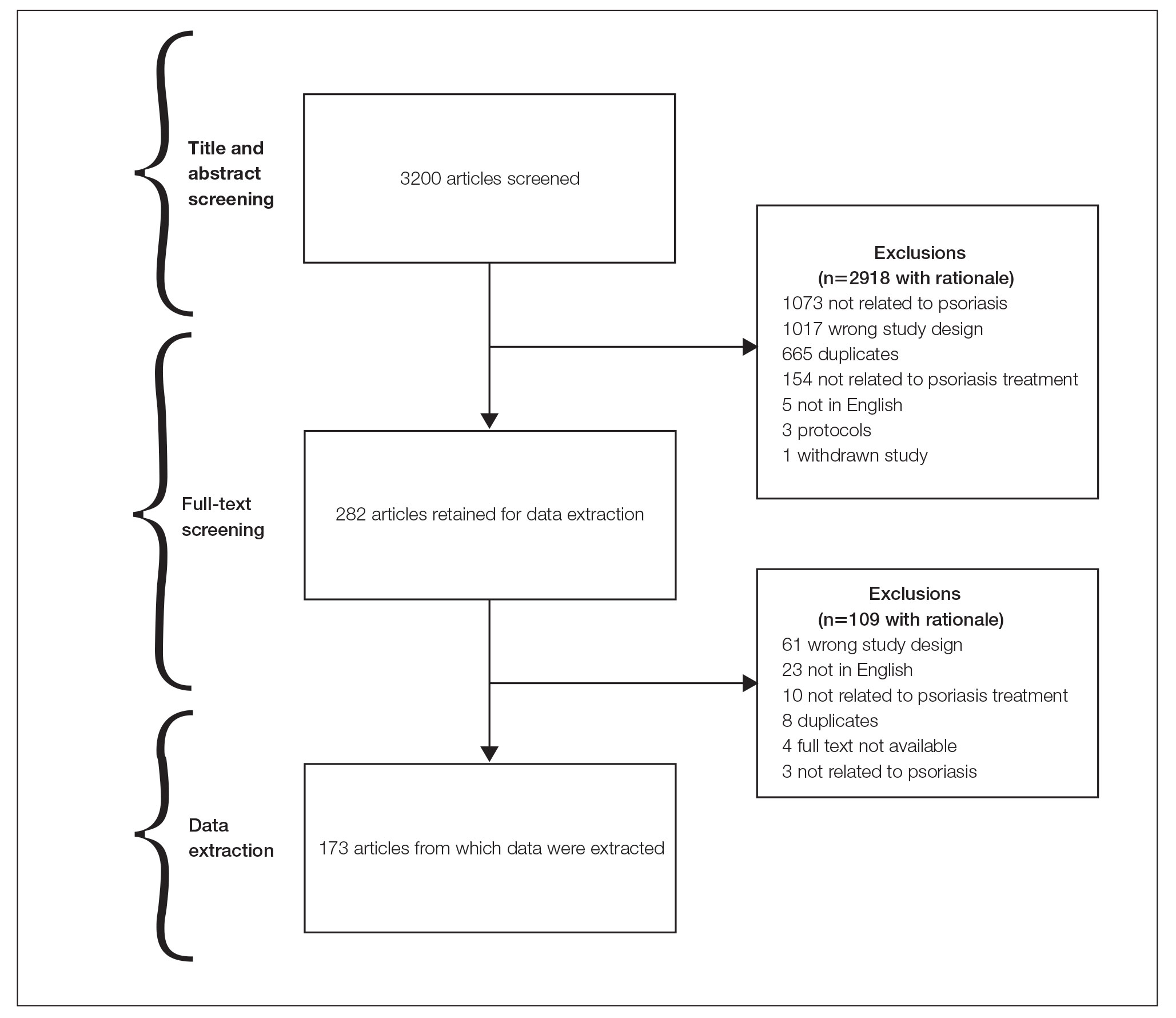

General Characteristics—Our systematic search of MEDLINE and Embase returned 3200 articles, from which 665 duplicates were removed. An additional 2253 articles were excluded during title and abstract screening, and full-text screening excluded another 109 articles. In total, 173 systematic reviews were included for data extraction. Figure 2 illustrates the screening process, including the reasons for all exclusions.
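The screening counts can be checked by successive subtraction. The intermediate totals (records screened after deduplication and articles assessed in full text) are derived here rather than stated in the text. The sketch assumes every article that passed title and abstract screening went on to full-text review; the function and key names are hypothetical.

```python
def screening_flow(retrieved: int, duplicates: int,
                   excluded_title_abstract: int,
                   excluded_full_text: int) -> dict[str, int]:
    """Derive intermediate and final counts for a PRISMA-style flow (hypothetical helper)."""
    after_dedup = retrieved - duplicates                 # records screened by title/abstract
    full_text = after_dedup - excluded_title_abstract    # articles assessed in full text
    included = full_text - excluded_full_text            # reviews included for extraction
    return {"screened": after_dedup, "full_text_assessed": full_text, "included": included}


print(screening_flow(3200, 665, 2253, 109))
# {'screened': 2535, 'full_text_assessed': 282, 'included': 173}
```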

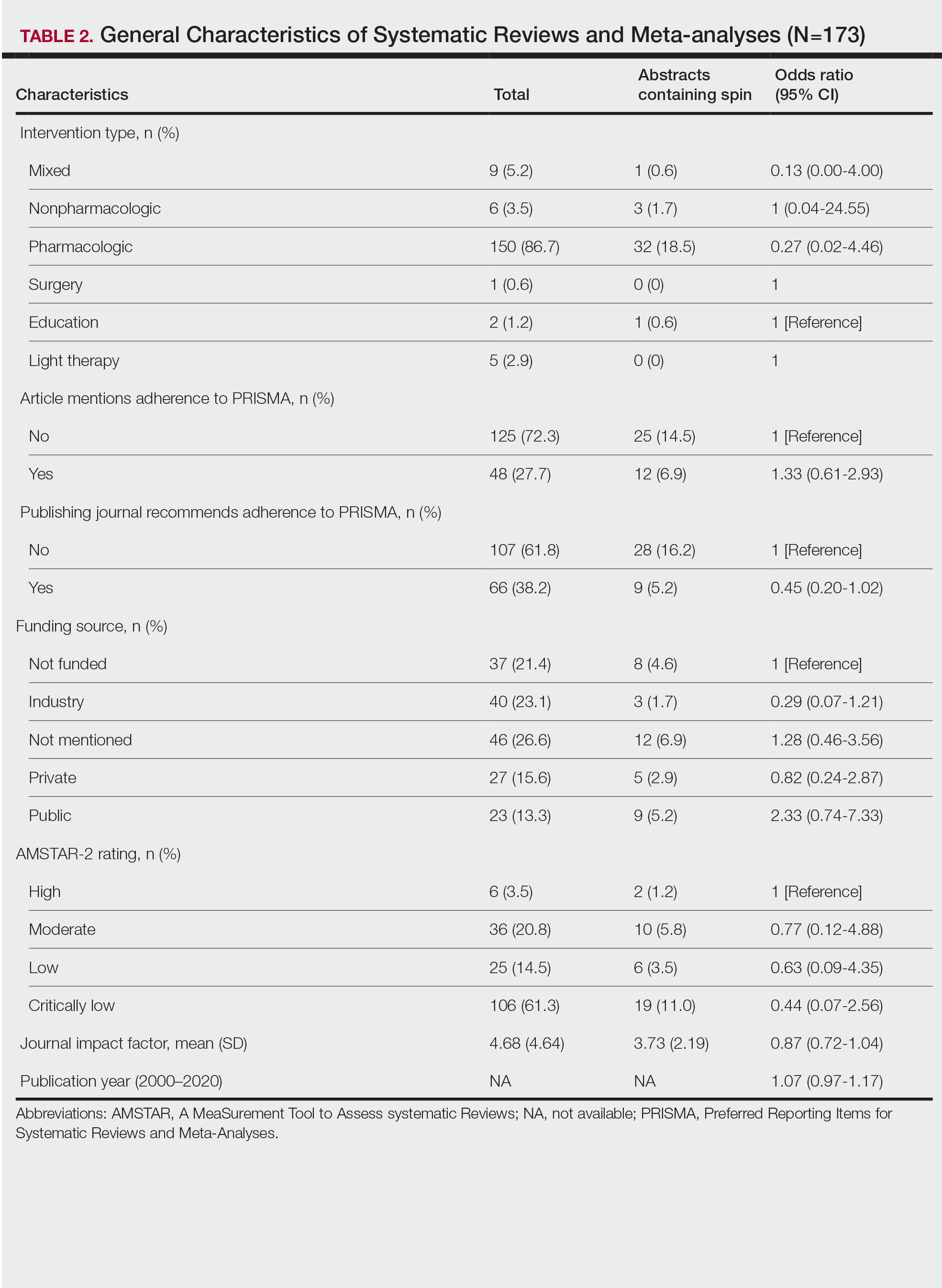

Of the 173 included systematic reviews and meta-analyses, 150 (86.7%) focused on pharmacologic interventions. Most reviews did not mention adherence to PRISMA guidelines (125/173 [72.3%]), and the publishing journal recommended PRISMA adherence for only 66 (38.2%) of the included articles. Among the articles that received funding (90/173 [52.0%]), industry was the most common source (40/90 [44.4%]), followed by private (27/90 [30.0%]) and public sources (23/90 [25.6%]). Of the remaining 83 articles, 46 did not include a funding statement (46/83 [55.4%]) and 37 reported no funding (37/83 [44.6%]). The mean (SD) 5-year impact factor of the publishing journals was 4.68 (4.64). The systematic reviews originated from 31 countries. All reviews were received by their respective journals between 2000 and 2020 (Table 2).
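This paragraph uses 3 different denominators: 173 for all reviews, 90 for funded reviews, and 83 for the rest. A small formatting helper makes the denominator explicit in every percentage. It is a hypothetical convenience function that mirrors the "n/N [p%]" style used in the text and rounds to 1 decimal place.

```python
def pct(n: int, total: int) -> str:
    """Format a count as 'n/total [p%]' with one decimal place."""
    if total <= 0:
        raise ValueError("total must be positive")
    return f"{n}/{total} [{100 * n / total:.1f}%]"


funded = 90
unfunded_or_unstated = 173 - funded  # 83 reviews without a reported funding source
print(pct(funded, 173))               # 90/173 [52.0%]
print(pct(40, funded))                # industry: 40/90 [44.4%]
print(pct(27, funded))                # private: 27/90 [30.0%]
print(pct(46, unfunded_or_unstated))  # no funding statement: 46/83 [55.4%]
```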

Abstracts Containing Spin—We found that 37 (21.4%) abstracts of systematic reviews of psoriasis treatments contained at least 1 type of spin. Because some abstracts contained more than 1 type, we detected 51 instances of spin in total. Spin type 6 (selective reporting of or overemphasis on harm outcomes or analysis favoring the safety of the experimental intervention) was the most common type of spin, found in 19 of 173 abstracts (11.0%). The most severe type of spin, type 1 (conclusion contains recommendations for clinical practice not supported by the findings), occurred in only 1 abstract (0.6%). Spin type 8 did not occur in any abstract (Table 1). There was no statistically significant association between the presence of spin and any study characteristic (Table 2).
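An abstract can contain several spin types, so "abstracts with spin" (37) and "instances of spin" (51) are different counts. The sketch below tallies both, plus per-type frequencies, from a mapping of abstract ID to the set of spin types found in that abstract. The data structure and toy values are hypothetical. Storing a set per abstract assumes each type is counted at most once per abstract, which is an assumption about the counting rule.

```python
from collections import Counter


def summarize_spin(spin_by_abstract: dict[str, set[int]]) -> tuple[int, int, Counter]:
    """Return (abstracts with >=1 spin type, total spin instances, count per spin type)."""
    with_spin = sum(1 for types in spin_by_abstract.values() if types)
    instances = sum(len(types) for types in spin_by_abstract.values())
    per_type = Counter(t for types in spin_by_abstract.values() for t in types)
    return with_spin, instances, per_type


# Hypothetical toy data: abstract ID -> set of spin types (1-9) found in it.
toy = {"A1": {6}, "A2": set(), "A3": {6, 3}, "A4": set(), "A5": {1}}
with_spin, instances, per_type = summarize_spin(toy)
print(with_spin, instances, per_type.most_common(1))
```

Dividing `with_spin` by the number of abstracts gives the proportion of abstracts with any spin. Dividing a single `per_type` count by the same total gives that type's frequency.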