User login

How Old Are You? Stand on One Leg and I’ll Tell You

This transcript has been edited for clarity.

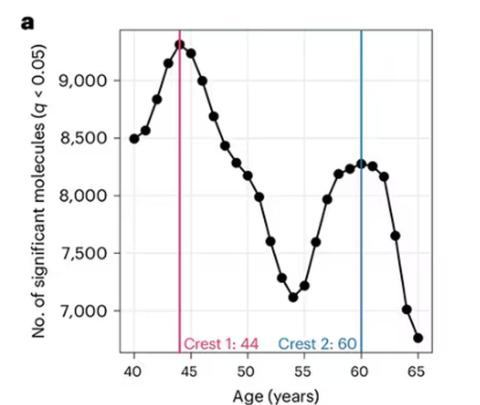

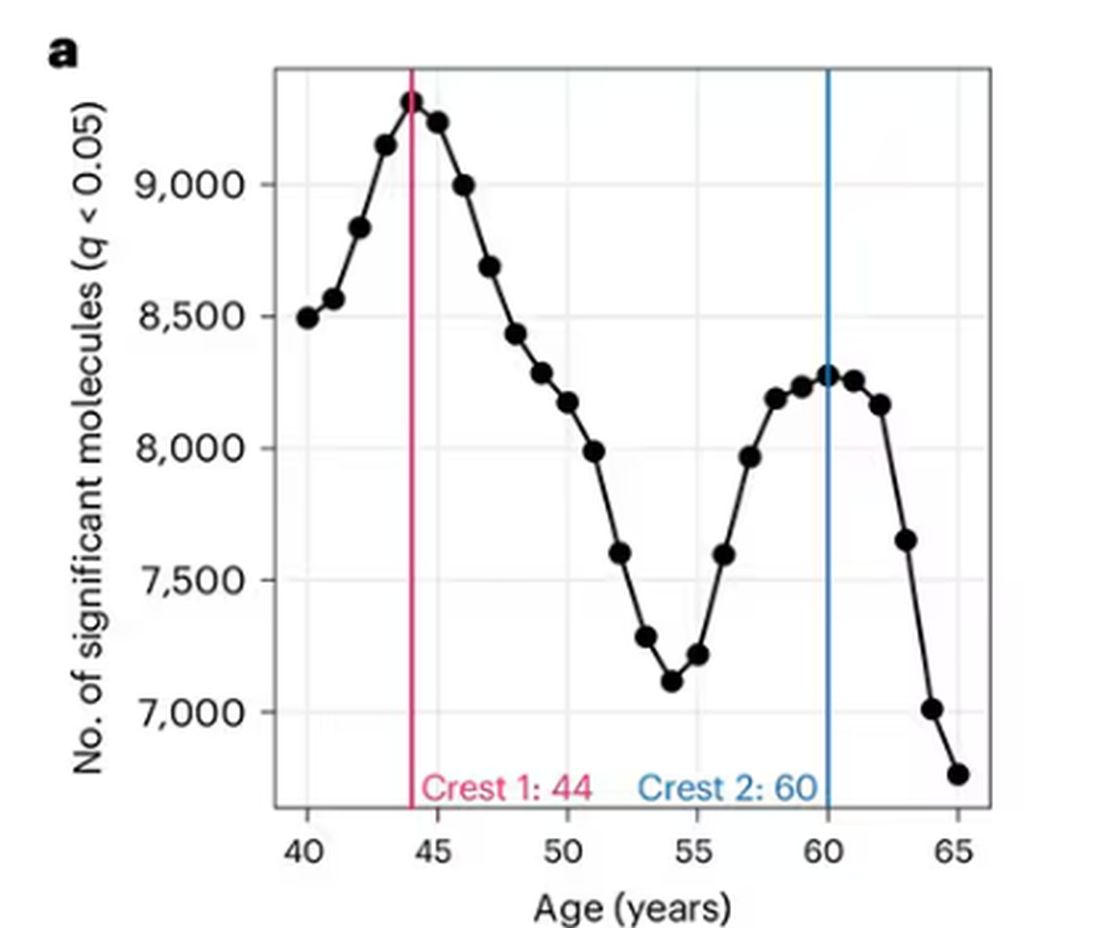

So I was lying in bed the other night, trying to read my phone, and started complaining to my wife about how my vision keeps getting worse, and then how stiff I feel when I wake up in the morning, and how a recent injury is taking too long to heal, and she said, “Well, yeah. You’re 44. That’s when things start to head downhill.”

And I was like, “Forty-four? That seems very specific. I thought 50 was what people complain about.” And she said, “No, it’s a thing — 44 years old and 60 years old. There’s a drop-off there.”

And you know what? She was right.

A study, “Nonlinear dynamics of multi-omics profiles during human aging,” published in Nature Aging in August 2024, analyzed a ton of proteins and metabolites in people of various ages and found, when you put it all together, that I should know better than to doubt my brilliant spouse.

But deep down, I believe the cliché that age is just a number. I don’t particularly care about being 44, or turning 50 or 60. I care about how my body and brain are aging. If I can be a happy, healthy, 80-year-old in full command of my faculties, I would consider that a major win no matter what the calendar says.

So I’m always interested in ways to quantify how my body is aging, independent of how many birthdays I have passed. And, according to a new study, there’s actually a really easy way to do this: Just stand on one leg.

The surprising results come from “Age-related changes in gait, balance, and strength parameters: A cross-sectional study,” appearing in PLOS One, which analyzed 40 individuals — half under age 65 and half over age 65 — across a variety of domains of strength, balance, and gait. The conceit of the study? We all know that things like strength and balance worsen over time, but what worsens fastest? What might be the best metric to tell us how our bodies are aging?

To that end, you have a variety of correlations between various metrics and calendar age.

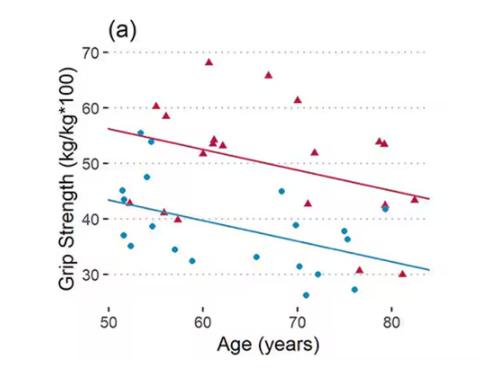

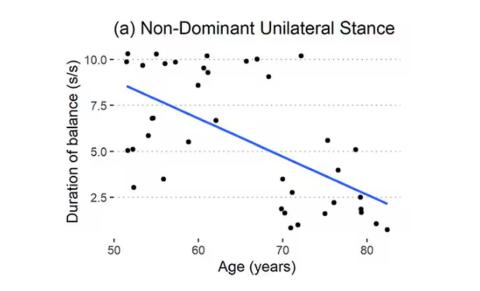

As age increases, grip strength goes down. Men (inexplicably in pink) have higher grip strength overall, and women (confusingly in blue) lower. Somewhat less strong correlations were seen for knee strength.

What about balance?

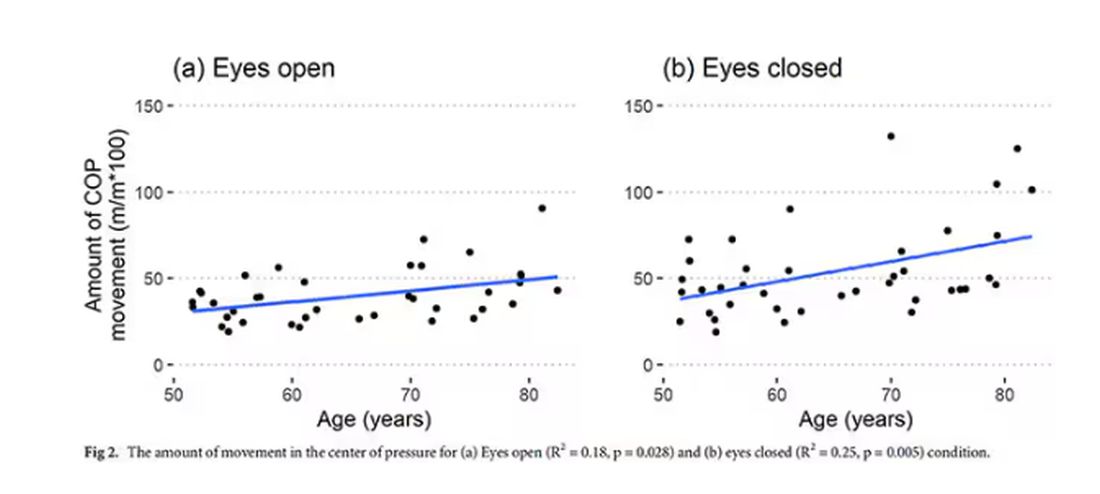

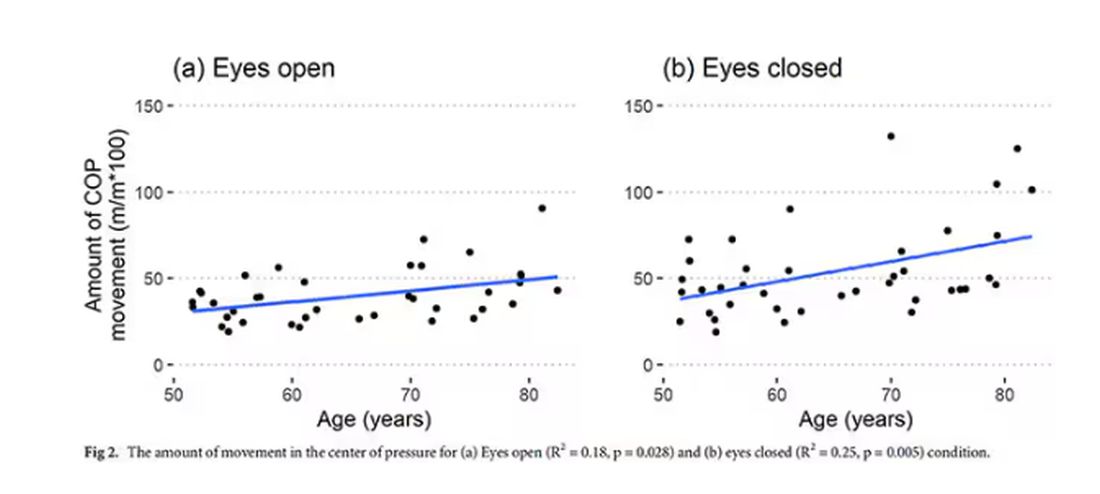

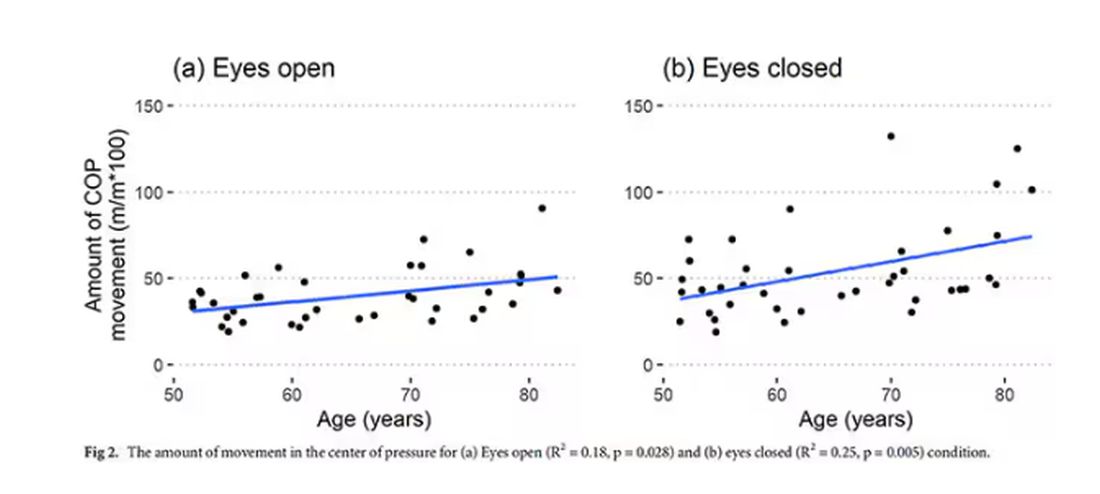

To assess this, the researchers had the participants stand on a pressure plate. In one scenario, they did this with eyes open, and the next with eyes closed. They then measured how much the pressure varied around the center of the individual on the plate — basically, how much the person swayed while they were standing there.

Sway increased as age increased. Sway increased a bit more with eyes closed than with eyes open.

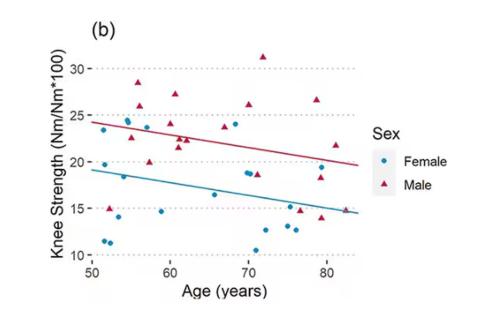

But the strongest correlation between any of these metrics and age was a simple one: How long can you stand on one leg?

Particularly for the nondominant leg, what you see here is a pretty dramatic drop-off in balance time around age 65, with younger people able to do 10 seconds with ease and some older people barely being able to make it to 2.

Of course, I had to try this for myself. And as I was standing around on one leg, it became clear to me exactly why this might be a good metric. It really integrates balance and strength in a way that the other tests don’t: balance, clearly, since you have to stay vertical over a relatively small base; but strength as well, because, well, one leg is holding up all the rest of you. You do feel it after a while.

So this metric passes the smell test to me, at least as a potential proxy for age-related physical decline.

But I should be careful to note that this was a cross-sectional study; the researchers looked at various people who were all different ages, not the same people over time to watch how these things change as they aged.

Also, the use of the correlation coefficient in graphs like this implies a certain linear relationship between age and standing-on-one-foot time. The raw data — the points on this graph — don’t appear that linear to me. As I mentioned above, it seems like there might be a bit of a sharp drop-off somewhere in the mid-60s. That means that we may not be able to use this as a sensitive test for aging that slowly changes as your body gets older. It might be that you’re able to essentially stand on one leg as long as you want until, one day, you can’t. That gives us less warning and less to act on.

And finally, we don’t know that changing this metric will change your health for the better. I’m sure a good physiatrist or physical therapist could design some exercises to increase any of our standing-on-one leg times. And no doubt, with practice, you could get your numbers way up. But that doesn’t necessarily mean you’re healthier. It’s like “teaching to the test”; you might score better on the standardized exam but you didn’t really learn the material.

So I am not adding one-leg standing to my daily exercise routine. But I won’t lie and tell you that, from time to time, and certainly on my 60th birthday, you may find me standing like a flamingo with a stopwatch in my hand.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

This transcript has been edited for clarity.

So I was lying in bed the other night, trying to read my phone, and started complaining to my wife about how my vision keeps getting worse, and then how stiff I feel when I wake up in the morning, and how a recent injury is taking too long to heal, and she said, “Well, yeah. You’re 44. That’s when things start to head downhill.”

And I was like, “Forty-four? That seems very specific. I thought 50 was what people complain about.” And she said, “No, it’s a thing — 44 years old and 60 years old. There’s a drop-off there.”

And you know what? She was right.

A study, “Nonlinear dynamics of multi-omics profiles during human aging,” published in Nature Aging in August 2024, analyzed a ton of proteins and metabolites in people of various ages and found, when you put it all together, that I should know better than to doubt my brilliant spouse.

But deep down, I believe the cliché that age is just a number. I don’t particularly care about being 44, or turning 50 or 60. I care about how my body and brain are aging. If I can be a happy, healthy, 80-year-old in full command of my faculties, I would consider that a major win no matter what the calendar says.

So I’m always interested in ways to quantify how my body is aging, independent of how many birthdays I have passed. And, according to a new study, there’s actually a really easy way to do this: Just stand on one leg.

The surprising results come from “Age-related changes in gait, balance, and strength parameters: A cross-sectional study,” appearing in PLOS One, which analyzed 40 individuals — half under age 65 and half over age 65 — across a variety of domains of strength, balance, and gait. The conceit of the study? We all know that things like strength and balance worsen over time, but what worsens fastest? What might be the best metric to tell us how our bodies are aging?

To that end, you have a variety of correlations between various metrics and calendar age.

As age increases, grip strength goes down. Men (inexplicably in pink) have higher grip strength overall, and women (confusingly in blue) lower. Somewhat less strong correlations were seen for knee strength.

What about balance?

To assess this, the researchers had the participants stand on a pressure plate. In one scenario, they did this with eyes open, and the next with eyes closed. They then measured how much the pressure varied around the center of the individual on the plate — basically, how much the person swayed while they were standing there.

Sway increased as age increased. Sway increased a bit more with eyes closed than with eyes open.

But the strongest correlation between any of these metrics and age was a simple one: How long can you stand on one leg?

Particularly for the nondominant leg, what you see here is a pretty dramatic drop-off in balance time around age 65, with younger people able to do 10 seconds with ease and some older people barely being able to make it to 2.

Of course, I had to try this for myself. And as I was standing around on one leg, it became clear to me exactly why this might be a good metric. It really integrates balance and strength in a way that the other tests don’t: balance, clearly, since you have to stay vertical over a relatively small base; but strength as well, because, well, one leg is holding up all the rest of you. You do feel it after a while.

So this metric passes the smell test to me, at least as a potential proxy for age-related physical decline.

But I should be careful to note that this was a cross-sectional study; the researchers looked at various people who were all different ages, not the same people over time to watch how these things change as they aged.

Also, the use of the correlation coefficient in graphs like this implies a certain linear relationship between age and standing-on-one-foot time. The raw data — the points on this graph — don’t appear that linear to me. As I mentioned above, it seems like there might be a bit of a sharp drop-off somewhere in the mid-60s. That means that we may not be able to use this as a sensitive test for aging that slowly changes as your body gets older. It might be that you’re able to essentially stand on one leg as long as you want until, one day, you can’t. That gives us less warning and less to act on.

And finally, we don’t know that changing this metric will change your health for the better. I’m sure a good physiatrist or physical therapist could design some exercises to increase any of our standing-on-one leg times. And no doubt, with practice, you could get your numbers way up. But that doesn’t necessarily mean you’re healthier. It’s like “teaching to the test”; you might score better on the standardized exam but you didn’t really learn the material.

So I am not adding one-leg standing to my daily exercise routine. But I won’t lie and tell you that, from time to time, and certainly on my 60th birthday, you may find me standing like a flamingo with a stopwatch in my hand.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

This transcript has been edited for clarity.

So I was lying in bed the other night, trying to read my phone, and started complaining to my wife about how my vision keeps getting worse, and then how stiff I feel when I wake up in the morning, and how a recent injury is taking too long to heal, and she said, “Well, yeah. You’re 44. That’s when things start to head downhill.”

And I was like, “Forty-four? That seems very specific. I thought 50 was what people complain about.” And she said, “No, it’s a thing — 44 years old and 60 years old. There’s a drop-off there.”

And you know what? She was right.

A study, “Nonlinear dynamics of multi-omics profiles during human aging,” published in Nature Aging in August 2024, analyzed a ton of proteins and metabolites in people of various ages and found, when you put it all together, that I should know better than to doubt my brilliant spouse.

But deep down, I believe the cliché that age is just a number. I don’t particularly care about being 44, or turning 50 or 60. I care about how my body and brain are aging. If I can be a happy, healthy, 80-year-old in full command of my faculties, I would consider that a major win no matter what the calendar says.

So I’m always interested in ways to quantify how my body is aging, independent of how many birthdays I have passed. And, according to a new study, there’s actually a really easy way to do this: Just stand on one leg.

The surprising results come from “Age-related changes in gait, balance, and strength parameters: A cross-sectional study,” appearing in PLOS One, which analyzed 40 individuals — half under age 65 and half over age 65 — across a variety of domains of strength, balance, and gait. The conceit of the study? We all know that things like strength and balance worsen over time, but what worsens fastest? What might be the best metric to tell us how our bodies are aging?

To that end, you have a variety of correlations between various metrics and calendar age.

As age increases, grip strength goes down. Men (inexplicably in pink) have higher grip strength overall, and women (confusingly in blue) lower. Somewhat less strong correlations were seen for knee strength.

What about balance?

To assess this, the researchers had the participants stand on a pressure plate. In one scenario, they did this with eyes open, and the next with eyes closed. They then measured how much the pressure varied around the center of the individual on the plate — basically, how much the person swayed while they were standing there.

Sway increased as age increased. Sway increased a bit more with eyes closed than with eyes open.

But the strongest correlation between any of these metrics and age was a simple one: How long can you stand on one leg?

Particularly for the nondominant leg, what you see here is a pretty dramatic drop-off in balance time around age 65, with younger people able to do 10 seconds with ease and some older people barely being able to make it to 2.

Of course, I had to try this for myself. And as I was standing around on one leg, it became clear to me exactly why this might be a good metric. It really integrates balance and strength in a way that the other tests don’t: balance, clearly, since you have to stay vertical over a relatively small base; but strength as well, because, well, one leg is holding up all the rest of you. You do feel it after a while.

So this metric passes the smell test to me, at least as a potential proxy for age-related physical decline.

But I should be careful to note that this was a cross-sectional study; the researchers looked at various people who were all different ages, not the same people over time to watch how these things change as they aged.

Also, the use of the correlation coefficient in graphs like this implies a certain linear relationship between age and standing-on-one-foot time. The raw data — the points on this graph — don’t appear that linear to me. As I mentioned above, it seems like there might be a bit of a sharp drop-off somewhere in the mid-60s. That means that we may not be able to use this as a sensitive test for aging that slowly changes as your body gets older. It might be that you’re able to essentially stand on one leg as long as you want until, one day, you can’t. That gives us less warning and less to act on.

And finally, we don’t know that changing this metric will change your health for the better. I’m sure a good physiatrist or physical therapist could design some exercises to increase any of our standing-on-one leg times. And no doubt, with practice, you could get your numbers way up. But that doesn’t necessarily mean you’re healthier. It’s like “teaching to the test”; you might score better on the standardized exam but you didn’t really learn the material.

So I am not adding one-leg standing to my daily exercise routine. But I won’t lie and tell you that, from time to time, and certainly on my 60th birthday, you may find me standing like a flamingo with a stopwatch in my hand.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

Time-Restricted Eating Is Not a Metabolic Magic Bullet

This transcript has been edited for clarity.

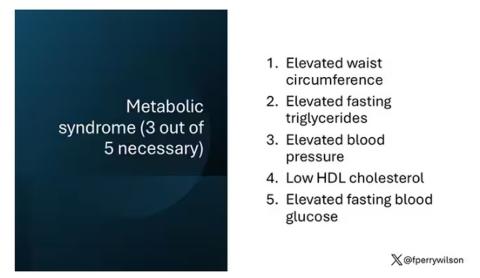

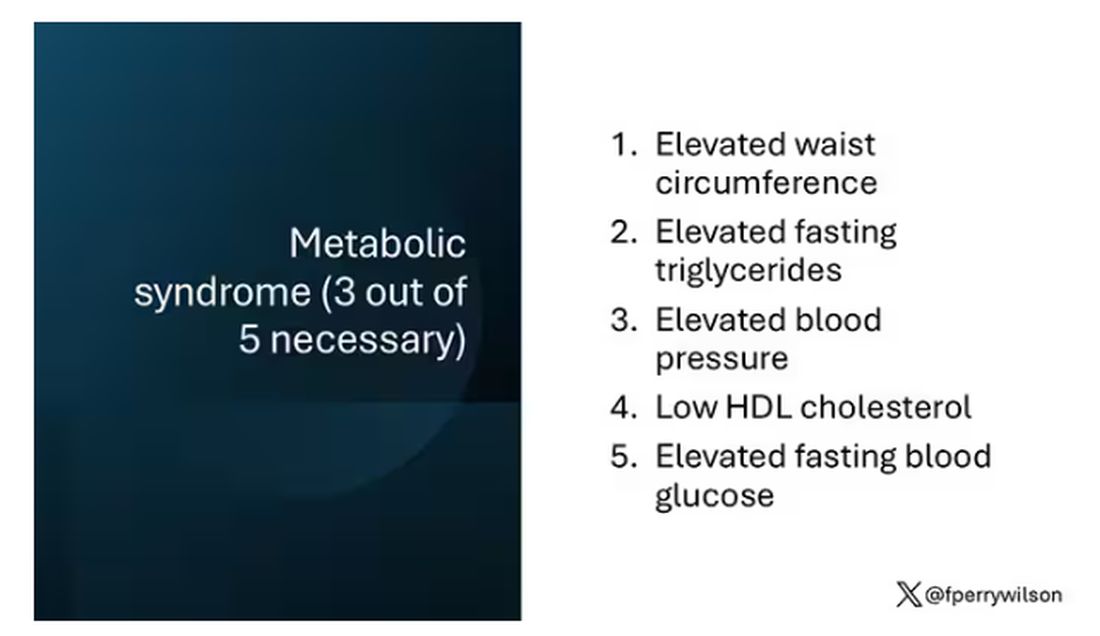

One out of three American adults — about 100 million people in this country — have the metabolic syndrome. I’m showing you the official criteria here, but essentially this is a syndrome of insulin resistance and visceral adiposity that predisposes us to a host of chronic diseases such as diabetes, heart disease, and even dementia.

The metabolic syndrome is, fundamentally, a lifestyle disease. There is a direct line between our dietary habits and the wide availability of carbohydrate-rich, highly processed foods, and the rise in the syndrome in the population.

A saying I learned from one of my epidemiology teachers comes to mind: “Lifestyle diseases require lifestyle reinterventions.” But you know what? I’m not so sure anymore.

I’ve been around long enough to see multiple dietary fads come and go with varying efficacy. I grew up in the low-fat era, probably the most detrimental time to our national health as food manufacturers started replacing fats with carbohydrates, driving much of the problem we’re faced with today.

But I was also around for the Atkins diet and the low-carb craze — a healthier approach, all things being equal. And I’ve seen variants of these: the paleo diet (essentially a low-carb, high-protein diet based on minimally processed foods) and the Mediterranean diet, which sought to replace some percentage of fats with healthier fats.

And, of course, there is time-restricted eating.

Time-restricted eating, a variant of intermittent fasting, has the advantage of being very simple. No cookbooks, no recipes. Eat what you want — but limit it to certain hours in the day, ideally a window of less than 10 hours, such as 8 a.m. to 6 p.m.

When it comes to weight loss, the diets that work tend to work because they reduce calorie intake. I know, people will get angry about this, but thermodynamics is not just a good idea, it’s the law.

But weight loss is not the only reason we need to eat healthier. What we eat can impact our health in multiple ways; certain foods lead to more atherosclerosis, more inflammation, increased strain on the kidney and liver, and can affect our glucose homeostasis.

So I was really interested when I saw this article, “Time-Restricted Eating in Adults With Metabolic Syndrome,” appearing in Annals of Internal Medicine October 1, which examined the effect of time-restricted eating on the metabolic syndrome itself. Could this lifestyle intervention cure this lifestyle disease?

In the study, 108 individuals, all of whom had the metabolic syndrome but not full-blown diabetes, were randomized to usual care — basically, nutrition education — vs time-restricted eating. In that group, participants were instructed to reduce their window of eating by at least 4 hours to achieve an 8- to 10-hour eating window. The groups were followed for 3 months.

Now, before we get to the results, it’s important to remember that the success of a lifestyle intervention trial is quite dependent on how well people adhere to the lifestyle intervention. Time-restricted eating is not as easy as taking a pill once a day.

The researchers had participants log their consumption using a smartphone app to confirm whether they were adhering to that restricted eating window.

Broadly speaking, they did. At baseline, both groups had an eating window of about 14 hours a day — think 7 a.m. to 9 p.m. The intervention group reduced that to just under 10 hours, with 10% of days falling outside of the target window.

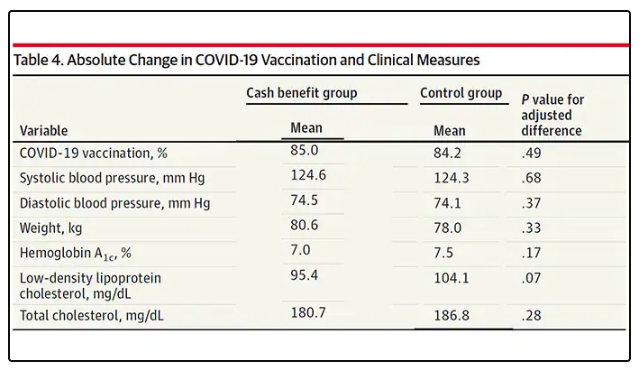

Lifestyle change achieved, the primary outcome was the change in hemoglobin A1c at 3 months. A1c integrates the serum glucose over time and is thus a good indicator of the success of the intervention in terms of insulin resistance. But the effect was, honestly, disappointing.

Technically, the time-restricted-eating group had a greater A1c change than the control group — by 0.1 percentage points. On average, they went from a baseline A1c of 5.87 to a 3-month A1c of 5.75.

Other metabolic syndrome markers were equally lackluster: no difference in fasting glucose, mean glucose, or fasting insulin.

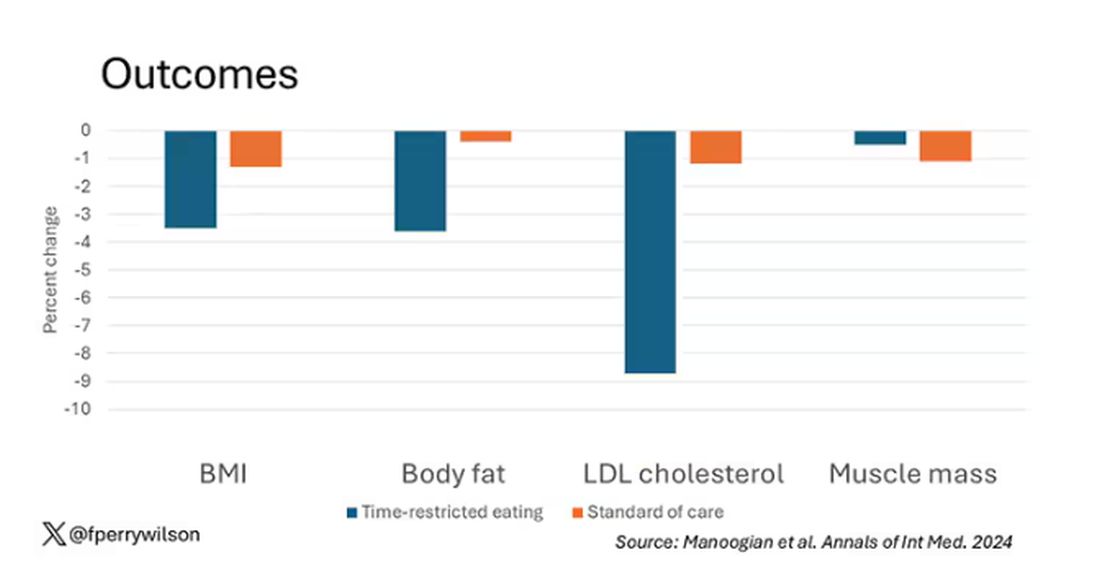

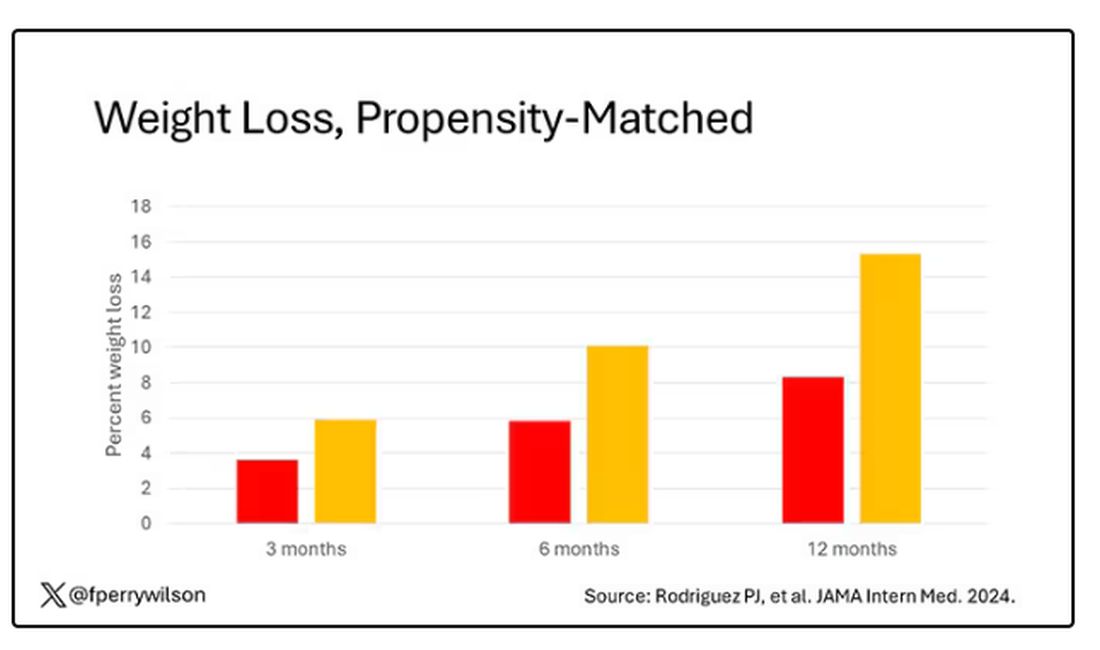

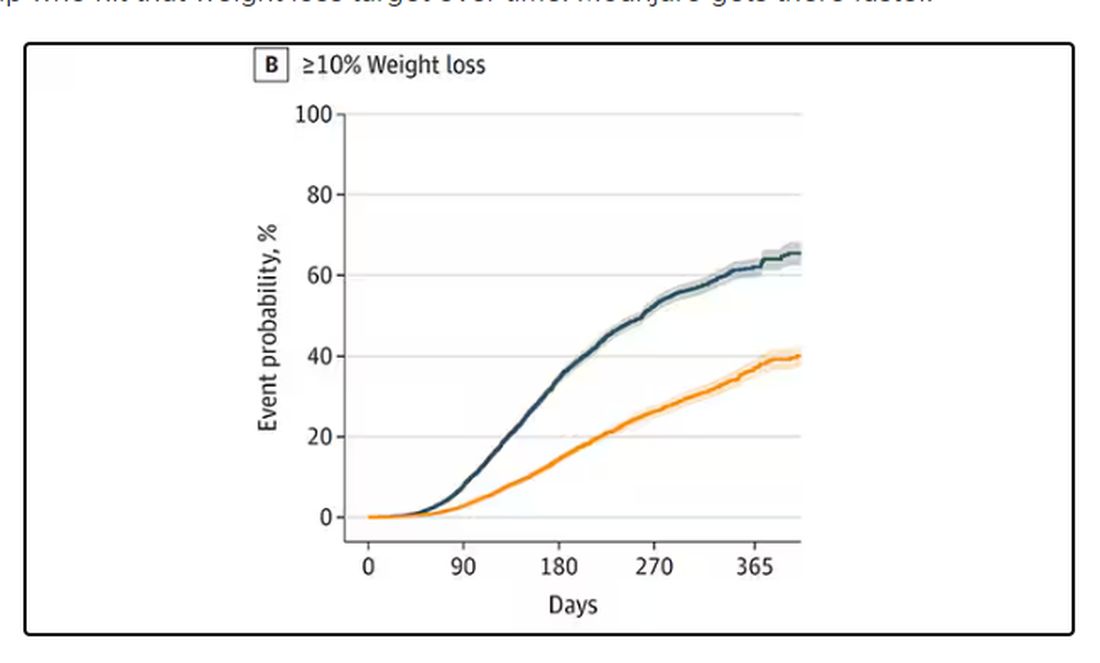

There was some weight change. The control group, which got that dietary education, lost 1.5% of body weight over the 3 months. The time-restricted-eating group lost 3.3% — about 7 pounds, which is reasonable.

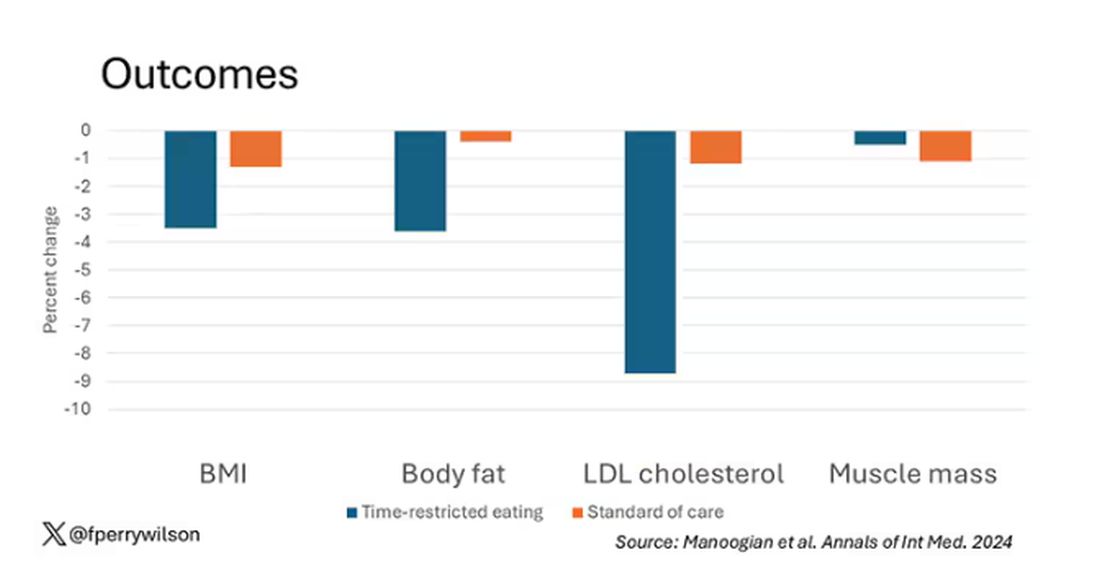

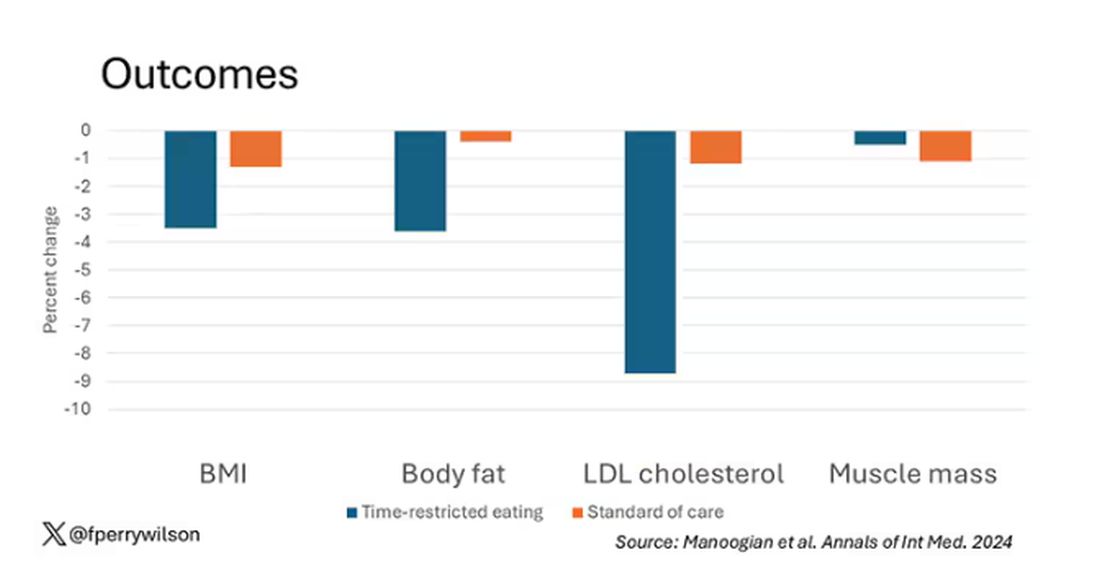

With that weight loss came statistically significant, albeit modest improvements in BMI, body fat percentage, and LDL cholesterol.

Of interest, despite the larger weight loss in the intermittent-fasting group, there was no difference in muscle mass loss, which is encouraging.

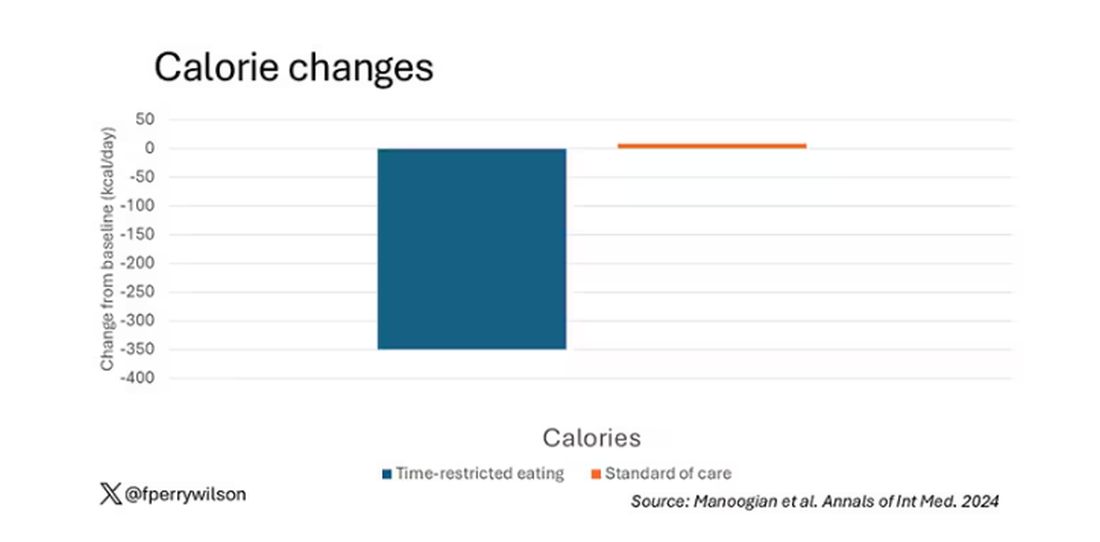

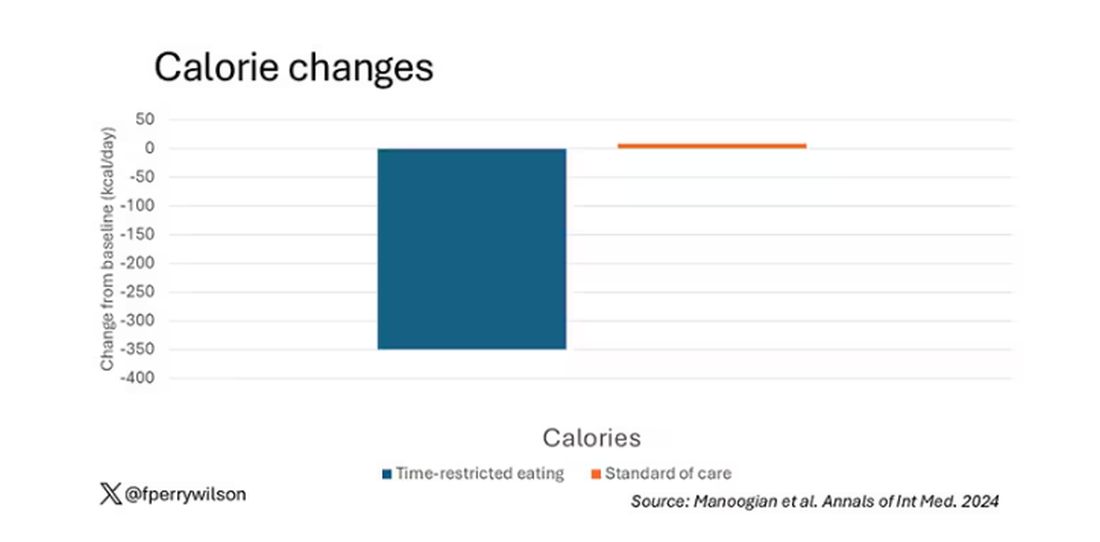

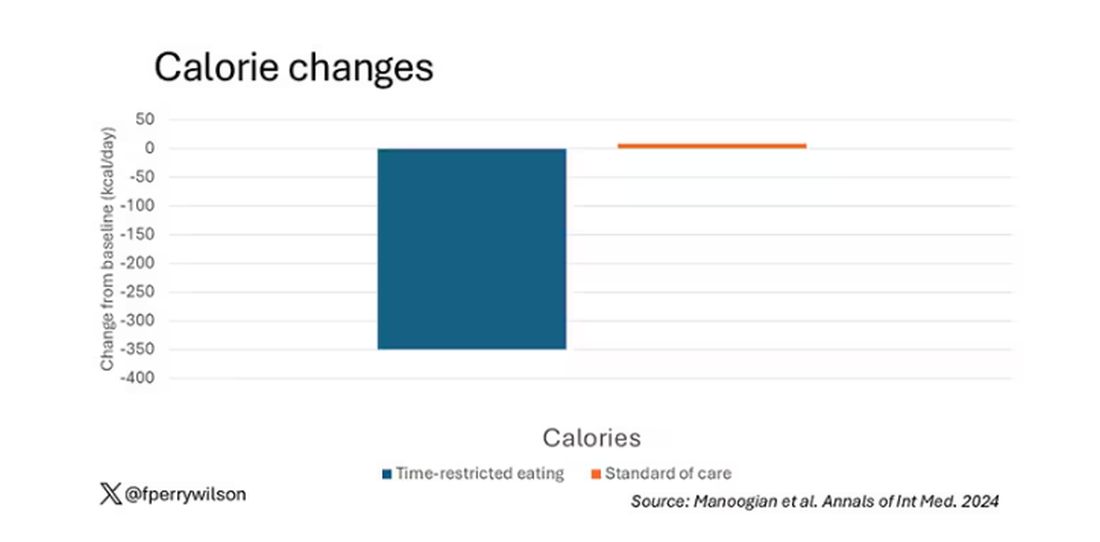

Taken together, we can say that, yes, it seems like time-restricted eating can help people lose some weight. This is essentially due to the fact that people eat fewer calories when they do time-restricted eating, as you can see here.

But, in the end, this trial examined whether this relatively straightforward lifestyle intervention would move the needle in terms of metabolic syndrome, and the data are not very compelling for that.

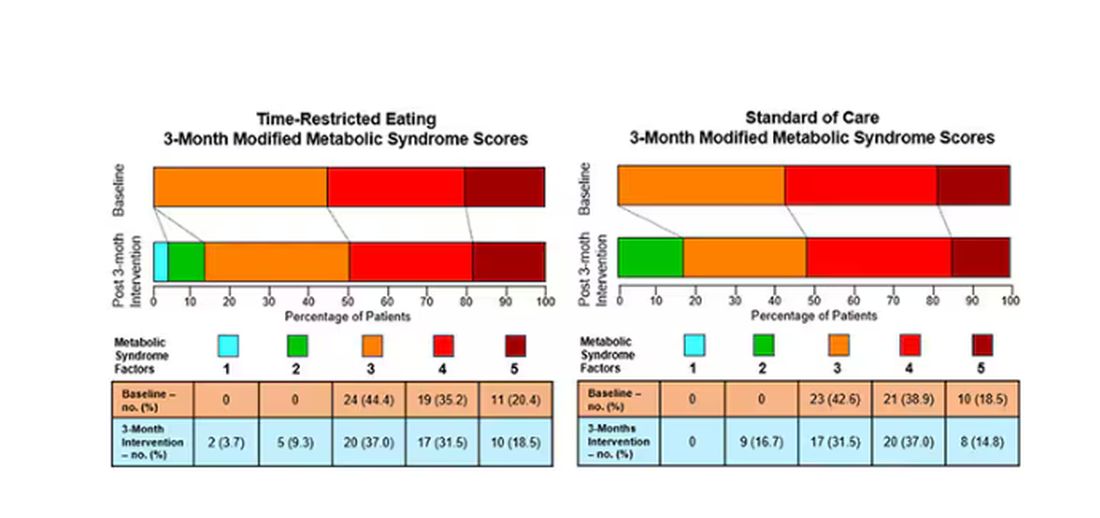

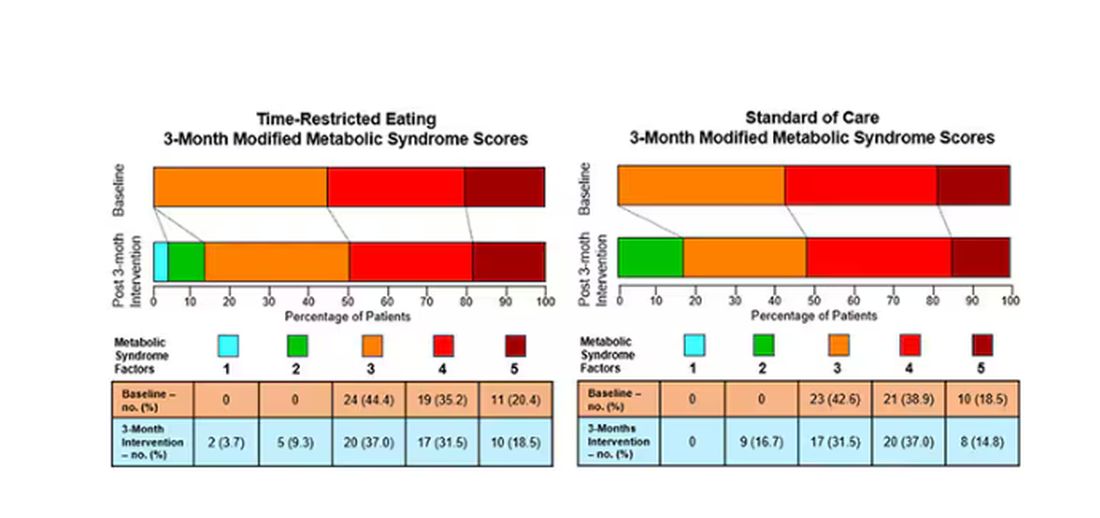

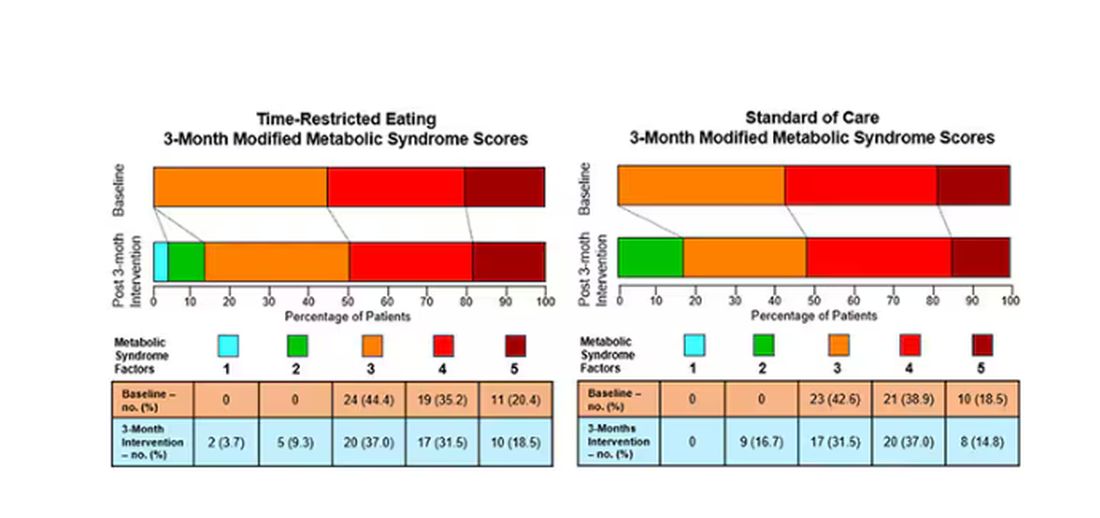

This graph shows how many of those five factors for metabolic syndrome the individuals in this trial had from the start to the end. You see that, over the 3 months, seven people in the time-restricted-eating group moved from having three criteria to two or one — being “cured” of metabolic syndrome, if you will. Nine people in the standard group were cured by that definition. Remember, they had to have at least three to have the syndrome and thus be eligible for the trial.

So If it just leads to weight loss by forcing people to consume less calories, then we need to acknowledge that we probably have better methods to achieve this same end. Ten years ago, I would have said that lifestyle change is the only way to end the epidemic of the metabolic syndrome in this country. Today, well, we live in a world of GLP-1 weight loss drugs. It is simply a different world now. Yes, they are expensive. Yes, they have side effects. But we need to evaluate them against the comparison. And so far, lifestyle changes alone are really no comparison.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Conn. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

This transcript has been edited for clarity.

One out of three American adults — about 100 million people in this country — have the metabolic syndrome. I’m showing you the official criteria here, but essentially this is a syndrome of insulin resistance and visceral adiposity that predisposes us to a host of chronic diseases such as diabetes, heart disease, and even dementia.

The metabolic syndrome is, fundamentally, a lifestyle disease. There is a direct line between our dietary habits and the wide availability of carbohydrate-rich, highly processed foods, and the rise in the syndrome in the population.

A saying I learned from one of my epidemiology teachers comes to mind: “Lifestyle diseases require lifestyle reinterventions.” But you know what? I’m not so sure anymore.

I’ve been around long enough to see multiple dietary fads come and go with varying efficacy. I grew up in the low-fat era, probably the most detrimental time to our national health as food manufacturers started replacing fats with carbohydrates, driving much of the problem we’re faced with today.

But I was also around for the Atkins diet and the low-carb craze — a healthier approach, all things being equal. And I’ve seen variants of these: the paleo diet (essentially a low-carb, high-protein diet based on minimally processed foods) and the Mediterranean diet, which sought to replace some percentage of fats with healthier fats.

And, of course, there is time-restricted eating.

Time-restricted eating, a variant of intermittent fasting, has the advantage of being very simple. No cookbooks, no recipes. Eat what you want — but limit it to certain hours in the day, ideally a window of less than 10 hours, such as 8 a.m. to 6 p.m.

When it comes to weight loss, the diets that work tend to work because they reduce calorie intake. I know, people will get angry about this, but thermodynamics is not just a good idea, it’s the law.

But weight loss is not the only reason we need to eat healthier. What we eat can impact our health in multiple ways; certain foods lead to more atherosclerosis, more inflammation, increased strain on the kidney and liver, and can affect our glucose homeostasis.

So I was really interested when I saw this article, “Time-Restricted Eating in Adults With Metabolic Syndrome,” appearing in Annals of Internal Medicine October 1, which examined the effect of time-restricted eating on the metabolic syndrome itself. Could this lifestyle intervention cure this lifestyle disease?

In the study, 108 individuals, all of whom had the metabolic syndrome but not full-blown diabetes, were randomized to usual care — basically, nutrition education — vs time-restricted eating. In that group, participants were instructed to reduce their window of eating by at least 4 hours to achieve an 8- to 10-hour eating window. The groups were followed for 3 months.

Now, before we get to the results, it’s important to remember that the success of a lifestyle intervention trial is quite dependent on how well people adhere to the lifestyle intervention. Time-restricted eating is not as easy as taking a pill once a day.

The researchers had participants log their consumption using a smartphone app to confirm whether they were adhering to that restricted eating window.

Broadly speaking, they did. At baseline, both groups had an eating window of about 14 hours a day — think 7 a.m. to 9 p.m. The intervention group reduced that to just under 10 hours, with 10% of days falling outside of the target window.

Lifestyle change achieved, the primary outcome was the change in hemoglobin A1c at 3 months. A1c integrates the serum glucose over time and is thus a good indicator of the success of the intervention in terms of insulin resistance. But the effect was, honestly, disappointing.

Technically, the time-restricted-eating group had a greater A1c change than the control group — by 0.1 percentage points. On average, they went from a baseline A1c of 5.87 to a 3-month A1c of 5.75.

Other metabolic syndrome markers were equally lackluster: no difference in fasting glucose, mean glucose, or fasting insulin.

There was some weight change. The control group, which got that dietary education, lost 1.5% of body weight over the 3 months. The time-restricted-eating group lost 3.3% — about 7 pounds, which is reasonable.

With that weight loss came statistically significant, albeit modest improvements in BMI, body fat percentage, and LDL cholesterol.

Of interest, despite the larger weight loss in the intermittent-fasting group, there was no difference in muscle mass loss, which is encouraging.

Taken together, we can say that, yes, it seems like time-restricted eating can help people lose some weight. This is essentially due to the fact that people eat fewer calories when they do time-restricted eating, as you can see here.

But, in the end, this trial examined whether this relatively straightforward lifestyle intervention would move the needle in terms of metabolic syndrome, and the data are not very compelling for that.

This graph shows how many of those five factors for metabolic syndrome the individuals in this trial had from the start to the end. You see that, over the 3 months, seven people in the time-restricted-eating group moved from having three criteria to two or one — being “cured” of metabolic syndrome, if you will. Nine people in the standard group were cured by that definition. Remember, they had to have at least three to have the syndrome and thus be eligible for the trial.

So If it just leads to weight loss by forcing people to consume less calories, then we need to acknowledge that we probably have better methods to achieve this same end. Ten years ago, I would have said that lifestyle change is the only way to end the epidemic of the metabolic syndrome in this country. Today, well, we live in a world of GLP-1 weight loss drugs. It is simply a different world now. Yes, they are expensive. Yes, they have side effects. But we need to evaluate them against the comparison. And so far, lifestyle changes alone are really no comparison.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Conn. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

This transcript has been edited for clarity.

One out of three American adults — about 100 million people in this country — have the metabolic syndrome. I’m showing you the official criteria here, but essentially this is a syndrome of insulin resistance and visceral adiposity that predisposes us to a host of chronic diseases such as diabetes, heart disease, and even dementia.

The metabolic syndrome is, fundamentally, a lifestyle disease. There is a direct line between our dietary habits and the wide availability of carbohydrate-rich, highly processed foods, and the rise in the syndrome in the population.

A saying I learned from one of my epidemiology teachers comes to mind: “Lifestyle diseases require lifestyle reinterventions.” But you know what? I’m not so sure anymore.

I’ve been around long enough to see multiple dietary fads come and go with varying efficacy. I grew up in the low-fat era, probably the most detrimental time to our national health as food manufacturers started replacing fats with carbohydrates, driving much of the problem we’re faced with today.

But I was also around for the Atkins diet and the low-carb craze — a healthier approach, all things being equal. And I’ve seen variants of these: the paleo diet (essentially a low-carb, high-protein diet based on minimally processed foods) and the Mediterranean diet, which sought to replace some percentage of fats with healthier fats.

And, of course, there is time-restricted eating.

Time-restricted eating, a variant of intermittent fasting, has the advantage of being very simple. No cookbooks, no recipes. Eat what you want — but limit it to certain hours in the day, ideally a window of less than 10 hours, such as 8 a.m. to 6 p.m.

When it comes to weight loss, the diets that work tend to work because they reduce calorie intake. I know, people will get angry about this, but thermodynamics is not just a good idea, it’s the law.

But weight loss is not the only reason we need to eat healthier. What we eat can impact our health in multiple ways; certain foods lead to more atherosclerosis, more inflammation, increased strain on the kidney and liver, and can affect our glucose homeostasis.

So I was really interested when I saw this article, “Time-Restricted Eating in Adults With Metabolic Syndrome,” appearing in Annals of Internal Medicine October 1, which examined the effect of time-restricted eating on the metabolic syndrome itself. Could this lifestyle intervention cure this lifestyle disease?

In the study, 108 individuals, all of whom had the metabolic syndrome but not full-blown diabetes, were randomized to usual care — basically, nutrition education — vs time-restricted eating. In that group, participants were instructed to reduce their window of eating by at least 4 hours to achieve an 8- to 10-hour eating window. The groups were followed for 3 months.

Now, before we get to the results, it’s important to remember that the success of a lifestyle intervention trial is quite dependent on how well people adhere to the lifestyle intervention. Time-restricted eating is not as easy as taking a pill once a day.

The researchers had participants log their consumption using a smartphone app to confirm whether they were adhering to that restricted eating window.

Broadly speaking, they did. At baseline, both groups had an eating window of about 14 hours a day — think 7 a.m. to 9 p.m. The intervention group reduced that to just under 10 hours, with 10% of days falling outside of the target window.

Lifestyle change achieved, the primary outcome was the change in hemoglobin A1c at 3 months. A1c integrates the serum glucose over time and is thus a good indicator of the success of the intervention in terms of insulin resistance. But the effect was, honestly, disappointing.

Technically, the time-restricted-eating group had a greater A1c change than the control group — by 0.1 percentage points. On average, they went from a baseline A1c of 5.87 to a 3-month A1c of 5.75.

Other metabolic syndrome markers were equally lackluster: no difference in fasting glucose, mean glucose, or fasting insulin.

There was some weight change. The control group, which got that dietary education, lost 1.5% of body weight over the 3 months. The time-restricted-eating group lost 3.3% — about 7 pounds, which is reasonable.

With that weight loss came statistically significant, albeit modest improvements in BMI, body fat percentage, and LDL cholesterol.

Of interest, despite the larger weight loss in the intermittent-fasting group, there was no difference in muscle mass loss, which is encouraging.

Taken together, we can say that, yes, it seems like time-restricted eating can help people lose some weight. This is essentially due to the fact that people eat fewer calories when they do time-restricted eating, as you can see here.

But, in the end, this trial examined whether this relatively straightforward lifestyle intervention would move the needle in terms of metabolic syndrome, and the data are not very compelling for that.

This graph shows how many of those five factors for metabolic syndrome the individuals in this trial had from the start to the end. You see that, over the 3 months, seven people in the time-restricted-eating group moved from having three criteria to two or one — being “cured” of metabolic syndrome, if you will. Nine people in the standard group were cured by that definition. Remember, they had to have at least three to have the syndrome and thus be eligible for the trial.

So If it just leads to weight loss by forcing people to consume less calories, then we need to acknowledge that we probably have better methods to achieve this same end. Ten years ago, I would have said that lifestyle change is the only way to end the epidemic of the metabolic syndrome in this country. Today, well, we live in a world of GLP-1 weight loss drugs. It is simply a different world now. Yes, they are expensive. Yes, they have side effects. But we need to evaluate them against the comparison. And so far, lifestyle changes alone are really no comparison.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Conn. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

Coffee’s ‘Sweet Spot’: Daily Consumption and Cardiometabolic Risk

Each and every day, 1 billion people on this planet ingest a particular psychoactive substance. This chemical has fairly profound physiologic effects. It increases levels of nitric oxide in the blood, leads to vasodilation, and, of course, makes you feel more awake. The substance comes in many forms but almost always in a liquid medium. Do you have it yet? That’s right. The substance is caffeine, quite possibly the healthiest recreational drug that has ever been discovered.

This might be my New England upbringing speaking, but when it comes to lifestyle and health, one of the rules I’ve internalized is that things that are pleasurable are generally bad for you. I know, I know — some of you love to exercise. Some of you love doing crosswords. But you know what I mean. I’m talking French fries, smoked meats, drugs, smoking, alcohol, binge-watching Firefly. You’d be suspicious if a study came out suggesting that eating ice cream in bed reduces your risk for heart attack, and so would I. So I’m always on the lookout for those unicorns of lifestyle factors, those rare things that you want to do and are also good for you.

So far, the data are strong for three things: sleeping, (safe) sexual activity, and coffee. You’ll have to stay tuned for articles about the first two. Today, we’re brewing up some deeper insights about the power of java.

I was inspired to write this article because of a paper, “Habitual Coffee, Tea, and Caffeine Consumption, Circulating Metabolites, and the Risk of Cardiometabolic Multimorbidity,” appearing September 17 in The Journal of Clinical Endocrinology and Metabolism (JCEM).

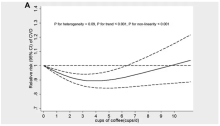

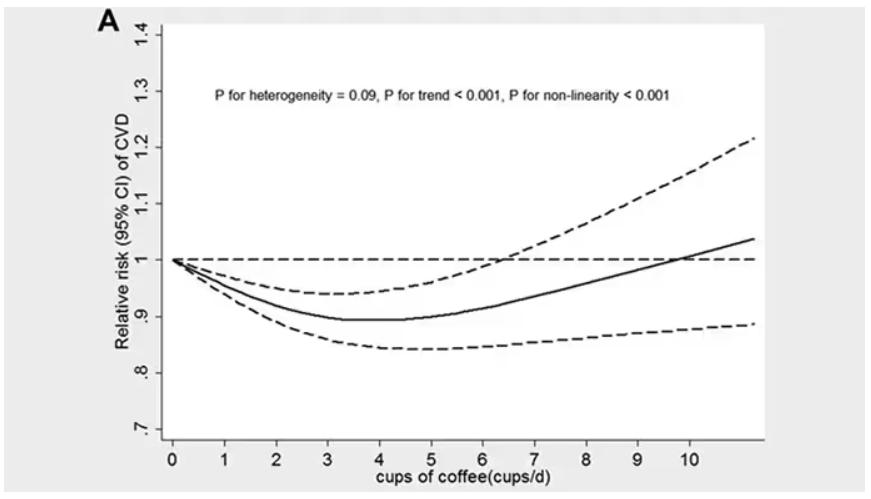

This is not the first study to suggest that coffee intake may be beneficial. A 2013 meta-analysis summarized the results of 36 studies with more than a million participants and found a U-shaped relationship between coffee intake and cardiovascular risk. The sweet spot was at three to five cups a day; people drinking that much coffee had about a 15% reduced risk for cardiovascular disease compared with nondrinkers.

But here’s the thing. Coffee contains caffeine, but it is much more than that. It is a heady brew of various chemicals and compounds, phenols, and chlorogenic acids. And, of course, you can get caffeine from stuff that isn’t coffee — natural things like tea — and decidedly unnatural things like energy drinks. How do you figure out where the benefit really lies?

The JCEM study leveraged the impressive UK Biobank dataset to figure this out. The Biobank recruited more than half a million people from the UK between 2006 and 2010 and collected a wealth of data from each of them: surveys, blood samples, biometrics, medical imaging — the works. And then they followed what would happen to those people medically over time. It’s a pretty amazing resource.

But for the purposes of this study, what you need to know is that just under 200,000 of those participants met the key criteria for this study: being free from cardiovascular disease at baseline; having completed a detailed survey about their coffee, tea, and other caffeinated beverage intake; and having adequate follow-up. A subset of that number, just under 100,000, had metabolomic data — which is where this study really gets interesting.

We’ll dive into the metabolome in a moment, but first let’s just talk about the main finding, the relationship between coffee, tea, or caffeine and cardiovascular disease. But to do that, we need to acknowledge that people who drink a lot of coffee are different from people who don’t, and it might be those differences, not the coffee itself, that are beneficial.

What were those differences? People who drank more coffee tended to be a bit older, were less likely to be female, and were slightly more likely to engage in physical activity. They ate less processed meat but also fewer vegetables. Some of those factors, like being female, are generally protective against cardiovascular disease; but some, like age, are definitely not. The authors adjusted for these and multiple other factors, including alcohol intake, BMI, kidney function, and many others to try to disentangle the effect of being the type of person who drinks a lot of coffee from the drinking a lot of coffee itself.

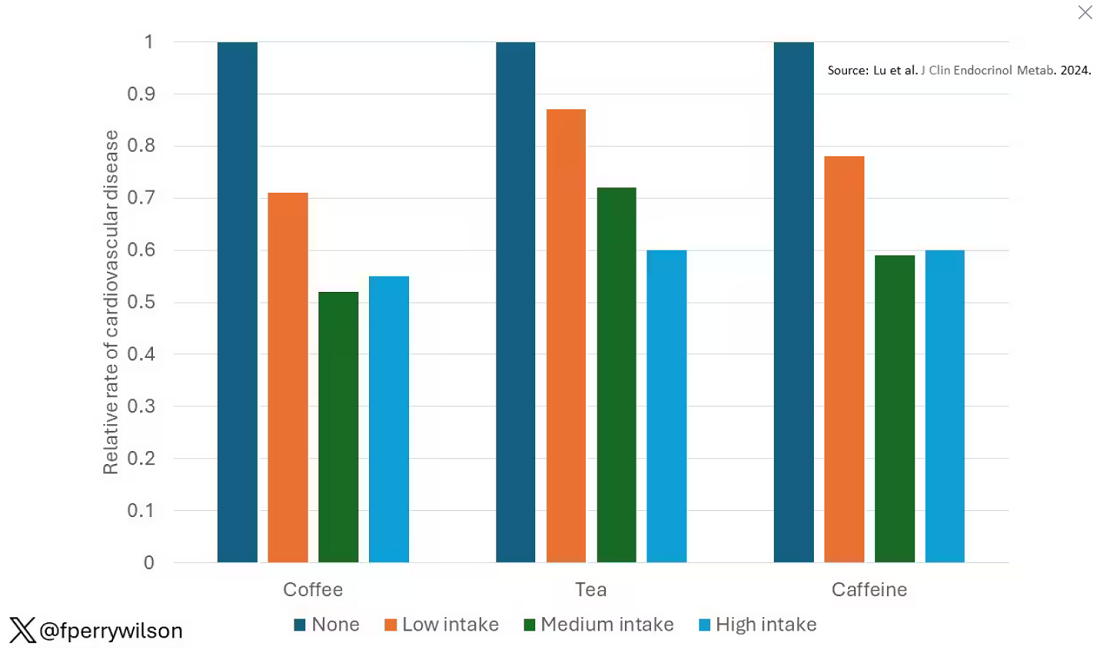

These are the results of the fully adjusted model. Compared with nonconsumers, you can see that people in the higher range of coffee, tea, or just caffeine intake have almost a 40% reduction in cardiovascular disease in follow-up.

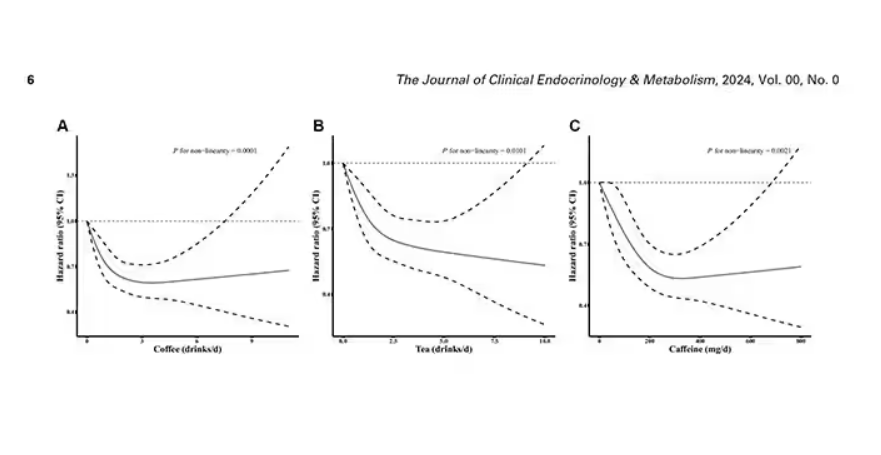

Looking at the benefit across the spectrum of intake, you again see that U-shaped curve, suggesting that a sweet spot for daily consumption can be found around 3 cups of coffee or tea (or 250 mg of caffeine). A standard energy drink contains about 120 mg of caffeine.

But if this is true, it would be good to know why. To figure that out, the authors turned to the metabolome. The idea here is that your body is constantly breaking stuff down, taking all these proteins and chemicals and compounds that we ingest and turning them into metabolites. Using advanced measurement techniques, researchers can measure hundreds or even thousands of metabolites from a single blood sample. They provide information, obviously, about the food you eat and the drinks you drink, but what is really intriguing is that some metabolites are associated with better health and some with worse

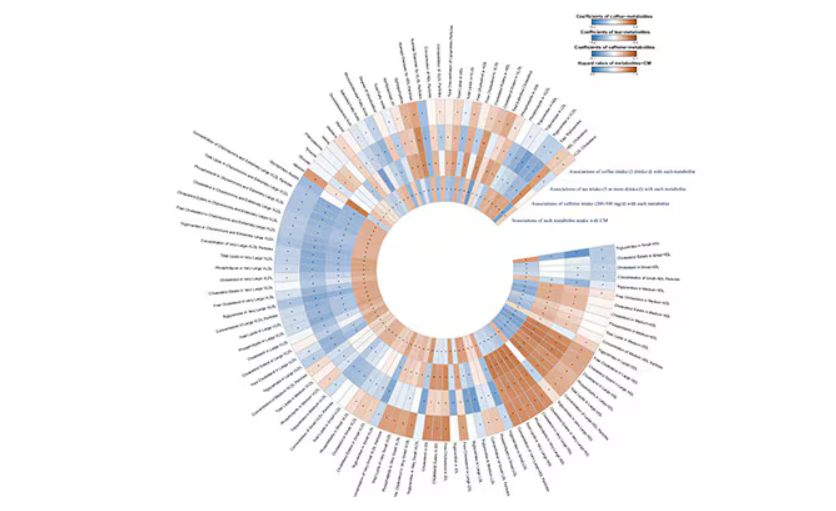

In this study, researchers measured 168 individual metabolites. Eighty of them, nearly half, were significantly altered in people who drank more coffee.

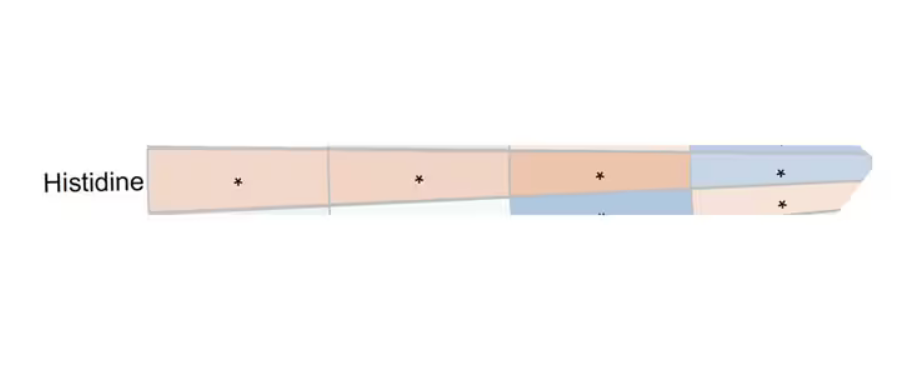

This figure summarizes the findings, and yes, this is way too complicated.

But here’s how to interpret it. The inner ring shows you how certain metabolites are associated with cardiovascular disease. The outer rings show you how those metabolites are associated with coffee, tea, or caffeine. The interesting part is that the sections of the ring (outer rings and inner rings) are very different colors.

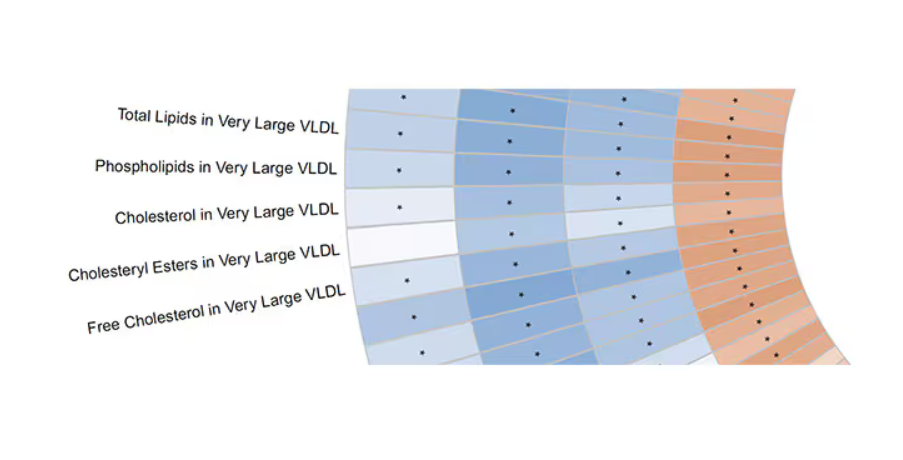

Like here.

What you see here is a fairly profound effect that coffee, tea, or caffeine intake has on metabolites of VLDL — bad cholesterol. The beverages lower it, and, of course, higher levels lead to cardiovascular disease. This means that this is a potential causal pathway from coffee intake to heart protection.

And that’s not the only one.

You see a similar relationship for saturated fatty acids. Higher levels lead to cardiovascular disease, and coffee intake lowers levels. The reverse works too: Lower levels of histidine (an amino acid) increase cardiovascular risk, and coffee seems to raise those levels.

Is this all too good to be true? It’s hard to say. The data on coffee’s benefits have been remarkably consistent. Still, I wouldn’t be a good doctor if I didn’t mention that clearly there is a difference between a cup of black coffee and a venti caramel Frappuccino.

Nevertheless, coffee remains firmly in my holy trinity of enjoyable things that are, for whatever reason, still good for you. So, when you’re having that second, or third, or maybe fourth cup of the day, you can take that to heart.

Dr. Wilson, associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator, reported no conflicts of interest.

A version of this article first appeared on Medscape.com.

Each and every day, 1 billion people on this planet ingest a particular psychoactive substance. This chemical has fairly profound physiologic effects. It increases levels of nitric oxide in the blood, leads to vasodilation, and, of course, makes you feel more awake. The substance comes in many forms but almost always in a liquid medium. Do you have it yet? That’s right. The substance is caffeine, quite possibly the healthiest recreational drug that has ever been discovered.

This might be my New England upbringing speaking, but when it comes to lifestyle and health, one of the rules I’ve internalized is that things that are pleasurable are generally bad for you. I know, I know — some of you love to exercise. Some of you love doing crosswords. But you know what I mean. I’m talking French fries, smoked meats, drugs, smoking, alcohol, binge-watching Firefly. You’d be suspicious if a study came out suggesting that eating ice cream in bed reduces your risk for heart attack, and so would I. So I’m always on the lookout for those unicorns of lifestyle factors, those rare things that you want to do and are also good for you.

So far, the data are strong for three things: sleeping, (safe) sexual activity, and coffee. You’ll have to stay tuned for articles about the first two. Today, we’re brewing up some deeper insights about the power of java.

I was inspired to write this article because of a paper, “Habitual Coffee, Tea, and Caffeine Consumption, Circulating Metabolites, and the Risk of Cardiometabolic Multimorbidity,” appearing September 17 in The Journal of Clinical Endocrinology and Metabolism (JCEM).

This is not the first study to suggest that coffee intake may be beneficial. A 2013 meta-analysis summarized the results of 36 studies with more than a million participants and found a U-shaped relationship between coffee intake and cardiovascular risk. The sweet spot was at three to five cups a day; people drinking that much coffee had about a 15% reduced risk for cardiovascular disease compared with nondrinkers.

But here’s the thing. Coffee contains caffeine, but it is much more than that. It is a heady brew of various chemicals and compounds, phenols, and chlorogenic acids. And, of course, you can get caffeine from stuff that isn’t coffee — natural things like tea — and decidedly unnatural things like energy drinks. How do you figure out where the benefit really lies?

The JCEM study leveraged the impressive UK Biobank dataset to figure this out. The Biobank recruited more than half a million people from the UK between 2006 and 2010 and collected a wealth of data from each of them: surveys, blood samples, biometrics, medical imaging — the works. And then they followed what would happen to those people medically over time. It’s a pretty amazing resource.

But for the purposes of this study, what you need to know is that just under 200,000 of those participants met the key criteria for this study: being free from cardiovascular disease at baseline; having completed a detailed survey about their coffee, tea, and other caffeinated beverage intake; and having adequate follow-up. A subset of that number, just under 100,000, had metabolomic data — which is where this study really gets interesting.

We’ll dive into the metabolome in a moment, but first let’s just talk about the main finding, the relationship between coffee, tea, or caffeine and cardiovascular disease. But to do that, we need to acknowledge that people who drink a lot of coffee are different from people who don’t, and it might be those differences, not the coffee itself, that are beneficial.

What were those differences? People who drank more coffee tended to be a bit older, were less likely to be female, and were slightly more likely to engage in physical activity. They ate less processed meat but also fewer vegetables. Some of those factors, like being female, are generally protective against cardiovascular disease; but some, like age, are definitely not. The authors adjusted for these and multiple other factors, including alcohol intake, BMI, kidney function, and many others to try to disentangle the effect of being the type of person who drinks a lot of coffee from the drinking a lot of coffee itself.

These are the results of the fully adjusted model. Compared with nonconsumers, you can see that people in the higher range of coffee, tea, or just caffeine intake have almost a 40% reduction in cardiovascular disease in follow-up.

Looking at the benefit across the spectrum of intake, you again see that U-shaped curve, suggesting that a sweet spot for daily consumption can be found around 3 cups of coffee or tea (or 250 mg of caffeine). A standard energy drink contains about 120 mg of caffeine.

But if this is true, it would be good to know why. To figure that out, the authors turned to the metabolome. The idea here is that your body is constantly breaking stuff down, taking all these proteins and chemicals and compounds that we ingest and turning them into metabolites. Using advanced measurement techniques, researchers can measure hundreds or even thousands of metabolites from a single blood sample. They provide information, obviously, about the food you eat and the drinks you drink, but what is really intriguing is that some metabolites are associated with better health and some with worse

In this study, researchers measured 168 individual metabolites. Eighty of them, nearly half, were significantly altered in people who drank more coffee.

This figure summarizes the findings, and yes, this is way too complicated.

But here’s how to interpret it. The inner ring shows you how certain metabolites are associated with cardiovascular disease. The outer rings show you how those metabolites are associated with coffee, tea, or caffeine. The interesting part is that the sections of the ring (outer rings and inner rings) are very different colors.

Like here.

What you see here is a fairly profound effect that coffee, tea, or caffeine intake has on metabolites of VLDL — bad cholesterol. The beverages lower it, and, of course, higher levels lead to cardiovascular disease. This means that this is a potential causal pathway from coffee intake to heart protection.

And that’s not the only one.

You see a similar relationship for saturated fatty acids. Higher levels lead to cardiovascular disease, and coffee intake lowers levels. The reverse works too: Lower levels of histidine (an amino acid) increase cardiovascular risk, and coffee seems to raise those levels.

Is this all too good to be true? It’s hard to say. The data on coffee’s benefits have been remarkably consistent. Still, I wouldn’t be a good doctor if I didn’t mention that clearly there is a difference between a cup of black coffee and a venti caramel Frappuccino.

Nevertheless, coffee remains firmly in my holy trinity of enjoyable things that are, for whatever reason, still good for you. So, when you’re having that second, or third, or maybe fourth cup of the day, you can take that to heart.

Dr. Wilson, associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator, reported no conflicts of interest.

A version of this article first appeared on Medscape.com.

Each and every day, 1 billion people on this planet ingest a particular psychoactive substance. This chemical has fairly profound physiologic effects. It increases levels of nitric oxide in the blood, leads to vasodilation, and, of course, makes you feel more awake. The substance comes in many forms but almost always in a liquid medium. Do you have it yet? That’s right. The substance is caffeine, quite possibly the healthiest recreational drug that has ever been discovered.

This might be my New England upbringing speaking, but when it comes to lifestyle and health, one of the rules I’ve internalized is that things that are pleasurable are generally bad for you. I know, I know — some of you love to exercise. Some of you love doing crosswords. But you know what I mean. I’m talking French fries, smoked meats, drugs, smoking, alcohol, binge-watching Firefly. You’d be suspicious if a study came out suggesting that eating ice cream in bed reduces your risk for heart attack, and so would I. So I’m always on the lookout for those unicorns of lifestyle factors, those rare things that you want to do and are also good for you.

So far, the data are strong for three things: sleeping, (safe) sexual activity, and coffee. You’ll have to stay tuned for articles about the first two. Today, we’re brewing up some deeper insights about the power of java.

I was inspired to write this article because of a paper, “Habitual Coffee, Tea, and Caffeine Consumption, Circulating Metabolites, and the Risk of Cardiometabolic Multimorbidity,” appearing September 17 in The Journal of Clinical Endocrinology and Metabolism (JCEM).

This is not the first study to suggest that coffee intake may be beneficial. A 2013 meta-analysis summarized the results of 36 studies with more than a million participants and found a U-shaped relationship between coffee intake and cardiovascular risk. The sweet spot was at three to five cups a day; people drinking that much coffee had about a 15% reduced risk for cardiovascular disease compared with nondrinkers.

But here’s the thing. Coffee contains caffeine, but it is much more than that. It is a heady brew of various chemicals and compounds, phenols, and chlorogenic acids. And, of course, you can get caffeine from stuff that isn’t coffee — natural things like tea — and decidedly unnatural things like energy drinks. How do you figure out where the benefit really lies?

The JCEM study leveraged the impressive UK Biobank dataset to figure this out. The Biobank recruited more than half a million people from the UK between 2006 and 2010 and collected a wealth of data from each of them: surveys, blood samples, biometrics, medical imaging — the works. And then they followed what would happen to those people medically over time. It’s a pretty amazing resource.

But for the purposes of this study, what you need to know is that just under 200,000 of those participants met the key criteria for this study: being free from cardiovascular disease at baseline; having completed a detailed survey about their coffee, tea, and other caffeinated beverage intake; and having adequate follow-up. A subset of that number, just under 100,000, had metabolomic data — which is where this study really gets interesting.

We’ll dive into the metabolome in a moment, but first let’s just talk about the main finding, the relationship between coffee, tea, or caffeine and cardiovascular disease. But to do that, we need to acknowledge that people who drink a lot of coffee are different from people who don’t, and it might be those differences, not the coffee itself, that are beneficial.

What were those differences? People who drank more coffee tended to be a bit older, were less likely to be female, and were slightly more likely to engage in physical activity. They ate less processed meat but also fewer vegetables. Some of those factors, like being female, are generally protective against cardiovascular disease; but some, like age, are definitely not. The authors adjusted for these and multiple other factors, including alcohol intake, BMI, kidney function, and many others to try to disentangle the effect of being the type of person who drinks a lot of coffee from the drinking a lot of coffee itself.

These are the results of the fully adjusted model. Compared with nonconsumers, you can see that people in the higher range of coffee, tea, or just caffeine intake have almost a 40% reduction in cardiovascular disease in follow-up.

Looking at the benefit across the spectrum of intake, you again see that U-shaped curve, suggesting that a sweet spot for daily consumption can be found around 3 cups of coffee or tea (or 250 mg of caffeine). A standard energy drink contains about 120 mg of caffeine.

But if this is true, it would be good to know why. To figure that out, the authors turned to the metabolome. The idea here is that your body is constantly breaking stuff down, taking all these proteins and chemicals and compounds that we ingest and turning them into metabolites. Using advanced measurement techniques, researchers can measure hundreds or even thousands of metabolites from a single blood sample. They provide information, obviously, about the food you eat and the drinks you drink, but what is really intriguing is that some metabolites are associated with better health and some with worse

In this study, researchers measured 168 individual metabolites. Eighty of them, nearly half, were significantly altered in people who drank more coffee.

This figure summarizes the findings, and yes, this is way too complicated.

But here’s how to interpret it. The inner ring shows you how certain metabolites are associated with cardiovascular disease. The outer rings show you how those metabolites are associated with coffee, tea, or caffeine. The interesting part is that the sections of the ring (outer rings and inner rings) are very different colors.

Like here.

What you see here is a fairly profound effect that coffee, tea, or caffeine intake has on metabolites of VLDL — bad cholesterol. The beverages lower it, and, of course, higher levels lead to cardiovascular disease. This means that this is a potential causal pathway from coffee intake to heart protection.

And that’s not the only one.

You see a similar relationship for saturated fatty acids. Higher levels lead to cardiovascular disease, and coffee intake lowers levels. The reverse works too: Lower levels of histidine (an amino acid) increase cardiovascular risk, and coffee seems to raise those levels.

Is this all too good to be true? It’s hard to say. The data on coffee’s benefits have been remarkably consistent. Still, I wouldn’t be a good doctor if I didn’t mention that clearly there is a difference between a cup of black coffee and a venti caramel Frappuccino.

Nevertheless, coffee remains firmly in my holy trinity of enjoyable things that are, for whatever reason, still good for you. So, when you’re having that second, or third, or maybe fourth cup of the day, you can take that to heart.

Dr. Wilson, associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator, reported no conflicts of interest.

A version of this article first appeared on Medscape.com.

These Four Factors Account for 18 Years of Life Expectancy

This transcript has been edited for clarity.

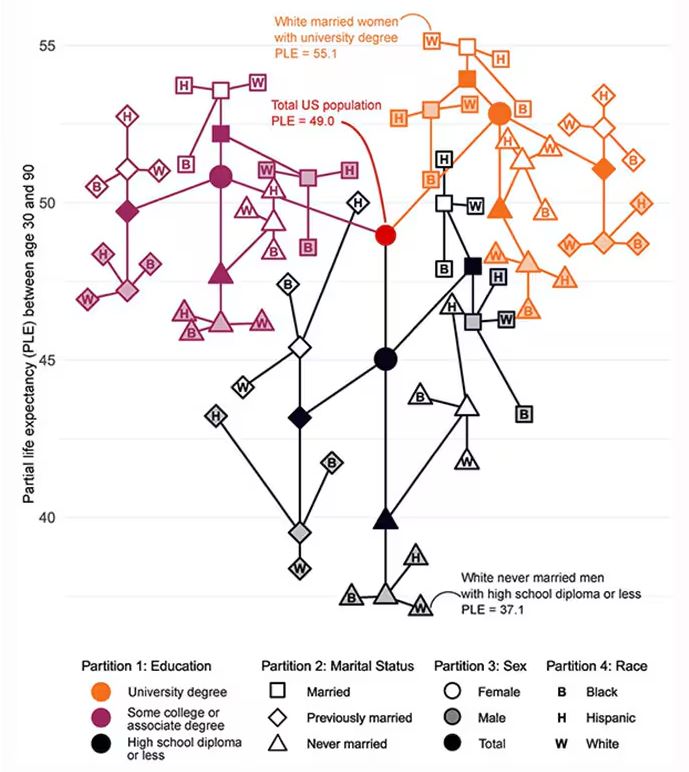

Two individuals in the United States are celebrating their 30th birthdays. It’s a good day. They are entering the prime of their lives. One is a married White woman with a university degree. The other is a never-married White man with a high school diploma.

How many more years of life can these two individuals look forward to?

There’s a fairly dramatic difference. The man can expect 37.1 more years of life on average, living to be about 67. The woman can expect to live to age 85. That’s a life-expectancy discrepancy of 18 years based solely on gender, education, and marital status.

I’m using these cases to illustrate the extremes of life expectancy across four key social determinants of health: sex, race, marital status, and education. We all have some sense of how these factors play out in terms of health, but a new study suggests that it’s actually quite a bit more complicated than we thought.

Let me start by acknowledging my own bias here. As a clinical researcher, I sometimes find it hard to appreciate the value of actuarial-type studies that look at life expectancy (or any metric, really) between groups defined by marital status, for example. I’m never quite sure what to do with the conclusion. Married people live longer, the headline says. Okay, but as a doctor, what am I supposed to do about that? Encourage my patients to settle down and commit? Studies showing that women live longer than men or that White people live longer than Black people are also hard for me to incorporate into my practice. These are not easily changeable states.

But studies examining these groups are a reasonable starting point to ask more relevant questions. Why do women live longer than men? Is it behavioral (men take more risks and are less likely to see doctors)? Or is it hormonal (estrogen has a lot of protective effects that testosterone does not)? Or is it something else?

Integrating these social determinants of health into a cohesive story is a bit harder than it might seem, as this study, appearing in BMJ Open, illustrates.

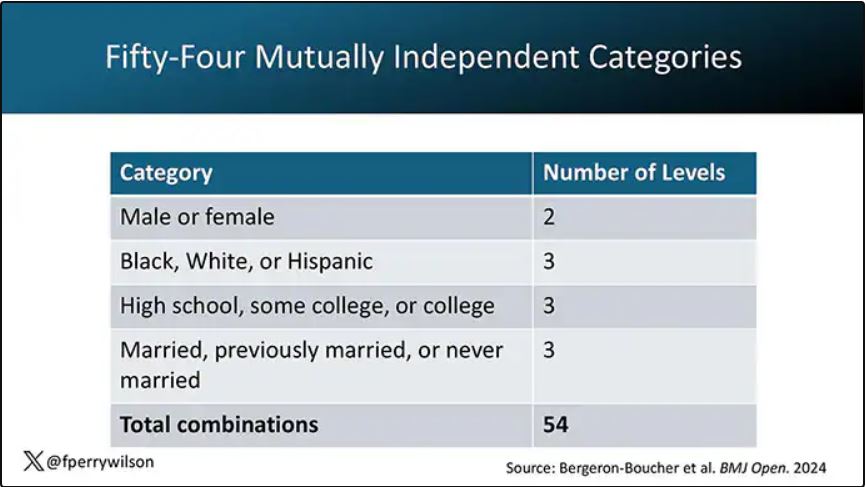

In the context of this study, every person in America can be placed into one of 54 mutually exclusive groups. You can be male or female. You can be Black, White, or Hispanic. You can have a high school diploma or less, an associate degree, or a college degree; and you can be married, previously married, or never married.

Of course, this does not capture the beautiful tapestry that is American life, but let’s give them a pass. They are working with data from the American Community Survey, which contains 8634 people — the statistics would run into trouble with more granular divisions. This survey can be population weighted, so you can scale up the results to reasonably represent the population of the United States.

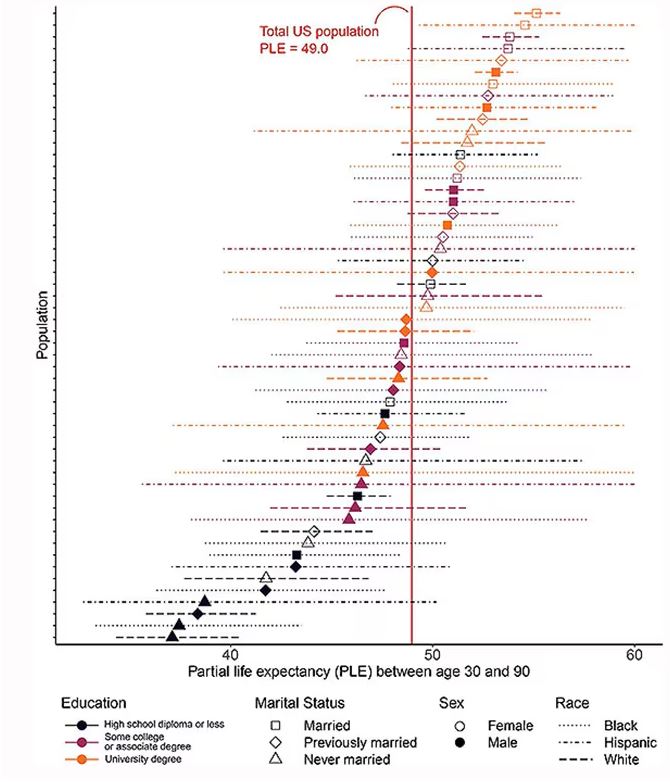

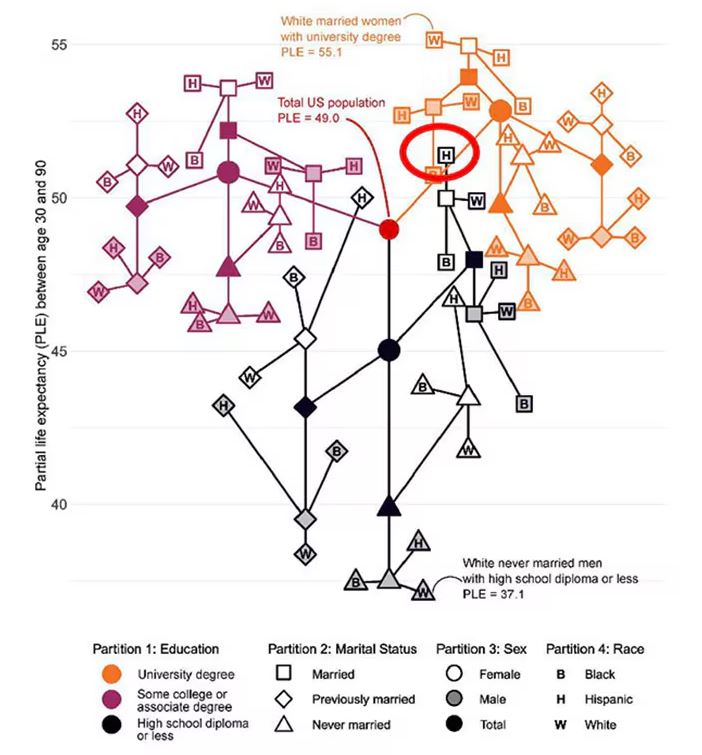

The survey collected data on the four broad categories of sex, race, education, and marital status and linked those survey results to the Multiple Cause of Death dataset from the CDC. From there, it’s a pretty simple task to rank the 54 categories in order from longest to shortest life expectancy, as you can see here.

But that’s not really the interesting part of this study. Sure, there is a lot of variation; it’s interesting that these four factors explain about 18 years’ difference in life expectancy in this country. What strikes me here, actually, is the lack of an entirely consistent message across this spectrum.

Let me walk you through the second figure in this paper, because this nicely illustrates the surprising heterogeneity that exists here.

This may seem overwhelming, but basically, shapes that are higher up on the Y-axis represent the groups with longer life expectancy.

You can tell, for example, that shapes that are black in color (groups with high school educations or less) are generally lower. But not universally so. This box represents married, Hispanic females who do quite well in terms of life expectancy, even at that lower educational level.

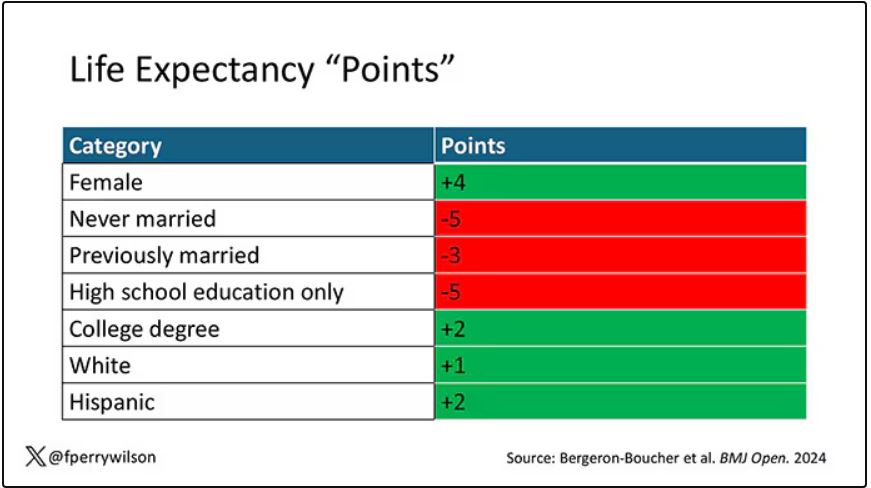

The authors quantify this phenomenon by creating a mortality risk score that integrates these findings. It looks something like this, with 0 being average morality for the United States.

As you can see, you get a bunch of points for being female, but you lose a bunch for not being married. Education plays a large role, with a big hit for those who have a high school diploma or less, and a bonus for those with a college degree. Race plays a relatively more minor role.

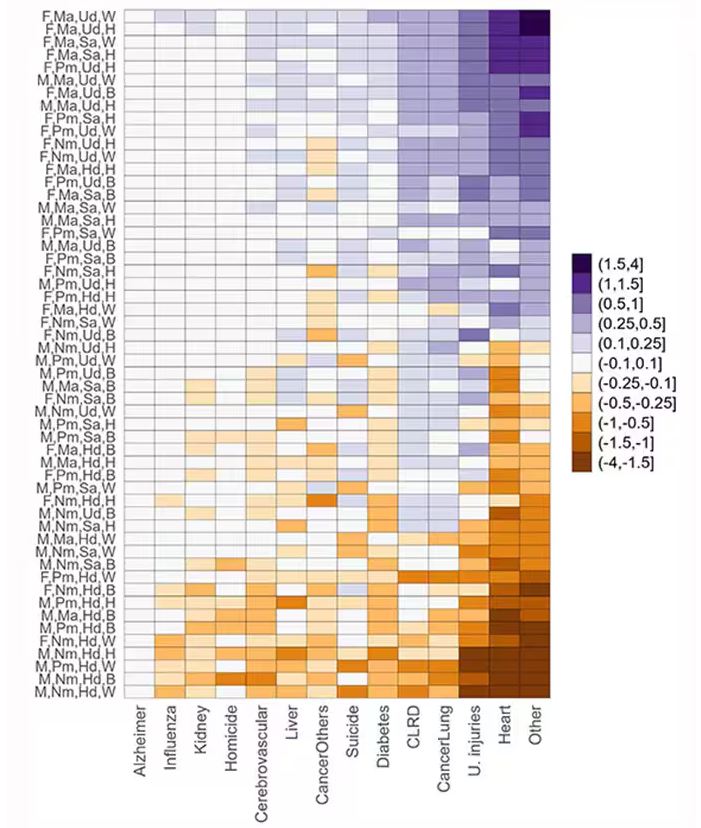

This is all very interesting, but as I said at the beginning, this isn’t terribly useful to me as a physician. More important is figuring out why these differences exist. And there are some clues in the study data, particularly when we examine causes of death. This figure ranks those 54 groups again, from the married, White, college-educated females down to the never-married, White, high school–educated males. The boxes show how much more or less likely this group is to die of a given condition than the general population.

Looking at the bottom groups, you can see a dramatically increased risk for death from unintentional injuries, heart disease, and lung cancer. You see an increased risk for suicide as well. In the upper tiers, the only place where risk seems higher than expected is for the category of “other cancers,” reminding us that many types of cancer do not respect definitions of socioeconomic status.

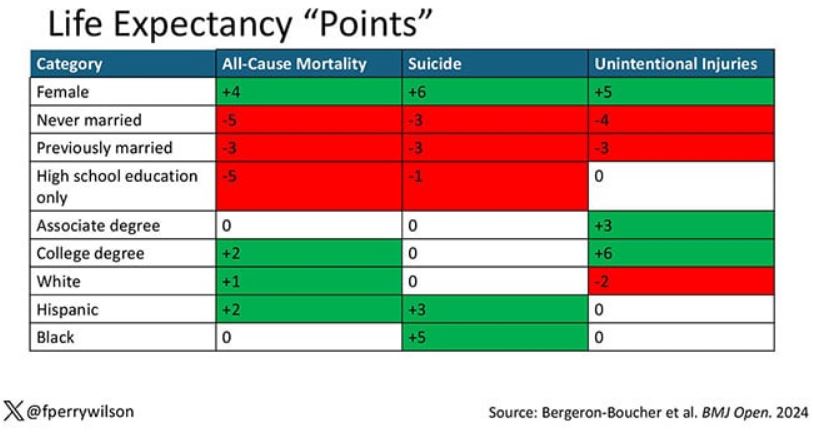

You can even update the risk-scoring system to reflect the risk for different causes of death. You can see here how White people, for example, are at higher risk for death from unintentional injuries relative to other populations, despite having a lower mortality overall.

So maybe, through cause of death, we get a little closer to the answer of why. But this paper is really just a start. Its primary effect should be to surprise us — that in a country as wealthy as the United States, such dramatic variation exists based on factors that, with the exception of sex, I suppose, are not really biological. Which means that to find the why, we may need to turn from physiology to sociology.

Dr. Wilson is associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

This transcript has been edited for clarity.

Two individuals in the United States are celebrating their 30th birthdays. It’s a good day. They are entering the prime of their lives. One is a married White woman with a university degree. The other is a never-married White man with a high school diploma.

How many more years of life can these two individuals look forward to?

There’s a fairly dramatic difference. The man can expect 37.1 more years of life on average, living to be about 67. The woman can expect to live to age 85. That’s a life-expectancy discrepancy of 18 years based solely on gender, education, and marital status.

I’m using these cases to illustrate the extremes of life expectancy across four key social determinants of health: sex, race, marital status, and education. We all have some sense of how these factors play out in terms of health, but a new study suggests that it’s actually quite a bit more complicated than we thought.

Let me start by acknowledging my own bias here. As a clinical researcher, I sometimes find it hard to appreciate the value of actuarial-type studies that look at life expectancy (or any metric, really) between groups defined by marital status, for example. I’m never quite sure what to do with the conclusion. Married people live longer, the headline says. Okay, but as a doctor, what am I supposed to do about that? Encourage my patients to settle down and commit? Studies showing that women live longer than men or that White people live longer than Black people are also hard for me to incorporate into my practice. These are not easily changeable states.

But studies examining these groups are a reasonable starting point to ask more relevant questions. Why do women live longer than men? Is it behavioral (men take more risks and are less likely to see doctors)? Or is it hormonal (estrogen has a lot of protective effects that testosterone does not)? Or is it something else?

Integrating these social determinants of health into a cohesive story is a bit harder than it might seem, as this study, appearing in BMJ Open, illustrates.

In the context of this study, every person in America can be placed into one of 54 mutually exclusive groups. You can be male or female. You can be Black, White, or Hispanic. You can have a high school diploma or less, an associate degree, or a college degree; and you can be married, previously married, or never married.

Of course, this does not capture the beautiful tapestry that is American life, but let’s give them a pass. They are working with data from the American Community Survey, which contains 8634 people — the statistics would run into trouble with more granular divisions. This survey can be population weighted, so you can scale up the results to reasonably represent the population of the United States.

The survey collected data on the four broad categories of sex, race, education, and marital status and linked those survey results to the Multiple Cause of Death dataset from the CDC. From there, it’s a pretty simple task to rank the 54 categories in order from longest to shortest life expectancy, as you can see here.

But that’s not really the interesting part of this study. Sure, there is a lot of variation; it’s interesting that these four factors explain about 18 years’ difference in life expectancy in this country. What strikes me here, actually, is the lack of an entirely consistent message across this spectrum.

Let me walk you through the second figure in this paper, because this nicely illustrates the surprising heterogeneity that exists here.

This may seem overwhelming, but basically, shapes that are higher up on the Y-axis represent the groups with longer life expectancy.

You can tell, for example, that shapes that are black in color (groups with high school educations or less) are generally lower. But not universally so. This box represents married, Hispanic females who do quite well in terms of life expectancy, even at that lower educational level.

The authors quantify this phenomenon by creating a mortality risk score that integrates these findings. It looks something like this, with 0 being average morality for the United States.

As you can see, you get a bunch of points for being female, but you lose a bunch for not being married. Education plays a large role, with a big hit for those who have a high school diploma or less, and a bonus for those with a college degree. Race plays a relatively more minor role.

This is all very interesting, but as I said at the beginning, this isn’t terribly useful to me as a physician. More important is figuring out why these differences exist. And there are some clues in the study data, particularly when we examine causes of death. This figure ranks those 54 groups again, from the married, White, college-educated females down to the never-married, White, high school–educated males. The boxes show how much more or less likely this group is to die of a given condition than the general population.

Looking at the bottom groups, you can see a dramatically increased risk for death from unintentional injuries, heart disease, and lung cancer. You see an increased risk for suicide as well. In the upper tiers, the only place where risk seems higher than expected is for the category of “other cancers,” reminding us that many types of cancer do not respect definitions of socioeconomic status.

You can even update the risk-scoring system to reflect the risk for different causes of death. You can see here how White people, for example, are at higher risk for death from unintentional injuries relative to other populations, despite having a lower mortality overall.

So maybe, through cause of death, we get a little closer to the answer of why. But this paper is really just a start. Its primary effect should be to surprise us — that in a country as wealthy as the United States, such dramatic variation exists based on factors that, with the exception of sex, I suppose, are not really biological. Which means that to find the why, we may need to turn from physiology to sociology.

Dr. Wilson is associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

This transcript has been edited for clarity.

Two individuals in the United States are celebrating their 30th birthdays. It’s a good day. They are entering the prime of their lives. One is a married White woman with a university degree. The other is a never-married White man with a high school diploma.

How many more years of life can these two individuals look forward to?

There’s a fairly dramatic difference. The man can expect 37.1 more years of life on average, living to be about 67. The woman can expect to live to age 85. That’s a life-expectancy discrepancy of 18 years based solely on gender, education, and marital status.

I’m using these cases to illustrate the extremes of life expectancy across four key social determinants of health: sex, race, marital status, and education. We all have some sense of how these factors play out in terms of health, but a new study suggests that it’s actually quite a bit more complicated than we thought.

Let me start by acknowledging my own bias here. As a clinical researcher, I sometimes find it hard to appreciate the value of actuarial-type studies that look at life expectancy (or any metric, really) between groups defined by marital status, for example. I’m never quite sure what to do with the conclusion. Married people live longer, the headline says. Okay, but as a doctor, what am I supposed to do about that? Encourage my patients to settle down and commit? Studies showing that women live longer than men or that White people live longer than Black people are also hard for me to incorporate into my practice. These are not easily changeable states.

But studies examining these groups are a reasonable starting point to ask more relevant questions. Why do women live longer than men? Is it behavioral (men take more risks and are less likely to see doctors)? Or is it hormonal (estrogen has a lot of protective effects that testosterone does not)? Or is it something else?

Integrating these social determinants of health into a cohesive story is a bit harder than it might seem, as this study, appearing in BMJ Open, illustrates.

In the context of this study, every person in America can be placed into one of 54 mutually exclusive groups. You can be male or female. You can be Black, White, or Hispanic. You can have a high school diploma or less, an associate degree, or a college degree; and you can be married, previously married, or never married.

Of course, this does not capture the beautiful tapestry that is American life, but let’s give them a pass. They are working with data from the American Community Survey, which contains 8634 people — the statistics would run into trouble with more granular divisions. This survey can be population weighted, so you can scale up the results to reasonably represent the population of the United States.

The survey collected data on the four broad categories of sex, race, education, and marital status and linked those survey results to the Multiple Cause of Death dataset from the CDC. From there, it’s a pretty simple task to rank the 54 categories in order from longest to shortest life expectancy, as you can see here.

But that’s not really the interesting part of this study. Sure, there is a lot of variation; it’s interesting that these four factors explain about 18 years’ difference in life expectancy in this country. What strikes me here, actually, is the lack of an entirely consistent message across this spectrum.

Let me walk you through the second figure in this paper, because this nicely illustrates the surprising heterogeneity that exists here.

This may seem overwhelming, but basically, shapes that are higher up on the Y-axis represent the groups with longer life expectancy.

You can tell, for example, that shapes that are black in color (groups with high school educations or less) are generally lower. But not universally so. This box represents married, Hispanic females who do quite well in terms of life expectancy, even at that lower educational level.

The authors quantify this phenomenon by creating a mortality risk score that integrates these findings. It looks something like this, with 0 being average morality for the United States.

As you can see, you get a bunch of points for being female, but you lose a bunch for not being married. Education plays a large role, with a big hit for those who have a high school diploma or less, and a bonus for those with a college degree. Race plays a relatively more minor role.

This is all very interesting, but as I said at the beginning, this isn’t terribly useful to me as a physician. More important is figuring out why these differences exist. And there are some clues in the study data, particularly when we examine causes of death. This figure ranks those 54 groups again, from the married, White, college-educated females down to the never-married, White, high school–educated males. The boxes show how much more or less likely this group is to die of a given condition than the general population.

Looking at the bottom groups, you can see a dramatically increased risk for death from unintentional injuries, heart disease, and lung cancer. You see an increased risk for suicide as well. In the upper tiers, the only place where risk seems higher than expected is for the category of “other cancers,” reminding us that many types of cancer do not respect definitions of socioeconomic status.

You can even update the risk-scoring system to reflect the risk for different causes of death. You can see here how White people, for example, are at higher risk for death from unintentional injuries relative to other populations, despite having a lower mortality overall.

So maybe, through cause of death, we get a little closer to the answer of why. But this paper is really just a start. Its primary effect should be to surprise us — that in a country as wealthy as the United States, such dramatic variation exists based on factors that, with the exception of sex, I suppose, are not really biological. Which means that to find the why, we may need to turn from physiology to sociology.

Dr. Wilson is associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator, New Haven, Connecticut. He has disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

Ozempic Curbs Hunger – And Not Just for Food

This transcript has been edited for clarity.

If you’ve been paying attention only to the headlines, when you think of “Ozempic” you’ll think of a few things: a blockbuster weight loss drug or the tip of the spear of a completely new industry — why not? A drug so popular that the people it was invented for (those with diabetes) can’t even get it.

Ozempic and other GLP-1 receptor agonists are undeniable game changers. Insofar as obesity is the number-one public health risk in the United States, antiobesity drugs hold immense promise even if all they do is reduce obesity.

In 2023, an article in Scientific Reports presented data suggesting that people on Ozempic might be reducing their alcohol intake, not just their total calories.

A 2024 article in Molecular Psychiatry found that the drug might positively impact cannabis use disorder. An article from Brain Sciences suggests that the drug reduces compulsive shopping.

A picture is starting to form, a picture that suggests these drugs curb hunger both literally and figuratively. That GLP-1 receptor agonists like Ozempic and Mounjaro are fundamentally anticonsumption drugs. In a society that — some would argue — is plagued by overconsumption, these drugs might be just what the doctor ordered.

If only they could stop people from smoking.

Oh, wait — they can.

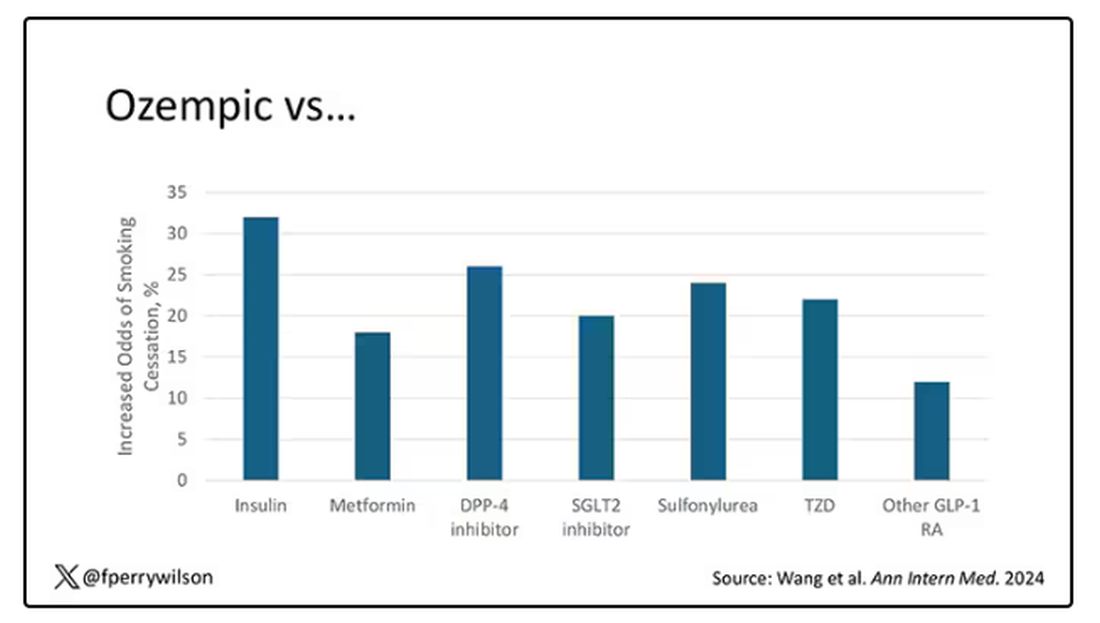

At least it seems they can, based on a new study appearing in Annals of Internal Medicine.

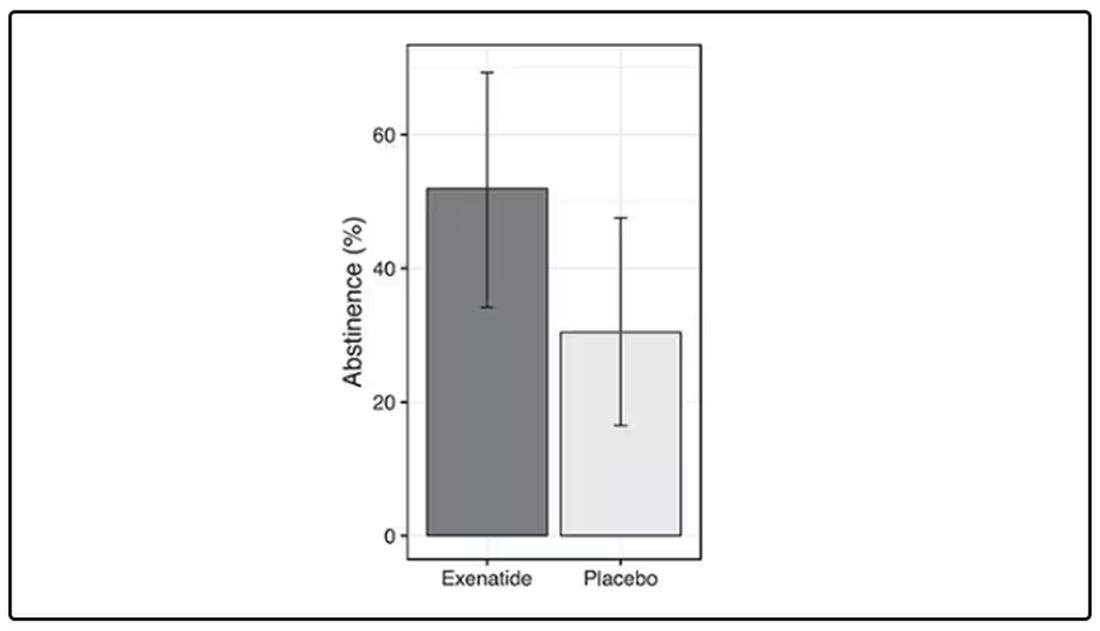

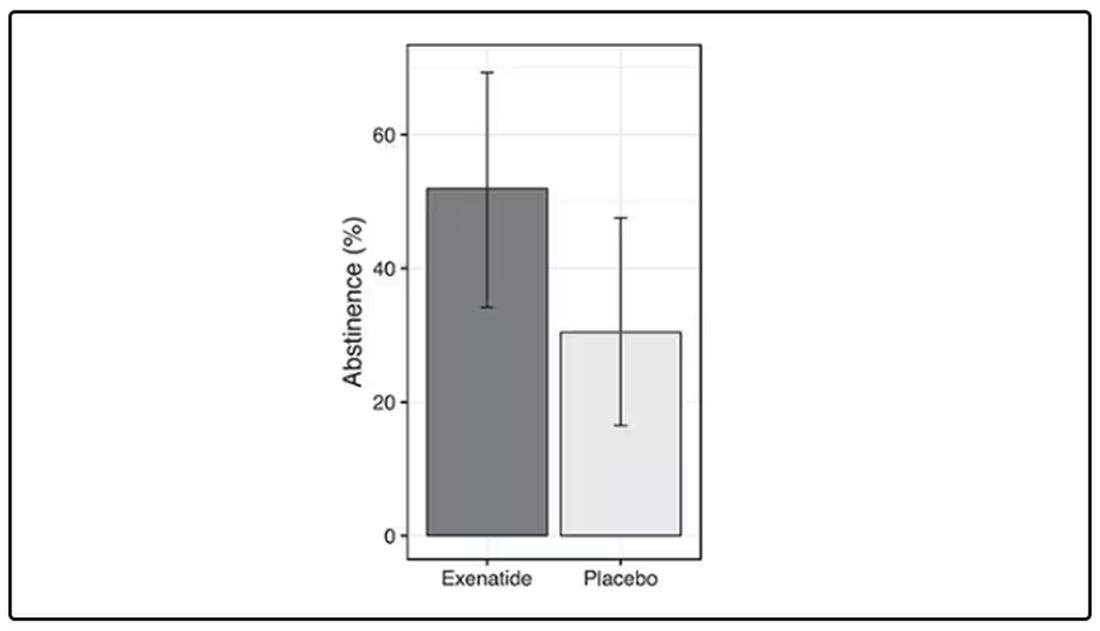

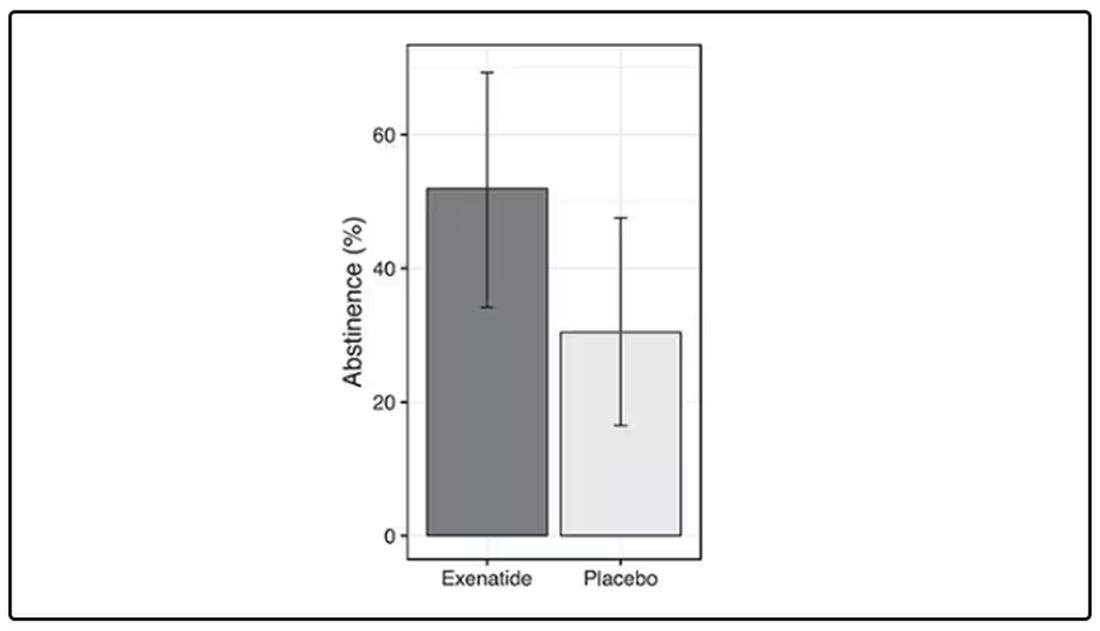

Before we get too excited, this is not a randomized trial. There actually was a small randomized trial of exenatide (Byetta), which is in the same class as Ozempic but probably a bit less potent, with promising results for smoking cessation.

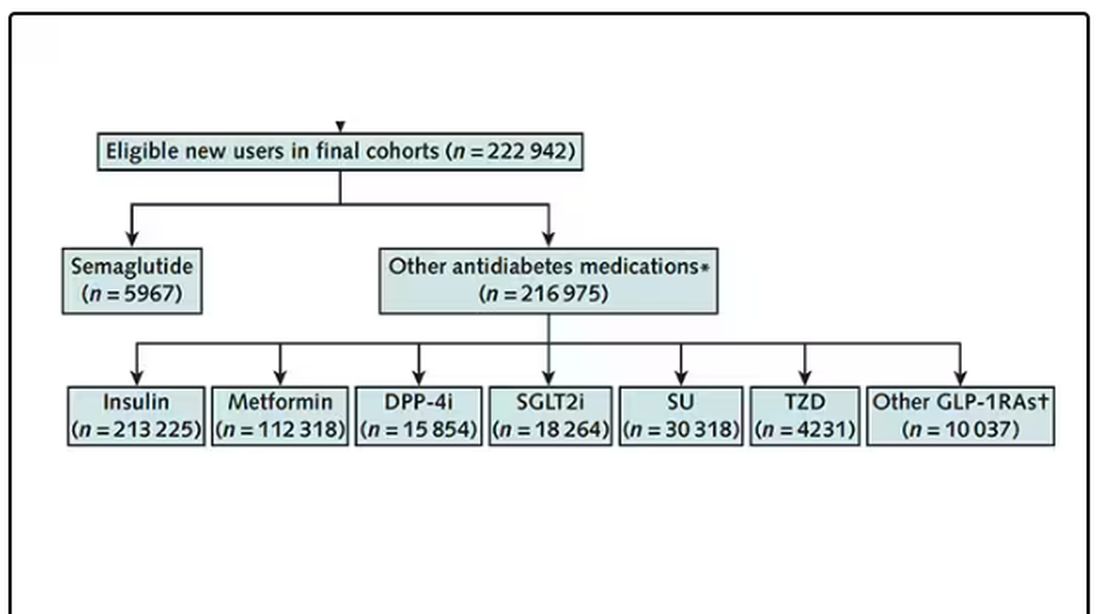

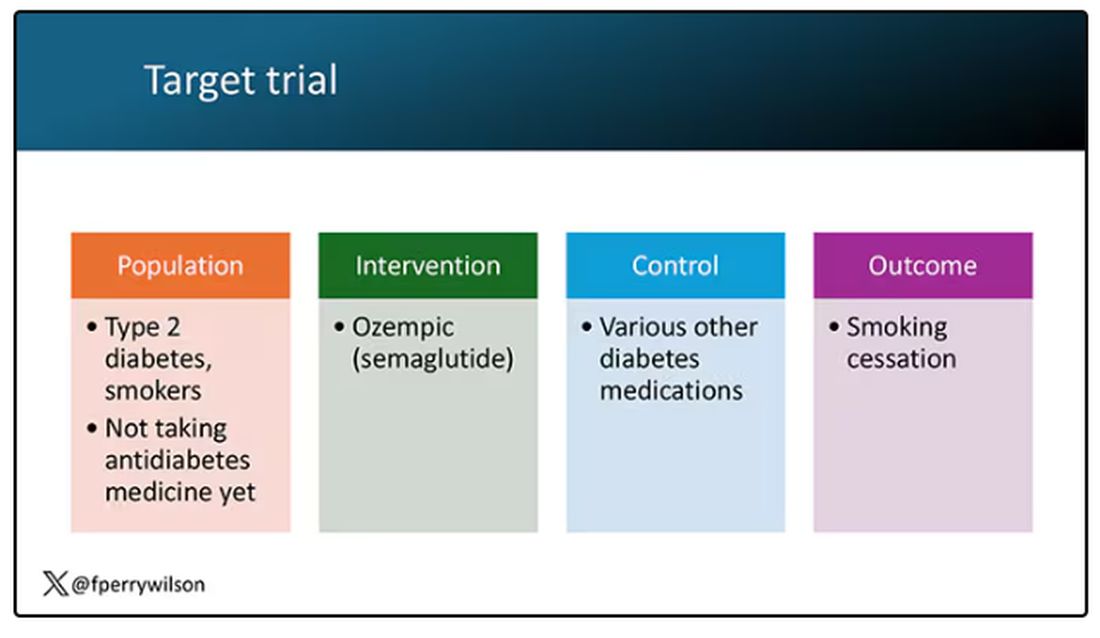

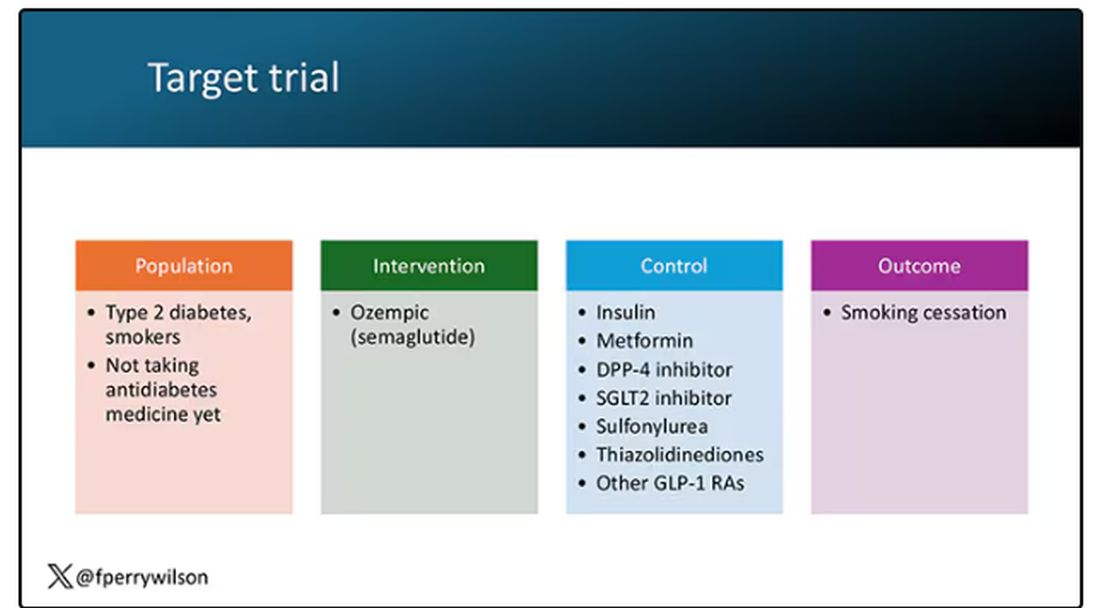

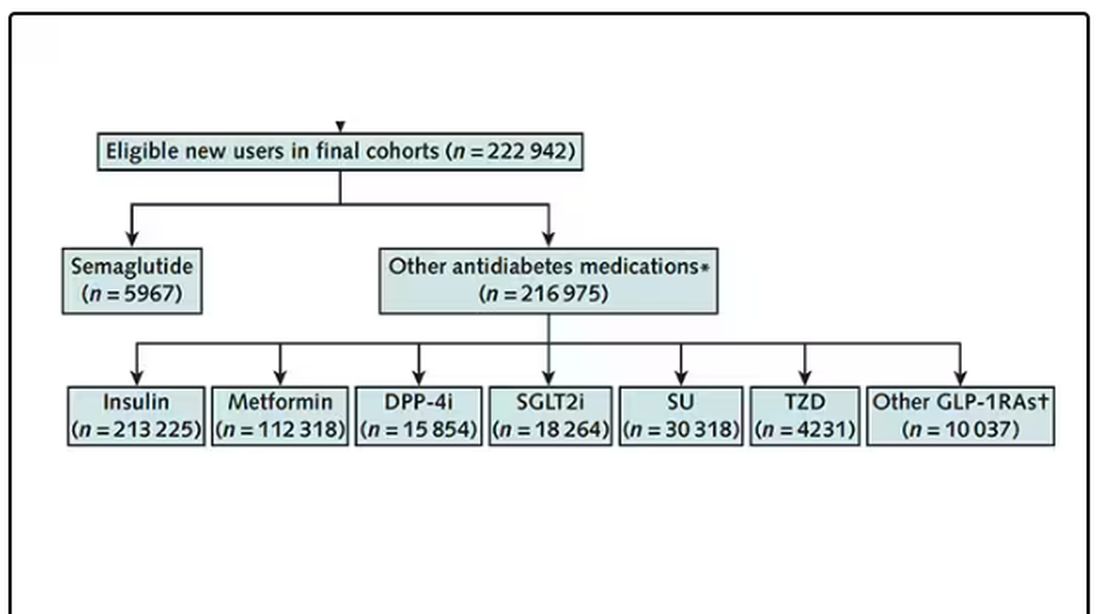

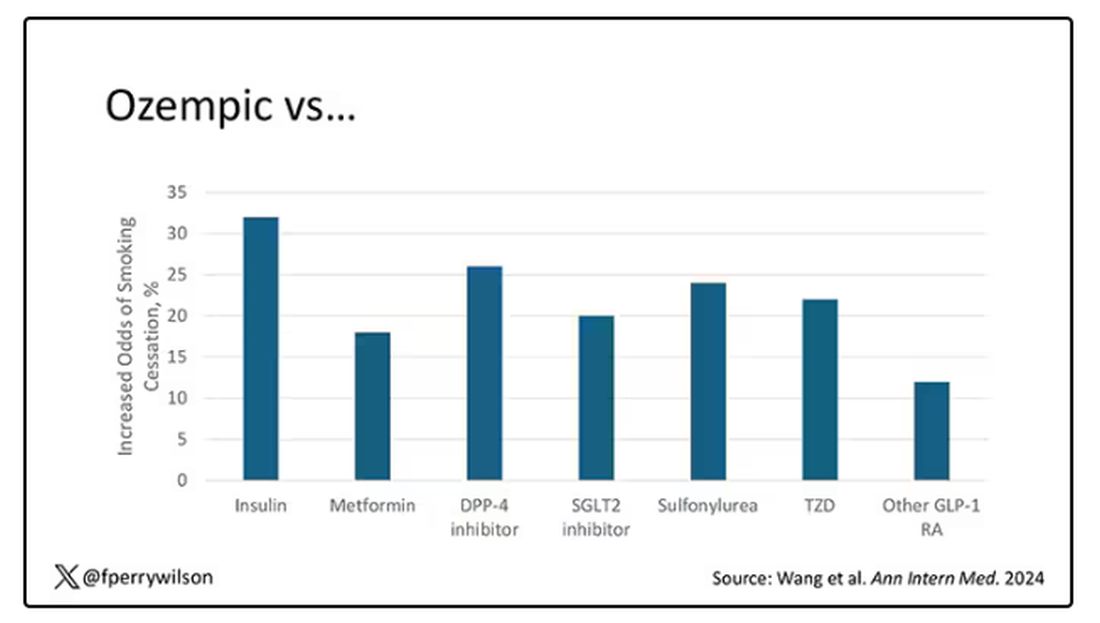

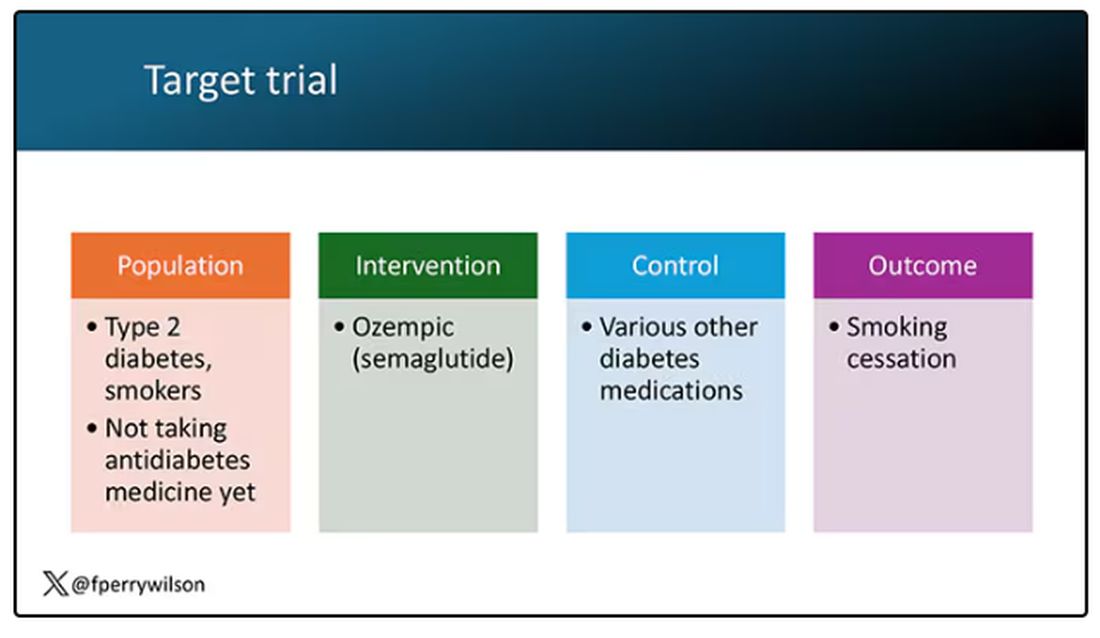

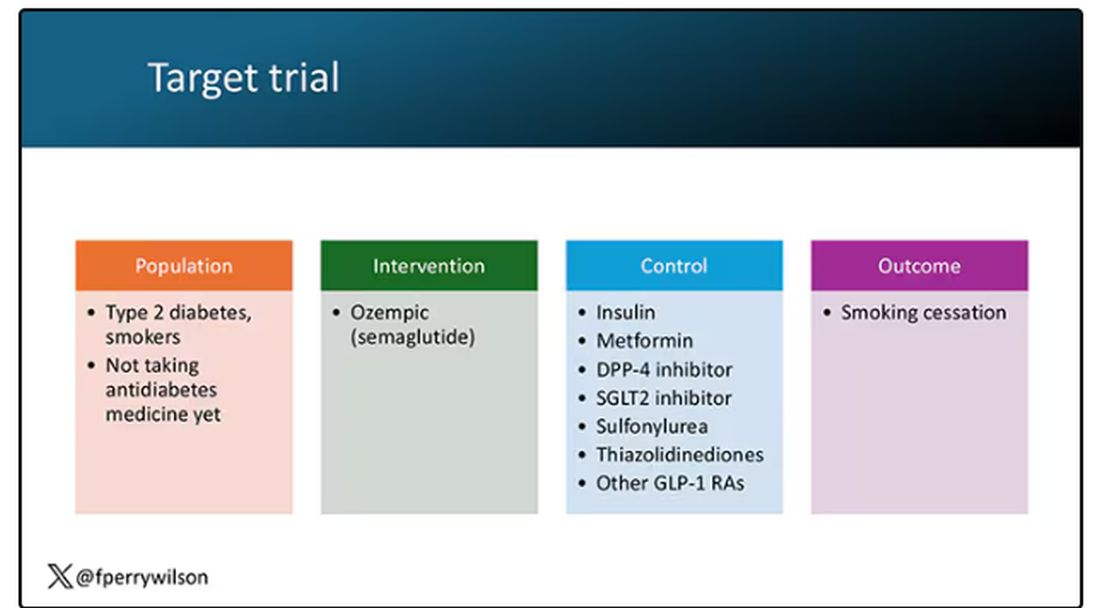

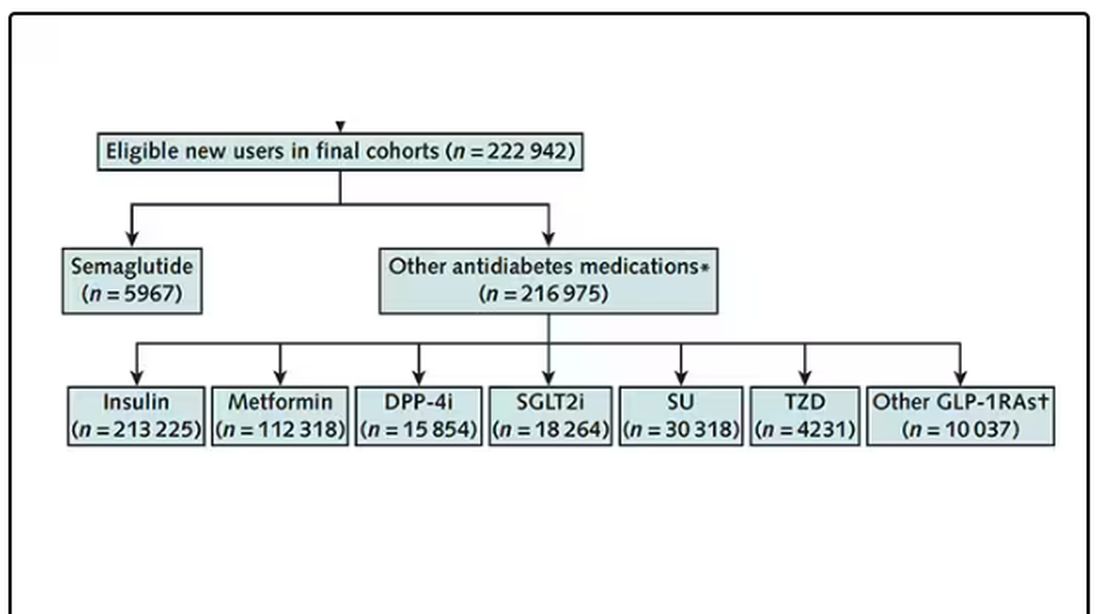

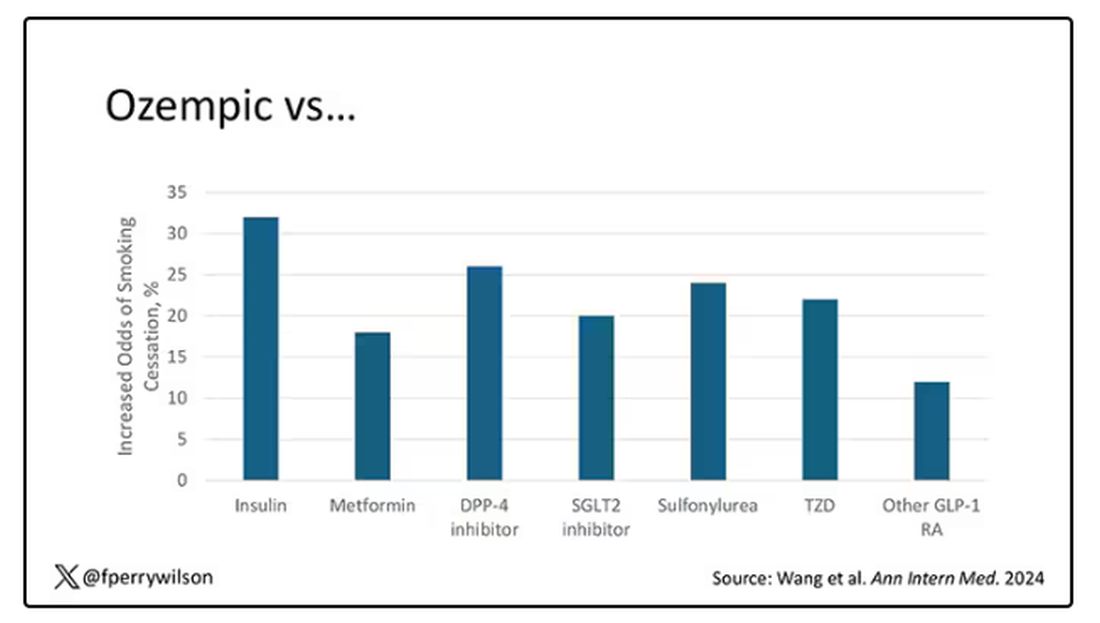

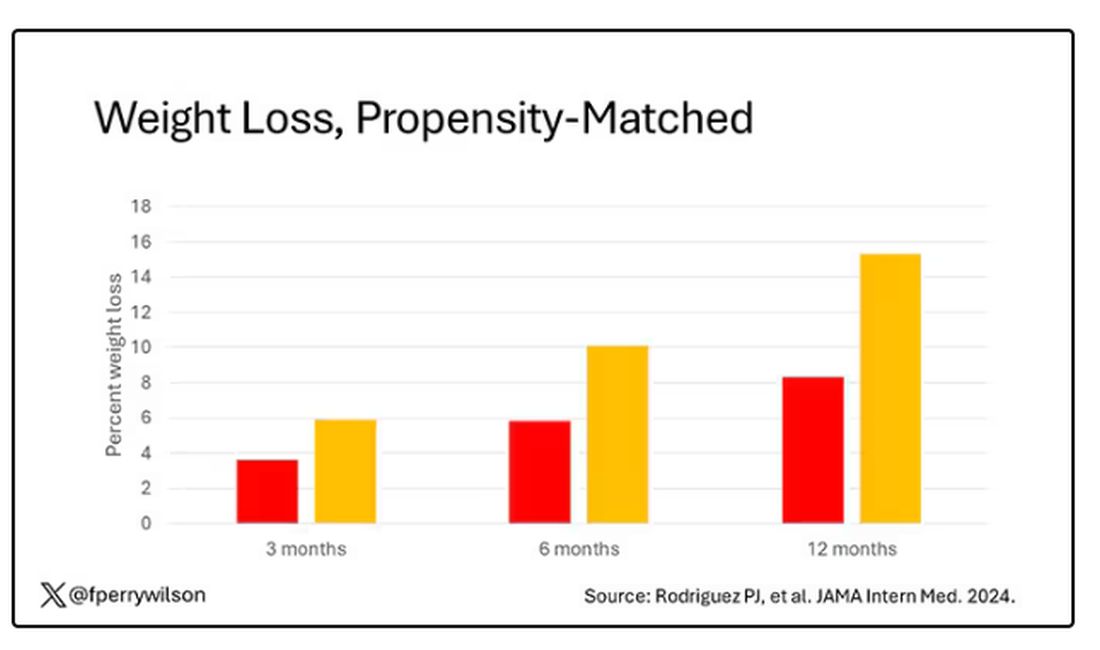

But Byetta is the weaker drug in this class; the market leader is Ozempic. So how can you figure out whether Ozempic can reduce smoking without doing a huge and expensive randomized trial? You can do what Nora Volkow and colleagues from the National Institute on Drug Abuse did: a target trial emulation study.