
Transitioning From Infusion Insulin

Hyperglycemia due to diabetes or stress is prevalent in the intensive care unit (ICU) and general ward setting. Umpierrez et al.1 reported hyperglycemia in 38% of hospitalized ward patients, 26% of whom had a known history of diabetes. While patients with hyperglycemia admitted to the ICU are primarily treated with infusion insulin, those on the general wards usually receive a subcutaneous regimen of insulin. How best to transition patients from infusion insulin to a subcutaneous regimen remains unclear and understudied.

A recent observational pilot study of 24 surgical and 17 cardiac/medical intensive care patients at our university‐based hospital found that glycemic control deteriorated significantly when patients with diabetes transitioned from infusion insulin to subcutaneous insulin. A total of 21 critical care patients with a history of diabetes did not receive basal insulin before the drip was discontinued and developed uncontrolled hyperglycemia (mean glucose of 216 mg/dL on Day 1 and 197 mg/dL on Day 2). Patients without a history of diabetes did well after transition, with a mean glucose of 142 mg/dL on Day 1 and 133 mg/dL on Day 2. A similar study by Czosnowski et al.2 demonstrated a significant increase in blood glucose, from 123 ± 26 mg/dL to 168 ± 50 mg/dL, upon discontinuation of infusion insulin.

This failed transition is disappointing, especially given that our institution has a reliable subcutaneous (SC) insulin order set, but it is not surprising, because the transition is inherently complex. The severity of illness, the amount and mode of nutritional intake, geographic location, and the provider team may all be in flux at the time of transition. A few centers have demonstrated that a much improved transition is possible.3–6 However, many of these solutions rely on technology or additional personnel that may not be available elsewhere, or their descriptions lack sufficient detail to implement these strategies with confidence at other institutions.

Therefore, we designed and piloted a protocol, coordinated by a multidisciplinary team, to transition patients from infusion insulin to SC insulin. The successful implementation of this protocol could serve as a blueprint to other institutions without the need for additional technology or personnel.

Methods

Patient Population/Setting

This was a prospective study of patients admitted to either the medical/cardiac intensive care unit (MICU/CCU) or surgical intensive care unit (SICU) at an academic medical facility and placed on infusion insulin for >24 hours. The Institutional Review Board (IRB) approved the study for prospective chart review and anonymous results reporting without individual consent.

Patients in the SICU were initiated on infusion insulin after 2 blood glucose readings were above 150 mg/dL, whereas initiation was left to the discretion of the attending physician in the MICU/CCU. A computerized system created in‐house recommends insulin infusion rates based on point‐of‐care (POC) glucose measurements with a target range of 91 mg/dL to 150 mg/dL.

Inclusion/Exclusion Criteria

All patients on continuous insulin infusion admitted to the SICU or the MICU/CCU between May 2008 and September 2008 were evaluated for the study (Figure 1). Patients were excluded from analysis if they were on the infusion for less than 24 hours, had a liver transplant, were discharged within 48 hours of transition, were transitioned to comfort care, or were switched to an insulin pump. All other patients were included in the final analysis.

Transition Protocol

Step 1: Does the Patient Need Basal SC Insulin?

Patients were recommended to receive basal SC insulin if they (1) were on medications for diabetes, (2) had an A1c ≥6%, or (3) received the equivalent of ≥60 mg of prednisone per day, AND had an infusion rate ≥1 unit/hour (Supporting Information Appendix 1). Patients on infusion insulin due to stress hyperglycemia were not placed on basal SC insulin, regardless of the infusion rate. Patients on high‐dose steroids for spinal injuries were excluded because their steroid use typically lasted less than 48 hours and usually ended before the time of transition. For patients who did not qualify for basal insulin, the protocol recommends premeal correctional insulin.
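The Step 1 decision rule can be written as a single predicate. The sketch below is illustrative only: the `Patient` fields and `needs_basal_insulin` are hypothetical names, the thresholds (A1c ≥6%, ≥60 mg/day prednisone equivalent, drip rate ≥1 unit/hour) follow the criteria described in Step 1, and it assumes that high‐dose steroid courses for spinal injury simply do not count toward the steroid criterion.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Patient:
    # Hypothetical fields for illustration; not part of any real order-entry system.
    on_diabetes_medications: bool
    a1c_percent: Optional[float]          # None if the A1c has not resulted yet
    prednisone_equiv_mg_per_day: float
    infusion_rate_units_per_hour: float   # current insulin drip rate
    steroids_for_spinal_injury: bool = False


def needs_basal_insulin(p: Patient) -> bool:
    """Return True if Step 1 recommends basal SC insulin at transition."""
    # Criteria 1 and 2: known diabetes (home medications) or A1c >= 6%.
    diabetes = p.on_diabetes_medications or (
        p.a1c_percent is not None and p.a1c_percent >= 6.0
    )
    # Criterion 3: high-dose steroids (assumption: spinal-injury courses are ignored).
    high_dose_steroids = (
        not p.steroids_for_spinal_injury and p.prednisone_equiv_mg_per_day >= 60
    )
    # Any qualifying criterion AND a drip rate of at least 1 unit/hour.
    return (diabetes or high_dose_steroids) and p.infusion_rate_units_per_hour >= 1.0
```

A patient with stress hyperglycemia meets none of the three criteria, so the predicate returns False at any drip rate and the patient would receive premeal correctional insulin only.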

To identify patients with diabetes, and therefore those in need of basal/nutritional insulin, we used A1c as well as past medical history. The American Diabetes Association (ADA) has recently accepted an A1c ≥6.5% for making a new diagnosis of diabetes.7 In a 2‐week trial before initiating the protocol, we used an A1c cutoff of 6.5%. However, patients with an A1c of 6% to 6.5% had poor glucose control after transition, so we chose 6% as our threshold. In addition, Rohlfing et al.8 and Greci et al.9 reported that an A1c cutoff of 6% was more than 97% sensitive for identifying a new diagnosis of diabetes.

To ensure an A1c was ordered and available at the time of transition, critical care pharmacists were given Pharmacy and Therapeutics Committee authorization to order an A1c at the start of the infusion. Pharmacists would also guide the primary team through the protocol's recommendations as well as alert the project team when a patient was expected to transition.

Step 2: Evaluate the Patient's Nutritional Intake to Calculate the Total Daily Dose (TDD) of Insulin

TDD is the total amount of insulin needed to cover a patient's basal and nutritional requirements over 24 hours. TDD was calculated by averaging the hourly drip rate over the prior 6 hours and multiplying by 20 if the patient was receiving full nutrition, or by 40 if receiving minimal nutrition, while on the drip. The higher multiplier for minimal nutrition reflects the expectation that insulin requirements would double once a full diet was tolerated. Full nutrition was defined as eating >50% of meals, receiving goal tube feeds, or receiving total parenteral nutrition (TPN). Minimal nutrition was defined as taking nothing by mouth (NPO), tolerating <50% of meals, or being on a clear liquid diet.
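The Step 2 arithmetic is small enough to express directly. This is a minimal sketch, assuming the drip rates for the prior 6 hours are available as a list of units/hour values (one per hour); the function name `total_daily_dose` is hypothetical.

```python
from statistics import mean


def total_daily_dose(hourly_rates_last_6h: list[float], full_nutrition: bool) -> float:
    """Step 2: projected total daily dose (TDD) of insulin, in units per 24 hours.

    hourly_rates_last_6h: drip rates (units/hour) over the prior 6 hours;
    assumed here to be one recorded rate per hour.
    """
    if not hourly_rates_last_6h:
        raise ValueError("need at least one recorded drip rate")
    avg_rate = mean(hourly_rates_last_6h)
    # Multiplier of 20 for full nutrition, 40 for minimal nutrition
    # (insulin needs are expected to double once a full diet is tolerated).
    multiplier = 20 if full_nutrition else 40
    return avg_rate * multiplier
```

For example, drip rates of 2, 2, 3, 3, 4, and 4 units/hour average 3 units/hour, giving a TDD of 60 units on full nutrition or 120 units on minimal nutrition.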

Step 3: Divide the TDD Into the Appropriate Components of Insulin Treatment (Basal, Nutritional and Correction), Depending on the Nutritional Status

In Step 3, the TDD was evenly divided into basal and nutritional insulin. A total of 50% of the TDD was given as glargine (Lantus) 2 hours prior to stopping the infusion. The remaining 50% was divided into nutritional components as either Regular insulin every 6 hours for patients on tube feeds or lispro (Humalog) before meals if tolerating an oral diet. For patients on minimal nutrition, the 50% nutritional insulin dose was not initiated until the patient was tolerating full nutrition.
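The Step 3 split can be sketched as follows. The `Regimen` type and `split_tdd` are hypothetical names; the sketch assumes three meals per day for premeal lispro, models only basal and nutritional insulin (correctional doses are not included), and raises an error for nutrition routes the text does not assign a nutritional insulin to, such as TPN.

```python
from dataclasses import dataclass


@dataclass
class Regimen:
    basal_glargine_units: float     # once daily, given 2 hours before the drip stops
    nutritional_insulin: str        # "regular", "lispro", or "none"
    nutritional_dose_units: float   # units per nutritional dose
    nutritional_doses_per_day: int


def split_tdd(tdd: float, full_nutrition: bool, route: str) -> Regimen:
    """Step 3: divide the TDD 50/50 into basal and nutritional insulin."""
    basal = 0.5 * tdd
    if not full_nutrition:
        # Minimal nutrition: the nutritional 50% is held until full nutrition is tolerated.
        return Regimen(basal, "none", 0.0, 0)
    nutritional_daily = 0.5 * tdd
    if route == "tube_feeds":
        # Regular insulin every 6 hours, i.e., 4 doses per day.
        return Regimen(basal, "regular", nutritional_daily / 4, 4)
    if route == "oral":
        # Lispro before meals; assumes 3 meals per day.
        return Regimen(basal, "lispro", nutritional_daily / 3, 3)
    raise ValueError(f"route not covered by this sketch: {route!r}")
```

With a TDD of 60 units on goal tube feeds, this gives 30 units of glargine plus 7.5 units of Regular insulin every 6 hours; on an oral diet, the same TDD gives 30 units of glargine plus 10 units of lispro before each meal.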

The protocol recommended giving basal insulin 2 hours before discontinuing the infusion, consistent with the American Association of Clinical Endocrinologists (AACE) and ADA consensus statement on inpatient glycemic control10 and with the pharmacokinetics of SC glargine.11 For these reasons, failure to give basal insulin before transition was considered failure to follow the protocol.

Safety features of the protocol included a maximum TDD of 100 units unless the patient was on >100 units/day of insulin prior to admission. A pager was carried by rotating hospitalists or pharmacist study investigators at all hours during the protocol implementation phase to answer any questions regarding a patient's transition.
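The TDD safety cap can be applied as a final step after the Step 2 calculation. This is a minimal sketch with a hypothetical function name; the text does not say what limit applies to patients whose home insulin exceeded 100 units/day, so the sketch assumes the cap is simply waived for them.

```python
MAX_TDD_UNITS = 100.0


def apply_tdd_cap(tdd_units: float, home_insulin_units_per_day: float = 0.0) -> float:
    """Limit the TDD to 100 units unless home insulin use exceeded 100 units/day."""
    if home_insulin_units_per_day > MAX_TDD_UNITS:
        # Assumption: the cap is waived entirely for these patients.
        return tdd_units
    return min(tdd_units, MAX_TDD_UNITS)
```

A projected TDD of 140 units is reduced to 100 units for a patient not on insulin at home, but is left at 140 units for a patient who used 120 units/day before admission.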

Data Collection/Monitoring

A multidisciplinary team of hospitalists, ICU pharmacists, critical care physicians, and nursing representatives was assembled for the study period. This team was responsible for protocol implementation, data collection, and surveillance of patient response to the protocol. Educational sessions with house staff and nurses in each unit were held before the study began and continued monthly throughout the study. In addition, biweekly huddles to review ongoing patient transitions and more formal monthly reviews were held.

The primary objective was to improve glycemic control, defined as the mean daily glucose, during the first 48 hours after transition without a significant increase in the percentage of patients with hypoglycemia (41–70 mg/dL) or severe hypoglycemia (≤40 mg/dL). Secondary endpoints included the percentage of patients with severe hyperglycemia (≥300 mg/dL), length of stay (LOS) calculated from the day of transition, the number of restarts of infusion insulin within 72 hours of transition, and the day‐weighted mean glucose for up to 12 days after transition in patients with diabetes.
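The glycemic endpoints can be computed per patient from the post‐transition glucose readings. This is a sketch under stated assumptions: the function name is hypothetical, severe hypoglycemia is taken as ≤40 mg/dL, hypoglycemia as 41–70 mg/dL, severe hyperglycemia as ≥300 mg/dL, and the goal range as 80–180 mg/dL (the range used in Table 2).

```python
def glycemic_events(readings_mg_dl: list[float]) -> dict:
    """Summarize one patient's post-transition glucose readings (mg/dL)."""
    if not readings_mg_dl:
        raise ValueError("no glucose readings")
    in_goal = sum(1 for g in readings_mg_dl if 80 <= g <= 180)
    return {
        # Threshold choices are assumptions; see the lead-in.
        "severe_hypoglycemia": any(g <= 40 for g in readings_mg_dl),
        "hypoglycemia": any(40 < g <= 70 for g in readings_mg_dl),
        "severe_hyperglycemia": any(g >= 300 for g in readings_mg_dl),
        "pct_in_goal_range": 100.0 * in_goal / len(readings_mg_dl),
    }
```

The percentages in Table 2 are pooled over all glucose values in a group (for example, 153 of 254 values), so a group‐level figure would sum the in‐range counts across patients rather than average these per‐patient percentages.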

Glucose values were collected and averaged over 6‐hour periods for 48 hours post transition. For patients with diabetes, POC glucose values were collected up to 12 days of hospitalization. Day‐weighted means were obtained by calculating the mean glucose for each hospital day, averaged across all hospital days.12
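The two averaging schemes can be sketched as below; the function names and input shapes are hypothetical. A day‐weighted mean takes the mean of each day's mean, so a day with many glucose checks does not outweigh a day with few.

```python
from collections import defaultdict
from statistics import mean


def six_hour_means(readings):
    """Mean glucose in each 6-hour window over the first 48 hours after transition.

    readings: iterable of (hours_since_transition, glucose_mg_dl) pairs.
    Returns {window_index: mean}, where window 0 covers hours [0, 6).
    """
    windows = defaultdict(list)
    for hours, glucose in readings:
        if 0 <= hours < 48:
            windows[int(hours // 6)].append(glucose)
    return {w: mean(values) for w, values in sorted(windows.items())}


def day_weighted_mean(readings_by_day, max_day=12):
    """Mean of the daily mean glucose values, so every hospital day counts equally.

    readings_by_day: {hospital_day: [glucose_mg_dl, ...]}, days numbered from 1.
    """
    daily_means = [
        mean(values)
        for day, values in readings_by_day.items()
        if day <= max_day and values
    ]
    return mean(daily_means)
```

For readings of 100, 200, and 300 mg/dL on Day 1 and a single 150 mg/dL reading on Day 2, the day‐weighted mean is 175 mg/dL, whereas pooling all four values would give 187.5 mg/dL.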

Analysis

Subjects were divided by the presence or absence of diabetes. Those with diabetes were recommended to receive basal SC insulin during the transition period. Within each group, subjects were further divided by adherence to the protocol. Failure to transition per protocol was defined as: not receiving at least 80% of the recommended basal insulin dose, receiving the initial dose of insulin after the drip was discontinued, or receiving basal insulin when none was recommended.
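The adherence definition can be encoded as a classifier for a single transition. This is an illustrative sketch with a hypothetical function name and inputs; it treats a first basal dose given exactly at the moment the drip stopped as not "after" discontinuation, which is an assumption.

```python
from typing import Optional


def followed_protocol(
    recommended_basal_units: float,
    given_basal_units: float,
    hours_from_drip_stop: Optional[float],
) -> bool:
    """Classify one transition as per protocol (True) or not (False).

    hours_from_drip_stop: when the first basal dose was given relative to stopping
    the drip; negative = before, positive = after. None if no basal insulin was given.
    """
    if recommended_basal_units == 0:
        # Basal insulin given when none was recommended counts as a deviation.
        return given_basal_units == 0
    if given_basal_units < 0.8 * recommended_basal_units:
        # Less than 80% of the recommended basal dose.
        return False
    # The initial dose must not come after the drip was discontinued.
    return hours_from_drip_stop is not None and hours_from_drip_stop <= 0
```

Under this rule, a patient recommended 30 units who received 25 units 1 hour before the drip stopped is classified as per protocol, while the same dose given 3 hours after the drip stopped is not.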

Descriptive characteristics (age, gender, LOS) were compared within subgroups using analysis of variance for continuous data and chi‐square tests for nominal data. Mean glucose values at 24 and 48 hours after transition and the 12‐day weighted mean glucose were compared using analysis of variance (Stata version 10). All data are expressed as mean ± standard deviation, and significance was set at P < 0.05.

Results

A total of 210 episodes of infusion insulin in ICU patients were evaluated for the study from May to September 2008 (Figure 1). Ninety‐six of these episodes were excluded, most commonly because of time on infusion insulin <24 hours or transition to comfort care. The remaining 114 infusions were eligible for the protocol. Because the protocol's insulin recommendations depend on a diagnosis of diabetes, transitions were further divided into those in patients with and without diabetes. Of these 114 transitions, the protocol was followed 66 times (58%).

Patients With Diabetes

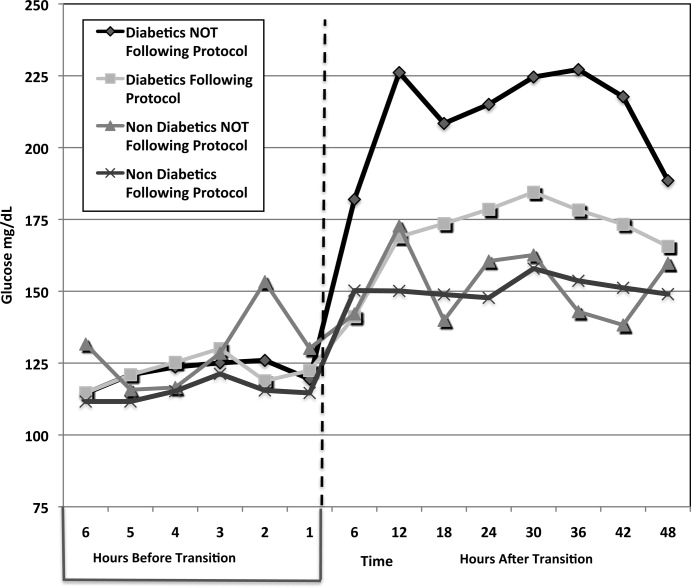

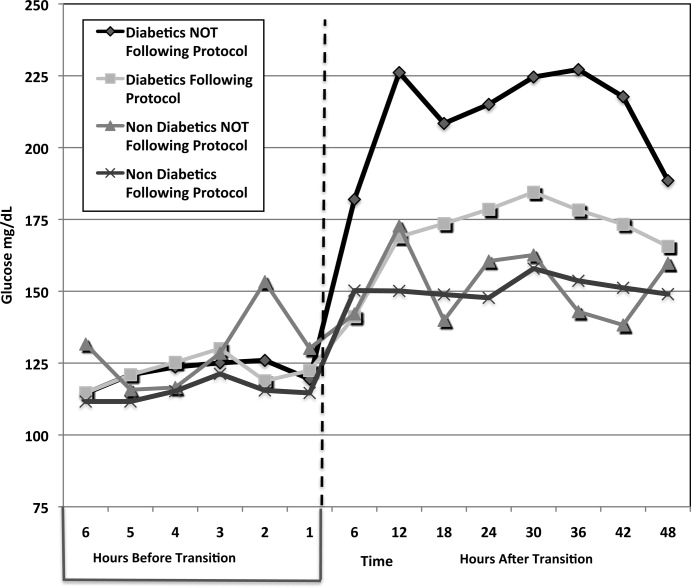

(Table 1: Patient Demographics; Table 2: Insulin Use and Glycemic Control; Figure 2: Transition Graph).

Table 1: Patient Demographics

| Characteristic | Diabetes: Protocol Followed (n = 29 patients*) | Diabetes: Protocol NOT Followed (n = 33 patients) | P Value | No Diabetes: Protocol Followed (n = 30 patients) | No Diabetes: Protocol NOT Followed (n = 9 patients) | P Value |
|---|---|---|---|---|---|---|
| Average age, years, mean ± SD | 57.7 ± 12.1 | 57.8 ± 12.3 | 0.681 | 56.5 ± 18.1 | 62.4 ± 15.5 | 0.532 |
| Male patients | 21 (72%) | 21 (63%) | 0.58 | 20 (66%) | 7 (77%) | 0.691 |
| BMI, mean ± SD | 30.7 ± 7.2 | 28.6 ± 6.8 | 0.180 | 27 ± 5.4 | 25.2 ± 3 | 0.081 |
| History of diabetes* | 18 (64%) | 25 (86%) | 0.07 | 0 | 0 | |
| Mean Hgb A1c (%) | 6.6 ± 1.2 | 7.3 ± 1.8 | 0.136 | 5.6 ± 0.3 | 5.4 ± 0.4 | 0.095 |
| Full nutrition | 26 (79%) | 24 (61%) | 0.131 | 23 (70%) | 9 (100%) | |
| On hemodialysis | 5 (17%) | 9 (27%) | 0.380 | 3 (10%) | 0 | |
| On >60 mg prednisone or equivalent per day | 7 (24%) | 10 (30%) | 0.632 | 0 | 0 | |

Table 2: Insulin Use and Glycemic Control

| Measure | Diabetes: Protocol Followed (n = 33 transitions) | Diabetes: Protocol NOT Followed (n = 39 transitions) | P Value | No Diabetes: Protocol Followed (n = 33 transitions) | No Diabetes: Protocol NOT Followed (n = 9 transitions) | P Value |
|---|---|---|---|---|---|---|
| Average infusion rate (units/hour) | 3.96 ± 3.15 | 3.74 ± 3.64 | 0.1597 | 2.34 ± 1.5 | 4.78 ± 1.6 | <0.001 |
| Average BG on infusion insulin (mg/dL) | 122.5 ± 27.5 | 122.5 ± 31.8 | 0.844 | 115.1 ± 22.7 | 127.5 ± 27.2 | 0.006 |
| Average basal dose given (units) | 34.5 ± 14.4 | 14.4 ± 15.3 | <0.001 | 0 | 32.7 | <0.001 |
| Hours before (−) or after (+) infusion stopped that basal insulin was given | −1.13 ± 0.9 | 11.6 ± 9.3 | <0.001 | n/a | 0.33 | * |
| Average BG 6 hours post transition (mg/dL) | 143.7 ± 39.4 | 182 ± 62.5 | 0.019 | 150.2 ± 54.9 | 142.1 ± 34.1 | 0.624 |
| Average BG 0 to 24 hours post transition (mg/dL) | 167.98 ± 50.24 | 211.02 ± 81.01 | <0.001 | 150.24 ± 54.9 | 150.12 ± 32.4 | 0.600 |
| Total insulin used from 0 to 24 hours (units) | 65 ± 32.2 | 26.7 ± 25.4 | <0.001 | 3.2 ± 4.1 | 51.3 ± 30.3 | <0.001 |
| Average BG 25 to 48 hours post transition (mg/dL) | 176.1 ± 55.25 | 218.2 ± 88.54 | <0.001 | 153 ± 35.3 | 154.4 ± 46.7 | 0.711 |
| Total insulin used from 25 to 48 hours (units) | 60.5 ± 35.4 | 28.1 ± 24.4 | <0.001 | 2.8 ± 3.8 | 44.9 ± 34 | <0.001 |
| Patients with severe hypoglycemia (<40 mg/dL), n | 1 (3%) | 1 (2.6%) | * | 0 | 1 | * |
| Patients with hypoglycemia (41–70 mg/dL), n | 3 (9%) | 2 (5.1%) | * | 1 | 0 | * |
| BG values in goal range (80–180 mg/dL), % (in range/total) | 60.2% (153/254) | 38.2% (104/272) | 0.004 | 80.1% (173/216) | 75.4% (49/65) | 0.83 |
| Patients with severe hyperglycemia (>300 mg/dL), n | 5 (15.2%) | 19 (48.7%) | 0.002 | 1 (3%) | 1 (11.1%) | * |
| LOS from transition (days) | 14.6 ± 11.3 | 14 ± 11.4 | 0.836 | 25.3 ± 24.4 | 13.6 ± 7.5 | 0.168 |

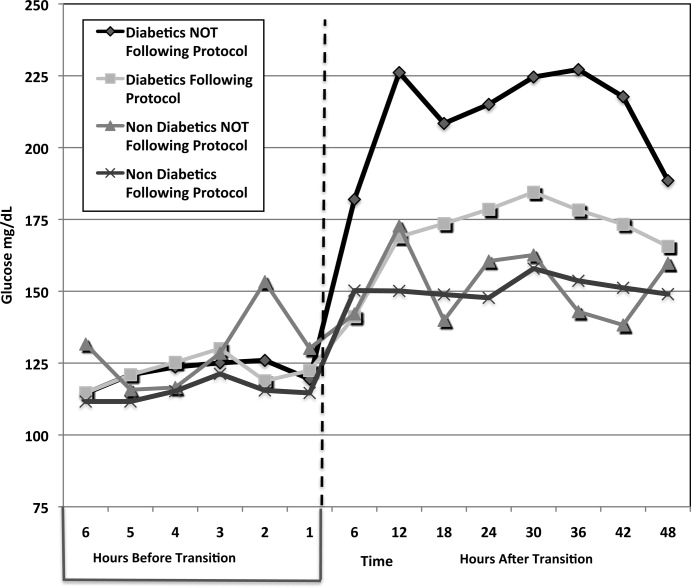

A total of 62 individual patients with diabetes, identified by past medical history or by an A1c ≥6% (n = 14), accounted for 72 separate transitions. Of these 72 transitions, 33 (46%) adhered to the protocol, while the remaining 39 (54%) departed from it at the treatment team's discretion. Despite similar insulin infusion rates and mean glucose values before transition, patients with diabetes following the protocol had better glycemic control at both 24 and 48 hours after transition than those transitioned without the protocol. Mean blood glucose on Day 1 was 168 mg/dL vs. 211 mg/dL (P < 0.001), and on Day 2 was 176 mg/dL vs. 218 mg/dL (P < 0.001), in protocol vs. nonprotocol patients with diabetes, respectively (Figure 2).

Within 48 hours of transition, a severe hypoglycemic event (≤40 mg/dL) occurred in 1 patient with diabetes following the protocol and in 1 patient not following the protocol. Both events resulted from a mismatch between nutrition and insulin: emesis after insulin in one case and held tube feeds in the other. These findings were consistent with our prior examination of hypoglycemia cases.13 Severe hyperglycemia (glucose ≥300 mg/dL) occurred in 5 (15%) patients following the protocol vs. 19 (49%) patients not following the protocol (P = 0.002). Patients with diabetes following the protocol received significantly more insulin than those not following it, both in the first 24 hours (mean of 65 units vs. 27 units, P < 0.001) and from 24 to 48 hours after transition (mean of 61 units vs. 28 units, P < 0.001).

An alternative method used at our institution and others14, 15 calculates TDD from the patient's weight and body habitus. When we compared the TDD projected from weight with the TDD calculated by the transition protocol, the weight‐based method was much less aggressive. For patients following the protocol, the weight‐based method projected a mean TDD of 46.3 ± 16.9 units, whereas the protocol projected a mean TDD of 65 ± 33.2 units (P = 0.001).

Patients with diabetes following protocol received basal insulin an average of 1.13 hours prior to discontinuing the insulin infusion versus 11.6 hours after for those not following protocol.

Three patients with diabetes following the protocol and 3 patients with diabetes not following the protocol were restarted on infusion insulin within 72 hours of transition.

LOS from final transition to discharge was similar between protocol vs. nonprotocol patients (14.6 vs. 14 days, P = 0.836).

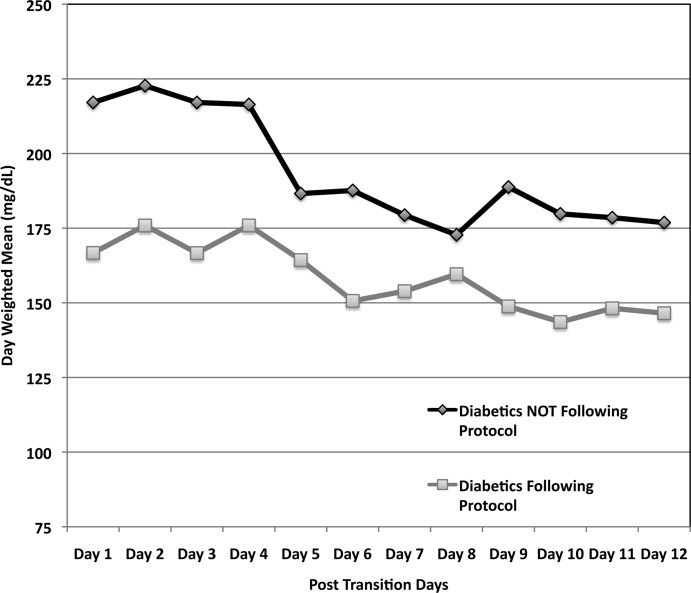

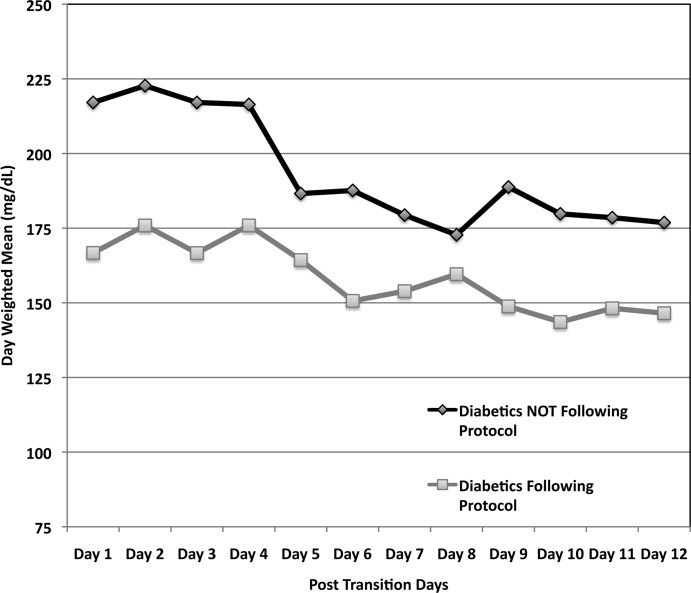

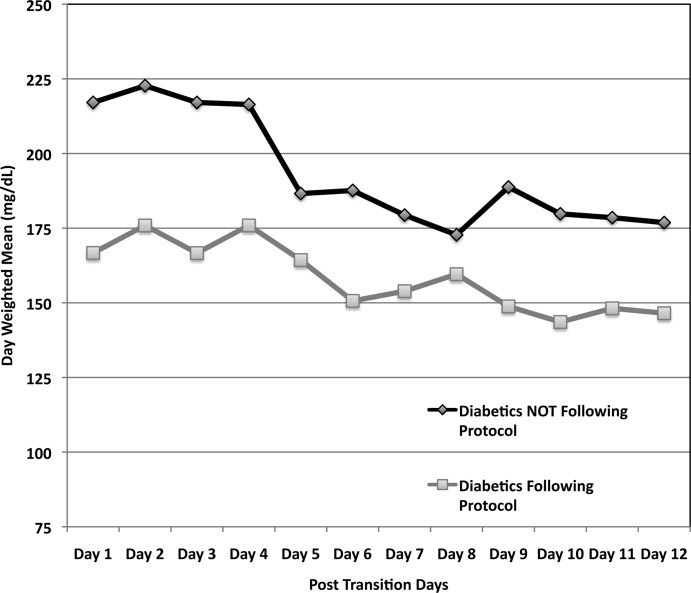

Figure 3 demonstrates that when used correctly, the protocol provides an extended period of glycemic control up to 12 days post transition. Patients transitioned per protocol had a day‐weighted mean glucose of 155 mg/dL vs. 184 mg/dL (P = 0.043) in patients not following protocol. There was only 1 glucose value less than 40 mg/dL between days 2 to 12 in the protocol group.

Patients Without Diabetes

The 39 individual patients without diabetes accounted for 42 transition events. Of these, 33 transitions (78.6%) followed the protocol and were placed on correctional insulin only. The remaining 9 transitions deviated from the protocol in that basal insulin was prescribed; these patients maintained comparable glycemic control without an increase in hypoglycemic events. After transition, patients without diabetes on the protocol maintained a mean glucose of 150 mg/dL in the first 24 hours and 153 mg/dL from 24 to 48 hours. They required a mean daily correctional insulin dose of 3.2 units on Day 1 and 2.8 units on Day 2, despite an average drip rate of 2.3 units/hour at the time of transition (Table 2). There were no severe hypoglycemic events, and 80% of blood glucose values were within the goal range of 80 mg/dL to 180 mg/dL. Only 1 patient had a single blood glucose value >300 mg/dL. No patient was restarted on infusion insulin after transition.

Patients without diabetes had a longer LOS after transition off of infusion insulin when compared to their diabetic counterparts (22 vs. 14 days).

Discussion

This study demonstrates the utility of hospitalist‐pharmacist collaboration in creating and implementing a safe and effective transition protocol for patients on infusion insulin. The protocol distinguishes patients who need a basal/nutritional insulin regimen from those who will do well with premeal correctional insulin alone. Among patients with diabetes, daily mean glucose after transition was lower in those following the protocol than in those not following it, without an increase in hypoglycemic events.

We found an equal number of insulin infusion restarts within 72 hours of transition and a similar LOS in protocol vs. nonprotocol patients with diabetes. The LOS was increased for patients without diabetes. This may be due to worse outcomes noted in patients with stress hyperglycemia in other studies.1, 16

The higher multiplier for patients on minimal nutrition confused many protocol users. The protocol has since been modified: the infusion rate is averaged over the prior 6 hours and then multiplied by 20 for all patients. This yields roughly 80% (20 of 24 hours) of the projected insulin requirement for the next 24 hours, based on the patient's current needs. The result is given as 50% basal and 50% nutritional insulin for patients on full nutrition, or as 100% basal for those on minimal nutrition. This change has no impact on the amount of insulin the patient receives, but it is more intuitive to providers. Instead of being calculated as the projected requirement once full nutrition is reached, the TDD is now based on current insulin needs and is doubled when patients receiving minimal nutrition advance to full nutrition.
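The claim that the revision does not change the insulin delivered can be checked arithmetically. This sketch uses hypothetical function names and returns (basal, nutritional) units per day for a given average drip rate; it compares the original multipliers of 20 and 40 with the revised single multiplier of 20, including the doubling step once a minimal‐nutrition patient advances to full nutrition.

```python
def original_protocol(avg_rate: float, full_nutrition: bool) -> tuple[float, float]:
    """(basal, nutritional) units/day under the original multipliers of 20 or 40."""
    tdd = avg_rate * (20 if full_nutrition else 40)
    basal = 0.5 * tdd
    nutritional = 0.5 * tdd if full_nutrition else 0.0  # held until full nutrition
    return basal, nutritional


def original_deferred_nutritional(avg_rate: float) -> float:
    """Nutritional units/day started later for a minimal-nutrition patient (original)."""
    return 0.5 * avg_rate * 40


def revised_protocol(avg_rate: float, full_nutrition: bool) -> tuple[float, float]:
    """(basal, nutritional) units/day under the revised single multiplier of 20."""
    tdd = avg_rate * 20  # 20 of 24 hours at the current drip rate
    if full_nutrition:
        return 0.5 * tdd, 0.5 * tdd
    return tdd, 0.0  # all basal while on minimal nutrition


def revised_after_advancing(avg_rate: float) -> tuple[float, float]:
    """Revised protocol once a minimal-nutrition patient reaches full nutrition."""
    tdd = 2 * avg_rate * 20  # TDD doubled, then split 50/50
    return 0.5 * tdd, 0.5 * tdd
```

For a drip rate of 2.5 units/hour on minimal nutrition, both versions give 50 units of glargine; after advancing to full nutrition, both give 50 units of basal plus 50 units/day of nutritional insulin.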

Our study is limited by the lack of a true randomized control group. In lieu of this, we used our patients who did not follow protocol as our control. While not truly randomized, this group is comparable based on their age, gender mix, infusion rate, mean A1c, and projected TDD. This group was also similar to our preprotocol group mentioned in the Introduction.

Additional study limitations include the small number of nondiabetic patients not following the protocol (n = 9). We noted higher infusion rates in nondiabetics not following protocol versus those following protocol, which may have driven the primary team to give basal insulin. It is possible that these 9 patients were not yet ready to transition from infusion insulin or had other stressors not measured in our study. Unfortunately their small population size limits more extensive analysis.

The protocol was followed only about half the time (58% of all transitions and 46% of transitions in patients with diabetes) for a variety of reasons. Patients who transitioned at night or on weekends were monitored by covering pharmacists and physicians who may not have been familiar with the protocol. Many physicians and nurses remain fearful of hypoglycemia, and the outcomes of our study were not yet available for education. Some reported difficulty understanding how to use the protocol and why a higher multiplier was used for patients on minimal nutrition.

Efforts to improve adherence to the protocol are ongoing with some success, aided by the data demonstrating the safety and efficacy of the transition protocol.

Conclusion

By collaborating with ICU pharmacists we were able to design and implement a protocol that successfully and safely transitioned patients from infusion insulin to subcutaneous insulin. Patients following the protocol had a higher percentage of glucose values within the goal glucose range of 80 mg/dL to 180 mg/dL. In the future, we hope to automate the calculation of TDD and directly recommend a basal/bolus regimen for the clinical provider.

1. Hyperglycemia: an independent marker of in‐hospital mortality in patients with undiagnosed diabetes. J Clin Endocrinol Metab. 2002;87:978–982.
2. Evaluation of glycemic control following discontinuation of an intensive insulin protocol. J Hosp Med. 2009;4:28–34.
3. Inpatient management of hyperglycemia: the Northwestern experience. Endocr Pract. 2006;12:491–505.
4. Intravenous insulin infusion therapy: indications, methods, and transition to subcutaneous insulin therapy. Endocr Pract. 2004;10(Suppl 2):71–80.
5. Conversion of intravenous insulin infusions to subcutaneously administered insulin glargine in patients with hyperglycemia. Endocr Pract. 2006;12:641–650.
6. Effects of outcome on in‐hospital transition from intravenous insulin infusion to subcutaneous therapy. Am J Cardiol. 2006;98:557–564.
7. International Expert Committee report on the role of the A1C assay in the diagnosis of diabetes. Diabetes Care. 2009;32:1327–1334.
8. Use of GHb (HbA1c) in screening for undiagnosed diabetes in the U.S. population. Diabetes Care. 2000;23:187–191.
9. Utility of HbA(1c) levels for diabetes case finding in hospitalized patients with hyperglycemia. Diabetes Care. 2003;26:1064–1068.
10. American Association of Clinical Endocrinologists and American Diabetes Association consensus statement on inpatient glycemic control. Endocr Pract. 2009;15(4):353–369.
11. Pharmacokinetics and pharmacodynamics of subcutaneous injection of long‐acting human insulin analog glargine, NPH insulin, and ultralente human insulin and continuous subcutaneous infusion of insulin lispro. Diabetes. 2000;49:2142–2148.
12. “Glucometrics”‐‐assessing the quality of inpatient glucose management. Diabetes Technol Ther. 2006;8:560–569.
13. Iatrogenic inpatient hypoglycemia: risk factors, treatment, and prevention: analysis of current practice at an academic medical center with implications for improvement efforts. Diabetes Spectr. 2008;21:241–247.
14. Management of diabetes and hyperglycemia in hospitals. Diabetes Care. 2004;27:553–591.
15. Insulin management of diabetic patients on general medical and surgical floors. Endocr Pract. 2006;12(Suppl 3):86–90.
16. Inadequate blood glucose control is associated with in‐hospital mortality and morbidity in diabetic and nondiabetic patients undergoing cardiac surgery. Circulation. 2008;118:113–123.

Hyperglycemia due to diabetes or stress is prevalent in the intensive care unit (ICU) and general ward setting. Umpierrez et al.1 reported hyperglycemia in 38% of hospitalized ward patients with 26% having a known history of diabetes. While patients with hyperglycemia admitted to the ICU are primarily treated with infusion insulin, those on the general wards usually receive a subcutaneous regimen of insulin. How best to transition patients from infusion insulin to a subcutaneous regimen remains elusive and under evaluated.

A recent observational pilot study of 24 surgical and 17 cardiac/medical intensive care patients at our university‐based hospital found that glycemic control significantly deteriorated when patients with diabetes transitioned from infusion insulin to subcutaneous insulin. A total of 21 critical care patients with a history of diabetes failed to receive basal insulin prior to discontinuation of the drip and developed uncontrolled hyperglycemia (mean glucose Day 1 of 216 mg/dL and Day 2 of 197 mg/dL). Patients without a history of diabetes did well post transition with a mean glucose of 142 mg/dL Day 1 and 133 mg/dL Day 2. A similar study by Czosnowski et al.2 demonstrated a significant increase in blood glucose from 123 26 mg/dL to 168 50 mg/dL upon discontinuation of infusion insulin.

This failed transition is disappointing, especially in view of the existence of a reliable subcutaneous (SC) insulin order set at our institution, but not surprising, as this is an inherently complex process. The severity of illness, the amount and mode of nutritional intake, geographic location, and provider team may all be in flux at the time of this transition. A few centers have demonstrated that a much improved transition is possible,36 however many of these solutions involve technology or incremental personnel that may not be available or the descriptions may lack sufficient detail to implement theses strategies with confidence elsewhere.

Therefore, we designed and piloted a protocol, coordinated by a multidisciplinary team, to transition patients from infusion insulin to SC insulin. The successful implementation of this protocol could serve as a blueprint to other institutions without the need for additional technology or personnel.

Methods

Patient Population/Setting

This was a prospective study of patients admitted to either the medical/cardiac intensive care unit (MICU/CCU) or surgical intensive care unit (SICU) at an academic medical facility and placed on infusion insulin for >24 hours. The Institutional Review Board (IRB) approved the study for prospective chart review and anonymous results reporting without individual consent.

Patients in the SICU were initiated on infusion insulin after 2 blood glucose readings were above 150 mg/dL, whereas initiation was left to the discretion of the attending physician in the MICU/CCU. A computerized system created in‐house recommends insulin infusion rates based on point‐of‐care (POC) glucose measurements with a target range of 91 mg/dL to 150 mg/dL.

Inclusion/Exclusion Criteria

All patients on continuous insulin infusion admitted to the SICU or the MICU/CCU between May 2008 and September 2008 were evaluated for the study (Figure 1). Patients were excluded from analysis if they were on the infusion for less than 24 hours, had a liver transplant, were discharged within 48 hours of transition, were made comfort care or transitioned to an insulin pump. All other patients were included in the final analysis.

Transition Protocol

Step 1: Does the Patient Need Basal SC Insulin?

Patients were recommended to receive basal SC insulin if they either: (1) were on medications for diabetes; (2) had an A1c 6%; or (3) received the equivalent of 60 mg of prednisone; AND had an infusion rate 1 unit/hour (Supporting Information Appendix 1). Patients on infusion insulin due to stress hyperglycemia, regardless of the infusion rate, were not placed on basal SC insulin. Patients on high dose steroids due to spinal injuries were excluded because their duration of steroid use was typically less than 48 hours and usually ended prior to the time of transition. The protocol recommends premeal correctional insulin for those not qualifying for basal insulin.

In order to establish patients in need of basal/nutritional insulin we opted to use A1c as well as past medical history to identify patients with diabetes. The American Diabetes Association (ADA) has recently accepted using an A1c 6.5% to make a new diagnosis of diabetes.7 In a 2‐week trial prior to initiating the protocol we used a cut off A1c of 6.5%. However, we found that patients with an A1c of 6% to 6.5% had poor glucose control post transition; therefore we chose 6% as our identifier. In addition, using a cut off A1c of 6% was reported by Rohlfing et al.8 and Greci et al.9 to be more than 97% sensitive at identifying a new diagnosis of diabetes.

To ensure an A1c was ordered and available at the time of transition, critical care pharmacists were given Pharmacy and Therapeutics Committee authorization to order an A1c at the start of the infusion. Pharmacists would also guide the primary team through the protocol's recommendations as well as alert the project team when a patient was expected to transition.

Step 2: Evaluate the Patient's Nutritional Intake to Calculate the Total Daily Dose (TDD) of Insulin

TDD is the total amount of insulin needed to cover both the nutritional and basal requirements of a patient over the course of 24 hours. TDD was calculated by averaging the hourly drip rate over the prior 6 hours and multiplying by 20 if taking in full nutrition or 40 if taking minimal nutrition while on the drip. A higher multiplier was used for those on minimal nutrition with the expectation that their insulin requirements would double once tolerating a full diet. Full nutrition was defined as eating >50% of meals, on goal tube feeds, or receiving total parenteral nutrition (TPN). Minimal nutrition was defined as taking nothing by mouth (or NPO), tolerating <50% of meals, or on a clear liquid diet.

Step 3: Divide the TDD Into the Appropriate Components of Insulin Treatment (Basal, Nutritional and Correction), Depending on the Nutritional Status

In Step 3, the TDD was evenly divided into basal and nutritional insulin. A total of 50% of the TDD was given as glargine (Lantus) 2 hours prior to stopping the infusion. The remaining 50% was divided into nutritional components as either Regular insulin every 6 hours for patients on tube feeds or lispro (Humalog) before meals if tolerating an oral diet. For patients on minimal nutrition, the 50% nutritional insulin dose was not initiated until the patient was tolerating full nutrition.

The protocol recommended basal insulin administration 2 hours prior to infusion discontinuation as recommended by the American Association of Clinical Endocrinologists (AACE) and ADA consensus statement on inpatient glycemic control as well as pharmacokinetics.10, 11 For these reasons, failure to receive basal insulin prior to transition was viewed as failure to follow the protocol.

Safety features of the protocol included a maximum TDD of 100 units unless the patient was on >100 units/day of insulin prior to admission. A pager was carried by rotating hospitalists or pharmacist study investigators at all hours during the protocol implementation phase to answer any questions regarding a patient's transition.

Data Collection/Monitoring

A multidisciplinary team consisting of hospitalists, ICU pharmacists, critical care physicians and nursing representatives was assembled during the study period. This team was responsible for protocol implementation, data collection, and surveillance of patient response to the protocol. Educational sessions with house staff and nurses in each unit were held prior to the beginning of the study as well as continued monthly educational efforts during the study. In addition, biweekly huddles to review ongoing patient transitions as well as more formal monthly reviews were held.

The primary objective was to improve glycemic control, defined as the mean daily glucose, during the first 48 hours post transition without a significant increase in the percentage of patients with hypoglycemia (41‐70 mg/dL) or severe hypoglycemia (40 mg/dL). Secondary endpoints included the percent of patients with severe hyperglycemia (300 mg/dL), length of stay (LOS) calculated from the day of transition, number of restarts back onto infusion insulin within 72 hours of transition, and day‐weighted glucose mean up to 12 days following transition for patients with diabetes.

Glucose values were collected and averaged over 6‐hour periods for 48 hours post transition. For patients with diabetes, POC glucose values were collected for up to 12 hospital days. Day‐weighted means were obtained by first calculating the mean glucose for each hospital day and then averaging these daily means across all hospital days.12
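Because the day‐weighted mean is a mean of daily means, a day with many fingersticks does not outweigh a day with few. A minimal Python sketch, assuming readings arrive as hypothetical `(hospital_day, glucose)` pairs and using an illustrative function name `day_weighted_mean`:

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def day_weighted_mean(readings: Iterable[Tuple[int, float]]) -> float:
    """Mean of per-day mean glucose values.

    readings: hypothetical (hospital_day, glucose_mg_dl) pairs.
    Each hospital day contributes equally, regardless of how many readings it has.
    """
    by_day: Dict[int, List[float]] = defaultdict(list)
    for day, glucose in readings:
        by_day[day].append(glucose)
    daily_means = [mean(values) for values in by_day.values()]
    return mean(daily_means)


readings = [(1, 200), (1, 220), (1, 240), (1, 260), (2, 150)]
print(day_weighted_mean(readings))          # mean of the two daily means
print(mean(g for _, g in readings))         # unweighted mean of all five readings
```

Here four readings averaging 230 mg/dL on day 1 and a single 150 mg/dL reading on day 2 give a day‐weighted mean of 190 mg/dL, whereas the unweighted mean of all five readings is 214 mg/dL.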

Analysis

Subjects were grouped by the presence or absence of diabetes; those with diabetes were recommended to receive basal SC insulin during the transition period. Within each group, subjects were further divided by adherence to the protocol. A transition was classified as not following the protocol if the patient received less than 80% of the recommended basal insulin dose, received the initial dose of insulin after the drip was discontinued, or received basal insulin when none was recommended.
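The adherence definition can be written as a single predicate. This Python sketch is hypothetical: the function name and arguments are ours, timing is expressed in hours relative to stopping the drip (negative meaning before), and the "initial dose" criterion is interpreted as the timing of the first basal insulin dose.

```python
from typing import Optional


def followed_protocol(
    recommended_basal_units: float,
    given_basal_units: float,
    basal_hours_relative_to_drip_stop: Optional[float],
) -> bool:
    """Hypothetical encoding of the study's adherence definition.

    basal_hours_relative_to_drip_stop: negative if the first basal dose was given
    before the drip stopped, positive if after, None if no basal insulin was given.
    """
    if recommended_basal_units == 0:
        # No basal recommended (e.g. stress hyperglycemia): any basal is a deviation.
        return given_basal_units == 0
    if given_basal_units < 0.8 * recommended_basal_units:
        return False  # less than 80% of the recommended basal dose
    if basal_hours_relative_to_drip_stop is None or basal_hours_relative_to_drip_stop > 0:
        return False  # first basal dose given after the drip was discontinued
    return True


print(followed_protocol(30, 30, -1.0), followed_protocol(30, 30, 3.0))
```

Under this reading, a transition in which the full recommended glargine dose is given 1 hour before the drip stops counts as adherent, while the same dose given 3 hours after does not.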

Within subgroups, age, gender, and LOS were compared using analysis of variance for continuous data and the chi‐square test for nominal data. The 24‐ and 48‐hour post‐transition mean glucose values and the 12‐day day‐weighted mean glucose were compared using analysis of variance (Stata version 10). All data are expressed as mean ± standard deviation, with statistical significance set at P < 0.05.
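For nominal outcomes such as severe hyperglycemia, the comparison reduces to a chi‐square test on a 2 × 2 table. The sketch below computes Pearson's statistic without continuity correction and its 1‐degree‐of‐freedom P value using only the Python standard library (`math.erfc`); the function name `chi_square_2x2` is illustrative. Applied to the Table 2 counts (5 of 33 vs. 19 of 39 transitions), it gives a statistic of about 9.06. The exact Stata settings the authors used are not reported, so this illustration need not reproduce the published P value to every digit.

```python
import math
from typing import Tuple


def chi_square_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-square for the table [[a, b], [c, d]] without continuity correction.

    Returns (statistic, P value) for 1 degree of freedom.
    """
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # With 1 df, chi-square = Z**2, so P(X >= stat) = erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Severe hyperglycemia (>300 mg/dL) in transitions of patients with diabetes (Table 2):
# protocol followed: 5 of 33; protocol not followed: 19 of 39.
stat, p = chi_square_2x2(5, 33 - 5, 19, 39 - 19)
print(f"chi-square = {stat:.2f}, P = {p:.4f}")
```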

Results

A total of 210 episodes of infusion insulin in ICU patients were evaluated for the study between May 2008 and September 2008 (Figure 1). Ninety‐six of these episodes were excluded, most commonly because the infusion lasted <24 hours or the patient was transitioned to comfort care. The remaining 114 infusions were eligible for the protocol. Because the protocol's insulin recommendations depend on whether the patient has diabetes, patients were further divided by diabetes status. Of these 114 transitions, the protocol was followed 66 times (58%).

Patients With Diabetes

(Table 1: Patient Demographics; Table 2: Insulin Use and Glycemic Control; Figure 2: Transition Graph).

Table 1: Patient Demographics

| | Patients With Diabetes: Protocol Followed, n = 29 Patients* | Patients With Diabetes: Protocol NOT Followed, n = 33 Patients | P Value | Patients Without Diabetes: Protocol Followed, n = 30 Patients | Patients Without Diabetes: Protocol NOT Followed, n = 9 Patients | P Value |
|---|---|---|---|---|---|---|
| Average age, years, mean ± SD | 57.7 ± 12.1 | 57.8 ± 12.3 | 0.681 | 56.5 ± 18.1 | 62.4 ± 15.5 | 0.532 |
| Male patients | 21 (72%) | 21 (63%) | 0.58 | 20 (66%) | 7 (77%) | 0.691 |
| BMI | 30.7 ± 7.2 | 28.6 ± 6.8 | 0.180 | 27 ± 5.4 | 25.2 ± 3 | 0.081 |
| History of diabetes* | 18 (64%) | 25 (86%) | 0.07 | 0 | 0 | |
| Mean Hgb A1c (%) | 6.6 ± 1.2 | 7.3 ± 1.8 | 0.136 | 5.6 ± 0.3 | 5.4 ± 0.4 | 0.095 |
| Full nutrition | 26 (79%) | 24 (61%) | 0.131 | 23 (70%) | 9 (100%) | |
| On hemodialysis | 5 (17%) | 9 (27%) | 0.380 | 3 (10%) | 0 | |
| On >60 mg prednisone or equivalent per day | 7 (24%) | 10 (30%) | 0.632 | 0 | 0 | |

Table 2: Insulin Use and Glycemic Control

| | Patients With Diabetes: Protocol Followed, n = 33 transitions | Patients With Diabetes: Protocol NOT Followed, n = 39 transitions | P Value | Patients Without Diabetes: Protocol Followed, n = 33 transitions | Patients Without Diabetes: Protocol NOT Followed, n = 9 transitions | P Value |
|---|---|---|---|---|---|---|
| Average infusion rate, units/hour | 3.96 ± 3.15 | 3.74 ± 3.64 | 0.1597 | 2.34 ± 1.5 | 4.78 ± 1.6 | <0.001 |
| Average BG on infusion insulin (mg/dL) | 122.5 ± 27.5 | 122.5 ± 31.8 | 0.844 | 115.1 ± 22.7 | 127.5 ± 27.2 | 0.006 |
| Average basal dose given (units) | 34.5 ± 14.4 | 14.4 ± 15.3 | <0.001 | 0 | 32.7 | <0.001 |
| Hours before (−) or after (+) infusion stopped that basal insulin was given | −1.13 ± 0.9 | 11.6 ± 9.3 | <0.001 | n/a | 0.33 | * |
| Average BG 6 hours post transition (mg/dL) | 143.7 ± 39.4 | 182 ± 62.5 | 0.019 | 150.2 ± 54.9 | 142.1 ± 34.1 | 0.624 |
| Average BG 0 to 24 hours post transition (mg/dL) | 167.98 ± 50.24 | 211.02 ± 81.01 | <0.001 | 150.24 ± 54.9 | 150.12 ± 32.4 | 0.600 |
| Total insulin used from 0 to 24 hours (units) | 65 ± 32.2 | 26.7 ± 25.4 | <0.001 | 3.2 ± 4.1 | 51.3 ± 30.3 | <0.001 |
| Average BG 25 to 48 hours post transition (mg/dL) | 176.1 ± 55.25 | 218.2 ± 88.54 | <0.001 | 153 ± 35.3 | 154.4 ± 46.7 | 0.711 |
| Total insulin used from 25 to 48 hours (units) | 60.5 ± 35.4 | 28.1 ± 24.4 | <0.001 | 2.8 ± 3.8 | 44.9 ± 34 | <0.001 |
| # of patients with severe hypoglycemia (<40 mg/dL) | 1 (3%) | 1 (2.6%) | * | 0 | 1 | * |
| # of patients with hypoglycemia (41–70 mg/dL) | 3 (9%) | 2 (5.1%) | * | 1 | 0 | * |
| % of BG values in goal range (80–180 mg/dL) (# in range/total #) | 60.2% (153/254) | 38.2% (104/272) | 0.004 | 80.1% (173/216) | 75.4% (49/65) | 0.83 |
| # of patients with severe hyperglycemia (>300 mg/dL) | 5 (15.2%) | 19 (48.7%) | 0.002 | 1 (3%) | 1 (11.1%) | * |
| LOS from transition (days) | 14.6 ± 11.3 | 14 ± 11.4 | 0.836 | 25.3 ± 24.4 | 13.6 ± 7.5 | 0.168 |

A total of 62 individual patients with diabetes, identified by past medical history or an A1c ≥6% (n = 14), accounted for 72 separate transitions. Of these 72 transitions, 33 (46%) adhered to the protocol, while the remaining 39 (54%) deviated from it at the treatment team's discretion. Despite similar insulin infusion rates and mean glucose values before transition, patients with diabetes following the protocol had better glycemic control at both 24 and 48 hours after transition than patients transitioned without the protocol. Day 1 mean blood glucose was 168 mg/dL vs. 211 mg/dL (P < 0.001), and Day 2 mean blood glucose was 176 mg/dL vs. 218 mg/dL (P < 0.001), in protocol vs. nonprotocol patients with diabetes, respectively (Figure 2).

A severe hypoglycemic event (≤40 mg/dL) occurred within 48 hours of transition in 1 patient with diabetes following the protocol and in 1 patient not following the protocol. Both events resulted from a mismatch between nutrition and insulin: emesis after an insulin dose in one case and held tube feeds in the other. These findings were consistent with our prior examination of hypoglycemia cases.13 Severe hyperglycemia (glucose >300 mg/dL) occurred in 5 (15%) patients following the protocol vs. 19 (49%) patients not following it (P = 0.002). Patients with diabetes following the protocol received significantly more insulin than those not following it, both in the first 24 hours (mean of 65 units vs. 27 units, P < 0.001) and from 24 to 48 hours after transition (mean of 61 units vs. 28 units, P < 0.001).

An alternative method, used at our institution and elsewhere,14, 15 calculates TDD from the patient's weight and body habitus. When we compared the TDD projected from weight with the TDD projected by the transition protocol, the weight‐based method was considerably less aggressive. For patients following the protocol, the weight‐based method projected a mean TDD of 46.3 ± 16.9 units, whereas the protocol projected a mean TDD of 65 ± 33.2 units (P = 0.001).

Patients with diabetes following protocol received basal insulin an average of 1.13 hours prior to discontinuing the insulin infusion versus 11.6 hours after for those not following protocol.

Three patients with diabetes following the protocol and 3 patients with diabetes not following the protocol were restarted on infusion insulin within 72 hours of transition.

LOS from final transition to discharge was similar in protocol and nonprotocol patients (14.6 vs. 14 days, P = 0.836).

Figure 3 shows that, when the protocol was followed correctly, glycemic control was sustained for up to 12 days post transition. Patients transitioned per protocol had a day‐weighted mean glucose of 155 mg/dL vs. 184 mg/dL in patients not following the protocol (P = 0.043). Only 1 glucose value below 40 mg/dL occurred between days 2 and 12 in the protocol group.

Patients Without Diabetes

The 39 individual patients without diabetes accounted for 42 transition events. Of these, 33 transitions (78.6%) followed the protocol, with patients placed on correctional insulin only. The remaining 9 transitions deviated from the protocol because basal insulin was prescribed; these patients nonetheless maintained comparable glycemic control without an increase in hypoglycemic events. Following transition, patients without diabetes on protocol maintained a mean glucose of 150 mg/dL in the first 24 hours and 153 mg/dL from 24 to 48 hours post transition. They required a mean daily correctional insulin dose of 3.2 units on Day 1 and 2.8 units on Day 2, despite an average drip rate of 2.3 units/hour at the time of transition (Table 2). There were no severe hypoglycemic events, and 80% of blood glucose values were within the goal range of 80 mg/dL to 180 mg/dL. Only 1 patient had a single blood glucose value >300 mg/dL. No patient was restarted on infusion insulin once transitioned.

Patients without diabetes had a longer LOS after transition off infusion insulin than patients with diabetes (22 vs. 14 days).

Discussion

This study demonstrates the utility of hospitalist‐pharmacist collaboration in creating and implementing a safe and effective transition protocol for patients on infusion insulin. The protocol distinguishes patients who should transition to a basal/nutritional insulin regimen from those who will do well with premeal correctional insulin alone. In patients with diabetes, daily mean glucose after transition was lower among those following the protocol than among those not following it, without an increase in hypoglycemic events.

We found an equal number of insulin infusion restarts within 72 hours of transition and a similar LOS in protocol vs. nonprotocol patients with diabetes. LOS was longer for patients without diabetes, which may reflect the worse outcomes reported in patients with stress hyperglycemia in other studies.1, 16

The use of a higher multiplier for patients on minimal nutrition confused many protocol users. The protocol has since been modified: the infusion rate is averaged over the prior 6 hours and multiplied by 20 for all patients. Because 20 hours is roughly 80% of a day (20/24 ≈ 83%), this provides about 80% of the projected insulin requirement for the next 24 hours based on the patient's current needs. The result is given as 50% basal and 50% nutritional insulin for patients on full nutrition, or as 100% basal insulin for patients on minimal nutrition. This change does not alter the amount of insulin the patient receives, but it is more intuitive to providers: rather than projecting the TDD to the requirement expected once full nutrition is reached, the TDD is now based on current insulin needs and is doubled when patients on minimal nutrition advance to full nutrition.
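The statement that the revised calculation leaves insulin amounts unchanged can be checked with a short Python sketch. The function names are hypothetical, the same 6‐hour average rate is assumed to be used when the TDD is later doubled, and the 100‐unit cap is omitted for simplicity. Each function returns the daily (basal, nutritional) dose at transition and the dose once full nutrition is reached.

```python
from typing import Tuple

Dose = Tuple[float, float]  # (daily basal units, daily nutritional units)


def original_protocol(avg_rate: float, full_nutrition: bool) -> Tuple[Dose, Dose]:
    """Original rule: TDD = rate x 20 (full) or x 40 (minimal), split 50/50.

    Returns (dose at transition, dose once full nutrition is reached).
    """
    tdd = avg_rate * (20 if full_nutrition else 40)
    at_full = (tdd / 2, tdd / 2)
    # Minimal nutrition: the nutritional half is withheld until full nutrition.
    at_transition = at_full if full_nutrition else (tdd / 2, 0.0)
    return at_transition, at_full


def revised_protocol(avg_rate: float, full_nutrition: bool) -> Tuple[Dose, Dose]:
    """Revised rule: TDD = rate x 20 for everyone.

    Full nutrition: 50% basal / 50% nutritional. Minimal nutrition: 100% basal,
    then the TDD is doubled and split 50/50 once full nutrition is reached.
    """
    tdd = avg_rate * 20
    if full_nutrition:
        return (tdd / 2, tdd / 2), (tdd / 2, tdd / 2)
    doubled = tdd * 2
    return (tdd, 0.0), (doubled / 2, doubled / 2)


for rate in (1.0, 2.34, 3.96):
    for full in (True, False):
        assert original_protocol(rate, full) == revised_protocol(rate, full)
print("original and revised rules give identical doses")
```

Under these assumptions both rules give the same basal dose at transition and the same basal/nutritional split once full nutrition is reached; the revised rule simply moves the doubling from the initial calculation to the point of diet advancement.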

Our study is limited by the lack of a randomized control group. Instead, we used transitions that did not follow the protocol as our control. Although not randomized, this group was comparable in age, gender mix, infusion rate, mean A1c, and projected TDD. It was also similar to our preprotocol group described in the Introduction.

Additional study limitations include the small number of nondiabetic patients not following the protocol (n = 9). Infusion rates were higher in nondiabetic patients not following the protocol than in those following it, which may have prompted the primary team to give basal insulin. It is possible that these 9 patients were not yet ready to transition from infusion insulin or had other stressors not measured in our study. Unfortunately, the small size of this group limits further analysis.

The protocol was followed in only about half of eligible transitions (66 of 114, 58%), for a variety of reasons. Patients who transitioned at night or on weekends were monitored by covering pharmacists and physicians who may not have been familiar with the protocol. Many physicians and nurses remained fearful of hypoglycemia, and the results of our study were not yet available for education. Some reported difficulty fully understanding how to use the protocol and why a higher multiplier was used for patients on minimal nutrition.

Efforts to improve adherence to the protocol are ongoing with some success, aided by the data demonstrating the safety and efficacy of the transition protocol.

Conclusion

By collaborating with ICU pharmacists, we were able to design and implement a protocol that successfully and safely transitioned patients from infusion insulin to subcutaneous insulin. Patients following the protocol had a higher percentage of glucose values within the goal range of 80 mg/dL to 180 mg/dL. In the future, we hope to automate the calculation of TDD and directly recommend a basal/bolus regimen to the clinical provider.


In Step 3, the TDD was evenly divided into basal and nutritional insulin. A total of 50% of the TDD was given as glargine (Lantus) 2 hours prior to stopping the infusion. The remaining 50% was divided into nutritional components as either Regular insulin every 6 hours for patients on tube feeds or lispro (Humalog) before meals if tolerating an oral diet. For patients on minimal nutrition, the 50% nutritional insulin dose was not initiated until the patient was tolerating full nutrition.

The protocol recommended basal insulin administration 2 hours prior to infusion discontinuation as recommended by the American Association of Clinical Endocrinologists (AACE) and ADA consensus statement on inpatient glycemic control as well as pharmacokinetics.10, 11 For these reasons, failure to receive basal insulin prior to transition was viewed as failure to follow the protocol.

Safety features of the protocol included a maximum TDD of 100 units unless the patient was on >100 units/day of insulin prior to admission. A pager was carried by rotating hospitalists or pharmacist study investigators at all hours during the protocol implementation phase to answer any questions regarding a patient's transition.

Data Collection/Monitoring

A multidisciplinary team consisting of hospitalists, ICU pharmacists, critical care physicians and nursing representatives was assembled during the study period. This team was responsible for protocol implementation, data collection, and surveillance of patient response to the protocol. Educational sessions with house staff and nurses in each unit were held prior to the beginning of the study as well as continued monthly educational efforts during the study. In addition, biweekly huddles to review ongoing patient transitions as well as more formal monthly reviews were held.

The primary objective was to improve glycemic control, defined as the mean daily glucose, during the first 48 hours post transition without a significant increase in the percentage of patients with hypoglycemia (41‐70 mg/dL) or severe hypoglycemia (40 mg/dL). Secondary endpoints included the percent of patients with severe hyperglycemia (300 mg/dL), length of stay (LOS) calculated from the day of transition, number of restarts back onto infusion insulin within 72 hours of transition, and day‐weighted glucose mean up to 12 days following transition for patients with diabetes.

Glucose values were collected and averaged over 6‐hour periods for 48 hours post transition. For patients with diabetes, POC glucose values were collected up to 12 days of hospitalization. Day‐weighted means were obtained by calculating the mean glucose for each hospital day, averaged across all hospital days.12

Analysis

Subjects were divided by the presence or absence of diabetes. Those with diabetes were recommended to receive basal SC insulin during the transition period. Within each group, subjects were further divided by adherence to the protocol. Failure to transition per protocol was defined as: not receiving at least 80% of the recommended basal insulin dose, receiving the initial dose of insulin after the drip was discontinued, or receiving basal insulin when none was recommended.

Descriptive statistics within subgroups comparing age, gender, LOS by analysis of variance for continuous data and by chi‐square for nominal data, were compared. Twenty‐four and 48‐hour post transition mean glucose values and the 12 day weighted‐mean glucose were compared using analysis of variance (Stata ver. 10). All data are expressed as mean standard deviation with a significance value established at P < 0.05.

Results

A total of 210 episodes of infusion insulin in ICU patients were evaluated for the study from May of 2008 to September 2008 (Figure 1). Ninety‐six of these episodes were excluded, most commonly due to time on infusion insulin <24 hours or transition to comfort care. The remaining 114 infusions were eligible to use the protocol. Because the protocol recommends insulin therapy based on a diagnosis of diabetes, patients were further divided into these subcategories. Of these 114 transitions, the protocol was followed 66 times (58%).

Patients With Diabetes

(Table 1: Patient Demographics; Table 2: Insulin Use and Glycemic Control; Figure 2: Transition Graph).

| Patients With Diabetes | P Value | Patients Without Diabetes | P Value | |||

|---|---|---|---|---|---|---|

| Protocol Followed, n = 29 Patients* | Protocol NOT Followed, n = 33 Patients | Protocol Followed, n = 30 Patients | Protocol NOT Followed, n = 9 Patients | |||

| ||||||

| Average age, years, mean SD | 57.7 12.1 | 57.8 12.3 | 0.681 | 56.5 18.1 | 62.4 15.5 | 0.532 |

| Male patients | 21 (72%) | 21 (63%) | 0.58 | 20 (66%) | 7 (77%) | 0.691 |

| BMI | 30.7 7.2 | 28.6 6.8 | 0.180 | 27 5.4 | 25.2 3 | 0.081 |

| History of diabetes* | 18 (64%) | 25 (86%) | 0.07 | 0 | 0 | |

| Mean Hgb A1c (%) | 6.61.2 | 7.3 1.8 | 0.136 | 5.6 0.3 | 5.4 0.4 | 0.095 |

| Full nutrition | 26 (79%) | 24 (61%) | 0.131 | 23 (70%) | 9 (100%) | |

| On hemodialysis | 5 (17%) | 9 (27%) | 0.380 | 3 (10%) | 0 | |

| On >60 mg prednisone or equivalent per day | 7 (24%) | 10 (30%) | 0.632 | 0 | 0 | |

| Patients With Diabetes | P Value | Patients Without Diabetes | P Value | |||

|---|---|---|---|---|---|---|

| Protocol Followed, n = 33 transitions | Protocol NOT followed, n = 39 transitions | Protocol Followed, n = 33 transitions | Protocol NOT Followed, n = 9 transitions | |||

| ||||||

| Average infusion rate, hours | 3.96 3.15 | 3.74 3.64 | 0.1597 | 2.34 1.5 | 4.78 1.6 | <0.001 |

| Average BG on infusion insulin (mg/dL) | 122.5 27.5 | 122.5 31.8 | 0.844 | 115.1 22.7 | 127.5 27.2 | 0.006 |

| Average basal dose (units) given | 34.5 14.4 | 14.4 15.3 | <0.001 | 0 | 32.7 | <0.001 |

| Hours before () or after (+) infusion stopped basal insulin given | 1.13 0.9 | 11.6 9.3 | <0.001 | n/a | 0.33 | * |

| Average BG 6 hours post transition (mg/dL) | 143.7 39.4 | 182 62.5 | 0.019 | 150.2 54.9 | 142.1 34.1 | 0.624 |

| Average BG 0 to 24 hours post transition (mg/dL) | 167.98 50.24 | 211.02 81.01 | <0.001 | 150.24 54.9 | 150.12 32.4 | 0.600 |

| Total insulin used from 0 to 24 hours (units) | 65 32.2 | 26.7 25.4 | <0.001 | 3.2 4.1 | 51.3 30.3 | <0.001 |

| Average BG 25 to 48 hours post transition (mg/dL) | 176.1 55.25 | 218.2 88.54 | <0.001 | 153 35.3 | 154.4 46.7 | 0.711 |

| Total insulin used from 25 to 48 hours (units) | 60.5 35.4 | 28.1 24.4 | <0.001 | 2.8 3.8 | 44.9 34 | <0.001 |

| # of patients with severe hypoglycemia (<40 mg/dL) | 1 (3%) | 1 (2.6%) | * | 0 | 1 | * |

| # of patients with hypoglycemia (4170 mg/dL) | 3 (9%) | 2 (5.1%) | * | 1 | 0 | * |

| % of BG values in goal range (80180 mg/dL) (# in range/total #) | 60.2% (153/254) | 38.2% (104/272) | 0.004 | 80.1% (173/216) | 75.4% (49/65) | 0.83 |

| # of patients with severe hyperglycemia (>300 mg/dL) | 5 (15.2%) | 19 (48.7%) | 0.002 | 1 (3%) | 1 (11.1%) | * |

| LOS from transition (days) | 14.6 11.3 | 14 11.4 | 0.836 | 25.3 24.4 | 13.6 7.5 | 0.168 |

A total of 62 individual patients accounted for 72 separate transitions in patients with diabetes based on past medical history or an A1c 6% (n = 14). Of these 72 transitions, 33 (46%) adhered to the protocol while the remaining 39 (54%) transitions varied from the protocol at the treatment team's discretion. Despite similar insulin infusion rates and mean glucose values pretransition, patients with diabetes following the protocol had better glycemic control at both 24 hours and 48 hours after transition than those patients transitioned without the protocol. Day 1 mean blood glucose was 168 mg/dL vs. 211 mg/dL (P = <0.001) and day 2 mean blood glucose was 176 mg/dL vs. 218 mg/dL (P = <0.001) in protocol vs. nonprotocol patients with diabetes respectively (Figure 2).

There was a severe hypoglycemic event (40 mg/dL) in 1 patient with diabetes following the protocol and 1 patient not following the protocol within 48 hours of transition. Both events were secondary to nutritional‐insulin mismatch with emesis after insulin in one case and tube feeds being held in the second case. These findings were consistent with our prior examination of hypoglycemia cases.13 Severe hyperglycemia (glucose 300mg/dL) occurred in 5 (15 %) patients following the protocol vs. 19 (49%) patients not following protocol (P = 0.002.) Patients with diabetes following the protocol received significantly more insulin in the first 24 hours (mean of 65 units vs. 27 units, P 0.001) and 24 to 48 hours after transition (mean of 61 units vs. 28 units, p0.001) than those not following protocol.

An alternate method used at our institution and others14, 15 to calculate TDD is based on the patient's weight and body habitus. When we compared the projected TDD based on weight with the TDD using the transition protocol, we found that the weight based method was much less aggressive. For patients following the protocol, the weight based method projected a mean TDD of 46.3 16.9 units whereas the protocol projected a mean TDD of 65 33.2 units (P = 0.001).

Patients with diabetes following protocol received basal insulin an average of 1.13 hours prior to discontinuing the insulin infusion versus 11.6 hours after for those not following protocol.

Three patients with diabetes following the protocol and 3 patients with diabetes not following the protocol were restarted on infusion insulin within 72 hours of transition.

LOS from final transition to discharge was similar between protocol vs. nonprotocol patients (14.6 vs. 14 days, P = 0.836).

Figure 3 demonstrates that when used correctly, the protocol provides an extended period of glycemic control up to 12 days post transition. Patients transitioned per protocol had a day‐weighted mean glucose of 155 mg/dL vs. 184 mg/dL (P = 0.043) in patients not following protocol. There was only 1 glucose value less than 40 mg/dL between days 2 to 12 in the protocol group.

Patients Without Diabetes

Of the 39 individual patients without diabetes there were 42 transition events, 33 transitions (78.6%) were per protocol and placed on correctional insulin only. The remaining 9 transitions failed to follow protocol in that basal insulin was prescribed, but these patients maintained comparable glycemic control without an increase in hypoglycemic events. Following transition, patients without diabetes on protocol maintained a mean glucose of 150 mg/dL in the first 24 hours and 153 mg/dL in 24 to 48 hours post transition. They required a mean daily correctional insulin dose of 3.2 units on Day 1 and 2.8 units on Day 2 despite having an average drip rate of 2.3 units/hour at the time of transition (Table 2). There were no severe hypoglycemic events and 80% of blood sugars were within the goal range of 80 mg/dL to 180 mg/dL. Only 1 patient had a single blood glucose of >300mg/dL. No patient was restarted on infusion insulin once transitioned.

Patients without diabetes had a longer LOS after transition off of infusion insulin when compared to their diabetic counterparts (22 vs. 14 days).

Discussion

This study demonstrates the utility of hospitalist‐pharmacist collaboration in the creation and implementation of a safe and effective transition protocol for patients on infusion insulin. The protocol identifies patients appropriate for transition to a basal/nutritional insulin regimen versus those who will do well with premeal correctional insulin alone. Daily mean glucose was improved post transition for diabetic patients following the protocol compared to those not following the protocol without an increase in hypoglycemic events.

We found an equal number of insulin infusion restarts within 72 hours of transition and a similar LOS in protocol vs. nonprotocol patients with diabetes. The LOS was increased for patients without diabetes. This may be due to worse outcomes noted in patients with stress hyperglycemia in other studies.1, 16

The use of the higher multiplier for patients on minimal nutrition led to confusion among many protocol users. The protocol has since been modified to start by averaging the infusion rate over the prior 6 hours and then multiplying by 20 for all patients. This essentially calculates 80% of projected insulin requirements for the next 24 hours based on the patient's current needs. This calculation is then given as 50% basal and 50% nutritional for those on full nutrition vs. 100% basal for those on minimal nutrition. This protocol change has no impact on the amount of insulin received by the patient, but is more intuitive to providers. Instead of calculating the TDD as the projected requirement when full nutrition is obtained, the TDD is now calculated based on current insulin needs, and then doubled when patients who are receiving minimal nutrition advance to full nutrition.

Our study is limited by the lack of a true randomized control group. In lieu of this, we used our patients who did not follow protocol as our control. While not truly randomized, this group is comparable based on their age, gender mix, infusion rate, mean A1c, and projected TDD. This group was also similar to our preprotocol group mentioned in the Introduction.

Additional study limitations include the small number of nondiabetic patients not following the protocol (n = 9). We noted higher infusion rates in nondiabetics not following protocol versus those following protocol, which may have driven the primary team to give basal insulin. It is possible that these 9 patients were not yet ready to transition from infusion insulin or had other stressors not measured in our study. Unfortunately their small population size limits more extensive analysis.

The protocol was followed only 50% of the time, for a variety of reasons. Patients who transitioned at night or on weekends were monitored by covering pharmacists and physicians who may not have been familiar with the protocol. Many physicians and nurses remain fearful of hypoglycemia, and the outcomes of our study were not yet available for education. Some reported difficulty understanding how to use the protocol and why a higher multiplier was used for patients on minimal nutrition.

Efforts to improve adherence to the protocol are ongoing with some success, aided by the data demonstrating the safety and efficacy of the transition protocol.

Conclusion

By collaborating with ICU pharmacists, we were able to design and implement a protocol that successfully and safely transitioned patients from infusion insulin to subcutaneous insulin. Patients following the protocol had a higher percentage of glucose values within the goal range of 80 mg/dL to 180 mg/dL. In the future, we hope to automate the calculation of TDD and directly recommend a basal/bolus regimen to the clinical provider.
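The primary efficacy measure is a simple proportion. The sketch below computes the percentage of glucose readings within the 80 to 180 mg/dL goal range. Treating both bounds as inclusive and pooling all readings (rather than averaging per patient or per patient-day, as glucometric methods sometimes do) are assumptions, and `percent_in_goal` is a hypothetical name, not the study's analysis code.

```python
from collections.abc import Sequence

GOAL_LOW_MG_DL = 80    # lower bound of the goal range (inclusive, assumed)
GOAL_HIGH_MG_DL = 180  # upper bound of the goal range (inclusive, assumed)


def percent_in_goal(readings_mg_dl: Sequence[float],
                    low: float = GOAL_LOW_MG_DL,
                    high: float = GOAL_HIGH_MG_DL) -> float:
    """Hypothetical helper: percentage of pooled glucose readings in [low, high]."""
    if not readings_mg_dl:
        raise ValueError("no glucose readings supplied")
    in_range = sum(1 for g in readings_mg_dl if low <= g <= high)
    return 100.0 * in_range / len(readings_mg_dl)


print(percent_in_goal([75, 110, 150, 180, 210]))  # 60.0
```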

- Hyperglycemia: an independent marker of in‐hospital mortality in patients with undiagnosed diabetes. J Clin Endocrinol Metab. 2002;87:978–982.

- Evaluation of glycemic control following discontinuation of an intensive insulin protocol. J Hosp Med. 2009;4:28–34.

- Inpatient management of hyperglycemia: the Northwestern experience. Endocr Pract. 2006;12:491–505.

- Intravenous insulin infusion therapy: indications, methods, and transition to subcutaneous insulin therapy. Endocr Pract. 2004;10 Suppl 2:71–80.

- Conversion of intravenous insulin infusions to subcutaneously administered insulin glargine in patients with hyperglycemia. Endocr Pract. 2006;12:641–650.

- Effects of outcome on in‐hospital transition from intravenous insulin infusion to subcutaneous therapy. Am J Cardiol. 2006;98:557–564.

- International Expert Committee report on the role of the A1C assay in the diagnosis of diabetes. Diabetes Care. 2009;32:1327–1334.

- Use of GHb (HbA1c) in screening for undiagnosed diabetes in the U.S. population. Diabetes Care. 2000;23:187–191.

- Utility of HbA(1c) levels for diabetes case finding in hospitalized patients with hyperglycemia. Diabetes Care. 2003;26:1064–1068.

- American Association of Clinical Endocrinologists and American Diabetes Association consensus statement on inpatient glycemic control. Endocr Pract. 2009;15:353–369.

- Pharmacokinetics and pharmacodynamics of subcutaneous injection of long‐acting human insulin analog glargine, NPH insulin, and ultralente human insulin and continuous subcutaneous infusion of insulin lispro. Diabetes. 2000;49:2142–2148.

- “Glucometrics”‐‐assessing the quality of inpatient glucose management. Diabetes Technol Ther. 2006;8:560–569.

- Iatrogenic inpatient hypoglycemia: risk factors, treatment, and prevention: analysis of current practice at an academic medical center with implications for improvement efforts. Diabetes Spectr. 2008;21:241–247.

- Management of diabetes and hyperglycemia in hospitals. Diabetes Care. 2004;27:553–591.

- Insulin management of diabetic patients on general medical and surgical floors. Endocr Pract. 2006;12 Suppl 3:86–90.

- Inadequate blood glucose control is associated with in‐hospital mortality and morbidity in diabetic and nondiabetic patients undergoing cardiac surgery. Circulation. 2008;118:113–123.

Copyright © 2010 Society of Hospital Medicine

Prevention of Hospital‐Acquired VTE

Pulmonary embolism (PE) and deep vein thrombosis (DVT), collectively referred to as venous thromboembolism (VTE), represent a major public health problem, affecting hundreds of thousands of Americans each year.1 The best estimates are that at least 100,000 deaths are attributable to VTE each year in the United States alone.1 VTE is primarily a problem of hospitalized and recently hospitalized patients.2 Although a recent meta‐analysis did not demonstrate a mortality benefit of prophylaxis in the medical population,3 PE is frequently estimated to be the most common preventable cause of hospital death.4–6

Pharmacologic methods to prevent VTE are safe, effective, cost‐effective, and advocated by authoritative guidelines.7 Even though the majority of medical and surgical inpatients have multiple risk factors for VTE, large prospective studies continue to demonstrate that these preventive methods are significantly underutilized, often with only 30% to 50% of eligible patients receiving prophylaxis.8–12

The reasons for this underutilization include lack of physician familiarity or agreement with guidelines, underestimation of VTE risk, concern over risk of bleeding, and the perception that the guidelines are resource‐intensive or difficult to implement in a practical fashion.13 While many VTE risk‐assessment models are available in the literature,14–18 a lack of prospectively validated models and issues regarding ease of use have further hampered widespread integration of VTE risk assessments into order sets and inpatient practice.

We sought to optimize prevention of hospital‐acquired (HA) VTE in our 350‐bed tertiary‐care academic center using a VTE prevention protocol and a multifaceted approach that could be replicated across a wide variety of medical centers.

Patients and Methods

Study Design

We developed, implemented, and refined a VTE prevention protocol and examined the impact of our efforts. We longitudinally monitored adult inpatients for the prevalence of adequate VTE prophylaxis and for the incidence of HA VTE throughout a 36‐month period spanning calendar years 2005 through 2007, and performed a retrospective analysis for any potential adverse effects of increased VTE prophylaxis. The project adhered to the HIPAA requirements for privacy involving health‐related data from human research participants. The study was approved by the Institutional Review Board of the University of California, San Diego, which waived the requirement for individual patient informed consent.

We included all hospitalized adult patients (medical and surgical services) at our medical center in our observations and interventions, including patients of all ethnic groups, geriatric patients, prisoners, and the socially and economically disadvantaged in our population. Exclusion criteria were age under 14 years, and hospitalization on Psychiatry or Obstetrics/Gynecology services.

Development of a VTE Risk‐assessment Model and VTE Prevention Protocol

A core multidisciplinary team of hospitalists, pulmonary critical care VTE experts, pharmacists, nurses, and information specialists was formed. After gaining administrative support for standardization, we worked with medical staff leaders to gain consensus on a VTE prevention protocol for all medical and surgical areas from mid‐2005 through mid‐2006. The VTE prevention protocol included the elements of VTE risk stratification, definitions of adequate VTE prevention measures linked to the level of VTE risk, and definitions of contraindications to pharmacologic prophylactic measures. We piloted risk‐assessment model (RAM) drafts for ease of use and clarity, using rapid-cycle feedback from pharmacy residents, house staff, and attending physicians. Models often cited in the literature15, 18 that include point‐based scoring of VTE risk factors (with prophylaxis choices hinging on the additive sum of scores) were rejected based on the pilot experience.

We adopted a simple model with 3 levels of VTE risk that could be completed by the physician in seconds, and then integrated this RAM into standardized data collection instruments and eventually (April 2006) into a computerized provider order entry (CPOE) order set (Siemens Invision v26). Each level of VTE risk was firmly linked to a menu of acceptable prophylaxis options (Table 1). Simple text cues defined each risk level, and more exhaustive listings of risk factors were placed in accessible reference tables.

| Low | Moderate | High |
|---|---|---|
| Ambulatory patient without VTE risk factors; observation patient with expected LOS 2 days; same day surgery or minor surgery | All other patients (not in low‐risk or high‐risk category); most medical/surgical patients; respiratory insufficiency, heart failure, acute infectious, or inflammatory disease | Lower extremity arthroplasty; hip, pelvic, or severe lower extremity fractures; acute SCI with paresis; multiple major trauma; abdominal or pelvic surgery for cancer |
| Early ambulation | UFH 5000 units SC q 8 hours; OR LMWH q day; OR UFH 5000 units SC q 12 hours (if weight < 50 kg or age > 75 years); AND suggest adding IPC | LMWH (UFH if ESRD); OR fondaparinux 2.5 mg SC daily; OR warfarin, INR 2‐3; AND IPC (unless not feasible) |

Intermittent pneumatic compression devices were endorsed as an adjunct in all patients in the highest risk level, and as the primary method in patients with contraindications to pharmacologic prophylaxis. Aspirin was deemed an inappropriate choice for VTE prophylaxis. Subcutaneous unfractionated or low‐molecular‐weight heparin were endorsed as the primary method of prophylaxis for the majority of patients without contraindications.
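Because each risk level links directly to a short menu, the model reduces to a lookup. The minimal Python sketch below assumes the option strings are abbreviated from Table 1 and that a declared contraindication at moderate or high risk switches the menu to IPC alone; how a contraindication interacts with low risk is an assumption. `VteRisk` and `prophylaxis_menu` are hypothetical names, not part of the CPOE system.

```python
from enum import Enum


class VteRisk(Enum):
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"


# Pharmacologic options abbreviated from Table 1. Aspirin is deliberately
# absent because the protocol deemed it inappropriate for VTE prophylaxis.
PHARMACOLOGIC_OPTIONS = {
    VteRisk.MODERATE: [
        "UFH 5000 units SC q 8 hours",
        "LMWH q day",
        "UFH 5000 units SC q 12 hours (if weight < 50 kg or age > 75 years)",
    ],
    VteRisk.HIGH: [
        "LMWH (UFH if ESRD)",
        "fondaparinux 2.5 mg SC daily",
        "warfarin, INR 2-3",
    ],
}


def prophylaxis_menu(risk: VteRisk, pharmacologic_contraindicated: bool) -> list[str]:
    """Hypothetical lookup from risk level to acceptable prophylaxis options."""
    if risk is VteRisk.LOW:
        return ["early ambulation"]
    if pharmacologic_contraindicated:
        # IPC is the primary method when pharmacologic prophylaxis is contraindicated
        return ["IPC"]
    options = list(PHARMACOLOGIC_OPTIONS[risk])
    if risk is VteRisk.HIGH:
        options.append("IPC as adjunct (unless not feasible)")
    else:
        options.append("consider adding IPC")  # Table 1: "suggest adding IPC"
    return options


print(prophylaxis_menu(VteRisk.HIGH, pharmacologic_contraindicated=False))
```

Keeping the menus as data rather than branching logic mirrors the design goal of a model that can be completed in seconds and edited as feedback arrives.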

Integration of the VTE Protocol into Order Sets

An essential strategy for the success of the VTE protocol was integrating guidance for the physician into the flow of patient care via standardized order sets. The CPOE VTE prevention order set was modular by design, as opposed to stand-alone. After conferring with appropriate stakeholders, we removed preexisting, nonstandardized prompts for VTE prophylaxis from commonly used order sets and inserted the standardized module in their place. This integrated the standardized VTE prevention module into all admission and transfer order sets, essentially ensuring that all patients admitted or transferred within the medical center would be exposed to the protocol. Physicians using any of the admission and transfer order sets were prompted to select each patient's VTE risk level and to declare the presence or absence of contraindications to pharmacologic prophylaxis. Only the VTE prevention options most appropriate for the patient's VTE and anticoagulation risk profile were presented as the default choices for VTE prophylaxis. Explicit designation of a VTE risk level and a prophylaxis choice was enforced by a hard-stop mechanism, so use of these orders was mandatory, not optional. Proper use (such as correct classification of VTE risk by the ordering physician) was actively monitored through auditing, and the order sets were modified occasionally on the basis of subjective and objective feedback.
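A hard stop can be modeled as a validation step that blocks signing until every required field is answered. The Python sketch below is illustrative only: the field names, the `HardStopError` type, and the abbreviated default menus are assumptions, not the Invision order-set logic. Because appropriate options were presented as defaults rather than as the only permissible choices, the check requires a prophylaxis selection but does not restrict it to the default list; misuse is left to auditing.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical default menus keyed by (risk level, contraindication declared).
DEFAULT_OPTIONS = {
    ("low", False): ["early ambulation"],
    ("low", True): ["early ambulation"],
    ("moderate", False): ["UFH SC", "LMWH"],
    ("moderate", True): ["IPC"],
    ("high", False): ["LMWH", "fondaparinux", "warfarin"],
    ("high", True): ["IPC"],
}


class HardStopError(ValueError):
    """Raised when the VTE module is incomplete; the order set cannot be signed."""


@dataclass
class VteModuleEntry:
    risk_level: Optional[str] = None                  # "low" | "moderate" | "high"
    contraindication_declared: Optional[bool] = None  # None means not yet answered
    prophylaxis_choice: Optional[str] = None


def default_options(entry: VteModuleEntry) -> list[str]:
    """Options pre-populated once risk and contraindications are answered."""
    return DEFAULT_OPTIONS.get((entry.risk_level, entry.contraindication_declared), [])


def validate_vte_module(entry: VteModuleEntry) -> None:
    """Hard stop: every required field must be answered before signing."""
    if entry.risk_level not in {"low", "moderate", "high"}:
        raise HardStopError("select a VTE risk level")
    if entry.contraindication_declared is None:
        raise HardStopError("declare presence or absence of contraindications")
    if not entry.prophylaxis_choice:
        raise HardStopError("select a VTE prophylaxis option")


entry = VteModuleEntry(risk_level="moderate", contraindication_declared=False)
entry.prophylaxis_choice = default_options(entry)[0]
validate_vte_module(entry)  # passes silently; an incomplete entry would raise
```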

Assessment of VTE Risk Assessment Interobserver Agreement

Data from 150 randomly selected patients from the audit pool (late 2005 through mid‐2006) were abstracted in detail by the nurse practitioner. Five independent reviewers assessed each patient's VTE risk level and determined whether the patient was on adequate VTE prophylaxis per protocol on the day of the audit. Interobserver agreement for these parameters was calculated using kappa scores.
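The kappa variant is not named here. With five reviewers rating each patient, Fleiss' kappa is one common choice (averaging pairwise Cohen's kappa is another); the sketch below assumes Fleiss' kappa and that every patient was rated by all five reviewers. The same function applies to the three-level risk category and to the yes/no adequacy judgment; `fleiss_kappa` is a hypothetical name.

```python
from collections import Counter


def fleiss_kappa(ratings: list[list[str]]) -> float:
    """Fleiss' kappa; ratings[i] holds the labels all n reviewers gave subject i."""
    if not ratings:
        raise ValueError("no subjects")
    n = len(ratings[0])
    if n < 2 or any(len(r) != n for r in ratings):
        raise ValueError("each subject needs the same number (>= 2) of ratings")
    num_subjects = len(ratings)
    category_totals: Counter = Counter()
    p_bar = 0.0
    for subject in ratings:
        counts = Counter(subject)
        category_totals.update(counts)
        # Proportion of agreeing reviewer pairs for this subject
        p_bar += (sum(c * c for c in counts.values()) - n) / (n * (n - 1))
    p_bar /= num_subjects
    # Expected agreement from the overall category proportions
    p_e = sum((t / (num_subjects * n)) ** 2 for t in category_totals.values())
    if p_e == 1.0:
        raise ValueError("kappa is undefined when every rating is the same category")
    return (p_bar - p_e) / (1 - p_e)


print(fleiss_kappa([["high"] * 5, ["low"] * 5]))  # 1.0
```

Perfect agreement gives 1.0, agreement at chance level gives 0, and systematic disagreement gives negative values.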

Prospective Monitoring of Adequate VTE Prophylaxis