Trends in Use of Postdischarge Intravenous Antibiotic Therapy for Children

In recent years, mounting evidence has emerged questioning the practice of using prolonged intravenous antibiotic therapy to treat certain serious bacterial infections in children, including complicated appendicitis, osteomyelitis, and complicated pneumonia. Historically, treatment of these conditions was often completed intravenously after hospital discharge using peripherally inserted central catheters (PICCs). PICCs are complicated by line infections, clots, mechanical problems, and general discomfort; in one study, such complications led to PICC removal in more than 20% of children.1 Oral antibiotics avoid these complications and are less burdensome to families.2 Recently, a series of multicenter studies showed no difference in outcomes between oral and postdischarge intravenous antibiotic therapy (PD-IV) for complicated appendicitis, osteomyelitis, and complicated pneumonia.3-5

Despite a growing body of evidence suggesting that oral therapy ought to be the default treatment strategy rather than PD-IV, the extent to which practices have changed is unknown. In this study, we measured national trends in PD-IV use and variation by hospital for complicated appendicitis, osteomyelitis, and complicated pneumonia.

METHODS

We performed a retrospective cohort study of children discharged from hospitals that contributed data to the Pediatric Health Information System (PHIS) database from January 2000 through December 2018. PHIS is an administrative database of children’s hospitals managed by the Children’s Hospital Association (Lenexa, Kansas) and contains deidentified patient-level demographic data, discharge diagnosis and procedure codes, and detailed billing information, including medical supply charges.

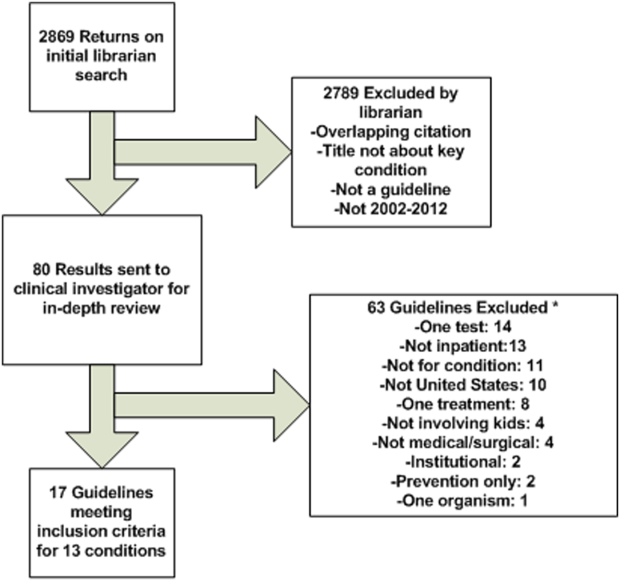

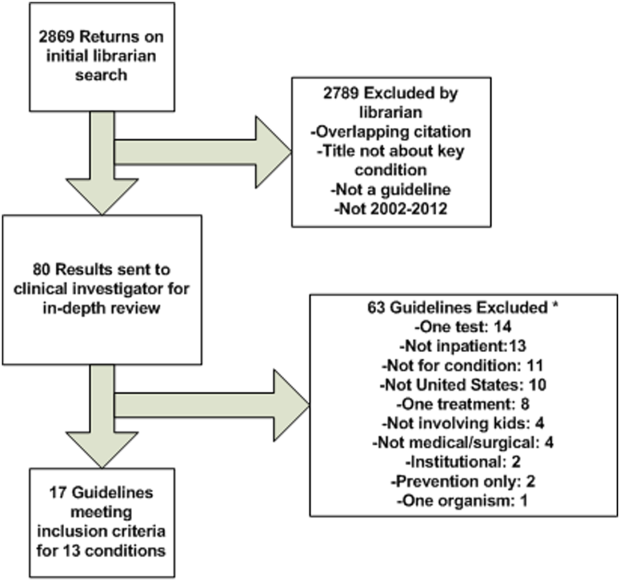

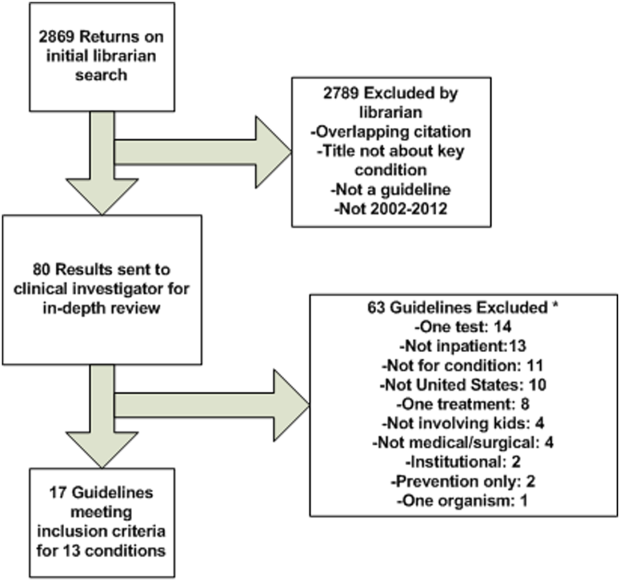

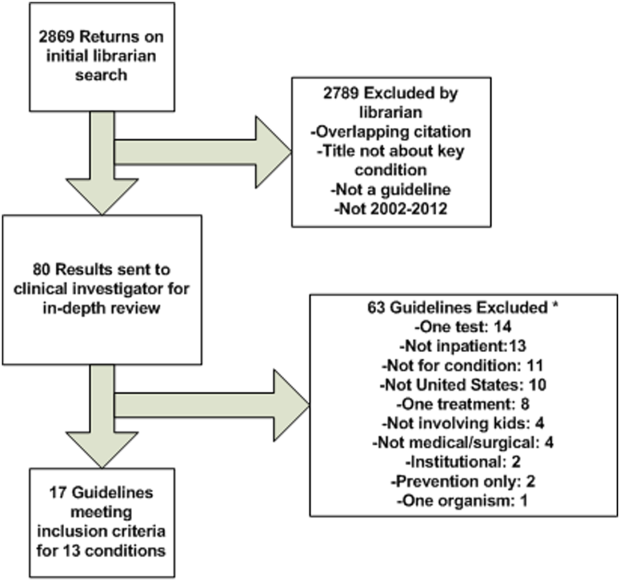

The cohorts were defined using International Classification of Diseases, 9th and 10th Revisions (ICD-9 and ICD-10) discharge diagnosis and procedure codes. Patients admitted through September 2015 were identified using ICD-9 codes and patients admitted from October 2015 through December 2018 were identified using ICD-10 codes. The Centers for Medicare & Medicaid Services crosswalk was used to align ICD-9 and ICD-10 codes.6 Inclusion and exclusion criteria identifying cohorts of children hospitalized for complicated appendicitis, osteomyelitis, or complicated pneumonia were based on prior studies using the PHIS database.3-5 These studies augmented the PHIS administrative dataset with local chart review to identify patients from 2009 through 2012 with the following inclusion and exclusion criteria: Patients with complicated appendicitis were defined by a diagnosis code for acute appendicitis and a procedure code for appendectomy, with postoperative length of stay lasting between 3 and 7 days. Patients with osteomyelitis had a diagnosis code of acute or unspecified osteomyelitis with a hospital length of stay between 2 and 14 days. Patients with complicated pneumonia were defined by a diagnosis code for both pneumonia and pleural effusion with one of these as the primary diagnosis. Patients were excluded if they were older than 18 years or if they were younger than 2 months for osteomyelitis and complicated pneumonia or younger than 3 years for appendicitis. For all three conditions, children with a complex chronic condition7 were excluded. Only the index encounter meeting inclusion and exclusion criteria for each patient was included. PD-IV therapy was defined using procedure codes and hospital charges during the index hospitalization. This definition for PD-IV therapy has been validated among children with complicated pneumonia, demonstrating positive and negative predictive values for PICC exposure of 85% and 99%, respectively.8
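The age, length-of-stay, and complex chronic condition rules above can be written as a small filter. The following Python sketch is illustrative only and is not the study's code: the `Encounter` fields and the `meets_criteria` function are hypothetical names, diagnosis and procedure code matching is assumed to have been done upstream, and the length-of-stay bounds are assumed to be inclusive.

```python
from dataclasses import dataclass


@dataclass
class Encounter:
    # Hypothetical record layout; condition is assigned upstream from ICD codes.
    condition: str             # "appendicitis", "osteomyelitis", or "pneumonia"
    age_years: float
    length_of_stay_days: int   # postoperative LOS for appendicitis, hospital LOS otherwise
    has_complex_chronic_condition: bool


def meets_criteria(e: Encounter) -> bool:
    """Apply the exclusion rules described in the text (bounds assumed inclusive)."""
    # Exclusions shared by all three conditions
    if e.has_complex_chronic_condition or e.age_years > 18:
        return False
    if e.condition == "appendicitis":
        # Younger than 3 years excluded; postoperative LOS between 3 and 7 days
        return e.age_years >= 3 and 3 <= e.length_of_stay_days <= 7
    if e.condition == "osteomyelitis":
        # Younger than 2 months excluded; hospital LOS between 2 and 14 days
        return e.age_years >= 2 / 12 and 2 <= e.length_of_stay_days <= 14
    if e.condition == "pneumonia":
        # Younger than 2 months excluded; no LOS restriction is stated
        return e.age_years >= 2 / 12
    return False
```

Selecting only the index encounter per patient would be a separate step applied after this filter.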

Trends in the percentage of patients receiving PD-IV were adjusted for age, race, insurance type, intensive care unit days, and hospital-level case mix index using Poisson regression. Calculated risk ratios represent the change in PD-IV across the entire 19-year study period for each condition (as opposed to an annual rate of change). An inflection point for each condition was identified using piecewise linear regression, in which the line slope has one value up to a point in time and a second value after that point. The transition point was determined by maximizing model fit.
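As an illustration of the inflection-point idea, the sketch below fits a continuous two-segment line for every candidate transition year and keeps the one with the smallest sum of squared errors. It is a minimal Python example under stated assumptions, not the Stata procedure used in the study: the function name `fit_piecewise` is hypothetical, least-squares error is assumed as the measure of model fit, and the yearly percentages are synthetic.

```python
import numpy as np


def fit_piecewise(years, pct):
    """Return (breakpoint_year, sse) for the best continuous two-segment linear fit."""
    years = np.asarray(years, dtype=float)
    pct = np.asarray(pct, dtype=float)
    x = years - years[0]  # center on the first year for numerical stability
    best_bp, best_sse = None, np.inf
    # Try each interior year as the transition point
    for bp in x[1:-1]:
        # Columns: intercept, initial slope, change in slope after the breakpoint
        design = np.column_stack([np.ones_like(x), x, np.maximum(x - bp, 0.0)])
        coefs, *_ = np.linalg.lstsq(design, pct, rcond=None)
        sse = float(np.sum((pct - design @ coefs) ** 2))
        if sse < best_sse:
            best_bp, best_sse = float(bp + years[0]), sse
    return best_bp, best_sse


# Synthetic (not study) data: flat at 60% through 2010, then falling 5 points per year
demo_years = np.arange(2000, 2019)
demo_pct = 60.0 - 5.0 * np.maximum(demo_years - 2010, 0)
breakpoint_year, sse = fit_piecewise(demo_years, demo_pct)
print(breakpoint_year, sse)
```

Because the synthetic series has its only change in slope at 2010, the search should select that year; real data would be noisier and the selected year less clear-cut.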

Some hospitals were added to the database throughout the time period and therefore did not have data for all years of the study. To account for the possibility of a group of high– or low–PD-IV use hospitals entering the cohort and biasing the overall trend, we performed a sensitivity analysis restricted to hospitals continuously contributing data to PHIS every year between 2004 (when a majority of hospitals joined PHIS) and 2018. Significance testing for individual hospital trends was conducted among continuously contributing hospitals, with each hospital tested in the above Poisson model independently.
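The restriction used in the sensitivity analysis amounts to keeping hospitals with data in every year of the window. A minimal Python sketch follows; the function name and the `years_by_hospital` mapping are hypothetical and shown only to make the rule concrete.

```python
def continuously_contributing(years_by_hospital, start=2004, end=2018):
    """Return hospital IDs that have data for every year from start through end."""
    required = set(range(start, end + 1))
    return sorted(
        hospital
        for hospital, years in years_by_hospital.items()
        if required <= set(years)  # every required year is present
    )
```

A hospital that joined in 2006, or that is missing any single year in the window, would be dropped from the restricted cohort.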

For the most recent year of 2018, we reported the distribution of adjusted percentages of PD-IV at the individual hospital level. Only hospitals with at least five patients for a given condition were included in the percent PD-IV calculations for 2018. To examine the extent to which an individual hospital might be a low– or high–PD-IV user across conditions, we divided hospitals into quartiles based on PD-IV use for each condition in 2017-2018 and calculated the percent of hospitals in the lowest- and highest-use quartiles for all three conditions. All statistical analyses were performed using Stata 15 (StataCorp).
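The cross-condition quartile comparison can be sketched as follows. This Python example is a hedged illustration rather than the study's Stata code: the function names and input layout are hypothetical, quartile cut points are assumed to be the 25th, 50th, and 75th percentiles with ties assigned to the lower quartile, and only hospitals with a value for every condition are compared.

```python
import numpy as np


def quartile_labels(values):
    """Label each value 1 (lowest quartile) through 4 (highest quartile)."""
    values = np.asarray(values, dtype=float)
    cuts = np.percentile(values, [25, 50, 75])
    # side="left" places a value equal to a cut point in the lower quartile
    return np.searchsorted(cuts, values, side="left") + 1


def consistent_hospitals(pdiv_by_condition):
    """pdiv_by_condition maps condition -> {hospital_id: percent PD-IV}.

    Returns (lowest, highest): hospitals in the lowest or highest quartile
    for every condition.
    """
    # Compare only hospitals that have a value for all conditions
    hospitals = sorted(set.intersection(*(set(d) for d in pdiv_by_condition.values())))
    labels = {}
    for condition, pct in pdiv_by_condition.items():
        quartiles = quartile_labels([pct[h] for h in hospitals])
        labels[condition] = dict(zip(hospitals, quartiles))
    lowest = [h for h in hospitals if all(labels[c][h] == 1 for c in labels)]
    highest = [h for h in hospitals if all(labels[c][h] == 4 for c in labels)]
    return lowest, highest
```

Dividing the length of each returned list by the number of compared hospitals gives the percent of hospitals that are consistently low or high users.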

RESULTS

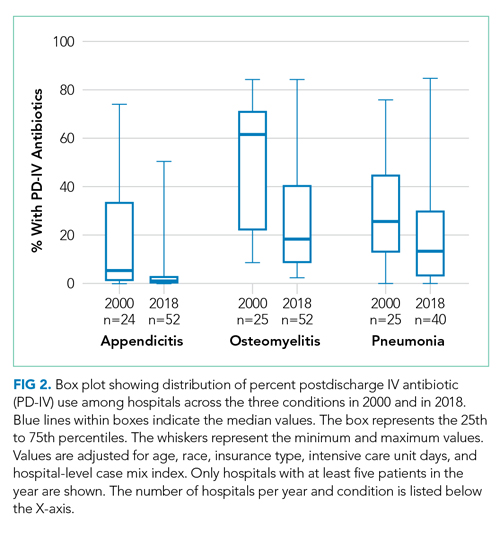

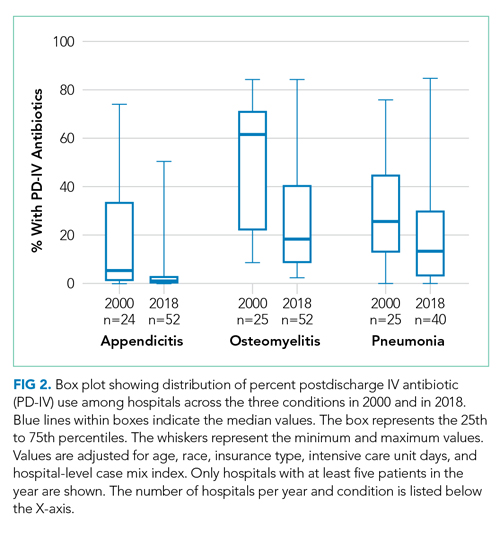

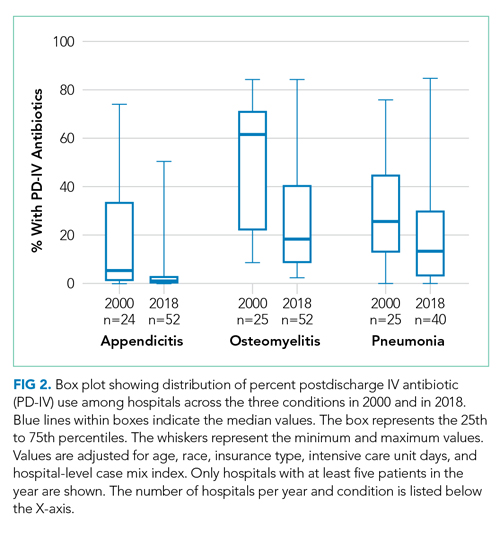

Among 52 hospitals over a 19-year study period, there were 60,575 hospitalizations for complicated appendicitis, 24,753 hospitalizations for osteomyelitis, and 13,700 hospitalizations for complicated pneumonia. From 2000 to 2018, PD-IV decreased from 13% to 2% (risk ratio [RR], 0.15; 95% CI, 0.14-0.16) for complicated appendicitis, from 61% to 22% (RR, 0.41; 95% CI, 0.39-0.43) for osteomyelitis, and from 29% to 19% (RR, 0.63; 95% CI, 0.58-0.69) for complicated pneumonia (Figure 1). The inflection points occurred in 2009 for complicated appendicitis, 2009 for complicated pneumonia, and 2010 for osteomyelitis. The sensitivity analysis included 31 hospitals that contributed data to PHIS for every year from 2004 through 2018 and revealed similar findings for all three conditions: Complicated appendicitis had an RR of 0.15 (95% CI, 0.14-0.17), osteomyelitis had an RR of 0.34 (95% CI, 0.32-0.36), and complicated pneumonia had an RR of 0.55 (95% CI, 0.49-0.61). Most individual hospitals decreased PD-IV use (complicated appendicitis: 21 decreased, 8 no change, 2 increased; osteomyelitis: 25 decreased, 6 no change; complicated pneumonia: 14 decreased, 16 no change, 1 increased). While overall decreases in PD-IV were observed for all three conditions, considerable variation remained in 2018 for use of PD-IV (Figure 2), particularly for osteomyelitis (median, 18%; interquartile range [IQR], 9%-40%) and complicated pneumonia (median, 13%; IQR, 3%-30%). In 2017-2018, one of 52 hospitals was in the lowest PD-IV–use quartile for all three conditions, and three hospitals were in the highest-use quartile for all three conditions.

DISCUSSION

Over a 19-year period, we observed a national decline in use of PD-IV for three serious and common bacterial infections. The decline in PD-IV is notable given that it has occurred largely in the absence of nationally coordinated guidelines or improvement efforts. Despite the overall declines, substantial variation in the use of PD-IV for these conditions persists across children’s hospitals.

The observed decrease in PD-IV use is a natural example of deimplementation, the abandonment of medical practices found to be harmful or ineffective.9 What is most compelling about the deimplementation of PD-IV for these infectious conditions is the seemingly organic motivation that propelled it. Studies of physician practice patterns for interventions that have undergone evidence reversals demonstrate that physicians might readily implement new interventions with an early evidence base but be less willing to deimplement them when more definitive evidence later questions their efficacy.10 Therefore, concerted improvement efforts backed by national guidelines are often needed to reduce the use of a widely accepted medical practice. For example, as evidence questioning the efficacy of steroid use in bronchiolitis mounted,11 bronchiolitis guidelines recommended against steroid use12 and a national quality improvement effort led to reductions in exposure to steroids among patients hospitalized with bronchiolitis.13 Complicated intra-abdominal infection guidelines acknowledge oral antibiotic therapy as an option,14 but no such national guidelines or improvement projects exist for osteomyelitis or complicated pneumonia PD-IV.

What is it about PD-IV for complicated appendicitis, osteomyelitis, and complicated pneumonia that fostered the observed organic deimplementation? Our findings that few hospitals were in the top or bottom quartile of PD-IV across all three conditions suggest that the impetus to decrease PD-IV was not likely the product of a broad hospital-wide practice shift. Most deimplementation frameworks suggest that successful deimplementation must be supported by high-quality evidence that the intervention is not only ineffective, but also harmful.15 In this case, the inflection point for osteomyelitis occurred in 2009, the same year that the first large multicenter study suggesting efficacy and decreased complications of early oral therapy for osteomyelitis was published.16 A direct link between a publication and inflection points for complicated pneumonia and appendicitis is less clear. It is possible that growth of the field of pediatric hospital medicine,17 with a stated emphasis on healthcare value,18 played a role. Greater understanding of the drivers and barriers to deimplementation in this and similar contexts will be important.

Our study has some important limitations. While inclusion and exclusion criteria were consistent over the study period, practice patterns (eg, length of stay in uncomplicated patients) change and could alter the case mix of patients over time. Additionally, the PHIS database largely comprises children’s hospitals, and the trends we observed in PD-IV may not generalize to community settings.

The degree of deimplementation of PD-IV observed across children’s hospitals is impressive, but opportunity for further improvement likely remains. We found that marked hospital-level variation in use of PD-IV still exists, with some hospitals almost never using PD-IV and others using it for most patients. While the ideal amount of PD-IV is probably not zero, a portion of the observed variation likely represents overuse of PD-IV. To reduce costs and complications associated with antibiotic therapy, national guidelines and a targeted national improvement collaborative may be necessary to achieve further reductions in PD-IV.

1. Jumani K, Advani S, Reich NG, Gosey L, Milstone AM. Risk factors for peripherally inserted central venous catheter complications in children. JAMA Pediatr. 2013;167(5):429-435. https://doi.org/10.1001/jamapediatrics.2013.775

2. Krah NM, Bardsley T, Nelson R, et al. Economic burden of home antimicrobial therapy: OPAT versus oral therapy. Hosp Pediatr. 2019;9(4):234-240. https://doi.org/10.1542/hpeds.2018-0193

3. Keren R, Shah SS, Srivastava R, et al. Comparative effectiveness of intravenous vs oral antibiotics for postdischarge treatment of acute osteomyelitis in children. JAMA Pediatr. 2015;169(2):120-128. https://doi.org/10.1001/jamapediatrics.2014.2822

4. Rangel SJ, Anderson BR, Srivastava R, et al. Intravenous versus oral antibiotics for the prevention of treatment failure in children with complicated appendicitis: has the abandonment of peripherally inserted catheters been justified? Ann Surg. 2017;266(2):361-368. https://doi.org/10.1097/SLA.0000000000001923

5. Shah SS, Srivastava R, Wu S, et al. Intravenous versus oral antibiotics for postdischarge treatment of complicated pneumonia. Pediatrics. 2016;138(6):e20161692. https://doi.org/10.1542/peds.2016-1692

6. Roth J. CMS’ ICD-9-CM to and from ICD-10-CM and ICD-10-PCS Crosswalk or General Equivalence Mappings. National Bureau of Economic Research. May 11, 2016. Accessed June 6, 2018. http://www.nber.org/data/icd9-icd-10-cm-and-pcs-crosswalk-general-equivalence-mapping.html

7. Feudtner C, Hays RM, Haynes G, Geyer JR, Neff JM, Koepsell TD. Deaths attributed to pediatric complex chronic conditions: national trends and implications for supportive care services. Pediatrics. 2001;107(6):E99. https://doi.org/10.1542/peds.107.6.e99

8. Coon ER, Srivastava R, Stoddard G, Wilkes J, Pavia AT, Shah SS. Shortened IV antibiotic course for uncomplicated, late-onset group B streptococcal bacteremia. Pediatrics. 2018;142(5):e20180345. https://doi.org/10.1542/peds.2018-0345

9. Niven DJ, Mrklas KJ, Holodinsky JK, et al. Towards understanding the de-adoption of low-value clinical practices: a scoping review. BMC Med. 2015;13:255. https://doi.org/10.1186/s12916-015-0488-z

10. Niven DJ, Rubenfeld GD, Kramer AA, Stelfox HT. Effect of published scientific evidence on glycemic control in adult intensive care units. JAMA Intern Med. 2015;175(5):801-809. https://doi.org/10.1001/jamainternmed.2015.0157

11. Fernandes RM, Bialy LM, Vandermeer B, et al. Glucocorticoids for acute viral bronchiolitis in infants and young children. Cochrane Database Syst Rev. 2013(6):CD004878. https://doi.org/10.1002/14651858.CD004878.pub4

12. Ralston SL, Lieberthal AS, Meissner HC, et al. Clinical practice guideline: the diagnosis, management, and prevention of bronchiolitis. Pediatrics. 2014;134(5):e1474-e1502. https://doi.org/10.1542/peds.2014-2742

13. Ralston SL, Garber MD, Rice-Conboy E, et al. A multicenter collaborative to reduce unnecessary care in inpatient bronchiolitis. Pediatrics. 2016;137(1):10. https://doi.org/10.1542/peds.2015-0851

14. Solomkin JS, Mazuski JE, Bradley JS, et al. Diagnosis and management of complicated intra-abdominal infection in adults and children: guidelines by the Surgical Infection Society and the Infectious Diseases Society of America. Clin Infect Dis. 2010;50(2):133-164. https://doi.org/10.1086/649554

15. Norton WE, Chambers DA, Kramer BS. Conceptualizing de-implementation in cancer care delivery. J Clin Oncol. 2019;37(2):93-96. https://doi.org/10.1200/JCO.18.00589

16. Zaoutis T, Localio AR, Leckerman K, Saddlemire S, Bertoch D, Keren R. Prolonged intravenous therapy versus early transition to oral antimicrobial therapy for acute osteomyelitis in children. Pediatrics. 2009;123(2):636-642. https://doi.org/10.1542/peds.2008-0596

17. Fisher ES. Pediatric hospital medicine: historical perspectives, inspired future. Curr Probl Pediatr Adolesc Health Care. 2012;42(5):107-112. https://doi.org/10.1016/j.cppeds.2012.01.001

18. Landrigan CP, Conway PH, Edwards S, Srivastava R. Pediatric hospitalists: a systematic review of the literature. Pediatrics. 2006;117(5):1736-1744. https://doi.org/10.1542/peds.2005-0609

In recent years, mounting evidence has emerged questioning the practice of using prolonged intravenous antibiotic therapy to treat certain serious bacterial infections in children, including complicated appendicitis, osteomyelitis, and complicated pneumonia. Historically, treatment of these conditions was often completed intravenously after hospital discharge using peripherally inserted central catheters (PICCs). Line infections, clots, mechanical problems, and general discomfort complicate PICCs, which led to their removal in more than 20% of children in one study.1 Oral antibiotics avoid these complications and are less burdensome to families.2 Recently, a series of multicenter studies showed no difference in outcomes between oral and postdischarge intravenous antibiotic therapy (PD-IV) for complicated appendicitis, osteomyelitis, and complicated pneumonia.3-5

Despite a growing body of evidence suggesting that oral therapy ought to be the default treatment strategy rather than PD-IV, the extent to which practices have changed is unknown. In this study, we measured national trends in PD-IV use and variation by hospital for complicated appendicitis, osteomyelitis, and complicated pneumonia.

METHODS

We performed a retrospective cohort study of children discharged from hospitals that contributed data to the Pediatric Health Information System (PHIS) database from January 2000 through December 2018. PHIS is an administrative database of children’s hospitals managed by the Children’s Hospital Association (Lenexa, Kansas) and contains deidentified patient-level demographic data, discharge diagnosis and procedure codes, and detailed billing information, including medical supply charges.

The cohorts were defined using International Classification of Diseases, 9th and 10th Revisions (ICD-9 and ICD-10) discharge diagnosis and procedure codes. Patients admitted through September 2015 were identified using ICD-9 codes and patients admitted from October 2015 through December 2018 were identified using ICD-10 codes. The Centers for Medicaid & Medicare Services crosswalk was used to align ICD-9 and ICD-10 codes.6 Inclusion and exclusion criteria identifying cohorts of children hospitalized for complicated appendicitis, osteomyelitis, or complicated pneumonia were based on prior studies using the PHIS database.3-5 These studies augmented the PHIS administrative dataset with local chart review to identify patients from 2009-2012 with the following inclusion and exclusion criteria: Patients with complicated appendicitis were defined by a diagnosis code for acute appendicitis and a procedure code for appendectomy, with postoperative length of stay lasting between 3 and 7 days. Patients with osteomyelitis had a diagnosis code of acute or unspecified osteomyelitis with a hospital length of stay between 2 and 14 days. Patients with complicated pneumonia were defined by a diagnosis code for both pneumonia and pleural effusion with one of these as the primary diagnosis. Patients were excluded if they were older than 18 years or if they were younger than 2 months for osteomyelitis and complicated pneumonia or younger than 3 years for appendicitis. For all three conditions, children with a complex chronic condition7 were excluded. Only the index encounter meeting inclusion and exclusion criteria for each patient was included. PD-IV therapy was defined using procedure codes and hospital charges during the index hospitalization. This definition for PD-IV therapy has been validated among children with complicated pneumonia, demonstrating positive and negative predictive values for PICC exposure of 85% and 99%, respectively.8

Trends in the percentage of patients receiving PD-IV were adjusted for age, race, insurance type, intensive care unit days, and hospital-level case mix index with use of Poisson regression. Calculated risk ratios represent the change in PD-IV across the entire 19-year study period for each condition (as opposed to an annual rate of change). An inflection point for each condition was identified using piecewise linear regression in which the line slope has one value up to a point in time and a second value after that point. The transition point is determined by maximizing model fit.

Some hospitals were added to the database throughout the time period and therefore did not have data for all years of the study. To account for the possibility of a group of high– or low–PD-IV use hospitals entering the cohort and biasing the overall trend, we performed a sensitivity analysis restricted to hospitals continuously contributing data to PHIS every year between 2004 (when a majority of hospitals joined PHIS) and 2018. Significance testing for individual hospital trends was conducted among continuously contributing hospitals, with each hospital tested in the above Poisson model independently.

For the most recent year of 2018, we reported the distribution of adjusted percentages of PD-IV at the individual hospital level. Only hospitals with at least five patients for a given condition are included in the percent PD-IV calculations for 2018. To examine the extent to which an individual hospital might be a low– or high–PD-IV user across conditions, we divided hospitals into quartiles based on PD-IV use for each condition in 2017-2018 and calculated the percent of hospitals in the lowest- and highest-use quartiles for all three conditions. All statistics were performed using Stata 15 (StataCorp).

RESULTS

Among 52 hospitals over a 19-year study period, there were 60,575 hospitalizations for complicated appendicitis, 24,753 hospitalizations for osteomyelitis, and 13,700 hospitalizations for complicated pneumonia. From 2000 to 2018, PD-IV decreased from 13% to 2% (RR, 0.15; 95% CI, 0.14-0.16) for complicated appendicitis, from 61% to 22% (RR, 0.41; 95% CI, 0.39-0.43) for osteomyelitis, and from 29% to 19% (RR, 0.63; 95% CI, 0.58-0.69) for complicated pneumonia (Figure 1). The inflection points occurred in 2009 for complicated appendicitis, 2009 for complicated pneumonia, and 2010 for osteomyelitis. The sensitivity analysis included 31 hospitals that contributed data to PHIS for every year between 2004-2018 and revealed similar findings for all three conditions: Complicated appendicitis had an RR of 0.15 (95% CI, 0.14-0.17), osteomyelitis had an RR of 0.34 (95% CI, 0.32-0.36), and complicated pneumonia had an RR of 0.55 (95% CI, 0.49-0.61). Most individual hospitals decreased PD-IV use (complicated appendicitis: 21 decreased, 8 no change, 2 increased; osteomyelitis: 25 decreased, 6 no change; complicated pneumonia: 14 decreased, 16 no change, 1 increased). While overall decreases in PD-IV were observed for all three conditions, considerable variation remained in 2018 for use of PD-IV (Figure 2), particularly for osteomyelitis (median, 18%; interquartile range [IQR] 9%-40%) and complicated pneumonia (median, 13%; IQR, 3%-30%). In 2017-2018, 1 out of 52 hospitals was in the lowest PD-IV–use quartile for all three conditions, and three hospitals were in the highest-use quartile for all three conditions.

DISCUSSION

Over a 19-year period, we observed a national decline in use of PD-IV for three serious and common bacterial infections. The decline in PD-IV is notable given that it has occurred largely in the absence of nationally coordinated guidelines or improvement efforts. Despite the overall declines, substantial variation in the use of PD-IV for these conditions persists across children’s hospitals.

The observed decrease in PD-IV use is a natural example of deimplementation, the abandonment of medical practices found to be harmful or ineffective.9 What is most compelling about the deimplementation of PD-IV for these infectious conditions is the seemingly organic motivation that propelled it. Studies of physician practice patterns for interventions that have undergone evidence reversals demonstrate that physicians might readily implement new interventions with an early evidence base but be less willing to deimplement them when more definitive evidence later questions their efficacy.10 Therefore, concerted improvement efforts backed by national guidelines are often needed to reduce the use of a widely accepted medical practice. For example, as evidence questioning the efficacy of steroid use in bronchiolitis mounted,11 bronchiolitis guidelines recommended against steroid use12 and a national quality improvement effort led to reductions in exposure to steroids among patients hospitalized with bronchiolitis.13 Complicated intra-abdominal infection guidelines acknowledge oral antibiotic therapy as an option,14 but no such national guidelines or improvement projects exist for osteomyelitis or complicated pneumonia PD-IV.

What is it about PD-IV for complicated appendicitis, osteomyelitis, and complicated pneumonia that fostered the observed organic deimplementation? Our findings that few hospitals were in the top or bottom quartile of PD-IV across all three conditions suggest that the impetus to decrease PD-IV was not likely the product of a broad hospital-wide practice shift. Most deimplementation frameworks suggest that successful deimplementation must be supported by high-quality evidence that the intervention is not only ineffective, but also harmful.15 In this case, the inflection point for osteomyelitis occurred in 2009, the same year that the first large multicenter study suggesting efficacy and decreased complications of early oral therapy for osteomyelitis was published.16 A direct link between a publication and inflection points for complicated pneumonia and appendicitis is less clear. It is possible that growth of the field of pediatric hospital medicine,17 with a stated emphasis on healthcare value,18 played a role. Greater understanding of the drivers and barriers to deimplementation in this and similar contexts will be important.

Our study has some important limitations. While inclusion and exclusion criteria were consistent over the study period, practice patterns (ie, length of stay in uncomplicated patients) change and could alter the case-mix of patients over time. Additionally, the PHIS database largely comprises children’s hospitals, and the trends we observed in PD-IV may not generalize to community settings.

The degree of deimplementation of PD-IV observed across children’s hospitals is impressive, but opportunity for further improvement likely remains. We found that marked hospital-level variation in use of PD-IV still exists, with some hospitals almost never using PD-IV and others using it for most patients. While the ideal amount of PD-IV is probably not zero, a portion of the observed variation likely represents overuse of PD-IV. To reduce costs and complications associated with antibiotic therapy, national guidelines and a targeted national improvement collaborative may be necessary to achieve further reductions in PD-IV.

In recent years, mounting evidence has emerged questioning the practice of using prolonged intravenous antibiotic therapy to treat certain serious bacterial infections in children, including complicated appendicitis, osteomyelitis, and complicated pneumonia. Historically, treatment of these conditions was often completed intravenously after hospital discharge using peripherally inserted central catheters (PICCs). Line infections, clots, mechanical problems, and general discomfort complicate PICCs, which led to their removal in more than 20% of children in one study.1 Oral antibiotics avoid these complications and are less burdensome to families.2 Recently, a series of multicenter studies showed no difference in outcomes between oral and postdischarge intravenous antibiotic therapy (PD-IV) for complicated appendicitis, osteomyelitis, and complicated pneumonia.3-5

Despite a growing body of evidence suggesting that oral therapy ought to be the default treatment strategy rather than PD-IV, the extent to which practices have changed is unknown. In this study, we measured national trends in PD-IV use and variation by hospital for complicated appendicitis, osteomyelitis, and complicated pneumonia.

METHODS

We performed a retrospective cohort study of children discharged from hospitals that contributed data to the Pediatric Health Information System (PHIS) database from January 2000 through December 2018. PHIS is an administrative database of children’s hospitals managed by the Children’s Hospital Association (Lenexa, Kansas) and contains deidentified patient-level demographic data, discharge diagnosis and procedure codes, and detailed billing information, including medical supply charges.

The cohorts were defined using International Classification of Diseases, 9th and 10th Revisions (ICD-9 and ICD-10) discharge diagnosis and procedure codes. Patients admitted through September 2015 were identified using ICD-9 codes and patients admitted from October 2015 through December 2018 were identified using ICD-10 codes. The Centers for Medicaid & Medicare Services crosswalk was used to align ICD-9 and ICD-10 codes.6 Inclusion and exclusion criteria identifying cohorts of children hospitalized for complicated appendicitis, osteomyelitis, or complicated pneumonia were based on prior studies using the PHIS database.3-5 These studies augmented the PHIS administrative dataset with local chart review to identify patients from 2009-2012 with the following inclusion and exclusion criteria: Patients with complicated appendicitis were defined by a diagnosis code for acute appendicitis and a procedure code for appendectomy, with postoperative length of stay lasting between 3 and 7 days. Patients with osteomyelitis had a diagnosis code of acute or unspecified osteomyelitis with a hospital length of stay between 2 and 14 days. Patients with complicated pneumonia were defined by a diagnosis code for both pneumonia and pleural effusion with one of these as the primary diagnosis. Patients were excluded if they were older than 18 years or if they were younger than 2 months for osteomyelitis and complicated pneumonia or younger than 3 years for appendicitis. For all three conditions, children with a complex chronic condition7 were excluded. Only the index encounter meeting inclusion and exclusion criteria for each patient was included. PD-IV therapy was defined using procedure codes and hospital charges during the index hospitalization. This definition for PD-IV therapy has been validated among children with complicated pneumonia, demonstrating positive and negative predictive values for PICC exposure of 85% and 99%, respectively.8

Trends in the percentage of patients receiving PD-IV were adjusted for age, race, insurance type, intensive care unit days, and hospital-level case mix index with use of Poisson regression. Calculated risk ratios represent the change in PD-IV across the entire 19-year study period for each condition (as opposed to an annual rate of change). An inflection point for each condition was identified using piecewise linear regression in which the line slope has one value up to a point in time and a second value after that point. The transition point is determined by maximizing model fit.

Some hospitals were added to the database throughout the time period and therefore did not have data for all years of the study. To account for the possibility of a group of high– or low–PD-IV use hospitals entering the cohort and biasing the overall trend, we performed a sensitivity analysis restricted to hospitals continuously contributing data to PHIS every year between 2004 (when a majority of hospitals joined PHIS) and 2018. Significance testing for individual hospital trends was conducted among continuously contributing hospitals, with each hospital tested in the above Poisson model independently.

For the most recent year of 2018, we reported the distribution of adjusted percentages of PD-IV at the individual hospital level. Only hospitals with at least five patients for a given condition are included in the percent PD-IV calculations for 2018. To examine the extent to which an individual hospital might be a low– or high–PD-IV user across conditions, we divided hospitals into quartiles based on PD-IV use for each condition in 2017-2018 and calculated the percent of hospitals in the lowest- and highest-use quartiles for all three conditions. All statistics were performed using Stata 15 (StataCorp).

RESULTS

Among 52 hospitals over a 19-year study period, there were 60,575 hospitalizations for complicated appendicitis, 24,753 hospitalizations for osteomyelitis, and 13,700 hospitalizations for complicated pneumonia. From 2000 to 2018, PD-IV decreased from 13% to 2% (RR, 0.15; 95% CI, 0.14-0.16) for complicated appendicitis, from 61% to 22% (RR, 0.41; 95% CI, 0.39-0.43) for osteomyelitis, and from 29% to 19% (RR, 0.63; 95% CI, 0.58-0.69) for complicated pneumonia (Figure 1). The inflection points occurred in 2009 for complicated appendicitis, 2009 for complicated pneumonia, and 2010 for osteomyelitis. The sensitivity analysis included 31 hospitals that contributed data to PHIS for every year between 2004-2018 and revealed similar findings for all three conditions: Complicated appendicitis had an RR of 0.15 (95% CI, 0.14-0.17), osteomyelitis had an RR of 0.34 (95% CI, 0.32-0.36), and complicated pneumonia had an RR of 0.55 (95% CI, 0.49-0.61). Most individual hospitals decreased PD-IV use (complicated appendicitis: 21 decreased, 8 no change, 2 increased; osteomyelitis: 25 decreased, 6 no change; complicated pneumonia: 14 decreased, 16 no change, 1 increased). While overall decreases in PD-IV were observed for all three conditions, considerable variation remained in 2018 for use of PD-IV (Figure 2), particularly for osteomyelitis (median, 18%; interquartile range [IQR] 9%-40%) and complicated pneumonia (median, 13%; IQR, 3%-30%). In 2017-2018, 1 out of 52 hospitals was in the lowest PD-IV–use quartile for all three conditions, and three hospitals were in the highest-use quartile for all three conditions.

DISCUSSION

Over a 19-year period, we observed a national decline in use of PD-IV for three serious and common bacterial infections. The decline in PD-IV is notable given that it has occurred largely in the absence of nationally coordinated guidelines or improvement efforts. Despite the overall declines, substantial variation in the use of PD-IV for these conditions persists across children’s hospitals.

The observed decrease in PD-IV use is a natural example of deimplementation, the abandonment of medical practices found to be harmful or ineffective.9 What is most compelling about the deimplementation of PD-IV for these infectious conditions is the seemingly organic motivation that propelled it. Studies of physician practice patterns for interventions that have undergone evidence reversals demonstrate that physicians might readily implement new interventions with an early evidence base but be less willing to deimplement them when more definitive evidence later questions their efficacy.10 Therefore, concerted improvement efforts backed by national guidelines are often needed to reduce the use of a widely accepted medical practice. For example, as evidence questioning the efficacy of steroid use in bronchiolitis mounted,11 bronchiolitis guidelines recommended against steroid use12 and a national quality improvement effort led to reductions in exposure to steroids among patients hospitalized with bronchiolitis.13 Complicated intra-abdominal infection guidelines acknowledge oral antibiotic therapy as an option,14 but no such national guidelines or improvement projects exist for osteomyelitis or complicated pneumonia PD-IV.

What is it about PD-IV for complicated appendicitis, osteomyelitis, and complicated pneumonia that fostered the observed organic deimplementation? Our finding that few hospitals were in the top or bottom quartile of PD-IV use across all three conditions suggests that the impetus to decrease PD-IV was unlikely to be the product of a broad hospital-wide practice shift. Most deimplementation frameworks suggest that successful deimplementation must be supported by high-quality evidence that the intervention is not only ineffective, but also harmful.15 In this case, the inflection point for osteomyelitis occurred in 2009, the same year that the first large multicenter study suggesting efficacy and decreased complications of early oral therapy for osteomyelitis was published.16 A direct link between a publication and the inflection points for complicated pneumonia and appendicitis is less clear. It is possible that growth of the field of pediatric hospital medicine,17 with a stated emphasis on healthcare value,18 played a role. Greater understanding of the drivers of and barriers to deimplementation in this and similar contexts will be important.

Our study has some important limitations. While inclusion and exclusion criteria were consistent over the study period, practice patterns (eg, length of stay in uncomplicated patients) change over time and could have altered the case mix of patients. Additionally, the PHIS database largely comprises children’s hospitals, and the trends we observed in PD-IV may not generalize to community settings.

The degree of deimplementation of PD-IV observed across children’s hospitals is impressive, but opportunity for further improvement likely remains. We found that marked hospital-level variation in use of PD-IV still exists, with some hospitals almost never using PD-IV and others using it for most patients. While the ideal amount of PD-IV is probably not zero, a portion of the observed variation likely represents overuse of PD-IV. To reduce costs and complications associated with antibiotic therapy, national guidelines and a targeted national improvement collaborative may be necessary to achieve further reductions in PD-IV.

1. Jumani K, Advani S, Reich NG, Gosey L, Milstone AM. Risk factors for peripherally inserted central venous catheter complications in children. JAMA Pediatr. 2013;167(5):429-435. https://doi.org/10.1001/jamapediatrics.2013.775

2. Krah NM, Bardsley T, Nelson R, et al. Economic burden of home antimicrobial therapy: OPAT versus oral therapy. Hosp Pediatr. 2019;9(4):234-240. https://doi.org/10.1542/hpeds.2018-0193

3. Keren R, Shah SS, Srivastava R, et al. Comparative effectiveness of intravenous vs oral antibiotics for postdischarge treatment of acute osteomyelitis in children. JAMA Pediatr. 2015;169(2):120-128. https://doi.org/10.1001/jamapediatrics.2014.2822

4. Rangel SJ, Anderson BR, Srivastava R, et al. Intravenous versus oral antibiotics for the prevention of treatment failure in children with complicated appendicitis: has the abandonment of peripherally inserted catheters been justified? Ann Surg. 2017;266(2):361-368. https://doi.org/10.1097/SLA.0000000000001923

5. Shah SS, Srivastava R, Wu S, et al. Intravenous versus oral antibiotics for postdischarge treatment of complicated pneumonia. Pediatrics. 2016;138(6):e20161692. https://doi.org/10.1542/peds.2016-1692

6. Roth J. CMS’ ICD-9-CM to and from ICD-10-CM and ICD-10-PCS Crosswalk or General Equivalence Mappings. National Bureau of Economic Research. May 11, 2016. Accessed June 6, 2018. http://www.nber.org/data/icd9-icd-10-cm-and-pcs-crosswalk-general-equivalence-mapping.html

7. Feudtner C, Hays RM, Haynes G, Geyer JR, Neff JM, Koepsell TD. Deaths attributed to pediatric complex chronic conditions: national trends and implications for supportive care services. Pediatrics. 2001;107(6):E99. https://doi.org/10.1542/peds.107.6.e99

8. Coon ER, Srivastava R, Stoddard G, Wilkes J, Pavia AT, Shah SS. Shortened IV antibiotic course for uncomplicated, late-onset group B streptococcal bacteremia. Pediatrics. 2018;142(5):e20180345. https://doi.org/10.1542/peds.2018-0345

9. Niven DJ, Mrklas KJ, Holodinsky JK, et al. Towards understanding the de-adoption of low-value clinical practices: a scoping review. BMC Med. 2015;13:255. https://doi.org/10.1186/s12916-015-0488-z

10. Niven DJ, Rubenfeld GD, Kramer AA, Stelfox HT. Effect of published scientific evidence on glycemic control in adult intensive care units. JAMA Intern Med. 2015;175(5):801-809. https://doi.org/10.1001/jamainternmed.2015.0157

11. Fernandes RM, Bialy LM, Vandermeer B, et al. Glucocorticoids for acute viral bronchiolitis in infants and young children. Cochrane Database Syst Rev. 2013(6):CD004878. https://doi.org/10.1002/14651858.CD004878.pub4

12. Ralston SL, Lieberthal AS, Meissner HC, et al. Clinical practice guideline: the diagnosis, management, and prevention of bronchiolitis. Pediatrics. 2014;134(5):e1474-e1502. https://doi.org/10.1542/peds.2014-2742

13. Ralston SL, Garber MD, Rice-Conboy E, et al. A multicenter collaborative to reduce unnecessary care in inpatient bronchiolitis. Pediatrics. 2016;137(1):10. https://doi.org/10.1542/peds.2015-0851

14. Solomkin JS, Mazuski JE, Bradley JS, et al. Diagnosis and management of complicated intra-abdominal infection in adults and children: guidelines by the Surgical Infection Society and the Infectious Diseases Society of America. Clin Infect Dis. 2010;50(2):133-164. https://doi.org/10.1086/649554

15. Norton WE, Chambers DA, Kramer BS. Conceptualizing de-implementation in cancer care delivery. J Clin Oncol. 2019;37(2):93-96. https://doi.org/10.1200/JCO.18.00589

16. Zaoutis T, Localio AR, Leckerman K, Saddlemire S, Bertoch D, Keren R. Prolonged intravenous therapy versus early transition to oral antimicrobial therapy for acute osteomyelitis in children. Pediatrics. 2009;123(2):636-642. https://doi.org/10.1542/peds.2008-0596

17. Fisher ES. Pediatric hospital medicine: historical perspectives, inspired future. Curr Probl Pediatr Adolesc Health Care. 2012;42(5):107-112. https://doi.org/10.1016/j.cppeds.2012.01.001

18. Landrigan CP, Conway PH, Edwards S, Srivastava R. Pediatric hospitalists: a systematic review of the literature. Pediatrics. 2006;117(5):1736-1744. https://doi.org/10.1542/peds.2005-0609

© 2020 Society of Hospital Medicine

Safety Huddle Intervention for Reducing Physiologic Monitor Alarms: A Hybrid Effectiveness-Implementation Cluster Randomized Trial

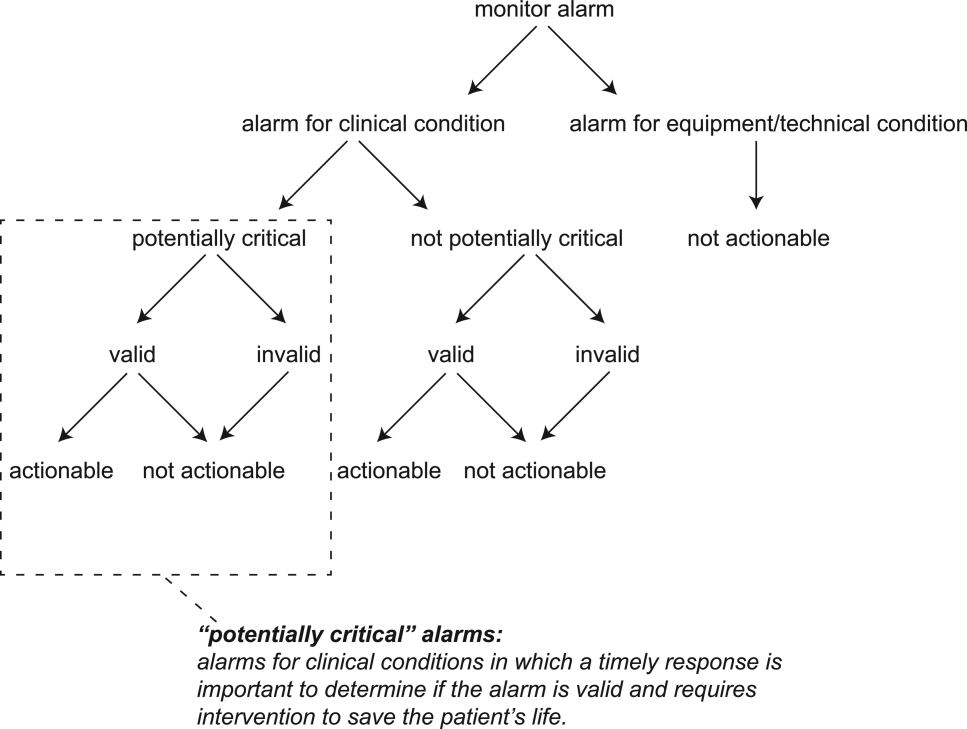

Physiologic monitor alarms occur frequently in the hospital environment, with average rates on pediatric wards between 42 and 155 alarms per monitored patient-day.1 However, average rates do not depict the full story, because only 9%–25% of patients are responsible for most alarms on inpatient wards.1,2 In addition, only 0.5%–1% of alarms on pediatric wards warrant action.3,4 Downstream consequences of high alarm rates include interruptions5,6 and alarm fatigue.3,4,7

Alarm customization, the process of reviewing individual patients’ alarm data and using that data to implement patient-specific alarm reduction interventions, has emerged as a potential approach to unit-wide alarm management.8-11 Potential customizations include broadening alarm thresholds, instituting delays between the time the alarm condition is met and the time the alarm sounds, and changing electrodes.8-11 However, the workflows within which to identify the patients who will benefit from customization, make decisions about how to customize, and implement customizations have not been delineated.

Safety huddles are brief structured discussions among physicians, nurses, and other staff aiming to identify and mitigate threats to patient safety.11-13 In this study, we aimed to evaluate the influence of a safety huddle-based alarm intervention strategy targeting high alarm pediatric ward patients on (a) unit-level alarm rates and (b) patient-level alarm rates, as well as to (c) evaluate implementation outcomes. We hypothesized that patients discussed in huddles would have greater reductions in alarm rates in the 24 hours following their huddle than patients who were not discussed. Given that most alarms are generated by a small fraction of patients,1,2 we hypothesized that patient-level reductions would translate to unit-level reductions.

METHODS

Human Subject Protection

The Institutional Review Board of Children’s Hospital of Philadelphia approved this study with a waiver of informed consent. We registered the study at ClinicalTrials.gov (identifier NCT02458872). The original protocol is available as an Online Supplement.

Design and Framework

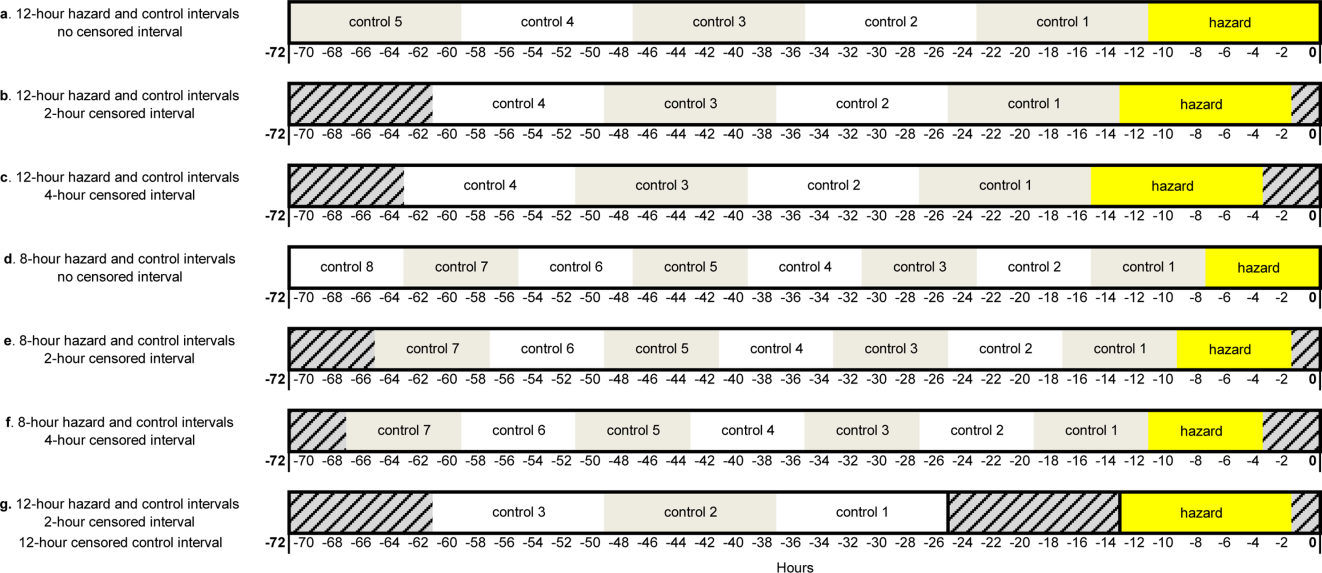

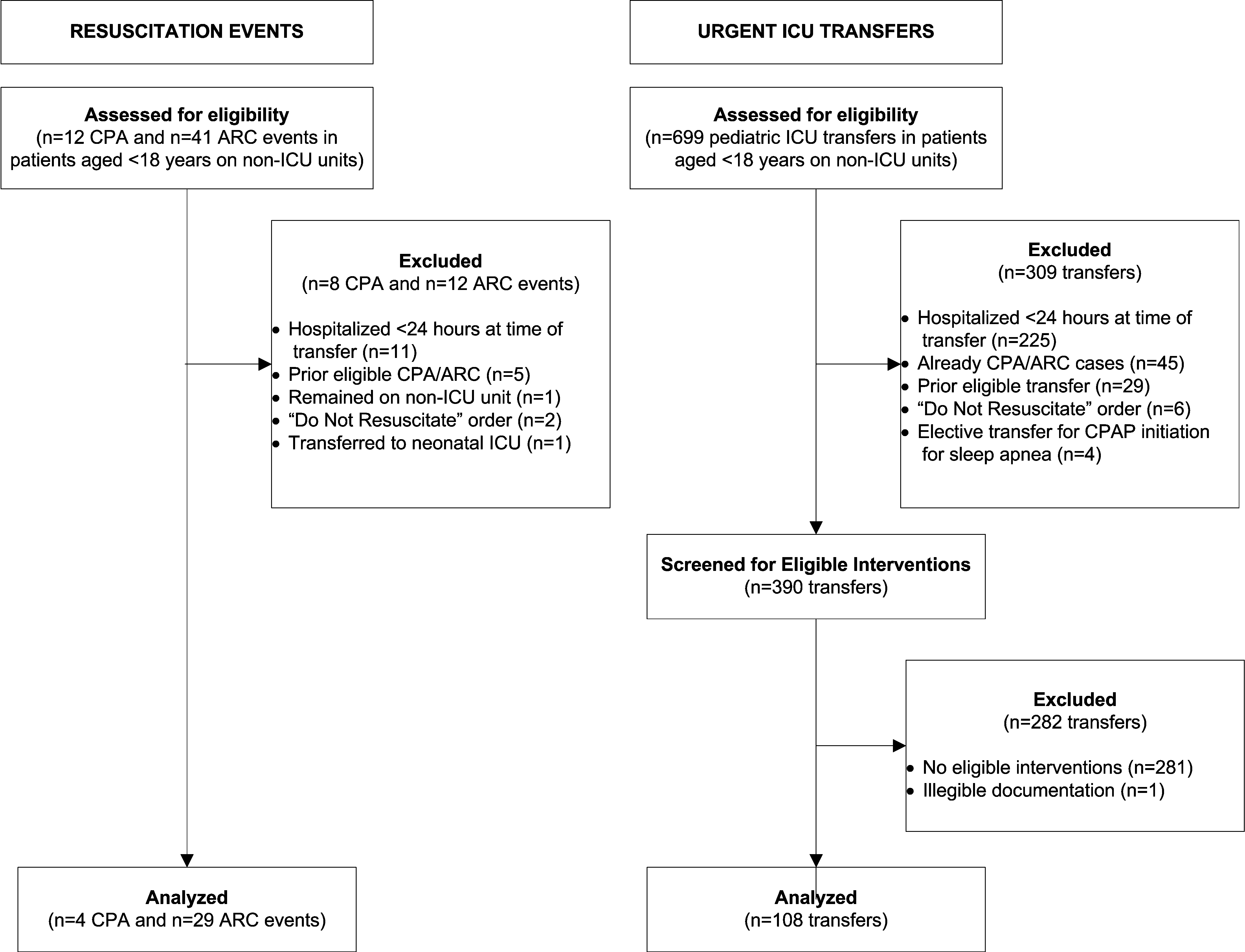

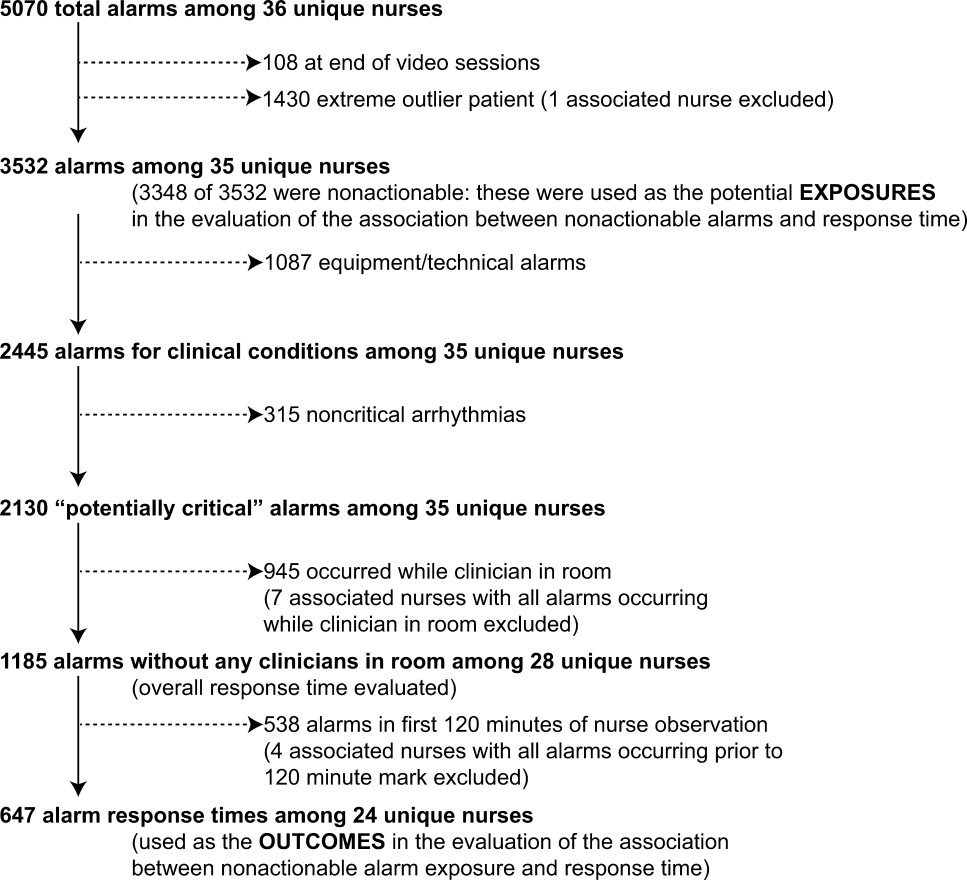

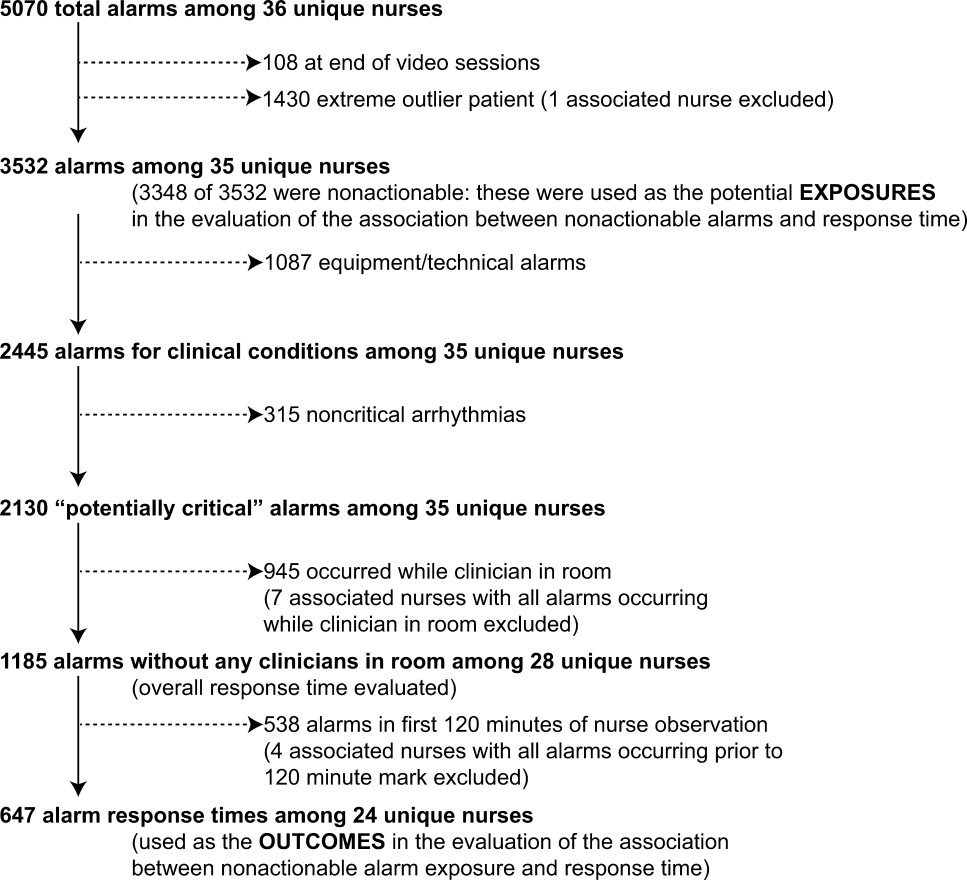

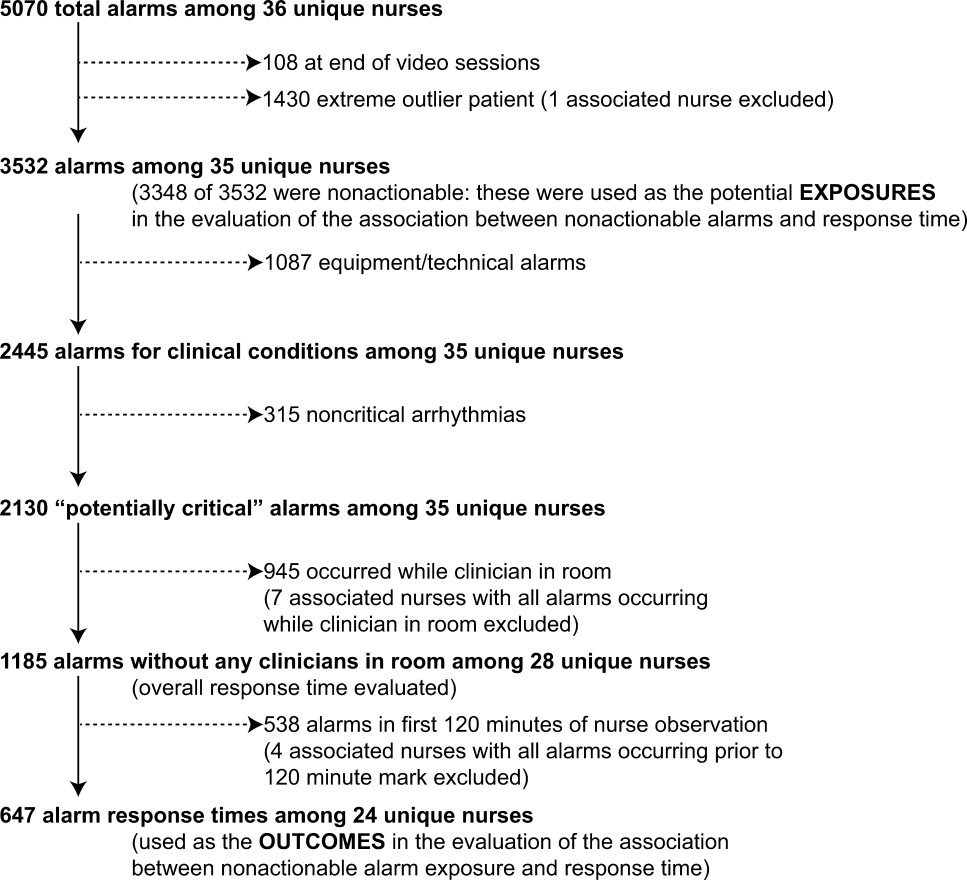

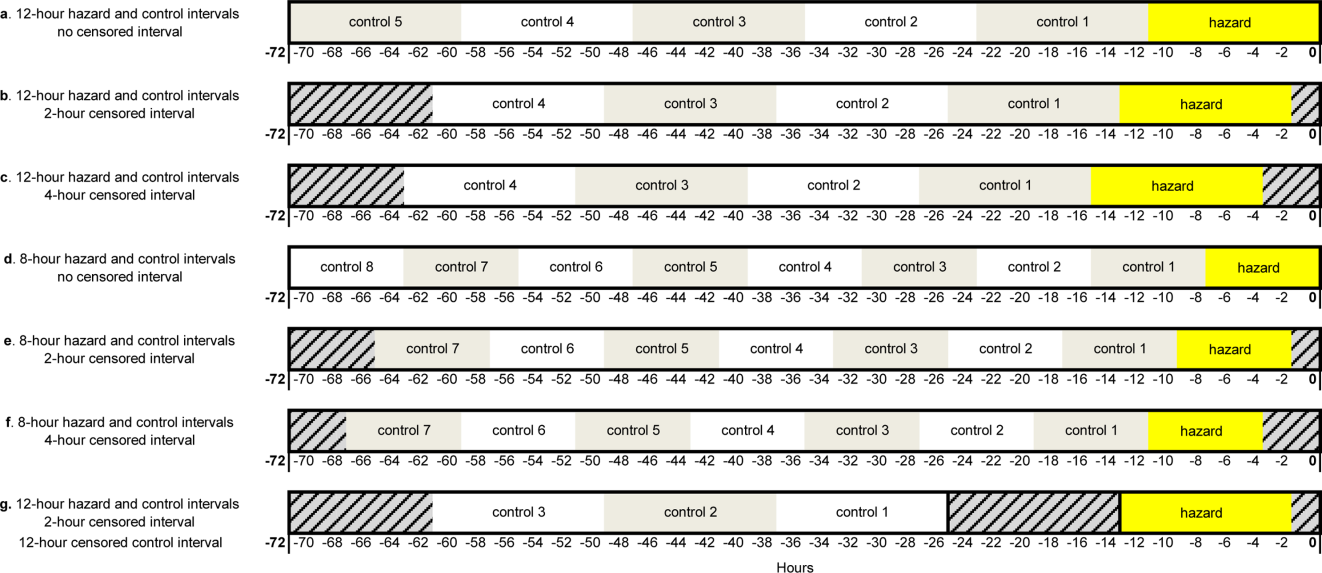

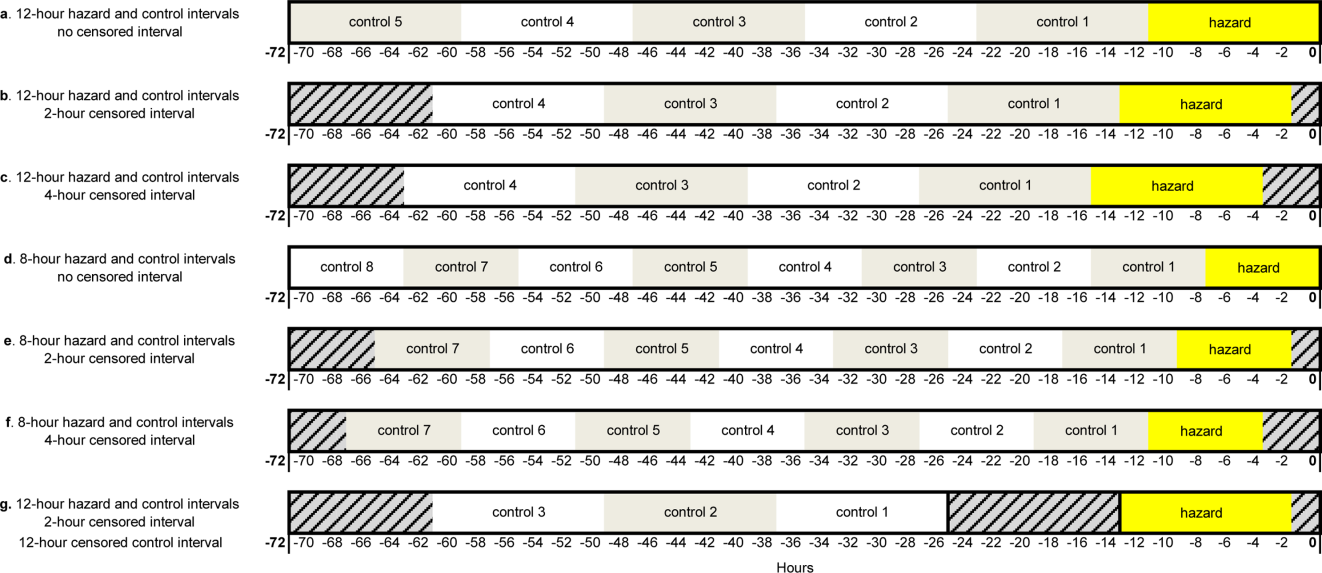

For our secondary effectiveness outcome evaluating the effect of the intervention on the alarm rates of the individual patients discussed in huddles, we used a cohort design embedded within the trial to analyze patient-specific alarm data collected only on randomly selected “intensive data collection days,” described below and in Figure 1.

Setting and Subjects

All patients hospitalized on 8 units that admit general pediatric and medical subspecialty patients at Children’s Hospital of Philadelphia between June 15, 2015 and May 8, 2016 were included in the primary (unit-level) analysis. Every patient’s bedside included a General Electric Dash 3000 physiologic monitor. Decisions to monitor patients were made by physicians and required orders. Default alarm settings are available in Supplementary Table 1; these settings required orders to change.

All 8 units were already convening scheduled safety huddles led by the charge nurse each day. All nurses and at least one resident were expected to attend; attending physicians and fellows were welcome but not expected to attend. Huddles focused on discussing safety concerns and patient flow. None of the preexisting huddles included alarm discussion.

Intervention

For each nonholiday weekday, we generated customized paper-based alarm huddle data “dashboards” (Supplementary Figure 1) by reviewing data from the monitor network using BedMasterEx v4.2 (Excel Medical Electronics). Each dashboard displayed data from the patients (up to a maximum of 4) on each intervention unit with the highest numbers of high-acuity alarms (“crisis” and “warning” audible alarms; see Supplementary Table 2 for a detailed listing of alarm types) in the preceding 4 hours. Dashboards listed the most frequent types of alarms and the alarm settings, and included a script for discussing the alarms with checkboxes to indicate changes agreed upon by the team during the huddle. Patients with fewer than 20 alarms in the preceding 4 hours were not included; thus, sometimes fewer than 4 patients’ data were available for discussion. We hand-delivered dashboards to the charge nurses leading huddles, and they facilitated the multidisciplinary alarm discussions focused on reviewing alarm data and customizing settings to reduce unnecessary alarms.
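The patient-selection rule for the dashboards can be sketched in a few lines of Python. This is an illustration only: the study generated dashboards manually from BedMasterEx data, and the function name, bed labels, and alarm counts below are hypothetical.

```python
def select_dashboard_patients(alarm_counts, min_alarms=20, max_patients=4):
    """Return up to `max_patients` patients with the most high-acuity alarms
    in the preceding 4 hours, excluding those below `min_alarms`."""
    eligible = [(patient, count) for patient, count in alarm_counts.items()
                if count >= min_alarms]
    # Highest alarm counts first.
    eligible.sort(key=lambda item: item[1], reverse=True)
    return [patient for patient, _ in eligible[:max_patients]]

# Hypothetical high-acuity alarm counts per bed over the preceding 4 hours.
busy_unit = {"bed1": 12, "bed2": 45, "bed3": 20, "bed4": 88,
             "bed5": 31, "bed6": 19, "bed7": 27}
quiet_unit = {"bed1": 5, "bed2": 22}

busy_selection = select_dashboard_patients(busy_unit)
quiet_selection = select_dashboard_patients(quiet_unit)
print(busy_selection, quiet_selection)
```

On the hypothetical quiet unit only one patient meets the 20-alarm threshold, which mirrors the situation in which fewer than 4 patients' data were available for discussion.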

Study Periods

The study had 3 periods as shown in Supplementary Figure 2: (1) 16-week baseline data collection, (2) phased intervention implementation during which we serially spent 2-8 weeks on each of the 4 intervention units implementing the intervention, and (3) 16-week postimplementation data collection.

Outcomes

The primary effectiveness outcome was the change in unit-level alarms per patient-day between the baseline and postimplementation periods in intervention versus control units, with all patients on the units included. The secondary effectiveness outcome (analyzed using the embedded cohort design) was the change in individual patient-level alarms between the 24 hours leading up to a huddle and the 24 hours following huddles in patients who were versus patients who were not discussed in huddles.

Implementation outcomes included adoption and fidelity measures. To measure adoption (defined as “intention to try” the intervention),16 we measured the frequency of discussions attended by patients’ nurses and physicians. We evaluated 3 elements of fidelity: adherence, dose, and quality of delivery.17 We measured adherence as the incorporation of alarm discussion into huddles when there were eligible patients to discuss. We measured dose as the average number of patients discussed on each unit per calendar day during the postimplementation period. We measured quality of delivery as the extent to which changes to monitoring that were agreed upon in the huddles were made at the bedside.

Safety Measures

To surveil for unintended consequences of reduced monitoring, we screened the hospital’s rapid response and code blue team database weekly for any events in patients previously discussed in huddles that occurred between huddle and hospital discharge. We reviewed charts to determine if the events were related to the intervention.

Randomization

Prior to randomization, the 8 units were divided into pairs based on participation in hospital-wide Joint Commission alarm management activities, use of alarm middleware that relayed detailed alarm information to nurses’ mobile phones, and baseline alarm rates. One unit in each pair was randomized to intervention and the other to control by coin flip.
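A pair-matched allocation of this kind can be sketched as follows. The unit names are placeholders and the pseudorandom generator merely stands in for the physical coin flip used in the study.

```python
import random

def randomize_pairs(pairs, seed=None):
    """Assign one unit in each matched pair to intervention and the other
    to control, with equal probability (a simulated coin flip)."""
    rng = random.Random(seed)
    assignment = {}
    for unit_a, unit_b in pairs:
        if rng.random() < 0.5:
            intervention, control = unit_a, unit_b
        else:
            intervention, control = unit_b, unit_a
        assignment[intervention] = "intervention"
        assignment[control] = "control"
    return assignment

# Hypothetical unit labels; the study matched 8 units into 4 pairs.
unit_pairs = [("U1", "U2"), ("U3", "U4"), ("U5", "U6"), ("U7", "U8")]
allocation = randomize_pairs(unit_pairs, seed=2015)
print(allocation)
```

Because allocation happens within pairs, the design always yields 4 intervention and 4 control units, balanced on the matching characteristics.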

Data Collection

We used Research Electronic Data Capture (REDCap)18 database tools.

Data for Unit-Level Analyses

We captured all alarms occurring on the study units during the study period using data from BedMasterEx. We obtained census data accurate to the hour from the Clinical Data Warehouse.

Data Captured in All Huddles

During each huddle, we collected the number of patients whose alarms were discussed, patient characteristics, presence of nurses and physicians, and monitoring changes agreed upon. We then followed up 4 hours later to determine if changes were made at the bedside by examining monitor settings.

Data Captured Only During Intensive Data Collection Days

We randomly selected 1 day during each of the 16 weeks of the postimplementation period to obtain additional patient-level data. On each intensive data collection day, the 4 monitored patients on each intervention and control unit with the most high-acuity alarms in the 4 hours before the huddle were identified for data collection, regardless of whether these patients were later discussed in huddles. On these dates, a member of the research team reviewed each patient’s alarm counts in 4-hour blocks during the 24 hours before and after the huddle. Given that the huddles were not always at the same time every day (ranging between 10:00 and 13:00), we operationally set the huddle time as 12:00 for all units.

Data Analysis

We used Stata/SE 14.2 for all analyses.

Unit-Level Alarm Rates

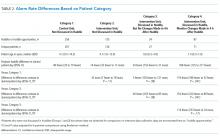

To compare unit-level rates, we performed an interrupted time series analysis using segmented (piecewise) regression to evaluate the impact of the intervention.19,20 We used a multivariable generalized estimating equation model with the negative binomial distribution21 and clustering by unit. We bootstrapped the model and generated percentile-based 95% confidence intervals. We then used the model to estimate the alarm rate difference in differences between the baseline data collection period and the postimplementation data collection period for intervention versus control units.
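As a rough illustration of how a segmented regression is typically parameterized, the sketch below builds the covariates for one unit-week: a baseline trend, a level change, a post-period trend change, and their interactions with study arm. The exact model specification used in the study is not given in the text, so the variable names, the weekly time unit, and the omission of the phased implementation period are all assumptions. Fitting the negative binomial GEE itself would require a statistics package and is not shown.

```python
def segmented_terms(week, post_start_week, intervention_unit):
    """Covariates for one unit-week in a two-segment (baseline vs
    postimplementation) regression with a control-group comparison."""
    post = 1 if week >= post_start_week else 0
    weeks_since = (week - post_start_week) if post else 0
    group = 1 if intervention_unit else 0
    return {
        "time": week,                        # baseline trend
        "post": post,                        # level change after implementation
        "time_since_post": weeks_since,      # trend change after implementation
        "group": group,                      # intervention vs control unit
        "group_x_time": group * week,
        "group_x_post": group * post,        # difference in level change
        "group_x_time_since_post": group * weeks_since,
    }

baseline_row = segmented_terms(week=5, post_start_week=16, intervention_unit=False)
post_row = segmented_terms(week=20, post_start_week=16, intervention_unit=True)
print(baseline_row)
print(post_row)
```

In a model built on these terms, the coefficients on the group interaction terms capture how the change from baseline to postimplementation differs between intervention and control units.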

Patient-Level Alarm Rates

In contrast to unit-level analysis, we used an embedded cohort design to model the change in individual patients’ alarms between the 24 hours leading up to huddles and the 24 hours following huddles in patients who were versus patients who were not discussed in huddles. The analysis was restricted to the patients included in intensive data collection days. We performed bootstrapped linear regression and generated percentile-based 95% confidence intervals using the difference in 4-hour block alarm rate between pre- and posthuddle as the outcome. We clustered within patients. We stratified by unit and preceding alarm rate. We modeled the alarm rate difference between the 24-hour prehuddle and the 24-hour posthuddle for huddled and nonhuddled patients and the difference in differences between exposure groups.
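The idea of a difference in differences with a patient-clustered percentile bootstrap can be sketched as below. This is a simplified, unadjusted version: the study used bootstrapped linear regression stratified by unit and preceding alarm rate, which this sketch omits, and the patient data are invented. Resampling whole patients (rather than individual 4-hour blocks) is what preserves the within-patient clustering.

```python
import random
from statistics import mean

def did_estimate(patients):
    """Mean post-minus-pre alarm difference in huddled patients minus the
    same quantity in nonhuddled patients."""
    huddled = [d for p in patients if p["huddled"] for d in p["diffs"]]
    control = [d for p in patients if not p["huddled"] for d in p["diffs"]]
    return mean(huddled) - mean(control)

def cluster_bootstrap_ci(patients, n_boot=2000, alpha=0.05, seed=0):
    """Percentile CI from resampling patients with replacement within each
    exposure group, keeping each patient's 4-hour blocks together."""
    rng = random.Random(seed)
    huddled = [p for p in patients if p["huddled"]]
    control = [p for p in patients if not p["huddled"]]
    estimates = []
    for _ in range(n_boot):
        sample = ([rng.choice(huddled) for _ in huddled]
                  + [rng.choice(control) for _ in control])
        estimates.append(did_estimate(sample))
    estimates.sort()
    lower = estimates[round(alpha / 2 * n_boot)]
    upper = estimates[round((1 - alpha / 2) * n_boot) - 1]
    return lower, upper

# Hypothetical data: each "diffs" entry is (posthuddle - prehuddle) alarms
# for one matched pair of 4-hour blocks.
example_patients = [
    {"huddled": True, "diffs": [-10, -20]},
    {"huddled": True, "diffs": [-30]},
    {"huddled": False, "diffs": [0, 2]},
    {"huddled": False, "diffs": [-2]},
]

point = did_estimate(example_patients)
ci_low, ci_high = cluster_bootstrap_ci(example_patients)
print(point, ci_low, ci_high)
```

A negative estimate indicates that alarms fell more in huddled patients than in nonhuddled patients over the same interval.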

Implementation Outcomes

We summarized adoption and fidelity using proportions.

RESULTS

Alarm dashboards informed 580 structured alarm discussions during 353 safety huddles (huddles often included discussion of more than one patient).

Unit-Level Alarm Rates

Visually, alarm rates over time on each individual unit appeared flat despite the intervention (Supplementary Figure 3). Using piecewise regression, we found that intervention and control units had small increases in alarm rates between the baseline and postimplementation periods with a nonsignificant difference in these differences between the control and intervention groups (Table 1).

Patient-Level Alarm Rates

We then restricted the analysis to the patients whose data were collected during intensive data collection days. We obtained data from 1974 pre-post pairs of 4-hour time periods.

Implementation Outcomes

Adoption

The patient’s nurse attended 482 of the 580 huddle discussions (83.1%), and at least one of the patient’s physicians (resident, fellow, or attending) attended 394 (67.9%).

Fidelity: Adherence

In addition to the 353 huddles that included alarm discussion, there were 123 instances in which no patients had ≥20 high-acuity alarms in the preceding 4 hours; therefore, no data were brought to the huddle. There were an additional 30 instances when a huddle did not occur or there was no alarm discussion in the huddle despite data being available. Thus, adherence occurred in 353 of 383 huddles (92.2%).

Fidelity: Dose

During the 112-calendar-day postimplementation period, 379 patients’ alarms were discussed in huddles, for an average intervention dose of 0.85 discussions per unit per calendar day.

Fidelity: Quality of Delivery

In 362 of the 580 huddle discussions (62.4%), changes were agreed upon. The most frequently agreed upon changes were discontinuing monitoring (32.0%), monitoring only when asleep or unsupervised (23.8%), widening heart rate parameters (12.7%), changing electrocardiographic leads/wires (8.6%), changing the pulse oximetry probe (8.0%), and increasing the delay time between when oxygen desaturation was detected and when the alarm was generated (4.7%). Of the huddle discussions with changes agreed upon, 346 (95.6%) changes were enacted at the bedside.
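The adoption and fidelity proportions reported in this section can be recomputed from the stated counts. The sketch below uses only numbers given in the text; the dose denominator (112 days multiplied by the 4 intervention units) is an interpretation that reproduces the reported 0.85.

```python
def pct(numerator, denominator):
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)

discussions = 580        # structured alarm discussions
intervention_units = 4   # units randomized to the intervention
post_days = 112          # calendar days in the postimplementation period

adoption_nurse = pct(482, discussions)       # nurse attended
adoption_physician = pct(394, discussions)   # at least one physician attended
adherence = pct(353, 353 + 30)               # huddles with alarm discussion / huddles with data
dose = round(379 / (post_days * intervention_units), 2)  # discussions per unit per day
changes_agreed = pct(362, discussions)       # discussions ending in an agreed change
changes_enacted = pct(346, 362)              # agreed changes made at the bedside

print(adoption_nurse, adoption_physician, adherence, dose,
      changes_agreed, changes_enacted)
```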

Safety Measures

There were 0 code blue events and 26 rapid response team activations for patients discussed in huddles. None were related to the intervention.

DISCUSSION

Our main finding was that the huddle strategy was effective in safely reducing the burden of alarms for the high-alarm pediatric ward patients whose alarms were discussed, but it did not reduce unit-level alarm rates. Implementation outcomes explained this finding. Although adoption and adherence were high, the overall dose of the intervention was low.

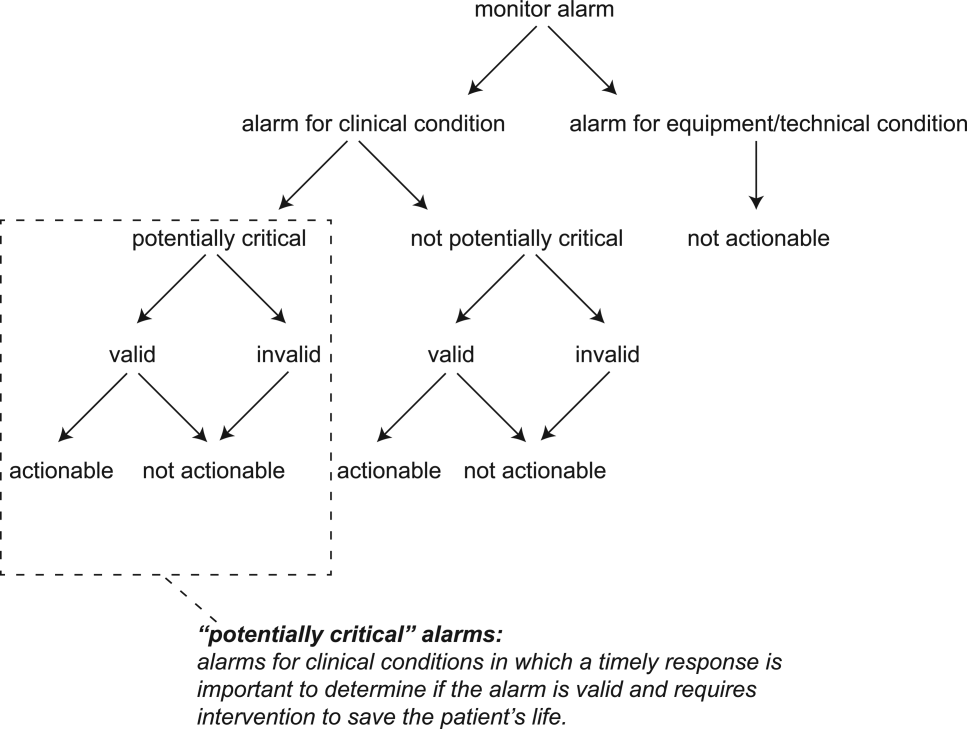

We also found that 36% of alarms had technical causes, the majority of which were related to the pulse oximetry probe detecting that it was off the patient or searching for a pulse. Although these alarms are likely perceived differently by clinical staff (most monitors generate different sounds for technical alarms), they still represent a substantial contribution to the alarm environment. Minimizing them in patients who must remain continuously monitored requires more intensive effort to implement other types of interventions than the main focus of this study, such as changing pulse oximetry probes and electrocardiographic leads/wires.

In one-third of huddles, monitoring was simply discontinued. We observed in many cases that, while these patients may have had legitimate indications for monitoring upon admission, their conditions had improved; after brief multidisciplinary discussion, the team concluded that monitoring was no longer indicated. This observation may suggest interventions at the ordering phase, such as prespecifying a monitoring duration.22,23

This study’s findings were consistent with a quasi-experimental study of safety huddle-based alarm discussions in a pediatric intensive care unit that showed a patient-level reduction of 116 alarms per patient-day in those discussed in huddles relative to controls.11 A smaller quasi-experimental study of implementing a nighttime alarm “ward round” in an adult intensive care unit showed a significant reduction in unit-level alarms/patient-day from 168 to 84.9 In a quality improvement report, a monitoring care process bundle that included discussion of alarm settings showed a reduction in unit-level alarms/patient-day from 180 to 40.10 Our study strengthens the body of literature by using a cluster-randomized design, measuring patient- and unit-level outcomes, and including implementation outcomes that explain effectiveness findings.

On a hypothetical unit similar to the ones we studied with 20 occupied beds and 60 alarms/patient-day, an average of 1200 alarms would occur each day. We delivered the intervention to 0.85 patients per day. Changes were made at the bedside in 60% of those with the intervention delivered, and those patients had a difference in differences of 119 fewer alarms compared with the comparison patients on control units. In this scenario, we could expect an absolute reduction of 0.85 × 0.60 × 119 ≈ 61 fewer alarms/day in total on the unit, or a relative reduction of about 5%. However, that estimated reduction did not account for the arrival of new patients with high alarm rates, which certainly occurred in this study and explained the lack of effect at the unit level.
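The back-of-envelope estimate above can be reproduced directly. All inputs come from the paragraph; the variable names are illustrative.

```python
occupied_beds = 20
alarms_per_patient_day = 60
dose_per_day = 0.85        # patients receiving the intervention per unit per day
fraction_enacted = 0.60    # discussions leading to a bedside change
alarms_avoided = 119       # difference in differences per affected patient

baseline_alarms = occupied_beds * alarms_per_patient_day
expected_fewer = dose_per_day * fraction_enacted * alarms_avoided
relative_reduction = expected_fewer / baseline_alarms

print(baseline_alarms)                   # daily alarms on the hypothetical unit
print(round(expected_fewer))             # expected fewer alarms per day
print(round(100 * relative_reduction))   # percent reduction at the unit level
```

Even under these favorable assumptions, the expected effect is roughly 61 of 1200 daily alarms, which shows why a strong patient-level effect delivered at a low dose barely moves the unit-level rate.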

As described above, the intervention dose was low, which translated into a lack of effect at the unit level despite a strong effect at the patient level. This result was partly due to the manual process required to produce the alarm dashboards that restricted their availability to nonholiday weekdays. The study was performed at one hospital, which limited generalizability. The study hospital was already convening daily safety huddles that were well attended by nurses and physicians. Other hospitals without existing huddle structures may face challenges in implementing similar multidisciplinary alarm discussions. In addition, the study design was randomized at the unit (rather than patient) level, which limited our ability to balance potential confounders at the patient level.

CONCLUSION

A safety huddle intervention strategy to drive alarm customization was effective in safely reducing alarms for individual children discussed. However, unit-level alarm rates were not affected by the intervention due to a low dose. Leaders of efforts to reduce alarms should consider beginning with passive interventions (such as changes to default settings and alarm delays) and use huddle-based discussion as a second-line intervention to address remaining patients with high alarm rates.

Acknowledgments

We thank Matthew MacMurchy, BA, for his assistance with data collection.

Funding/Support

This study was supported by a Young Investigator Award (Bonafide, PI) from the Academic Pediatric Association.

Role of the Funder/Sponsor

The Academic Pediatric Association had no role in the design or conduct of the study; collection, management, analysis, or interpretation of the data; preparation, review, or approval of the manuscript; or decision to submit for publication.

Disclosures

No relevant financial activities, aside from the grant funding from the Academic Pediatric Association listed above, are reported.

1. Schondelmeyer AC, Brady PW, Goel VV, et al. Physiologic monitor alarm rates at 5 children’s hospitals. J Hosp Med. 2018;In press.

2. Cvach M, Kitchens M, Smith K, Harris P, Flack MN. Customizing alarm limits based on specific needs of patients. Biomed Instrum Technol. 2017;51(3):227-234.

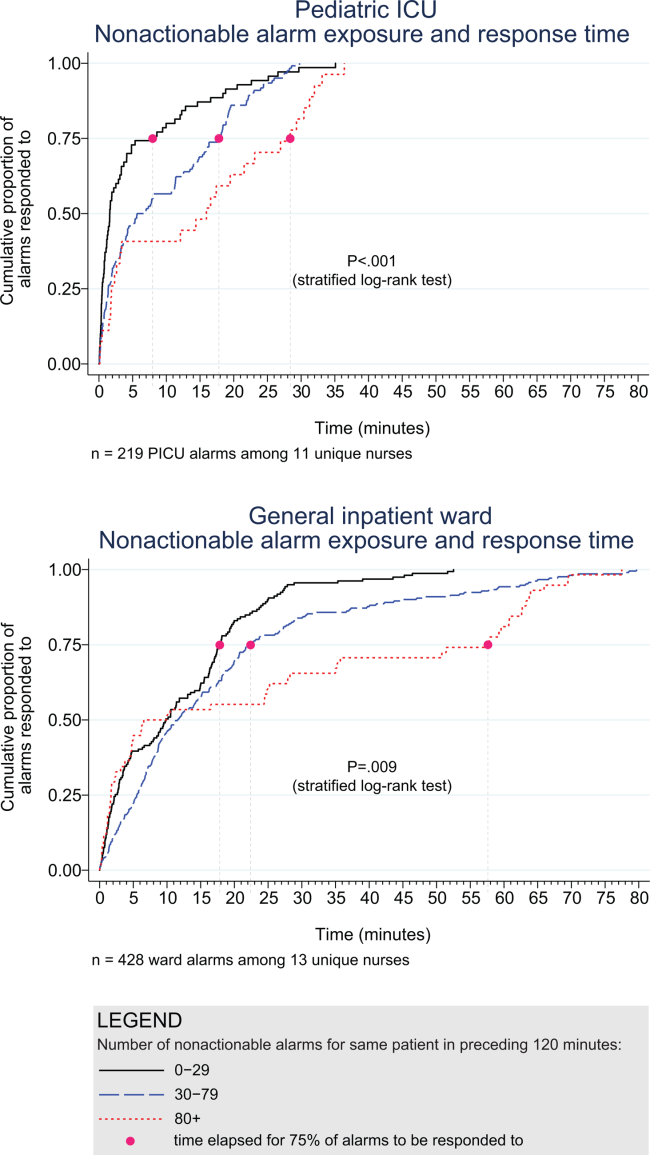

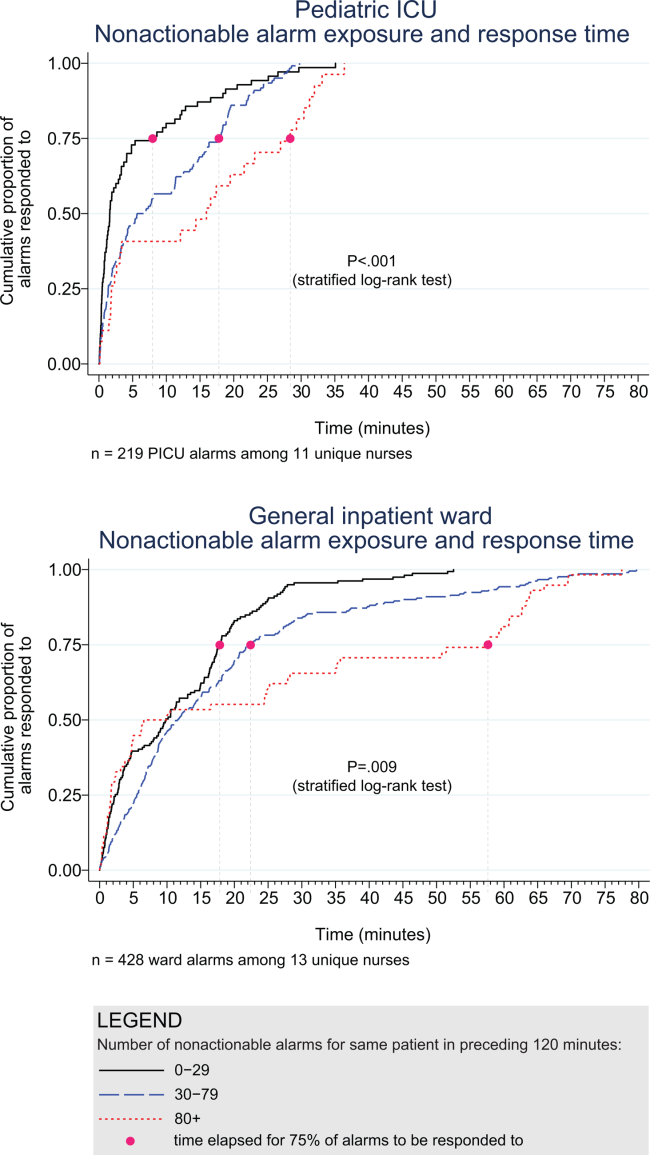

3. Bonafide CP, Lin R, Zander M, et al. Association between exposure to nonactionable physiologic monitor alarms and response time in a children’s hospital. J Hosp Med. 2015;10(6):345-351.

4. Bonafide CP, Localio AR, Holmes JH, et al. Video analysis of factors associated with response time to physiologic monitor alarms in a children’s hospital. JAMA Pediatr. 2017;171(6):524-531.

5. Lange K, Nowak M, Zoller R, Lauer W. Boundary conditions for safe detection of clinical alarms: An observational study to identify the cognitive and perceptual demands on an Intensive Care Unit. In: D. de Waard, K.A. Brookhuis, A. Toffetti, A. Stuiver, C. Weikert, D. Coelho, D. Manzey, A.B. Ünal, S. Röttger, and N. Merat (Eds.) Proceedings of the Human Factors and Ergonomics Society Europe Chapter 2015 Annual Conference. Groningen, Netherlands; 2016.

6. Westbrook JI, Li L, Hooper TD, Raban MZ, Middleton S, Lehnbom EC. Effectiveness of a ‘Do not interrupt’ bundled intervention to reduce interruptions during medication administration: a cluster randomised controlled feasibility study. BMJ Qual Saf. 2017;26:734-742.

7. Chopra V, McMahon LF Jr. Redesigning hospital alarms for patient safety: alarmed and potentially dangerous. JAMA. 2014;311(12):1199-1200.

8. Turmell JW, Coke L, Catinella R, Hosford T, Majeski A. Alarm fatigue: use of an evidence-based alarm management strategy. J Nurs Care Qual. 2017;32(1):47-54.

9. Koerber JP, Walker J, Worsley M, Thorpe CM. An alarm ward round reduces the frequency of false alarms on the ICU at night. J Intensive Care Soc. 2011;12(1):75-76.

10. Dandoy CE, Davies SM, Flesch L, et al. A team-based approach to reducing cardiac monitor alarms. Pediatrics. 2014;134(6):e1686-1694.

11. Dewan M, Wolfe H, Lin R, et al. Impact of a safety huddle–based intervention on monitor alarm rates in low-acuity pediatric intensive care unit patients. J Hosp Med. 2017;12(8):652-657.

12. Goldenhar LM, Brady PW, Sutcliffe KM, Muething SE. Huddling for high reliability and situation awareness. BMJ Qual Saf. 2013;22(11):899-906.

13. Brady PW, Muething S, Kotagal U, et al. Improving situation awareness to reduce unrecognized clinical deterioration and serious safety events. Pediatrics. 2013;131:e298-308.

14. Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50(3):217-226.

15. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):50.

16. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65-76.

17. Allen JD, Linnan LA, Emmons KM. Fidelity and its relationship to implementation effectiveness, adaptation, and dissemination. In: Dissemination and Implementation Research in Health: Translating Science to Practice (Brownson RC, Proctor EK, Colditz GA Eds.). Oxford University Press; 2012:281-304.

18. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inf. 2009;42:377-381.

19. Singer JD, Willett JB. Applied Longitudinal Data Analysis: Modeling Change and Event Occurrence. New York: Oxford University Press; 2003.

20. Wagner AK, Soumerai SB, Zhang F, Ross-Degnan D. Segmented regression analysis of interrupted time series studies in medication use research. J Clin Pharm Ther. 2002;27:299-309.

21. Gardner W, Mulvey EP, Shaw EC. Regression analyses of counts and rates: Poisson, overdispersed Poisson, and negative binomial models. Psychol Bull. 1995;118:392-404.

22. Dressler R, Dryer MM, Coletti C, Mahoney D, Doorey AJ. Altering overuse of cardiac telemetry in non-intensive care unit settings by hardwiring the use of American Heart Association guidelines. JAMA Intern Med. 2014;174(11):1852-1854.

23. Boggan JC, Navar-Boggan AM, Patel V, Schulteis RD, Simel DL. Reductions in telemetry order duration do not reduce telemetry utilization. J Hosp Med. 2014;9(12):795-796. PubMed

Physiologic monitor alarms occur frequently in the hospital environment, with average rates on pediatric wards between 42 and 155 alarms per monitored patient-day.1 However, average rates do not depict the full story, because only 9%–25% of patients are responsible for most alarms on inpatient wards.1,2 In addition, only 0.5%–1% of alarms on pediatric wards warrant action.3,4 Downstream consequences of high alarm rates include interruptions5,6 and alarm fatigue.3,4,7

Alarm customization, the process of reviewing individual patients’ alarm data and using that data to implement patient-specific alarm reduction interventions, has emerged as a potential approach to unit-wide alarm management.8-11 Potential customizations include broadening alarm thresholds, instituting delays between the time the alarm condition is met and the time the alarm sounds, and changing electrodes.8-11 However, the workflows within which to identify the patients who will benefit from customization, make decisions about how to customize, and implement customizations have not been delineated.

Safety huddles are brief structured discussions among physicians, nurses, and other staff aiming to identify and mitigate threats to patient safety.11-13 In this study, we aimed to evaluate the influence of a safety huddle-based alarm intervention strategy targeting high-alarm pediatric ward patients on (a) unit-level alarm rates and (b) patient-level alarm rates, and (c) to evaluate implementation outcomes. We hypothesized that patients discussed in huddles would have greater reductions in alarm rates in the 24 hours following their huddle than patients who were not discussed. Given that most alarms are generated by a small fraction of patients,1,2 we hypothesized that patient-level reductions would translate to unit-level reductions.

METHODS

Human Subject Protection

The Institutional Review Board of Children’s Hospital of Philadelphia approved this study with a waiver of informed consent. We registered the study at ClinicalTrials.gov (identifier NCT02458872). The original protocol is available as an Online Supplement.

Design and Framework

For our secondary effectiveness outcome evaluating the effect of the intervention on the alarm rates of the individual patients discussed in huddles, we used a cohort design embedded within the trial to analyze patient-specific alarm data collected only on randomly selected “intensive data collection days,” described below and in Figure 1.

Setting and Subjects

All patients hospitalized on 8 units that admit general pediatric and medical subspecialty patients at Children’s Hospital of Philadelphia between June 15, 2015 and May 8, 2016 were included in the primary (unit-level) analysis. Every patient’s bedside included a General Electric Dash 3000 physiologic monitor. Decisions to monitor patients were made by physicians and required orders. Default alarm settings are available in Supplementary Table 1; these settings required orders to change.

All 8 units were already convening scheduled safety huddles led by the charge nurse each day. All nurses and at least one resident were expected to attend; attending physicians and fellows were welcome but not expected to attend. Huddles focused on discussing safety concerns and patient flow. None of the preexisting huddles included alarm discussion.

Intervention

On each nonholiday weekday, we reviewed data from the monitor network using BedMasterEx v4.2 (Excel Medical Electronics) and generated customized paper-based alarm huddle data “dashboards” (Supplementary Figure 1). Each dashboard displayed data from the patients (up to a maximum of 4) on each intervention unit with the highest numbers of high-acuity alarms (“crisis” and “warning” audible alarms; see Supplementary Table 2 for a detailed listing of alarm types) in the preceding 4 hours. Dashboards listed the most frequent types of alarms and the alarm settings and included a script for discussing the alarms, with checkboxes to indicate changes agreed upon by the team during the huddle. Patients with fewer than 20 alarms in the preceding 4 hours were not included; thus, sometimes fewer than 4 patients’ data were available for discussion. We hand-delivered dashboards to the charge nurses leading huddles, who facilitated the multidisciplinary alarm discussions focused on reviewing alarm data and customizing settings to reduce unnecessary alarms.
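The dashboard eligibility rule (at most 4 patients per unit, each with at least 20 high-acuity alarms in the preceding 4 hours) can be illustrated with a minimal sketch. The function name, the bed identifiers, the counts, and the tie-breaking rule are hypothetical and are not taken from the study's software.

```python
from typing import Dict, List, Tuple

MAX_PATIENTS = 4  # at most 4 patients per unit per dashboard
MIN_ALARMS = 20   # patients with fewer than 20 alarms were not included


def select_dashboard_patients(alarm_counts: Dict[str, int]) -> List[Tuple[str, int]]:
    """Return up to MAX_PATIENTS (patient_id, count) pairs, highest counts first.

    alarm_counts maps a patient identifier to that patient's number of
    high-acuity alarms in the preceding 4 hours.
    """
    eligible = [(pid, n) for pid, n in alarm_counts.items() if n >= MIN_ALARMS]
    # Sort by count, descending; ties are broken by identifier (an assumption).
    eligible.sort(key=lambda item: (-item[1], item[0]))
    return eligible[:MAX_PATIENTS]


# Hypothetical counts for one unit.
example_unit = {
    "bed01": 57, "bed02": 12, "bed03": 20, "bed04": 33,
    "bed05": 19, "bed06": 41, "bed07": 25,
}
selected = select_dashboard_patients(example_unit)
print(selected)
```

A unit on which no patient reaches the threshold yields an empty list, which corresponds to the days when no data were brought to the huddle.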

Study Periods

The study had 3 periods as shown in Supplementary Figure 2: (1) 16-week baseline data collection, (2) phased intervention implementation during which we serially spent 2-8 weeks on each of the 4 intervention units implementing the intervention, and (3) 16-week postimplementation data collection.

Outcomes

The primary effectiveness outcome was the change in unit-level alarms per patient-day between the baseline and postimplementation periods in intervention versus control units, with all patients on the units included. The secondary effectiveness outcome (analyzed using the embedded cohort design) was the change in individual patient-level alarms between the 24 hours leading up to a huddle and the 24 hours following huddles in patients who were versus patients who were not discussed in huddles.

Implementation outcomes included adoption and fidelity measures. To measure adoption (defined as “intention to try” the intervention),16 we measured the frequency of discussions attended by patients’ nurses and physicians. We evaluated 3 elements of fidelity: adherence, dose, and quality of delivery.17 We measured adherence as the incorporation of alarm discussion into huddles when there were eligible patients to discuss. We measured dose as the average number of patients discussed on each unit per calendar day during the postimplementation period. We measured quality of delivery as the extent to which changes to monitoring that were agreed upon in the huddles were made at the bedside.

Safety Measures

To surveil for unintended consequences of reduced monitoring, we screened the hospital’s rapid response and code blue team database weekly for any events in patients previously discussed in huddles that occurred between huddle and hospital discharge. We reviewed charts to determine if the events were related to the intervention.

Randomization

Prior to randomization, the 8 units were divided into pairs based on participation in hospital-wide Joint Commission alarm management activities, use of alarm middleware that relayed detailed alarm information to nurses’ mobile phones, and baseline alarm rates. One unit in each pair was randomized to intervention and the other to control by coin flip.
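As an illustration only, the paired allocation can be sketched as one simulated coin flip per matched pair. The unit labels and the seed are hypothetical; the study used a physical coin flip, not software.

```python
import random
from typing import Dict, List, Tuple


def randomize_pairs(pairs: List[Tuple[str, str]], rng: random.Random) -> Dict[str, str]:
    """Assign one unit in each matched pair to intervention and the other to control."""
    assignment: Dict[str, str] = {}
    for unit_a, unit_b in pairs:
        heads = rng.random() < 0.5  # simulated coin flip
        intervention, control = (unit_a, unit_b) if heads else (unit_b, unit_a)
        assignment[intervention] = "intervention"
        assignment[control] = "control"
    return assignment


# Hypothetical labels for the 4 matched pairs of units.
pairs = [("U1", "U2"), ("U3", "U4"), ("U5", "U6"), ("U7", "U8")]
assignment = randomize_pairs(pairs, random.Random(2015))  # seed is arbitrary
print(assignment)
```

Randomizing within pairs guarantees 4 intervention and 4 control units and keeps the arms balanced on the matching characteristics.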

Data Collection

We used Research Electronic Data Capture (REDCap)18 database tools.

Data for Unit-Level Analyses

We captured all alarms occurring on the study units during the study period using data from BedMasterEx. We obtained census data accurate to the hour from the Clinical Data Warehouse.

Data Captured in All Huddles

During each huddle, we collected the number of patients whose alarms were discussed, patient characteristics, presence of nurses and physicians, and monitoring changes agreed upon. We then followed up 4 hours later to determine if changes were made at the bedside by examining monitor settings.

Data Captured Only During Intensive Data Collection Days

We randomly selected 1 day during each of the 16 weeks of the postimplementation period to obtain additional patient-level data. On each intensive data collection day, the 4 monitored patients on each intervention and control unit with the most high-acuity alarms in the 4 hours before the huddle were identified for data collection, regardless of whether these patients were later discussed in huddles. On these dates, a member of the research team reviewed each patient’s alarm counts in 4-hour blocks during the 24 hours before and after the huddle. Given that the huddles were not always at the same time every day (ranging between 10:00 and 13:00), we operationally set the huddle time as 12:00 for all units.
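The 4-hour blocks around the operational 12:00 huddle time can be sketched as follows. This is a minimal illustration; the function names, the date, and the alarm timestamps are hypothetical, and the half-open window convention is an assumption.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Window = Tuple[datetime, datetime]
BLOCK = timedelta(hours=4)


def four_hour_blocks(huddle: datetime) -> Tuple[List[Window], List[Window]]:
    """Return (pre, post): six 4-hour [start, end) windows on each side of the huddle."""
    pre = [(huddle - BLOCK * (6 - i), huddle - BLOCK * (5 - i)) for i in range(6)]
    post = [(huddle + BLOCK * i, huddle + BLOCK * (i + 1)) for i in range(6)]
    return pre, post


def count_alarms(alarm_times: List[datetime], window: Window) -> int:
    """Count alarms whose timestamp falls in the half-open window [start, end)."""
    start, end = window
    return sum(1 for t in alarm_times if start <= t < end)


# Hypothetical intensive data collection day; huddle time fixed at 12:00.
huddle_time = datetime(2016, 2, 3, 12, 0)
pre_blocks, post_blocks = four_hour_blocks(huddle_time)

# Hypothetical alarm timestamps for one patient.
alarms = [
    datetime(2016, 2, 3, 9, 15),
    datetime(2016, 2, 3, 11, 59),
    datetime(2016, 2, 3, 12, 0),
]
print(count_alarms(alarms, pre_blocks[-1]), count_alarms(alarms, post_blocks[0]))
```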

Data Analysis

We used Stata/SE 14.2 for all analyses.

Unit-Level Alarm Rates

To compare unit-level rates, we performed an interrupted time series analysis using segmented (piecewise) regression to evaluate the impact of the intervention.19,20 We used a multivariable generalized estimating equation model with the negative binomial distribution21 and clustering by unit. We bootstrapped the model and generated percentile-based 95% confidence intervals. We then used the model to estimate the alarm rate difference in differences between the baseline data collection period and the postimplementation data collection period for intervention versus control units.
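The difference in differences itself is simple arithmetic on period-specific rates. The sketch below uses crude rates and hypothetical counts purely to show the calculation; it is not the model-based estimate from the segmented negative binomial regression described above.

```python
def alarm_rate(alarms: int, patient_days: float) -> float:
    """Crude alarm rate: alarms per patient-day."""
    return alarms / patient_days


def difference_in_differences(int_pre: float, int_post: float,
                              ctl_pre: float, ctl_post: float) -> float:
    """Change in intervention units minus change in control units."""
    return (int_post - int_pre) - (ctl_post - ctl_pre)


# Hypothetical counts; NOT study data.
int_pre = alarm_rate(9000, 100)   # 90 alarms per patient-day
int_post = alarm_rate(9500, 100)  # 95
ctl_pre = alarm_rate(8000, 100)   # 80
ctl_post = alarm_rate(8800, 100)  # 88

did = difference_in_differences(int_pre, int_post, ctl_pre, ctl_post)
print(did)
```

A negative value means alarm rates rose less (or fell more) on intervention units than on control units over the same interval.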

Patient-Level Alarm Rates

In contrast to unit-level analysis, we used an embedded cohort design to model the change in individual patients’ alarms between the 24 hours leading up to huddles and the 24 hours following huddles in patients who were versus patients who were not discussed in huddles. The analysis was restricted to the patients included in intensive data collection days. We performed bootstrapped linear regression and generated percentile-based 95% confidence intervals using the difference in 4-hour block alarm rate between pre- and posthuddle as the outcome. We clustered within patients. We stratified by unit and preceding alarm rate. We modeled the alarm rate difference between the 24-hour prehuddle and the 24-hour posthuddle for huddled and nonhuddled patients and the difference in differences between exposure groups.
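A percentile bootstrap that respects clustering within patients can be sketched by resampling whole patients rather than individual 4-hour blocks. This minimal sketch estimates only a mean pre-post difference; it omits the regression, the stratification by unit and preceding alarm rate, and the comparison between exposure groups. All names and values are hypothetical.

```python
import random
import statistics
from typing import Dict, List, Tuple


def cluster_bootstrap_ci(diffs_by_patient: Dict[str, List[float]],
                         n_boot: int = 2000,
                         seed: int = 0) -> Tuple[float, float, float]:
    """Return (point estimate, lower, upper) for the mean block-level difference.

    Patients, not blocks, are resampled with replacement, so all blocks from a
    sampled patient enter the replicate together.
    """
    rng = random.Random(seed)
    patients = sorted(diffs_by_patient)

    def mean_diff(sample_ids: List[str]) -> float:
        values = [d for pid in sample_ids for d in diffs_by_patient[pid]]
        return statistics.fmean(values)

    point = mean_diff(patients)
    boots = sorted(
        mean_diff(rng.choices(patients, k=len(patients))) for _ in range(n_boot)
    )
    lower = boots[int(0.025 * n_boot)]      # approximate 2.5th percentile
    upper = boots[int(0.975 * n_boot) - 1]  # approximate 97.5th percentile
    return point, lower, upper


# Hypothetical post-minus-pre differences in alarms per 4-hour block.
example = {
    "p1": [-5.0, -3.0, 0.0],
    "p2": [2.0, -1.0],
    "p3": [-8.0, -6.0, -4.0],
    "p4": [1.0, 0.0, -2.0],
}
point, lower, upper = cluster_bootstrap_ci(example)
print(point, lower, upper)
```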

Implementation Outcomes

We summarized adoption and fidelity using proportions.

RESULTS

Alarm dashboards informed 580 structured alarm discussions during 353 safety huddles (huddles often included discussion of more than one patient).

Unit-Level Alarm Rates

Visually, alarm rates over time on each individual unit appeared flat despite the intervention (Supplementary Figure 3). Using piecewise regression, we found that intervention and control units had small increases in alarm rates between the baseline and postimplementation periods with a nonsignificant difference in these differences between the control and intervention groups (Table 1).

Patient-Level Alarm Rates

We then restricted the analysis to the patients whose data were collected during intensive data collection days. We obtained data from 1974 pre-post pairs of 4-hour time periods.

Implementation Outcomes

Adoption

The patient’s nurse attended 482 of the 580 huddle discussions (83.1%), and at least one of the patient’s physicians (resident, fellow, or attending) attended 394 (67.9%).

Fidelity: Adherence

In addition to the 353 huddles that included alarm discussion, there were 123 instances in which no patients had ≥20 high-acuity alarms in the preceding 4 hours; therefore, no data were brought to the huddle. There were an additional 30 instances when a huddle did not occur or there was no alarm discussion in the huddle despite data being available. Thus, adherence occurred in 353 of 383 huddles (92.2%).

Fidelity: Dose

During the 112-calendar-day postimplementation period, 379 patients’ alarms were discussed in huddles, for an average intervention dose of 0.85 discussions per unit per calendar day.
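The adoption, adherence, and dose figures reported above can be recomputed from the stated counts. The sketch below assumes that the dose denominator is the 4 intervention units multiplied by the 112 calendar days; the variable and function names are illustrative.

```python
def pct(numerator: int, denominator: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


# Adoption: attendance at the 580 huddle discussions.
nurse_attendance = pct(482, 580)
physician_attendance = pct(394, 580)

# Adherence: huddles with alarm discussion out of huddles with data available.
huddles_with_discussion = 353
missed_opportunities = 30
adherence = pct(huddles_with_discussion,
                huddles_with_discussion + missed_opportunities)

# Dose: discussions per unit per calendar day (4 intervention units assumed).
dose = round(379 / (4 * 112), 2)

print(nurse_attendance, physician_attendance, adherence, dose)
```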

Fidelity: Quality of Delivery