
Associations of Physician Empathy with Patient Anxiety and Ratings of Communication in Hospital Admission Encounters

Admission to a hospital can be a stressful event,1,2 and patients report having many concerns at the time of hospital admission.3 Over the last 20 years, the United States has widely adopted the hospitalist model of inpatient care. Although this model has clear benefits, it also has the potential to contribute to patient stress, as hospitalized patients generally lack preexisting relationships with their inpatient physicians.4,5 In this changing hospital environment, defining and promoting effective medical communication has become an essential goal of both individual practitioners and medical centers.

Successful communication and strong therapeutic relationships with physicians support patients’ coping with illness-associated stress6,7 as well as promote adherence to medical treatment plans.8 Empathy serves as an important building block of patient-centered communication and encourages a strong therapeutic alliance.9 Studies from primary care, oncology, and intensive care unit (ICU) settings indicate that physician empathy is associated with decreased emotional distress,10,11 improved ratings of communication,12 and even better medical outcomes.13

Prior work has shown that hospitalists, like other clinicians, underutilize empathy as a tool in their daily interactions with patients.14-16 Our prior qualitative analysis of audio-recorded hospitalist-patient admission encounters indicated that how hospitalists respond to patient expressions of negative emotion influences relationships with patients and alignment around care plans.17 To determine whether empathic communication is associated with patient-reported outcomes in the hospitalist model, we quantitatively analyzed coded admission encounters and survey data to examine the association between hospitalists’ responses to patient expressions of negative emotion (anxiety, sadness, and anger) and patient anxiety and ratings of communication. Given the often-limited time hospitalists have to complete admission encounters, we also examined the association between response to emotion and encounter length.

METHODS

We analyzed data collected as part of an observational study of hospitalist-patient communication during hospital admission encounters14 to assess the association between the way physicians responded to patient expressions of negative emotion and patient anxiety, ratings of communication in the encounter, and encounter length. We collected data between August 2008 and March 2009 on the general medical service at 2 urban hospitals that are part of an academic medical center. Participants were attending hospitalists (not physician trainees) and patients admitted under participating hospitalists’ care who were able to communicate verbally in English and to provide informed consent. The institutional review board at the University of California, San Francisco approved the study; physician and patient participants provided written informed consent.

Enrollment and data collection have been described previously.17 Our cohort for this analysis included 76 patients of 27 physicians who completed encounter audio recordings and pre- and postencounter surveys. Following enrollment, patients completed a preencounter survey that collected demographic information and measured baseline anxiety with the state anxiety scale of the State-Trait Anxiety Inventory (STAI-S), which assesses transient anxious mood using 20 items, each answered on a 4-point scale, for a total score range of 20 to 80.10,18,19 We timed and audio-recorded admission encounters. Recordings captured only patient interactions with attending hospitalists and did not include time patients may have spent with other physicians, including trainees. After the encounter, patients completed a postencounter survey that included the STAI-S and their ratings of communication during the encounter. To rate communication, patients responded to 7 items derived from previous work, each on a 0-to-10 scale anchored by “not at all” and “completely” (Table 1).12,20,21 To identify patients with serious illness, which we used as a covariate in regression models, we asked physicians on a postencounter survey whether they “would be surprised by this patient’s death or admission to the ICU in the next year.”22
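The STAI-S total described above is a sum of 20 item responses. The sketch below illustrates that scoring arithmetic only; it is not the study's scoring procedure. It assumes that anxiety-absent items (for example, "I feel calm") are reverse-keyed before summing, and the item positions in `REVERSE_KEYED` are those commonly published for STAI Form Y-1, which should be checked against the scale manual before use. The function name `score_stai_s` is hypothetical.

```python
# Assumption: 1-based positions of the anxiety-absent (reverse-keyed) items,
# as commonly published for STAI Form Y-1; verify against the scale manual.
REVERSE_KEYED = frozenset({1, 2, 5, 8, 10, 11, 15, 16, 19, 20})


def score_stai_s(responses, reverse_keyed=REVERSE_KEYED):
    """Return a total state-anxiety score (20-80) from 20 item responses (1-4)."""
    if len(responses) != 20:
        raise ValueError("the STAI-S has 20 items")
    total = 0
    for position, value in enumerate(responses, start=1):
        if value not in (1, 2, 3, 4):
            raise ValueError(f"item {position}: response must be 1-4, got {value}")
        # Flip reverse-keyed items so that a higher score always means more anxiety.
        total += 5 - value if position in reverse_keyed else value
    return total
```

Because each item contributes 1 to 4 points after any reversal, the total always falls between 20 and 80, regardless of which items are reverse-keyed.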

We considered physician as a clustering variable in the calculation of robust standard errors for all models. In addition, we included in each model covariates that were associated with the outcome at P ≤ 0.10, including patient gender, patient age, serious illness,22 preencounter anxiety, encounter length, and hospital. We considered P values < 0.05 to be statistically significant. We used Stata SE 13 (StataCorp LLC, College Station, TX) for all statistical analyses.
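Treating physician as a clustering variable means that patients of the same hospitalist are not assumed to be independent when standard errors are computed. The sketch below shows the general cluster-robust ("sandwich") variance calculation for a single-predictor linear regression, using the finite-sample factor G/(G − 1) × (N − 1)/(N − K) that Stata's `regress` applies with clustered standard errors. It is a simplified illustration, not the study's Stata models: it omits covariates, and the function name `ols_cluster_se` and its inputs are hypothetical.

```python
import math
from collections import defaultdict


def ols_cluster_se(x, y, clusters):
    """Fit y = a + b*x by OLS; return (b, cluster-robust SE of b).

    Hypothetical helper for illustration: one predictor, no covariates.
    """
    n = len(y)
    if not (len(x) == n == len(clusters)):
        raise ValueError("x, y, and clusters must have the same length")
    if n <= 2:
        raise ValueError("need more observations than parameters")

    # "Bread": inverse of X'X for the design matrix with columns [1, x].
    sx, sxx = sum(x), sum(v * v for v in x)
    sy, sxy = sum(y), sum(a * b for a, b in zip(x, y))
    det = n * sxx - sx * sx
    if det == 0:
        raise ValueError("x has no variation")
    inv = [[sxx / det, -sx / det], [-sx / det, n / det]]
    a = inv[0][0] * sy + inv[0][1] * sxy
    b = inv[1][0] * sy + inv[1][1] * sxy

    # Per-cluster score: sum over members of [1, x_i] * residual_i.
    scores = defaultdict(lambda: [0.0, 0.0])
    for xi, yi, g in zip(x, y, clusters):
        resid = yi - a - b * xi
        scores[g][0] += resid
        scores[g][1] += xi * resid
    n_clusters = len(scores)
    if n_clusters < 2:
        raise ValueError("need at least 2 clusters")

    # Slope entry of c * inv @ (sum_g s_g s_g') @ inv, written as a sum of
    # squares (inv is symmetric), which keeps the variance nonnegative.
    k = 2  # intercept and slope
    c = n_clusters / (n_clusters - 1) * (n - 1) / (n - k)
    var_b = c * sum((inv[1][0] * s0 + inv[1][1] * s1) ** 2
                    for s0, s1 in scores.values())
    return b, math.sqrt(var_b)
```

For example, `ols_cluster_se(x, y, physician_ids)` would return the slope and its standard error, with the hypothetical `physician_ids` list playing the role of the clustering variable.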

RESULTS

We analyzed data from admission encounters with 76 patients (consent rate 63%) and 27 hospitalists (consent rate 91%). Participant characteristics are shown in Table 3. Median encounter length was 19 minutes (mean 21 minutes, range 3-68). Patients expressed negative emotion in 190 instances across all encounters; the median number of expressions per encounter was 1 (range 0-14). Hospitalists responded empathically to 32% (n = 61) of these expressions, neutrally to 43% (n = 81), and nonempathically to 25% (n = 48).
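As a quick arithmetic check, the response counts reported above sum to the 190 expressions of negative emotion and reproduce the stated percentages after rounding. The variable names are illustrative only.

```python
# Counts of hospitalist responses to patient expressions of negative emotion,
# as reported in the text.
response_counts = {"empathic": 61, "neutral": 81, "nonempathic": 48}

total = sum(response_counts.values())
# Share of all expressions receiving each response type, rounded to whole percents.
shares = {kind: round(100 * count / total) for kind, count in response_counts.items()}

print(total)   # 190
print(shares)  # {'empathic': 32, 'neutral': 43, 'nonempathic': 25}
```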

STAI-S scores were normally distributed. The mean preencounter STAI-S score was 39 (standard deviation [SD] 8.9), and the mean postencounter score was 38 (SD 10.7). The mean change in anxiety over the course of the encounter, calculated as the postencounter minus the preencounter score, was −1.2 (SD 7.6). Table 1 shows summary statistics for the patient ratings of communication items. All items were rated highly: across the items, between 51% and 78% of patients gave the highest score of 10.
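The change score is computed for each patient (postencounter minus preencounter STAI-S) and then averaged. Because the mean of paired differences equals the difference of the two means, the reported −1.2 suggests that the whole-number means of 39 and 38 are rounded. The function name and the scores in the usage example below are hypothetical and are not study data.

```python
from statistics import mean, stdev


def anxiety_change(pre_scores, post_scores):
    """Return (mean, SD) of per-patient change: postencounter minus preencounter."""
    if len(pre_scores) != len(post_scores):
        raise ValueError("each patient needs both a pre- and a postencounter score")
    if len(pre_scores) < 2:
        raise ValueError("need at least 2 patients to estimate an SD")
    changes = [post - pre for pre, post in zip(pre_scores, post_scores)]
    return mean(changes), stdev(changes)


# Hypothetical scores for three patients (not study data).
pre = [40, 50, 30]
post = [38, 45, 31]
print(anxiety_change(pre, post))  # (-2, 3.0)
```

In this toy example, `mean(post) - mean(pre)` is also −2, illustrating why the mean change can be read directly from the two group means when they are not rounded.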

Across the range of emotional expressions per encounter in our data set (0-14), each additional empathic hospitalist response was associated with a 1.65-point decrease in the STAI-S (95% confidence interval [CI], 0.48-2.82). We did not find significant associations between changes in the STAI-S and the number of neutral hospitalist responses (−0.65 per response; 95% CI, −1.67 to 0.37) or nonempathic hospitalist responses (0.61 per response; 95% CI, −0.88 to 2.10).

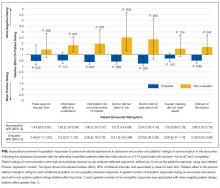

In addition, nonempathic responses were associated with more negative ratings of communication for 5 of the 7 items: ease of understanding information, covering points of interest, the doctor listening, the doctor caring, and trusting the doctor. For example, for the item “I felt this doctor cared about me,” each nonempathic hospitalist response was associated with more than a doubling of negative patient ratings (aRE: 2.3; 95% CI, 1.32-4.16). Neutral physician responses to patient expressions of negative emotion were associated with less negative patient ratings for 2 of the items: covering points of interest (aRE: 0.68; 95% CI, 0.51-0.90) and trusting the doctor (aRE: 0.86; 95% CI, 0.75-0.99).

We did not find a statistically significant association between encounter length and the number of empathic hospitalist responses in the encounter (percent change in encounter length per response [PC]: 1%; 95% CI, −8% to 10%) or the number of nonempathic responses (PC: 18%; 95% CI, −2% to 42%). We did find a statistically significant association between the number of neutral responses and encounter length (PC: 13%; 95% CI, 3%-24%), corresponding to 2.5 minutes of additional encounter time per neutral response at the median encounter length of 19 minutes.
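The conversion from a percent change per response to minutes is arithmetic on the median encounter length; the sketch below reproduces it for the neutral-response estimate and its CI limits. The helper `pc_from_log_coef` reflects an assumption not stated in this section: that percent changes were derived from a regression on log-transformed encounter length, in which a coefficient β corresponds to a percent change of 100 × (e^β − 1). Both function names are hypothetical.

```python
import math


def pc_from_log_coef(beta):
    """Percent change in encounter length per response, assuming a log-outcome model."""
    return 100 * (math.exp(beta) - 1)


def extra_minutes(percent_change, baseline_minutes=19):
    """Minutes added per response at a given baseline length (default: the 19-minute median)."""
    return baseline_minutes * percent_change / 100


# Neutral responses: PC 13% (95% CI, 3% to 24%) at the 19-minute median.
for pc in (13, 3, 24):
    print(pc, round(extra_minutes(pc), 2))
# 13 2.47
# 3 0.57
# 24 4.56
```

At the median, 13% corresponds to about 2.5 minutes per neutral response, as stated in the text; the CI limits span roughly 0.6 to 4.6 minutes.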

DISCUSSION

Our study set out to measure how hospitalists responded to expressions of negative emotion during admission encounters with patients and how those responses correlated with patient anxiety, ratings of communication, and encounter length. We found that empathic responses were associated with reduced patient anxiety after the visit, as well as with better ratings of several domains of hospitalist communication. Moreover, nonempathic responses to negative emotion were associated with more negative ratings of hospitalist communication. Finally, although clinicians may worry that encouraging patients to speak further about emotion will result in excessive visit lengths, we did not find a statistically significant association between empathic responses and encounter duration. To our knowledge, this is the first study to indicate an association between empathy and patient anxiety and communication ratings within the hospitalist model, which is rapidly becoming the predominant model for providing inpatient care in the United States.4,5

As in oncologic care, anxiety is an emotion commonly confronted by clinicians meeting admitted medical patients for the first time. Studies show not only that patient anxiety levels remain high throughout a hospital course but also that patients who experience higher levels of anxiety tend to stay longer in the hospital.1,2,27-30 Unlike oncologic care or other therapy provided in an outpatient setting, however, the hospitalist model does not facilitate “continuity” of care, or the ability to care for the same patients over a long period of time. This reality of inpatient care makes rapid, effective rapport-building critical to establishing strong physician-patient relationships. In this setting, a simple communication tool that can potentially reduce inpatients’ anxiety could have a meaningful impact on hospitalist-provided care and patient outcomes.

In terms of the magnitude of the effect of empathic responses, the clinical significance of a 1.65-point decrease in the STAI-S score is not precisely clear. A prior review of music therapy in patients with cancer found an average anxiety reduction of approximately 9.5 points on the STAI-S after sensitivity analysis, suggesting a large and clinically meaningful effect.31 However, given that we found a reduction of 1.65 points for each empathic response, and that patients expressed 0 to 14 negative emotions over a median 19-minute encounter, there is an opportunity for hospitalists to achieve a clinically significant decrease in patient anxiety during an admission encounter. The potential to reduce anxiety extends further when we consider that the impact of empathic responses may apply not only to the admission encounter but also to the numerous other patient-clinician interactions over the course of a hospitalization.
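The argument above can be made concrete with a rough calculation: how many empathic responses would be needed to match the approximately 9.5-point reduction reported for music therapy, using the point estimate and each limit of the 95% CI. This sketch assumes that the per-response association is linear, additive within an encounter, and causal, none of which this observational analysis establishes; the function name is hypothetical.

```python
import math


def responses_to_match(target_reduction, per_response_reduction):
    """Smallest whole number of responses whose summed reduction reaches the target.

    Assumes every response contributes the same, independent reduction.
    """
    if per_response_reduction <= 0:
        raise ValueError("per-response reduction must be positive")
    return math.ceil(target_reduction / per_response_reduction)


target = 9.5  # approximate STAI-S reduction reported for music therapy
for per_response in (1.65, 2.82, 0.48):  # point estimate, then the two CI limits
    print(per_response, responses_to_match(target, per_response))
# 1.65 6
# 2.82 4
# 0.48 20
```

Under these assumptions, about 6 empathic responses would match the music-therapy effect at the point estimate, whereas the lower CI limit would require 20, more than the 14 expressions observed in any single encounter.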

A healthy body of communication research supports the associations we found between empathy and patient ratings of communication and of physicians. Families in ICU conferences rate communication more positively when physicians express empathy,12 and a number of studies indicate an association between empathy and patient satisfaction in outpatient settings.8 Given the associations we found with negative ratings, promoting empathic responses to expressions of emotion and, more importantly, stressing avoidance of nonempathic responses may be relevant to efforts to improve patient satisfaction scores on surveys that report “top box” percentages, such as the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS). More notably, evidence indicates that empathy has positive effects beyond satisfaction surveys, such as improved adherence, better diagnostic and clinical outcomes, and stronger patient enablement.8

Hospitalist responses to emotion were associated with patient ratings on some, but not all, of the 7 communication items we assessed. For example, we did not find an association between how physicians responded to patient expressions of negative emotion and patients’ perception that enough time was spent in the visit or the degree to which talking with the doctor met their overall needs. It follows logically, and other research supports, that empathy would influence patient ratings of physician caring and trust,32 whereas other communication factors we were unable to measure (eg, physician body language, tone, and use of jargon, as well as patient health literacy and primary language) may have a stronger association with patient ratings of the other items we assessed.

In considering the clinical application of our results, it is important to note that communication skills, including responding empathically to patient expressions of negative emotion, can be imparted through training in the same way as abdominal examination or electrocardiogram interpretation skills.33-35 However, training hospitalists in communication skills requires time and some financial investment on the part of the physician, their hospital or group, or, ideally, both. Effective training methods, like those for acquiring other skills, involve learner-centered teaching and practicing skills with role-play and feedback.36 Given the importance of a learner-centered approach, training would likely be better received and more effective if it were tailored to the specific needs and patient scenarios commonly encountered by hospitalist physicians. As these programs are developed, it will be important to assess the impact of any training on the patient-reported outcomes we assessed in this observational study, along with clinical outcomes.

Our study has several limitations. First, we were only able to evaluate whether hospitalists verbally responded to patient emotion and thus could not account for nonverbal empathy such as facial expressions, body language, or voice tone. Second, given our patient consent rate of 63%, patients who agreed to participate in the study may have had different opinions than those who declined. Also, hospitalists and patients may have behaved differently as a result of being audio recorded. We only included patients who spoke English, and our patient population was predominantly non-Hispanic white. Patients who spoke other languages or came from other cultural backgrounds may have responded differently. Third, we did not use a single validated scale for patient ratings of communication, and multiple analyses increase our risk of finding statistically significant associations by chance. The skewing of the communication rating items toward high scores may also have led to our results being driven by outliers, although the model we chose for analysis does penalize for this. Furthermore, our sample size was small, leading to wide CIs and the potential to miss true associations because of insufficient power. Our findings warrant replication in larger studies. Fourth, the setting of our study in an academic center may affect generalizability. Finally, the age of our data (collected between 2008 and 2009) is also a limitation. Given the increased focus on communication and patient experience since the initiation of HCAHPS feedback, a similar analysis of empathy and communication now might yield different results.

In conclusion, our results suggest that enhancing hospitalists’ empathic responses to patient expressions of negative emotion could decrease patient anxiety and improve patients’ perceptions of (and thus possibly their relationships with) hospitalists, without sacrificing efficiency. Future work should focus on tailoring and implementing communication skills training programs for hospitalists and evaluating the impact of training on patient outcomes.

Acknowledgments

The authors extend their sincere thanks to the patients and physicians who participated in this study. Dr. Anderson was funded by the National Palliative Care Research Center and the University of California, San Francisco Clinical and Translational Science Institute Career Development Program, National Institutes of Health (NIH) grant number 5 KL2 RR024130-04. Project costs were funded by a grant from the University of California, San Francisco Academic Senate.

Disclosure

All coauthors have seen and agree with the contents of this manuscript. This submission is not under review by any other publication. Wendy Anderson received funding for this project from the National Palliative Care Research Center, the University of California, San Francisco Clinical and Translational Science Institute (NIH grant number 5KL2RR024130-04), and the University of California, San Francisco Academic Senate [From Section 2 of Author Disclosure Form]. Andy Auerbach has a Patient-Centered Outcomes Research Institute research grant in development [From Section 3 of the Author Disclosure Form].

1. Walker FB, Novack DH, Kaiser DL, Knight A, Oblinger P. Anxiety and depression among medical and surgical patients nearing hospital discharge. J Gen Intern Med. 1987;2(2):99-101.

2. Castillo MI, Cooke M, Macfarlane B, Aitken LM. Factors associated with anxiety in critically ill patients: A prospective observational cohort study. Int J Nurs Stud. 2016;60:225-233.

3. Anderson WG, Winters K, Auerbach AD. Patient concerns at hospital admission. Arch Intern Med. 2011;171(15):1399-1400.

4. Kuo Y-F, Sharma G, Freeman JL, Goodwin JS. Growth in the care of older patients by hospitalists in the United States. N Engl J Med. 2009;360(11):1102-1112.

5. Wachter RM, Goldman L. Zero to 50,000 - The 20th Anniversary of the Hospitalist. N Engl J Med. 2016;375(11):1009-1011.

6. Mack JW, Block SD, Nilsson M, et al. Measuring therapeutic alliance between oncologists and patients with advanced cancer: the Human Connection Scale. Cancer. 2009;115(14):3302-3311.

7. Huff NG, Nadig N, Ford DW, Cox CE. Therapeutic Alliance between the Caregivers of Critical Illness Survivors and Intensive Care Unit Clinicians. [published correction appears in Ann Am Thorac Soc. 2016;13(4):576]. Ann Am Thorac Soc. 2015;12(11):1646-1653.

8. Derksen F, Bensing J, Lagro-Janssen A. Effectiveness of empathy in general practice: a systematic review. Br J Gen Pract. 2013;63(606):e76-e84.

9. Dwamena F, Holmes-Rovner M, Gaulden CM, et al. Interventions for providers to promote a patient-centred approach in clinical consultations. Cochrane Database Syst Rev. 2012;12:CD003267.

10. Fogarty LA, Curbow BA, Wingard JR, McDonnell K, Somerfield MR. Can 40 seconds of compassion reduce patient anxiety? J Clin Oncol. 1999;17(1):371-379.

11. Roter DL, Hall JA, Kern DE, Barker LR, Cole KA, Roca RP. Improving physicians’ interviewing skills and reducing patients’ emotional distress. A randomized clinical trial. Arch Intern Med. 1995;155(17):1877-1884.

12. Stapleton RD, Engelberg RA, Wenrich MD, Goss CH, Curtis JR. Clinician statements and family satisfaction with family conferences in the intensive care unit. Crit Care Med. 2006;34(6):1679-1685.

13. Hojat M, Louis DZ, Markham FW, Wender R, Rabinowitz C, Gonnella JS. Physicians’ empathy and clinical outcomes for diabetic patients. Acad Med. 2011;86(3):359-364.

14. Anderson WG, Winters K, Arnold RM, Puntillo KA, White DB, Auerbach AD. Studying physician-patient communication in the acute care setting: the hospitalist rapport study. Patient Educ Couns. 2011;82(2):275-279.

15. Pollak KI, Arnold RM, Jeffreys AS, et al. Oncologist communication about emotion during visits with patients with advanced cancer. J Clin Oncol. 2007;25(36):5748-5752.

16. Suchman AL, Markakis K, Beckman HB, Frankel R. A model of empathic communication in the medical interview. JAMA. 1997;277(8):678-682.

17. Adams K, Cimino JEW, Arnold RM, Anderson WG. Why should I talk about emotion? Communication patterns associated with physician discussion of patient expressions of negative emotion in hospital admission encounters. Patient Educ Couns. 2012;89(1):44-50.

18. Julian LJ. Measures of anxiety: State-Trait Anxiety Inventory (STAI), Beck Anxiety Inventory (BAI), and Hospital Anxiety and Depression Scale-Anxiety (HADS-A). Arthritis Care Res (Hoboken). 2011;63 Suppl 11:S467-S472.

19. Spielberger C, Ritterband L, Sydeman S, Reheiser E, Unger K. Assessment of emotional states and personality traits: measuring psychological vital signs. In: Butcher J, editor. Clinical personality assessment: practical approaches. New York: Oxford University Press; 1995.

20. Safran DG, Kosinski M, Tarlov AR, et al. The Primary Care Assessment Survey: tests of data quality and measurement performance. Med Care. 1998;36(5):728-739.

21. Azoulay E, Pochard F, Kentish-Barnes N, et al. Risk of post-traumatic stress symptoms in family members of intensive care unit patients. Am J Respir Crit Care Med. 2005;171(9):987-994.

22. Lynn J. Perspectives on care at the close of life. Serving patients who may die soon and their families: the role of hospice and other services. JAMA. 2001;285(7):925-932.

23. Kennifer SL, Alexander SC, Pollak KI, et al. Negative emotions in cancer care: do oncologists’ responses depend on severity and type of emotion? Patient Educ Couns. 2009;76(1):51-56.

24. Butow PN, Brown RF, Cogar S, Tattersall MHN, Dunn SM. Oncologists’ reactions to cancer patients’ verbal cues. Psychooncology. 2002;11(1):47-58.

25. Levinson W, Gorawara-Bhat R, Lamb J. A study of patient clues and physician responses in primary care and surgical settings. JAMA. 2000;284(8):1021-1027.

26. Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas. 1960;20(1):37-46.

27. Fulop G. Anxiety disorders in the general hospital setting. Psychiatr Med. 1990;8(3):187-195.

28. Gerson S, Mistry R, Bastani R, et al. Symptoms of depression and anxiety (MHI) following acute medical/surgical hospitalization and post-discharge psychiatric diagnoses (DSM) in 839 geriatric US veterans. Int J Geriatr Psychiatry. 2004;19(12):1155-1167.

29. Kathol RG, Wenzel RP. Natural history of symptoms of depression and anxiety during inpatient treatment on general medicine wards. J Gen Intern Med. 1992;7(3):287-293.

30. Unsal A, Unaldi C, Baytemir C. Anxiety and depression levels of inpatients in the city centre of Kirşehir in Turkey. Int J Nurs Pract. 2011;17(4):411-418.

31. Bradt J, Dileo C, Grocke D, Magill L. Music interventions for improving psychological and physical outcomes in cancer patients. [Update appears in Cochrane Database Syst Rev. 2016;(8):CD006911] Cochrane Database Syst Rev. 2011;(8):CD006911.

32. Kim SS, Kaplowitz S, Johnston MV. The effects of physician empathy on patient satisfaction and compliance. Eval Health Prof. 2004;27(3):237-251.

33. Tulsky JA, Arnold RM, Alexander SC, et al. Enhancing communication between oncologists and patients with a computer-based training program: a randomized trial. Ann Intern Med. 2011;155(9):593-601.

34. Bays AM, Engelberg RA, Back AL, et al. Interprofessional communication skills training for serious illness: evaluation of a small-group, simulated patient intervention. J Palliat Med. 2014;17(2):159-166.

35. Epstein RM, Duberstein PR, Fenton JJ, et al. Effect of a Patient-Centered Communication Intervention on Oncologist-Patient Communication, Quality of Life, and Health Care Utilization in Advanced Cancer: The VOICE Randomized Clinical Trial. JAMA Oncol. 2017;3(1):92-100.

36. Berkhof M, van Rijssen HJ, Schellart AJM, Anema JR, van der Beek AJ. Effective training strategies for teaching communication skills to physicians: an overview of systematic reviews. Patient Educ Couns. 2011;84(2):152-162.

Admission to a hospital can be a stressful event,1,2 and patients report having many concerns at the time of hospital admission.3 Over the last 20 years, the United States has widely adopted the hospitalist model of inpatient care. Although this model has clear benefits, it also has the potential to contribute to patient stress, as hospitalized patients generally lack preexisting relationships with their inpatient physicians.4,5 In this changing hospital environment, defining and promoting effective medical communication has become an essential goal of both individual practitioners and medical centers.

Successful communication and strong therapeutic relationships with physicians support patients’ coping with illness-associated stress6,7 as well as promote adherence to medical treatment plans.8 Empathy serves as an important building block of patient-centered communication and encourages a strong therapeutic alliance.9 Studies from primary care, oncology, and intensive care unit (ICU) settings indicate that physician empathy is associated with decreased emotional distress,10,11 improved ratings of communication,12 and even better medical outcomes.13

Prior work has shown that hospitalists, like other clinicians, underutilize empathy as a tool in their daily interactions with patients.14-16 Our prior qualitative analysis of audio-recorded hospitalist-patient admission encounters indicated that how hospitalists respond to patient expressions of negative emotion influences relationships with patients and alignment around care plans.17 To determine whether empathic communication is associated with patient-reported outcomes in the hospitalist model, we quantitatively analyzed coded admission encounters and survey data to examine the association between hospitalists’ responses to patient expressions of negative emotion (anxiety, sadness, and anger) and patient anxiety and ratings of communication. Given the often-limited time hospitalists have to complete admission encounters, we also examined the association between response to emotion and encounter length.

METHODS

We analyzed data collected as part of an observational study of hospitalist-patient communication during hospital admission encounters14 to assess the association between the way physicians responded to patient expressions of negative emotion and patient anxiety, ratings of communication in the encounter, and encounter length. We collected data between August 2008 and March 2009 on the general medical service at 2 urban hospitals that are part of an academic medical center. Participants were attending hospitalists (not physician trainees), and patients admitted under participating hospitalists’ care who were able to communicate verbally in English and provide informed consent for the study. The institutional review board at the University of California, San Francisco approved the study; physician and patient participants provided written informed consent.

Enrollment and data collection has been described previously.17 Our cohort for this analysis included 76 patients of 27 physicians who completed encounter audio recordings and pre- and postencounter surveys. Following enrollment, patients completed a preencounter survey to collect demographic information and to measure their baseline anxiety via the State Anxiety Scale (STAI-S), which assesses transient anxious mood using 20 items answered on a 4-point scale for a final score range of 20 to 80.10,18,19 We timed and audio-recorded admission encounters. Encounter recordings were obtained solely from patient interactions with attending hospitalists and did not take into account the time patients may have spent with other physicians, including trainees. After the encounter, patients completed postencounter surveys, which included the STAI-S and patients’ ratings of communication during the encounter. To rate communication, patients responded to 7 items on a 0- to 10-point scale that were derived from previous work (Table 1)12,20,21; the anchors were “not at all” and “completely.” To identify patients with serious illness, which we used as a covariate in regression models, we asked physicians on a postencounter survey whether or not they “would be surprised by this patient’s death or admission to the ICU in the next year.”22

We considered physician as a clustering variable in the calculation of robust standard errors for all models. In addition, we included in each model covariates that were associated with the outcome at P ≤ 0.10, including patient gender, patient age, serious illness,22 preencounter anxiety, encounter length, and hospital. We considered P values < 0.05 to be statistically significant. We used Stata SE 13 (StataCorp LLC, College Station, TX) for all statistical analyses.

RESULTS

We analyzed data from admission encounters with 76 patients (consent rate 63%) and 27 hospitalists (consent rate 91%). Their characteristics are shown in Table 3. Median encounter length was 19 minutes (mean 21 minutes, range 3-68). Patients expressed negative emotion in 190 instances across all encounters; median number of expressions per encounter was 1 (range 0-14). Hospitalists responded empathically to 32% (n = 61) of the patient expressions, neutrally to 43% (n = 81), and nonempathically to 25% (n = 48).

The STAI-S was normally distributed. The mean preencounter STAI-S score was 39 (standard deviation [SD] 8.9). Mean postencounter STAI-S score was 38 (SD 10.7). Mean change in anxiety over the course of the encounter, calculated as the postencounter minus preencounter mean was −1.2 (SD 7.6). Table 1 shows summary statistics for the patient ratings of communication items. All items were rated highly. Across the items, between 51% and 78% of patients rated the highest score of 10.

Across the range of frequencies of emotional expressions per encounter in our data set (0-14 expressions), each additional empathic hospitalist response was associated with a 1.65-point decrease in the STAI-S (95% confidence interval [CI], 0.48-2.82). We did not find significant associations between changes in the STAI-S and the number of neutral hospitalist responses (−0.65 per response; 95% CI, −1.67-0.37) or nonempathic hospitalist responses (0.61 per response; 95% CI, −0.88-2.10).

In addition, nonempathic responses were associated with more negative ratings of communication for 5 of the 7 items: ease of understanding information, covering points of interest, the doctor listening, the doctor caring, and trusting the doctor. For example, for the item “I felt this doctor cared about me,” each nonempathic hospitalist response was associated with a more than doubling of negative patient ratings (aRE: 2.3; 95% CI, 1.32-4.16). Neutral physician responses to patient expressions of negative emotion were associated with less negative patient ratings for 2 of the items: covering points of interest (aRE 0.68; 95% CI, 0.51-0.90) and trusting the doctor (aRE: 0.86; 95% CI, 0.75-0.99).

We did not find a statistical association between encounter length and the number of empathic hospitalist responses in the encounter (percent change in encounter length per response [PC]: 1%; 95% CI, −8%-10%) or the number of nonempathic responses (PC: 18%; 95% CI, −2%-42%). We did find a statistically significant association between the number of neutral responses and encounter length (PC: 13%; 95% CI, 3%-24%), corresponding to 2.5 minutes of additional encounter time per neutral response for the median encounter length of 19 minutes.

DISCUSSION

Our study set out to measure how hospitalists responded to expressions of negative emotion during admission encounters with patients and how those responses correlated with patient anxiety, ratings of communication, and encounter length. We found that empathic responses were associated with diminishing patient anxiety after the visit, as well as with better ratings of several domains of hospitalist communication. Moreover, nonempathic responses to negative emotion were associated with more strongly negative ratings of hospitalist communication. Finally, while clinicians may worry that encouraging patients to speak further about emotion will result in excessive visit lengths, we did not find a statistical association between empathic responses and encounter duration. To our knowledge, this is the first study to indicate an association between empathy and patient anxiety and communication ratings within the hospitalist model, which is rapidly becoming the predominant model for providing inpatient care in the United States.4,5

As in oncologic care, anxiety is an emotion that clinicians commonly confront when meeting admitted medical patients for the first time. Studies show not only that patient anxiety levels remain high throughout a hospital course but also that patients who experience higher levels of anxiety tend to stay longer in the hospital.1,2,27-30 Unlike oncologic care or other therapy provided in an outpatient setting, however, the hospitalist model does not facilitate “continuity” of care, or the ability to care for the same patients over a long period of time. This reality of inpatient care makes rapid, effective rapport-building critical to establishing strong physician-patient relationships. In this setting, a simple communication tool that may reduce inpatients’ anxiety could have a meaningful impact on hospitalist-provided care and patient outcomes.

In terms of the magnitude of the effect of empathic responses, the clinical significance of a 1.65-point decrease in the STAI-S anxiety score is not precisely clear. A prior study that examined the effect of music therapy on anxiety levels in patients with cancer found an average anxiety reduction of approximately 9.5 units on the STAI-S after sensitivity analysis, suggesting a large, clinically meaningful effect size.31 However, given that we found a reduction of 1.65 points for each empathic response, and that patients expressed between 0 and 14 negative emotions over a median 19-minute encounter, there is an opportunity for hospitalists to achieve a clinically significant decrease in patient anxiety during an admission encounter. The potential to reduce anxiety extends further when we consider that the impact of an empathic response may apply not only to the admission encounter but also to the many other patient-clinician interactions over the course of a hospitalization.
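
To make the comparison with the music therapy study concrete, the sketch below counts how many empathic responses would be needed for the cumulative decrease to reach 9.5 STAI-S points. It assumes, for illustration only, that the per-response effect is constant and additive across responses. The study did not test that assumption, and the helper name is hypothetical.

```python
import math

PER_RESPONSE_DECREASE = 1.65  # STAI-S points per empathic response (Results)
CI_95 = (0.48, 2.82)          # 95% CI for the per-response decrease
REFERENCE_REDUCTION = 9.5     # music-therapy reduction cited for comparison


def responses_needed(target: float, per_response: float) -> int:
    """Smallest whole number of responses whose summed effect reaches target,
    ASSUMING a constant, additive per-response effect (an extrapolation)."""
    return math.ceil(target / per_response)


point = responses_needed(REFERENCE_REDUCTION, PER_RESPONSE_DECREASE)
fewest = responses_needed(REFERENCE_REDUCTION, CI_95[1])  # largest effect
most = responses_needed(REFERENCE_REDUCTION, CI_95[0])    # smallest effect
print(f"point estimate: {point}; 95% CI bounds: {fewest} to {most}")
```

Under this assumption, the point estimate implies about 6 empathic responses, which falls within the observed range of 0 to 14 expressions per encounter. The CI bounds imply anywhere from 4 to 20 responses, and the upper figure exceeds the maximum number of expressions observed, so the extrapolation is uncertain.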

A substantial body of communication research supports the associations we found between empathy and patient ratings of communication and of physicians. Families in ICU conferences rate communication more positively when physicians express empathy,12 and a number of studies indicate an association between empathy and patient satisfaction in outpatient settings.8 Given the associations we found with negative ratings on the items in our study, promoting empathic responses to expressions of emotion and, more importantly, stressing avoidance of nonempathic responses may help improve patient satisfaction scores on surveys that report “top box” percentages, such as the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS). More importantly, evidence indicates that empathy has positive effects beyond satisfaction surveys, including improved adherence, better diagnostic and clinical outcomes, and greater patient enablement.8

Not all hospitalist responses to emotion were associated with patient ratings across the 7 communication items we assessed. For example, we did not find an association between how physicians responded to patient expressions of negative emotion and patient perception that enough time was spent in the visit or the degree to which talking with the doctor met the patient’s overall needs. It follows logically, and other research supports, that empathy would influence patient ratings of physician caring and trust,32 whereas other communication factors we were unable to measure (eg, physician body language, tone, and use of jargon, as well as patient health literacy and primary language) may be more strongly associated with patient ratings of the other items we assessed.

In considering the clinical application of our results, it is important to note that communication skills, including responding empathically to patient expressions of negative emotion, can be taught in the same way as abdominal examination or electrocardiogram interpretation skills.33-35 However, training hospitalists in communication skills requires time and some financial investment on the part of the physician, the hospital or group, or, ideally, both. As with other skills, effective training methods involve learner-centered teaching and practice with role-play and feedback.36 Given the importance of a learner-centered approach, training would likely be better received and more effective if it were tailored to the specific needs and patient scenarios that hospitalists commonly encounter. As these programs are developed, it will be important to assess the impact of any training on the patient-reported outcomes we assessed in this observational study, as well as on clinical outcomes.

Our study has several limitations. First, we were only able to evaluate whether hospitalists verbally responded to patient emotion and were thus not able to account for nonverbal empathy such as facial expressions, body language, or voice tone. Second, given our patient consent rate of 63%, patients who agreed to participate in the study may have had different opinions than those who declined to participate. Also, hospitalists and patients may have behaved differently as a result of being audio recorded. We only included patients who spoke English, and our patient population was predominantly non-Hispanic white. Patients who spoke other languages or came from other cultural backgrounds may have had different responses. Third, we did not use a single validated scale for patient ratings of communication, and multiple analyses increase our risk of finding statistically significant associations by chance. The skew of the communication ratings toward high scores may also have allowed outliers to drive our results, although the model we chose for analysis does penalize for this. Furthermore, our sample size was small, resulting in wide CIs and the possibility that some true associations went undetected because of insufficient power. Our findings warrant replication in larger studies. Fourth, the setting of our study in an academic center may limit generalizability. Finally, the age of our data (collected between 2008 and 2009) is also a limitation. Given the increased focus on communication and patient experience since the introduction of HCAHPS feedback, a similar analysis of empathy and communication methods today might yield different results.

In conclusion, our results suggest that enhancing hospitalists’ empathic responses to patient expressions of negative emotion could decrease patient anxiety and improve patients’ perceptions of (and thus possibly their relationships with) hospitalists, without sacrificing efficiency. Future work should focus on tailoring and implementing communication skills training programs for hospitalists and evaluating the impact of training on patient outcomes.

Acknowledgments

The authors extend their sincere thanks to the patients and physicians who participated in this study. Dr. Anderson was funded by the National Palliative Care Research Center and the University of California, San Francisco Clinical and Translational Science Institute Career Development Program, National Institutes of Health (NIH) grant number 5 KL2 RR024130-04. Project costs were funded by a grant from the University of California, San Francisco Academic Senate.

Disclosure

All coauthors have seen and agree with the contents of this manuscript. This submission is not under review by any other publication. Wendy Anderson received funding for this project from the National Palliative Care Research Center, University of California, San Francisco Clinical and Translational Science Institute (NIH grant number 5KL2RR024130-04), and the University of California, San Francisco Academic Senate [From Section 2 of Author Disclosure Form]. Andy Auerbach has a Patient-Centered Outcomes Research Institute research grant in development [From Section 3 of the Author Disclosure Form].

1. Walker FB, Novack DH, Kaiser DL, Knight A, Oblinger P. Anxiety and depression among medical and surgical patients nearing hospital discharge. J Gen Intern Med. 1987;2(2):99-101.

2. Castillo MI, Cooke M, Macfarlane B, Aitken LM. Factors associated with anxiety in critically ill patients: A prospective observational cohort study. Int J Nurs Stud. 2016;60:225-233.

3. Anderson WG, Winters K, Auerbach AD. Patient concerns at hospital admission. Arch Intern Med. 2011;171(15):1399-1400.

4. Kuo Y-F, Sharma G, Freeman JL, Goodwin JS. Growth in the care of older patients by hospitalists in the United States. N Engl J Med. 2009;360(11):1102-1112.

5. Wachter RM, Goldman L. Zero to 50,000 - The 20th Anniversary of the Hospitalist. N Engl J Med. 2016;375(11):1009-1011.

6. Mack JW, Block SD, Nilsson M, et al. Measuring therapeutic alliance between oncologists and patients with advanced cancer: the Human Connection Scale. Cancer. 2009;115(14):3302-3311.

7. Huff NG, Nadig N, Ford DW, Cox CE. Therapeutic Alliance between the Caregivers of Critical Illness Survivors and Intensive Care Unit Clinicians. [published correction appears in Ann Am Thorac Soc. 2016;13(4):576]. Ann Am Thorac Soc. 2015;12(11):1646-1653.

8. Derksen F, Bensing J, Lagro-Janssen A. Effectiveness of empathy in general practice: a systematic review. Br J Gen Pract. 2013;63(606):e76-e84.

9. Dwamena F, Holmes-Rovner M, Gaulden CM, et al. Interventions for providers to promote a patient-centred approach in clinical consultations. Cochrane Database Syst Rev. 2012;12:CD003267.

10. Fogarty LA, Curbow BA, Wingard JR, McDonnell K, Somerfield MR. Can 40 seconds of compassion reduce patient anxiety? J Clin Oncol. 1999;17(1):371-379.

11. Roter DL, Hall JA, Kern DE, Barker LR, Cole KA, Roca RP. Improving physicians’ interviewing skills and reducing patients’ emotional distress. A randomized clinical trial. Arch Intern Med. 1995;155(17):1877-1884.

12. Stapleton RD, Engelberg RA, Wenrich MD, Goss CH, Curtis JR. Clinician statements and family satisfaction with family conferences in the intensive care unit. Crit Care Med. 2006;34(6):1679-1685.

13. Hojat M, Louis DZ, Markham FW, Wender R, Rabinowitz C, Gonnella JS. Physicians’ empathy and clinical outcomes for diabetic patients. Acad Med. 2011;86(3):359-364.

14. Anderson WG, Winters K, Arnold RM, Puntillo KA, White DB, Auerbach AD. Studying physician-patient communication in the acute care setting: the hospitalist rapport study. Patient Educ Couns. 2011;82(2):275-279.

15. Pollak KI, Arnold RM, Jeffreys AS, et al. Oncologist communication about emotion during visits with patients with advanced cancer. J Clin Oncol. 2007;25(36):5748-5752.

16. Suchman AL, Markakis K, Beckman HB, Frankel R. A model of empathic communication in the medical interview. JAMA. 1997;277(8):678-682.

17. Adams K, Cimino JEW, Arnold RM, Anderson WG. Why should I talk about emotion? Communication patterns associated with physician discussion of patient expressions of negative emotion in hospital admission encounters. Patient Educ Couns. 2012;89(1):44-50.

18. Julian LJ. Measures of anxiety: State-Trait Anxiety Inventory (STAI), Beck Anxiety Inventory (BAI), and Hospital Anxiety and Depression Scale-Anxiety (HADS-A). Arthritis Care Res (Hoboken). 2011;63 Suppl 11:S467-S472.

19. Spielberger C, Ritterband L, Sydeman S, Reheiser E, Unger K. Assessment of emotional states and personality traits: measuring psychological vital signs. In: Butcher J, editor. Clinical personality assessment: practical approaches. New York: Oxford University Press; 1995.

20. Safran DG, Kosinski M, Tarlov AR, et al. The Primary Care Assessment Survey: tests of data quality and measurement performance. Med Care. 1998;36(5):728-739.

21. Azoulay E, Pochard F, Kentish-Barnes N, et al. Risk of post-traumatic stress symptoms in family members of intensive care unit patients. Am J Respir Crit Care Med. 2005;171(9):987-994.

22. Lynn J. Perspectives on care at the close of life. Serving patients who may die soon and their families: the role of hospice and other services. JAMA. 2001;285(7):925-932.

23. Kennifer SL, Alexander SC, Pollak KI, et al. Negative emotions in cancer care: do oncologists’ responses depend on severity and type of emotion? Patient Educ Couns. 2009;76(1):51-56.

24. Butow PN, Brown RF, Cogar S, Tattersall MHN, Dunn SM. Oncologists’ reactions to cancer patients’ verbal cues. Psychooncology. 2002;11(1):47-58.

25. Levinson W, Gorawara-Bhat R, Lamb J. A study of patient clues and physician responses in primary care and surgical settings. JAMA. 2000;284(8):1021-1027.

26. Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas. 1960;20(1):37-46.

27. Fulop G. Anxiety disorders in the general hospital setting. Psychiatr Med. 1990;8(3):187-195.

28. Gerson S, Mistry R, Bastani R, et al. Symptoms of depression and anxiety (MHI) following acute medical/surgical hospitalization and post-discharge psychiatric diagnoses (DSM) in 839 geriatric US veterans. Int J Geriatr Psychiatry. 2004;19(12):1155-1167.

29. Kathol RG, Wenzel RP. Natural history of symptoms of depression and anxiety during inpatient treatment on general medicine wards. J Gen Intern Med. 1992;7(3):287-293.

30. Unsal A, Unaldi C, Baytemir C. Anxiety and depression levels of inpatients in the city centre of Kirşehir in Turkey. Int J Nurs Pract. 2011;17(4):411-418.

31. Bradt J, Dileo C, Grocke D, Magill L. Music interventions for improving psychological and physical outcomes in cancer patients. [Update appears in Cochrane Database Syst Rev. 2016;(8):CD006911]. Cochrane Database Syst Rev. 2011;(8):CD006911.

32. Kim SS, Kaplowitz S, Johnston MV. The effects of physician empathy on patient satisfaction and compliance. Eval Health Prof. 2004;27(3):237-251.

33. Tulsky JA, Arnold RM, Alexander SC, et al. Enhancing communication between oncologists and patients with a computer-based training program: a randomized trial. Ann Intern Med. 2011;155(9):593-601.

34. Bays AM, Engelberg RA, Back AL, et al. Interprofessional communication skills training for serious illness: evaluation of a small-group, simulated patient intervention. J Palliat Med. 2014;17(2):159-166.

35. Epstein RM, Duberstein PR, Fenton JJ, et al. Effect of a Patient-Centered Communication Intervention on Oncologist-Patient Communication, Quality of Life, and Health Care Utilization in Advanced Cancer: The VOICE Randomized Clinical Trial. JAMA Oncol. 2017;3(1):92-100.

36. Berkhof M, van Rijssen HJ, Schellart AJM, Anema JR, van der Beek AJ. Effective training strategies for teaching communication skills to physicians: an overview of systematic reviews. Patient Educ Couns. 2011;84(2):152-162.

© 2017 Society of Hospital Medicine

Automating venous thromboembolism risk calculation using electronic health record data upon hospital admission: The automated Padua Prediction Score

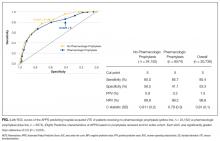

Hospital-acquired venous thromboembolism (VTE) continues to be a critical quality challenge for U.S. hospitals,1 and high-risk patients are often not adequately prophylaxed. Reported use of VTE prophylaxis (VTEP) ranges widely across studies, from 26% to 85% of patients, and patient outcomes and care expenditures vary just as widely.2-6 The 9th edition of the American College of Chest Physicians (CHEST) guidelines7 recommends the Padua Prediction Score (PPS) for identifying individual patients who may be at high risk for VTE and could benefit from thromboprophylaxis. Using the manually calculated PPS to select patients for thromboprophylaxis has been shown to help decrease 30-day and 90-day mortality associated with VTE events after hospitalization on medical services.8 However, the PPS requires time-consuming manual calculation by a provider who may be focused on more immediate aspects of patient care and who has several other risk scores competing for attention, which may decrease its use.

Other risk scores that rely only on discrete scalar data, such as scores that use vital signs and laboratory results for early recognition of sepsis, have been successfully automated and implemented within electronic health records (EHRs).9-11 Automating scores such as the PPS, which require input of diagnoses, recent medical events, and current clinical status, remains difficult.12 Data representing these characteristics are more prone to error and harder to translate clearly into a single data field than discrete elements like heart rate, which can affect the validity of the calculated result.13 To improve use of guideline-based VTE risk assessment and decrease physician burden, we developed an algorithm called the Automated Padua Prediction Score (APPS) that automatically calculates the PPS using only EHR data available from prior encounters and the first 4 hours of admission, a timeframe similar to when admitting providers would be entering orders. Our goal was to assess whether an automatically calculated version of the PPS, a score that depends on criteria more complex than vital signs and labs, would accurately assess risk for hospital-acquired VTE when compared with traditional manual calculation of the PPS by a provider.
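The data window described above (prior encounters plus the first 4 hours of the index admission) can be illustrated with a small filter. This is a minimal sketch, not the authors' implementation: the `Observation` record shape, the function name `apps_input_data`, and the choice to treat the 4-hour window as inclusive at both ends are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


# Hypothetical record shape for one timestamped structured EHR data element.
@dataclass(frozen=True)
class Observation:
    encounter_id: str
    recorded_at: datetime
    value: str


# First 4 hours of the index admission, as described in the text.
INITIAL_WINDOW = timedelta(hours=4)


def apps_input_data(observations, index_encounter_id, admit_time):
    """Keep prior-encounter data recorded before admission, plus index-admission
    data recorded within the first 4 hours (assumed inclusive at both ends)."""
    window_end = admit_time + INITIAL_WINDOW
    kept = []
    for obs in observations:
        if obs.encounter_id != index_encounter_id:
            # Data from prior encounters: only what existed before this admission.
            if obs.recorded_at < admit_time:
                kept.append(obs)
        elif admit_time <= obs.recorded_at <= window_end:
            # Data from the index admission: only the initial window.
            kept.append(obs)
    return kept


admit = datetime(2013, 1, 1, 8, 0)
observations = [
    Observation("prior-001", datetime(2012, 6, 1, 9, 0), "prior claim"),
    Observation("index-002", datetime(2013, 1, 1, 10, 30), "admission lab"),
    Observation("index-002", datetime(2013, 1, 1, 14, 0), "later order"),
]
print([o.value for o in apps_input_data(observations, "index-002", admit)])
# → ['prior claim', 'admission lab']
```

Restricting inputs this way keeps the automated score limited to information that would plausibly be available when admitting orders are written, which is the comparison point the study describes.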

METHODS

Site Description and Ethics

The study was conducted at University of California, San Francisco Medical Center, a 790-bed academic hospital; its Institutional Review Board approved the study and collection of data via chart review. Handling of patient information complied with the Health Insurance Portability and Accountability Act of 1996.

Patient Inclusion

Adult patients admitted to a medical or surgical service between July 1, 2012, and April 1, 2014, were included in the study if they were candidates for VTEP, defined as having a length of stay (LOS) greater than 2 days, not being on hospice care, not being pregnant at admission, having no VTE diagnosis present on admission, having no known contraindication to prophylaxis (eg, gastrointestinal bleed), and not receiving therapeutic doses of warfarin, low molecular weight heparins, heparin, or novel anticoagulants prior to admission.
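The inclusion criteria can be expressed as a single predicate over an admission record. This is a minimal sketch under stated assumptions: the `Admission` fields and `is_vtep_candidate` are hypothetical names, "adult" is assumed to mean 18 years or older, and both ends of the study date range are assumed to be inclusive; the text does not specify either detail.

```python
from dataclasses import dataclass
from datetime import date

STUDY_START = date(2012, 7, 1)
STUDY_END = date(2014, 4, 1)  # assumed inclusive


# Hypothetical admission record; one boolean per exclusion criterion in the text.
@dataclass
class Admission:
    admit_date: date
    age_years: int
    service: str  # "medical" or "surgical"
    los_days: float
    on_hospice: bool
    pregnant_at_admission: bool
    vte_present_on_admission: bool
    prophylaxis_contraindication: bool  # e.g. gastrointestinal bleed
    therapeutic_anticoagulant_before_admission: bool  # warfarin, LMWH, heparin, novel agents


def is_vtep_candidate(a: Admission) -> bool:
    """True if the admission meets every inclusion criterion listed in the text."""
    return (
        STUDY_START <= a.admit_date <= STUDY_END
        and a.age_years >= 18  # assumption: "adult" means 18 or older
        and a.service in {"medical", "surgical"}
        and a.los_days > 2
        and not a.on_hospice
        and not a.pregnant_at_admission
        and not a.vte_present_on_admission
        and not a.prophylaxis_contraindication
        and not a.therapeutic_anticoagulant_before_admission
    )


example = Admission(date(2013, 3, 15), 64, "medical", 4.0, False, False, False, False, False)
print(is_vtep_candidate(example))  # → True
```

Note that an LOS of exactly 2 days fails the "greater than 2 days" test, so such patients are excluded.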

Data Sources

Clinical variables were extracted from the EHR’s enterprise data warehouse (EDW) by SQL Server query (Microsoft, Redmond, Washington) and deposited in a secure database. Chart review was conducted by a trained researcher (Mr. Jacolbia) using the EHR and a standardized protocol. Findings were recorded using REDCap (REDCap Consortium, Vanderbilt University, Nashville, Tennessee). The specific ICD-9, procedure, and lab codes used to determine each criterion of APPS are available in the Appendix.

Creation of the Automated Padua Prediction Score (APPS)

We developed APPS from the original 11 criteria that make up the Padua Prediction Score: active cancer, previous VTE (excluding superficial vein thrombosis), reduced mobility, known thrombophilic condition, recent (1 month or less) trauma and/or surgery, age 70 years or older, heart and/or respiratory failure, acute myocardial infarction and/or ischemic stroke, acute infection and/or rheumatologic disorder, body mass index (BMI) 30 or higher, and ongoing hormonal treatment.13 APPS uses the same scoring methodology as the PPS: each criterion is weighted from 1 to 3 points, and the weights of positive criteria are summed, for a maximum score of 20, which represents the highest risk of VTE. To automate the score calculation from data routinely available in the EHR, APPS checks preselected structured data fields for specific values within laboratory results, orders, nursing flowsheets, and claims. Claims data included all ICD-9 and procedure codes used for billing purposes. If any of the predetermined data elements for a criterion is found, that criterion is considered positive; otherwise, it is scored as negative. The creators of the PPS were consulted when these data queries were generated, to replicate the original standards for deeming a criterion positive. The automated calculation did not require natural language processing.
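The scoring rule above can be sketched as a weighted sum over criteria, where each criterion is positive if any of its predetermined data elements is found. The weights below are those of the published PPS (they sum to the stated maximum of 20), and the threshold of 4 or more for high risk is the cutoff used by the original PPS; neither value is stated in this section. The trigger labels such as `"dx:cancer_placeholder"` are hypothetical stand-ins for the ICD-9, procedure, and lab codes listed in the study's Appendix, and `apps_score` is an illustrative name, not the authors' code.

```python
# Published PPS weights; APPS uses the same scoring methodology.
PADUA_WEIGHTS = {
    "active_cancer": 3,
    "previous_vte": 3,
    "reduced_mobility": 3,
    "thrombophilic_condition": 3,
    "recent_trauma_or_surgery": 2,
    "age_70_or_older": 1,
    "heart_or_respiratory_failure": 1,
    "acute_mi_or_ischemic_stroke": 1,
    "acute_infection_or_rheumatologic_disorder": 1,
    "bmi_30_or_higher": 1,
    "ongoing_hormonal_treatment": 1,
}
HIGH_RISK_THRESHOLD = 4  # original PPS cutoff for high risk


def criterion_positive(found_elements: set, trigger_elements: set) -> bool:
    """Positive if any predetermined structured data element was found."""
    return not found_elements.isdisjoint(trigger_elements)


def apps_score(found_elements: set, criterion_triggers: dict) -> int:
    """Sum the weights of every criterion with at least one matching element."""
    return sum(
        weight
        for criterion, weight in PADUA_WEIGHTS.items()
        if criterion_positive(found_elements, criterion_triggers.get(criterion, set()))
    )


# Hypothetical trigger labels; the real code lists are in the study's Appendix.
triggers = {
    "active_cancer": {"dx:cancer_placeholder"},
    "age_70_or_older": {"demo:age_ge_70"},
    "bmi_30_or_higher": {"flowsheet:bmi_ge_30"},
}
found = {"dx:cancer_placeholder", "demo:age_ge_70"}
score = apps_score(found, triggers)
print(score, score >= HIGH_RISK_THRESHOLD)  # → 4 True
```

Because a criterion with no matching element simply contributes 0, missing data are scored as negative, mirroring the "otherwise, it is scored as negative" rule in the text.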

Characterization of Study Population

We recorded patient demographics (age, race, gender, BMI), LOS, and rate of hospital-acquired VTE. We hypothesized that the risk profile of patients who received pharmacologic prophylaxis would be inherently different from that of patients who did not. To evaluate APPS within this heterogeneous population, we therefore divided patients into 2 cohorts according to the VTE prophylaxis they received: pharmacologic vs. no pharmacologic prophylaxis. Patients with a completed order or medication administration record for a pharmacologic VTEP agent on the institution’s approved formulary were considered to have received pharmacologic prophylaxis. Patients with only a completed order for mechanical prophylaxis (sequential compression devices) or no evidence of any form of VTEP were considered to have received no pharmacologic prophylaxis. Patients with evidence of both pharmacologic and mechanical prophylaxis were placed in the pharmacologic prophylaxis group. To verify that automated assignment of prophylaxis group was accurate, we reviewed 40 randomly chosen charts, a sample size with which prior researchers had achieved sensitivity and specificity greater than 90%.14
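The cohort assignment rule and the chart-review check can be sketched as follows. This is a minimal illustration under assumptions: the `ProphylaxisRecord` shape, the function names, and the formulary set (two common agents chosen only as examples) are hypothetical, since the institution's approved list is not given in the text. Sensitivity and specificity are computed in the standard way, treating "pharmacologic" as the positive class and chart review as the reference.

```python
from dataclasses import dataclass

# Illustrative formulary; the institution's actual approved list is not stated.
FORMULARY_VTEP_DRUGS = {"heparin", "enoxaparin"}


@dataclass(frozen=True)
class ProphylaxisRecord:
    source: str  # "order" or "mar" (medication administration record)
    item: str  # drug name, or "scd" for sequential compression devices
    completed: bool


def prophylaxis_group(records) -> str:
    """Pharmacologic evidence wins, even when mechanical prophylaxis is also present."""
    for r in records:
        if r.completed and r.source in {"order", "mar"} and r.item in FORMULARY_VTEP_DRUGS:
            return "pharmacologic"
    return "no_pharmacologic"  # mechanical only, or no VTEP at all


def sensitivity_specificity(pairs):
    """pairs: (automated_is_pharmacologic, chart_is_pharmacologic) booleans."""
    tp = sum(1 for auto, chart in pairs if auto and chart)
    fn = sum(1 for auto, chart in pairs if not auto and chart)
    tn = sum(1 for auto, chart in pairs if not auto and not chart)
    fp = sum(1 for auto, chart in pairs if auto and not chart)
    return tp / (tp + fn), tn / (tn + fp)


both = [ProphylaxisRecord("order", "scd", True), ProphylaxisRecord("mar", "enoxaparin", True)]
print(prophylaxis_group(both))  # → pharmacologic
print(prophylaxis_group([ProphylaxisRecord("order", "scd", True)]))  # → no_pharmacologic
```

In the study, `pairs` would hold the 40 reviewed charts; the function returns the two proportions that the text compares against the 90% benchmark.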

The primary outcome, hospital-acquired VTE, was defined as an ICD-9 code for VTE (specific codes are listed in the Appendix) paired with a “present on admission = no” flag in that encounter’s hospital billing data, abstracted from the EDW. A previous study at this institution used the same methodology and found that 212 of 226 (94%) patients with a VTE ICD-9 code on a claim had evidence of a hospital-acquired VTE event on chart review.14 Chart review was also performed to ensure that the primary outcome of newly discovered hospital-acquired VTE was distinguished from chronic VTE or history of VTE. In principle, ICD-9 codes and other data elements treat chronic VTE, history of VTE, and hospital-acquired VTE as distinct diagnoses, but it was unclear whether this held true in our dataset. For 75 randomly selected cases of presumed hospital-acquired VTE, charts were reviewed for evidence confirming that the VTE was newly found during that encounter.
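The outcome definition pairs a diagnosis code with the billing present-on-admission flag. The sketch below assumes the "no" flag is stored as `"N"`, the value used by the standard billing POA indicator; the code set is a placeholder, since the study's actual VTE ICD-9 codes appear only in its Appendix. It also shows the arithmetic behind the 94% figure from the prior validation study.

```python
from dataclasses import dataclass

# Placeholder codes; the study's actual VTE ICD-9 codes are in its Appendix.
VTE_ICD9_CODES = {"VTE_CODE_1", "VTE_CODE_2"}


@dataclass(frozen=True)
class ClaimDiagnosis:
    icd9: str
    present_on_admission: str  # billing POA indicator; "N" assumed to mean "no"


def hospital_acquired_vte(diagnoses) -> bool:
    """True if any VTE code on the encounter's claim is flagged as not present on admission."""
    return any(d.icd9 in VTE_ICD9_CODES and d.present_on_admission == "N" for d in diagnoses)


def positive_predictive_value(confirmed: int, flagged: int) -> float:
    """Share of code-flagged cases confirmed on chart review."""
    return confirmed / flagged


print(hospital_acquired_vte([ClaimDiagnosis("VTE_CODE_1", "N")]))  # → True
print(hospital_acquired_vte([ClaimDiagnosis("VTE_CODE_1", "Y")]))  # → False
print(f"{positive_predictive_value(212, 226):.0%}")  # → 94%
```

A VTE code with a "yes" POA flag is not counted, which is how this definition separates hospital-acquired events from VTE already present at admission.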

Validation of APPS through Comparison to Manual Calculation of the Original PPS