Journal of Hospital Medicine – Nov. 2017

BACKGROUND AND OBJECTIVES: Adherence to American Academy of Pediatrics (AAP) bronchiolitis clinical practice guideline recommendations improved significantly through the AAP’s multi-institutional collaborative, the Bronchiolitis Quality Improvement Project (BQIP). We assessed sustainability of improvements at participating institutions for 1 year following completion of the collaborative.

METHODS: Twenty-one multidisciplinary hospital-based teams provided monthly data for key inpatient bronchiolitis measures during baseline and intervention bronchiolitis seasons. Nine sites provided data in the season following completion of the collaborative. Encounters included children younger than 24 months who were hospitalized for bronchiolitis without comorbid chronic illness, prematurity, or intensive care. Changes between baseline-, intervention-, and sustainability-season data were assessed using generalized linear mixed-effects models with site-specific random effects. Differences between hospital characteristics, baseline performance, and initial improvement among sites that did and did not participate in the sustainability season were compared.

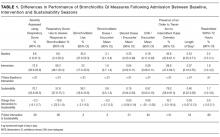

RESULTS: A total of 2,275 discharges were reviewed, comprising 995 baseline, 877 intervention, and 403 sustainability-season encounters. Improvements in all key bronchiolitis quality measures achieved during the intervention season were maintained during the sustainability season, and orders for intermittent pulse oximetry increased from 40.6% (95% confidence interval, 22.8-61.1) to 79.2% (95% CI, 58.0-91.3). Sites that did and did not participate in the sustainability season had similar characteristics.

DISCUSSION: BQIP participating sites maintained improvements in key bronchiolitis quality measures for 1 year following the project’s completion. This approach, which provided an evidence-based best-practice toolkit while building the quality-improvement capacity of local interdisciplinary teams, may support performance gains that persist beyond the active phase of the collaborative.

Also in JHM this month

The effect of an inpatient smoking cessation treatment program on hospital readmissions and length of stay
AUTHORS: Eline M. van den Broek-Altenburg, MS, MA; Adam J. Atherly, PhD

Treatment trends and outcomes in healthcare-associated pneumonia
AUTHORS: Sarah Haessler, MD; Tara Lagu, MD, MPH; Peter K. Lindenauer, MD, MSc; Daniel J. Skiest, MD; Aruna Priya, MA, MSc; Penelope S. Pekow, PhD; Marya D. Zilberberg, MD, MPH; Thomas L. Higgins, MD, MBA; Michael B. Rothberg, MD, MPH

What’s the purpose of rounds? A qualitative study examining the perceptions of faculty and students
AUTHORS: Oliver Hulland; Jeanne Farnan, MD, MHPE; Raphael Rabinowitz; Lisa Kearns, MD, MS; Michele Long, MD; Bradley Monash, MD; Priti Bhansali, MD; H. Barrett Fromme, MD, MHPE

Association between anemia and fatigue in hospitalized patients: does the measure of anemia matter?
AUTHORS: Micah T. Prochaska, MD, MS; Richard Newcomb, BA; Graham Block, BA; Brian Park, BA; David O. Meltzer, MD, PhD

Helping seniors plan for posthospital discharge needs before a hospitalization occurs: Results from the randomized control trial of planyourlifespan.org
AUTHORS: Lee A. Lindquist, MD, MPH, MBA; Vanessa Ramirez-Zohfeld, MPH; Priya D. Sunkara, MA; Chris Forcucci, RN, BSN; Dianne S. Campbell, BS; Phyllis Mitzen, MA; Jody D. Ciolino, PhD; Gayle Kricke, MSW; Anne Seltzer, LSW; Ana V. Ramirez, BA; Kenzie A. Cameron, PhD, MPH

Sustainability in the AAP Bronchiolitis Quality Improvement Project

Acute viral bronchiolitis is the most common cause of hospitalization for children younger than 1 year of age.1 Overuse of ineffective therapies has persisted despite the existence of the evidence-based American Academy of Pediatrics (AAP) clinical practice guideline (CPG), which recommends primarily supportive care.2-8 Adherence to the AAP CPG recommendations for management of bronchiolitis improved significantly through the AAP’s Bronchiolitis Quality Improvement Project (BQIP), a 12-month, multiinstitutional collaborative of community and free-standing children’s hospitals.9 This subsequent study investigates whether these improvements were sustained after completion of the formal 12-month project.

Published multiinstitutional bronchiolitis quality improvement (QI) work is limited to 1 study5 that describes the results of a single intervention season at academic medical centers. Multiyear bronchiolitis QI projects are limited to single-center studies, and results have been mixed.5,6,8,10-13 One study11 observed continued improvement in bronchodilator use in subsequent seasons, whereas a second study10 observed a return to baseline bronchodilator use in the following season. Mittal6 observed inconsistent improvements in key bronchiolitis measures during postintervention seasons.

Our specific aim was to assess the sustainability of improvements in bronchiolitis management at participating institutions 1 year following completion of the AAP BQIP collaborative.9 Because no studies demonstrate the most effective way to support long-term improvement through a QI collaborative, we hypothesized that the initial collaborative activities, which were designed to build the capacity of local interdisciplinary teams while providing standardized evidence-based care pathways, would lead to performance in the subsequent season at levels similar to or better than those observed during the active phase of the collaborative, without additional project interventions.

METHODS

Study Design and Setting

This was a follow-up study of the AAP Quality Improvement Innovation Networks project entitled “A Quality Collaborative for Improving Hospital Compliance with the AAP Bronchiolitis Guideline” (BQIP).9 The AAP Institutional Review Board approved this project.

Twenty-one multidisciplinary, hospital-based teams participated in the BQIP collaborative and provided monthly data during the January through March bronchiolitis season. Teams submitted 2013 baseline data and 2014 intervention data. Nine sites provided 2015 sustainability data following the completion of the collaborative.

Participants

Hospital encounters with a primary diagnosis of acute viral bronchiolitis were eligible for inclusion among patients from 1 month to 2 years of age. Encounters were excluded for prematurity (<35 weeks gestational age), congenital heart disease, bronchopulmonary dysplasia, genetic, congenital or neuromuscular abnormalities, and pediatric intensive-care admission.
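The inclusion and exclusion criteria above can be expressed as a simple screening filter. The Python sketch below is illustrative only: the `Encounter` record and its field names are hypothetical and do not reproduce the BQIP chart-abstraction form, and the upper age bound is assumed to be strict (younger than 24 months), following the wording of the abstract.

```python
from dataclasses import dataclass


@dataclass
class Encounter:
    # Hypothetical chart-review record; all field names are assumptions.
    age_months: float
    primary_dx_bronchiolitis: bool
    gestational_age_weeks: float
    congenital_heart_disease: bool = False
    bronchopulmonary_dysplasia: bool = False
    genetic_congenital_or_neuromuscular: bool = False
    picu_admission: bool = False


def is_eligible(e: Encounter) -> bool:
    """Apply the stated inclusion and exclusion criteria to one encounter."""
    if not e.primary_dx_bronchiolitis:
        return False
    # Included ages: 1 month up to (assumed: not including) 24 months.
    if not (1 <= e.age_months < 24):
        return False
    # Prematurity is defined in the text as <35 weeks gestational age.
    if e.gestational_age_weeks < 35:
        return False
    excluded = (
        e.congenital_heart_disease
        or e.bronchopulmonary_dysplasia
        or e.genetic_congenital_or_neuromuscular
        or e.picu_admission
    )
    return not excluded
```

Keeping each exclusion as its own field makes it straightforward to tabulate why encounters were screened out, although the source does not report such a breakdown.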

Data Collection

Hospital characteristics were collected, including hospital type (academic, community), bed size, location (urban, rural), hospital distributions of race/ethnicity and public payer, cases of bronchiolitis per year, presence of an electronic medical record and a pediatric respiratory therapist, and self-rated QI knowledge of the multidisciplinary team (very knowledgeable, knowledgeable, and somewhat knowledgeable). A trained member at each site collected data through structured chart review in baseline, intervention, and sustainability bronchiolitis seasons for January, February, and March. Site members reviewed the first 20 charts per month that met the inclusion criteria or all charts if there were fewer than 20 eligible encounters. Sites input data about key quality measures into the AAP’s Quality Improvement Data Aggregator, a web-based data repository.
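The chart-sampling rule described above (the first 20 eligible charts per month, or all charts if fewer than 20) can be sketched as follows. This is a minimal illustration, not the sites' actual procedure: the function name and the `(chart_id, admit_date)` input format are hypothetical, and "first" is assumed here to mean earliest by admission date, which the source does not specify.

```python
from collections import defaultdict
from datetime import date

MAX_CHARTS_PER_MONTH = 20   # cap stated in the text
SEASON_MONTHS = (1, 2, 3)   # January through March


def select_charts(eligible, cap=MAX_CHARTS_PER_MONTH):
    """Pick up to `cap` charts per season month.

    `eligible` is an iterable of (chart_id, admit_date) tuples that have
    already passed inclusion screening (hypothetical input format).
    Returns a dict mapping month number to the selected chart ids.
    """
    by_month = defaultdict(list)
    # Assumption: "first" means earliest admission date within the month.
    for chart_id, admit_date in sorted(eligible, key=lambda c: c[1]):
        if admit_date.month in SEASON_MONTHS:
            by_month[admit_date.month].append(chart_id)
    # Slicing returns all charts when a month has fewer than `cap`.
    return {month: ids[:cap] for month, ids in by_month.items()}
```

For example, a site with 25 eligible January encounters and 3 eligible February encounters would contribute 20 and 3 charts for those months, respectively.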

Intervention

The BQIP project was designed as a virtual collaborative consisting of monthly education webinars about QI methods and bronchiolitis management, opportunities for collaboration via teleconference and e-mail listserv, and individual site-coaching by e-mail or telephone.9 A change package was shared with sites that included examples of evidence-based pathways, ordersets, a respiratory scoring tool, communication tools for parents and referring physicians, and slide sets for individual site education efforts. Following completion of the collaborative, written resources remained available to participants, although virtual collaboration ceased and no additional project interventions to promote sustainability were introduced.

Bronchiolitis Process and Outcome Measures

Process measures following admission included the following: severity assessment using a respiratory score, respiratory score use to assess response to bronchodilators, bronchodilator use, bronchodilator doses, steroid doses per patient encounter, chest radiographs per encounter, and presence of an order to transition to intermittent pulse oximetry monitoring. Outcome measures included length of stay and readmissions within 72 hours.

Analysis

Changes among baseline-, intervention-, and sustainability-season data were assessed using generalized linear mixed-effects models with random effect for study sites. Negative binomial models were used for count variables to allow for overdispersion. Length of stay was log-transformed to achieve a normal distribution. We also analyzed each site individually to assess whether sustained improvements were the result of broad sustainability across all sites or whether they represented an aggregation of some sites that continued to improve while other sites actually worsened.
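Two of the modeling choices above can be illustrated with a short Python sketch using only the standard library. It does not fit the mixed-effects models used in the study (which were run in SAS); it only shows why a log transform is applied to a right-skewed variable such as length of stay, and how a variance-to-mean ratio above 1 signals the overdispersion that motivates a negative binomial rather than a Poisson model. The function names and all numbers are invented for illustration and are not study data.

```python
import math
from statistics import mean, variance


def geometric_mean_from_logs(values):
    """Average on the log scale, then back-transform to the original units."""
    logs = [math.log(v) for v in values]
    return math.exp(mean(logs))


def dispersion_ratio(counts):
    """Sample variance divided by mean; a Poisson variable has a ratio near 1."""
    return variance(counts) / mean(counts)


# Hypothetical lengths of stay in hours, with one long stay skewing the mean.
los_hours = [24, 30, 36, 48, 120]
arithmetic = mean(los_hours)
geometric = geometric_mean_from_logs(los_hours)

# Hypothetical bronchodilator doses per encounter: many zeros, one heavy user.
doses = [0, 0, 0, 1, 1, 2, 8]
ratio = dispersion_ratio(doses)

print(f"arithmetic mean LOS: {arithmetic:.1f} h, geometric mean LOS: {geometric:.1f} h")
print(f"variance/mean ratio for doses: {ratio:.2f}")
```

Because the back-transformed mean of logged values is a geometric mean, it is pulled less by a few long stays than the arithmetic mean is, which is the usual reason for summarizing skewed durations on the log scale.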

To address any bias introduced by the voluntary and incomplete participation of sites in the sustainability season, we planned a priori to conduct 3 additional analyses. First, we compared the characteristics of sites that did participate in the sustainability season with those that did not participate by using Chi-squared tests for differences in proportions and t tests for differences in means. Second, we determined whether the baseline-season process and outcome measures were different between sites that did and did not participate using descriptive statistics. Third, we assessed whether improvements between the baseline and intervention seasons were different between sites that did and did not participate using a linear mixed-effects model for normally distributed outcomes and generalized linear mixed-effects model with site-specific random effects for nonnormally distributed outcomes. All study outcomes were summarized in terms of model-adjusted means along with the corresponding 95% confidence intervals. All P values are 2-sided, and P < 0.05 was used to define statistical significance. Data analyses were conducted using SAS software (SAS Institute Inc., Cary, North Carolina) version 9.4.
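The first of these comparisons, a Chi-squared test for a difference in proportions between the 9 participating and 12 nonparticipating sites, can be sketched for a 2x2 table as follows. This is a minimal standard-library illustration of the Pearson statistic without continuity correction, not the SAS procedure used in the study. The function name is hypothetical, and the cell counts in the example are invented; only the group sizes of 9 and 12 come from the text. With cell counts this small, an exact test is often preferred in practice.

```python
import math


def chi_squared_2x2(a, b, c, d):
    """Pearson chi-squared test (no continuity correction) for [[a, b], [c, d]].

    Returns (statistic, p_value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be positive")
    stat = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # For 1 degree of freedom, the chi-squared survival function
    # reduces to erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Hypothetical split: 5 of 9 sustainability sites and 6 of 12 other sites
# share some binary characteristic (for example, academic hospital type).
stat, p_value = chi_squared_2x2(5, 4, 6, 6)
print(f"chi-squared = {stat:.3f}, P = {p_value:.2f}")
```

A P value above the 0.05 threshold stated above would be read as no significant difference in that characteristic between the two groups of sites.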

RESULTS

Differences in baseline bronchiolitis quality measures between sites that did and did not participate in the sustainability season are displayed in Table 3. Sustainability sites had significantly lower baseline use of a respiratory score, both to assess severity of illness at any point after hospitalization and to assess responsiveness following bronchodilator treatments (P < 0.001). At baseline, they also had fewer orders for intermittent pulse oximetry use (P = 0.01) and fewer doses of bronchodilators per encounter (P = 0.04). Sites were not significantly different in their baseline use of bronchodilators, oral steroid doses, or chest radiographs. Sites that participated in the sustainability season demonstrated a larger magnitude of improvement between baseline and intervention seasons for respiratory score use (P < 0.001 for any use and P = 0.02 to assess bronchodilator responsiveness; Appendix 1b).

DISCUSSION

To our knowledge, this is the first report of sustained improvements in care achieved through a multiinstitutional QI collaborative of community and academic hospitals focused on bronchiolitis care. We found that, overall, sites participating in a national bronchiolitis QI project sustained improvements in key bronchiolitis quality measures for 1 year following the project’s completion. For the aggregate group, no measures worsened, and one measure, orders for intermittent pulse oximetry monitoring, continued to increase during the sustainability season. Furthermore, the sustained improvements were primarily the result of consistent sustained performance at each individual site, as opposed to averages wherein some sites worsened while others improved (Appendix 1a). These findings suggest that a collaborative approach that provides an evidence-based best-practice toolkit while building the QI capacity of local interdisciplinary teams can support performance gains that persist beyond the project’s active phase.

There are a number of possible reasons why improvements were sustained following the collaborative. The BQIP requirement for institutional leadership buy-in may have motivated accountability to local leaders in subsequent bronchiolitis seasons at each site. We suspect that culture change such as flattened hierarchies through multidisciplinary teams,14 which empowered nurse and respiratory therapy staff, may have facilitated consistent use of tools created locally. The synergy of interdisciplinary teams composed of physician, nurse, and respiratory therapy champions may have created accountability to perpetuate the previous year’s efforts.15 In addition, the sites adopted elements of the evidence-based toolkit, such as pathways,16,17 forcing function tools13,18 and order sets that limited management decision options and bronchodilator use contingent on respiratory scores,9,19 which may have driven desired behaviors.

Moreover, the 2014 AAP CPG for the management of bronchiolitis,20 released prior to the sustainability bronchiolitis season, may have underscored the key concepts of the collaborative. Similarly, national exposure of best practices for bronchiolitis management, including the 3 widespread Choosing Wisely recommendations related to bronchiolitis,21 might have been a compelling reason for sites to maintain their improvement efforts and contribute to secular trends toward decreasing interventions in bronchiolitis management nationally.3 Lastly, the mechanisms developed for local data collection may have created opportunities at each site to conduct ongoing evaluation of performance on key bronchiolitis quality measures through data-driven feedback systems.22 Our study highlights the need for additional research in order to understand why improvements are or are not sustained.

Even with substantial, sustained improvements in this initiative, further reduction in unnecessary care may be possible. Findings from previous studies suggest that even multifaceted QI interventions, including provider education, guidelines and use of respiratory scores, may only modestly reduce bronchodilators, steroids, and chest radiograph use.8,13 To achieve continued improvements in bronchiolitis care, additional active efforts may be needed to develop new interventions that target root causes for areas of overuse at individual sites.

Future multiinstitutional collaboratives might benefit their participants if they include a focus on helping sites develop skills to ensure that local improvement activities continue after the collaborative phases are completed. Proactively scheduling intermittent check-ins with collaborative members to discuss experiences with both sustainability and ongoing improvement may be valuable and likely needs to be incorporated into the initial collaborative planning.

As these sustainability data represent a subset of 9 of the original 21 BQIP sites, there is concern for potential selection bias related to factors that could have motivated sites to participate in the sustainability season’s data collection and simultaneously influenced their performance. These concerns were mitigated to some extent through 3 specific analyses: finding limited differences in hospital characteristics, baseline performance in key bronchiolitis measures, and performance change from baseline to intervention seasons between sites that did and did not participate in the sustainability season.

Notably, sites that participated in the sustainability phase actually had lower baseline respiratory score use and fewer orders for intermittent pulse oximetry at baseline. Theoretically, if participation in the collaborative highlighted this disparity for these sites, it could have been a motivating factor for their continued participation and sustained performance across these measures. Similarly, sites that recognized their higher baseline performance through participation in the collaborative might have felt less motivation to participate in ongoing data collection during the sustainability season. Whether they might have also sustained, declined, or continued improving is not known. Additionally, the magnitude of improvement in the collaborative period might have also motivated ongoing participation during the sustainability phase. For example, although all sites improved in score use during the collaborative, sites participating in the sustainability season demonstrated significantly more improvement in these measures. Sites with a higher magnitude of improvement in collaborative measures might have more enthusiasm about the project, more commitment to the project activities, or feel a sense of obligation to respond to requests for additional data collection.

This work has several limitations. Selection bias may limit generalizability of the results, as sites that did not participate in the sustainability season may have had different results than those that did participate. It is unknown whether sites that regressed toward their baseline were deterred from participating in the sustainability season. The analyses that we were able to perform, however, suggest that the 2 groups were similar in their characteristics as well as in their baseline and improvement performance.

We have limited knowledge of the local improvement work that sites conducted between the completion of the collaborative and the sustainability season. Site-specific factors may have influenced improvement sustainability. For example, qualitative research with the original group found that team engagement had a quantitative association with better performance, but only for the bronchodilator use measure.23 Sites were responsible for their own data collection, and despite attempts to centralize and standardize the process, data collection inconsistencies may have occurred. For instance, it is unknown how closely orders for intermittent pulse oximetry correlate with intermittent use at the bedside. Lastly, the absence of a control group limits examination of the causal relationships of interventions and the influence of secular trends.

CONCLUSIONS

Improvements gained during the BQIP collaborative were sustained at 1 year following completion of the collaborative. These findings are encouraging, as national QI collaborative efforts are increasingly common. Our findings suggest that opportunities exist to even further reduce unnecessary care in the management of bronchiolitis. Such opportunities highlight the importance of integrating strategies to both measure sustainability and plan for ongoing independent local activities after completion of the collaborative. Future efforts should focus on supporting local sites to continue individual practice-improvement as they transition from collaborative to independent quality initiatives.

Acknowledgments

The authors thank the 21 hospitals that participated in the BQIP collaborative, and in particular the 9 hospital teams that contributed sustainability data for their ongoing dedication. There was no external funding for this manuscript.

Disclosure

The authors report no financial conflicts of interest.

1. Healthcare Cost and Utilization Project (HCUP) KID Trends Supplemental File. Agency for Healthcare Research and Quality website. http://hcupnet.ahrq.gov/HCUPnet.jsp?Id=2C331B13FB40957D&Form=DispTab&JS=Y&Action=Accept. 2012. Accessed July 21, 2016.

2. Ralston S, Parikh K, Goodman D. Benchmarking overuse of medical interventions for bronchiolitis. JAMA Pediatr. 2015;169:805-806.

3. Parikh K, Hall M, Teach SJ. Bronchiolitis management before and after the AAP guidelines. Pediatrics. 2014;133:e1-e7.

4. Johnson LW, Robles J, Hudgins A, Osburn S, Martin D, Thompson A. Management of bronchiolitis in the emergency department: impact of evidence-based guidelines? Pediatrics. 2013;131 Suppl 1:S103-S109.

5. Kotagal UR, Robbins JM, Kini NM, Schoettker PJ, Atherton HD, Kirschbaum MS. Impact of a bronchiolitis guideline: a multisite demonstration project. Chest. 2002;121:1789-1797.

6. Mittal V, Darnell C, Walsh B, et al. Inpatient bronchiolitis guideline implementation and resource utilization. Pediatrics. 2014;133:e730-e737.

7. Mittal V, Hall M, Morse R, et al. Impact of inpatient bronchiolitis clinical practice guideline implementation on testing and treatment. J Pediatr. 2014;165:570.e3-576.e3.

8. Ralston S, Garber M, Narang S, et al. Decreasing unnecessary utilization in acute bronchiolitis care: results from the value in inpatient pediatrics network. J Hosp Med. 2013;8:25-30.

9. Ralston SL, Garber MD, Rice-Conboy E, et al. A multicenter collaborative to reduce unnecessary care in inpatient bronchiolitis. Pediatrics. 2016;137.

10. Perlstein PH, Kotagal UR, Schoettker PJ, et al. Sustaining the implementation of an evidence-based guideline for bronchiolitis. Arch Pediatr Adolesc Med. 2000;154:1001-1007.

11. Walker C, Danby S, Turner S. Impact of a bronchiolitis clinical care pathway on treatment and hospital stay. Eur J Pediatr. 2012;171:827-832.

12. Cheney J, Barber S, Altamirano L, et al. A clinical pathway for bronchiolitis is effective in reducing readmission rates. J Pediatr. 2005;147:622-626.

13. Ralston S, Comick A, Nichols E, Parker D, Lanter P. Effectiveness of quality improvement in hospitalization for bronchiolitis: a systematic review. Pediatrics. 2014;134:571-581.

14. Schwartz RW, Tumblin TF. The power of servant leadership to transform health care organizations for the 21st-century economy. Arch Surg. 2002;137:1419-1427; discussion 27.

15. Schalock RL, Verdugo M, Lee T. A systematic approach to an organization’s sustainability. Eval Program Plann. 2016;56:56-63.

16. Wilson SD, Dahl BB, Wells RD. An evidence-based clinical pathway for bronchiolitis safely reduces antibiotic overuse. Am J Med Qual. 2002;17:195-199.

17. Muething S, Schoettker PJ, Gerhardt WE, Atherton HD, Britto MT, Kotagal UR. Decreasing overuse of therapies in the treatment of bronchiolitis by incorporating evidence at the point of care. J Pediatr. 2004;144:703-710.

18. Streiff MB, Carolan HT, Hobson DB, et al. Lessons from the Johns Hopkins multi-disciplinary venous thromboembolism (VTE) prevention collaborative. BMJ. 2012;344:e3935.

19. Todd J, Bertoch D, Dolan S. Use of a large national database for comparative evaluation of the effect of a bronchiolitis/viral pneumonia clinical care guideline on patient outcome and resource utilization. Arch Pediatr Adolesc Med. 2002;156:1086-1090.

20. Ralston SL, Lieberthal AS, Meissner HC, et al. Clinical practice guideline: the diagnosis, management, and prevention of bronchiolitis. Pediatrics. 2014;134:e1474-e1502.

21. Quinonez RA, Garber MD, Schroeder AR, et al. Choosing wisely in pediatric hospital medicine: five opportunities for improved healthcare value. J Hosp Med. 2013;8:479-485.

22. Stone S, Lee HC, Sharek PJ. Perceived factors associated with sustained improvement following participation in a multicenter quality improvement collaborative. Jt Comm J Qual Patient Saf. 2016;42:309-315.

23. Ralston SL, Atwood EC, Garber MD, Holmes AV. What works to reduce unnecessary care for bronchiolitis? A qualitative analysis of a national collaborative. Acad Pediatr. 2017;17(2):198-204.

Acute viral bronchiolitis is the most common cause of hospitalization for children less than 1 year of age.1 Overuse of ineffective therapies has persisted despite the existence of the evidence-based American Academy of Pediatrics (AAP) clinical practice guideline (CPG), which recommends primarily supportive care.2-8 Adherence to the AAP CPG recommendations for management of bronchiolitis improved significantly through the AAP’s Bronchiolitis Quality Improvement Project (BQIP), a 12-month, multiinstitutional collaborative of community and free-standing children’s hospitals.9 This subsequent study investigates if these improvements were sustained after completion of the formal 12-month project.

Published multiinstitutional bronchiolitis quality improvement (QI) work is limited to 1 study5 that describes the results of a single intervention season at academic medical centers. Multiyear bronchiolitis QI projects are limited to single-center studies, and results have been mixed.5,6,8,10-13 One study11 observed continued improvement in bronchodilator use in subsequent seasons, whereas a second study10 observed a return to baseline bronchodilator use in the following season. Mittal6 observed inconsistent improvements in key bronchiolitis measures during postintervention seasons.

Our specific aim was to assess the sustainability of improvements in bronchiolitis management at participating institutions 1 year following completion of the AAP BQIP collaborative.9 Because no studies demonstrate the most effective way to support long-term improvement through a QI collaborative, we hypothesized that the initial collaborative activities, which were designed to build the capacity of local interdisciplinary teams while providing standardized evidence-based care pathways, would lead to performance in the subsequent season at levels similar to or better than those observed during the active phase of the collaborative, without additional project interventions.

METHODS

Study Design and Setting

This was a follow-up study of the AAP Quality Improvement Innovation Networks project entitled “A Quality Collaborative for Improving Hospital Compliance with the AAP Bronchiolitis Guideline” (BQIP).9 The AAP Institutional Review Board approved this project.

Twenty-one multidisciplinary, hospital-based teams participated in the BQIP collaborative and provided monthly data during the January through March bronchiolitis season. Teams submitted 2013 baseline data and 2014 intervention data. Nine sites provided 2015 sustainability data following the completion of the collaborative.

Participants

Hospital encounters for patients 1 month to 2 years of age with a primary diagnosis of acute viral bronchiolitis were eligible for inclusion. Encounters were excluded for prematurity (<35 weeks gestational age); congenital heart disease; bronchopulmonary dysplasia; genetic, congenital, or neuromuscular abnormalities; and pediatric intensive-care admission.
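The eligibility rules above can be read as a simple filter. The following is a minimal sketch only, assuming a hypothetical `Encounter` record; the field names are illustrative and are not taken from the BQIP data dictionary. The age window is interpreted here as 1 month (inclusive) to 24 months (exclusive), which is also an assumption.

```python
from dataclasses import dataclass


@dataclass
class Encounter:
    # All field names are hypothetical, chosen for illustration only.
    age_months: float
    primary_dx_bronchiolitis: bool
    gestational_age_weeks: float
    congenital_heart_disease: bool = False
    bronchopulmonary_dysplasia: bool = False
    genetic_congenital_or_neuromuscular: bool = False
    picu_admission: bool = False


def is_eligible(e: Encounter) -> bool:
    """Apply the inclusion and exclusion criteria described in the text."""
    # Inclusion: primary diagnosis of bronchiolitis within the assumed age window.
    included = e.primary_dx_bronchiolitis and 1 <= e.age_months < 24
    # Exclusion: any one of the listed conditions removes the encounter.
    excluded = (
        e.gestational_age_weeks < 35  # prematurity
        or e.congenital_heart_disease
        or e.bronchopulmonary_dysplasia
        or e.genetic_congenital_or_neuromuscular
        or e.picu_admission
    )
    return included and not excluded
```

Keeping the inclusion and exclusion tests separate mirrors how the criteria are stated and makes each rule easy to audit during chart review.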

Data Collection

Hospital characteristics were collected, including hospital type (academic, community), bed size, location (urban, rural), hospital distributions of race/ethnicity and public payer, cases of bronchiolitis per year, presence of an electronic medical record and a pediatric respiratory therapist, and self-rated QI knowledge of the multidisciplinary team (very knowledgeable, knowledgeable, and somewhat knowledgeable). A trained member at each site collected data through structured chart review in baseline, intervention, and sustainability bronchiolitis seasons for January, February, and March. Site members reviewed the first 20 charts per month that met the inclusion criteria or all charts if there were fewer than 20 eligible encounters. Sites input data about key quality measures into the AAP’s Quality Improvement Data Aggregator, a web-based data repository.
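The monthly chart-sampling rule (the first 20 eligible charts, or all charts if fewer than 20) might be sketched as follows. This is an illustration, not the sites' actual procedure: the input shape is hypothetical, and "first" is assumed to mean earliest by admission date, which the text does not specify.

```python
from collections import defaultdict
from datetime import date

MAX_CHARTS_PER_MONTH = 20  # from the text: first 20 eligible charts per month


def select_charts(encounters):
    """Return up to 20 chart IDs per (year, month), ordered by admission date.

    `encounters` is an iterable of (admit_date, chart_id) tuples that have
    already passed the eligibility screen; this input shape is hypothetical.
    """
    by_month = defaultdict(list)
    for admit_date, chart_id in sorted(encounters):
        key = (admit_date.year, admit_date.month)
        # Stop adding charts for a month once the cap is reached.
        if len(by_month[key]) < MAX_CHARTS_PER_MONTH:
            by_month[key].append(chart_id)
    return dict(by_month)


# Usage with made-up data: 25 January charts and 1 February chart.
example = [(date(2015, 1, d), f"J{d:02d}") for d in range(1, 26)]
example.append((date(2015, 2, 3), "F01"))
selected = select_charts(example)
```

A month with fewer than 20 eligible encounters simply contributes all of its charts, as described above.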

Intervention

The BQIP project was designed as a virtual collaborative consisting of monthly education webinars about QI methods and bronchiolitis management, opportunities for collaboration via teleconference and e-mail listserv, and individual site-coaching by e-mail or telephone.9 A change package was shared with sites that included examples of evidence-based pathways, ordersets, a respiratory scoring tool, communication tools for parents and referring physicians, and slide sets for individual site education efforts. Following completion of the collaborative, written resources remained available to participants, although virtual collaboration ceased and no additional project interventions to promote sustainability were introduced.

Bronchiolitis Process and Outcome Measures

Process measures following admission included the following: severity assessment using a respiratory score, respiratory score use to assess response to bronchodilators, bronchodilator use, bronchodilator doses, steroid doses per patient encounter, chest radiographs per encounter, and presence of an order to transition to intermittent pulse oximetry monitoring. Outcome measures included length of stay and readmissions within 72 hours.

Analysis

Changes among baseline-, intervention-, and sustainability-season data were assessed using generalized linear mixed-effects models with random effect for study sites. Negative binomial models were used for count variables to allow for overdispersion. Length of stay was log-transformed to achieve a normal distribution. We also analyzed each site individually to assess whether sustained improvements were the result of broad sustainability across all sites or whether they represented an aggregation of some sites that continued to improve while other sites actually worsened.
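Two of the modeling choices above can be illustrated with simple calculations. The sketch below does not reproduce the mixed-effects models, which were fitted in SAS; it only shows, under stated assumptions, that averaging on the log scale and back-transforming gives a geometric mean, and that a variance-to-mean ratio well above 1 is one informal sign of overdispersion in count data. The function names are hypothetical.

```python
import math
from statistics import mean, variance


def geometric_mean_los(los_values):
    """Average length of stay on the log scale, back-transformed to original units."""
    logs = [math.log(x) for x in los_values]  # requires strictly positive values
    return math.exp(mean(logs))


def dispersion_ratio(counts):
    """Sample variance divided by the mean of a count variable.

    A Poisson variable has a ratio near 1; values well above 1 suggest
    overdispersion, the situation a negative binomial model accommodates.
    """
    return variance(counts) / mean(counts)
```

The geometric mean is less sensitive to a few very long stays than the arithmetic mean, which is one common reason for analyzing length of stay on the log scale.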

To address any bias introduced by the voluntary and incomplete participation of sites in the sustainability season, we planned a priori to conduct 3 additional analyses. First, we compared the characteristics of sites that did participate in the sustainability season with those that did not participate by using Chi-squared tests for differences in proportions and t tests for differences in means. Second, we determined whether the baseline-season process and outcome measures were different between sites that did and did not participate using descriptive statistics. Third, we assessed whether improvements between the baseline and intervention seasons were different between sites that did and did not participate using a linear mixed-effects model for normally distributed outcomes and generalized linear mixed-effects model with site-specific random effects for nonnormally distributed outcomes. All study outcomes were summarized in terms of model-adjusted means along with the corresponding 95% confidence intervals. All P values are 2-sided, and P < 0.05 was used to define statistical significance. Data analyses were conducted using SAS software (SAS Institute Inc., Cary, North Carolina) version 9.4.
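As an illustration of the first comparison, a Pearson chi-squared test for a difference in proportions between two groups of sites can be computed from a 2x2 table. This is a minimal sketch using only the Python standard library rather than the SAS procedures the authors used; it applies no continuity correction, and the counts shown are made up for demonstration and are not study data.

```python
import math


def chi_squared_2x2(a, b, c, d):
    """Pearson chi-squared test (1 degree of freedom) for the 2x2 table [[a, b], [c, d]].

    Rows are the two groups; columns are characteristic present or absent.
    Returns (statistic, two-sided p value).
    """
    n = a + b + c + d
    margins = (a + b) * (c + d) * (a + c) * (b + d)
    if margins == 0:
        raise ValueError("every row and column total must be nonzero")
    stat = n * (a * d - b * c) ** 2 / margins
    # With 1 degree of freedom, P(chi2 > x) equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Hypothetical counts for two groups of 30 units each, not study data.
stat, p = chi_squared_2x2(10, 20, 20, 10)
significant = p < 0.05  # threshold stated in the text
```

With very small groups, such as a handful of sites per arm, expected cell counts can fall below the usual rule of thumb for this approximation, and an exact test may be preferable.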

RESULTS

Differences in baseline bronchiolitis quality measures between sites that did and did not participate in the sustainability season are displayed in Table 3. Sustainability sites had significantly lower baseline use of a respiratory score, both to assess severity of illness at any point after hospitalization and to assess responsiveness following bronchodilator treatments (P < 0.001). At baseline they also had fewer orders for intermittent pulse oximetry use (P = 0.01) and fewer doses of bronchodilators per encounter (P = 0.04). Sites were not significantly different in their baseline use of bronchodilators, oral steroid doses, or chest radiographs. Sites that participated in the sustainability season demonstrated a larger magnitude of improvement between baseline and intervention seasons for respiratory score use (P < 0.001 for any use and P = 0.02 to assess bronchodilator responsiveness; Appendix 1b).

DISCUSSION

To our knowledge, this is the first report of sustained improvements in care achieved through a multiinstitutional QI collaborative of community and academic hospitals focused on bronchiolitis care. We found that, overall, sites participating in a national bronchiolitis QI project sustained improvements in key bronchiolitis quality measures for 1 year following the project’s completion. For the aggregate group, no measures worsened, and one measure, orders for intermittent pulse oximetry monitoring, continued to increase during the sustainability season. Furthermore, the sustained improvements were primarily the result of consistent sustained performance at each individual site, as opposed to averages wherein some sites worsened while others improved (Appendix 1a). These findings suggest that a collaborative approach that provides an evidence-based best-practice toolkit while building the QI capacity of local interdisciplinary teams can support performance gains that persist beyond the project’s active phase.

There are a number of possible reasons why improvements were sustained following the collaborative. The BQIP requirement for institutional leadership buy-in may have motivated accountability to local leaders in subsequent bronchiolitis seasons at each site. We suspect that culture change such as flattened hierarchies through multidisciplinary teams,14 which empowered nurse and respiratory therapy staff, may have facilitated consistent use of tools created locally. The synergy of interdisciplinary teams composed of physician, nurse, and respiratory therapy champions may have created accountability to perpetuate the previous year’s efforts.15 In addition, the sites adopted elements of the evidence-based toolkit, such as pathways,16,17 forcing function tools13,18 and order sets that limited management decision options and bronchodilator use contingent on respiratory scores,9,19 which may have driven desired behaviors.

Moreover, the 2014 AAP CPG for the management of bronchiolitis,20 released prior to the sustainability bronchiolitis season, may have underscored the key concepts of the collaborative. Similarly, national exposure of best practices for bronchiolitis management, including the 3 widespread Choosing Wisely recommendations related to bronchiolitis,21 might have been a compelling reason for sites to maintain their improvement efforts and contribute to secular trends toward decreasing interventions in bronchiolitis management nationally.3 Lastly, the mechanisms developed for local data collection may have created opportunities at each site to conduct ongoing evaluation of performance on key bronchiolitis quality measures through data-driven feedback systems.22 Our study highlights the need for additional research in order to understand why improvements are or are not sustained.

Even with substantial, sustained improvements in this initiative, further reduction in unnecessary care may be possible. Findings from previous studies suggest that even multifaceted QI interventions, including provider education, guidelines and use of respiratory scores, may only modestly reduce bronchodilators, steroids, and chest radiograph use.8,13 To achieve continued improvements in bronchiolitis care, additional active efforts may be needed to develop new interventions that target root causes for areas of overuse at individual sites.

Future multiinstitutional collaboratives might benefit their participants if they include a focus on helping sites develop skills to ensure that local improvement activities continue after the collaborative phases are completed. Proactively scheduling intermittent check-ins with collaborative members to discuss experiences with both sustainability and ongoing improvement may be valuable and likely needs to be incorporated into the initial collaborative planning.

As these sustainability data represent a subset of 9 of the original 21 BQIP sites, there is concern for potential selection bias related to factors that could have motivated sites to participate in the sustainability season’s data collection and simultaneously influenced their performance. These concerns were mitigated to some extent through 3 specific analyses: finding limited differences in hospital characteristics, baseline performance in key bronchiolitis measures, and performance change from baseline to intervention seasons between sites that did and did not participate in the sustainability season.

Notably, sites that participated in the sustainability phase actually had lower baseline respiratory score use and fewer orders for intermittent pulse oximetry at baseline. Theoretically, if participation in the collaborative highlighted this disparity for these sites, it could have been a motivating factor for their continued participation and sustained performance across these measures. Similarly, sites that recognized their higher baseline performance through participation in the collaborative might have felt less motivation to participate in ongoing data collection during the sustainability season. Whether they might have also sustained, declined, or continued improving is not known. Additionally, the magnitude of improvement in the collaborative period might have also motivated ongoing participation during the sustainability phase. For example, although all sites improved in score use during the collaborative, sites participating in the sustainability season demonstrated significantly more improvement in these measures. Sites with a higher magnitude of improvement in collaborative measures might have more enthusiasm about the project, more commitment to the project activities, or feel a sense of obligation to respond to requests for additional data collection.

This work has several limitations. Selection bias may limit generalizability of the results, as sites that did not participate in the sustainability season may have had different results than those that did participate. It is unknown whether sites that regressed toward their baseline were deterred from participating in the sustainability season. The analyses that we were able to perform, however, suggest that the 2 groups were similar in their characteristics as well as in their baseline and improvement performance.

We have limited knowledge of the local improvement work that sites conducted between the completion of the collaborative and the sustainability season. Site-specific factors may have influenced improvement sustainability. For example, qualitative research with the original group found that team engagement had a quantitative association with better performance, but only for the bronchodilator use measure.23 Sites were responsible for their own data collection, and despite attempts to centralize and standardize the process, data collection inconsistencies may have occurred. For instance, it is unknown how closely orders for intermittent pulse oximetry correlate with intermittent use at the bedside. Lastly, the absence of a control group limits examination of the causal relationships of interventions and the influence of secular trends.

CONCLUSIONS

Improvements gained during the BQIP collaborative were sustained at 1 year following completion of the collaborative. These findings are encouraging, as national QI collaborative efforts are increasingly common. Our findings suggest that opportunities exist to even further reduce unnecessary care in the management of bronchiolitis. Such opportunities highlight the importance of integrating strategies to both measure sustainability and plan for ongoing independent local activities after completion of the collaborative. Future efforts should focus on supporting local sites to continue individual practice-improvement as they transition from collaborative to independent quality initiatives.

Acknowledgments

The authors thank the 21 hospitals that participated in the BQIP collaborative, and in particular the 9 hospital teams that contributed sustainability data for their ongoing dedication. There was no external funding for this manuscript.

Disclosure

The authors report no financial conflicts of interest.

1. Healthcare Cost and Utilization Project (HCUP) KID Trends Supplemental File. Agency for Healthcare Research and Quality website. http://hcupnet.ahrq.gov/HCUPnet.jsp?Id=2C331B13FB40957D&Form=DispTab&JS=Y&Action=Accept. 2012. Accessed July 21, 2016.

2. Ralston S, Parikh K, Goodman D. Benchmarking overuse of medical interventions for bronchiolitis. JAMA Pediatr. 2015;169:805-806.

3. Parikh K, Hall M, Teach SJ. Bronchiolitis management before and after the AAP guidelines. Pediatrics. 2014;133:e1-e7.

4. Johnson LW, Robles J, Hudgins A, Osburn S, Martin D, Thompson A. Management of bronchiolitis in the emergency department: impact of evidence-based guidelines? Pediatrics. 2013;131 Suppl 1:S103-S109.

5. Kotagal UR, Robbins JM, Kini NM, Schoettker PJ, Atherton HD, Kirschbaum MS. Impact of a bronchiolitis guideline: a multisite demonstration project. Chest. 2002;121:1789-1797.

6. Mittal V, Darnell C, Walsh B, et al. Inpatient bronchiolitis guideline implementation and resource utilization. Pediatrics. 2014;133:e730-e737.

7. Mittal V, Hall M, Morse R, et al. Impact of inpatient bronchiolitis clinical practice guideline implementation on testing and treatment. J Pediatr. 2014;165:570.e3-576.e3.

8. Ralston S, Garber M, Narang S, et al. Decreasing unnecessary utilization in acute bronchiolitis care: results from the value in inpatient pediatrics network. J Hosp Med. 2013;8:25-30.

9. Ralston SL, Garber MD, Rice-Conboy E, et al. A multicenter collaborative to reduce unnecessary care in inpatient bronchiolitis. Pediatrics. 2016;137.

10. Perlstein PH, Kotagal UR, Schoettker PJ, et al. Sustaining the implementation of an evidence-based guideline for bronchiolitis. Arch Pediatr Adolesc Med. 2000;154:1001-1007.

11. Walker C, Danby S, Turner S. Impact of a bronchiolitis clinical care pathway on treatment and hospital stay. Eur J Pediatr. 2012;171:827-832.

12. Cheney J, Barber S, Altamirano L, et al. A clinical pathway for bronchiolitis is effective in reducing readmission rates. J Pediatr. 2005;147:622-626.

13. Ralston S, Comick A, Nichols E, Parker D, Lanter P. Effectiveness of quality improvement in hospitalization for bronchiolitis: a systematic review. Pediatrics. 2014;134:571-581.

14. Schwartz RW, Tumblin TF. The power of servant leadership to transform health care organizations for the 21st-century economy. Arch Surg. 2002;137:1419-1427; discussion 27.

15. Schalock RL, Verdugo M, Lee T. A systematic approach to an organization’s sustainability. Eval Program Plann. 2016;56:56-63.

16. Wilson SD, Dahl BB, Wells RD. An evidence-based clinical pathway for bronchiolitis safely reduces antibiotic overuse. Am J Med Qual. 2002;17:195-199.

17. Muething S, Schoettker PJ, Gerhardt WE, Atherton HD, Britto MT, Kotagal UR. Decreasing overuse of therapies in the treatment of bronchiolitis by incorporating evidence at the point of care. J Pediatr. 2004;144:703-710.

18. Streiff MB, Carolan HT, Hobson DB, et al. Lessons from the Johns Hopkins multi-disciplinary venous thromboembolism (VTE) prevention collaborative. BMJ. 2012;344:e3935.

19. Todd J, Bertoch D, Dolan S. Use of a large national database for comparative evaluation of the effect of a bronchiolitis/viral pneumonia clinical care guideline on patient outcome and resource utilization. Arch Pediatr Adolesc Med. 2002;156:1086-1090.

20. Ralston SL, Lieberthal AS, Meissner HC, et al. Clinical practice guideline: the diagnosis, management, and prevention of bronchiolitis. Pediatrics. 2014;134:e1474-e1502.

21. Quinonez RA, Garber MD, Schroeder AR, et al. Choosing wisely in pediatric hospital medicine: five opportunities for improved healthcare value. J Hosp Med. 2013;8:479-485.

22. Stone S, Lee HC, Sharek PJ. Perceived factors associated with sustained improvement following participation in a multicenter quality improvement collaborative. Jt Comm J Qual Patient Saf. 2016;42:309-315.

23. Ralston SL, Atwood EC, Garber MD, Holmes AV. What works to reduce unnecessary care for bronchiolitis? A qualitative analysis of a national collaborative. Acad Pediatr. 2017;17(2):198-204.

© 2017 Society of Hospital Medicine

Hospitalist Versus Traditional Systems

In the United States, general medical inpatient care is increasingly provided by hospital‐based physicians, also called hospitalists.1 The field of pediatrics is no exception, and by 2005 there were an estimated 1000 pediatric hospitalists in the workforce.2 Current numbers are likely to be greater than 2500, as the need for pediatric hospitalists has grown considerably.

At the same time, the quality of care delivered by the United States health system has come under increased scrutiny. In 2001, the Institute of Medicine, in its report on the quality of healthcare in America, concluded that "between the care we have and what we could have lies not just a gap but a chasm."3 Meanwhile, the cost of healthcare delivery continues to increase. The pressure to deliver cost‐effective, high‐quality care is among the more important forces driving the proliferation of hospitalists.4

Over the last decade, data supporting the role of hospitalists in improving quality of care for adult patients have continued to accumulate.5–8 A 2007 retrospective cohort study by Lindenauer et al.7 included nearly 77,000 adult patients and found small reductions in length of stay without adverse effects on mortality or readmission rates. A 2009 systematic review by Peterson6 included 33 studies and concluded that, in general, inpatient care of general medical patients by hospitalist physicians leads to decreased hospital cost and length of stay. A 2002 study by Meltzer et al.8 suggested that improvements in costs and short‐term mortality are related to the disease‐specific experience of hospitalists.

Similar data for pediatric hospitalists have been slower to emerge. A systematic review of the literature by Landrigan et al., which included studies through 2004, concluded that "[R]esearch suggests that pediatric hospitalists decrease costs and length of stay ... The quality of care in pediatric hospitalist systems is unclear, because rigorous metrics to evaluate quality are lacking."9 Since the publication of that review, multiple studies have sought to evaluate the quality of pediatric hospitalist systems. This review was undertaken to synthesize this new information and to determine the effect of pediatric hospitalist systems on quality of care.

METHODS

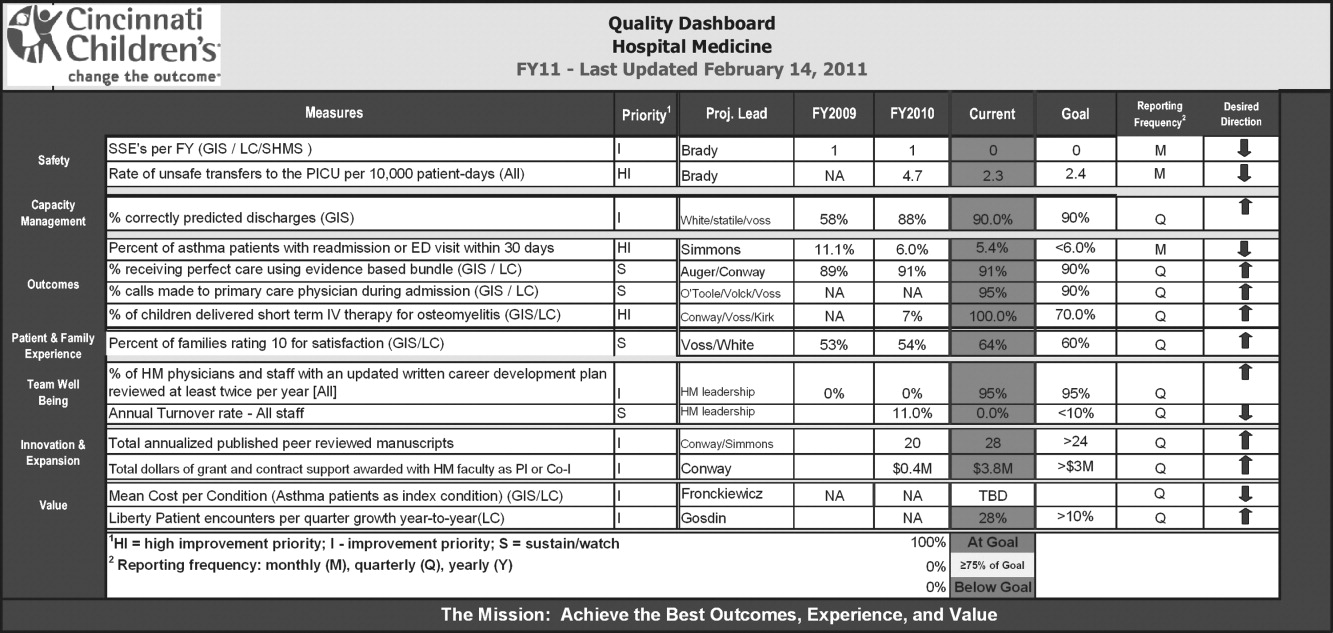

A review of the available English‐language literature in the Medline database was undertaken in November 2010 to answer the question, "What are the differences in quality of care and outcomes of inpatient medical care provided by hospitalists versus non‐hospitalists in the pediatric population?" Care metrics of interest were categorized according to the Society of Hospital Medicine's recommendations for measuring hospital performance.10

Search terms used (with additional medical subject headings [MeSH] terms in parentheses) were hospital medicine (hospitalist), pediatrics (child health, child welfare), cost (cost and cost analysis), quality (quality indicators, healthcare), outcomes (outcome assessment, healthcare; outcomes and process assessment, healthcare), volume, patient satisfaction, length of stay, productivity (efficiency), provider satisfaction (attitude of health personnel, job satisfaction), mortality, and readmission rate (patient readmission). The citing articles search tool was used to identify other articles that could potentially meet criteria. Finally, references cited in the selected articles, as well as in excluded literature reviews, were searched for additional articles.

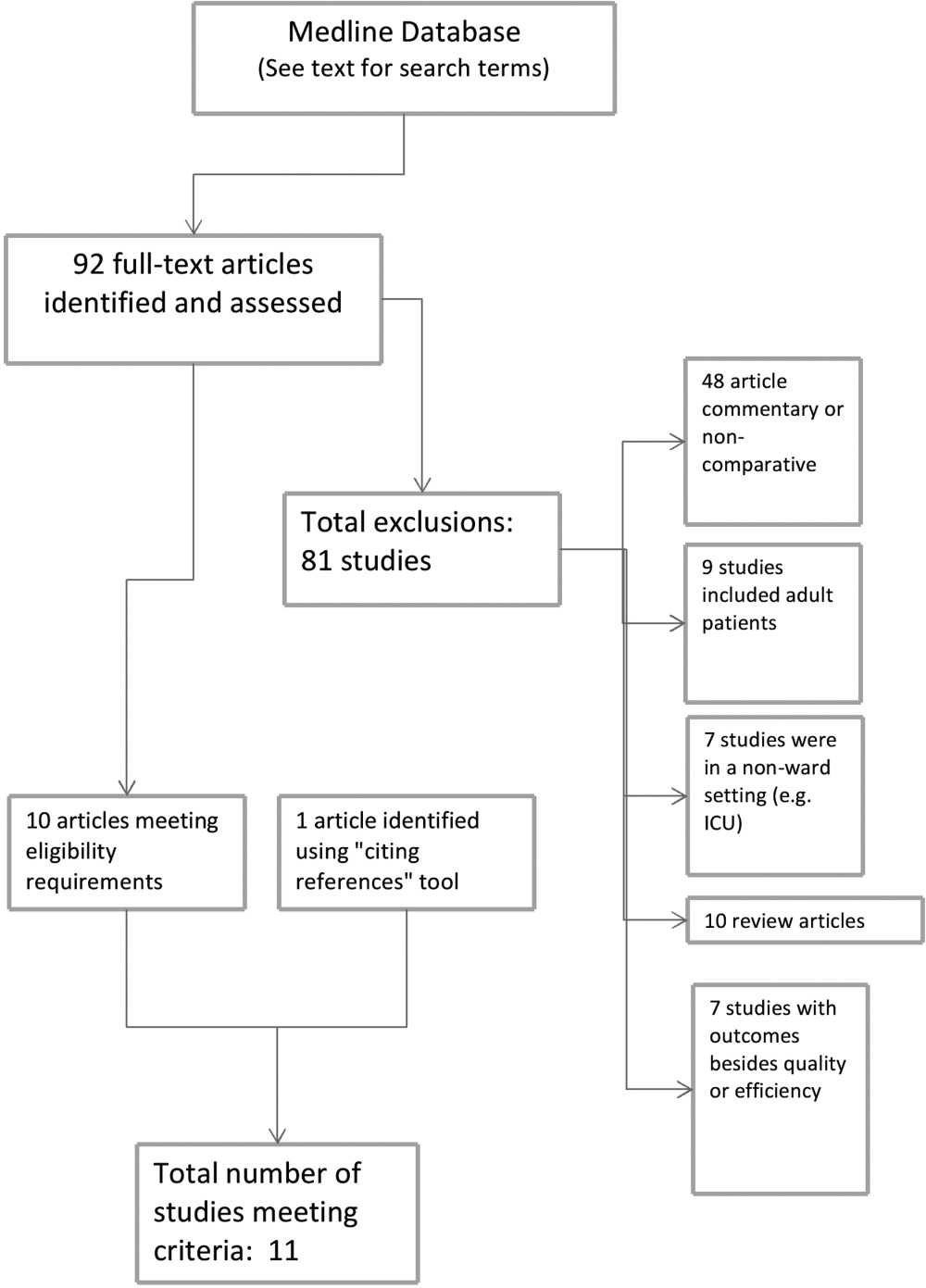

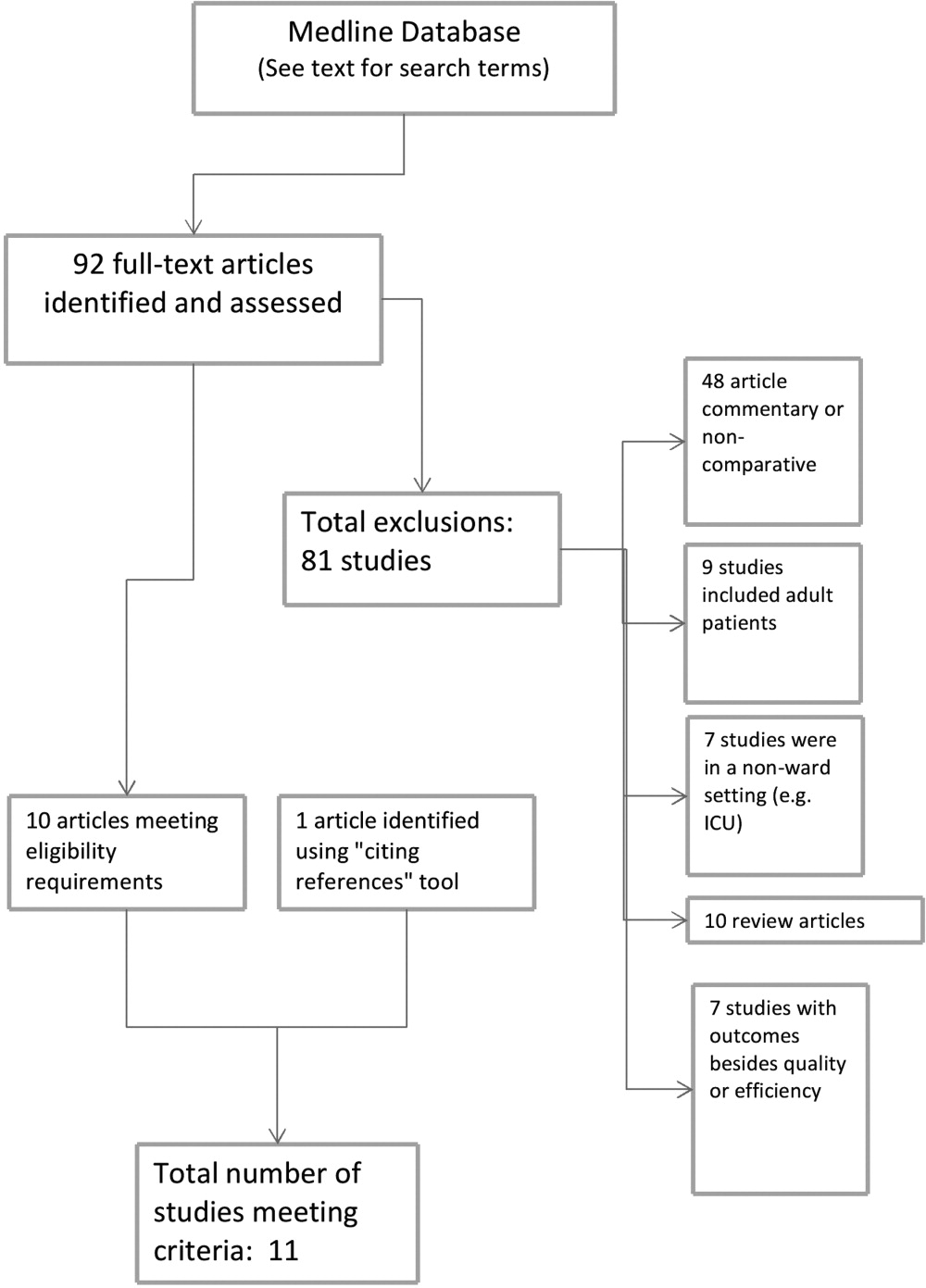

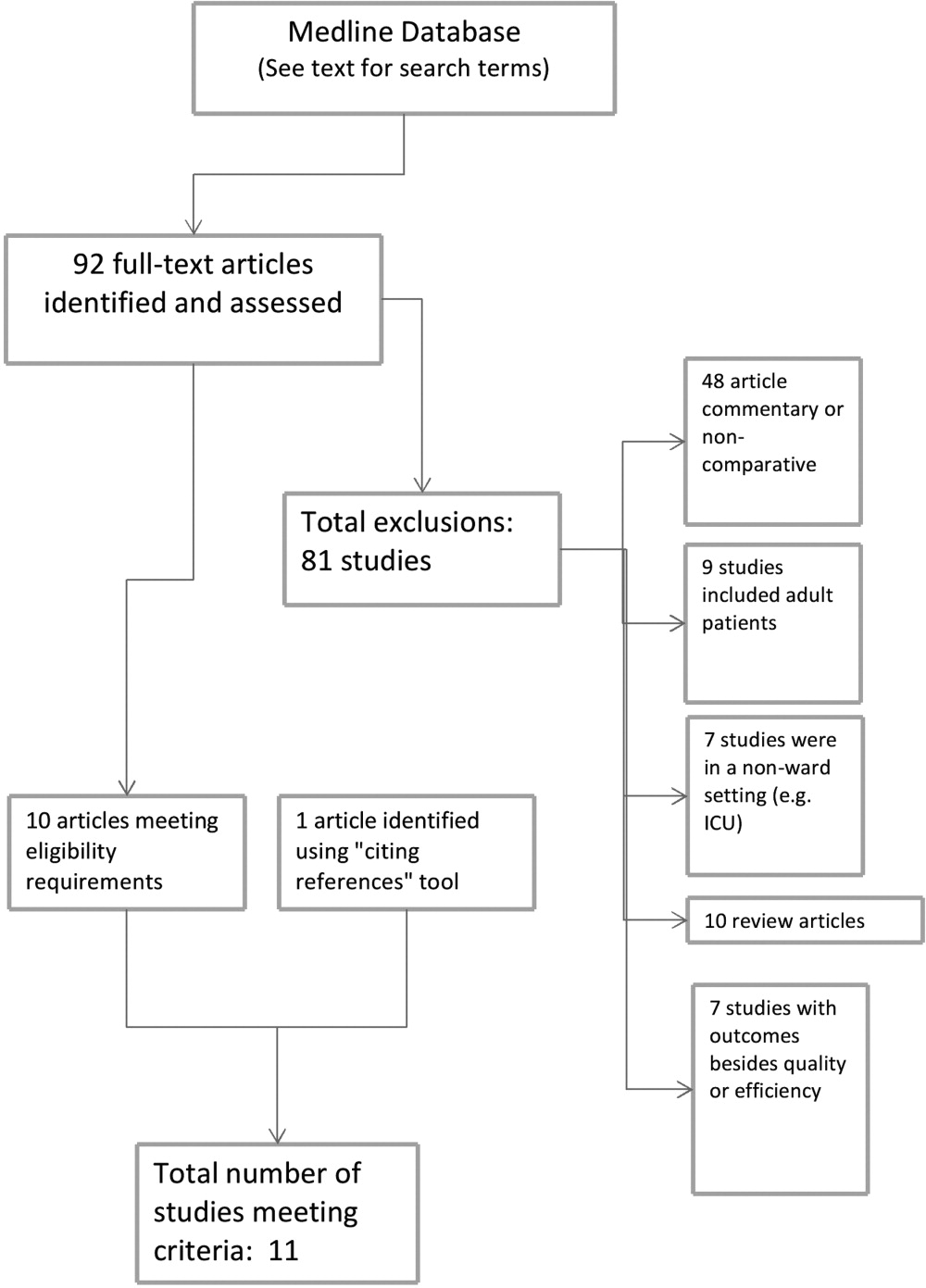

Articles were deemed eligible if they were published in a peer‐reviewed journal, if they had a comparative experimental design for hospitalists versus non‐hospitalists, and if they dealt exclusively with pediatric hospitalists. Noncomparative studies were excluded, as were studies that pertained to settings besides that of an inpatient pediatrics ward, such as pediatric intensive care units or emergency rooms. The search algorithm is diagrammed in Figure 1.
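The eligibility rules above can be read as a simple screening predicate. The following is a minimal sketch, assuming each candidate article has already been hand-coded with a few yes/no fields; the `Article` class, its field names, and the setting labels are hypothetical and are not taken from the review itself.

```python
from dataclasses import dataclass


@dataclass
class Article:
    """Hypothetical hand-coded screening record for one candidate article."""
    peer_reviewed: bool
    compares_hospitalists_with_nonhospitalists: bool
    pediatric_hospitalists_only: bool
    setting: str  # illustrative labels, e.g. "inpatient ward", "PICU", "ED"


def is_eligible(article: Article) -> bool:
    """Apply the inclusion and exclusion criteria described in the text."""
    return (
        article.peer_reviewed
        and article.compares_hospitalists_with_nonhospitalists
        and article.pediatric_hospitalists_only
        and article.setting == "inpatient ward"
    )


ward_study = Article(True, True, True, "inpatient ward")
picu_study = Article(True, True, True, "PICU")
descriptive_study = Article(True, False, True, "inpatient ward")

print(is_eligible(ward_study))         # comparative ward study passes
print(is_eligible(picu_study))         # intensive care setting is excluded
print(is_eligible(descriptive_study))  # noncomparative study is excluded
```

Every criterion must hold at once, so a study that fails any single rule is excluded regardless of the others.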

The selected articles were reviewed for the relevant outcome measures. The quality of each article was assessed using the Oxford Centre for Evidence‐Based Medicine levels of evidence,11 a widely accepted standard for critical analysis of studies. Levels of evidence are assigned to studies, from 1a (systematic reviews of randomized controlled trials) to 5 (expert opinion only). Well‐conducted prospective cohort studies receive a rating of 2c; those with wide confidence intervals due to small sample size receive a minus (-) modifier. This system does not specifically address survey studies, which were therefore not assigned a level of evidence.

RESULTS

The screening process yielded 92 potentially relevant articles, which were then reviewed individually (by G.M.M.) by title and abstract. A total of 81 articles were excluded, including 48 studies that were either noncomparative or descriptive in nature. Ten of the identified articles were reviews and did not contain primary data. Nine studies were not restricted to the pediatric population. Also excluded were 7 studies that did not have outcomes related to quality (eg, billing performance), and 7 studies of hospitalists in settings other than general pediatric wards (eg, pediatric intensive care units). Ten studies were thus identified. The cited reference tool was used to identify an additional article that met criteria, yielding 11 total articles that were included in the review.
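The screening counts above can be tallied as a quick arithmetic check. This is a minimal sketch using only the numbers reported in the text; the category labels are paraphrased and the variable names are illustrative. As listed, the five exclusion categories sum to 81, which would leave 11 of the 92 screened articles rather than the 10 reported before the citation search. The sketch surfaces both figures and does not attempt to resolve the difference.

```python
# Counts as reported in the text; labels are paraphrased, names are illustrative.
screened = 92

excluded_by_reason = {
    "noncomparative or descriptive": 48,
    "reviews without primary data": 10,
    "not restricted to the pediatric population": 9,
    "no quality-related outcomes": 7,
    "settings other than general pediatric wards": 7,
}

excluded_total = sum(excluded_by_reason.values())
remaining_by_subtraction = screened - excluded_total

# Figures stated directly in the text.
reported_after_screening = 10
added_by_citation_search = 1
included_total = reported_after_screening + added_by_citation_search

print("excluded:", excluded_total)
print("remaining by subtraction:", remaining_by_subtraction)
print("reported after screening:", reported_after_screening)
print("included in review:", included_total)
```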

Five of the identified studies published prior to 2005 were previously reviewed by Landrigan et al.9 Since then, 6 additional studies of similar nature have been published and were included here. Articles that met criteria but appeared in an earlier review are included in Table 1; new articles appear in Table 2. The results of all 11 articles were included for this discussion.

| Source | Site | Study Design | Outcomes Measured (Oxford Level of Evidence) | Results for Hospitalists |
|---|---|---|---|---|
| Bellet and Whitaker13 (2000) | Cincinnati Children's Hospital Medical Center, Cincinnati, OH | 1440 general pediatric patients | LOS, costs (2c) | LOS shorter (2.4 vs 2.7 days) |
| | | Retrospective cohort study | Readmission rate, subspecialty consultations, mortality (2c, low power) | Costs lower ($2720 vs $3002) |
| | | | | Readmissions higher for hospitalists (1% vs 3%) |
| | | | | No differences in consultations |
| | | | | No mortality in study |
| Ogershok et al.16 (2001) | West Virginia University Children's Hospitals, Morgantown, WV | 2177 general pediatric patients | LOS, cost (2c) | No difference in LOS |
| | | Retrospective cohort study | Readmission rate, patient satisfaction, mortality (2c, low power) | Costs lower ($1238 vs $1421) |
| | | | | Lab and radiology tests ordered less often |
| | | | | No difference in mortality or readmission rates |
| | | | | No difference in satisfaction scores |
| Wells et al.15 (2001) | Valley Children's Hospital, Madera, CA | 182 general pediatric patients | LOS, cost, patient satisfaction, follow‐up rate (2c, low power) | LOS shorter (45.2 vs 66.8 hr; P = 0.01) |
| | | Prospective cohort study | | No LOS or cost benefit for patients with bronchiolitis, gastroenteritis, or pneumonia |
| | | | | Costs lower ($2701 vs $4854; P = 0.005) for patients with asthma |
| | | | | No difference in outpatient follow‐up rate |
| Landrigan et al.14 (2002) | Boston Children's Hospital, Boston, MA | 17,873 general pediatric patients | LOS, cost (2c) | LOS shorter (2.2 vs 2.5 days) |