FDA modifies dosage regimen for nivolumab

The Food and Drug Administration has modified the dosage regimen for nivolumab for indications of renal cell carcinoma, metastatic melanoma, and non–small cell lung cancer.

The single-agent regimen of nivolumab (3 mg/kg IV every 2 weeks) is replaced with the new recommended regimen of 240 mg IV every 2 weeks until disease progression or intolerable toxicity, the FDA said in a written statement.

The nivolumab (Opdivo) dosing regimen in combination with ipilimumab for melanoma will stay the same (nivolumab 1 mg/kg IV, followed by ipilimumab on the same day, every 3 weeks for four doses); however, after completion of ipilimumab, the recommended nivolumab dose is modified to 240 mg every 2 weeks until disease progression or intolerable toxicity. The recommended dose for classical Hodgkin lymphoma remains at 3 mg/kg IV every 2 weeks until disease progression or intolerable toxicity.

The change was based on analyses demonstrating that the pharmacokinetic exposure, safety, and efficacy of the new dosing regimen are comparable with those of the previously approved regimen. “Based on simulations by the population pharmacokinetics model, [the] FDA determined that the overall exposure at 240 mg every 2 weeks flat dose is similar (less than 6% difference) to 3 mg/kg every 2 weeks. These differences in exposure are not likely to have a clinically meaningful effect on safety and efficacy, since dose/exposure response relationships appear to be relatively flat in these three indications,” the FDA said.
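As a rough arithmetic illustration of how the flat dose relates to the earlier weight-based dose, the sketch below compares the two for a few hypothetical body weights. The weights and function names are assumptions chosen for illustration. The calculation concerns the administered dose only; it does not reproduce the FDA's population pharmacokinetic simulations or the "less than 6%" exposure figure.

```python
# Arithmetic sketch only: compares administered dose, not drug exposure.
FLAT_DOSE_MG = 240.0          # new flat dose, from the text
WEIGHT_BASED_MG_PER_KG = 3.0  # previous weight-based dose, from the text


def weight_based_dose_mg(weight_kg: float) -> float:
    """Dose under the previous 3 mg/kg regimen."""
    return WEIGHT_BASED_MG_PER_KG * weight_kg


def flat_vs_weight_pct(weight_kg: float) -> float:
    """Percent difference of the flat dose relative to the weight-based dose."""
    old = weight_based_dose_mg(weight_kg)
    return (FLAT_DOSE_MG - old) / old * 100.0


if __name__ == "__main__":
    # Hypothetical body weights, not taken from the FDA statement.
    for kg in (60, 80, 100):
        print(f"{kg} kg: {weight_based_dose_mg(kg):.0f} mg weight-based; "
              f"flat dose differs by {flat_vs_weight_pct(kg):+.1f}%")
```

The two regimens deliver the same dose at 80 kg (3 mg/kg × 80 kg = 240 mg); lighter patients receive more under the flat dose and heavier patients less.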

WHO updates ranking of critically important antimicrobials

In light of increasing antibiotic resistance among pathogens, the World Health Organization has revised its global rankings of critically important antimicrobials used in human medicine, designating quinolones, third- and fourth-generation cephalosporins, macrolides and ketolides, and glycopeptides as among the highest-priority drugs in the world.

Peter C. Collignon, MBBS, of Canberra (Australia) Hospital, and his colleagues on the WHO Advisory Group on Integrated Surveillance of Antimicrobial Resistance created the rankings for use in developing risk management strategies related to antimicrobial use in food production animals. According to Dr. Collignon and his coauthors, the rankings are intended to help regulators and other stakeholders identify which types of antimicrobials used in animals present potentially higher risks to human populations and to inform how this use might be better managed (e.g., restriction to single-animal therapy or prohibition of mass treatment and extra-label use) to minimize the risk of transmission of resistance to the human population.

WHO studies previously suggested that antimicrobials which currently have no veterinary equivalent (for example, carbapenems) “as well as any new class of antimicrobial developed for human therapy should not be used in animals.” Dr. Collignon’s WHO Advisory Group followed two essential criteria to designate antimicrobials of utmost importance to human health in the new study: 1. antimicrobials that are the sole, or one of limited available therapies, to treat serious bacterial infections in people and 2. antimicrobials used to treat infections in people caused by either (a) bacteria that may be transmitted to humans from nonhuman sources or (b) bacteria that may acquire resistance genes from nonhuman sources.

The highest-priority, most critically important antimicrobials are those that meet the criteria listed above and are used in the greatest volume or highest frequency by humans. Another criterion for prioritization involves antimicrobial classes where evidence suggests that the “transmission of resistant bacteria or resistance genes from nonhuman sources is already occurring, or has occurred previously.” Quinolones, third- and fourth-generation cephalosporins, macrolides and ketolides, and glycopeptides were the only antimicrobials that met all criteria for prioritization.
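The decision logic described above can be sketched as a pair of boolean checks. This is a simplified reading of the criteria as summarized in this article, not the WHO Advisory Group's actual procedure. The field names and the example classes ("Class A", "Class B") are hypothetical placeholders, not real assessments of any drug class.

```python
from dataclasses import dataclass


@dataclass
class AntimicrobialClass:
    name: str
    sole_or_limited_therapy: bool   # criterion 1 as summarized in the text
    nonhuman_source_link: bool      # criterion 2 (a) or (b) as summarized
    high_human_use: bool            # prioritization: greatest volume/frequency
    transmission_evidence: bool     # prioritization: transmission already seen


def meets_both_criteria(c: AntimicrobialClass) -> bool:
    """True when both essential criteria are met."""
    return c.sole_or_limited_therapy and c.nonhuman_source_link


def is_highest_priority(c: AntimicrobialClass) -> bool:
    """True when both criteria and both prioritization factors are met."""
    return meets_both_criteria(c) and c.high_human_use and c.transmission_evidence


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    examples = [
        AntimicrobialClass("Class A", True, True, True, True),
        AntimicrobialClass("Class B", True, True, False, True),
    ]
    for c in examples:
        print(c.name, meets_both_criteria(c), is_highest_priority(c))
```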

“Antimicrobial resistance remains a threat to human health and drivers of resistance act in all sectors; human, animal, and the environment,” the WHO Advisory Group concluded. “Prioritizing the antimicrobials that are critically important for humans is a valuable and strategic risk-management tool and will be improved with the evidence-based approach which is currently underway.”

Read the full study in Clinical Infectious Diseases (doi: 10.1093/cid/ciw475).

FROM CLINICAL INFECTIOUS DISEASES

FDA rule will pull many consumer antibacterial soaps from market

Over-the-counter consumer antiseptic wash products with active ingredients such as triclosan and triclocarban will be pulled from the market, following a final rule issued Sept. 2 by the Food and Drug Administration.

Companies will no longer be able to sell antibacterial washes with those ingredients, the FDA said, because manufacturers failed to show that the ingredients are both safe for long-term daily use and more effective than plain soap and water at preventing illness and the spread of infections.

The final rule targets consumer antiseptic wash products containing 1 or more of 19 active ingredients, including the 2 most commonly used ingredients, triclosan and triclocarban. Companies have 1 year to comply with the new rule.

The FDA’s rule does not apply to hand sanitizers, wipes, or antibacterial products used in health care settings.

The agency has deferred for 1 year a decision on the continued use of three other ingredients in consumer wash products: benzalkonium chloride, benzethonium chloride, and chloroxylenol.

The FDA’s decision was driven in part by concerns about the risks posed by long-term exposure to such products, including bacterial resistance or hormonal effects.

“Consumers may think antibacterial washes are more effective at preventing the spread of germs, but we have no scientific evidence that they are any better than plain soap and water,” said Janet Woodcock, MD, director of the FDA’s Center for Drug Evaluation and Research, in a statement. “In fact, some data suggest that antibacterial ingredients may do more harm than good over the long term.”

Washing with plain soap and water remains one of the most important steps consumers can take to prevent illness and the spread of infection, the FDA advised. The agency also recommended use of alcohol-based hand sanitizer with at least 60% alcohol.

Read the full press release on the FDA website.

PCV impact less prominent in children with meningitis

The impact of 7-valent and 13-valent pneumococcal conjugate vaccines (PCV7/PCV13) in children younger than 5 years of age in Israel was less prominent in meningitis than in nonmeningitis invasive pneumococcal disease (nm-IPD), according to S. Ben-Shimol, MD, and associates.

Between July 2000 and June 2015, 4,168 IPD episodes were reported; 426 (10.2%) were meningitis. The PCV13 serotype (13VT) meningitis rates significantly declined by 93% (incidence rate ratio = 0.07), from 3.6 ± 1.3 in the pre-PCV period to 0.3 in the last year of the study. The 13VT nm-IPD rates also significantly declined, by 95% (IRR = 0.05), from 40.0 ± 5.4 in the pre-PCV period to 1.9. In contrast, the non-13VT meningitis rates significantly increased by 273% (IRR = 3.73), from 0.8 ± 0.3 in the pre-PCV period to 3.0, and the non-13VT nm-IPD rates significantly increased by 162% (IRR = 2.62), from 4.5 ± 0.8 in the pre-PCV period to 11.8.
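The percentage changes quoted above follow directly from the incidence rate ratios: a percent change is (IRR − 1) × 100. A minimal sketch, using only the four IRRs reported in the text (the function name and dictionary labels are illustrative):

```python
def percent_change_from_irr(irr: float) -> float:
    """Convert an incidence rate ratio into a percent change in rate."""
    return (irr - 1.0) * 100.0


if __name__ == "__main__":
    # IRRs as reported in the text.
    reported_irrs = {
        "13VT meningitis": 0.07,
        "13VT nm-IPD": 0.05,
        "non-13VT meningitis": 3.73,
        "non-13VT nm-IPD": 2.62,
    }
    for label, irr in reported_irrs.items():
        print(f"{label}: IRR {irr} -> {percent_change_from_irr(irr):+.0f}%")
```

A negative result corresponds to a decline (IRR below 1) and a positive result to an increase (IRR above 1).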

The researchers noted that the increase in non-13VT meningitis was partially driven by a sharp and significant increase in serotype 12F, along with the other predominant non-13VT serotypes that caused meningitis: 15B/C, 24F, and 27. These serotypes also were predominant in non-13VT nm-IPD, as were additional serotypes 8, 10A, 33F, 7B, and 10B.

“This finding may be attributed to the younger age of children with meningitis and differences in causative serotypes between the two groups, as the decline of the incidence of meningitis and nm-IPD caused by vaccine-serotypes is similar,” researchers concluded. “Continuous monitoring of meningitis and nm-IPD is warranted.”

Find the full study in Vaccine (doi: 10.1016/j.vaccine.2016.07.038).

FROM VACCINE

Physical/functional limitations top risk factor for late-life depression

New findings show there are several major risk factors that influence late-life depression (LLD), with physical/functional limitations the most prevalent, according to Shun-Chiao Chang, ScD, of Brigham and Women’s Hospital, Boston, and her associates.

They examined 21,728 women older than 65 years who had no prior depression. During a 10-year follow-up, 3,945 incident LLD cases were identified. Social factors and lifestyle/behavioral factors did affect LLD, but the categories with the largest effect magnitudes for higher LLD risk were severe/very severe bodily pain (hazard ratio, 2.22; 95% confidence interval, 1.88-2.62), difficulty sleeping most/all the time (HR, 2.04; 95% CI, 1.77-2.36), and daily sleep of 10 hours or more (HR, 1.96; 95% CI, 1.56-2.46). The most prevalent risk factor, physical/functional limitations, was associated with a 42% increase in risk.

Sleep difficulty some to all of the time, no/very little exercise, and moderate to very severe bodily pain also were factors, with population attributable fraction (PAF) values of 10% or higher. The factor with the largest PAF was physical/functional limitation (26.4%).
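For readers unfamiliar with the population attributable fraction, the sketch below uses Levin's textbook formula, PAF = p(RR − 1) / (1 + p(RR − 1)), where p is the prevalence of the risk factor. This is an assumption for illustration: the article does not say how the study computed its PAF values, and adjusted estimates generally differ from this unadjusted form. The prevalence used here is hypothetical, and the hazard ratio of 1.42 (the 42% increase in risk from the text) stands in for a relative risk.

```python
def levin_paf(prevalence: float, relative_risk: float) -> float:
    """Unadjusted population attributable fraction (Levin's formula)."""
    if not 0.0 <= prevalence <= 1.0:
        raise ValueError("prevalence must be between 0 and 1")
    if relative_risk <= 0.0:
        raise ValueError("relative_risk must be positive")
    excess = prevalence * (relative_risk - 1.0)
    return excess / (1.0 + excess)


if __name__ == "__main__":
    hypothetical_prevalence = 0.5  # NOT from the study; illustration only
    hr_as_rr = 1.42                # 42% higher risk, from the text
    paf = levin_paf(hypothetical_prevalence, hr_as_rr)
    print(f"PAF = {paf:.1%}")
```

The formula shows why a modest relative risk can still yield the largest PAF: the fraction grows with how common the risk factor is, not only with how strongly it raises risk.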

Overall, the behavioral factors appeared to contribute relatively equally to LLD among women with and without physical/functional limitations; however, health factors had much bigger contributions to risk among women with limitations.

“Together, model predictors accounted for almost 60% of all new LLD cases in this population, and physical/functional limitation is the largest single contributor to total risk,” the researchers concluded. “A substantial proportion of LLD cases may be preventable by increasing exercise and intervening or preventing sleep difficulties and pain.”

Find the full study in Preventive Medicine (doi: 10.1016/j.ypmed.2016.08.014).

FROM PREVENTIVE MEDICINE

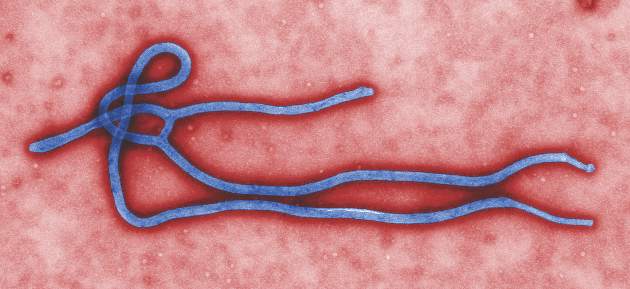

Mass administration of malaria drugs may cut morbidity during Ebola outbreaks

Mass administration of malaria chemoprevention during Ebola virus disease outbreaks may reduce cases of fever, according to a study published in PLOS ONE.

During the October-December 2014 Ebola virus disease (EVD) outbreak in Liberia, health care services were limited, negatively impacting malaria treatment. Hoping to reduce malaria-associated morbidity, investigators targeted four neighborhoods in Monrovia, Liberia – with a total population of 551,971 – for a mass drug administration (MDA) of malaria chemoprevention. MDA participants were divided into two treatment rounds, with 102,372 households verified as receiving treatment with the drug combination artesunate/amodiaquine by community leaders and a malaria committee in round 1, and 103,497 households verified in round 2.

The incidence of self-reported fever episodes declined significantly, from 4.2% in the month prior to round 1 to 1.5% after round 1 (P < .0001). Self-reported fever incidence decreased from 6.9% to 1.1% in children younger than 5 years of age and from 3.8% to 1.6% in older household members after round 1 of the MDA.

The researchers also found that self-reported fever incidence was 4.9 percentage points lower after round 1 among household members who took a full course of artesunate/amodiaquine malaria chemoprevention (ASAQ-CP) but only 0.6 percentage points lower among household members who did not start or did not complete a full course of ASAQ-CP. Reported incidence of fever thus declined in both groups, although the risk difference (RD) was significantly larger in the group that took the full ASAQ-CP course (P < .001).
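To make the risk-difference terminology concrete, the sketch below computes the absolute difference (in percentage points) and the relative reduction from the before-and-after fever incidences reported above. These are crude differences derived from the summary percentages in this article; they are not the study's own estimates by adherence group (4.9 and 0.6), and the function names are illustrative.

```python
def risk_difference_pp(before_pct: float, after_pct: float) -> float:
    """Absolute risk difference in percentage points."""
    return before_pct - after_pct


def relative_reduction_pct(before_pct: float, after_pct: float) -> float:
    """Relative reduction as a percent of the baseline incidence."""
    return (before_pct - after_pct) / before_pct * 100.0


if __name__ == "__main__":
    # (before, after) self-reported fever incidence in %, from the text.
    reported = {
        "all household members": (4.2, 1.5),
        "children under 5": (6.9, 1.1),
        "older household members": (3.8, 1.6),
    }
    for group, (before, after) in reported.items():
        rd = risk_difference_pp(before, after)
        rr = relative_reduction_pct(before, after)
        print(f"{group}: RD {rd:.1f} points, relative reduction {rr:.1f}%")
```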

“Despite high acceptance and coverage of the MDA and the small impact of side effects, initiation of malaria chemoprevention was low, possibly due to health messaging and behavior in the pre-Ebola outbreak period and the ongoing lack of health care services,” researchers concluded. “Combining MDAs during Ebola outbreaks with longer-term interventions to prevent malaria and to improve access to health care might reduce the proportion of respondents saving their treatment for future malaria episodes.”

Read the full study in PLOS ONE (doi: 10.1371/journal.pone.0161311).

FROM PLOS ONE

Removal from play reduces concussion recovery time in athletes

Sport-related concussion (SRC) recovery time can be reduced if athletes are removed from game participation, according to R.J. Elbin, PhD, of the University of Arkansas, Fayetteville, and his associates.

In the prospective study, 95 athletes sought care for an SRC at a concussion specialty clinic between Sept. 1 and Dec. 1, 2014. The athletes were divided into two groups: those who continued to play after experiencing signs and symptoms of an SRC and those who were immediately removed from play. The played group took longer to recover (44 days) than did the removed group (22 days) (P = .003).

Post hoc analyses revealed that the played group demonstrated significantly worse verbal and visual memory, processing speed, and reaction time, and higher symptoms (all P ≤ .001), compared with the removed group at 1-7 days. From 8 to 30 days post injury, the played group demonstrated worse verbal memory (P = .009), visual memory (P ≤ .001), processing speed (P = .001), and greater symptoms (P = .001), compared with the removed group.

The study also showed that athletes in the played group were 8.80 times more likely to experience a protracted recovery (21 days or longer), compared with athletes in the removed group (P < .001). Athletes participated in a variety of sports including football, soccer, ice hockey, volleyball, field hockey, rugby, basketball, and wrestling.

“This study is the first to show that athletes who continue to play with an SRC experience a longer recovery and more time away from the sport,” researchers concluded. “These findings should be incorporated into SRC education and awareness programs for athletes, coaches, parents, and medical professionals.”

Find the full study in Pediatrics (doi: 10.1542/peds.2016-0910).

FROM PEDIATRICS

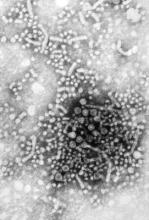

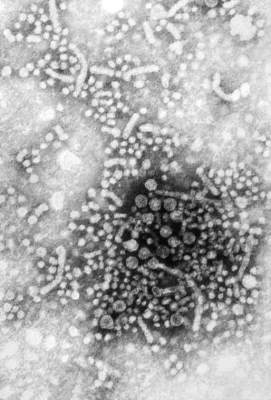

Patients with HBV inadequately monitored for disease activity

Chronic hepatitis B virus patients are insufficiently monitored for disease activity and hepatocellular carcinoma (HCC), according to Philip R. Spradling, MD, and his associates of the Centers for Disease Control and Prevention.

In a cohort study of 2,992 patients with chronic hepatitis B (CHB), 2,338 were included in the assessment. Researchers examined alanine aminotransferase (ALT) monitoring, HBV DNA monitoring, assessment for cirrhosis, and HBV antiviral therapy. For ALT monitoring, 1,814 patients (78%) had at least one ALT level obtained per year of follow-up. Only 876 patients (37%) had at least one HBV DNA level assessment per year of follow-up, 1,037 (44%) had less than annual testing, and 18% never had an HBV DNA level assessed. Among the 547 patients with cirrhosis, 297 (54%) had HBV DNA testing done at least annually, 189 (35%) had testing done but less frequently than annually, and 61 (11%) never had an HBV DNA test done; 305 (56%) were prescribed HBV antiviral therapy.

Patients were monitored during 2006-2013. Overall, 68% of patients had not been prescribed treatment, and 72% had received liver-related specialty care.

“Our findings reiterate the need for clinicians who treat patients with [chronic HBV] to provide ongoing, continual assessment of disease activity based on HBV DNA and ALT levels, as well as liver imaging surveillance among patients at high risk for HCC,” researchers concluded. “As antiviral therapy for [chronic HBV] now includes potent and highly efficacious oral agents that have few contraindications and minimal side effects, as well as a high barrier to resistance, clinicians should be vigilant for opportunities to decrease the likelihood of poor clinical outcomes.”

Read the full study in Clinical Infectious Diseases.

FROM CLINICAL INFECTIOUS DISEASES

Portable device may underestimate FEV1 in children

The PiKo-1 device (nSpire Health) has limited utility in determining forced expiratory volume in 1 second (FEV1) in children with asthma, according to Jonathan M. Gaffin, MD, and his associates.

In a study of 242 children, spirometry and PiKo-1 devices were used to test FEV1. The Bland-Altman analysis showed a mean difference of 0.14 L between FEV1 measured by spirometry and by PiKo-1. The PiKo-1 was found to be moderately biased to underestimate FEV1 with increasing volumes: for every 1-L increase in spirometry FEV1, the difference between spirometry and PiKo-1 increased by 0.19 L (P < .001).
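For readers unfamiliar with the method, a Bland-Altman analysis can be sketched in a few lines. The example below is a minimal illustration, not the investigators' code. The function name `bland_altman` and the paired readings are hypothetical, and the sketch assumes the proportional bias is estimated as the ordinary least-squares slope of the difference on the reference (spirometry) value.

```python
from statistics import mean, stdev

def bland_altman(reference, device):
    """Return (bias, lower_limit, upper_limit, slope) for paired measurements.

    bias  : mean of (reference - device)
    limits: bias +/- 1.96 SD of the differences (95% limits of agreement)
    slope : least-squares slope of the difference on the reference value,
            i.e. how much the disagreement grows per unit of the reference
    """
    if len(reference) != len(device) or len(reference) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [r - d for r, d in zip(reference, device)]
    bias = mean(diffs)
    sd = stdev(diffs)
    ref_mean = mean(reference)
    sxx = sum((r - ref_mean) ** 2 for r in reference)
    if sxx == 0:
        raise ValueError("reference values must not all be identical")
    sxy = sum((r - ref_mean) * (d - bias) for r, d in zip(reference, diffs))
    return bias, bias - 1.96 * sd, bias + 1.96 * sd, sxy / sxx

# Hypothetical paired FEV1 readings in liters (illustrative only, not study data).
spirometry = [1.0, 2.0, 3.0, 4.0]
portable = [0.9, 1.7, 2.5, 3.3]

bias, lower, upper, slope = bland_altman(spirometry, portable)
print(f"bias {bias:.2f} L, limits of agreement {lower:.2f} to {upper:.2f} L, slope {slope:.2f}")
```

A positive slope in this setup means the portable device falls further below the reference as the true volume rises, which is the pattern the study describes.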

Researchers also examined pulmonary function test (PFT) variability, which was 0.4 L for spirometry at 2 SDs, a significantly smaller range than that seen in the PFT-PiKo confidence intervals (1.1 L). This indicates that the differences stem from the devices themselves and not from the technique of the person using them. The order in which PFT and PiKo-1 were performed had no effect (P = .88).

“The findings from this study suggest that the PiKo-1 device has limited utility in assessing FEV1 in clinical or research settings in children with asthma,” researchers concluded. “Further investigation of its use in this respect and with different populations may prove the device more valuable.”

Find the full study in the Annals of Allergy, Asthma and Immunology (doi: 10.1016/j.anai.2016.06.022).

FROM THE ANNALS OF ALLERGY, ASTHMA & IMMUNOLOGY

Postop delirium linked to greater long-term cognitive decline

Patients with postoperative delirium have significantly worse preoperative short-term cognitive performance and significantly greater long-term cognitive decline, compared with patients without delirium, according to Sharon K. Inouye, MD, and her associates.

In a prospective cohort study of 560 patients aged 70 years and older, 134 patients were selected for the delirium group and 426 for the nondelirium group. The delirium group had a significantly greater decline (–1.03 points) at 1 month, compared with those without delirium (P = .003). After cognitive function had recovered at 2 months, there were no significant differences between groups (P = .99). After 2 months, both groups declined on average; however, the delirium group declined significantly more (–1.07) in adjusted mean scores at 36 months (P = .02).

From baseline to 36 months, there was a significant change for the delirium group (–1.30, P < .01) and no significant change for the group without delirium (–0.23, P = .30). Researchers noted that the effect of delirium remained undiminished after consecutive rehospitalizations, intercurrent illnesses, and major postoperative complications were controlled for.

The patients underwent major noncardiac surgery, such as total hip or knee replacement, open abdominal aortic aneurysm repair, colectomy, and lower-extremity arterial bypass.

“This study provides a novel presentation of the biphasic relationship of delirium and cognitive trajectory, both its well-recognized acute effects but also long-term effects,” the researchers wrote. “Our results suggest that after a period of initial recovery, patients with delirium experience a substantially accelerated trajectory of cognitive aging.”

Read the full study in Alzheimer’s & Dementia: The Journal of the Alzheimer’s Association (doi: 10.1016/j.jalz.2016.03.005).

FROM ALZHEIMER’S & DEMENTIA