
Quality of care of hospitalized infective endocarditis patients: Report from a tertiary medical center

Infective endocarditis (IE) affected an estimated 46,800 Americans in 2011, and its incidence is increasing because of the growing number of invasive procedures and the rising prevalence of IE risk factors.1-3 Despite recent advances in the treatment of IE, morbidity and mortality remain high: in-hospital mortality in IE patients is 15% to 20%, and the 1-year mortality rate is approximately 40%.2,4,5

Poor IE outcomes may reflect difficulty in diagnosing IE and in identifying optimal treatment. The American Heart Association (AHA), the American College of Cardiology (ACC), and the European Society of Cardiology (ESC) have published guidelines to address these challenges. Recent guidelines recommend a multidisciplinary approach that includes cardiology, cardiac surgery, and infectious disease (ID) specialty involvement in decision-making.5,6

In the absence of published quality measures for IE management, guidelines can be used to evaluate the quality of IE care. Prior studies have shown poor concordance with guideline recommendations but did not examine agreement with more recently published guidelines.7,8 Furthermore, few studies have examined the management, outcomes, and quality of care of IE patients. We therefore aimed to describe a modern cohort of patients with IE admitted to a tertiary medical center over a 4-year period and, in particular, to assess the quality of care they received, as measured by concordance with AHA/ACC guidelines, in order to identify gaps in care and spur quality improvement (QI) efforts.

METHODS

Design and Study Population

We conducted a retrospective cohort study of adult IE patients admitted to Baystate Medical Center (BMC), a 716-bed tertiary academic center that serves a population of 800,000 people throughout western New England. We used International Classification of Diseases, Ninth Revision (ICD-9) codes (421.0, 421.1, 421.9, 424.9, 424.90, and 424.91) to identify patients discharged with a principal or secondary diagnosis of IE between 2007 and 2011. Three co-authors confirmed the diagnosis by reviewing the electronic health records.

We included only patients who met the modified Duke criteria for definite or possible IE.5 Definite IE was defined by pathological criteria (microorganisms demonstrated by culture or histologic examination, or histologic examination showing active endocarditis) or by clinical criteria: 2 major criteria (positive blood cultures and evidence of endocardial involvement on echocardiography), 1 major criterion and 3 minor criteria, or 5 minor criteria. Minor criteria were predisposing heart conditions or intravenous drug (IVD) use, fever, vascular phenomena, immunologic phenomena, and microbiologic evidence that does not meet a major criterion. Possible IE was defined as 1 major and 1 minor criterion or 3 minor criteria.5
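The case definitions above reduce to a small decision rule. The sketch below is illustrative only: it assumes each patient's findings were already abstracted into a pathological-criteria flag and counts of major and minor criteria, and the `DukeFindings` and `classify_duke` names are hypothetical rather than part of the study's abstraction form. It does not model the rejection criteria of the full modified Duke scheme (such as a firm alternative diagnosis).

```python
from dataclasses import dataclass


@dataclass
class DukeFindings:
    """Abstracted findings for one patient (hypothetical structure)."""
    pathological: bool  # organisms or active endocarditis on culture/histology
    major: int          # number of major criteria met (0-2)
    minor: int          # number of minor criteria met (0-5)


def classify_duke(f: DukeFindings) -> str:
    """Return 'definite', 'possible', or 'excluded' using the rules in the text."""
    if f.pathological:
        return "definite"
    # Clinical definite IE: 2 major, 1 major + 3 minor, or 5 minor criteria.
    if f.major >= 2 or (f.major == 1 and f.minor >= 3) or f.minor >= 5:
        return "definite"
    # Possible IE: 1 major + 1 minor, or 3 minor criteria.
    if (f.major == 1 and f.minor >= 1) or f.minor >= 3:
        return "possible"
    # Neither definite nor possible: not eligible for this cohort.
    return "excluded"


print(classify_duke(DukeFindings(pathological=False, major=1, minor=1)))  # possible
```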

Data Collection

We used billing and clinical databases to collect demographics, comorbidities, antibiotic treatment, 6-month readmission, and 1-year mortality. Comorbid conditions were classified into Elixhauser comorbidities using software provided by the Healthcare Cost and Utilization Project of the Agency for Healthcare Research and Quality.9,10

We obtained all other data through electronic health record abstraction. These included microbiology, type of endocarditis (native valve endocarditis [NVE] or prosthetic valve endocarditis [PVE]), echocardiographic location of the vegetation, and complications involving the valve (eg, valve perforation, ruptured chorda, perivalvular abscess, or valvular insufficiency).

Using the 2006 AHA/ACC guidelines,11 we identified quality metrics, including the presence of at least 2 sets of blood cultures before the start of antibiotics and the use of transthoracic echocardiography (TTE) and transesophageal echocardiography (TEE). The guidelines recommend TTE as the first-line test to detect valvular vegetations and assess IE complications, and TEE when TTE is nondiagnostic and as the first-line test to diagnose PVE. We also assessed the rate of consultation with ID, cardiology, and cardiac surgery. Although these consultations were not explicitly emphasized in the 2006 guidelines, the 2014 AHA/ACC guidelines5 include a class I recommendation to manage IE patients in consultation with all 3 specialties.
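One way to operationalize the first quality metric is to compare culture and antibiotic timestamps for each patient. This is a sketch under assumptions: the article does not describe how timing was abstracted, and the function names and record layout (a list of culture draw times plus the time of the first antibiotic dose) are hypothetical.

```python
from datetime import datetime


def cultures_before_antibiotics(culture_times: list[datetime],
                                first_antibiotic: datetime,
                                required: int = 2) -> bool:
    """True if at least `required` blood-culture sets were drawn before the first antibiotic dose."""
    drawn_before = [t for t in culture_times if t < first_antibiotic]
    return len(drawn_before) >= required


def concordance_rate(records: list[tuple[list[datetime], datetime]]) -> float:
    """Share of patients meeting the metric; each record is (culture_times, first_antibiotic)."""
    met = sum(cultures_before_antibiotics(cultures, abx) for cultures, abx in records)
    return met / len(records)


# Hypothetical example: the second patient has only 1 culture set drawn before antibiotics.
records = [
    ([datetime(2010, 3, 1, 8, 0), datetime(2010, 3, 1, 8, 30)], datetime(2010, 3, 1, 9, 0)),
    ([datetime(2010, 3, 2, 8, 0), datetime(2010, 3, 2, 11, 0)], datetime(2010, 3, 2, 9, 0)),
]
print(concordance_rate(records))  # 0.5
```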

We recorded the number of patients with intracardiac leads (pacemaker or defibrillator) who had documentation of lead removal. Complete removal of intracardiac leads is indicated in IE patients with infection of the leads or device (class I) and is suggested for IE caused by Staphylococcus aureus or fungi, even without evidence of device or lead infection, and for patients undergoing valve surgery (class IIa).5 We entered abstracted data elements into a REDCap database hosted by the Tufts Clinical and Translational Science Institute.12
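The lead-management recommendations above can likewise be encoded as a rule. In this sketch the boolean inputs and the `lead_removal_class` name are hypothetical, and only the 3 situations named in the text are covered.

```python
from enum import Enum


class LeadRemoval(Enum):
    CLASS_I = "indicated (class I)"
    CLASS_IIA = "suggested (class IIa)"
    NOT_LISTED = "no indication among those listed"


def lead_removal_class(lead_or_device_infected: bool,
                       s_aureus_or_fungal: bool,
                       undergoing_valve_surgery: bool) -> LeadRemoval:
    """Strongest lead-removal recommendation that applies to a patient with intracardiac leads."""
    if lead_or_device_infected:
        return LeadRemoval.CLASS_I
    # Applies even without evidence of device or lead infection.
    if s_aureus_or_fungal or undergoing_valve_surgery:
        return LeadRemoval.CLASS_IIA
    return LeadRemoval.NOT_LISTED


print(lead_removal_class(False, True, False).value)  # suggested (class IIa)
```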

Outcomes

Outcomes included embolic events, stroke, need for cardiac surgery, length of stay (LOS), in-hospital mortality, 6-month readmission, and 1-year mortality. We identified embolic events from documented clinical or imaging evidence of embolism to the cerebral, coronary, peripheral arterial, renal, splenic, or pulmonary vasculature. We used record abstraction to identify valve surgery; nearly all BMC patients who require surgery undergo it on site. We compared outcomes between patients who received all 3 consultations (ID, cardiology, and cardiac surgery) and those who received fewer than 3. We also compared outcomes among patients who received 0, 1, 2, or 3 consultations to look for a trend.
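The consultation groupings above can be derived from each patient's list of consulting services. The sketch assumes consults are recorded as service names; the labels and function names are hypothetical, and services other than the 3 of interest are ignored.

```python
from collections import Counter

TARGET_SPECIALTIES = frozenset({"ID", "cardiology", "cardiac surgery"})


def consult_count(services: list[str]) -> int:
    """Number of distinct target specialties consulted (0-3); repeats and other services are ignored."""
    return len(TARGET_SPECIALTIES.intersection(services))


def consult_groups(patients: list[list[str]]) -> tuple[Counter, int, int]:
    """Return (patients per consult count, patients with all 3, patients with fewer than 3)."""
    per_count = Counter(consult_count(services) for services in patients)
    all_three = per_count[3]
    fewer_than_three = len(patients) - all_three
    return per_count, all_three, fewer_than_three


patients = [["ID"], ["ID", "cardiology", "cardiac surgery"], [], ["cardiology", "ID", "ID"], ["nephrology"]]
per_count, all_three, fewer = consult_groups(patients)
print(sorted(per_count.items()), all_three, fewer)  # [(0, 2), (1, 1), (2, 1), (3, 1)] 1 4
```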

Statistical Analysis

We divided the cohort into patients with NVE and PVE because these groups differ in pathophysiology, treatment, and outcomes. We calculated descriptive statistics, including means with standard deviations (SD) and counts with percentages (n [%]). Univariable analyses used Fisher exact tests for categorical variables, unpaired t tests for normally distributed continuous variables, and the Kruskal-Wallis equality-of-populations rank test for non-normally distributed variables. Common language effect sizes were also calculated to quantify group differences independent of sample size.13,14 Analyses were performed using Stata 14.1 (StataCorp LLC, College Station, Texas). The BMC Institutional Review Board approved the protocol.
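The analyses were run in Stata; as an illustration only, the sketch below computes two of the named quantities from their textbook definitions. The function names are hypothetical and this is not the study's analysis code. The Fisher function uses exact fractions to avoid floating-point ties, and the common language effect size uses the normal-theory form described by McGraw and Wong13; the article does not state which variant was applied to each variable.

```python
from fractions import Fraction
from math import comb, sqrt
from statistics import NormalDist


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> Fraction:
    """Two-sided Fisher exact P for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the observed
    margins that is no more likely than the observed table.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    total = comb(n, col1)

    def prob(k: int) -> Fraction:
        # Probability that the top-left cell equals k, given fixed margins.
        return Fraction(comb(row1, k) * comb(n - row1, col1 - k), total)

    observed = prob(a)
    lo, hi = max(0, col1 - (n - row1)), min(row1, col1)
    return sum((p for p in map(prob, range(lo, hi + 1)) if p <= observed), Fraction(0))


def common_language_es(mean1: float, sd1: float, mean2: float, sd2: float) -> float:
    """McGraw-Wong CL: probability a random value from group 1 exceeds one from group 2 (normality assumed)."""
    return NormalDist().cdf((mean1 - mean2) / sqrt(sd1 ** 2 + sd2 ** 2))


print(fisher_exact_two_sided(3, 1, 1, 3))        # 17/35
print(round(common_language_es(5, 3, 0, 4), 3))  # 0.841
```

In practice a library routine such as SciPy's `scipy.stats.fisher_exact` would normally be used; writing it out shows what the two-sided P value sums over.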

RESULTS

We identified a total of 317 hospitalizations at BMC with a qualifying IE discharge diagnosis code. Of these, 147 were readmissions or did not meet the clinical criteria for definite or possible IE, leaving 170 patients in the final analysis. Definite IE was present in 135 (79.4%) and possible IE in 35 (20.6%) patients.

Patient Characteristics

Of 170 patients, 127 (74.7%) had NVE and 43 (25.3%) had PVE. Mean ± SD age was 60.0 ± 17.9 years, 66.5% (n = 113) of patients were male, and 79.4% (n = 135) were white (Table 1). Hypertension and chronic kidney disease were the most common comorbidities. The median Gagne score15 was 4, corresponding to a 1-year mortality risk of 15%. Predisposing factors for IE included a previous history of IE (n = 14, 8.2%), IVD use (n = 23, 13.5%), and long-term venous catheters (n = 19, 11.2%). Intracardiac leads were present in 17.1% (n = 29) of patients. Bicuspid aortic valve was reported in 11 patients with NVE (6.5% of the cohort). Patients with PVE were older (mean difference, 11.5 years; 95% confidence interval [CI], 5.5-17.5) and more likely to have intracardiac leads (44.2% vs. 7.9%; P < 0.001; Table 1).

Microbiology and Antibiotics

Staphylococcus aureus was isolated in 40.0% of patients (methicillin-sensitive: 21.2%, n = 36; methicillin-resistant: 18.8%, n = 32), and vancomycin was the most common initial antibiotic (88.2%, n = 150). Nearly half of patients (44.7%, n = 76) received gentamicin as part of their initial antibiotic regimen. Appendix 1 provides the final blood culture results, prosthetic versus native valve IE, and antimicrobial agents for each patient. Gentamicin was included in the initial regimen more often in PVE than in NVE patients (58.1% vs. 40.2%), although the difference did not reach statistical significance (P = 0.051; Table 1).

Echocardiography and Affected Valves

Per the study inclusion criteria, all patients underwent echocardiography (TTE, TEE, or both). Among the 143 patients in whom the infected valve could be localized, the mitral valve was most commonly involved (41.3%, n = 59), followed by the aortic valve (28.7%, n = 41). Patients in whom the location of the infected valve could not be determined (15.9%, n = 27) had echocardiographic features of intracardiac device infection or an intracardiac mass (Table 1).

Quality of Care

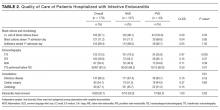

Nearly all patients (n = 165, 97.1%) had at least 2 sets of blood cultures drawn, most on the first day of admission (71.2%). The vast majority (n = 152, 89.4%) also received their first dose of antibiotics on the day of admission. Ten patients (5.9%) received no consults, and 160 (94.1%) received at least 1. An ID consultation was obtained for most patients (147, 86.5%), cardiac surgery consultation for about half (92, 54.1%), and cardiology consultation for nearly half (80, 47.1%). One-third (53, 31.2%) received neither a cardiology nor a cardiac surgery consult, two-thirds (117, 68.8%) received at least one of the two, and one-third (55, 32.4%) received both.
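The cardiology and cardiac surgery counts in this paragraph are internally consistent, which can be checked with the inclusion-exclusion principle, writing C for patients with a cardiology consult and S for those with a cardiac surgery consult:

```latex
\begin{aligned}
|C \cup S| &= |C| + |S| - |C \cap S| = 80 + 92 - 55 = 117,\\
170 - |C \cup S| &= 170 - 117 = 53.
\end{aligned}
```

These match the 117 patients with at least one of the two consults and the 53 with neither.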

Of the 29 patients with an intracardiac lead, 6 had documentation of device removal during the index hospitalization: 5 of 10 (50.0%) patients with NVE and 1 of 19 (5.3%) patients with PVE (P = 0.02; Table 2).

Outcomes

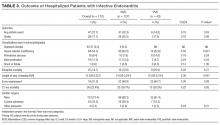

Evidence of any embolic event was seen in 27.7% (n = 47) of patients, including stroke in 17.1% (n = 29). Median LOS for all patients was 13.5 days, and 6-month readmission among patients who survived their index admission was 51.0% (n = 74/145; 95% CI, 45.9%-62.7%). In-hospital mortality was 14.7% (n = 25; 95% CI, 10.1%-20.9%), and 12-month mortality was 22.4% (n = 38; 95% CI, 16.7%-29.3%). In-hospital mortality was higher among patients with PVE than NVE (20.9% vs. 12.6%), although the difference was not statistically significant (P = 0.21). Complications were more common in NVE than PVE (any embolic event: 32.3% vs. 14.0%, P = 0.03; stroke: 20.5% vs. 7.0%, P = 0.06; Table 3).
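The Methods do not say how the 95% CIs for proportions were obtained. One common approach, assumed here, is a Wald interval computed on the log-odds (logit) scale and then back-transformed, which keeps both limits between 0 and 1. For in-hospital mortality (25 of 170), this assumed method gives an interval that agrees with the reported one to one decimal place; the function names are hypothetical.

```python
from math import exp, log, sqrt
from statistics import NormalDist


def expit(x: float) -> float:
    """Inverse of the logit transform."""
    return 1 / (1 + exp(-x))


def logit_ci(events: int, n: int, level: float = 0.95) -> tuple[float, float]:
    """Wald interval on the log-odds scale, back-transformed; requires 0 < events < n."""
    p = events / n
    z = NormalDist().inv_cdf(0.5 + level / 2)
    se_logit = 1 / sqrt(n * p * (1 - p))  # delta-method SE of log(p / (1 - p))
    centre = log(p / (1 - p))
    return expit(centre - z * se_logit), expit(centre + z * se_logit)


lo, hi = logit_ci(25, 170)
print(f"{25 / 170:.1%} (95% CI {lo:.1%}-{hi:.1%})")  # 14.7% (95% CI 10.1%-20.9%)
```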

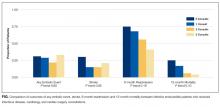

Although there was a trend toward lower 6-month readmission and 12-month mortality as the number of specialties consulted (ID, cardiology, and cardiac surgery) increased, the differences were not statistically significant (Figure). In addition, comparisons of embolic events, stroke, 6-month readmission, and 12-month mortality between patients who received all 3 consults (28.8%, n = 49) and those who received fewer than 3 (71.2%, n = 121) showed no statistically significant differences.

Of the 92 patients who received a cardiac surgery consult, 73 had NVE and 19 had PVE. Of these, 47 underwent valve surgery: 39 of 73 (53.4%) with NVE and 8 of 19 (42.1%) with PVE. Most NVE patients (73.2%) had more than 1 indication for surgery. The most common indications for surgery among NVE patients were significant valvular dysfunction resulting in heart failure (65.9%), followed by mobile vegetation (56.1%) and recurrent embolic events (26.8%). The most common indication for surgery in PVE was persistent bacteremia or recurrent embolic events (75.0%).

DISCUSSION

In this study, we described the management, quality of care, and outcomes of IE patients at a tertiary medical center. We found that most hospitalized patients with IE were older white men with comorbidities and IE risk factors. The complication rate was high (27.7% had embolic events), and the in-hospital mortality rate (14.7%) was slightly below the 15% to 20% range reported in prior studies.5 Nearly one-third of patients (n = 47, 27.7%) underwent valve surgery. Quality of care was generally good, with most patients receiving early blood cultures, echocardiograms, early antibiotics, and ID consultation. We also identified important gaps in care, including failure to consult cardiac surgery in nearly half of patients and failure to consult cardiology in more than half.

Our findings support work suggesting that IE is no longer primarily a chronic or subacute disease of younger patients with IVD use, human immunodeficiency virus infection, or bicuspid aortic valves.1,4,16,17 The International Collaboration on Endocarditis-Prospective Cohort Study,4 a multinational prospective cohort study (2000-2005) of 2781 adults with IE, reported a higher prevalence of diabetes, hemodialysis, IVD use, long-term venous catheters, and intracardiac leads than we found. Nevertheless, both studies suggest that the demographics of IE are changing. This may partially explain why IE mortality has not improved in recent years:2,3 older patients with a higher comorbidity burden may not be considered good surgical candidates.

This study is among the first to report on concordance with IE guidelines in a cohort of U.S. patients. Our findings suggest that most patients received timely blood cultures, same-day empiric antibiotics, and ID consultation, similar to findings from European studies.7,18 Guideline concordance could be improved in some areas, particularly documentation of the management plan for intracardiac leads: only 6 of 29 patients with intracardiac leads had documentation of lead removal during the index hospitalization.

The 2014 AHA/ACC guidelines5 and the ESC guidelines6 emphasize the importance of multidisciplinary management of IE. As part of the Heart Valve Team at BMC, cardiologists provide expertise in the diagnosis, imaging, and clinical management of IE, and cardiac surgeons advise on whether to pursue surgery and on its optimal timing. Early discussion with the surgical team is considered mandatory in all complicated cases of IE.6,18 Infectious disease consultation has been shown to improve the rate of IE diagnosis and reduce the 6-month relapse rate,19 and to improve outcomes in patients with S aureus bacteremia.20 In our study, 86.5% of patients had documentation of an ID consultation; cardiac surgery consultation was obtained in 54.1% and cardiology consultation in 47.1% of patients.

We observed a trend toward lower rates of 6-month readmission and 12-month mortality among patients who received all 3 consults (Figure), even though rates of embolic events and stroke were higher in patients with 3 consults than in those with fewer than 3. The lack of confounder adjustment and limited statistical power restrict our ability to draw inferences about this association, but the finding generates hypotheses for future work. Our patients were cared for before the 2014 guidelines were published, which may partly explain why multidisciplinary management of IE involving cardiology, cardiac surgery, and ID physicians was observed in fewer than one-third of patients (28.8%). However, 117 (68.8%) patients received a cardiology or cardiac surgery consult. Some physicians may have considered it unnecessary to involve both cardiology and cardiac surgery and therefore consulted only one of the two specialties. We will focus future QI efforts at our institution on educating physicians about the benefits of multidisciplinary care and the importance of fully implementing the 2014 AHA/ACC guidelines.

Our findings on quality of care should be placed in the context of 2 studies by González de Molina et al8 and Delahaye et al.7 These studies described considerable discordance between guideline recommendations and real-world IE care. However, they were performed more than a decade ago, before current recommendations to consult cardiology and cardiac surgery were published.

In the 2014 AHA/ACC guidelines, surgery before completion of antibiotic therapy is indicated in patients with valve dysfunction resulting in heart failure; left-sided IE caused by highly resistant organisms (including fungi or S aureus); IE complicated by heart block, aortic abscess, or penetrating lesions; or persistent infection (bacteremia or fever lasting longer than 5 to 7 days) after the onset of appropriate antimicrobial therapy. In addition, there is a Class IIa indication for early surgery in patients with recurrent emboli and persistent vegetations despite appropriate antibiotic therapy, and a Class IIb indication for early surgery in patients with NVE and a mobile vegetation greater than 10 mm in length. Surgery is also recommended for patients with PVE and relapsing infection.
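Because patients often met more than one of these criteria, it can help to record every applicable indication rather than a single one. The sketch below does that; the field names and short labels are hypothetical paraphrases of this paragraph rather than guideline text, and it is a simplified illustration, not a substitute for the full guideline.

```python
from dataclasses import dataclass, fields


@dataclass
class SurgeryFindings:
    """Hypothetical abstraction fields; all default to absent."""
    hf_from_valve_dysfunction: bool = False
    left_sided_resistant_organism: bool = False  # includes fungi or S aureus
    heart_block_abscess_or_penetrating_lesion: bool = False
    persistent_infection_5_to_7_days: bool = False  # bacteremia or fever despite therapy
    pve_relapsing_infection: bool = False
    recurrent_emboli_persistent_vegetation: bool = False
    nve_mobile_vegetation_over_10mm: bool = False


# Short labels paraphrasing the paragraph above, keyed by field name.
LABELS = {
    "hf_from_valve_dysfunction": "indicated: valve dysfunction with heart failure",
    "left_sided_resistant_organism": "indicated: left-sided IE, highly resistant organism",
    "heart_block_abscess_or_penetrating_lesion": "indicated: heart block, abscess, or penetrating lesion",
    "persistent_infection_5_to_7_days": "indicated: persistent infection",
    "pve_relapsing_infection": "recommended: PVE with relapsing infection",
    "recurrent_emboli_persistent_vegetation": "Class IIa: recurrent emboli, persistent vegetation",
    "nve_mobile_vegetation_over_10mm": "Class IIb: NVE, mobile vegetation > 10 mm",
}


def surgery_indications(f: SurgeryFindings) -> list[str]:
    """All applicable indications, in the order they are listed in the text."""
    return [LABELS[fld.name] for fld in fields(f) if getattr(f, fld.name)]


case = SurgeryFindings(hf_from_valve_dysfunction=True, nve_mobile_vegetation_over_10mm=True)
print(surgery_indications(case))
```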

It is recommended that IE patients be cared for in centers with immediate access to cardiac surgery because the need for urgent surgical intervention can arise rapidly.5 We found that nearly one-third of included patients underwent surgery. Although we did not collect data on indications for surgery in patients who did not undergo surgery, more than half of patients (54.1%) had a cardiac surgery consult, suggesting the presence of 1 or more surgical indications. Of these, about half underwent valve surgery. Most of the NVE patients who underwent surgery had more than 1 indication. Our surgical rate is similar to that of a study from Italy3 and is in the lower range of surgical rates (25%-50%) reported by other studies.21 The low rate of surgery at our center may reflect the ongoing debate in the literature about the role of surgery in IE21 and may also be due to the low rate of cardiac surgery consultation.

Our study has several limitations. We identified eligible patients using discharge ICD-9 codes for IE and then confirmed the presence of Duke criteria by record review. Discharge diagnosis codes for endocarditis have been validated, and our additional manual chart review to confirm Duke criteria likely improved specificity substantially. However, by excluding patients without documented evidence of Duke criteria, we may have missed some cases, lowering sensitivity. Our inclusion criteria may also have affected performance on selected quality metrics: because we included only patients who met Duke criteria, which incorporate blood cultures and echocardiography, we tended to include patients who had received these tests. Thus, we cannot comment on the use of diagnostic testing or specialty consultation in patients with suspected IE. This was a single-center study and may not represent patients or practices at other institutions. We did not collect data on some predisposing factors for NVE (for example, rheumatic heart disease or preexisting valvular heart disease) because less than 5% of IE in the U.S. is estimated to be superimposed on rheumatic heart disease.4 We likely underestimated the 12-month mortality rate because we did not cross-reference our findings against the National Death Index; however, this should not affect comparisons of this outcome between groups.

CONCLUSION

Our study confirms reports that IE epidemiology has changed significantly in recent years. It also suggests that concordance with guideline recommendations is good for some aspects of care (eg, echocardiogram, blood cultures) but can be improved in others, particularly the use of specialty consultation during the hospitalization. Future QI efforts should emphasize the role of a heart valve team or endocarditis team consisting of an internist, an ID physician, a cardiologist, a cardiac surgeon, and nursing staff. Finally, efforts should be made to develop strategies for community hospitals that do not have access to all of these specialists (eg, early transfer, telehealth).

Disclosure

Nothing to report.

1. Pant S, Patel NJ, Deshmukh A, Golwala H, Patel N, Badheka A, et al. Trends in infective endocarditis incidence, microbiology, and valve replacement in the United States from 2000 to 2011. J Am Coll Cardiol. 2015;65(19):2070-2076.

2. Bor DH, Woolhandler S, Nardin R, Brusch J, Himmelstein DU. Infective endocarditis in the U.S., 1998-2009: a nationwide study. PLoS One. 2013;8(3):e60033.

3. Fedeli U, Schievano E, Buonfrate D, Pellizzer G, Spolaore P. Increasing incidence and mortality of infective endocarditis: a population-based study through a record-linkage system. BMC Infect Dis. 2011;11:48.

4. Murdoch DR, Corey GR, Hoen B, Miró JM, Fowler VG, Bayer AS, et al. Clinical presentation, etiology, and outcome of infective endocarditis in the 21st century: the International Collaboration on Endocarditis-Prospective Cohort Study. Arch Intern Med. 2009;169(5):463-473.

5. Nishimura RA, Otto CM, Bonow RO, Carabello BA, Erwin JP, Guyton RA, et al. 2014 AHA/ACC guideline for the management of patients with valvular heart disease: executive summary: a report of the American College of Cardiology/American Heart Association Task Force on Practice Guidelines. J Am Coll Cardiol. 2014;63(22):2438-2488.

6. Habib G, Lancellotti P, Antunes MJ, Bongiorni MG, Casalta J-P, Del Zotti F, et al. 2015 ESC Guidelines for the management of infective endocarditis: The Task Force for the Management of Infective Endocarditis of the European Society of Cardiology (ESC). Endorsed by: European Association for Cardio-Thoracic Surgery (EACTS), the European Association of Nuclear Medicine (EANM). Eur Heart J. 2015;36(44):3075-3128.

7. Delahaye F, Rial MO, de Gevigney G, Ecochard R, Delaye J. A critical appraisal of the quality of the management of infective endocarditis. J Am Coll Cardiol. 1999;33(3):788-793.

8. González De Molina M, Fernández-Guerrero JC, Azpitarte J. [Infectious endocarditis: degree of discordance between clinical guidelines recommendations and clinical practice]. Rev Esp Cardiol. 2002;55(8):793-800.

9. Elixhauser A, Steiner C, Harris DR, Coffey RM. Comorbidity measures for use with administrative data. Med Care. 1998;36(1):8-27.

10. Quan H, Parsons GA, Ghali WA. Validity of information on comorbidity derived from ICD-9-CCM administrative data. Med Care. 2002;40(8):675-685.

11. American College of Cardiology/American Heart Association Task Force on Practice Guidelines, Society of Cardiovascular Anesthesiologists, Society for Cardiovascular Angiography and Interventions, Society of Thoracic Surgeons, Bonow RO, Carabello BA, Kanu C, deLeon AC Jr, Faxon DP, Freed MD, et al. ACC/AHA 2006 guidelines for the management of patients with valvular heart disease: a report of the American College of Cardiology/American Heart Association Task Force on Practice Guidelines (writing committee to revise the 1998 Guidelines for the Management of Patients With Valvular Heart Disease): developed in collaboration with the Society of Cardiovascular Anesthesiologists: endorsed by the Society for Cardiovascular Angiography and Interventions and the Society of Thoracic Surgeons. Circulation. 2006;114(5):e84-e231.

12. REDCap [Internet]. [cited 2016 May 14]. Available from: https://collaborate.tuftsctsi.org/redcap/.

13. McGraw KO, Wong SP. A common language effect-size statistic. Psychol Bull. 1992;111:361-365.

14. Cohen J. The statistical power of abnormal-social psychological research: a review. J Abnorm Soc Psychol. 1962;65:145-153.

15. Gagne JJ, Glynn RJ, Avorn J, Levin R, Schneeweiss S. A combined comorbidity score predicted mortality in elderly patients better than existing scores. J Clin Epidemiol. 2011;64(7):749-759.

16. Baddour LM, Wilson WR, Bayer AS, Fowler VG Jr, Tleyjeh IM, Rybak MJ, et al. Infective endocarditis in adults: Diagnosis, antimicrobial therapy, and management of complications: A scientific statement for healthcare professionals from the American Heart Association. Circulation. 2015;132(15):1435-1486.

17. Cecchi E, Chirillo F, Castiglione A, Faggiano P, Cecconi M, Moreo A, et al. Clinical epidemiology in Italian Registry of Infective Endocarditis (RIEI): Focus on age, intravascular devices and enterococci. Int J Cardiol. 2015;190:151-156.

18. Tornos P, Iung B, Permanyer-Miralda G, Baron G, Delahaye F, Gohlke-Bärwolf Ch, et al. Infective endocarditis in Europe: lessons from the Euro heart survey. Heart. 2005;91(5):571-575.

19. Yamamoto S, Hosokawa N, Sogi M, Inakaku M, Imoto K, Ohji G, et al. Impact of infectious diseases service consultation on diagnosis of infective endocarditis. Scand J Infect Dis. 2012;44(4):270-275.

20. Rieg S, Küpper MF. Infectious diseases consultations can make the difference: a brief review and a plea for more infectious diseases specialists in Germany. Infection. 2016;(2):159-166.

21. Prendergast BD, Tornos P. Surgery for infective endocarditis: who and when? Circulation. 2010;121(9):1141-1152.

Infective endocarditis (IE) affected an estimated 46,800 Americans in 2011, and its incidence is increasing due to greater numbers of invasive procedures and prevalence of IE risk factors.1-3 Despite recent advances in the treatment of IE, morbidity and mortality remain high: in-hospital mortality in IE patients is 15% to 20%, and the 1-year mortality rate is approximately 40%.2,4,5

Poor IE outcomes may be the result of difficulty in diagnosing IE and identifying its optimal treatments. The American Heart Association (AHA), the American College of Cardiology (ACC), and the European Society of Cardiology (ESC) have published guidelines to address these challenges. Recent guidelines recommend a multidisciplinary approach that includes cardiology, cardiac surgery, and infectious disease (ID) specialty involvement in decision-making.5,6

In the absence of published quality measures for IE management, guidelines can be used to evaluate the quality of care of IE. Studies have showed poor concordance with guideline recommendations but did not examine agreement with more recently published guidelines.7,8 Furthermore, few studies have examined the management, outcomes, and quality of care received by IE patients. Therefore, we aimed to describe a modern cohort of patients with IE admitted to a tertiary medical center over a 4-year period. In particular, we aimed to assess quality of care received by this cohort, as measured by concordance with AHA and ACC guidelines to identify gaps in care and spur quality improvement (QI) efforts.

METHODS

Design and Study Population

We conducted a retrospective cohort study of adult IE patients admitted to Baystate Medical Center (BMC), a 716-bed tertiary academic center that covers a population of 800,000 people throughout western New England. We used the International Classification of Diseases (ICD)–Ninth Revision, to identify IE patients discharged with a principal or secondary diagnosis of IE between 2007 and 2011 (codes 421.0, 421.1, 421.9, 424.9, 424.90, and 424.91). Three co-authors confirmed the diagnosis by conducting a review of the electronic health records.

We included only patients who met modified Duke criteria for definite or possible IE.5 Definite IE defines patients with pathological criteria (microorganisms demonstrated by culture or histologic examination or a histologic examination showing active endocarditis); or patients with 2 major criteria (positive blood culture and evidence of endocardial involvement by echocardiogram), 1 major criterion and 3 minor criteria (minor criteria: predisposing heart conditions or intravenous drug (IVD) use, fever, vascular phenomena, immunologic phenomena, and microbiologic evidence that do not meet the major criteria) or 5 minor criteria. Possible IE defines patients with 1 major and 1 minor criterion or 3 minor criteria.5

Data Collection

We used billing and clinical databases to collect demographics, comorbidities, antibiotic treatment, 6-month readmission and 1-year mortality. Comorbid conditions were classified into Elixhauser comorbidities using software provided by the Healthcare Costs and Utilization Project of the Agency for Healthcare Research and Quality.9,10

We obtained all other data through electronic health record abstraction. These included microbiology, type of endocarditis (native valve endocarditis [NVE] or prosthetic valve endocarditis [PVE]), echocardiographic location of the vegetation, and complications involving the valve (eg, valve perforation, ruptured chorda, perivalvular abscess, or valvular insufficiency).

Using 2006 AHA/ACC guidelines,11 we identified quality metrics, including the presence of at least 2 sets of blood cultures prior to start of antibiotics and use of transthoracic echocardiogram (TTE) and transesophageal echocardiogram (TEE). Guidelines recommend using TTE as first-line to detect valvular vegetations and assess IE complications. TEE is recommended if TTE is nondiagnostic and also as first-line to diagnose PVE. We assessed the rate of consultation with ID, cardiology, and cardiac surgery specialties. While these consultations were not explicitly emphasized in the 2006 AHA/ACC guidelines, there is a class I recommendation in 2014 AHA/ACC guidelines5 to manage IE patients with consultation of all these specialties.

We reported the number of patients with intracardiac leads (pacemaker or defibrillator) who had documentation of intracardiac lead removal. Complete removal of intracardiac leads is indicated in IE patients with infection of leads or device (class I) and suggested for IE caused by Staphylococcus aureus or fungi (even without evidence of device or lead infection), and for patients undergoing valve surgery (class IIa).5 We entered abstracted data elements into a RedCap database, hosted by Tufts Clinical and Translational Science Institute.12

Outcomes

Outcomes included embolic events, strokes, need for cardiac surgery, LOS, inhospital mortality, 6-month readmission, and 1-year mortality. We identified embolic events using documentation of clinical or imaging evidence of an embolic event to the cerebral, coronary, peripheral arterial, renal, splenic, or pulmonary vasculature. We used record extraction to identify incidence of valve surgery. Nearly all patients who require surgery at BMC have it done onsite. We compared outcomes among patients who received less than 3 vs. 3 consultations provided by ID, cardiology, and cardiac surgery specialties. We also compared outcomes among patients who received 0, 1, 2, or 3 consultations to look for a trend.

Statistical Analysis

We divided the cohort into patients with NVE and PVE because there are differences in pathophysiology, treatment, and outcomes of these groups. We calculated descriptive statistics, including means/standard deviation (SD) and n (%). We conducted univariable analyses using Fisher exact (categorical), unpaired t tests (Gaussian), or Kruskal-Wallis equality-of-populations rank test (non-Gaussian). Common language effect sizes were also calculated to quantify group differences without respect to sample size.13,14 Analyses were performed using Stata 14.1. (StataCorp LLC, College Station, Texas). The BMC Institutional Review Board approved the protocol.

RESULTS

We identified a total of 317 hospitalizations at BMC meeting criteria for IE. Of these, 147 hospitalizations were readmissions or did not meet the clinical criteria of definite or possible IE. Thus, we included a total of 170 patients in the final analysis. Definite IE was present in 135 (79.4%) and possible IE in 35 (20.6%) patients.

Patient Characteristics

Of 170 patients, 127 (74.7%) had NVE and 43 (25.3%) had PVE. Mean ± SD age was 60.0 ± 17.9 years, 66.5% (n = 113) of patients were male, and 79.4% (n = 135) were white (Table 1). Hypertension and chronic kidney disease were the most common comorbidities. The median Gagne score15 was 4, corresponding to a 1-year mortality risk of 15%. Predisposing factors for IE included previous history of IE (n = 14 or 8.2%), IVD use (n = 23 or 13.5%), and presence of long-term venous catheters (n = 19 or 11.2%). Intracardiac leads were present in 17.1% (n = 29) of patients. Bicuspid aortic valve was reported in 6.5% (n = 11) of patients with NVE. Patients with PVE were older (+11.5 years, 95% confidence interval [CI] 5.5, 17.5) and more likely to have intracardiac leads (44.2% vs. 7.9%; P < 0.001; Table 1).

Microbiology and Antibiotics

Staphylococcus aureus was isolated in 40.0% of patients (methicillin-sensitive: 21.2%, n = 36; methicillin-resistant: 18.8%, n = 32), and vancomycin was the most common initial antibiotic (88.2%, n = 150). Nearly half of patients (44.7%, n = 76) received gentamicin as part of their initial antibiotic regimen. Appendix 1 provides final blood culture results, valve type (prosthetic vs. native), and the antimicrobial agents each patient received. Gentamicin was included in the initial regimen more often for PVE than for NVE patients, although the difference did not reach statistical significance (58.1% vs. 40.2%; P = 0.051; Table 1).

Echocardiography and Affected Valves

Per the study inclusion criteria, all patients underwent echocardiography (TTE, TEE, or both). The infected valve could not be localized in 27 patients (15.9%), who had echocardiographic features of intracardiac device infection or an intracardiac mass. Among the remaining 143 patients, the most commonly infected valve was the mitral valve (41.3%, n = 59), followed by the aortic valve (28.7%, n = 41) (Table 1).

Quality of Care

Nearly all patients (n = 165, 97.1%) had at least 2 sets of blood cultures drawn, most on the first day of admission (71.2%). The vast majority (n = 152, 89.4%) also received their first dose of antibiotics on the day of admission. Ten (5.9%) patients received no consultations, and 160 (94.1%) received at least 1. An ID consultation was obtained for most patients (n = 147, 86.5%); cardiac surgery consultation was obtained for about half (n = 92, 54.1%), and cardiology consultation for nearly half (n = 80, 47.1%). One-third (n = 53, 31.2%) received neither a cardiology nor a cardiac surgery consultation, two-thirds (n = 117, 68.8%) received at least one of the two, and one-third (n = 55, 32.4%) received both.
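The cardiology and cardiac surgery consultation counts reported above fit together by inclusion-exclusion. Writing C for the patients with a cardiology consultation and S for those with a cardiac surgery consultation (notation introduced here for illustration):

```latex
\begin{aligned}
|C \cup S| &= |C| + |S| - |C \cap S| = 80 + 92 - 55 = 117 \quad (68.8\%),\\
170 - |C \cup S| &= 170 - 117 = 53 \quad (31.2\%).
\end{aligned}
```

The 49 patients who received all 3 consultations are necessarily a subset of the 55 in C ∩ S.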

Of the 29 patients with an intracardiac lead, 6 had documentation of device removal during the index hospitalization (5 of 10 [50.0%] with NVE and 1 of 19 [5.3%] with PVE; P = 0.02; Table 2).

Outcomes

Evidence of any embolic event was seen in 27.7% (n = 47) of patients, including stroke in 17.1% (n = 29). Median LOS for all patients was 13.5 days, and 6-month readmission among patients who survived their index admission was 51.0% (n = 74/145; 95% CI, 45.9%-62.7%). In-hospital mortality was 14.7% (n = 25; 95% CI, 10.1%-20.9%), and 12-month mortality was 22.4% (n = 38; 95% CI, 16.7%-29.3%). In-hospital mortality was higher among patients with PVE than NVE (20.9% vs. 12.6%; P = 0.21), although this difference was not statistically significant. Embolic complications were more common in NVE than PVE (any embolic event: 32.3% vs. 14.0%, P = 0.03; stroke: 20.5% vs. 7.0%, P = 0.06; Table 3).
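The text does not state how the 95% CIs for proportions were computed. One common choice is a Wald interval on the log-odds (logit) scale, back-transformed to a proportion so that the limits stay between 0 and 1. The sketch below assumes that method with a normal critical value; `logit_ci` is a hypothetical helper, not the authors' code. For 25 in-hospital deaths among 170 patients it gives an interval in line with the one reported above.

```python
from __future__ import annotations

from math import exp, log, sqrt
from statistics import NormalDist


def logit_ci(events: int, n: int, level: float = 0.95) -> tuple[float, float]:
    """Logit-transformed Wald CI for a proportion (requires 0 < events < n)."""
    if not 0 < events < n:
        raise ValueError("the logit interval needs 0 < events < n")
    p = events / n
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    # Delta-method standard error of logit(p): 1 / sqrt(n * p * (1 - p))
    se = 1 / sqrt(n * p * (1 - p))
    centre = log(p / (1 - p))

    def expit(v: float) -> float:
        # Back-transform from the log-odds scale to a proportion
        return 1 / (1 + exp(-v))

    return expit(centre - z * se), expit(centre + z * se)


low, high = logit_ci(25, 170)
print(f"{25 / 170:.1%} (95% CI, {low:.1%}-{high:.1%})")  # 14.7% (95% CI, 10.1%-20.9%)
```

Unlike the simple Wald interval p ± z·SE, the logit version is asymmetric around p, which is why the reported limits sit farther above 14.7% than below it.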

Although 6-month readmission and 12-month mortality tended to decrease as the number of specialties consulted (ID, cardiology, and cardiac surgery) increased, the trend was not statistically significant (Figure). Likewise, rates of embolic events, stroke, 6-month readmission, and 12-month mortality did not differ significantly between patients who received all 3 consultations (28.8%, n = 49) and those who received fewer than 3 (71.2%, n = 121).
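The text does not name the test used to look for a trend across 0, 1, 2, or 3 consultations. For a binary outcome across ordered groups, one standard option is the Cochran-Armitage test for trend; the sketch below implements it with equally spaced scores (0 to 3), which is an assumption. The function name and the counts in the usage line are hypothetical, not study data.

```python
from __future__ import annotations

from math import sqrt
from statistics import NormalDist


def cochran_armitage(events: list[int], totals: list[int],
                     scores: list[float] | None = None) -> tuple[float, float]:
    """Cochran-Armitage trend test: returns (z statistic, two-sided P value)."""
    if scores is None:
        scores = list(range(len(events)))  # 0, 1, 2, ... for ordered groups
    n_total = sum(totals)
    p_bar = sum(events) / n_total  # pooled event proportion
    # Score-weighted difference between observed and expected events
    t_stat = sum(s * (x - n * p_bar) for s, x, n in zip(scores, events, totals))
    s1 = sum(n * s for s, n in zip(scores, totals))
    s2 = sum(n * s * s for s, n in zip(scores, totals))
    variance = p_bar * (1 - p_bar) * (s2 - s1 * s1 / n_total)
    if variance <= 0:
        raise ValueError("trend test undefined: no variation in outcome or scores")
    z = t_stat / sqrt(variance)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts of an outcome among patients with 0, 1, 2, or 3 consultations
z, p = cochran_armitage(events=[4, 12, 15, 7], totals=[10, 40, 70, 50])
print(f"z = {z:.2f}, P = {p:.3f}")
```

With scores increasing in the number of consultations, a negative z indicates that the event proportion falls as more specialties are consulted.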

Of the 92 patients who received a cardiac surgery consultation, 73 had NVE and 19 had PVE. Of these, 47 underwent valve surgery: 39 of 73 (53.4%) with NVE and 8 of 19 (42.1%) with PVE. Most NVE patients who underwent surgery (73.2%) had more than 1 indication for surgery. The most common indications among NVE patients were significant valvular dysfunction resulting in heart failure (65.9%), mobile vegetation (56.1%), and recurrent embolic events (26.8%). The most common indication in PVE was persistent bacteremia or recurrent embolic events (75.0%).

DISCUSSION

In this study, we described the management, quality of care, and outcomes of IE patients at a tertiary medical center. Most hospitalized patients with IE were older white men with comorbidities and IE risk factors. The complication rate was high (27.7% with embolic events), and in-hospital mortality was at or just below the lower end of the range reported in prior studies (14.7% vs. 15%-20%).5 Nearly one-third of patients (n = 47, 27.7%) underwent valve surgery. Quality of care was generally good: most patients received early blood cultures, echocardiograms, early antibiotics, and timely ID consultation. We also identified important gaps in care, including failure to consult cardiac surgery in nearly half of patients and failure to consult cardiology in more than half.

Our findings support work suggesting that IE is no longer primarily a chronic or subacute disease of younger patients with IVD use, human immunodeficiency virus infection, or bicuspid aortic valves.1,4,16,17 The International Collaboration on Endocarditis-Prospective Cohort Study,4 a multinational prospective cohort study (2000-2005) of 2781 adults with IE, reported a higher prevalence of diabetes, hemodialysis, IVD use, long-term venous catheters, and intracardiac leads than we found. Nonetheless, both studies suggest that the demographics of IE are changing. This shift may partially explain why IE mortality has not improved in recent years:2,3 older patients with a higher comorbidity burden may not be considered good surgical candidates.

This study is among the first to report concordance with IE guidelines in a cohort of U.S. patients. Most patients received timely blood cultures, same-day empiric antibiotics, and ID consultation, similar to findings from European studies.7,18 Guideline concordance could be improved in other areas, particularly documentation of the management plan for intracardiac leads: only 6 of 29 patients with intracardiac leads had documented removal during the index hospitalization.

The 2014 AHA/ACC guidelines5 and the ESC guidelines6 emphasize the importance of multidisciplinary management of IE. As part of the Heart Valve Team at BMC, cardiologists provide expertise in the diagnosis, imaging, and clinical management of IE, and cardiac surgeons advise on whether and when to pursue surgery. Early discussion with the surgical team is considered mandatory in all complicated cases of IE.6,18 Infectious disease consultation has been shown to improve the rate of IE diagnosis and reduce the 6-month relapse rate,19 and to improve outcomes in patients with S aureus bacteremia.20 In our study, 86.5% of patients had documentation of an ID consultation; cardiac surgery consultation was obtained in 54.1% and cardiology consultation in 47.1% of patients.

We observed a trend toward lower rates of 6-month readmission and 12-month mortality among patients who received all 3 consultations (Figure 1), even though rates of embolic events and stroke were higher in patients with 3 consultations than in those with fewer. The lack of confounder adjustment and limited statistical power restrict our ability to draw inferences about this association, but it generates hypotheses for future work. Our patients were cared for before the 2014 guidelines were published, which may partly explain why multidisciplinary management involving cardiology, cardiac surgery, and ID physicians was observed in fewer than one-third of patients (28.8%). However, 117 (68.8%) patients received a cardiology consultation, a cardiac surgery consultation, or both. Some physicians may have considered consulting both cardiology and cardiac surgery unnecessary. We will focus future QI efforts at our institution on educating physicians about the benefits of multidisciplinary care and the importance of fully implementing the 2014 AHA/ACC guidelines.

Our findings on quality of care should be placed in the context of 2 studies by González de Molina et al8 and Delahaye et al.7 These studies described considerable discordance between guideline recommendations and real-world IE care. However, they were performed more than a decade ago, before current recommendations to consult cardiology and cardiac surgery were published.

In the 2014 AHA/ACC guidelines, surgery before completion of antibiotic therapy is indicated in patients with valve dysfunction resulting in heart failure; left-sided IE caused by highly resistant organisms (including fungi or S aureus); IE complicated by heart block, aortic abscess, or penetrating lesions; and persistent infection (bacteremia or fever lasting longer than 5 to 7 days) after the start of appropriate antimicrobial therapy. In addition, there is a Class IIa indication for early surgery in patients with recurrent emboli and persistent vegetation despite appropriate antibiotic therapy, and a Class IIb indication for early surgery in patients with NVE and a mobile vegetation greater than 10 mm in length. Surgery is also recommended for patients with PVE and relapsing infection.
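For chart-abstraction audits, the indications summarized above can be encoded as explicit rules. The sketch below is a hypothetical audit helper, not a clinical decision tool: the field names are invented, each rule paraphrases only the criteria listed in the preceding paragraph, and the persistent-infection threshold is a parameter because the text gives a range of 5 to 7 days (the default of 7 is an assumption).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AbstractedCase:
    """Hypothetical chart-abstraction fields for one IE admission."""
    prosthetic_valve: bool = False
    left_sided: bool = False
    valve_dysfunction_with_heart_failure: bool = False
    highly_resistant_organism: bool = False  # eg, fungi or S aureus
    heart_block_abscess_or_penetrating_lesion: bool = False
    days_of_persistent_infection: int = 0  # bacteremia or fever after appropriate therapy began
    recurrent_emboli_with_persistent_vegetation: bool = False
    mobile_vegetation_mm: float = 0.0
    relapsing_infection: bool = False


def surgical_indications(case: AbstractedCase, persistence_days: int = 7) -> list[str]:
    """List the paragraph's surgical indications that the abstracted case meets."""
    found = []
    if case.valve_dysfunction_with_heart_failure:
        found.append("valve dysfunction resulting in heart failure")
    if case.left_sided and case.highly_resistant_organism:
        found.append("left-sided IE caused by a highly resistant organism")
    if case.heart_block_abscess_or_penetrating_lesion:
        found.append("heart block, aortic abscess, or penetrating lesion")
    if case.days_of_persistent_infection > persistence_days:
        found.append("persistent infection despite appropriate therapy")
    if case.recurrent_emboli_with_persistent_vegetation:
        found.append("Class IIa: recurrent emboli with persistent vegetation")
    if not case.prosthetic_valve and case.mobile_vegetation_mm > 10:
        found.append("Class IIb: NVE with mobile vegetation > 10 mm")
    if case.prosthetic_valve and case.relapsing_infection:
        found.append("PVE with relapsing infection")
    return found


case = AbstractedCase(left_sided=True, highly_resistant_organism=True, mobile_vegetation_mm=12)
# Two indications: left-sided resistant organism and the Class IIb vegetation size
print(surgical_indications(case))
```

Keeping each rule as its own `if` makes it easy to check against the guideline text and to update when recommendations change.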

Guidelines recommend that IE patients be cared for in centers with immediate access to cardiac surgery because the need for urgent surgical intervention can arise rapidly.5 Nearly one-third of our patients underwent surgery. Although we did not collect data on surgical indications in patients who did not undergo surgery, more than half of all patients (54.1%) had a cardiac surgery consultation, suggesting the presence of 1 or more surgical indications; about half of these (47 of 92) underwent valve surgery. Most NVE patients who underwent surgery had more than 1 indication. Our surgical rate is similar to that of a study from Italy3 and in the lower range of surgical rates (25%-50%) reported in other studies.21 The low rate of surgery at our center may reflect ongoing debate in the literature about the use of surgery for IE,21 as well as the low rate of cardiac surgery consultation.

Our study has several limitations. We identified eligible patients using discharge ICD-9 codes for IE and then confirmed the presence of Duke criteria through record review. Discharge diagnosis codes for endocarditis have been validated, and our additional manual chart review to confirm Duke criteria likely improved specificity substantially. However, by excluding patients without documented evidence of Duke criteria, we may have missed some cases, lowering sensitivity. Our inclusion criteria may also have affected performance on selected quality metrics: because blood cultures and echocardiograms are part of the Duke criteria, patients who met those criteria tended to be those who had received these tests. Thus, we cannot comment on the use of diagnostic testing or specialty consultation in the broader population of patients with suspected IE. This was a single-center study and may not represent patients or practices at other institutions. We did not collect data on some predisposing factors for NVE (eg, baseline rheumatic heart disease or preexisting valvular heart disease) because an estimated less than 5% of IE in the U.S. is superimposed on rheumatic heart disease.4 We likely underestimated 12-month mortality because we did not cross-reference our findings against the National Death Index; however, this should not affect the comparison of this outcome between groups.

CONCLUSION

Our study confirms reports that IE epidemiology has changed substantially in recent years. It also suggests that concordance with guideline recommendations is good for some aspects of care (eg, echocardiograms, blood cultures) but can be improved in others, particularly the use of specialty consultation during hospitalization. Future QI efforts should emphasize the role of a heart valve team or endocarditis team consisting of an internist, an ID physician, a cardiologist, a cardiac surgeon, and nursing staff. Finally, strategies should be developed for community hospitals that do not have access to all of these specialists (eg, early transfer, telehealth).

Disclosure

Nothing to report.

1. Pant S, Patel NJ, Deshmukh A, Golwala H, Patel N, Badheka A, et al. Trends in infective endocarditis incidence, microbiology, and valve replacement in the United States from 2000 to 2011. J Am Coll Cardiol. 2015;65(19):2070-2076.

2. Bor DH, Woolhandler S, Nardin R, Brusch J, Himmelstein DU. Infective endocarditis in the U.S., 1998-2009: a nationwide study. PLoS One. 2013;8(3):e60033.

3. Fedeli U, Schievano E, Buonfrate D, Pellizzer G, Spolaore P. Increasing incidence and mortality of infective endocarditis: a population-based study through a record-linkage system. BMC Infect Dis. 2011;11:48.

4. Murdoch DR, Corey GR, Hoen B, Miró JM, Fowler VG, Bayer AS, et al. Clinical presentation, etiology, and outcome of infective endocarditis in the 21st century: the International Collaboration on Endocarditis-Prospective Cohort Study. Arch Intern Med. 2009;169(5):463-473.

5. Nishimura RA, Otto CM, Bonow RO, Carabello BA, Erwin JP, Guyton RA, et al. 2014 AHA/ACC guideline for the management of patients with valvular heart disease: executive summary: a report of the American College of Cardiology/American Heart Association Task Force on Practice Guidelines. J Am Coll Cardiol. 2014;63(22):2438-2488.

6. Habib G, Lancellotti P, Antunes MJ, Bongiorni MG, Casalta J-P, Del Zotti F, et al. 2015 ESC Guidelines for the management of infective endocarditis: The Task Force for the Management of Infective Endocarditis of the European Society of Cardiology (ESC). Endorsed by: European Association for Cardio-Thoracic Surgery (EACTS), the European Association of Nuclear Medicine (EANM). Eur Heart J. 2015;36(44):3075-3128.

7. Delahaye F, Rial MO, de Gevigney G, Ecochard R, Delaye J. A critical appraisal of the quality of the management of infective endocarditis. J Am Coll Cardiol. 1999;33(3):788-793.

8. González De Molina M, Fernández-Guerrero JC, Azpitarte J. [Infectious endocarditis: degree of discordance between clinical guidelines recommendations and clinical practice]. Rev Esp Cardiol. 2002;55(8):793-800.

9. Elixhauser A, Steiner C, Harris DR, Coffey RM. Comorbidity measures for use with administrative data. Med Care. 1998;36(1):8-27.

10. Quan H, Parsons GA, Ghali WA. Validity of information on comorbidity derived from ICD-9-CCM administrative data. Med Care. 2002;40(8):675-685.

11. American College of Cardiology/American Heart Association Task Force on Practice Guidelines, Society of Cardiovascular Anesthesiologists, Society for Cardiovascular Angiography and Interventions, Society of Thoracic Surgeons, Bonow RO, Carabello BA, Kanu C, deLeon AC Jr, Faxon DP, Freed MD, et al. ACC/AHA 2006 guidelines for the management of patients with valvular heart disease: a report of the American College of Cardiology/American Heart Association Task Force on Practice Guidelines (writing committee to revise the 1998 Guidelines for the Management of Patients With Valvular Heart Disease): developed in collaboration with the Society of Cardiovascular Anesthesiologists: endorsed by the Society for Cardiovascular Angiography and Interventions and the Society of Thoracic Surgeons. Circulation. 2006;114(5):e84-e231.

12. REDCap [Internet]. [cited 2016 May 14]. Available from: https://collaborate.tuftsctsi.org/redcap/.

13. McGraw KO, Wong SP. A common language effect-size statistic. Psychol Bull. 1992;111:361-365.

14. Cohen J. The statistical power of abnormal-social psychological research: a review. J Abnorm Soc Psychol. 1962;65:145-153.

15. Gagne JJ, Glynn RJ, Avorn J, Levin R, Schneeweiss S. A combined comorbidity score predicted mortality in elderly patients better than existing scores. J Clin Epidemiol. 2011;64(7):749-759.

16. Baddour LM, Wilson WR, Bayer AS, Fowler VG Jr, Tleyjeh IM, Rybak MJ, et al. Infective endocarditis in adults: Diagnosis, antimicrobial therapy, and management of complications: A scientific statement for healthcare professionals from the American Heart Association. Circulation. 2015;132(15):1435-1486.

17. Cecchi E, Chirillo F, Castiglione A, Faggiano P, Cecconi M, Moreo A, et al. Clinical epidemiology in Italian Registry of Infective Endocarditis (RIEI): Focus on age, intravascular devices and enterococci. Int J Cardiol. 2015;190:151-156.

18. Tornos P, Iung B, Permanyer-Miralda G, Baron G, Delahaye F, Gohlke-Bärwolf Ch, et al. Infective endocarditis in Europe: lessons from the Euro heart survey. Heart. 2005;91(5):571-575.

19. Yamamoto S, Hosokawa N, Sogi M, Inakaku M, Imoto K, Ohji G, et al. Impact of infectious diseases service consultation on diagnosis of infective endocarditis. Scand J Infect Dis. 2012;44(4):270-275.

20. Rieg S, Küpper MF. Infectious diseases consultations can make the difference: a brief review and a plea for more infectious diseases specialists in Germany. Infection. 2016;(2):159-166.

21. Prendergast BD, Tornos P. Surgery for infective endocarditis: who and when? Circulation. 2010;121(9):1141-1152.


© 2017 Society of Hospital Medicine

Hospitalist Utilization and Performance

The past several years have seen a dramatic increase in the percentage of patients cared for by hospitalists, yet an emerging body of literature examining the association between hospitalist care and performance on a number of process measures has shown mixed results. Hospitalists do not appear to provide higher quality of care for pneumonia,1, 2 while results for heart failure are mixed.3-5 Each of these studies was conducted at a single site and examined patient‐level effects. More recently, Vasilevskis et al6 assessed the association between the intensity of hospitalist use (measured as the percentage of patients admitted by hospitalists) and performance on process measures. In a cohort of 208 California hospitals, they found a significant improvement in performance on process measures in patients with acute myocardial infarction, heart failure, and pneumonia with increasing percentages of patients admitted by hospitalists.6

To date, no study has examined the association between the use of hospitalists and the publicly reported 30‐day mortality and readmission measures. Specifically, the Centers for Medicare and Medicaid Services (CMS) have developed and now publicly report risk‐standardized 30‐day mortality (RSMR) and readmission rates (RSRR) for Medicare patients hospitalized for 3 common and costly conditions: acute myocardial infarction (AMI), heart failure (HF), and pneumonia.7 Performance on these hospital‐based quality measures varies widely and differs by hospital volume, ownership status, teaching status, and nurse staffing levels.8-13 However, even after accounting for these characteristics, much of the variation in outcomes remains unexplained. We hypothesized that the presence of hospitalists within a hospital would be associated with higher performance on 30‐day mortality and 30‐day readmission measures for AMI, HF, and pneumonia. We further hypothesized that, among hospitals using hospitalists, a higher percentage of patients admitted by hospitalists would be positively correlated with performance on outcome measures. To test these hypotheses, we conducted a national survey of hospitalist leaders, linking data from survey responses to data on publicly reported outcome measures for AMI, HF, and pneumonia.

MATERIALS AND METHODS

Study Sites

Of the 4289 hospitals in operation in 2008, 1945 had 25 or more AMI discharges. We identified hospitals using American Hospital Association (AHA) data, calling hospitals up to 6 times each until we reached our target sample size of 600. Using this methodology, we contacted 1558 hospitals of a possible 1920 with AHA data; of the 1558 called, 598 provided survey results.

Survey Data

Our survey was adapted from the survey developed by Vasilevskis et al.6 The entire survey can be found in the Appendix (see Supporting Information in the online version of this article). Our key questions were: 1) Does your hospital have at least 1 hospitalist program or group? 2) Approximately what percentage of all medical patients in your hospital are admitted by hospitalists? The latter question was intended as an approximation of the intensity of hospitalist use and has been used in prior studies.6, 14 A more direct measure was not feasible given the complexity of obtaining admission data for such a large and diverse set of hospitals. Respondents were also asked about hospitalist care of AMI, HF, and pneumonia patients. Because respondents were unlikely to estimate hospitalist participation in care for specific conditions precisely, the response choices were divided into percentage quartiles: 0-25, 26-50, 51-75, and 76-100. Finally, participants were asked a number of questions regarding hospitalist organizational and clinical characteristics.

Survey Process