Study eyed endoscopic submucosal dissection for early-stage esophageal cancer

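Compared with esophagectomy, endoscopic submucosal dissection (ESD) was associated with significantly fewer severe adverse perioperative events and a similar rate of all-cause mortality in patients with early-stage esophageal squamous cell carcinoma, according to the findings of a single-center retrospective cohort study.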

After a median of 21 months of follow-up (range, 6-73 months), rates of all-cause mortality were 7% with ESD and 11% with esophagectomy, said Yiqun Zhang of Zhongshan Hospital, Shanghai, China, and his associates. Rates of cancer recurrence or metastasis were 9.1% and 8.9%, respectively, while disease-specific mortality was lower with ESD (3.4% vs. 7.4% with esophagectomy; P = .049). Severe nonfatal adverse perioperative events occurred in 15% of ESD cases versus 28% of esophagectomy cases (P less than .001). The findings justify more studies of ESD in carefully selected patients with early-stage (T1a-m2/m3 or T1b) esophageal squamous cell carcinoma, the researchers wrote in Clinical Gastroenterology and Hepatology.

Esophagectomy is standard for managing early-stage esophageal squamous cell carcinoma but is associated with high rates of morbidity and mortality. While ESD is minimally invasive, it is considered risky because esophageal squamous cell carcinoma so frequently metastasizes to the lymph nodes, the investigators noted. For the study, they retrospectively compared 322 ESDs and 274 esophagectomies performed during 2011-2016 in patients with T1a-m2/m3 or T1b esophageal squamous cell carcinoma. All cases were pathologically confirmed, and none were premalignant (that is, high-grade intraepithelial neoplasias).

Endoscopic submucosal dissection was associated with significantly lower rates of esophageal fistula (0.3% with ESD vs. 16% with esophagectomy; P less than .001) and pulmonary complications (0.3% vs. 3.6%, respectively; P less than .001), which explained its overall superiority in terms of severe adverse perioperative events, the researchers wrote. Perioperative deaths were rare but occurred more often with esophagectomy (four patients) than with ESD (one patient). Depth of tumor invasion was the only significant correlate of all-cause mortality (hazard ratio for T1a-m3 or deeper tumors versus T1a-m2 tumors, 3.54; 95% confidence interval, 1.08-11.62; P = .04) in a Cox regression analysis that accounted for many potential confounders, such as demographic and tumor characteristics, hypertension, chronic obstructive pulmonary disease (COPD), nodal metastasis, chemotherapy, and radiotherapy.

Perhaps esophagectomy did not improve survival in this retrospective study because follow-up time was too short, because adjuvant therapy compensated for the increased risk of tumor relapse with ESD, or because of the confounding effects of unmeasured variables, such as submucosal stages of T1b cancer, lymphovascular invasion, or tumor morphology, the researchers wrote. “Since a randomized study comparing esophagectomy and ESD alone would not be practical, a potential strategy for future research may include serial treatments – that is, ESD first, followed by esophagectomy, radiotherapy, or chemotherapy, depending on the ESD pathology findings,” they added. “A quality-of-life analysis of ESD would also be helpful because this might be one of the biggest advantages of ESD over esophagectomy and was beyond the scope of this study.”

The study was supported by the National Natural Science Foundation of China, the Shanghai Committee of Science and Technology, and Zhongshan Hospital. The investigators reported having no relevant conflicts of interest.

SOURCE: Zhang Y et al. Clin Gastroenterol Hepatol. 2018 Apr 25. doi: 10.1016/j.cgh.2018.04.038.

This study adds more evidence supporting the use of endoscopic submucosal dissection (ESD) in early esophageal cancer. Unlike esophageal adenocarcinoma, esophageal squamous cell carcinoma (ESCC) has a higher risk of lymph node metastasis and tends to be multifocal. ESCC lesions invading the submucosa (T1b) have the highest risk of lymph node metastasis (up to 60% in lesions with deep submucosal invasion).

Historically, endoscopic resection was reserved for mucosal tumors while submucosal tumors were managed surgically. Several trials have investigated the role of ESD in ESCC limited to the mucosa with excellent results. However, data for ESCC invading the submucosa (T1b lesions) are lacking. This study included 596 patients, almost half of whom (282 patients) had T1b lesions. Although most of the T1b lesions were treated surgically (200 patients), there was a large cohort of 82 T1b ESCC lesions treated by ESD.

Interestingly, there was no difference in tumor recurrence or overall mortality in patients treated with ESD, compared with surgery for both mucosal and submucosal lesions.

Another interesting finding in this study was the use of adjuvant treatment such as radiotherapy and chemotherapy for patients treated with ESD who were found to have evidence of lymphovascular invasion. The outcome of this subset of patients was not different from patients who underwent esophagectomy. Recent evidence from this study and other published data suggest that there is a subset of submucosal ESCC lesions that can be managed endoscopically, especially submucosal lesions limited to the upper third of the submucosa. Further studies investigating the role of adjuvant treatment after ESD for deep submucosal lesions or lesions with lymphovascular invasion are needed.

Mohamed O. Othman, MD, is an associate professor of medicine, director of advanced endoscopy, and chief of the section of gastroenterology, Baylor College of Medicine, Houston. He is a consultant for Olympus and Boston Scientific.

FROM CLINICAL GASTROENTEROLOGY AND HEPATOLOGY

Key clinical point: Compared with esophagectomy, endoscopic submucosal dissection (ESD) was associated with significantly fewer severe adverse perioperative events and a similar rate of all-cause mortality in patients with early-stage esophageal squamous cell carcinoma.

Major finding: After a median of 21 months of follow-up, rates of all-cause mortality were 7% with ESD and 11% with esophagectomy (P = .21). Severe adverse perioperative events occurred in 15% of ESDs and 28% of esophagectomies.

Study details: Retrospective study of 596 patients with T1a-m2/m3 or T1b esophageal squamous cell carcinoma.

Disclosures: The study was supported by the National Natural Science Foundation of China, the Shanghai Committee of Science and Technology, and Zhongshan Hospital. The investigators reported having no relevant conflicts of interest.

Source: Zhang Y et al. Clin Gastroenterol Hepatol. 2018 Apr 25. doi: 10.1016/j.cgh.2018.04.038.

Topical retinoid found as effective as microneedling for acne scars

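Nightly application of the topical retinoid tazarotene improved atrophic acne scarring about as much as microneedling did, according to a new study.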

In a prospective, randomized, split-face study of adults with postacne scarring, both treatments resulted in similar efficacy after 6 months, reported T.P. Afra, MD, and associates from the department of dermatology, venereology, and leprology at the Postgraduate Institute of Medical Education and Research in Chandigarh, India. While the clinical usefulness of microneedling as a procedure for postacne scarring is well established, research exploring the effectiveness of topical therapies for acne scarring that could be used at home is lacking. “A home-based topical treatment with a comparable efficacy to microneedling and that is well tolerated would be a useful addition in the armamentarium of acne scar management,” they wrote in the study, published in JAMA Facial Plastic Surgery.

The study included 34 patients, aged 18-30 years, with grade 2-4 facial atrophic acne scars at their initial visit to the research team’s skin clinic. One side of each participant’s face was randomized to receive microneedling treatment for four sessions over 3 months (using a dermaroller with 1.5-mm needles). Topical tazarotene gel 0.1%, a retinoid approved by the Food and Drug Administration as a treatment for mild to moderate facial acne, was applied to the other side of the face once a night during the same period. Almost 81% of patients had skin phototype IV; the rest had type III or V. Patients were followed up every month for 3 months, then at 6 months.

Changes in acne scar severity from baseline, the primary outcome, were assessed using Goodman and Baron quantitative and qualitative scores and a subjective dermatologist score. Patient satisfaction, measured with a Patient Global Assessment (PGA) score, and adverse events were secondary outcomes.

In 31 patients (91.2%), quantitative acne scar severity scores improved significantly from baseline to the 6-month visit with both treatments: a median improvement of 3 on the sides of the face treated with microneedling and a median improvement of 2.5 on the sides of the face treated with tazarotene (between-group comparison, P = .42). The qualitative acne scar severity score did not significantly improve with either treatment, the investigators noted.

The median improvement in the independent dermatologist score was also comparable for both methods at 3 and 6 months.

At 6 months, improvement in the mean PGA score was “slightly but significantly superior” for the microneedling treatment, compared with that for tazarotene (mean of 5.86 vs. 5.76, respectively; P less than .001), with both falling into the “satisfactory” range for the PGA, the investigators wrote. They also noted a positive correlation between previous exposure to oral isotretinoin and patient satisfaction.

“Although collagen accumulation has been considered a drawback of isotretinoin therapy owing to the development of hypertrophic scars, the better atrophic acne scar outcomes observed for both the present treatment groups in patients with a history of isotretinoin treatment indicates that the collagen accumulation in this case may actually be beneficial,” they wrote.

The topical retinoid was well tolerated by participants, with fewer than a third reporting dryness and scaling, and adverse effects associated with microneedling were described as “minimal.”

“The use of a modality such as tazarotene that prevents acne flares while addressing acne scarring is a practical addition to clinical practice,” the investigators concluded. “Tazarotene gel 0.1% would be a useful alternative to microneedling in the management of atrophic acne scars. Such a home-based medical management option for acne scarring may decrease physician dependence and health care expenditures for patients with postacne scarring.”

The study authors noted that, as collagen remodeling is a continuous process lasting more than 1 year, a limitation of their study was its short follow-up of 6 months. However, a strength of the study was its use of validated acne scar severity scoring tools as well as patient and physician assessment of scar improvement in the outcome assessments.

The authors had no disclosures to report.

SOURCE: Afra TP et al. JAMA Facial Plast Surg. 2018 Nov 15. doi: 10.1001/jamafacial.2018.1404.

FROM JAMA FACIAL PLASTIC SURGERY

Key clinical point: The topical retinoid tazarotene could be a home-based option for treating atrophic acne scarring.

Major finding: Improvements in acne scarring were similar with microneedling and nightly applications of tazarotene gel 0.1% after 6 months.

Study details: A prospective, observer-blinded, split-face, randomized, clinical trial involving 34 patients with grade 2-4 facial atrophic postacne scars.

Disclosures: The authors had no disclosures to report.

Source: Afra TP et al. JAMA Facial Plast Surg. 2018 Nov 15. doi: 10.1001/jamafacial.2018.1404.

NIH director expresses concern over CRISPR-Cas9 baby claim

The National Institutes of Health is deeply concerned about the work just presented at the Second International Summit on Human Genome Editing in Hong Kong by Dr. He Jiankui, who described his effort using CRISPR-Cas9 on human embryos to disable the CCR5 gene. He claims that the two embryos were subsequently implanted, and infant twins have been born.

This work represents a deeply disturbing willingness by Dr. He and his team to flout international ethical norms. The project was largely carried out in secret, the medical necessity for inactivation of CCR5 in these infants is utterly unconvincing, the informed consent process appears highly questionable, and the possibility of damaging off-target effects has not been satisfactorily explored. It is profoundly unfortunate that the first apparent application of this powerful technique to the human germline has been carried out so irresponsibly.

The need for development of binding international consensus on setting limits for this kind of research, now being debated in Hong Kong, has never been more apparent. Without such limits, the world will face the serious risk of a deluge of similarly ill-considered and unethical projects.

Should such epic scientific misadventures proceed, a technology with enormous promise for prevention and treatment of disease will be overshadowed by justifiable public outrage, fear, and disgust.

Lest there be any doubt, and as we have stated previously, NIH does not support the use of gene-editing technologies in human embryos.

Francis S. Collins, M.D., Ph.D., is director of the National Institutes of Health. His comments were made in a statement Nov. 28.

Less-distressed patients driving increase in outpatient services

Adults with less-severe psychological distress contributed to most of the recent increase in outpatient mental health services, based on survey data from nearly 140,000 adults.

“Rising national rates of suicide, opioid misuse, and opioid-related deaths further suggest increasing psychological distress,” wrote Mark Olfson, MD, MPH, of Columbia University, New York, and his colleagues. “However, it is not known whether or to what extent an increase in mental health treatment has occurred in response to rising rates of psychological distress.”

Dr. Olfson and his colleagues reviewed data from the Medical Expenditure Panel Surveys for the years 2004-2005, 2009-2010, and 2014-2015. Overall, 19% of adults received outpatient mental health services in 2004-2005; the percentage increased to 23% in 2014-2015. About half of the study subjects were women, 67% were white, and the average age was 46 years.

The total percentage of adults with serious psychological distress decreased from 5% in 2004-2005 to 4% in 2014-2015, the researchers noted, although those with serious psychological distress had a greater proportionate increase in the use of outpatient services during the study period, from 54% to 68%.

The number of adults with less-serious distress or no distress who were treated with outpatient mental health services increased from 35 million in 2004-2005 to 48 million in 2014-2015, the researchers wrote in JAMA Psychiatry.

The study results were limited by several factors, including the partial reliance on self-reports of mental health care use and the limitations of the Kessler 6 scale as an assessment of psychological distress. Other limitations included an absence of data on the specific services used and on the effectiveness of treatments. However, the results suggest that, despite increases in outpatient mental health treatment, many adults with serious psychological distress received no mental health care, they wrote. Individuals with more-severe distress might view mental health care less favorably. In addition, the investigators emphasized the need for continued improvement in general medical settings for detecting and treating or referring adults for mental health services.

Dr. Olfson reported no disclosures. One of the coauthors, Steven C. Marcus, PhD, reported receiving consulting fees from several pharmaceutical companies. The study was supported in part by the National Institutes of Health and the New York State Psychiatric Institute. The Medical Expenditure Panel Surveys are sponsored by the Agency for Healthcare Research and Quality.

SOURCE: Olfson M et al. JAMA Psychiatry. 2018 Nov 28. doi: 10.1001/jamapsychiatry.2018.3550.

FROM JAMA PSYCHIATRY

Key clinical point: Overall use of outpatient mental health services is increasing, but most patients report less-severe or no psychological distress.

Major finding: The percentage of U.S. adults receiving outpatient mental health services increased from 19% in 2004-2005 to 23% in 2014-2015.

Study details: The data come from a review of nationally representative surveys taken in 2004-2005, 2009-2010, and 2014-2015 for a total of 139,862 adults aged 18 years and older.

Disclosures: Dr. Olfson reported no disclosures. One of the coauthors, Steven C. Marcus, PhD, reported receiving consulting fees from several pharmaceutical companies. The study was supported in part by the National Institutes of Health and the New York State Psychiatric Institute. The Medical Expenditure Panel Surveys are sponsored by the Agency for Healthcare Research and Quality.

Source: Olfson M et al. JAMA Psychiatry. 2018 Nov 28. doi: 10.1001/jamapsychiatry.2018.3550.

Tested: U.S. News & World Report hospital rankings

Do the U.S. News & World Report “Best Hospitals” rankings stand up to scrutiny? When it comes to cardiovascular care, the answer is yes … and no.

The hospitals that were ranked as the Top 50 for “cardiology and heart surgery” had lower 30-day mortality for acute MI, heart failure, and coronary artery bypass grafting (CABG), compared with 3,502 nonranked hospitals, when David E. Wang, MD, and his associates at Brigham and Women’s Hospital in Boston looked at the Centers for Medicare & Medicaid Services Hospital Compare website.

The Top 50 hospitals also had higher patient satisfaction scores (3.9 vs. 3.3 on a scale of 1-5), based on the CMS Hospital Consumer Assessment of Healthcare Providers and Systems star ratings, the investigators said Nov. 28 in JAMA Cardiology.

A clear endorsement for the rankings, it would seem, but another run through the Hospital Compare data – this time for 30-day readmission rates – managed to muddy things up. The nonranked hospitals equaled the ranked hospitals in readmission rates for acute MI and CABG and actually had lower rates for heart failure, Dr. Wang and his associates said.

“In recent years, financial incentives for hospitals to reduce readmissions … have been 10- to 15-fold greater than incentives to improve mortality rates and have resulted in significant declines in cardiovascular readmissions. Our finding that top-ranked hospitals have lower mortality rates than nonranked hospitals but have generally similar readmission rates might reflect these incentives,” they wrote.

SOURCE: Wang DE et al. JAMA Cardiol. 2018 Nov 28. doi: 10.1001/jamacardio.2018.3951.

FROM JAMA CARDIOLOGY

Omega-3 fatty acid supplementation reduces risk of preterm birth

Taking omega-3 long-chain polyunsaturated fatty acids during pregnancy was associated with reduced risk of preterm birth, and also may reduce the risk of babies born at a low birth weight and risk of requiring neonatal intensive care, according to a Cochrane review of 70 randomized controlled trials.

“There are not many options for preventing premature birth, so these new findings are very important for pregnant women, babies, and the health professionals who care for them,” Philippa Middleton, MPH, PhD, of Cochrane Pregnancy and Childbirth Group and the South Australian Health and Medical Research Institute, in Adelaide, stated in a press release. “We don’t yet fully understand the causes of premature labor, so predicting and preventing early birth has always been a challenge. This is one of the reasons omega-3 supplementation in pregnancy is of such great interest to researchers around the world.”

Dr. Middleton and her colleagues performed a search of the Cochrane Pregnancy and Childbirth’s Trials Register, ClinicalTrials.gov, and the WHO International Clinical Trials Registry Platform and identified 70 randomized controlled trials (RCTs) where 19,927 women at varying levels of risk for preterm birth received omega-3 long-chain polyunsaturated fatty acids (LCPUFA), placebo, or no omega-3.

“Many pregnant women in the UK are already taking omega-3 supplements by personal choice rather than as a result of advice from health professionals,” Dr. Middleton said in the release. “It’s worth noting though that many supplements currently on the market don’t contain the optimal dose or type of omega-3 for preventing premature birth. Our review found the optimum dose was a daily supplement containing between 500 and 1,000 milligrams of long-chain omega-3 fats (containing at least 500 mg of DHA [docosahexaenoic acid]) starting at 12 weeks of pregnancy.”

In 26 RCTs (10,304 women), the risk of preterm birth before 37 weeks was 11% lower for women who took omega-3 LCPUFA than for women who did not take omega-3 (relative risk, 0.89; 95% confidence interval, 0.81-0.97), while in 9 RCTs (5,204 women) the risk of preterm birth before 34 weeks was 42% lower for women who took omega-3 LCPUFA (RR, 0.58; 95% CI, 0.44-0.77).

With regard to infant health, use of omega-3 LCPUFA during pregnancy was associated in 10 RCTs (7,416 women) with a potential reduced risk of perinatal mortality (RR, 0.75; 95% CI, 0.54-1.03) and, in 9 RCTs (6,920 women), a potential reduced risk of neonatal intensive care admission (RR, 0.92; 95% CI, 0.83-1.03). The researchers noted that omega-3 use in 15 trials (8,449 women) was potentially associated with a reduced number of babies with low birth weight (RR, 0.90; 95% CI, 0.82-0.99), but also with a possible increase in babies who were large for their gestational age in 6 RCTs (3,722 women; RR, 1.15; 95% CI, 0.97-1.36). There was no significant difference among groups with regard to babies who were born small for their gestational age or with intrauterine growth restriction, they said.
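For readers who want to check how the percentages relate to the reported relative risks, the short Python sketch below converts each RR into a percent change in risk and flags whether its 95% confidence interval excludes 1 (the value that indicates no difference). It is only an illustration of the arithmetic, not the reviewers' analysis; the function and variable names are hypothetical, and the figures are the ones quoted in this article.

```python
# (outcome, relative risk, 95% CI lower bound, 95% CI upper bound),
# copied from the figures quoted in this article.
REPORTED = [
    ("preterm birth <37 weeks", 0.89, 0.81, 0.97),
    ("preterm birth <34 weeks", 0.58, 0.44, 0.77),
    ("perinatal mortality", 0.75, 0.54, 1.03),
    ("neonatal intensive care admission", 0.92, 0.83, 1.03),
    ("low birth weight", 0.90, 0.82, 0.99),
    ("large for gestational age", 1.15, 0.97, 1.36),
]


def percent_change(rr: float) -> float:
    """Percent change in risk implied by a relative risk (negative = lower risk)."""
    return (rr - 1.0) * 100.0


def ci_excludes_one(lower: float, upper: float) -> bool:
    """True when the whole confidence interval lies on one side of RR = 1."""
    return lower > 1.0 or upper < 1.0


def summarize(rows):
    """Return (outcome, rounded percent change, CI-excludes-1 flag) per row."""
    return [
        (name, round(percent_change(rr)), ci_excludes_one(lo, hi))
        for name, rr, lo, hi in rows
    ]


if __name__ == "__main__":
    for name, change, excludes in summarize(REPORTED):
        note = "CI excludes 1" if excludes else "CI includes 1"
        print(f"{name}: {change:+d}% ({note})")
```

Under this arithmetic, an RR of 0.89 corresponds to the 11% reduction and an RR of 0.58 to the 42% reduction cited for preterm birth, while the intervals for perinatal mortality, neonatal intensive care admission, and large-for-gestational-age babies all include 1.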

While maternal outcomes were examined, Dr. Middleton and her colleagues found no significant differences between groups in factors such as postterm induction, serious adverse events, admission to intensive care, and postnatal depression.

“Ultimately, we hope this review will make a real contribution to the evidence base we need to reduce premature births, which continue to be one of the most pressing and intractable maternal and child health problems in every country around the world,” Dr. Middleton said.

The National Institutes of Health funded the review. The authors reported no conflicts of interest.

SOURCE: Middleton P et al. Cochrane Database Syst Rev. 2018; doi: 10.1002/14651858.CD003402.pub3.

FROM COCHRANE DATABASE OF SYSTEMATIC REVIEWS

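Key clinical point: Taking omega-3 long-chain polyunsaturated fatty acids during pregnancy was associated with a reduced risk of preterm birth.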

Major finding: The risk of preterm birth before 37 weeks was 11% lower in 26 randomized controlled trials (10,304 women), and the risk of preterm birth before 34 weeks was 42% lower in 9 RCTs (5,204 women), for women taking omega-3, compared with women not taking omega-3.

Study details: A Cochrane review of 70 RCTs with a total of 19,927 women at varying levels of risk for preterm birth who received omega-3 long-chain polyunsaturated fatty acids, placebo, or no omega-3.

Disclosures: The National Institutes of Health funded the review. The authors reported no conflicts of interest.

Source: Middleton P et al. Cochrane Database Syst Rev. 2018. doi: 10.1002/14651858.CD003402.pub3.

Sofa and bed injuries very common among young children

ORLANDO – Injuries related to beds and sofas in children aged under 5 years occur more than twice as frequently as injuries related to stairs, according to new research.

“Findings from our analysis reveal that it is an important source of injury to young children and a leading cause of trauma to infants,” concluded David S. Liu, of Baylor College of Medicine, Houston, who presented the findings at the annual meeting of the American Academy of Pediatrics.

“The rate of bed- and sofa-related injuries is increasing, which underscores the need for increased prevention efforts, including parental education and improved safety design, to decrease soft furniture injuries among young children,” Mr. Liu and his colleagues wrote.

The researchers used the National Electronic Injury Surveillance System of the U.S. Consumer Product Safety Commission to conduct a retrospective analysis of injuries related to sofas and beds from 2007 to 2016.

They found that an estimated 2.3 million children aged under 5 years were treated for injuries related to soft furniture during those years, an average of 230,026 injuries a year, or 115 injuries per 10,000 children. To the surprise of the researchers, injuries related to beds and sofas were the most common types of accidental injury in that age group, occurring 2.5 times more often than stair-related injuries, which occurred at a rate of 47 per 10,000 population.
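The figures in the preceding paragraph can be cross-checked with a few lines of arithmetic. The Python sketch below is illustrative only: the constant and function names are hypothetical, the inputs are the rounded values quoted above, and the population figure it derives is merely implied by those values rather than reported by the researchers.

```python
# Rounded figures as quoted in the article.
TOTAL_INJURIES = 2_300_000      # estimated injuries over the study period
AVG_INJURIES_PER_YEAR = 230_026
SOFT_FURNITURE_RATE = 115       # injuries per 10,000 children per year
STAIR_RATE = 47                 # injuries per 10,000 children per year


def implied_years(total: float, per_year: float) -> float:
    """Number of years implied by a total and an annual average."""
    return total / per_year


def rate_ratio(rate_a: float, rate_b: float) -> float:
    """How many times as often event A occurs as event B."""
    return rate_a / rate_b


def implied_population(per_year: float, rate_per_10k: float) -> float:
    """Population size implied by an annual count and a rate per 10,000."""
    return per_year / rate_per_10k * 10_000


if __name__ == "__main__":
    years = implied_years(TOTAL_INJURIES, AVG_INJURIES_PER_YEAR)
    ratio = rate_ratio(SOFT_FURNITURE_RATE, STAIR_RATE)
    population = implied_population(AVG_INJURIES_PER_YEAR, SOFT_FURNITURE_RATE)
    print(f"Years of data implied: {years:.1f}")
    print(f"Soft furniture vs. stairs: {ratio:.2f} times as often")
    print(f"Implied children under 5: {population / 1e6:.1f} million")
```

The ratio of the two rates comes out at roughly 2.45, in line with the "2.5 times" comparison, and the annual average matches a total of about 2.3 million injuries spread over roughly 10 years of data.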

Boys were slightly more likely to be injured, making up 56% of all the cases. Soft tissue/internal organ injuries were most common, comprising 28% of all injuries, followed by lacerations in 24% of cases, abrasions in 15%, and fractures in 14%.

More than half the children (61%) sustained injuries to the head or face, and 3% were hospitalized for their injuries. Although infants (under 1 year old) accounted for only 28% of children injured, they were twice as likely to be hospitalized as older children.

The researchers also identified increases in injuries over the time period studied. Bed-related injuries increased 17% from 2007 to 2016, and sofa/couch-related injuries increased 17% during that period.

Although the vast majority of children were treated and released, approximately 4% of children were admitted or treated and transferred to another facility. Overall, an estimated 3,361 children died during the 9-year period, translating to a little over 370 children a year.

In a video interview, Mr. Liu discussed the implications of these findings.

“We know how dangerous car accidents and staircases are, and we often recommend car seats and stair gates for those,” Mr. Liu said. “Obviously we can’t put a gate or a barrier on every single sofa, couch, and bed in America, so as clinicians and parents, the best we can do is keep aware of how dangerous these items are. Just because of their soft nature doesn’t mean they’re inherently safer.”

The researchers reported no disclosures and the research received no external funding.

REPORTING FROM AAP 2018

Key clinical point: Injuries from beds and sofas/couches are common in children aged under 5 years, occurring 2.5 times more frequently than stair-related injuries.

Major finding: An estimated 115 bed/sofa-related injuries per 10,000 children occur every year.

Study details: The findings are based on a retrospective analysis of injuries related to sofas and beds from 2007 to 2016.

Disclosures: The researchers reported no disclosures and the research received no external funding.

Allergy Testing in Dermatology and Beyond

Allergy testing typically refers to evaluation of a patient for suspected type I or type IV hypersensitivity.1,2 The possibility of type I hypersensitivity is raised in patients presenting with food allergies, allergic rhinitis, asthma, and immediate adverse reactions to medications, whereas type IV hypersensitivity is suspected in patients with eczematous eruptions, delayed adverse cutaneous reactions to medications, and failure of metallic implants (eg, metal joint replacements, cardiac stents) in conjunction with overlying skin rashes (Table 1).1-5 Type II (eg, pemphigus vulgaris) and type III (eg, IgA vasculitis) hypersensitivities are not evaluated with screening allergy tests.

Type I Sensitization

Type I hypersensitivity is an immediate hypersensitivity mediated predominantly by IgE activation of mast cells in the skin as well as the respiratory and gastric mucosa.1 Sensitization of an individual patient occurs when antigen-presenting cells induce a type 2 helper T cell (TH2) cytokine response leading to B-cell class switching and allergen-specific IgE production. Upon repeat exposure to the allergen, circulating antibodies then bind to high-affinity receptors on mast cells and basophils and initiate an allergic inflammatory response, leading to a clinical presentation of allergic rhinitis, urticaria, or immediate drug reactions. Type I sensitization may be confirmed via serologic (in vitro) or skin (in vivo) testing.5,6

Serologic Testing (In Vitro)

Serologic testing is a blood test that detects circulating IgE levels against specific allergens.5 The first such test, the radioallergosorbent test, was introduced in the 1970s but is not quantitative and is no longer used. Although the practice is common, it is inaccurate to describe current serum IgE (s-IgE) testing as radioallergosorbent testing. There are several US Food and Drug Administration-approved s-IgE assays in common use, and these tests may be helpful in elucidating relevant allergens and in tailoring therapy appropriately, which may consist of avoidance of certain foods or environmental agents and/or allergen immunotherapy.

Skin Testing (In Vivo)

Skin testing can be performed percutaneously (ie, percutaneous skin testing) or intradermally (ie, intradermal testing).6 Percutaneous skin testing is performed by placing a drop of allergen extract on the skin, after which a lancet is used to lightly scratch the skin; intradermal testing is performed by injecting a small amount of allergen extract into the dermis. In both cases, the skin is evaluated after 15 to 20 minutes for the presence and size of a cutaneous wheal. Medications with antihistaminergic activity must be discontinued prior to testing. Both s-IgE and skin testing assess for type I hypersensitivity, and factors such as extensive rash, concern for anaphylaxis, or inability to discontinue antihistamines may favor s-IgE testing over skin testing. False-positive results can occur with both tests, and for this reason, test results should always be interpreted in conjunction with clinical examination and patient history to determine relevant allergies.

Type IV Sensitization

Type IV hypersensitivity is a delayed hypersensitivity mediated primarily by lymphocytes.2 Sensitization occurs when haptens bind to host proteins and are presented by epidermal and dermal dendritic cells to T lymphocytes in the skin. These lymphocytes then migrate to regional lymph nodes where antigen-specific T lymphocytes are produced and home back to the skin. Upon reexposure to the allergen, these memory T lymphocytes become activated and incite a delayed allergic response. Confirming type IV hypersensitivity primarily is accomplished via patch testing, though other testing modalities exist.

Skin Biopsy

Biopsy is sometimes performed in the workup of an individual presenting with allergic contact dermatitis (ACD) and typically will show spongiosis with normal stratum corneum and epidermal thickness in the setting of acute ACD and mild to marked acanthosis and parakeratosis in chronic ACD.7 The findings, however, are nonspecific and the differential of these histopathologic findings encompasses nummular dermatitis, atopic dermatitis, irritant contact dermatitis, and dyshidrotic eczema, among others. The presence of eosinophils and Langerhans cell microabscesses may provide supportive evidence for ACD over the other spongiotic dermatitides.7,8

Patch Testing

Patch testing is the gold standard in diagnosing type IV hypersensitivities resulting in a clinical presentation of ACD. Hundreds of allergens are commercially available for patch testing, and more commonly tested allergens fall into one of several categories, such as cosmetic preservatives, rubbers, metals, textiles, fragrances, adhesives, antibiotics, plants, and even corticosteroids. Of note, a common misconception is that ACD must result from new exposures; however, patients may develop ACD secondary to an exposure or product they have been using for many years without a problem.

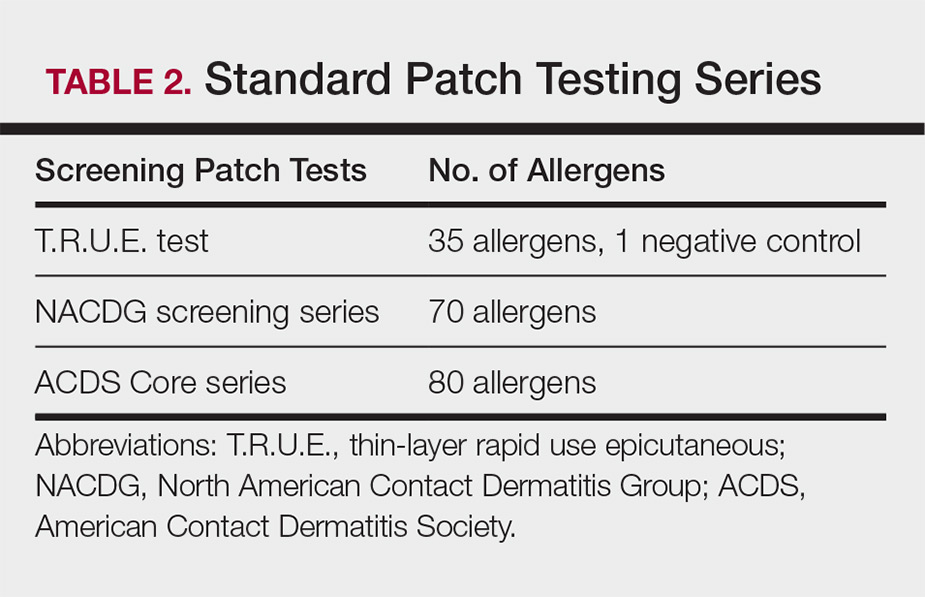

Three commonly used screening series are the thin-layer rapid use epicutaneous (T.R.U.E.) test (SmartPractice), North American Contact Dermatitis Group screening series, and American Contact Dermatitis Society Core 80 allergen series, which have some variation in the type and number of allergens included (Table 2). The T.R.U.E. test will miss a notable number of clinically relevant allergens in comparison to the North American Contact Dermatitis Group and American Contact Dermatitis Society Core series, and it may be of particularly low utility in identifying fragrance or preservative ACD.9

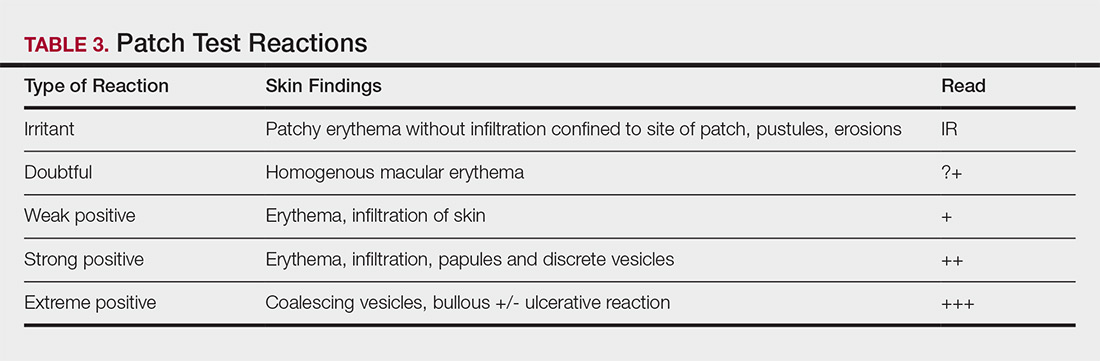

Allergens are placed on the back in chambers in a petrolatum or aqueous medium. The patches remain affixed for 48 hours, during which time the patient is asked to refrain from showering or exercising to prevent loss of patches. The patient's skin is then evaluated for reactions to allergens on 2 separate occasions: first at the time of patch removal 48 hours after initial placement, when the patch sites are marked, and again at a delayed reading on day 4 to day 7 after initial patch placement. Results are scored based on the degree of the inflammatory reaction (Table 3). Delayed readings beyond day 7 may be necessary for metals, specific preservatives (eg, dodecyl gallate, propolis), and neomycin.10