Regional Variation in Standardized Costs of Care at Children’s Hospitals

With some areas of the country spending close to 3 times more on healthcare than others, regional variation in healthcare spending has been the focus of national attention.1-7 Since 1973, the Dartmouth Institute has studied regional variation in healthcare utilization and spending and concluded that variation is “unwarranted” because it is driven by providers’ practice patterns rather than differences in medical need, patient preferences, or evidence-based medicine.8-11 However, critics of the Dartmouth Institute’s findings argue that their approach does not adequately adjust for community-level income, and that higher costs in some areas reflect greater patient needs that are not reflected in illness acuity alone.12-14

While Medicare data have made it possible to study variations in spending for the senior population, fragmentation of insurance coverage and nonstandardized data structures make studying the pediatric population more difficult. However, the Children’s Hospital Association’s (CHA) Pediatric Health Information System (PHIS) has made large-scale comparisons more feasible. By avoiding the challenges associated with using charges and nonuniform cost data, PHIS-derived standardized costs provide new opportunities for comparisons.15,16 Initial analyses using PHIS data showed significant interhospital variations in costs of care,15 but they did not adjust for differences in populations or assess the drivers of variation. A more recent study that controlled for payer status, comorbidities, and illness severity found that intensive care unit (ICU) utilization varied significantly for children hospitalized for asthma, suggesting that hospital practice patterns drive differences in cost.17

This study uses PHIS data to analyze regional variations in standardized costs of care for 3 conditions for which children are hospitalized. To assess potential drivers of variation, the study investigates the effects of patient-level demographic and illness-severity variables as well as encounter-level variables on costs of care. It also estimates cost savings from reducing variation.

METHODS

Data Source

This retrospective cohort study uses the PHIS database (CHA, Overland Park, KS), which includes 48 freestanding children’s hospitals located in noncompeting markets across the United States and accounts for approximately 20% of pediatric hospitalizations. PHIS includes patient demographics, International Classification of Diseases, 9th Revision (ICD-9) diagnosis and procedure codes, as well as hospital charges. In addition to total charges, PHIS reports imaging, laboratory, pharmacy, and “other” charges. The “other” category aggregates clinical, supply, room, and nursing charges (including facility fees and ancillary staff services).

Inclusion Criteria

Inpatient- and observation-status hospitalizations for asthma, diabetic ketoacidosis (DKA), and acute gastroenteritis (AGE) at 46 PHIS hospitals from October 2014 to September 2015 were included. Two hospitals were excluded because of missing data. Hospitalizations for patients >18 years were excluded.

Hospitalizations were categorized by using All Patient Refined-Diagnosis Related Groups (APR-DRGs) version 24 (3M Health Information Systems, St. Paul, MN)18 based on the ICD-9 diagnosis and procedure codes assigned during the episode of care. Analyses included APR-DRG 141 (asthma), primary diagnosis ICD-9 codes 250.11 and 250.13 (DKA), and APR-DRG 249 (AGE). ICD-9 codes were used for DKA for increased specificity.19 These conditions were chosen to represent 3 clinical scenarios: (1) a diagnosis for which hospitals differ on whether certain aspects of care are provided in the ICU (asthma), (2) a diagnosis that frequently includes care in an ICU (DKA), and (3) a diagnosis that typically does not include ICU care (AGE).19

Study Design

To focus the analysis on variation in resource utilization across hospitals rather than variations in hospital item charges, each billed resource was assigned a standardized cost.15,16 For each clinical transaction code (CTC), the median unit cost was calculated for each hospital. The median of the hospital medians was defined as the standardized unit cost for that CTC.
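The two-step median described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `(hospital_id, ctc, unit_cost)` record layout, the function name, and the sample values are hypothetical and do not reflect the actual PHIS schema or data.

```python
from collections import defaultdict
from statistics import median


def standardized_unit_costs(transactions):
    """Return {ctc: standardized unit cost}.

    `transactions` is an iterable of (hospital_id, ctc, unit_cost) tuples.
    This layout is a hypothetical stand-in, not the PHIS record format.
    """
    # Group unit costs by CTC, then by hospital.
    by_ctc = defaultdict(lambda: defaultdict(list))
    for hospital_id, ctc, unit_cost in transactions:
        by_ctc[ctc][hospital_id].append(unit_cost)

    standardized = {}
    for ctc, by_hospital in by_ctc.items():
        # Step 1: median unit cost within each hospital.
        hospital_medians = [median(costs) for costs in by_hospital.values()]
        # Step 2: median of the hospital medians.
        standardized[ctc] = median(hospital_medians)
    return standardized


# Made-up unit costs for a single CTC at three hospitals.
example = [
    ("H1", "CTC_A", 10.0), ("H1", "CTC_A", 14.0), ("H1", "CTC_A", 12.0),
    ("H2", "CTC_A", 20.0), ("H2", "CTC_A", 22.0),
    ("H3", "CTC_A", 30.0),
]
costs = standardized_unit_costs(example)
print(costs)  # {'CTC_A': 21.0}
```

Taking the median of hospital medians, rather than pooling all transactions, keeps a single high-volume hospital from dominating the standardized unit cost.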

The primary outcome variable was the total standardized cost for the hospitalization adjusted for patient-level demographic and illness-severity variables. Patient demographic and illness-severity covariates included age, race, gender, ZIP code-based median annual household income (HHI), rural-urban location, distance from home ZIP code to the hospital, chronic condition indicator (CCI), and severity-of-illness (SOI). When assessing drivers of variation, encounter-level covariates were added, including length of stay (LOS) in hours, ICU utilization, and 7-day readmission (an imprecise measure to account for quality of care during the index visit). The contribution of imaging, laboratory, pharmacy, and “other” costs was also considered.

Median annual HHI for patients’ home ZIP code was obtained from 2010 US Census data. Community-level HHI, a proxy for socioeconomic status (SES),20,21 was classified into categories based on the 2015 US federal poverty level (FPL) for a family of 4 (reference 22): HHI-1 = ≤ 1.5 × FPL; HHI-2 = 1.5 to 2 × FPL; HHI-3 = 2 to 3 × FPL; HHI-4 = ≥ 3 × FPL. Rural-urban commuting area (RUCA) codes were used to determine the rural-urban classification of the patient’s home.23 The distance from home ZIP code to the hospital was included as an additional control for illness severity because patients traveling longer distances are often sicker and require more resources.24
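As an illustration of the HHI categories, the following Python sketch maps a ZIP code-level median income to a category. Two points are assumptions rather than details given in the text: the FPL value of $24,250 (the 2015 HHS poverty guideline for a family of 4 in the contiguous states) and the handling of incomes that fall exactly on the 2 × FPL boundary, which the category definitions leave ambiguous. The function name is hypothetical.

```python
# Assumed value: 2015 HHS poverty guideline, family of 4, contiguous US (USD).
FPL_2015_FAMILY_OF_4 = 24_250


def hhi_category(median_hhi, fpl=FPL_2015_FAMILY_OF_4):
    """Classify a ZIP code-level median household income into HHI-1..HHI-4."""
    ratio = median_hhi / fpl
    if ratio <= 1.5:
        return "HHI-1"
    if ratio < 2:  # assumption: exactly 2 x FPL is placed in HHI-3
        return "HHI-2"
    if ratio < 3:
        return "HHI-3"
    return "HHI-4"  # >= 3 x FPL, as stated in the category definition


for income in (30_000, 40_000, 60_000, 80_000):
    print(income, hhi_category(income))
```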

The Agency for Healthcare Research and Quality CCI classification system was used to identify the presence of a chronic condition.25 For asthma, CCI was flagged if the patient had a chronic condition other than asthma; for DKA, CCI was flagged if the patient had a chronic condition other than DKA; and for AGE, CCI was flagged if the patient had any chronic condition.

The APR-DRG system provides a 4-level SOI score with each APR-DRG category. Patient factors, such as comorbid diagnoses, are considered in severity scores generated through 3M’s proprietary algorithms.18

For the first analysis, the 46 hospitals were categorized into 7 geographic regions based on 2010 US Census Divisions.26 To overcome small hospital sample sizes, Mountain and Pacific were combined into West, and Middle Atlantic and New England were combined into North East. Because PHIS hospitals are located in noncompeting geographic regions, for the second analysis, we examined hospital-level variation (considering each hospital as its own region).
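The regional grouping can be written as a simple lookup from the 9 US Census Divisions to the 7 analysis regions. The sketch below encodes only the two merges stated in the text; the dictionary name is hypothetical.

```python
# Map each US Census Division to the analysis region used in this study.
DIVISION_TO_REGION = {
    "New England": "North East",       # merged
    "Middle Atlantic": "North East",   # merged
    "East North Central": "East North Central",
    "West North Central": "West North Central",
    "South Atlantic": "South Atlantic",
    "East South Central": "East South Central",
    "West South Central": "West South Central",
    "Mountain": "West",                # merged
    "Pacific": "West",                 # merged
}

regions = sorted(set(DIVISION_TO_REGION.values()))
print(len(regions), regions)
```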

Data Analysis

To focus the analysis on “typical” patients and produce more robust estimates of central tendencies, the top and bottom 5% of hospitalizations with the most extreme standardized costs by condition were trimmed.27 Standardized costs were log-transformed because of their nonnormal distribution and analyzed by using linear mixed models. Covariates were added stepwise to assess the proportion of the variance explained by each predictor. Post-hoc tests with conservative single-step studentized maximum modulus (SMM) corrections for multiple testing were used to compare adjusted costs. Statistical analyses were performed using SAS version 9.3 (SAS Institute, Cary, NC). P values < 0.05 were considered significant. The Children’s Hospital of Philadelphia Institutional Review Board did not classify this study as human subjects research.
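The trimming and log transformation can be sketched as follows. This is a minimal illustration, not the SAS procedure used in the study: it assumes rank-based trimming within one condition, rounds the number of trimmed records down, and uses made-up costs. The function name is hypothetical.

```python
import math


def trim_and_log(costs, percent=5):
    """Drop the lowest and highest `percent` of costs, then take natural logs.

    Assumption: trimming is rank-based and the count removed from each
    tail is rounded down; the source does not specify these details.
    """
    ordered = sorted(costs)
    k = len(ordered) * percent // 100  # records removed from each tail
    kept = ordered[k:len(ordered) - k]
    return [math.log(c) for c in kept]


# Twenty made-up standardized costs for one condition: 100, 200, ..., 2000.
example_costs = [float(c) for c in range(100, 2100, 100)]
logged = trim_and_log(example_costs)
print(len(logged))  # 18: one record trimmed from each tail
```

In the study, trimming was applied separately by condition, so a function like this would be called once per condition before model fitting.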

RESULTS

During the study period, there were 26,430 hospitalizations for asthma, 5056 for DKA, and 16,274 for AGE (Table 1).

Variation Across Census Regions

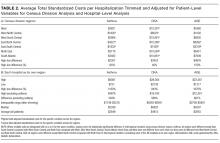

After adjusting for patient-level demographic and illness-severity variables, differences in adjusted total standardized costs remained between regions (P < 0.001). Although no region was an outlier compared to the overall mean for any of the conditions, regions were statistically different in pairwise comparison. The East North Central, South Atlantic, and West South Central regions had the highest adjusted total standardized costs for each of the conditions. The East South Central and West North Central regions had the lowest costs for each of the conditions. Adjusted total standardized costs were 120% higher for asthma ($1920 vs $4227), 46% higher for DKA ($7429 vs $10,881), and 150% higher for AGE ($3316 vs $8292) in the highest-cost region compared with the lowest-cost region (Table 2A).
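The percentage differences quoted above are relative differences between the lowest- and highest-cost regions. A short Python check, using only the dollar figures reported in this paragraph (the function name is hypothetical), reproduces them:

```python
def percent_higher(low, high):
    """Relative difference of `high` over `low`, in percent."""
    return (high - low) / low * 100


# (lowest-cost region, highest-cost region) adjusted costs in USD, from the text.
regional = {"asthma": (1920, 4227), "DKA": (7429, 10881), "AGE": (3316, 8292)}
rounded = {name: round(percent_higher(lo, hi)) for name, (lo, hi) in regional.items()}
print(rounded)  # {'asthma': 120, 'DKA': 46, 'AGE': 150}
```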

Variation Within Census Regions

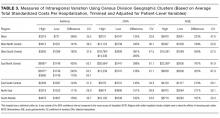

After controlling for patient-level demographic and illness-severity variables, standardized costs were different across hospitals in the same region (P < 0.001; panel A in Figure). This was true for all conditions in each region. Differences between the lowest- and highest-cost hospitals within the same region ranged from 111% to 420% for asthma, 101% to 398% for DKA, and 166% to 787% for AGE (Table 3).

Variation Across Hospitals (Each Hospital as Its Own Region)

One hospital had the highest adjusted standardized costs for all 3 conditions ($9087 for asthma, $28,564 for DKA, and $23,387 for AGE) and was outside of the 95% confidence interval compared with the overall means. The second highest-cost hospitals for asthma ($5977) and AGE ($18,780) were also outside of the 95% confidence interval. After removing these outliers, the difference between the highest- and lowest-cost hospitals was 549% for asthma ($721 vs $4678), 491% for DKA ($2738 vs $16,192), and 681% for AGE ($1317 vs $10,281; Table 2B).

Drivers of Variation Across Census Regions

Patient-level demographic and illness-severity variables explained very little of the variation in standardized costs across regions. For each of the conditions, age, race, gender, community-level HHI, RUCA, and distance from home to the hospital each accounted for <1.5% of variation, while SOI and CCI each accounted for <5%. Overall, patient-level variables explained 5.5%, 3.7%, and 6.7% of variation for asthma, DKA, and AGE.

Encounter-level variables explained a much larger percentage of the variation in costs. LOS accounted for 17.8% of the variation for asthma, 9.8% for DKA, and 8.7% for AGE. ICU utilization explained 6.9% of the variation for asthma and 12.5% for DKA; ICU use was not a major driver for AGE. Seven-day readmissions accounted for <0.5% for each of the conditions. The combination of patient-level and encounter-level variables explained 27%, 24%, and 15% of the variation for asthma, DKA, and AGE.

Drivers of Variation Across Hospitals

For each of the conditions, patient-level demographic variables each accounted for <2% of variation in costs between hospitals. SOI accounted for 4.5% of the variation for asthma and CCI accounted for 5.2% for AGE. Overall, patient-level variables explained 6.9%, 5.3%, and 7.3% of variation for asthma, DKA, and AGE.

Encounter-level variables accounted for a much larger percentage of the variation in cost. LOS explained 25.4% for asthma, 13.3% for DKA, and 14.2% for AGE. ICU utilization accounted for 13.4% for asthma and 21.9% for DKA; ICU use was not a major driver for AGE. Seven-day readmissions accounted for <0.5% for each of the conditions. Together, patient-level and encounter-level variables explained 40%, 36%, and 22% of variation for asthma, DKA, and AGE.

Imaging, Laboratory, Pharmacy, and “Other” Costs

The largest contributor to total costs adjusted for patient-level factors for all conditions was “other,” which aggregates room, nursing, clinical, and supply charges (panel B in Figure). When considering drivers of variation, this category explained >50% for each of the conditions. The next largest contributor to total costs was laboratory charges, which accounted for 15% of the variation across regions for asthma and 11% for DKA. Differences in imaging accounted for 18% of the variation for DKA and 15% for AGE. Differences in pharmacy charges accounted for <4% of the variation for each of the conditions. Adding the 4 cost components to the other patient- and encounter-level covariates, the model explained 81%, 78%, and 72% of the variation across census regions for asthma, DKA, and AGE.

For the hospital-level analysis, differences in “other” remained the largest driver of cost variation. For asthma, “other” explained 61% of variation, while pharmacy, laboratory, and imaging each accounted for <8%. For DKA, differences in imaging accounted for 18% of the variation and laboratory charges accounted for 12%. For AGE, imaging accounted for 15% of the variation. Adding the 4 cost components to the other patient- and encounter-level covariates, the model explained 81%, 72%, and 67% of the variation for asthma, DKA, and AGE.

Cost Savings

If all hospitals in this cohort with adjusted standardized costs above the national PHIS average achieved costs equal to the national PHIS average, estimated annual savings in adjusted standardized costs for these 3 conditions would be $69.1 million. If each hospital with adjusted costs above the average within its census region achieved costs equal to its regional average, estimated annual savings in adjusted standardized costs for these conditions would be $25.2 million.
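The text does not spell out how the savings estimates were computed. One plausible reading, sketched below in Python, is that each hospital above a benchmark is brought down to the benchmark and the per-hospitalization difference is multiplied by that hospital's volume. The volume weighting, the volume-weighted averages, the function names, and all numbers are assumptions for illustration only.

```python
def weighted_mean(hospitals):
    """Volume-weighted mean cost; `hospitals` is a list of (mean_cost, n) pairs."""
    total_cost = sum(cost * n for cost, n in hospitals)
    total_n = sum(n for _, n in hospitals)
    return total_cost / total_n


def savings_to_benchmark(hospitals, benchmark):
    """Savings if every hospital above `benchmark` achieved the benchmark cost."""
    return sum((cost - benchmark) * n for cost, n in hospitals if cost > benchmark)


# Made-up adjusted mean costs (USD) and hospitalization counts, by region.
by_region = {
    "East": [(3000.0, 100), (5000.0, 100)],
    "West": [(6000.0, 100), (8000.0, 100)],
}
all_hospitals = [h for hospitals in by_region.values() for h in hospitals]

# Scenario 1: high-cost hospitals move to the overall (national) average.
national_savings = savings_to_benchmark(all_hospitals, weighted_mean(all_hospitals))

# Scenario 2: high-cost hospitals move to their own regional average.
regional_savings = sum(
    savings_to_benchmark(hospitals, weighted_mean(hospitals))
    for hospitals in by_region.values()
)
print(national_savings, regional_savings)  # 300000.0 200000.0
```

In this toy example, as in the study, the regional benchmark yields smaller savings than the national one because hospitals in a high-cost region are compared only with their neighbors.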

DISCUSSION

This study reported on the regional variation in costs of care for 3 conditions treated at 46 children’s hospitals across 7 geographic regions, and it demonstrated that variations in costs of care exist in pediatrics. This study used standardized costs to compare utilization patterns across hospitals and adjusted for several patient-level demographic and illness-severity factors, and it found that differences in costs of care for children hospitalized with asthma, DKA, and AGE remained both between and within regions.

These variations are noteworthy, as hospitals strive to improve the value of healthcare. If the higher-cost hospitals in this cohort could achieve costs equal to the national PHIS averages, estimated annual savings in adjusted standardized costs for these conditions alone would equal $69.1 million. If higher-cost hospitals relative to the average in their own region reduced costs to their regional averages, annual standardized cost savings could equal $25.2 million for these conditions.

The differences observed are also significant in that they provide a foundation for exploring whether lower-cost regions or lower-cost hospitals achieve comparable quality outcomes.28 If so, studying what those hospitals do to achieve outcomes more efficiently can serve as the basis for the establishment of best practices.29 Standardizing best practices through protocols, pathways, and care-model redesign can reduce potentially unnecessary spending.30

Our findings showed that patient-level demographic and illness-severity covariates, including community-level HHI and SOI, did not consistently explain cost differences. Instead, LOS and ICU utilization were associated with higher costs.17,19 When considering the effect of the 4 cost components on the variation in total standardized costs between regions and between hospitals, the fact that the “other” category accounted for the largest percentage of the variation is not surprising, because the cost of room occupancy and nursing services increases with longer LOS and more time in the ICU. Other individual cost components that were major drivers of variation were laboratory utilization for asthma and imaging for DKA and AGE (reference 31), though they accounted for a much smaller proportion of total adjusted costs.19

To determine if these factors are modifiable, more information is needed to explain why practices differ. Many factors may contribute to varying utilization patterns, including differences in capabilities and resources (in the hospital and in the community) and patient volumes. For example, some hospitals provide continuous albuterol for status asthmaticus only in ICUs, while others provide it on regular units.32 But if certain hospitals do not have adequate resources or volumes to effectively care for certain populations outside of the ICU, their higher-value approach (considering quality and cost) may be to utilize ICU beds, even if some other hospitals care for those patients on non-ICU floors. Another possibility is that family preferences about care delivery (such as how long children stay in the hospital) may vary across regions.33

Other evidence suggests that physician practice and spending patterns are strongly influenced by the practices of the region where they trained.34 Because physicians often practice close to where they trained,35,36 this may partially explain how regional patterns are reinforced.

Even considering all mentioned covariates, our model did not fully explain variation in standardized costs. After adding the cost components as covariates, between one-fifth and one-third of the variation (19% to 33%, depending on the condition and the level of analysis) remained unexplained. It is possible that this unexplained variation stemmed from unmeasured patient-level factors.

In addition, while proxies for SES, including community-level HHI, did not significantly predict differences in costs across regions, it is possible that SES affected LOS differently in different regions. Previous studies have suggested that lower SES is associated with longer LOS.37 If this effect is more pronounced in certain regions (potentially because of differences in social service infrastructures), SES may be contributing to variations in cost through LOS.

Our findings were subject to limitations. First, this study only examined 3 diagnoses and did not include surgical or less common conditions. Second, while PHIS includes tertiary care, academic, and freestanding children’s hospitals, it does not include general hospitals, which is where most pediatric patients receive care.38 Third, we used ZIP code-based median annual HHI to account for SES, and we used ZIP codes to determine the distance to the hospital and rural-urban location of patients’ homes. These approximations lack precision because SES and distances vary within ZIP codes.39 Fourth, while adjusted standardized costs allow for comparisons between hospitals, they do not represent actual costs to patients or individual hospitals. Additionally, when determining whether variation remained after controlling for patient-level variables, we included SOI as a reflection of illness-severity at presentation. However, in practice, SOI scores may be assigned partially based on factors determined during the hospitalization.18 Finally, the use of other regional boundaries or the selection of different hospitals may yield different results.

CONCLUSION

This study reveals regional variations in costs of care for 3 inpatient pediatric conditions. Future studies should explore whether lower-cost regions or lower-cost hospitals achieve comparable quality outcomes. To the extent that variation is driven by modifiable factors and lower spending does not compromise outcomes, these data may prompt reviews of care models to reduce unwarranted variation and improve the value of care delivery at local, regional, and national levels.

Disclosure

Internal funds from the CHA and The Children’s Hospital of Philadelphia supported the conduct of this work. The authors have no financial interests, relationships, or affiliations relevant to the subject matter or materials discussed in the manuscript to disclose. The authors have no potential conflicts of interest relevant to the subject matter or materials discussed in the manuscript to disclose.

1. Fisher E, Skinner J. Making Sense of Geographic Variations in Health Care: The New IOM Report. 2013; http://healthaffairs.org/blog/2013/07/24/making-sense-of-geographic-variations-in-health-care-the-new-iom-report/. Accessed on April 11, 2014.

With some areas of the country spending close to 3 times more on healthcare than others, regional variation in healthcare spending has been the focus of national attention.1-7 Since 1973, the Dartmouth Institute has studied regional variation in healthcare utilization and spending and concluded that variation is “unwarranted” because it is driven by providers’ practice patterns rather than differences in medical need, patient preferences, or evidence-based medicine.8-11 However, critics of the Dartmouth Institute’s findings argue that their approach does not adequately adjust for community-level income, and that higher costs in some areas reflect greater patient needs that are not reflected in illness acuity alone.12-14

While Medicare data have made it possible to study variations in spending for the senior population, fragmentation of insurance coverage and nonstandardized data structures make studying the pediatric population more difficult. However, the Children’s Hospital Association’s (CHA) Pediatric Health Information System (PHIS) has made large-scale comparisons more feasible. To overcome challenges associated with using charges and nonuniform cost data, PHIS-derived standardized costs provide new opportunities for comparisons.15,16 Initial analyses using PHIS data showed significant interhospital variations in costs of care,15 but they did not adjust for differences in populations and assess the drivers of variation. A more recent study that controlled for payer status, comorbidities, and illness severity found that intensive care unit (ICU) utilization varied significantly for children hospitalized for asthma, suggesting that hospital practice patterns drive differences in cost.17

This study uses PHIS data to analyze regional variations in standardized costs of care for 3 conditions for which children are hospitalized. To assess potential drivers of variation, the study investigates the effects of patient-level demographic and illness-severity variables as well as encounter-level variables on costs of care. It also estimates cost savings from reducing variation.

METHODS

Data Source

This retrospective cohort study uses the PHIS database (CHA, Overland Park, KS), which includes 48 freestanding children’s hospitals located in noncompeting markets across the United States and accounts for approximately 20% of pediatric hospitalizations. PHIS includes patient demographics, International Classification of Diseases, 9th Revision (ICD-9) diagnosis and procedure codes, as well as hospital charges. In addition to total charges, PHIS reports imaging, laboratory, pharmacy, and “other” charges. The “other” category aggregates clinical, supply, room, and nursing charges (including facility fees and ancillary staff services).

Inclusion Criteria

Inpatient- and observation-status hospitalizations for asthma, diabetic ketoacidosis (DKA), and acute gastroenteritis (AGE) at 46 PHIS hospitals from October 2014 to September 2015 were included. Two hospitals were excluded because of missing data. Hospitalizations for patients >18 years were excluded.

Hospitalizations were categorized by using All Patient Refined-Diagnosis Related Groups (APR-DRGs) version 24 (3M Health Information Systems, St. Paul, MN)18 based on the ICD-9 diagnosis and procedure codes assigned during the episode of care. Analyses included APR-DRG 141 (asthma), primary diagnosis ICD-9 codes 250.11 and 250.13 (DKA), and APR-DRG 249 (AGE). ICD-9 codes were used for DKA for increased specificity.19 These conditions were chosen to represent 3 clinical scenarios: (1) a diagnosis for which hospitals differ on whether certain aspects of care are provided in the ICU (asthma), (2) a diagnosis that frequently includes care in an ICU (DKA), and (3) a diagnosis that typically does not include ICU care (AGE).19

Study Design

To focus the analysis on variation in resource utilization across hospitals rather than variations in hospital item charges, each billed resource was assigned a standardized cost.15,16 For each clinical transaction code (CTC), the median unit cost was calculated for each hospital. The median of the hospital medians was defined as the standardized unit cost for that CTC.

The primary outcome variable was the total standardized cost for the hospitalization adjusted for patient-level demographic and illness-severity variables. Patient demographic and illness-severity covariates included age, race, gender, ZIP code-based median annual household income (HHI), rural-urban location, distance from home ZIP code to the hospital, chronic condition indicator (CCI), and severity-of-illness (SOI). When assessing drivers of variation, encounter-level covariates were added, including length of stay (LOS) in hours, ICU utilization, and 7-day readmission (an imprecise measure to account for quality of care during the index visit). The contribution of imaging, laboratory, pharmacy, and “other” costs was also considered.

Median annual HHI for patients’ home ZIP code was obtained from 2010 US Census data. Community-level HHI, a proxy for socioeconomic status (SES),20,21 was classified into categories based on the 2015 US federal poverty level (FPL) for a family of 422: HHI-1 = ≤ 1.5 × FPL; HHI-2 = 1.5 to 2 × FPL; HHI-3 = 2 to 3 × FPL; HHI-4 = ≥ 3 × FPL. Rural-urban commuting area (RUCA) codes were used to determine the rural-urban classification of the patient’s home.23 The distance from home ZIP code to the hospital was included as an additional control for illness severity because patients traveling longer distances are often more sick and require more resources.24

The Agency for Healthcare Research and Quality CCI classification system was used to identify the presence of a chronic condition.25 For asthma, CCI was flagged if the patient had a chronic condition other than asthma; for DKA, CCI was flagged if the patient had a chronic condition other than DKA; and for AGE, CCI was flagged if the patient had any chronic condition.

The APR-DRG system provides a 4-level SOI score with each APR-DRG category. Patient factors, such as comorbid diagnoses, are considered in severity scores generated through 3M’s proprietary algorithms.18

For the first analysis, the 46 hospitals were categorized into 7 geographic regions based on 2010 US Census Divisions.26 To overcome small hospital sample sizes, Mountain and Pacific were combined into West, and Middle Atlantic and New England were combined into North East. Because PHIS hospitals are located in noncompeting geographic regions, for the second analysis, we examined hospital-level variation (considering each hospital as its own region).

Data Analysis

To focus the analysis on “typical” patients and produce more robust estimates of central tendencies, the top and bottom 5% of hospitalizations with the most extreme standardized costs by condition were trimmed.27 Standardized costs were log-transformed because of their nonnormal distribution and analyzed by using linear mixed models. Covariates were added stepwise to assess the proportion of the variance explained by each predictor. Post-hoc tests with conservative single-step stepwise mutation model corrections for multiple testing were used to compare adjusted costs. Statistical analyses were performed using SAS version 9.3 (SAS Institute, Cary, NC). P values < 0.05 were considered significant. The Children’s Hospital of Philadelphia Institutional Review Board did not classify this study as human subjects research.

RESULTS

During the study period, there were 26,430 hospitalizations for asthma, 5056 for DKA, and 16,274 for AGE (Table 1).

Variation Across Census Regions

After adjusting for patient-level demographic and illness-severity variables, differences in adjusted total standardized costs remained between regions (P < 0.001). Although no region was an outlier compared to the overall mean for any of the conditions, regions were statistically different in pairwise comparison. The East North Central, South Atlantic, and West South Central regions had the highest adjusted total standardized costs for each of the conditions. The East South Central and West North Central regions had the lowest costs for each of the conditions. Adjusted total standardized costs were 120% higher for asthma ($1920 vs $4227), 46% higher for DKA ($7429 vs $10,881), and 150% higher for AGE ($3316 vs $8292) in the highest-cost region compared with the lowest-cost region (Table 2A).

Variation Within Census Regions

After controlling for patient-level demographic and illness-severity variables, standardized costs were different across hospitals in the same region (P < 0.001; panel A in Figure). This was true for all conditions in each region. Differences between the lowest- and highest-cost hospitals within the same region ranged from 111% to 420% for asthma, 101% to 398% for DKA, and 166% to 787% for AGE (Table 3).

Variation Across Hospitals (Each Hospital as Its Own Region)

One hospital had the highest adjusted standardized costs for all 3 conditions ($9087 for asthma, $28,564 for DKA, and $23,387 for AGE) and was outside of the 95% confidence interval compared with the overall means. The second highest-cost hospitals for asthma ($5977) and AGE ($18,780) were also outside of the 95% confidence interval. After removing these outliers, the difference between the highest- and lowest-cost hospitals was 549% for asthma ($721 vs $4678), 491% for DKA ($2738 vs $16,192), and 681% for AGE ($1317 vs $10,281; Table 2B).

Drivers of Variation Across Census Regions

Patient-level demographic and illness-severity variables explained very little of the variation in standardized costs across regions. For each of the conditions, age, race, gender, community-level HHI, RUCA, and distance from home to the hospital each accounted for <1.5% of variation, while SOI and CCI each accounted for <5%. Overall, patient-level variables explained 5.5%, 3.7%, and 6.7% of variation for asthma, DKA, and AGE.

Encounter-level variables explained a much larger percentage of the variation in costs. LOS accounted for 17.8% of the variation for asthma, 9.8% for DKA, and 8.7% for AGE. ICU utilization explained 6.9% of the variation for asthma and 12.5% for DKA; ICU use was not a major driver for AGE. Seven-day readmissions accounted for <0.5% for each of the conditions. The combination of patient-level and encounter-level variables explained 27%, 24%, and 15% of the variation for asthma, DKA, and AGE.

Drivers of Variation Across Hospitals

For each of the conditions, patient-level demographic variables each accounted for <2% of variation in costs between hospitals. SOI accounted for 4.5% of the variation for asthma and CCI accounted for 5.2% for AGE. Overall, patient-level variables explained 6.9%, 5.3%, and 7.3% of variation for asthma, DKA, and AGE.

Encounter-level variables accounted for a much larger percentage of the variation in cost. LOS explained 25.4% for asthma, 13.3% for DKA, and 14.2% for AGE. ICU utilization accounted for 13.4% for asthma and 21.9% for DKA; ICU use was not a major driver for AGE. Seven-day readmissions accounted for <0.5% for each of the conditions. Together, patient-level and encounter-level variables explained 40%, 36%, and 22% of variation for asthma, DKA, and AGE.

Imaging, Laboratory, Pharmacy, and “Other” Costs

The largest contributor to total costs adjusted for patient-level factors for all conditions was “other,” which aggregates room, nursing, clinical, and supply charges (panel B in Figure). When considering drivers of variation, this category explained >50% for each of the conditions. The next largest contributor to total costs was laboratory charges, which accounted for 15% of the variation across regions for asthma and 11% for DKA. Differences in imaging accounted for 18% of the variation for DKA and 15% for AGE. Differences in pharmacy charges accounted for <4% of the variation for each of the conditions. Adding the 4 cost components to the other patient- and encounter-level covariates, the model explained 81%, 78%, and 72% of the variation across census regions for asthma, DKA, and AGE.

For the hospital-level analysis, differences in “other” remained the largest driver of cost variation. For asthma, “other” explained 61% of variation, while pharmacy, laboratory, and imaging each accounted for <8%. For DKA, differences in imaging accounted for 18% of the variation and laboratory charges accounted for 12%. For AGE, imaging accounted for 15% of the variation. Adding the 4 cost components to the other patient- and encounter-level covariates, the model explained 81%, 72%, and 67% of the variation for asthma, DKA, and AGE.

Cost Savings

If all hospitals in this cohort with adjusted standardized costs above the national PHIS average achieved costs equal to the national PHIS average, estimated annual savings in adjusted standardized costs for these 3 conditions would be $69.1 million. If each hospital with adjusted costs above the average within its census region achieved costs equal to its regional average, estimated annual savings in adjusted standardized costs for these conditions would be $25.2 million.
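The savings estimate can be illustrated with a minimal sketch. The source does not spell out the formula, so this assumes that savings equal each above-benchmark hospital's excess adjusted cost per hospitalization multiplied by its volume, and that each benchmark is a volume-weighted mean. The hospital names, regions, costs, and volumes are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hospital:
    name: str
    region: str
    mean_cost: float  # adjusted standardized cost per hospitalization (hypothetical)
    volume: int       # annual hospitalizations (hypothetical)


def weighted_mean(hospitals):
    """Volume-weighted mean cost across a group of hospitals (assumed benchmark)."""
    total_cost = sum(h.mean_cost * h.volume for h in hospitals)
    total_volume = sum(h.volume for h in hospitals)
    return total_cost / total_volume


def savings_to_benchmark(hospitals, benchmark):
    """Savings if every hospital above the benchmark came down to it."""
    return sum(
        (h.mean_cost - benchmark) * h.volume
        for h in hospitals
        if h.mean_cost > benchmark
    )


def national_savings(hospitals):
    """Benchmark is the mean over all hospitals in the cohort."""
    return savings_to_benchmark(hospitals, weighted_mean(hospitals))


def regional_savings(hospitals):
    """Benchmark is the mean within each hospital's own region."""
    total = 0.0
    for region in {h.region for h in hospitals}:
        group = [h for h in hospitals if h.region == region]
        total += savings_to_benchmark(group, weighted_mean(group))
    return total


# Hypothetical cohort, not PHIS data.
HOSPITALS = [
    Hospital("A", "West", 2000.0, 100),
    Hospital("B", "West", 4000.0, 100),
    Hospital("C", "South", 5000.0, 100),
    Hospital("D", "South", 9000.0, 100),
]

print(national_savings(HOSPITALS))  # 400000.0
print(regional_savings(HOSPITALS))  # 300000.0
```

In this toy cohort the regional benchmark yields smaller savings than the national one, because a high-cost hospital in a high-cost region is compared only against its neighbors.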

DISCUSSION

This study reported on the regional variation in costs of care for 3 conditions treated at 46 children’s hospitals across 7 geographic regions, and it demonstrated that variations in costs of care exist in pediatrics. This study used standardized costs to compare utilization patterns across hospitals and adjusted for several patient-level demographic and illness-severity factors, and it found that differences in costs of care for children hospitalized with asthma, DKA, and AGE remained both between and within regions.

These variations are noteworthy, as hospitals strive to improve the value of healthcare. If the higher-cost hospitals in this cohort could achieve costs equal to the national PHIS averages, estimated annual savings in adjusted standardized costs for these conditions alone would equal $69.1 million. If higher-cost hospitals relative to the average in their own region reduced costs to their regional averages, annual standardized cost savings could equal $25.2 million for these conditions.

The differences observed are also significant in that they provide a foundation for exploring whether lower-cost regions or lower-cost hospitals achieve comparable quality outcomes.28 If so, studying what those hospitals do to achieve outcomes more efficiently can serve as the basis for the establishment of best practices.29 Standardizing best practices through protocols, pathways, and care-model redesign can reduce potentially unnecessary spending.30

Our findings showed that patient-level demographic and illness-severity covariates, including community-level HHI and SOI, did not consistently explain cost differences. Instead, LOS and ICU utilization were associated with higher costs.17,19 When considering the effect of the 4 cost components on the variation in total standardized costs between regions and between hospitals, the fact that the “other” category accounted for the largest percent of the variation is not surprising, because the cost of room occupancy and nursing services increases with longer LOS and more time in the ICU. Other individual cost components that were major drivers of variation were laboratory utilization for asthma and imaging for DKA and AGE31 (though they accounted for a much smaller proportion of total adjusted costs).19

To determine if these factors are modifiable, more information is needed to explain why practices differ. Many factors may contribute to varying utilization patterns, including differences in capabilities and resources (in the hospital and in the community) and patient volumes. For example, some hospitals provide continuous albuterol for status asthmaticus only in ICUs, while others provide it on regular units.32 But if certain hospitals do not have adequate resources or volumes to effectively care for certain populations outside of the ICU, their higher-value approach (considering quality and cost) may be to utilize ICU beds, even if some other hospitals care for those patients on non-ICU floors. Another possibility is that family preferences about care delivery (such as how long children stay in the hospital) may vary across regions.33

Other evidence suggests that physician practice and spending patterns are strongly influenced by the practices of the region where they trained.34 Because physicians often practice close to where they trained,35,36 this may partially explain how regional patterns are reinforced.

Even considering all mentioned covariates, our model did not fully explain variation in standardized costs. After adding the cost components as covariates, between one-third and one-fifth of the variation remained unexplained. It is possible that this unexplained variation stemmed from unmeasured patient-level factors.

In addition, while proxies for SES, including community-level HHI, did not significantly predict differences in costs across regions, it is possible that SES affected LOS differently in different regions. Previous studies have suggested that lower SES is associated with longer LOS.37 If this effect is more pronounced in certain regions (potentially because of differences in social service infrastructures), SES may be contributing to variations in cost through LOS.

Our findings were subject to limitations. First, this study only examined 3 diagnoses and did not include surgical or less common conditions. Second, while PHIS includes tertiary care, academic, and freestanding children’s hospitals, it does not include general hospitals, which is where most pediatric patients receive care.38 Third, we used ZIP code-based median annual HHI to account for SES, and we used ZIP codes to determine the distance to the hospital and rural-urban location of patients’ homes. These approximations lack precision because SES and distances vary within ZIP codes.39 Fourth, while adjusted standardized costs allow for comparisons between hospitals, they do not represent actual costs to patients or individual hospitals. Additionally, when determining whether variation remained after controlling for patient-level variables, we included SOI as a reflection of illness-severity at presentation. However, in practice, SOI scores may be assigned partially based on factors determined during the hospitalization.18 Finally, the use of other regional boundaries or the selection of different hospitals may yield different results.

CONCLUSION

This study reveals regional variations in costs of care for 3 inpatient pediatric conditions. Future studies should explore whether lower-cost regions or lower-cost hospitals achieve comparable quality outcomes. To the extent that variation is driven by modifiable factors and lower spending does not compromise outcomes, these data may prompt reviews of care models to reduce unwarranted variation and improve the value of care delivery at local, regional, and national levels.

Disclosure

Internal funds from the CHA and The Children’s Hospital of Philadelphia supported the conduct of this work. The authors have no financial interests, relationships, or affiliations relevant to the subject matter or materials discussed in the manuscript to disclose. The authors have no potential conflicts of interest relevant to the subject matter or materials discussed in the manuscript to disclose.

1. Fisher E, Skinner J. Making Sense of Geographic Variations in Health Care: The New IOM Report. 2013; http://healthaffairs.org/blog/2013/07/24/making-sense-of-geographic-variations-in-health-care-the-new-iom-report/. Accessed on April 11, 2014.

© 2017 Society of Hospital Medicine

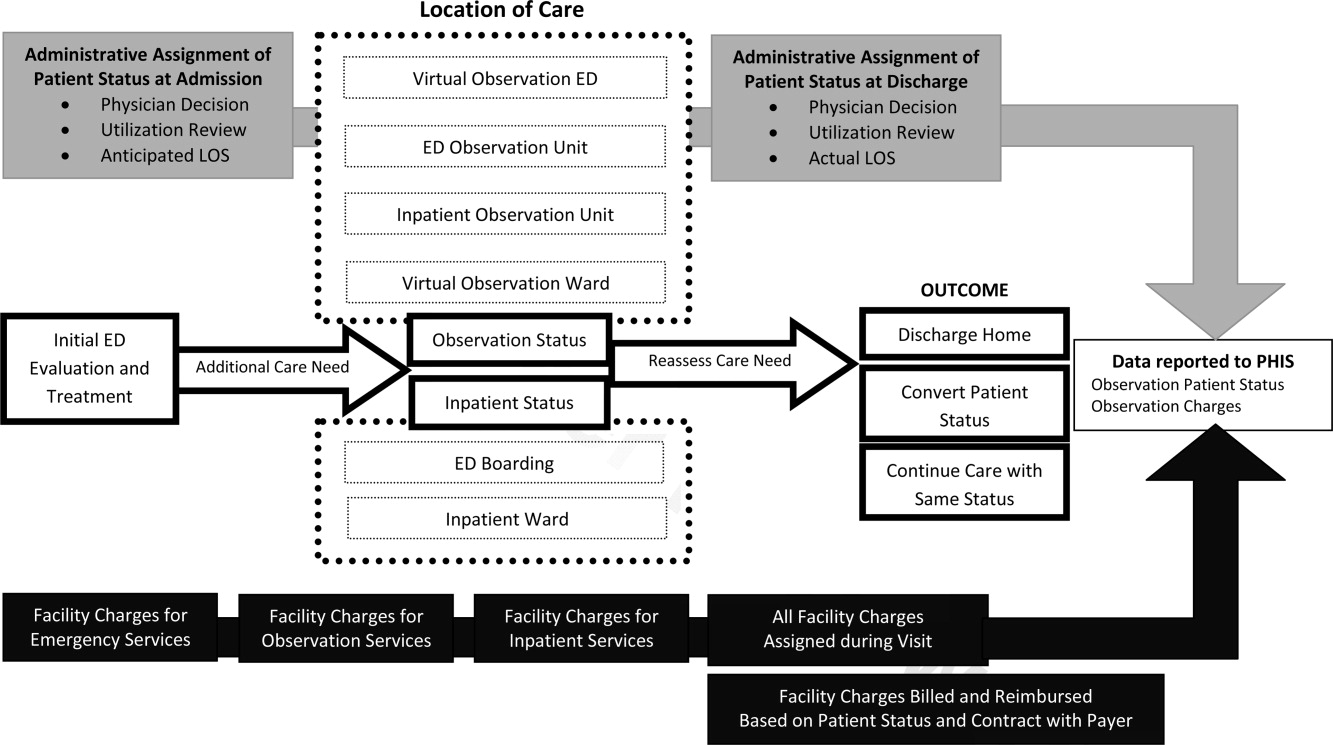

OUs and Patient Outcomes

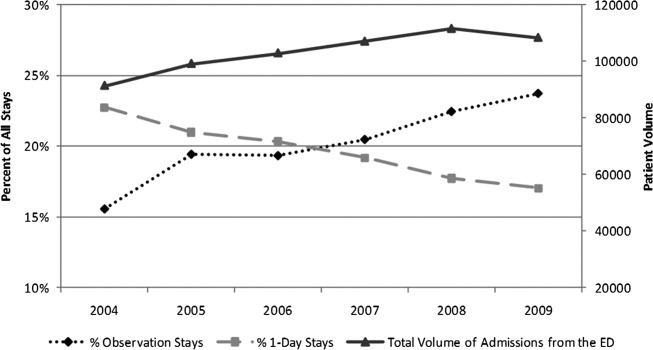

Many pediatric hospitalizations are of short duration, and more than half of short‐stay hospitalizations are designated as observation status.[1, 2] Observation status is an administrative label assigned to patients who do not meet hospital or payer criteria for inpatient‐status care. Short‐stay observation‐status patients do not fit in traditional models of emergency department (ED) or inpatient care. EDs often focus on discharging or admitting patients within a matter of hours, whereas inpatient units tend to measure length of stay (LOS) in terms of days[3] and may not have systems in place to facilitate rapid discharge of short‐stay patients.[4] Observation units (OUs) have been established in some hospitals to address the unique care needs of short‐stay patients.[5, 6, 7]

Single‐site reports from children's hospitals with successful OUs have demonstrated shorter LOS and lower costs compared with inpatient settings.[6, 8, 9, 10, 11, 12, 13, 14] No prior study has examined hospital‐level effects of an OU on observation‐status patient outcomes. The Pediatric Health Information System (PHIS) database provides a unique opportunity to explore this question, because unlike other national hospital administrative databases,[15, 16] the PHIS dataset contains information about children under observation status. In addition, we know which PHIS hospitals had a dedicated OU in 2011.[7]

We hypothesized that overall observation‐status stays in hospitals with a dedicated OU would be of shorter duration with earlier discharges at lower cost than observation‐status stays in hospitals without a dedicated OU. We compared hospitals with and without a dedicated OU on secondary outcomes including rates of conversion to inpatient status and return care for any reason.

METHODS

We conducted a cross‐sectional analysis of hospital administrative data using the 2011 PHIS database, a national administrative database that contains resource utilization data from 43 participating hospitals located in 26 states plus the District of Columbia. These hospitals account for approximately 20% of pediatric hospitalizations in the United States.

For each hospital encounter, PHIS includes patient demographics, up to 41 International Classification of Diseases, Ninth Revision, Clinical Modification (ICD‐9‐CM) diagnoses, up to 41 ICD‐9‐CM procedures, and hospital charges for services. Data are deidentified prior to inclusion, but unique identifiers allow for determination of return visits and readmissions following an index visit for an individual patient. Data quality and reliability are assured jointly by the Children's Hospital Association (formerly Child Health Corporation of America, Overland Park, KS), participating hospitals, and Truven Health Analytics (New York, NY). This study, using administrative data, was not considered human subjects research by the policies of the Cincinnati Children's Hospital Medical Center Institutional Review Board.

Hospital Selection and Hospital Characteristics

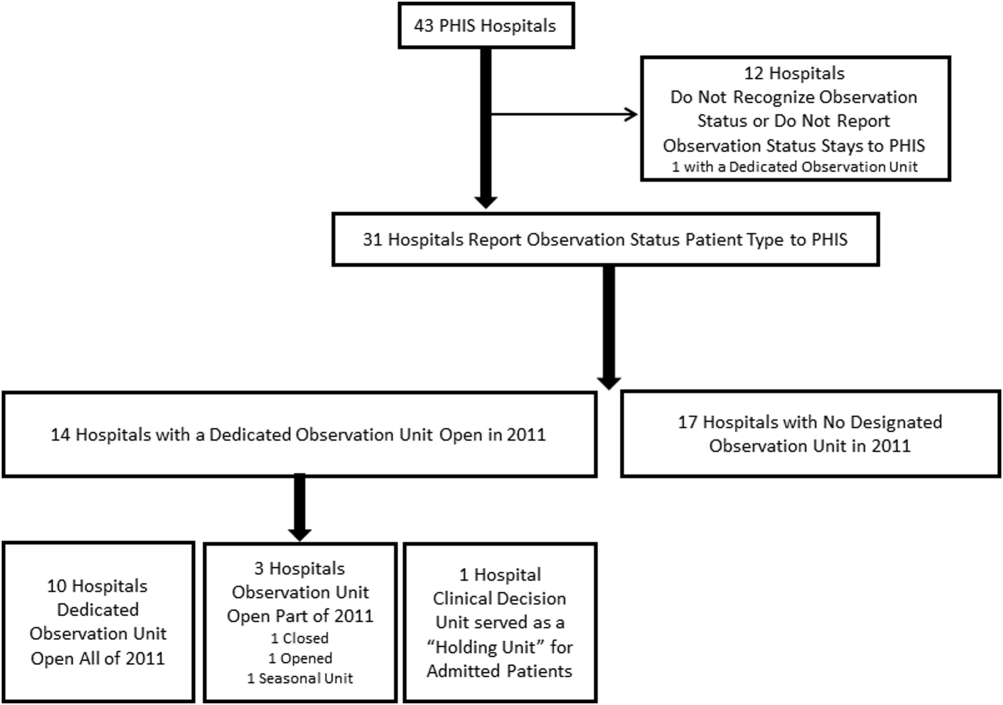

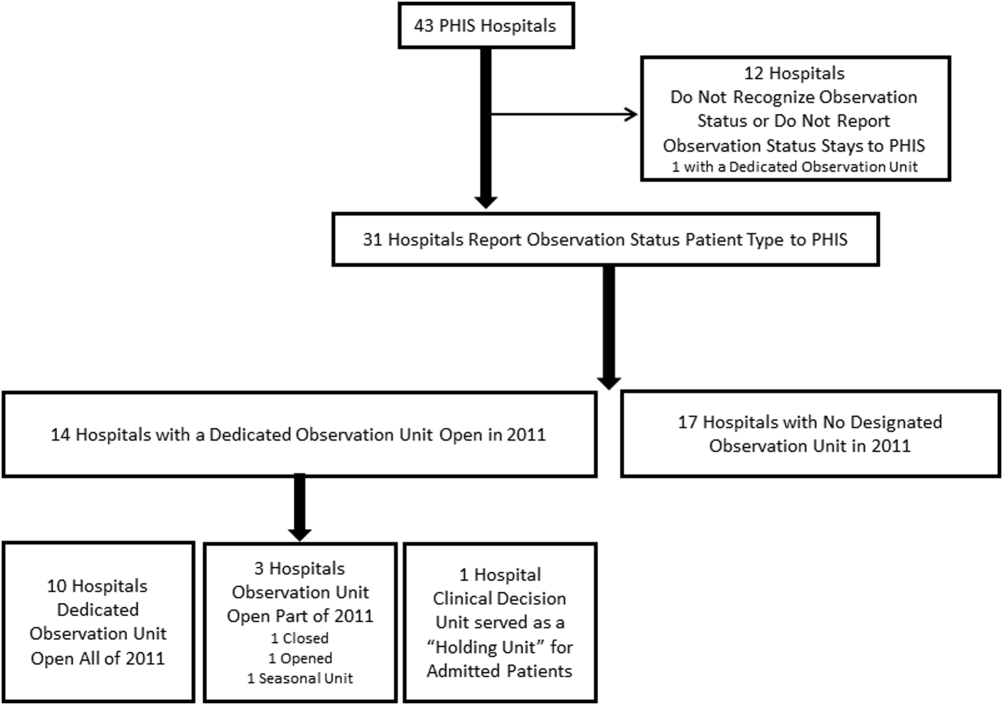

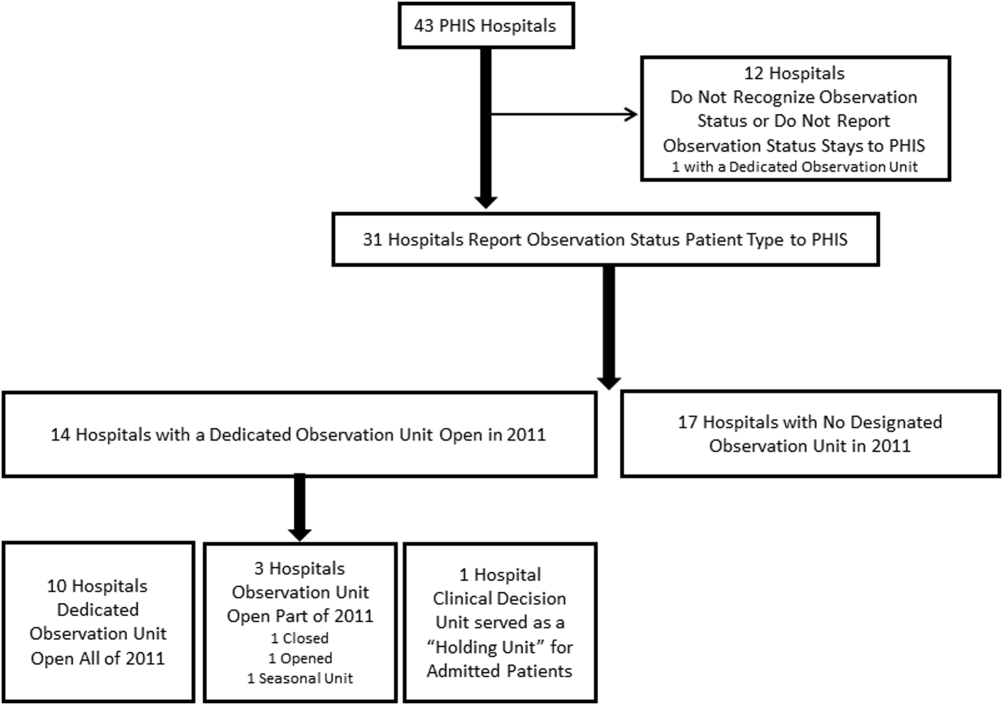

The study sample was drawn from the 31 hospitals that reported observation‐status patient data to PHIS in 2011. Analyses were conducted in 2013, at which time 2011 was the most recent year of data. We categorized 14 hospitals as having a dedicated OU during 2011 based on information collected in 2013.[7] To summarize briefly, we interviewed by telephone representatives of hospitals responding to an email query as to the presence of a geographically distinct OU for the care of unscheduled patients from the ED. Three of the 14 representatives reported their hospital had 2 OUs, 1 of which was a separate surgical OU. Ten OUs cared for both ED patients and patients with scheduled procedures; 8 units received patients from non‐ED sources. Hospitalists provided staffing in more than half of the OUs.

We attempted to identify administrative data that would signal care delivered in a dedicated OU using hospital charge codes reported to PHIS, but learned this was not possible due to between‐hospital variation in the specificity of the charge codes. Therefore, we were unable to determine if patient care was delivered in a dedicated OU or another setting, such as a general inpatient unit or the ED. Other hospital characteristics available from the PHIS dataset included the number of inpatient beds, ED visits, inpatient admissions, observation‐status stays, and payer mix. We calculated the percentage of ED visits resulting in admission by dividing the number of ED visits with associated inpatient or observation status by the total number of ED visits and the percentage of admissions under observation status by dividing the number of observation‐status stays by the total number of admissions under observation or inpatient status.
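The two hospital-level percentages described above reduce to simple ratios. The sketch below is only an illustration of that arithmetic; the function names and the counts in the example are hypothetical and do not come from PHIS.

```python
def percent(numerator, denominator):
    """Return numerator / denominator as a percentage."""
    if denominator == 0:
        raise ValueError("denominator must be nonzero")
    return 100.0 * numerator / denominator


def ed_admission_percent(ed_visits_admitted, total_ed_visits):
    """Share of ED visits with an associated inpatient or observation stay."""
    return percent(ed_visits_admitted, total_ed_visits)


def observation_admission_percent(observation_stays, inpatient_stays):
    """Share of all admissions (observation plus inpatient) under observation status."""
    return percent(observation_stays, observation_stays + inpatient_stays)


# Hypothetical counts for one hospital.
print(ed_admission_percent(1500, 10000))        # 15.0
print(observation_admission_percent(250, 750))  # 25.0
```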

Visit Selection and Patient Characteristics

All observation‐status stays regardless of the point of entry into the hospital were eligible for this study. We excluded stays that were birth‐related, included intensive care, or resulted in transfer or death. Patient demographic characteristics used to describe the cohort included age, gender, race/ethnicity, and primary payer. Stays that began in the ED were identified by an emergency room charge within PHIS. Eligible stays were categorized using All Patient Refined Diagnosis Related Groups (APR‐DRGs) version 24 using the ICD‐9‐CM code‐based proprietary 3M software (3M Health Information Systems, St. Paul, MN). We determined the 15 top‐ranking APR‐DRGs among observation‐status stays in hospitals with a dedicated OU and hospitals without. Procedural stays were identified based on procedural APR‐DRGs (eg, tonsil and adenoid procedures) or the presence of an ICD‐9‐CM procedure code (eg, 03.31 spinal tap).

Measured Outcomes

Outcomes of observation‐status stays were determined within 4 categories: (1) LOS, (2) standardized costs, (3) conversion to inpatient status, and (4) return visits and readmissions. LOS was calculated in terms of nights spent in hospital for all stays by subtracting the admission date from the discharge date and in terms of hours for stays in the 28 hospitals that report admission and discharge hour to the PHIS database. Discharge timing was examined in four 6‐hour blocks starting at midnight. Standardized costs were derived from a charge master index that was created by taking the median costs from all PHIS hospitals for each charged service.[17] Standardized costs represent the estimated cost of providing any particular clinical activity but are not the cost to patients, nor do they represent the actual cost to any given hospital. This approach allows for cost comparisons across hospitals, without biases arising from using charges or from deriving costs using hospitals' ratios of costs to charges.[18] Conversion from observation to inpatient status was calculated by dividing the number of inpatient‐status stays with observation codes by the number of observation‐status‐only stays plus the number of inpatient‐status stays with observation codes. All‐cause 3‐day ED return visits and 30‐day readmissions to the same hospital were assessed using patient‐specific identifiers that allowed for tracking of ED return visits and readmissions following the index observation stay.
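The outcome definitions above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the study's actual code: all function names and example values are hypothetical, and pricing an encounter as units multiplied by the standardized unit cost is our assumption about how a charge master index would be applied.

```python
from datetime import date
from statistics import median


def los_nights(admit: date, discharge: date) -> int:
    """Nights in hospital: discharge date minus admission date."""
    return (discharge - admit).days


def discharge_block(hour: int) -> str:
    """Map a discharge hour (0-23) to one of four 6-hour blocks from midnight."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be in 0..23")
    labels = ("midnight-5 am", "6 am-11 am", "noon-5 pm", "6 pm-11 pm")
    return labels[hour // 6]


def conversion_rate(inpatient_with_obs_codes: int, observation_only: int) -> float:
    """Inpatient stays with observation codes over all stays that began under observation."""
    return inpatient_with_obs_codes / (observation_only + inpatient_with_obs_codes)


def standardized_unit_costs(costs_by_service: dict) -> dict:
    """Median cost across hospitals for each charged service."""
    return {service: median(costs) for service, costs in costs_by_service.items()}


def encounter_cost(units_by_service: dict, unit_costs: dict) -> float:
    """Assumed pricing rule: sum of units times the standardized unit cost."""
    return sum(units * unit_costs[s] for s, units in units_by_service.items())


# Hypothetical inputs (illustration only).
nights = los_nights(date(2011, 3, 1), date(2011, 3, 2))
block = discharge_block(14)
rate = conversion_rate(inpatient_with_obs_codes=100, observation_only=900)
unit_costs = standardized_unit_costs({"cbc": [10, 12, 20],
                                      "chest_xray": [80, 100, 90, 110]})
cost = encounter_cost({"cbc": 2, "chest_xray": 1}, unit_costs)
```

Because every hospital's services are priced from the same median-based index, two encounters with identical services receive identical standardized costs regardless of where they occurred, which is what permits between-hospital comparison.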

Data Analysis

Descriptive statistics were calculated for hospital and patient characteristics using medians and interquartile ranges (IQRs) for continuous factors and frequencies with percentages for categorical factors. Comparisons of these factors between hospitals with dedicated OUs and without were made using χ² and Wilcoxon rank sum tests as appropriate. Multivariable regression was performed using generalized linear mixed models treating hospital as a random effect and adjusting for patient age, the case‐mix index based on the APR‐DRG severity of illness, ED visit, and procedures associated with the index observation‐status stay. For continuous outcomes, we performed a log transformation on the outcome, confirmed the normality assumption, and back transformed the results. Sensitivity analyses were conducted to compare LOS, standardized costs, and conversion rates by hospital type for 10 of the 15 top‐ranking APR‐DRGs commonly cared for by pediatric hospitalists and to compare hospitals that reported the presence of an OU that was consistently open (24 hours per day, 7 days per week) and operating during the entire 2011 calendar year and those without. Based on information gathered from the telephone interviews, hospitals with partially open OUs were similar to hospitals with continuously open OUs, such that they were included in our main analyses. All statistical analyses were performed using SAS version 9.3 (SAS Institute, Cary, NC). P values <0.05 were considered statistically significant.
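As an illustration of the categorical comparisons, the sketch below computes a Pearson chi-square test for a 2×2 table using only the Python standard library. It is not the authors' SAS procedure; we assume no continuity correction, and the function name is hypothetical. The example input uses the male and female stay counts reported by hospital type in Table 2.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Rows: hospitals with / without a dedicated OU; columns: male / female stays.
statistic, p_value = chi_square_2x2(39_178, 30_756, 36_964, 29_269)
print(f"chi-square = {statistic:.2f}, P = {p_value:.2f}")
```

Under these assumptions the test gives a P value of about 0.43 for gender, in line with the value reported in Table 2.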

RESULTS

Hospital Characteristics

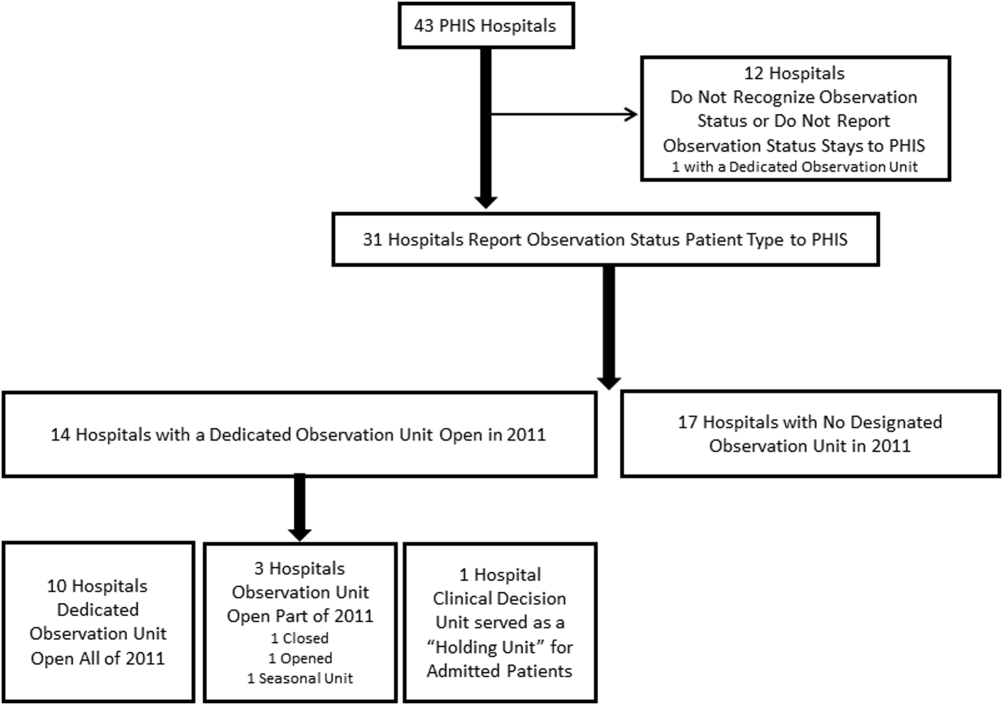

Dedicated OUs were present in 14 of the 31 hospitals that reported observation‐status patient data to PHIS (Figure 1). Three of these hospitals had OUs that were open for 5 months or less in 2011; 1 unit opened, 1 unit closed, and 1 hospital operated a seasonal unit. The remaining 17 hospitals reported no OU that admitted unscheduled patients from the ED during 2011. Hospitals with a dedicated OU had more inpatient beds and a higher median number of inpatient admissions than those without (Table 1). Hospitals were statistically similar in terms of total volume of ED visits, percentage of ED visits resulting in admission, total number of observation‐status stays, percentage of admissions under observation status, and payer mix.

| | Overall, Median (IQR) | Hospitals With a Dedicated Observation Unit, Median (IQR) | Hospitals Without a Dedicated Observation Unit, Median (IQR) | P Value |
|---|---|---|---|---|
| No. of hospitals | 31 | 14 | 17 | |
| Total no. of inpatient beds | 273 (213–311) | 304 (269–425) | 246 (175–293) | 0.006 |
| Total no. ED visits | 62,971 (47,504–97,723) | 87,892 (55,102–117,119) | 53,151 (47,504–70,882) | 0.21 |
| ED visits resulting in admission, % | 13.1 (9.7–15.0) | 13.8 (10.5–19.1) | 12.5 (9.7–14.5) | 0.31 |
| Total no. of inpatient admissions | 11,537 (9,268–14,568) | 13,206 (11,325–17,869) | 10,207 (8,640–13,363) | 0.04 |
| Admissions under observation status, % | 25.7 (19.7–33.8) | 25.5 (21.4–31.4) | 26.0 (16.9–35.1) | 0.98 |
| Total no. of observation stays | 3,820 (2,793–5,672) | 4,850 (3,309–6,196) | 3,141 (2,365–4,616) | 0.07 |
| Government payer, % | 60.2 (53.3–71.2) | 62.1 (54.9–65.9) | 59.2 (53.3–73.7) | 0.89 |

Observation‐Status Patients by Hospital Type

In 2011, there were a total of 136,239 observation‐status stays: 69,983 (51.4%) within the 14 hospitals with a dedicated OU and 66,256 (48.6%) within the 17 hospitals without. Patient care originated in the ED for 57.8% of observation‐status stays in hospitals with an OU compared with 53.0% of observation‐status stays in hospitals without (P<0.001). Compared with hospitals with a dedicated OU, those without a dedicated OU had higher percentages of observation‐status patients who were older than 12 years and non‐Hispanic and a higher percentage of observation‐status patients with private payer type (Table 2). The 15 top‐ranking APR‐DRGs accounted for roughly half of all observation‐status stays and were relatively consistent between hospitals with and without a dedicated OU (Table 3). Procedural care was frequently associated with observation‐status stays.

| | Overall, No. (%) | Hospitals With a Dedicated Observation Unit, No. (%)* | Hospitals Without a Dedicated Observation Unit, No. (%) | P Value |
|---|---|---|---|---|
| Age | | | | |
| <1 year | 23,845 (17.5) | 12,101 (17.3) | 11,744 (17.7) | <0.001 |
| 1–5 years | 52,405 (38.5) | 28,052 (40.1) | 24,353 (36.8) | |
| 6–12 years | 33,674 (24.7) | 17,215 (24.6) | 16,459 (24.8) | |
| 13–18 years | 23,607 (17.3) | 11,472 (16.4) | 12,135 (18.3) | |
| >18 years | 2,708 (2) | 1,143 (1.6) | 1,565 (2.4) | |
| Gender | | | | |
| Male | 76,142 (55.9) | 39,178 (56) | 36,964 (55.8) | 0.43 |
| Female | 60,025 (44.1) | 30,756 (44) | 29,269 (44.2) | |
| Race/ethnicity | | | | |
| Non‐Hispanic white | 72,183 (53.0) | 30,653 (43.8) | 41,530 (62.7) | <0.001 |
| Non‐Hispanic black | 30,995 (22.8) | 16,314 (23.3) | 14,681 (22.2) | |
| Hispanic | 21,255 (15.6) | 16,583 (23.7) | 4,672 (7.1) | |
| Asian | 2,075 (1.5) | 1,313 (1.9) | 762 (1.2) | |
| Non‐Hispanic other | 9,731 (7.1) | 5,120 (7.3) | 4,611 (7.0) | |
| Payer | | | | |
| Government | 68,725 (50.4) | 36,967 (52.8) | 31,758 (47.9) | <0.001 |
| Private | 48,416 (35.5) | 21,112 (30.2) | 27,304 (41.2) | |
| Other | 19,098 (14.0) | 11,904 (17) | 7,194 (10.9) | |

| Observation‐Status Patients in Hospitals With a Dedicated Observation Unit* | | | | | Observation‐Status Patients in Hospitals Without a Dedicated Observation Unit | | | | |
|---|---|---|---|---|---|---|---|---|---|
| Rank | APR‐DRG | No. | % of All Observation Status Stays | % Began in ED | Rank | APR‐DRG | No. | % of All Observation Status Stays | % Began in ED |
| 1 | Tonsil and adenoid procedures | 4,621 | 6.6 | 1.3 | 1 | Tonsil and adenoid procedures | 3,806 | 5.7 | 1.6 |
| 2 | Asthma | 4,246 | 6.1 | 85.3 | 2 | Asthma | 3,756 | 5.7 | 79.0 |
| 3 | Seizure | 3,516 | 5.0 | 52.0 | 3 | Seizure | 2,846 | 4.3 | 54.9 |
| 4 | Nonbacterial gastroenteritis | 3,286 | 4.7 | 85.8 | 4 | Upper respiratory infections | 2,733 | 4.1 | 69.6 |
| 5 | Bronchiolitis, RSV pneumonia | 3,093 | 4.4 | 78.5 | 5 | Nonbacterial gastroenteritis | 2,682 | 4.0 | 74.5 |
| 6 | Upper respiratory infections | 2,923 | 4.2 | 80.0 | 6 | Other digestive system diagnoses | 2,545 | 3.8 | 66.3 |
| 7 | Other digestive system diagnoses | 2,064 | 2.9 | 74.0 | 7 | Bronchiolitis, RSV pneumonia | 2,544 | 3.8 | 69.2 |
| 8 | Respiratory signs, symptoms, diagnoses | 2,052 | 2.9 | 81.6 | 8 | Shoulder and arm procedures | 1,862 | 2.8 | 72.6 |
| 9 | Other ENT/cranial/facial diagnoses | 1,684 | 2.4 | 43.6 | 9 | Appendectomy | 1,785 | 2.7 | 79.2 |
| 10 | Shoulder and arm procedures | 1,624 | 2.3 | 79.1 | 10 | Other ENT/cranial/facial diagnoses | 1,624 | 2.5 | 29.9 |
| 11 | Abdominal pain | 1,612 | 2.3 | 86.2 | 11 | Abdominal pain | 1,461 | 2.2 | 82.3 |
| 12 | Fever | 1,494 | 2.1 | 85.1 | 12 | Other factors influencing health status | 1,461 | 2.2 | 66.3 |
| 13 | Appendectomy | 1,465 | 2.1 | 66.4 | 13 | Cellulitis/other bacterial skin infections | 1,383 | 2.1 | 84.2 |
| 14 | Cellulitis/other bacterial skin infections | 1,393 | 2.0 | 86.4 | 14 | Respiratory signs, symptoms, diagnoses | 1,308 | 2.0 | 39.1 |
| 15 | Pneumonia NEC | 1,356 | 1.9 | 79.1 | 15 | Pneumonia NEC | 1,245 | 1.9 | 73.1 |
| Total | | 36,429 | 52.0 | 57.8 | Total | | 33,041 | 49.87 | 53.0 |

Outcomes of Observation‐Status Stays

A greater percentage of observation‐status stays in hospitals with a dedicated OU experienced a same‐day discharge (Table 4). In addition, a higher percentage of discharges occurred between midnight and 11 am in hospitals with a dedicated OU. However, overall risk‐adjusted LOS in hours (12.8 vs 12.2 hours, P=0.90) and risk‐adjusted total standardized costs ($2551 vs $2433, P=0.75) were similar between hospital types. These findings were consistent within the 10 APR‐DRGs commonly cared for by pediatric hospitalists (see Supporting Information, Appendix 1, in the online version of this article). Overall, conversion from observation to inpatient status was significantly higher in hospitals with a dedicated OU compared with hospitals without; however, this pattern was not consistent across the 10 APR‐DRGs commonly cared for by pediatric hospitalists (see Supporting Information, Appendix 1, in the online version of this article). Adjusted odds of 3‐day ED return visits and 30‐day readmissions were comparable between hospital groups.

| | Observation‐Status Patients in Hospitals With a Dedicated Observation Unit | Observation‐Status Patients in Hospitals Without a Dedicated Observation Unit | P Value |
|---|---|---|---|
| No. of hospitals | 14 | 17 | |
| Length of stay, h, median (IQR) | 12.8 (6.9–23.7) | 12.2 (7–21.3) | 0.90 |
| 0 midnights, no. (%) | 16,678 (23.8) | 14,648 (22.1) | <0.001 |
| 1 midnight, no. (%) | 46,144 (65.9) | 44,559 (67.3) | |
| 2 midnights or more, no. (%) | 7,161 (10.2) | 7,049 (10.6) | |
| Discharge timing, no. (%) | | | |
| Midnight–5 am | 1,223 (1.9) | 408 (0.7) | <0.001 |
| 6 am–11 am | 18,916 (29.3) | 15,914 (27.1) | |
| Noon–5 pm | 32,699 (50.7) | 31,619 (53.9) | |
| 6 pm–11 pm | 11,718 (18.2) | 10,718 (18.3) | |
| Total standardized costs, $, median (IQR) | 2,551.3 (2,053.9–3,169.1) | 2,433.4 (1,998.4–2,963) | 0.75 |
| Conversion to inpatient status | 11.06% | 9.63% | <0.01 |
| Return care, AOR (95% CI) | | | |
| 3‐day ED return visit | 0.93 (0.77–1.12) | Referent | 0.46 |
| 30‐day readmission | 0.88 (0.67–1.15) | Referent | 0.36 |

We found similar results in sensitivity analyses comparing observation‐status stays in hospitals with a continuously open OU (open 24 hours per day, 7 days per week, for all of 2011 [n=10 hospitals]) to those without (see Supporting Information, Appendix 2, in the online version of this article). However, there were, on average, more observation‐status stays in hospitals with a continuously open OU (median 5605, IQR 4207–7089) than hospitals without (median 3309, IQR 2678–4616) (P=0.04). In contrast to our main results, conversion to inpatient status was lower in hospitals with a continuously open OU compared with hospitals without (8.52% vs 11.57%, P<0.01).

DISCUSSION

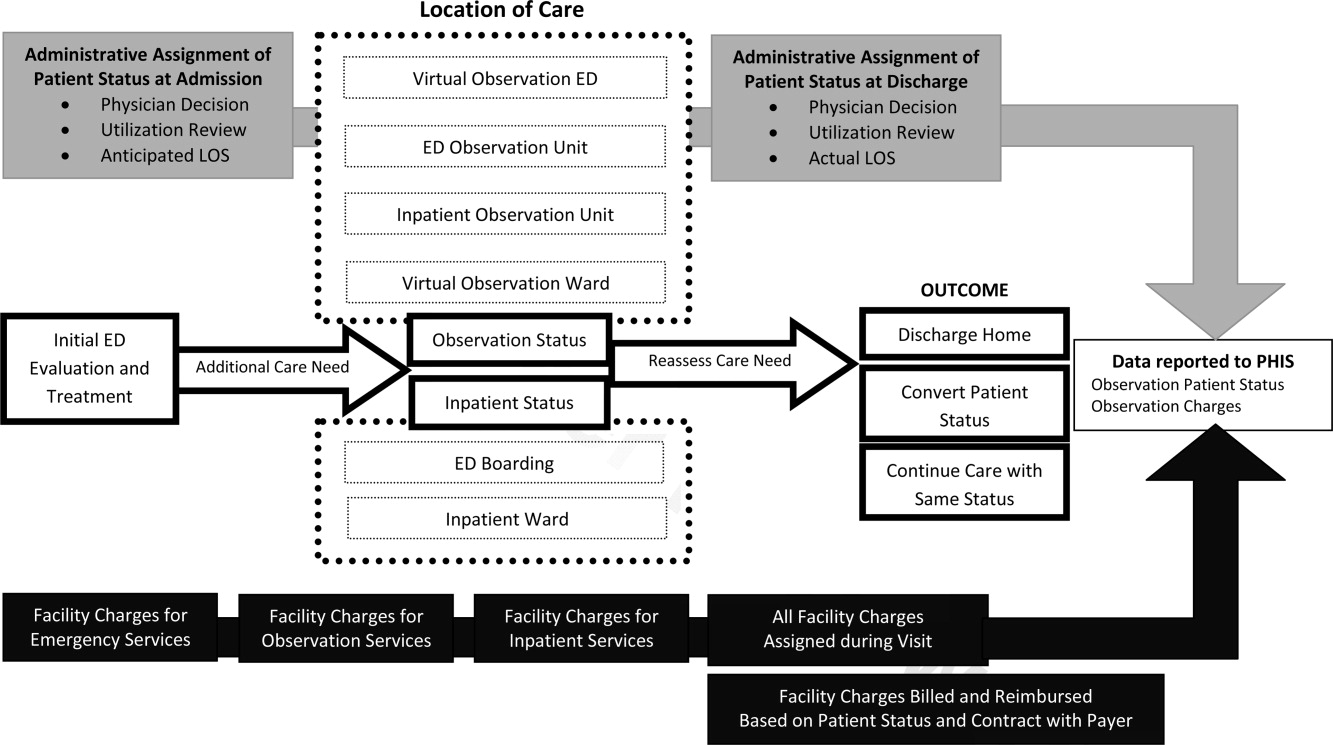

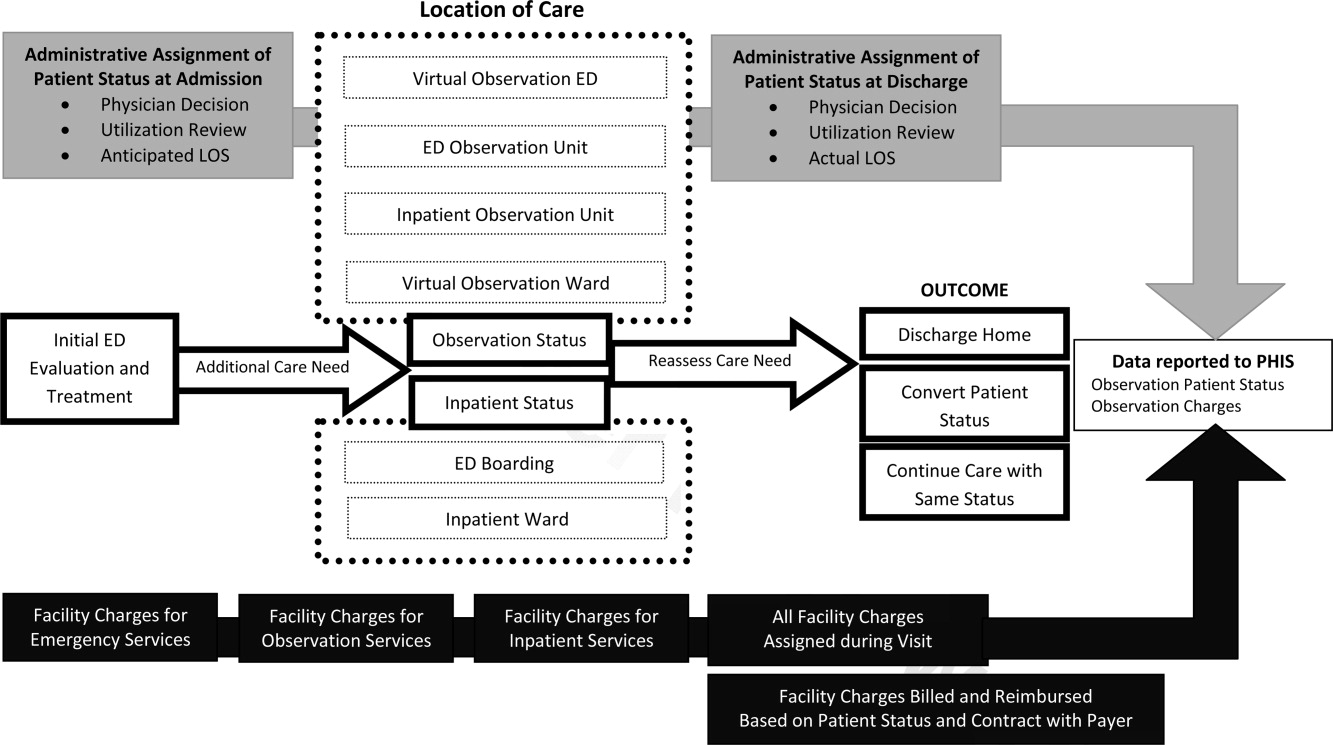

Counter to our hypothesis, we did not find hospital‐level differences in length of stay or costs for observation‐status patients cared for in hospitals with and without a dedicated OU, though hospitals with dedicated OUs did have more same‐day discharges and more morning discharges. The lack of observed differences in LOS and costs may reflect the fact that many children under observation status are treated throughout the hospital, even in facilities with a dedicated OU. Access to a dedicated OU is limited by factors including small numbers of OU beds and specific low acuity/low complexity OU admission criteria.[7] The inclusion of all children admitted under observation status in our analyses may have diluted any effect of dedicated OUs at the hospital level, but was necessary due to the inability to identify location of care for children admitted under observation status. Location of care is an important variable that should be incorporated into administrative databases to allow for comparative effectiveness research designs. Until such data are available, chart review at individual hospitals would be necessary to determine which patients received care in an OU.