CV disease and mortality risk higher with younger age of type 2 diabetes diagnosis

Individuals who are younger when diagnosed with type 2 diabetes are at greater risk of cardiovascular disease and death, compared with those diagnosed at an older age, according to a retrospective study involving almost 2 million people.

People diagnosed with type 2 diabetes at age 40 or younger were at greatest risk of most outcomes, reported lead author Naveed Sattar, MD, PhD, professor of metabolic medicine, University of Glasgow, Scotland, and his colleagues. “Treatment target recommendations in regards to the risk factor control may need to be more aggressive in people developing diabetes at younger ages,” they wrote in Circulation.

In contrast, developing type 2 diabetes over the age of 80 years had little impact on risks.

“[R]eassessment of treatment goals in elderly might be useful,” the investigators wrote. “Diabetes screening needs for the elderly (above 80) should also be reevaluated.”

The study involved 318,083 patients with type 2 diabetes registered in the Swedish National Diabetes Registry between 1998 and 2012. Each patient was matched with 5 individuals from the general population based on sex, age, and country of residence, providing a control population of 1,575,108. Outcomes assessed included non-cardiovascular mortality, cardiovascular mortality, all-cause mortality, hospitalization for heart failure, coronary heart disease, stroke, atrial fibrillation, and acute myocardial infarction. Patients were followed for cardiovascular outcomes from 1998 to December 2013, while mortality surveillance continued through 2014.

In comparison with controls, patients diagnosed at 40 years or younger had the highest excess risk for most outcomes. Excess risk of heart failure was elevated almost 5-fold (hazard ratio [HR], 4.77), and risk of coronary heart disease wasn’t far behind (HR, 4.33). Risks of acute MI (HR, 3.41), stroke (HR, 3.58), and atrial fibrillation (HR, 1.95) were also elevated. Cardiovascular-related mortality was increased almost 3-fold (HR, 2.72), while total mortality (HR, 2.05) and non-cardiovascular mortality (HR, 1.95) were raised to a lesser degree.

“Thereafter, incremental risks generally declined with each higher decade age at diagnosis” of type 2 diabetes, the investigators wrote.

Among patients diagnosed after 80 years of age, all hazard ratios for mortality dropped below 1, indicating lower risk than in controls. Although hazard ratios for nonfatal outcomes remained greater than 1 in this age group, these risks were “substantially attenuated compared with relative incremental risks in those diagnosed with T2DM at younger ages,” the investigators wrote.

The study was funded by the Swedish Association of Local Authorities and Regions, the Swedish Heart and Lung Foundation, and the Swedish Research Council.

The investigators disclosed financial relationships with Amgen, AstraZeneca, Eli Lilly, and other pharmaceutical companies.

SOURCE: Sattar et al. Circulation. 2019 Apr 8. doi:10.1161/CIRCULATIONAHA.118.037885.

FROM CIRCULATION

Key clinical point: Patients who are younger when diagnosed with type 2 diabetes mellitus (T2DM) are at greater risk of cardiovascular disease and death than patients diagnosed at an older age.

Major finding: Patients diagnosed with T2DM at age 40 or younger had twice the risk of death from any cause, compared with age-matched controls (hazard ratio, 2.05).

Study details: A retrospective analysis of type 2 diabetes and associations with cardiovascular and mortality risks, using data from 318,083 patients in the Swedish National Diabetes Registry.

Disclosures: The study was funded by the Swedish Association of Local Authorities and Regions, the Swedish Heart and Lung Foundation, and the Swedish Research Council. The investigators disclosed financial relationships with Amgen, AstraZeneca, Eli Lilly, and others.

Source: Sattar et al. Circulation. 2019 Apr 8. doi:10.1161/CIRCULATIONAHA.118.037885.

Managing Eating Disorders on a General Pediatrics Unit: A Centralized Video Monitoring Pilot

Hospitalizations for nutritional rehabilitation of patients with restrictive eating disorders are increasing.1 Among primary mental health admissions at free-standing children’s hospitals, eating disorders represent 5.5% of hospitalizations and are associated with the longest length of stay (LOS; mean 14.3 days) and costliest care (mean $46,130).2 Admission is necessary to ensure initial weight restoration and monitoring for symptoms of refeeding syndrome, including electrolyte shifts and vital sign abnormalities.3-5

Supervision is generally considered an essential element of caring for hospitalized patients with eating disorders, who may experience difficulty adhering to nutritional treatment, perform excessive movement or exercise, or demonstrate purging or self-harming behaviors. Supervision is presumed to prevent counterproductive behaviors, facilitating weight gain and earlier discharge to psychiatric treatment. Best practices for patient supervision to address these challenges have not been established but often include mealtime or continuous one-to-one supervision by nursing assistants (NAs) or other staff.6,7 While meal supervision has been shown to decrease medical LOS, it is costly, reduces staff availability for the care of other patients, and can be a barrier to caring for patients with eating disorders in many institutions.8

Although not previously used in patients with eating disorders, centralized video monitoring (CVM) may provide an additional mode of supervision. CVM is an emerging technology consisting of real-time video streaming, without video recording, enabling tracking of patient movement, redirection of behaviors, and communication with unit nurses when necessary. CVM has been used in multiple patient safety initiatives to reduce falls, address staffing shortages, reduce costs,9,10 supervise patients at risk for self-harm or elopement, and prevent controlled medication diversion.10,11

We sought to pilot a novel use of CVM to replace our institution’s standard practice of continuous one-to-one nursing assistant (NA) supervision of patients admitted for medical stabilization of an eating disorder. Our objective was to evaluate the supervision cost and feasibility of CVM, using LOS and days to weight gain as balancing measures.

METHODS

Setting and Participants

This retrospective cohort study included patients 12-18 years old admitted to the pediatric hospital medicine service on a general unit of an academic quaternary care children’s hospital for medical stabilization of an eating disorder between September 2013 and March 2017. Patients were identified using administrative data based on primary or secondary diagnosis of anorexia nervosa, eating disorder not otherwise specified, or another specified eating disorder (ICD-9 307.1, 20759, or ICD-10 F50.00, F50.01, F50.89, F50.9).12,13 This research study was considered exempt by the University of Wisconsin School of Medicine and Public Health’s Institutional Review Board.

Supervision Interventions

A standard medical stabilization protocol was used for patients admitted with an eating disorder throughout the study period (Appendix). All patients received continuous one-to-one NA supervision until they reached the target calorie intake and demonstrated the ability to follow the nutritional meal protocol. Beginning July 2015, patients received continuous CVM supervision unless they expressed suicidal ideation (SI), which triggered one-to-one NA supervision until they no longer endorsed suicidality.

Centralized Video Monitoring Implementation

Institutional CVM technology was AvaSys TeleSitter Solution (AvaSure, Inc). Our institution purchased CVM devices for use in adult settings, and one was assigned for pediatric CVM. Mobile CVM video carts were deployed to patient rooms and generated live video streams, without recorded capture, which were supervised by CVM technicians. These technicians were NAs hired and trained specifically for this role; worked four-, eight-, and 12-hour shifts; and observed up to eight camera feeds on a single monitor in a centralized room. Patients and family members could refuse CVM, which would trigger one-to-one NA supervision. Patients were not observed by CVM while in the restroom; staff were notified by either the patient or technician, and one-to-one supervision was provided. CVM had two-way audio communication, which allowed technicians to redirect patients verbally. Technicians could contact nursing staff directly by phone when additional intervention was needed.

Supervision Costs

NA supervision costs were estimated at $19/hour, based upon institutional human resources average NA salaries at that time. No additional mealtime supervision was included, as in-person supervision was already occurring.

CVM supervision costs were defined as the sum of the device cost plus CVM technician costs and two hours of one-to-one NA mealtime supervision per day. The CVM device cost was estimated at $2.10/hour, assuming a 10-year machine life expectancy (single unit cost $82,893 in 2015, 3,944 hours of use in fiscal year of 2018). CVM technician costs were $19/hour, based upon institutional human resources average CVM technician salaries at that time. Because technicians monitored an average of six patients simultaneously during this study, one-sixth of a CVM technician’s salary (ie, $3.17/hour) was used for each hour of CVM monitoring. Patients with mixed (NA and CVM) supervision were analyzed with those having CVM supervision. These patients’ costs were the sum of their NA supervision costs plus their CVM supervision costs.
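The cost definitions above can be expressed as a short calculation. The following Python sketch is illustrative only: the dollar figures come from this section, while the function names and the example stay (72 hours of monitoring over 3 days) are hypothetical and are not drawn from study data. It also assumes the caller supplies the CVM hours directly, since the text does not say whether mealtime hours were counted within CVM time.

```python
# Figures stated in the Supervision Costs section.
NA_HOURLY = 19.00               # one-to-one NA supervision, $/hour
TECH_HOURLY = 19.00             # CVM technician, $/hour
PATIENTS_PER_TECH = 6           # average number of patients monitored at once
DEVICE_UNIT_COST = 82_893.00    # single CVM unit cost (2015)
DEVICE_LIFE_YEARS = 10          # assumed machine life expectancy
DEVICE_HOURS_PER_YEAR = 3_944   # hours of use in fiscal year 2018
MEALTIME_NA_HOURS_PER_DAY = 2   # one-to-one NA mealtime supervision under CVM


def device_hourly_cost() -> float:
    """Device cost spread over its expected lifetime hours of use."""
    return DEVICE_UNIT_COST / (DEVICE_LIFE_YEARS * DEVICE_HOURS_PER_YEAR)


def technician_hourly_share() -> float:
    """Share of one technician's hourly wage attributed to one patient."""
    return TECH_HOURLY / PATIENTS_PER_TECH


def na_cost(hours: float) -> float:
    """Cost of continuous one-to-one NA supervision for the given hours."""
    return NA_HOURLY * hours


def cvm_cost(cvm_hours: float, days: float, extra_na_hours: float = 0.0) -> float:
    """Device + technician share + daily NA mealtime supervision.

    extra_na_hours covers mixed supervision (hypothetical parameter name):
    any one-to-one NA time is added to the CVM cost.
    """
    monitoring = (device_hourly_cost() + technician_hourly_share()) * cvm_hours
    mealtime = NA_HOURLY * MEALTIME_NA_HOURS_PER_DAY * days
    return monitoring + mealtime + na_cost(extra_na_hours)


if __name__ == "__main__":
    print(f"Device: ${device_hourly_cost():.2f}/hour")
    print(f"Technician share: ${technician_hourly_share():.2f}/hour")
    # Hypothetical 3-day stay, for illustration only.
    print(f"NA, 72 hours: ${na_cost(72):,.2f}")
    print(f"CVM, 72 hours over 3 days: ${cvm_cost(72, 3):,.2f}")
```

The two hourly rates reproduce the $2.10 and $3.17 figures reported above. The per-stay totals depend entirely on the hypothetical hours passed in.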

Data Collection

Descriptive variables including age, gender, race/ethnicity, insurance, and LOS were collected from administrative data. The duration and type of supervision for all patients were collected from daily staffing logs. The eating disorder protocol standardized the process of obtaining daily weights (Appendix). Days to weight gain following admission were defined as the total number of days from admission to the first day of weight gain that was followed by another day of weight gain or maintenance of the same weight.

Data Analysis

Patient and hospitalization characteristics were summarized. A sample size of at least 14 in each group was estimated as necessary to detect a 50% reduction in supervision cost between the groups using alpha = 0.05, a power of 80%, a mean cost of $4,400 in the NA group, and a standard deviation of $1,600. Wilcoxon rank-sum tests were used to assess differences in median supervision cost between NA and CVM use. Differences in mean LOS and days to weight gain between NA and CVM use were assessed with t-tests because these data were normally distributed.

RESULTS

Patient Characteristics and Supervision Costs

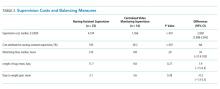

The study included 37 consecutive admissions (NA = 23 and CVM = 14) with 35 unique patients. Patients were female, primarily non-Hispanic White, and privately insured (Table 1). Median supervision cost was statistically significantly higher for NA supervision ($4,104/admission) than for CVM ($1,166/admission; P < .001; Table 2).

Balancing Measures, Acceptability, and Feasibility

Mean LOS was 11.7 days for NA and 9.8 days for CVM (P = .27; Table 2). The mean number of days to weight gain was 3.1 and 3.6 days, respectively (P = .28). No patients converted from CVM to NA supervision. One patient with SI converted to CVM after SI resolved and two patients required ongoing NA supervision due to continued SI. There were no reported refusals, technology failures, or unplanned discontinuations of CVM. One patient/family reported excessive CVM redirection of behavior.

DISCUSSION

This is the first description of CVM use in adolescent patients or patients with eating disorders. Our results suggest that CVM appears feasible and less costly in this population than one-to-one NA supervision, without statistically significant differences in LOS or time to weight gain. Patients with CVM with any NA supervision (except mealtime alone) were analyzed in the CVM group; therefore, this study may underestimate cost savings from CVM supervision. This innovative use of CVM may represent an opportunity for hospitals to repurpose monitoring technology for more efficient supervision of patients with eating disorders.

This pediatric pilot study adds to the growing body of literature in adult patients suggesting CVM supervision may be a feasible inpatient cost-reduction strategy.9,10 One single-center study demonstrated that the use of CVM with adult inpatients led to fewer unsafe behaviors, eg, patient removal of intravenous catheters and oxygen therapy. Personnel savings exceeded the original investment cost of the monitor within one fiscal quarter.9 Results of another study suggest that CVM use with hospitalized adults who required supervision to prevent falls was associated with improved patient and family satisfaction.14 In the absence of a gold standard for supervision of patients hospitalized with eating disorders, CVM technology is a tool that may balance cost, care quality, and patient experience. Given the upfront investment in CVM units, this technology may be most appropriate for institutions already using CVM for other inpatient indications.

Although our institutional cost of CVM use was similar to that reported by other institutions,11,15 the single-center design of this pilot study limits the generalizability of our findings. Unadjusted results of this observational study may be confounded by indication bias. As this was a pilot study, it was powered to detect a clinically significant difference in cost between NA and CVM supervision. While statistically significant differences were not seen in LOS or weight gain, this pilot study was not powered to detect potential differences or to adjust for all potential confounders (eg, other mental health conditions or comorbidities, eating disorder type, previous hospitalizations). Future studies should include these considerations in estimating sample sizes. The ability to conduct a robust cost-effectiveness analysis was also limited by cost data availability and reliance on staffing assumptions to calculate supervision costs. However, these findings will be important for valid effect size estimates for future interventional studies that rigorously evaluate CVM effectiveness and safety. Patients and families were not formally surveyed about their experiences with CVM, and the patient and family experience is another important outcome to consider in future studies.

CONCLUSION

The results of this pilot study suggest that supervision costs for patients admitted for medical stabilization of eating disorders were statistically significantly lower with CVM when compared with one-to-one NA supervision, without a change in hospitalization LOS or time to weight gain. These findings are particularly important as hospitals seek opportunities to reduce costs while providing safe and effective care. Future efforts should focus on evaluating clinical outcomes and patient experiences with this technology and strategies to maximize efficiency to offset the initial device cost.

Disclosures

The authors have no financial relationships relevant to this article to disclose. The authors have no conflicts of interest relevant to this article to disclose.

1. Zhao Y, Encinosa W. An update on hospitalizations for eating disorders, 1999 to 2009: statistical brief #120. In: Healthcare Cost and Utilization Project (HCUP) Statistical Briefs. Rockville, MD: Agency for Healthcare Research and Quality (US); 2006.

2. Bardach NS, Coker TR, Zima BT, et al. Common and costly hospitalizations for pediatric mental health disorders. Pediatrics. 2014;133(4):602-609. doi: 10.1542/peds.2013-3165.

3. Society for Adolescent Health and Medicine, Golden NH, et al. Position Paper of the Society for Adolescent Health and Medicine: medical management of restrictive eating disorders in adolescents and young adults. J Adolesc Health. 2015;56(1):121-125. doi: 10.1016/j.jadohealth.2014.10.259.

4. Katzman DK. Medical complications in adolescents with anorexia nervosa: a review of the literature. Int J Eat Disord. 2005;37(S1):S52-S59; discussion S87-S59. doi: 10.1002/eat.20118.

5. Strandjord SE, Sieke EH, Richmond M, Khadilkar A, Rome ES. Medical stabilization of adolescents with nutritional insufficiency: a clinical care path. Eat Weight Disord. 2016;21(3):403-410. doi: 10.1007/s40519-015-0245-5.

6. Kells M, Davidson K, Hitchko L, O’Neil K, Schubert-Bob P, McCabe M. Examining supervised meals in patients with restrictive eating disorders. Appl Nurs Res. 2013;26(2):76-79. doi: 10.1016/j.apnr.2012.06.003.

7. Leclerc A, Turrini T, Sherwood K, Katzman DK. Evaluation of a nutrition rehabilitation protocol in hospitalized adolescents with restrictive eating disorders. J Adolesc Health. 2013;53(5):585-589. doi: 10.1016/j.jadohealth.2013.06.001.

8. Kells M, Schubert-Bob P, Nagle K, et al. Meal supervision during medical hospitalization for eating disorders. Clin Nurs Res. 2017;26(4):525-537. doi: 10.1177/1054773816637598.

9. Jeffers S, Searcey P, Boyle K, et al. Centralized video monitoring for patient safety: a Denver Health Lean journey. Nurs Econ. 2013;31(6):298-306.

10. Sand-Jecklin K, Johnson JR, Tylka S. Protecting patient safety: can video monitoring prevent falls in high-risk patient populations? J Nurs Care Qual. 2016;31(2):131-138. doi: 10.1097/NCQ.0000000000000163.

11. Burtson PL, Vento L. Sitter reduction through mobile video monitoring: a nurse-driven sitter protocol and administrative oversight. J Nurs Adm. 2015;45(7-8):363-369. doi: 10.1097/NNA.0000000000000216.

12. Centers for Disease Control and Prevention. ICD-9-CM Guidelines, 9th ed. https://www.cdc.gov/nchs/data/icd/icd9cm_guidelines_2011.pdf. Accessed April 11, 2018.

13. Centers for Disease Control and Prevention. ICD-9-CM Code Conversion Table. https://www.cdc.gov/nchs/data/icd/icd-9-cm_fy14_cnvtbl_final.pdf. Accessed April 11, 2018.

14. Cournan M, Fusco-Gessick B, Wright L. Improving patient safety through video monitoring. Rehabil Nurs. 2016. doi: 10.1002/rnj.308.

15. Rochefort CM, Ward L, Ritchie JA, Girard N, Tamblyn RM. Patient and nurse staffing characteristics associated with high sitter use costs. J Adv Nurs. 2012;68(8):1758-1767. doi: 10.1111/j.1365-2648.2011.05864.x.


CONCLUSION

The results of this pilot study suggest that supervision costs for patients admitted for medical stabilization of eating disorders were statistically significantly lower with CVM when compared with one-to-one NA supervision, without a change in hospitalization LOS or time to weight gain. These findings are particularly important as hospitals seek opportunities to reduce costs while providing safe and effective care. Future efforts should focus on evaluating clinical outcomes and patient experiences with this technology and strategies to maximize efficiency to offset the initial device cost.

Disclosures

The authors have no financial relationships relevant to this article to disclose. The authors have no conflicts of interest relevant to this article to disclose.

Hospitalizations for nutritional rehabilitation of patients with restrictive eating disorders are increasing.1 Among primary mental health admissions at free-standing children’s hospitals, eating disorders represent 5.5% of hospitalizations and are associated with the longest length of stay (LOS; mean 14.3 days) and costliest care (mean $46,130).2 Admission is necessary to ensure initial weight restoration and monitoring for symptoms of refeeding syndrome, including electrolyte shifts and vital sign abnormalities.3-5

Supervision is generally considered an essential element of caring for hospitalized patients with eating disorders, who may experience difficulty adhering to nutritional treatment, perform excessive movement or exercise, or demonstrate purging or self-harming behaviors. Supervision is presumed to prevent counterproductive behaviors, facilitating weight gain and earlier discharge to psychiatric treatment. Best practices for patient supervision to address these challenges have not been established but often include mealtime or continuous one-to-one supervision by nursing assistants (NAs) or other staff.6,7 While meal supervision has been shown to decrease medical LOS, it is costly, reduces staff availability for the care of other patients, and can be a barrier to caring for patients with eating disorders in many institutions.8

Although not previously used in patients with eating disorders, centralized video monitoring (CVM) may provide an additional mode of supervision. CVM is an emerging technology consisting of real-time video streaming, without video recording, enabling tracking of patient movement, redirection of behaviors, and communication with unit nurses when necessary. CVM has been used in multiple patient safety initiatives to reduce falls, address staffing shortages, reduce costs,9,10 supervise patients at risk for self-harm or elopement, and prevent controlled medication diversion.10,11

We sought to pilot a novel use of CVM to replace our institution’s standard practice of continuous one-to-one nursing assistant (NA) supervision of patients admitted for medical stabilization of an eating disorder. Our objective was to evaluate the supervision cost and feasibility of CVM, using LOS and days to weight gain as balancing measures.

METHODS

Setting and Participants

This retrospective cohort study included patients 12-18 years old admitted to the pediatric hospital medicine service on a general unit of an academic quaternary care children’s hospital for medical stabilization of an eating disorder between September 2013 and March 2017. Patients were identified using administrative data based on primary or secondary diagnosis of anorexia nervosa, eating disorder not otherwise specified, or another specified eating disorder (ICD-9 3071, 20759, or ICD-10 F5000, F5001, F5089, F509).12,13 This research study was considered exempt by the University of Wisconsin School of Medicine and Public Health’s Institutional Review Board.

Supervision Interventions

A standard medical stabilization protocol was used for patients admitted with an eating disorder throughout the study period (Appendix). All patients received continuous one-to-one NA supervision until they reached the target calorie intake and demonstrated the ability to follow the nutritional meal protocol. Beginning July 2015, patients received continuous CVM supervision unless they expressed suicidal ideation (SI), which triggered one-to-one NA supervision until they no longer endorsed suicidality.

Centralized Video Monitoring Implementation

Institutional CVM technology was AvaSys TeleSitter Solution (AvaSure, Inc). Our institution purchased CVM devices for use in adult settings, and one was assigned for pediatric CVM. Mobile CVM video carts were deployed to patient rooms and generated live video streams, without recorded capture, which were supervised by CVM technicians. These technicians were NAs hired and trained specifically for this role; worked four-, eight-, and 12-hour shifts; and observed up to eight camera feeds on a single monitor in a centralized room. Patients and family members could refuse CVM, which would trigger one-to-one NA supervision. Patients were not observed by CVM while in the restroom; staff were notified by either the patient or technician, and one-to-one supervision was provided. CVM had two-way audio communication, which allowed technicians to redirect patients verbally. Technicians could contact nursing staff directly by phone when additional intervention was needed.

Supervision Costs

NA supervision costs were estimated at $19/hour, based upon institutional human resources average NA salaries at that time. No additional mealtime supervision was included, as in-person supervision was already occurring.

CVM supervision costs were defined as the sum of the device cost plus CVM technician costs and two hours of one-to-one NA mealtime supervision per day. The CVM device cost was estimated at $2.10/hour, assuming a 10-year machine life expectancy (single unit cost $82,893 in 2015, 3,944 hours of use in fiscal year of 2018). CVM technician costs were $19/hour, based upon institutional human resources average CVM technician salaries at that time. Because technicians monitored an average of six patients simultaneously during this study, one-sixth of a CVM technician’s salary (ie, $3.17/hour) was used for each hour of CVM monitoring. Patients with mixed (NA and CVM) supervision were analyzed with those having CVM supervision. These patients’ costs were the sum of their NA supervision costs plus their CVM supervision costs.
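The hourly figures above can be checked with a few lines of arithmetic. The following is a minimal sketch, not the authors' costing tool: the dollar amounts, hours, and ratios are taken from the text, while the function names and the way supervision hours and CVM days are passed in are assumptions made for illustration.

```python
# Illustrative reconstruction of the hourly cost figures quoted in the text.
# Numeric inputs come from the article; all names are hypothetical.

NA_HOURLY = 19.00               # one-to-one NA supervision, $/hour
TECH_HOURLY = 19.00             # CVM technician, $/hour
PATIENTS_PER_TECH = 6           # average patients monitored simultaneously
DEVICE_UNIT_COST = 82_893       # single CVM unit cost in 2015, $
DEVICE_LIFE_YEARS = 10          # assumed machine life expectancy
DEVICE_HOURS_PER_YEAR = 3_944   # hours of use in fiscal year 2018
MEALTIME_NA_HOURS_PER_DAY = 2   # one-to-one NA mealtime supervision under CVM


def device_hourly_cost() -> float:
    """Unit cost spread over the assumed lifetime hours of use."""
    return DEVICE_UNIT_COST / (DEVICE_LIFE_YEARS * DEVICE_HOURS_PER_YEAR)


def technician_hourly_share() -> float:
    """One-sixth of a technician's hourly cost per monitored patient."""
    return TECH_HOURLY / PATIENTS_PER_TECH


def na_cost(na_hours: float) -> float:
    """Cost of continuous one-to-one NA supervision."""
    return na_hours * NA_HOURLY


def cvm_cost(cvm_hours: float, cvm_days: float) -> float:
    """Device cost + technician share + two NA mealtime hours per CVM day."""
    hourly = device_hourly_cost() + technician_hourly_share()
    return cvm_hours * hourly + cvm_days * MEALTIME_NA_HOURS_PER_DAY * NA_HOURLY


def mixed_cost(na_hours: float, cvm_hours: float, cvm_days: float) -> float:
    """Mixed supervision: NA costs plus CVM costs, as described in the text."""
    return na_cost(na_hours) + cvm_cost(cvm_hours, cvm_days)


if __name__ == "__main__":
    print(f"device: ${device_hourly_cost():.2f}/hour")
    print(f"technician share: ${technician_hourly_share():.2f}/hour")
```

Run as a script, this prints the $2.10/hour device cost and $3.17/hour technician share stated above. Whether the two mealtime hours are also counted as CVM hours is not specified in the text, so the sketch leaves that choice to the caller.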

Data Collection

Descriptive variables including age, gender, race/ethnicity, insurance, and LOS were collected from administrative data. The duration and type of supervision for all patients were collected from daily staffing logs. The eating disorder protocol standardized the process of obtaining daily weights (Appendix). Days to weight gain following admission were defined as the total number of days from admission to the first day of weight gain that was followed by another day of weight gain or maintenance of the same weight.
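The days-to-weight-gain definition can be made concrete with a short function. This is a sketch under stated assumptions rather than the study's actual procedure: it assumes one weight per day, treats the admission-day weight as day 0, and the function name is hypothetical.

```python
from typing import Optional, Sequence


def days_to_weight_gain(daily_weights: Sequence[float]) -> Optional[int]:
    """Return the first day with a gain that is followed by a gain or no change.

    daily_weights[0] is assumed to be the admission-day weight (day 0).
    Returns None if no qualifying day is observed.
    """
    # A qualifying day needs both a previous and a following measurement.
    for day in range(1, len(daily_weights) - 1):
        gained = daily_weights[day] > daily_weights[day - 1]
        sustained = daily_weights[day + 1] >= daily_weights[day]
        if gained and sustained:
            return day
    return None
```

For example, with made-up weights `[45.0, 44.8, 45.1, 44.9, 45.2, 45.2]`, the gain on day 2 is not counted because the weight drops on day 3; the first sustained gain is on day 4.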

Data Analysis

Patient and hospitalization characteristics were summarized. A sample size of at least 14 in each group was estimated as necessary to detect a 50% reduction in supervision cost between the groups using alpha = 0.05, a power of 80%, a mean cost of $4,400 in the NA group, and a standard deviation of $1,600. Wilcoxon rank-sum tests were used to assess differences in median supervision cost between NA and CVM use. Differences in mean LOS and days to weight gain between NA and CVM use were assessed with t-tests because these data were normally distributed.
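For readers unfamiliar with the Wilcoxon rank-sum test, the sketch below shows the idea in plain Python. It is an illustration only: the statistical software used for this study is not stated, the function name is hypothetical, and the implementation uses the large-sample normal approximation without a tie or continuity correction, so its P values will differ slightly from exact methods.

```python
import math
from typing import Sequence, Tuple


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Two-sided Wilcoxon rank-sum (Mann-Whitney) test, normal approximation.

    Returns (U statistic for x, z score, two-sided P value).
    """
    # Pool the observations, remembering which group each came from.
    pooled = sorted((value, group) for group, values in enumerate((x, y)) for value in values)

    # Assign 1-based ranks, giving tied values the average of their ranks.
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1

    n1, n2 = len(x), len(y)
    rank_sum_x = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u_x = rank_sum_x - n1 * (n1 + 1) / 2

    # Under the null hypothesis, U has mean n1*n2/2 and this variance (no ties).
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u_x - mean_u) / sd_u
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return u_x, z, p_two_sided


if __name__ == "__main__":
    # Made-up per-admission costs for illustration; NOT study data.
    example_na = [5000, 6000, 7000, 8000]
    example_cvm = [1000, 2000, 3000, 4000]
    print(rank_sum_test(example_na, example_cvm))
```

Because the test compares ranks rather than raw values, it is less sensitive to the skew that cost data typically show, which is presumably why it was preferred over a t-test for supervision cost.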

RESULTS

Patient Characteristics and Supervision Costs

The study included 37 consecutive admissions (NA = 23 and CVM = 14) with 35 unique patients. Patients were female, primarily non-Hispanic White, and privately insured (Table 1). Median supervision cost was statistically significantly higher for NA ($4,104/admission) than for CVM ($1,166/admission; P < .001; Table 2).

Balancing Measures, Acceptability, and Feasibility

Mean LOS was 11.7 days for NA and 9.8 days for CVM (P = .27; Table 2). The mean number of days to weight gain was 3.1 and 3.6 days, respectively (P = .28). No patients converted from CVM to NA supervision. One patient with SI converted to CVM after SI resolved, and two patients required ongoing NA supervision due to continued SI. There were no reported refusals, technology failures, or unplanned discontinuations of CVM. One patient/family reported excessive CVM redirection of behavior.

DISCUSSION

This is the first description of CVM use in adolescent patients or patients with eating disorders. Our results suggest that CVM appears feasible and less costly in this population than one-to-one NA supervision, without statistically significant differences in LOS or time to weight gain. Patients with CVM with any NA supervision (except mealtime alone) were analyzed in the CVM group; therefore, this study may underestimate cost savings from CVM supervision. This innovative use of CVM may represent an opportunity for hospitals to repurpose monitoring technology for more efficient supervision of patients with eating disorders.

This pediatric pilot study adds to the growing body of literature in adult patients suggesting CVM supervision may be a feasible inpatient cost-reduction strategy.9,10 One single-center study demonstrated that the use of CVM with adult inpatients led to fewer unsafe behaviors, eg, patient removal of intravenous catheters and oxygen therapy. Personnel savings exceeded the original investment cost of the monitor within one fiscal quarter.9 Results of another study suggest that CVM use with hospitalized adults who required supervision to prevent falls was associated with improved patient and family satisfaction.14 In the absence of a gold standard for supervision of patients hospitalized with eating disorders, CVM technology is a tool that may balance cost, care quality, and patient experience. Given the upfront investment in CVM units, this technology may be most appropriate for institutions already using CVM for other inpatient indications.

Although our institutional cost of CVM use was similar to that reported by other institutions,11,15 the single-center design of this pilot study limits the generalizability of our findings. Unadjusted results of this observational study may be confounded by indication bias. As this was a pilot study, it was powered to detect a clinically significant difference in cost between NA and CVM supervision. While statistically significant differences were not seen in LOS or weight gain, this pilot study was not powered to detect potential differences or to adjust for all potential confounders (eg, other mental health conditions or comorbidities, eating disorder type, previous hospitalizations). Future studies should include these considerations in estimating sample sizes. The ability to conduct a robust cost-effectiveness analysis was also limited by cost data availability and reliance on staffing assumptions to calculate supervision costs. However, these findings will be important for valid effect size estimates for future interventional studies that rigorously evaluate CVM effectiveness and safety. Patients and families were not formally surveyed about their experiences with CVM, and the patient and family experience is another important outcome to consider in future studies.

CONCLUSION

The results of this pilot study suggest that supervision costs for patients admitted for medical stabilization of eating disorders were statistically significantly lower with CVM when compared with one-to-one NA supervision, without a change in hospitalization LOS or time to weight gain. These findings are particularly important as hospitals seek opportunities to reduce costs while providing safe and effective care. Future efforts should focus on evaluating clinical outcomes and patient experiences with this technology and strategies to maximize efficiency to offset the initial device cost.

Disclosures

The authors have no financial relationships relevant to this article to disclose. The authors have no conflicts of interest relevant to this article to disclose.

1. Zhao Y, Encinosa W. An update on hospitalizations for eating disorders, 1999 to 2009: statistical brief #120. In: Healthcare Cost and Utilization Project (HCUP) Statistical Briefs. Rockville, MD: Agency for Healthcare Research and Quality (US); 2006.

2. Bardach NS, Coker TR, Zima BT, et al. Common and costly hospitalizations for pediatric mental health disorders. Pediatrics. 2014;133(4):602-609. doi: 10.1542/peds.2013-3165.

3. Society for Adolescent Health and Medicine, Golden NH, et al. Position Paper of the Society for Adolescent Health and Medicine: medical management of restrictive eating disorders in adolescents and young adults. J Adolesc Health. 2015;56(1):121-125. doi: 10.1016/j.jadohealth.2014.10.259.

4. Katzman DK. Medical complications in adolescents with anorexia nervosa: a review of the literature. Int J Eat Disord. 2005;37(S1):S52-S59; discussion S87-S59. doi: 10.1002/eat.20118.

5. Strandjord SE, Sieke EH, Richmond M, Khadilkar A, Rome ES. Medical stabilization of adolescents with nutritional insufficiency: a clinical care path. Eat Weight Disord. 2016;21(3):403-410. doi: 10.1007/s40519-015-0245-5.

6. Kells M, Davidson K, Hitchko L, O’Neil K, Schubert-Bob P, McCabe M. Examining supervised meals in patients with restrictive eating disorders. Appl Nurs Res. 2013;26(2):76-79. doi: 10.1016/j.apnr.2012.06.003.

7. Leclerc A, Turrini T, Sherwood K, Katzman DK. Evaluation of a nutrition rehabilitation protocol in hospitalized adolescents with restrictive eating disorders. J Adolesc Health. 2013;53(5):585-589. doi: 10.1016/j.jadohealth.2013.06.001.

8. Kells M, Schubert-Bob P, Nagle K, et al. Meal supervision during medical hospitalization for eating disorders. Clin Nurs Res. 2017;26(4):525-537. doi: 10.1177/1054773816637598.

9. Jeffers S, Searcey P, Boyle K, et al. Centralized video monitoring for patient safety: a Denver Health Lean journey. Nurs Econ. 2013;31(6):298-306.

10. Sand-Jecklin K, Johnson JR, Tylka S. Protecting patient safety: can video monitoring prevent falls in high-risk patient populations? J Nurs Care Qual. 2016;31(2):131-138. doi: 10.1097/NCQ.0000000000000163.

11. Burtson PL, Vento L. Sitter reduction through mobile video monitoring: a nurse-driven sitter protocol and administrative oversight. J Nurs Adm. 2015;45(7-8):363-369. doi: 10.1097/NNA.0000000000000216.

12. Centers for Disease Control and Prevention. ICD-9-CM Guidelines, 9th ed. https://www.cdc.gov/nchs/data/icd/icd9cm_guidelines_2011.pdf. Accessed April 11, 2018.

13. Centers for Disease Control and Prevention. ICD-9-CM Code Conversion Table. https://www.cdc.gov/nchs/data/icd/icd-9-cm_fy14_cnvtbl_final.pdf. Accessed April 11, 2018.

14. Cournan M, Fusco-Gessick B, Wright L. Improving patient safety through video monitoring. Rehabil Nurs. 2016. doi: 10.1002/rnj.308.

15. Rochefort CM, Ward L, Ritchie JA, Girard N, Tamblyn RM. Patient and nurse staffing characteristics associated with high sitter use costs. J Adv Nurs. 2012;68(8):1758-1767. doi: 10.1111/j.1365-2648.2011.05864.x.

© 2019 Society of Hospital Medicine

Interhospital Transfer: Transfer Processes and Patient Outcomes

The transfer of patients between acute care hospitals (interhospital transfer [IHT]) occurs regularly among patients with a variety of diagnoses, in theory, to gain access to unique specialty services and/or a higher level of care, among other reasons.1,2

However, the practice of IHT is variable and nonstandardized,3,4 and existing data largely suggest that transferred patients experience worse outcomes, including longer length of stay, higher hospitalization costs, longer ICU time, and greater mortality, even with rigorous adjustment for confounding by indication.5,6 Though there are many possible reasons for these findings, existing literature suggests that there may be aspects of the transfer process itself that contribute to these outcomes.2,6,7

Understanding which aspects of the transfer process contribute to poor patient outcomes is a key first step toward the development of targeted quality improvement initiatives to improve this process of care. In this study, we aim to examine the association between select characteristics of the transfer process, including the timing of transfer and workload of the admitting physician team, and clinical outcomes among patients undergoing IHT.

METHODS

Data and Study Population

We performed a retrospective analysis of patients aged ≥18 years who were transferred to Brigham and Women’s Hospital (BWH), a 777-bed tertiary care hospital, from another acute care hospital between January 2005 and September 2013. Dates of inclusion were purposefully chosen prior to BWH implementation of a new electronic health records system to avoid potential information bias. As at most academic medical centers, night coverage at BWH differs by service and includes a combination of long-call admitting teams and night float coverage. On weekends, many services are less well staffed, and some procedures may only be available if needed emergently. Some services have caps on the daily number of admissions or total patient census, but none have caps on the number of discharges per day. Patients were excluded from analysis if they left BWH against medical advice, were transferred from closely affiliated hospitals with shared personnel and electronic health records (Brigham and Women’s Faulkner Hospital, Dana Farber Cancer Institute), were transferred from inpatient psychiatric or inpatient hospice facilities, or were transferred to obstetrics or nursery services. Data were obtained from administrative sources and the research patient data repository (RPDR), a centralized clinical data repository that gathers data from various hospital legacy systems and stores them in one data warehouse.8 Our study was approved by the Partners Institutional Review Board (IRB) with a waiver of patient consent.

Transfer Process Characteristics

Predictors included select characteristics of the transfer process, including (1) Day of week of transfer, dichotomized into Friday through Sunday (“weekend”), versus Monday through Thursday (“weekday”);9 Friday was included with “weekend” given the suggestion of increased volume of transfers in advance of the weekend; (2) Time of arrival of the transferred patient, categorized into “daytime” (7

Outcomes

Outcomes included transfer to the intensive care unit (ICU) within 48 hours of arrival and 30-day mortality from date of index admission.5,6

Patient Characteristics

Covariates for adjustment included: patient age, sex, race, Elixhauser comorbidity score,11 Diagnosis-Related Group (DRG)-weight, insurance status, year of admission, number of preadmission medications, and service of admission.

Statistical Analyses

We used descriptive statistics to display baseline characteristics and performed a series of univariable and multivariable logistic regression models to obtain the adjusted odds of each transfer process characteristic on each outcome, adjusting for all covariates (proc logistic, SAS Statistical Software, Cary, North Carolina). For analyses of ICU transfer within 48 hours of arrival, all patients initially admitted to the ICU at time of transfer were excluded.

In the secondary analyses, we used a combined day-of-week and time-of-day variable (ie, Monday day, Monday evening, Monday night, Tuesday day, and so on, with Monday day as the reference group) to obtain a more detailed evaluation of timing of transfer on patient outcomes. We also performed stratified analyses to evaluate each transfer process characteristic on adjusted odds of 30-day mortality stratified by service of admission (ie, at the time of transfer to BWH), adjusting for all covariates. For all analyses, two-sided P values < .05 were considered significant.
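The timing variables described above can be sketched as follows. This is an illustrative encoding, not the study's SAS code: the weekend definition (Friday through Sunday) and the day/evening/night labels come from the text, but the hour cutoffs for each period are not reproduced here, so the period is supplied by the caller, and all function names are hypothetical.

```python
from datetime import date
from typing import Dict

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]
WEEKEND_DAYS = {"Friday", "Saturday", "Sunday"}   # Friday grouped with the weekend
PERIODS = ("day", "evening", "night")
REFERENCE_LEVEL = "Monday day"                     # reference group in the secondary analysis


def weekend_flag(arrival: date) -> str:
    """Dichotomize day of week into 'weekend' (Fri-Sun) or 'weekday' (Mon-Thu)."""
    return "weekend" if DAY_NAMES[arrival.weekday()] in WEEKEND_DAYS else "weekday"


def day_time_category(arrival: date, period: str) -> str:
    """Combine day of week and time-of-day period into one of 21 levels."""
    if period not in PERIODS:
        raise ValueError(f"period must be one of {PERIODS}")
    return f"{DAY_NAMES[arrival.weekday()]} {period}"


def indicator_columns(category: str) -> Dict[str, int]:
    """Dummy-code a category against the reference level (20 indicator columns)."""
    levels = [f"{d} {p}" for d in DAY_NAMES for p in PERIODS]
    return {level: int(level == category) for level in levels if level != REFERENCE_LEVEL}
```

With this coding, a Monday daytime transfer has every indicator set to 0, so each regression coefficient is interpreted relative to Monday daytime transfer.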

RESULTS

Overall, 24,352 patients met our inclusion criteria and underwent IHT, of whom 2,174 (8.9%) died within 30 days. Of the 22,910 transferred patients originally admitted to a non-ICU service, 5,464 (23.8%) underwent ICU transfer within 48 hours of arrival. Cohort characteristics are shown in Table 1.

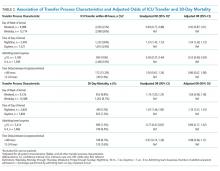

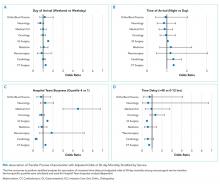

Multivariable regression analyses demonstrated no significant association between weekend (versus weekday) transfer or increased time delay between patient acceptance and arrival (>48 hours) and adjusted odds of ICU transfer within 48 hours or 30-day mortality. However, they did demonstrate that nighttime (versus daytime) transfer was associated with greater adjusted odds of both ICU transfer and 30-day mortality. Increased admitting team busyness was associated with lower adjusted odds of ICU transfer but was not significantly associated with adjusted odds of 30-day mortality (Table 2). As expected, decreased time delay between patient acceptance and arrival (0-12 hours) was associated with increased adjusted odds of both ICU transfer (adjusted OR 2.68; 95% CI 2.29, 3.15) and 30-day mortality (adjusted OR 1.25; 95% CI 1.03, 1.53) compared with 12-24 hours (results not shown). Time delay >48 hours was not associated with either outcome.
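As a reading aid, the relationship between a logistic regression coefficient and a reported odds ratio with its 95% CI can be sketched as below. This assumes Wald-type intervals (an assumption; the interval method is not stated in the text), and the function names are hypothetical.

```python
import math
from typing import Tuple

Z_95 = 1.959964  # standard normal quantile for a two-sided 95% interval


def odds_ratio_ci(beta: float, se: float, z: float = Z_95) -> Tuple[float, float, float]:
    """Convert a log-odds coefficient and its standard error to (OR, lower, upper)."""
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)


def se_from_ci(lower: float, upper: float, z: float = Z_95) -> float:
    """Recover the standard error of the log odds ratio from a reported Wald CI."""
    return (math.log(upper) - math.log(lower)) / (2 * z)


def midpoint_or(lower: float, upper: float) -> float:
    """A Wald CI is symmetric on the log scale, so the OR is the geometric mean."""
    return math.sqrt(lower * upper)


if __name__ == "__main__":
    # Reported interval for ICU transfer with a 0-12 hour delay: 2.29 to 3.15.
    print(round(midpoint_or(2.29, 3.15), 2), round(se_from_ci(2.29, 3.15), 3))
```

Applied to the reported interval of 2.29 to 3.15, the geometric midpoint is about 2.69, consistent with the reported adjusted OR of 2.68 after rounding. The same check shows why an interval such as 0.99 to 2.06 is described only as a trend: it includes 1.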

Regression analyses with the combined day/time variable demonstrated that compared with Monday daytime transfer, Sunday night transfer was significantly associated with increased adjusted odds of 30-day mortality, and Friday night transfer was associated with a trend toward increased 30-day mortality (adjusted OR [aOR] 1.88; 95% CI 1.25, 2.82, and aOR 1.43; 95% CI 0.99, 2.06, respectively). We also found that all nighttime transfers (ie, Monday through Sunday night) were associated with increased adjusted odds of ICU transfer within 48 hours (as compared with Monday daytime transfer). Other days/time analyses were not significant.

Univariable and multivariable analyses stratified by service were performed (Appendix). Multivariable stratified analyses demonstrated that weekend transfer, nighttime transfer, and increased admitting team busyness were associated with increased adjusted odds of 30-day mortality among cardiothoracic (CT) and gastrointestinal (GI) surgical service patients. Increased admitting team busyness was also associated with increased mortality among ICU service patients but was associated with decreased mortality among cardiology service patients. An increased time delay between patient acceptance and arrival was associated with decreased mortality among CT and GI surgical service patients (Figure; Appendix). Other adjusted stratified outcomes were not significant.

DISCUSSION

In this study of 24,352 patients undergoing IHT, we found no significant association between weekend transfer or increased time delay between transfer acceptance and arrival and patient outcomes in the cohort as a whole; but we found that nighttime transfer is associated with increased adjusted odds of both ICU transfer within 48 hours and 30-day mortality. Our analyses combining day-of-week and time-of-day demonstrate that Sunday night transfer is particularly associated with increased adjusted odds of 30-day mortality (as compared with Monday daytime transfer), and show a trend toward increased mortality with Friday night transfers. These detailed analyses otherwise reinforce that nighttime transfer across all nights of the week is associated with increased adjusted odds of ICU transfer within 48 hours. We also found that increased admitting team busyness on the day of patient transfer is associated with decreased odds of ICU transfer, though this may solely be reflective of higher turnover services (ie, cardiology) caring for lower acuity patients, as suggested by secondary analyses stratified by service. In addition, secondary analyses demonstrated differential associations between weekend transfers, nighttime transfers, and increased team busyness on the odds of 30-day mortality based on service of transfer. These analyses showed that patients transferred to higher acuity services requiring procedural care, including CT surgery, GI surgery, and Medical ICU, do worse under all three circumstances as compared with patients transferred to other services. Secondary analyses also demonstrated that increased time delay between patient acceptance and arrival is inversely associated with 30-day mortality among CT and GI surgery service patients, likely reflecting lower acuity patients (ie, less sick patients are less rapidly transferred).

There are several possible explanations for these findings. Patients transferred to surgical services at night may reflect a more urgent need for surgery and include a sicker cohort of patients, possibly explaining these findings. Alternatively, or in addition, both weekend and nighttime hospital admission expose patients to similar potential risks, ie, limited resources available during off-peak hours. Our findings could, therefore, reflect the possibility that patients transferred to higher acuity services in need of procedural care are most vulnerable to off-peak timing of transfer. Similar data looking at patients admitted through the emergency room (ER) find the strongest effect of off-peak admissions on patients in need of procedures, including GI hemorrhage,12 atrial fibrillation,13 and acute myocardial infarction (AMI),14 arguably because of the limited availability of necessary interventions. Patients undergoing IHT are a sicker cohort of patients than those admitted through the ER, and, therefore, may be even more vulnerable to these issues.3,5 This is supported by our findings that Sunday night transfers (and trend toward Friday night transfers) are associated with greater mortality compared with Monday daytime transfers, when at-the-ready resources and/or specialty personnel may be less available (Sunday night), and delays until receipt of necessary procedures may be longer (Friday night). Though we did not observe similar results among cardiology service transfers, as may be expected based on existing literature,13,14 this subset of patients includes more heterogeneous diagnoses (ie, not solely those that require acute intervention) and exhibited a low level of acuity (low Elixhauser score and DRG-weight, data not shown).

We also found that increased admitting team busyness on the day of patient transfer is associated with increased odds of 30-day mortality among CT surgery, GI surgery, and ICU service transfers. As above, there are several possible explanations for this finding. It is possible that among these services, only the sickest/neediest patients are accepted for transfer when teams are busiest, explaining our findings. Though this explanation is possible, the measure of team “busyness” includes patient discharges, which increase, not decrease, availability for incoming patients, making this explanation less likely. Alternatively, it is possible that this finding is reflective of reverse causation, ie, that teams have less ability to discharge/admit new patients when caring for particularly sick/unstable patient transfers, though this assumes that transferred patients arrive earlier in the day (eg, in time to influence discharge decisions), which infrequently occurs (Table 1). Lastly, it is possible that this subset of patients is more vulnerable to the workload of the team that is caring for them at the time of their arrival. With high patient turnover (admissions/discharges), the time allocated to each patient’s care may be diminished (ie, “work compression,” trying to do the same amount of work in less time), and may result in decreased time to care for the transferred patient. This has been shown to influence patient outcomes at the time of patient discharge.10

In trying to understand why we observed an inverse relationship between admitting team busyness and odds of ICU transfer within 48 hours, we believe this finding is largely driven by cardiology service transfers, which comprise the highest volume of transferred patients in our cohort (Table 1), and are low acuity patients. Within this population of patients, admitting team busyness is likely a surrogate variable for high turnover/low acuity. This idea is supported by our findings that admitting team busyness is associated with decreased adjusted odds of 30-day mortality in this group (and only in this group).

Similarly, our observed inverse relationship between increased time delay and 30-day mortality among CT and GI surgical service patients is also likely reflective of lower acuity patients. We anticipated that decreased time delay (0-12 hours) would be reflective of greater patient acuity (supported by our findings that decreased time delay is associated with increased odds of ICU transfer and 30-day mortality). However, our findings also suggest that increased time delay (>48 hours) is similarly representative of lower patient acuity and therefore an imperfect measure of discontinuity and/or harmful delays in care during IHT (see limitations below).

Our study is subject to several limitations. First, this is a single-site study; given known variation in transfer practices between hospitals,3 it is possible that our findings are not generalizable. However, given similar existing data on patients admitted through the ER, our findings likely reflect IHT to similar tertiary referral hospitals. Second, although we adjusted for patient characteristics, there remains the possibility that unmeasured confounding and other bias account for our results, as discussed. Third, although the definition of “busyness” used in this study was chosen based on prior data demonstrating an effect on patient outcomes,10 we did not include other measures of busyness that may influence outcomes of transferred patients such as overall team census or hospital busyness. However, the workload associated with a high volume of patient admissions and discharges is arguably a greater reflection of “work compression” for the admitting team compared with overall team census, which may reflect a more static workload with less impact on the care of a newly transferred patient. Also, although hospital census may influence the ability to transfer (ie, lower volume of transferred patients during times of high hospital census), this likely has less of an impact on the direct care of transferred patients than the admitting team’s workload. It is more likely that it would serve as a confounder (eg, sicker patients are accepted for transfer despite high hospital census, while lower risk patients are not).

Nevertheless, future studies should further evaluate the association between other measures of busyness/workload and outcomes of transferred patients. Lastly, though we anticipated time delay between transfer acceptance and arrival would be correlated with patient acuity, we hypothesized that longer delay might affect patient continuity and communication and impact patient outcomes. However, our results demonstrate that our measurement of this variable was unsuccessful in unraveling patient acuity from our intended evaluation of these vulnerable aspects of IHT. It is likely that a more detailed evaluation is required to explore more fully the potential challenges that may occur with greater time delays (eg, suboptimal communication regarding changes in clinical status during this time period, delays in treatment). Similarly, though our study evaluates the association between nighttime and weekend transfer (and the interaction between these) with patient outcomes, we did not evaluate other intermediate outcomes that may be more affected by the timing of transfer, such as diagnostic errors or delays in procedural care, which warrant further investigation. We did not directly examine the underlying reasons that explain our observed associations, and thus more research is needed to identify these as well as design and evaluate solutions.

Collectively, our findings suggest that high acuity patients in need of procedural care experience worse outcomes during off-peak times of transfer, and during times of high care-team workload. Though further research is needed to identify underlying reasons to explain our findings, both the timing of patient transfer (when modifiable) and workload of the team caring for the patient on arrival may serve as potential targets for interventions to improve the quality and safety of IHT for patients at greatest risk.

Disclosures

Dr. Mueller and Dr. Schnipper have nothing to disclose. Ms. Fiskio has nothing to disclose. Dr. Schnipper is the recipient of grant funding from Mallinckrodt Pharmaceuticals to conduct an investigator-initiated study of predictors and impact of opioid-related adverse drug events.

1. Iwashyna TJ. The incomplete infrastructure for interhospital patient transfer. Crit Care Med. 2012;40(8):2470-2478. https://doi.org/10.1097/CCM.0b013e318254516f.

2. Mueller SK, Shannon E, Dalal A, Schnipper JL, Dykes P. Patient and physician experience with interhospital transfer: a qualitative study. J Patient Saf. 2018. https://doi.org/10.1097/PTS.0000000000000501

3. Mueller SK, Zheng J, Orav EJ, Schnipper JL. Rates, predictors and variability of interhospital transfers: a national evaluation. J Hosp Med. 2017;12(6):435-442. https://doi.org/10.12788/jhm.2747.

4. Bosk EA, Veinot T, Iwashyna TJ. Which patients and where: a qualitative study of patient transfers from community hospitals. Med Care. 2011;49(6):592-598. https://doi.org/10.1097/MLR.0b013e31820fb71b.

5. Sokol-Hessner L, White AA, Davis KF, Herzig SJ, Hohmann SF. Interhospital transfer patients discharged by academic hospitalists and general internists: characteristics and outcomes. J Hosp Med. 2016;11(4):245-250. https://doi.org/10.1002/jhm.2515.

6. Mueller S, Zheng J, Orav EJP, Schnipper JL. Inter-hospital transfer and patient outcomes: a retrospective cohort study. BMJ Qual Saf. 2018. https://doi.org/10.1136/bmjqs-2018-008087.

7. Mueller SK, Schnipper JL. Physician perspectives on interhospital transfers. J Patient Saf. 2016. https://doi.org/10.1097/PTS.0000000000000312.

8. Research Patient Data Registry (RPDR). http://rc.partners.org/rpdr. Accessed April 20, 2018.

9. Bell CM, Redelmeier DA. Mortality among patients admitted to hospitals on weekends as compared with weekdays. N Engl J Med. 2001;345(9):663-668. https://doi.org/10.1056/NEJMsa003376

10. Mueller SK, Donze J, Schnipper JL. Intern workload and discontinuity of care on 30-day readmission. Am J Med. 2013;126(1):81-88. https://doi.org/10.1016/j.amjmed.2012.09.003.

11. Elixhauser A, Steiner C, Harris DR, Coffey RM. Comorbidity measures for use with administrative data. Med Care. 1998;36(1):8-27.

12. Ananthakrishnan AN, McGinley EL, Saeian K. Outcomes of weekend admissions for upper gastrointestinal hemorrhage: a nationwide analysis. Clin Gastroenterol Hepatol. 2009;7(3):296-302e1. https://doi.org/10.1016/j.cgh.2008.08.013.

13. Deshmukh A, Pant S, Kumar G, Bursac Z, Paydak H, Mehta JL. Comparison of outcomes of weekend versus weekday admissions for atrial fibrillation. Am J Cardiol. 2012;110(2):208-211. https://doi.org/10.1016/j.amjcard.2012.03.011.

14. Clarke MS, Wills RA, Bowman RV, et al. Exploratory study of the ‘weekend effect’ for acute medical admissions to public hospitals in Queensland, Australia. Intern Med J. 2010;40(11):777-783. https://doi.org/10.1111/j.1445-5994.2009.02067.x.

The transfer of patients between acute care hospitals (interhospital transfer [IHT]) occurs regularly among patients with a variety of diagnoses, in theory, to gain access to unique specialty services and/or a higher level of care, among other reasons.1,2

However, the practice of IHT is variable and nonstandardized,3,4 and existing data largely suggest that transferred patients experience worse outcomes, including longer length of stay, higher hospitalization costs, longer ICU time, and greater mortality, even with rigorous adjustment for confounding by indication.5,6 Though there are many possible reasons for these findings, existing literature suggests that there may be aspects of the transfer process itself that contribute to these outcomes.2,6,7

Understanding which aspects of the transfer process contribute to poor patient outcomes is a key first step toward the development of targeted quality improvement initiatives to improve this process of care. In this study, we aim to examine the association between select characteristics of the transfer process, including the timing of transfer and workload of the admitting physician team, and clinical outcomes among patients undergoing IHT.

METHODS

Data and Study Population

We performed a retrospective analysis of patients aged ≥18 years who were transferred to Brigham and Women’s Hospital (BWH), a 777-bed tertiary care hospital, from another acute care hospital between January 2005 and September 2013. Dates of inclusion were purposefully chosen prior to BWH implementation of a new electronic health records system to avoid potential information bias. As at most academic medical centers, night coverage at BWH differs by service and includes a combination of long-call admitting teams and night float coverage. On weekends, many services are less well staffed, and some procedures may only be available if needed emergently. Some services have caps on the daily number of admissions or total patient census, but none have caps on the number of discharges per day. Patients were excluded from analysis if they left BWH against medical advice, were transferred from closely affiliated hospitals with shared personnel and electronic health records (Brigham and Women’s Faulkner Hospital, Dana-Farber Cancer Institute), were transferred from inpatient psychiatric or inpatient hospice facilities, or were transferred to obstetrics or nursery services. Data were obtained from administrative sources and the research patient data repository (RPDR), a centralized clinical data repository that gathers data from various hospital legacy systems and stores them in one data warehouse.8 Our study was approved by the Partners Institutional Review Board (IRB) with a waiver of patient consent.

Transfer Process Characteristics

Predictors included select characteristics of the transfer process, including (1) Day of week of transfer, dichotomized into Friday through Sunday (“weekend”), versus Monday through Thursday (“weekday”);9 Friday was included with “weekend” given the suggestion of increased volume of transfers in advance of the weekend; (2) Time of arrival of the transferred patient, categorized into “daytime” (7

Outcomes

Outcomes included transfer to the intensive care unit (ICU) within 48 hours of arrival and 30-day mortality from date of index admission.5,6

Patient Characteristics

Covariates for adjustment included: patient age, sex, race, Elixhauser comorbidity score,11 Diagnosis-Related Group (DRG)-weight, insurance status, year of admission, number of preadmission medications, and service of admission.

Statistical Analyses

We used descriptive statistics to display baseline characteristics and performed a series of univariable and multivariable logistic regression models to obtain the adjusted odds of each transfer process characteristic on each outcome, adjusting for all covariates (proc logistic, SAS Statistical Software, Cary, North Carolina). For analyses of ICU transfer within 48 hours of arrival, all patients initially admitted to the ICU at time of transfer were excluded.
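As a point of orientation for the univariable models described above: for a single binary predictor, the exponentiated coefficient from a logistic regression equals the odds ratio computed directly from the 2×2 table of exposure by outcome. The sketch below, in Python rather than the SAS used in the study, computes that unadjusted odds ratio with a Wald 95% confidence interval. The function name and the counts are hypothetical and purely illustrative; they are not study data, and the sketch does not reproduce the covariate-adjusted models.

```python
import math

def odds_ratio_with_ci(exposed_events, exposed_total,
                       unexposed_events, unexposed_total, z=1.96):
    """Unadjusted odds ratio and Wald confidence interval from a 2x2 table.

    Hypothetical helper for illustration; not the study's analysis code.
    """
    a = exposed_events                       # exposed, outcome present
    b = exposed_total - exposed_events       # exposed, outcome absent
    c = unexposed_events                     # unexposed, outcome present
    d = unexposed_total - unexposed_events   # unexposed, outcome absent
    if min(a, b, c, d) <= 0:
        raise ValueError("all four cells must be positive")
    odds_ratio = (a * d) / (b * c)
    # Standard error of log(OR) under the usual Wald approximation
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper

# Made-up counts (NOT from the study): 120 of 1,000 nighttime transfers
# and 100 of 1,000 daytime transfers experiencing the outcome.
or_, lo, hi = odds_ratio_with_ci(120, 1000, 100, 1000)
print(f"OR {or_:.2f} (95% CI {lo:.2f} to {hi:.2f})")
```

The adjusted odds reported in this study additionally condition on all listed covariates, which requires fitting a multivariable model rather than a 2×2 calculation.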

In the secondary analyses, we used a combined day-of-week and time-of-day variable (ie, Monday day, Monday evening, Monday night, Tuesday day, and so on, with Monday day as the reference group) to obtain a more detailed evaluation of timing of transfer on patient outcomes. We also performed stratified analyses to evaluate each transfer process characteristic on adjusted odds of 30-day mortality stratified by service of admission (ie, at the time of transfer to BWH), adjusting for all covariates. For all analyses, two-sided P values < .05 were considered significant.
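The timing variables described in the Methods can be sketched in Python as follows. The weekend grouping (Friday through Sunday) and the Monday-day reference group follow the definitions in the text; the function names are hypothetical, and the time-of-day label ("day", "evening", or "night") is assumed to have been assigned already from the study's clock-time cutoffs, which are not restated here.

```python
from datetime import date

# date.weekday() numbers days Monday=0 through Sunday=6,
# so Friday, Saturday, and Sunday are 4, 5, and 6.
WEEKEND_DAYS = {4, 5, 6}
DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday"]
TIME_LABELS = {"day", "evening", "night"}
REFERENCE_GROUP = "Monday day"  # reference level in the secondary analyses

def day_category(transfer_date: date) -> str:
    """Dichotomize day of week: Friday-Sunday is 'weekend', else 'weekday'."""
    return "weekend" if transfer_date.weekday() in WEEKEND_DAYS else "weekday"

def combined_timing(transfer_date: date, time_of_day: str) -> str:
    """Build the combined day-of-week and time-of-day level.

    time_of_day is assumed to be pre-assigned (hypothetical input);
    this function does not derive it from a clock time.
    """
    if time_of_day not in TIME_LABELS:
        raise ValueError(f"unknown time-of-day label: {time_of_day!r}")
    return f"{DAY_NAMES[transfer_date.weekday()]} {time_of_day}"

# Example dates chosen only for their weekday, not taken from study records.
print(day_category(date(2005, 1, 7)))              # a Friday
print(combined_timing(date(2013, 9, 1), "night"))  # a Sunday
```

Treating the combined variable as a single categorical predictor with 21 levels lets each day-and-time combination be compared directly against the reference group.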

RESULTS

Overall, 24,352 patients met our inclusion criteria and underwent IHT, of whom 2,174 (8.9%) died within 30 days. Of the 22,910 transferred patients originally admitted to a non-ICU service, 5,464 (23.8%) underwent ICU transfer within 48 hours of arrival. Cohort characteristics are shown in Table 1.
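The proportions above follow directly from the reported counts. A brief arithmetic check in Python, using only the numbers stated in this paragraph (the helper name is illustrative):

```python
def percent(numerator: int, denominator: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)

total_transfers = 24352
non_icu_admissions = 22910

died_30d_pct = percent(2174, total_transfers)     # 30-day mortality
icu_48h_pct = percent(5464, non_icu_admissions)   # ICU transfer within 48 hours

# Patients not in the non-ICU denominator, ie, those excluded from the
# ICU-transfer analysis as initially admitted to the ICU (derived by subtraction).
initial_icu = total_transfers - non_icu_admissions

print(died_30d_pct, icu_48h_pct, initial_icu)
```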