Merkel Cell: Immunotherapy Not Used for Many Patients With Metastatic Disease

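PHOENIX — Immunotherapy has revolutionized outcomes for patients with metastatic Merkel cell carcinoma (MCC), but many patients with metastatic disease do not receive these agents, and outcomes are better at high-volume centers.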

The study has important implications, said study author Shayan Cheraghlou, MD, an incoming fellow in Mohs surgery at New York University, New York City. “We can see that in a real-world setting, these agents have an impact on survival,” he said. “We also found high-volume centers were significantly more likely to use the agents than low-volume centers.” He presented the findings at the annual meeting of the American College of Mohs Surgery.

MCC is a neuroendocrine skin cancer with a high rate of mortality, and even though it remains relatively rare, its incidence has been rising rapidly since the late 1990s and continues to increase. There were no approved treatments available until 2017, when the US Food and Drug Administration (FDA) approved the immunotherapy drug avelumab (Bavencio) to treat advanced MCC. Two years later, pembrolizumab (Keytruda) also received regulatory approval for MCC, and these two agents have revolutionized outcomes.

“In clinical trial settings, these agents led to significant and durable responses, and they are now the recommended treatments in guidelines for metastatic Merkel cell carcinoma,” said Dr. Cheraghlou. “However, we don’t have data as to how they are being used in the real-world setting and if survival outcomes are similar.”

Real World vs Clinical Trials

Real-world outcomes can differ from clinical trial data, and the adoption of novel therapeutics can be gradual. The goal of this study was to see if clinical trial data matched what was being observed in actual clinical use and if the agents were being used uniformly in centers across the United States.

The authors used data from the National Cancer Database, which included patients diagnosed with cancer from 2004 to 2019, and identified 1017 adult cases of metastatic MCC. They then looked at the association of a variety of patient, tumor, and system factors with the likelihood of receiving systemic treatment for the disease.

“Our first finding was maybe the least surprising,” he said. “Patients who received these therapeutic agents had significantly improved survival compared to those who have not.”

Those who received immunotherapy had a 35% decrease in the risk for death per year compared with those who did not. The 1-, 3-, and 5-year survival rates were 47.2%, 21.8%, and 16.5%, respectively, for patients who did not receive immunotherapy compared with 62.7%, 34.4%, and 23.6%, respectively, for those who were treated with these agents.

Dr. Cheraghlou noted that they started to get some “surprising” findings when they looked at utilization data. “While it has been increasing over time, it is not as high as it should be,” he emphasized.

From 2017 to 2019, 54.2% of patients with metastatic MCC received immunotherapy. The data also showed an increase in use from 45.1% in 2017 to 63.0% in 2019. “This is an effective treatment for aggressive malignancy, so we have to ask why more patients aren’t getting them,” said Dr. Cheraghlou.

Their findings did suggest one possible reason, and that was that high-volume centers were significantly more likely to use the agents than low-volume centers. Centers that were in the top percentile for MCC case volume were three times as likely to use immunotherapy for MCC compared with other institutions. “So, if you have metastatic Merkel cell carcinoma and go to a low volume center, you may be less likely to get potential lifesaving treatment,” he noted.

Implications Going Forward

Dr. Cheraghlou concluded his presentation by pointing out that this study has important implications. The data showed that in a real-world setting, these agents have an impact on survival, but not all eligible patients have access. “In other countries, there are established referral patterns for all patients with aggressive rare malignancies and really all cancers,” he added. “But in the US, cancer care is more decentralized. Studies like this and others show that high-volume centers have much better outcomes for aggressive rare malignancies, and we should be looking at why this is the case and mitigating these disparities and outcomes.”

Commenting on the study results, Jeffrey M. Farma, MD, co-director of the Melanoma and Skin Cancer Program and professor of surgical oncology at Fox Chase Cancer Center, Philadelphia, referred to the two immunotherapies that have been approved for MCC since 2017, which have demonstrated a survival benefit and improved outcomes in patients with metastatic MCC.

“In their study, immunotherapy was associated with improved outcomes,” said Dr. Farma. “This study highlights the continued lag of implementation of guidelines when new therapies are approved, and that for rare cancers like Merkel cell carcinoma, being treated at high-volume centers and the regionalization of care can lead to improved outcomes for patients.”

Dr. Cheraghlou and Dr. Farma had no disclosures.

A version of this article appeared on Medscape.com.

FROM ACMS 2024

Post–Mohs Surgery Opioid Prescribing More Common in Some Patient Groups

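PHOENIX — Certain patient groups are more likely to be prescribed opioids after Mohs surgery, according to a large retrospective study. The study also found that patients who do receive opioids postoperatively are at an increased risk for chronic opioid use and complications.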

This report represents the largest analysis to date of opioid prescribing after dermatologic surgery, said lead author Kyle C. Lauck, MD, a dermatology resident at Baylor University Medical Center, Dallas, Texas. “Females, African Americans, and Latino patients may be at a higher risk of opioid prescription after dermatologic surgery. Surgeons should be aware of these populations and the risks they face when determining candidacy for postsurgical opioid analgesia.”

He presented the results at the annual meeting of the American College of Mohs Surgery.

The opioid epidemic is a concern across all areas of medicine, and the majority of opioid prescriptions in dermatology are given following surgery. Dr. Lauck noted that even though guidelines designate opioids as second-line agents for pain control, the existing data on opioid prescribing in dermatologic surgery are mixed. For example, some reports have shown that up to 58% of patients receive opioids postoperatively. “No consensus exists when we should routinely give opioids to these patients,” he said.

Even though most surgeons prescribe short courses of opioids, even brief regimens are associated with increased risks for overuse and substance abuse. Population-level data are limited concerning opioid prescriptions in dermatologic surgery, and in particular, there is an absence of data on the risk for long-term complications associated with use.

Certain Populations at Risk

To evaluate opioid prescription rates in dermatologic surgery, focusing on disparities between demographic populations, as well as the risk for long-term complications of postoperative opioid prescriptions, Dr. Lauck and colleagues conducted a retrospective study that included 914,721 dermatologic surgery patients, with billing codes for Mohs micrographic surgery. Patient data were obtained from TriNetX, a federated health research network.

The mean age of patients in this cohort was 54 years, and 124,494 (13.6%) were prescribed postsurgical oral opioids. The most common was oxycodone, prescribed to 43% of patients. Dr. Lauck noted that, according to their data, certain groups appeared more likely to receive a prescription for opioids following surgery. These included Black or African American patients (23.75% vs 12.86% for White patients), females (13.73% vs 13.16% for males), and Latino or Hispanic patients (17.02% vs 13.61% for non-Latino/Hispanic patients).
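
As a rough check on the figures above, the overall prescribing rate and the gaps between groups can be recomputed from the reported numbers. The sketch below is illustrative only: the function names are hypothetical, and the ratios it prints are crude, unadjusted comparisons derived from the published percentages, not results reported by the study.

```python
def percent(part, whole):
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


def crude_rate_ratio(rate_a, rate_b):
    """Unadjusted ratio of two prescribing rates (both given in percent)."""
    return rate_a / rate_b


# Overall share of the cohort prescribed postsurgical oral opioids.
overall = percent(124_494, 914_721)
print(f"Overall prescribing rate: {overall:.1f}%")

# Reported group rates in percent: (group of interest, comparison group).
reported = {
    "Black or African American vs White": (23.75, 12.86),
    "Female vs male": (13.73, 13.16),
    "Latino or Hispanic vs non-Latino/Hispanic": (17.02, 13.61),
}

for label, (rate_a, rate_b) in reported.items():
    ratio = crude_rate_ratio(rate_a, rate_b)
    print(f"{label}: crude rate ratio {ratio:.2f}")
```

These crude ratios ignore differences in surgery type, site, and prior opioid history between groups, so they only indicate the size of the raw gaps.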

Patients with a history of prior oral opioid prescription, prior opioid abuse or dependence, and any type of substance abuse had a significant increase in absolute risk of being prescribed postsurgical opioids (P < .0001).

The type of surgery also was associated with prescribed postop opioids. For a malignant excision, 18.29% of patients were prescribed postop opioids compared with 14.9% for a benign excision. About a third of patients (34.9%) undergoing a graft repair received opioids.

There was an elevated rate of postop opioid prescribing that was specific to the site of surgery, with the highest rates observed with eyelids, scalp and neck, trunk, and genital sites. The highest overall rates of opioid prescriptions were for patients who underwent excisions in the genital area (54.5%).

Long-Term Consequences

The authors also looked at the longer-term consequences of postop opioid use. “Nearly one in three patients who were prescribed opioids needed subsequent prescriptions down the line,” said Dr. Lauck.

From 3 months to 5 years after surgery, patients who received postsurgical opioids were at significantly higher risk for not only subsequent oral opioid prescription but also opiate abuse, any substance abuse, overdose by opioid narcotics, constipation, and chronic pain. “An opioid prescription may confer further risks of longitudinal complications of chronic opioid use,” he concluded.

Commenting on the study, Jesse M. Lewin, MD, chief of Mohs micrographic and dermatologic surgery at Icahn School of Medicine at Mount Sinai, New York City, noted that an important finding of this study was the long-term sequelae in patients who received postop opioids.

“This is striking given that postsurgical opiate prescriptions are for short durations and limited number of pills,” he told this news organization. “This study highlights the potential danger of even short course of opiates and should serve as a reminder to dermatologic surgeons to be judicious about opiate prescribing.”

Dr. Lauck and Dr. Lewin had no disclosures.

A version of this article appeared on Medscape.com.

FROM ACMS 2024

Subcutaneous Antifibrinolytic Reduces Bleeding After Mohs Surgery

“Though Mohs micrographic surgery is associated with low bleeding complication rates, around 1% of patients in the literature report postoperative bleeding,” corresponding author Abigail H. Waldman, MD, director of the Mohs and Dermatologic Surgery Center, at Brigham and Women’s Hospital, Boston, and colleagues wrote in the study, which was published online in the Journal of the American Academy of Dermatology. “Intravenous tranexamic acid has been used across surgical specialties to reduce perioperative blood loss. Prior studies have shown topical TXA, an antifibrinolytic agent, following MMS may be effective in reducing postoperative bleeding complications, but there are no large cohort studies on injectable TXA utilization in all patients undergoing MMS.”

To improve the understanding of this intervention, the researchers examined the impact of off-label, locally injected TXA on postoperative bleeding outcomes following MMS conducted at Brigham and Women’s Hospital. They evaluated two cohorts: 1843 patients who underwent MMS from January 1, 2019, to December 31, 2019 (the pre-TXA cohort), and 2101 patients who underwent MMS from July 1, 2022, to June 30, 2023 (the TXA cohort), and extracted data, including patient and tumor characteristics, MMS procedure details, antithrombotic medication use, systemic conditions that predispose to bleeding, encounters reporting postoperative bleeding, and interventions required for postoperative bleeding, from electronic medical records. Patients reconstructed by a non-MMS surgeon were excluded from the analysis.

Overall, 2509 cases among 1843 patients and 2818 cases among 2101 patients were included in the pre-TXA and TXA cohorts, respectively. The researchers found that local subcutaneous injection of TXA reduced the risk for postoperative phone calls or visits for bleeding by 25% (RR [risk ratio], 0.75; 0.57-0.99) and the risk for bleeding necessitating a medical visit by 51% (RR, 0.49; 0.32-0.77).
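
The percentage reductions quoted here follow from the risk ratios: a risk ratio below 1 corresponds to a relative risk reduction of (1 - RR) x 100. A minimal sketch of that arithmetic, using a hypothetical helper name and the values reported above:

```python
def percent_reduction(risk_ratio):
    """Relative risk reduction, in percent, implied by a risk ratio."""
    return (1.0 - risk_ratio) * 100.0


# Risk ratios as reported: (point estimate, lower bound, upper bound).
outcomes = {
    "Phone calls or visits for bleeding": (0.75, 0.57, 0.99),
    "Bleeding necessitating a medical visit": (0.49, 0.32, 0.77),
}

for label, (rr, lower, upper) in outcomes.items():
    # A lower risk ratio means a larger reduction, so the bounds swap order.
    print(
        f"{label}: {percent_reduction(rr):.0f}% reduction "
        f"(interval: {percent_reduction(upper):.0f}% to "
        f"{percent_reduction(lower):.0f}%)"
    )
```

Because both reported intervals stay below 1, even the least favorable bound still corresponds to some reduction in risk.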

The use of preoperative TXA in several subgroups of patients was also associated with a reduction in visits for bleeding, including those using alcohol (52% reduction; RR, 0.47; 0.26-0.85), cigarettes (57% reduction; RR, 0.43; 0.23-0.82), oral anticoagulants (61% reduction; RR, 0.39; 0.20-0.77), or antiplatelets (60% reduction; RR, 0.40; 0.20-0.79). The use of TXA was also associated with reduced visits for bleeding in tumors of the head and neck (RR, 0.45; 0.26-0.77) and tumors with a preoperative diameter > 2 cm (RR, 0.37; 0.15-0.90).

Impact of Surgical Repair Type

In other findings, the type of surgical repair was a potential confounder, the authors reported. Grafts and flaps were associated with an increased risk for bleeding across both cohorts (RR, 2.36 [1.5-3.6] and 1.7 [1.1-2.6], respectively) and together accounted for 15% of all procedures in the pre-TXA cohort compared with 11.1% in the TXA cohort. Two patients in the TXA cohort (0.11%) developed deep vein thrombosis (DVT) 10 and 20 days after surgery, a rate that the authors said is comparable to that of the general population. The two patients had risk factors for hypercoagulability, including advanced cancer and recurrent DVT.

“Overall, local injection of TXA was an effective method for reducing the risk of clinically significant bleeding following MMS,” the researchers concluded. “Perioperative TXA may help to limit the risk of bleeding overall, as well as in populations predisposed to bleeding.” Adverse events with TXA use were rare “and delayed beyond the activity of TXA, indicating a low likelihood of being due to TXA,” they wrote.

“Dermatologists performing MMS may consider incorporating local TXA injection into their regular practice,” they noted, adding that “legal counsel on adverse effects in the setting of off-label pharmaceutical usage may be advised.”

In an interview, Patricia M. Richey, MD, director of Mohs surgery at Boston Medical Center, who was asked to comment on the study, said that postoperative bleeding is one of the most commonly encountered Mohs surgery complications. “Because of increased clinic visits and phone calls, it can also often result in decreased patient satisfaction,” she said.

“This study is particularly notable in that we see that local subcutaneous TXA injection decreased visits for bleeding even in those using oral anticoagulants, antiplatelets, alcohol, and cigarettes. Dermatologic surgery has a very low complication rate, even in patients on anticoagulant and antiplatelet medications, but this study shows that TXA is a fantastic option for Mohs surgeons and patients.”

Neither the study authors nor Dr. Richey reported having financial disclosures.

A version of this article first appeared on Medscape.com.

FROM JOURNAL OF THE AMERICAN ACADEMY OF DERMATOLOGY

Urine Tests Could Be ‘Enormous Step’ in Diagnosing Cancer

Emerging science suggests that the body’s “liquid gold” could be particularly useful for liquid biopsies, offering a convenient, pain-free, and cost-effective way to spot otherwise hard-to-detect cancers.

“The search for cancer biomarkers that can be detected in urine could provide an enormous step forward to decrease cancer patient mortality,” said Kenneth R. Shroyer, MD, PhD, a pathologist at Stony Brook University, Stony Brook, New York, who studies cancer biomarkers.

Physicians have long known that urine can reveal a lot about our health — that’s why urinalysis has been part of medicine for 6000 years. Urine tests can detect diabetes, pregnancy, drug use, and urinary or kidney conditions.

But other conditions leave clues in urine, too, and cancer may be one of the most promising. “Urine testing could detect biomarkers of early-stage cancers, not only from local but also distant sites,” Dr. Shroyer said. It could also help flag recurrence in cancer survivors who have undergone treatment.

Granted, cancer biomarkers in urine are not nearly as widely studied as those in the blood, Dr. Shroyer noted. But a new wave of urine tests suggests research is gaining pace.

“The recent availability of high-throughput screening technologies has enabled researchers to investigate cancer from a top-down, comprehensive approach,” said Pak Kin Wong, PhD, professor of mechanical engineering, biomedical engineering, and surgery at The Pennsylvania State University. “We are starting to understand the rich information that can be obtained from urine.”

Urine is mostly water (about 95%) and urea (about 2%), a metabolic byproduct. The other 3% is a mix of waste products, minerals, and other compounds the kidneys removed from the blood. Even in trace amounts, these substances say a lot.

Among them are “exfoliated cancer cells, cell-free DNA, hormones, and the urine microbiota — the collection of microbes in our urinary tract system,” Dr. Wong said.

“It is highly promising to be one of the major biological fluids used for screening, diagnosis, prognosis, and monitoring treatment efficiency in the era of precision medicine,” Dr. Wong said.

How Urine Testing Could Reveal Cancer

Still, as exciting as the prospect is, there’s a lot to consider in the hunt for cancer biomarkers in urine. These biomarkers must be able to pass through the renal nephrons (filtering units), remain stable in urine, and have high-level sensitivity, Dr. Shroyer said. They should also have high specificity for cancer vs benign conditions and be expressed at early stages, before the primary tumor has spread.

“At this stage, few circulating biomarkers have been found that are both sensitive and specific for early-stage disease,” said Dr. Shroyer.

But there are a few promising examples under investigation in humans:

Prostate cancer. Researchers at the University of Michigan have developed a urine test that detects high-grade prostate cancer more accurately than existing tests, including PHI, SelectMDx, 4Kscore, EPI, MPS, and IsoPSA.

The MyProstateScore 2.0 (MPS2) test, which looks for 18 genes associated with high-grade tumors, could reduce unnecessary biopsies in men with elevated prostate-specific antigen levels, according to a paper published in JAMA Oncology.

It makes sense. The prostate gland secretes fluid that becomes part of the semen, traces of which enter urine. After a digital rectal exam, even more prostate fluid enters the urine. If a patient has prostate cancer, genetic material from the cancer cells will infiltrate the urine.

In the MPS2 test, researchers used polymerase chain reaction (PCR) testing in urine. “The technology used for COVID PCR is essentially the same as the PCR used to detect transcripts associated with high-grade prostate cancer in urine,” said study author Arul Chinnaiyan, MD, PhD, director of the Michigan Center for Translational Pathology at the University of Michigan, Ann Arbor. “In the case of the MPS2 test, we are doing PCR on 18 genes simultaneously on urine samples.”

A statistical model uses levels of that genetic material to predict the risk for high-grade disease, helping doctors decide what to do next. At 95% sensitivity, the MPS2 model could eliminate 35%-45% of unnecessary biopsies, compared with 15%-30% for the other tests, and reduce repeat biopsies by 46%-51%, compared with 9%-21% for the other tests.
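
To make the "at 95% sensitivity" comparison concrete, the sketch below fixes a risk-score cutoff so that at least 95% of patients with high-grade cancer score at or above it, then counts the share of patients without high-grade cancer who fall below it and could skip a biopsy. This is not the MPS2 model: the function names and the scores are invented purely for illustration.

```python
import math


def cutoff_at_sensitivity(cancer_scores, target=0.95):
    """Highest cutoff at which at least `target` of cancer cases score >= cutoff."""
    if not cancer_scores:
        raise ValueError("need at least one cancer case")
    ranked = sorted(cancer_scores, reverse=True)
    # Number of cancer cases that must sit at or above the cutoff
    # (the small epsilon guards against floating-point overshoot).
    needed = math.ceil(target * len(ranked) - 1e-9)
    return ranked[needed - 1]


def biopsies_avoided(benign_scores, cutoff):
    """Fraction of patients without high-grade cancer who score below the cutoff."""
    if not benign_scores:
        raise ValueError("need at least one benign case")
    return sum(score < cutoff for score in benign_scores) / len(benign_scores)


# Hypothetical risk scores, NOT data from the study.
cancer = [0.97, 0.95, 0.93, 0.91, 0.90, 0.88, 0.86, 0.85, 0.83, 0.80,
          0.78, 0.75, 0.72, 0.70, 0.66, 0.61, 0.55, 0.48, 0.40, 0.12]
benign = [0.05, 0.10, 0.15, 0.22, 0.30, 0.35, 0.38, 0.45, 0.52, 0.60]

cutoff = cutoff_at_sensitivity(cancer, target=0.95)
print(f"cutoff: {cutoff:.2f}")
print(f"biopsies avoided: {biopsies_avoided(benign, cutoff):.0%}")
```

Holding sensitivity fixed is what makes the tests comparable: each one misses the same share of high-grade cancers, so the test that spares more patients a biopsy at that cutoff is the more useful one.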

Head and neck cancer. In a paper published in JCI Insight, researchers described a test that finds ultra-short fragments of DNA in urine to enable early detection of head and neck cancers caused by human papillomavirus.

“Our data show that a relatively small volume of urine (30-60 mL) gives overall detection results comparable to a tube of blood,” said study author Muneesh Tewari, MD, PhD, professor of hematology and oncology at the University of Michigan.

A larger volume of urine could potentially “make cancer detection even more sensitive than blood,” Dr. Tewari said, “allowing cancers to be detected at the earliest stages when they are more curable.”

The team used a technique called droplet digital PCR to detect DNA fragments that are “ultra-short” (less than 50 base pairs long) and usually missed by conventional PCR testing. This transrenal cell-free tumor DNA, which travels from the tumor into the bloodstream, is broken down small enough to pass through the kidneys and into the urine. But the fragments are still long enough to carry information about the tumor’s genetic signature.

This test could spot cancer before a tumor grows big enough — about a centimeter wide and carrying a billion cells — to spot on a CT scan or other imaging test. “When we are instead detecting fragments of DNA released from a tumor,” said Dr. Tewari, “our testing methods are very sensitive and can detect DNA in urine that came from just 5-10 cells in a tumor that died and released their DNA into the blood, which then made its way into the urine.”

Pancreatic cancer. Pancreatic ductal adenocarcinoma is one of the deadliest cancers, largely because it is diagnosed so late. A urine panel now in clinical trials could help doctors diagnose the cancer before it has spread so more people can have the tumor surgically removed, improving prognosis.

Using an enzyme-linked immunosorbent assay, a common lab method that detects antibodies and other proteins, the team measured levels of three protein biomarkers (LYVE1, REG1B, and TFF1) in urine samples collected from people up to 5 years before they were diagnosed with pancreatic cancer. The researchers combined these results with patients’ urinary creatinine levels, a common component of existing urinalysis, and their age to develop a risk score.

This score performed similarly to an existing blood test, CA19-9, in predicting patients’ risk for pancreatic cancer up to 1 year before diagnosis. When combined with CA19-9, the urinary panel helped spot cancer up to 2 years before diagnosis.

According to a paper in the International Journal of Cancer, “the urine panel and affiliated PancRISK are currently being validated in a prospective clinical study (UroPanc).” If all goes well, they could be implemented in clinical practice in a few years as a “noninvasive stratification tool” to identify patients for further testing, speeding up diagnosis and saving lives.

Limitations and Promises

Each cancer type is different, and more research is needed to map out which substances in urine predict which cancers and to develop tests for mass adoption. “There are medical and technological hurdles to the large-scale implementation of urine analysis for complex diseases such as cancer,” said Dr. Wong.

One possibility: Scientists and clinicians could collaborate and use artificial intelligence techniques to combine urine test results with other data.

“It is likely that future diagnostics may combine urine with other biological samples such as feces and saliva, among others,” said Dr. Wong. “This is especially true when novel data science and machine learning techniques can integrate comprehensive data from patients that span genetic, proteomic, metabolic, microbiomic, and even behavioral data to evaluate a patient’s condition.”

One thing that excites Dr. Tewari about urine-based cancer testing: “We think it could be especially impactful for patients living in rural areas or other areas with less access to healthcare services,” he said.

A version of this article appeared on Medscape.com.

Emerging science suggests that the body’s “liquid gold” could be particularly useful for liquid biopsies, offering a convenient, pain-free, and cost-effective way to spot otherwise hard-to-detect cancers.

“The search for cancer biomarkers that can be detected in urine could provide an enormous step forward to decrease cancer patient mortality,” said Kenneth R. Shroyer, MD, PhD, a pathologist at Stony Brook University, Stony Brook, New York, who studies cancer biomarkers.

Physicians have long known that urine can reveal a lot about our health — that’s why urinalysis has been part of medicine for 6000 years. Urine tests can detect diabetes, pregnancy, drug use, and urinary or kidney conditions.

But other conditions leave clues in urine, too, and cancer may be one of the most promising. “Urine testing could detect biomarkers of early-stage cancers, not only from local but also distant sites,” Dr. Shroyer said. It could also help flag recurrence in cancer survivors who have undergone treatment.

Granted, cancer biomarkers in urine are not nearly as widely studied as those in the blood, Dr. Shroyer noted. But a new wave of urine tests suggests research is gaining pace.

“The recent availability of high-throughput screening technologies has enabled researchers to investigate cancer from a top-down, comprehensive approach,” said Pak Kin Wong, PhD, professor of mechanical engineering, biomedical engineering, and surgery at The Pennsylvania State University. “We are starting to understand the rich information that can be obtained from urine.”

Urine is mostly water (about 95%) and urea, a metabolic byproduct that imparts that signature yellow color (about 2%). The other 3% is a mix of waste products, minerals, and other compounds the kidneys removed from the blood. Even in trace amounts, these substances say a lot.

Among them are “exfoliated cancer cells, cell-free DNA, hormones, and the urine microbiota — the collection of microbes in our urinary tract system,” Dr. Wong said.

“It is highly promising to be one of the major biological fluids used for screening, diagnosis, prognosis, and monitoring treatment efficiency in the era of precision medicine,” Dr. Wong said.

How Urine Testing Could Reveal Cancer

Still, as exciting as the prospect is, there’s a lot to consider in the hunt for cancer biomarkers in urine. These biomarkers must be able to pass through the renal nephrons (filtering units), remain stable in urine, and have high-level sensitivity, Dr. Shroyer said. They should also have high specificity for cancer vs benign conditions and be expressed at early stages, before the primary tumor has spread.

“At this stage, few circulating biomarkers have been found that are both sensitive and specific for early-stage disease,” said Dr. Shroyer.

But there are a few promising examples under investigation in humans:

Prostate cancer. Researchers at the University of Michigan have developed a urine test that detects high-grade prostate cancer more accurately than existing tests, including PHI, SelectMDx, 4Kscore, EPI, MPS, and IsoPSA.

The MyProstateScore 2.0 (MPS2) test, which looks for 18 genes associated with high-grade tumors, could reduce unnecessary biopsies in men with elevated prostate-specific antigen levels, according to a paper published in JAMA Oncology.

It makes sense. The prostate gland secretes fluid that becomes part of the semen, traces of which enter urine. After a digital rectal exam, even more prostate fluid enters the urine. If a patient has prostate cancer, genetic material from the cancer cells will infiltrate the urine.

In the MPS2 test, researchers used polymerase chain reaction (PCR) testing in urine. “The technology used for COVID PCR is essentially the same as the PCR used to detect transcripts associated with high-grade prostate cancer in urine,” said study author Arul Chinnaiyan, MD, PhD, director of the Michigan Center for Translational Pathology at the University of Michigan, Ann Arbor. “In the case of the MPS2 test, we are doing PCR on 18 genes simultaneously on urine samples.”

A statistical model uses levels of that genetic material to predict the risk for high-grade disease, helping doctors decide what to do next. At 95% sensitivity, the MPS2 model could eliminate 35%-45% of unnecessary biopsies, compared with 15%-30% for the other tests, and reduce repeat biopsies by 46%-51%, compared with 9%-21% for the other tests.
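To make these ranges concrete, the short sketch below applies the reported percentages to a hypothetical group of 1,000 men whose biopsies would turn out to be unnecessary. The cohort size and the helper name `biopsies_avoided` are assumptions for illustration only; the percentage ranges are the ones quoted above.

```python
def biopsies_avoided(unnecessary_biopsies, low_pct, high_pct):
    """Return the (low, high) count of biopsies avoided for a percentage range."""
    low = round(unnecessary_biopsies * low_pct / 100)
    high = round(unnecessary_biopsies * high_pct / 100)
    return low, high


# Hypothetical cohort size, chosen only to make the percentages easy to read.
cohort = 1000

# Percentage ranges as reported for first-time biopsies at 95% sensitivity.
mps2 = biopsies_avoided(cohort, 35, 45)
others = biopsies_avoided(cohort, 15, 30)

print(f"MPS2: {mps2[0]}-{mps2[1]} biopsies avoided per {cohort}")
print(f"Other tests: {others[0]}-{others[1]} biopsies avoided per {cohort}")
```

Under these assumptions, the gap between the tests amounts to somewhere between 50 and 300 additional biopsies avoided per 1,000 men, depending on where in each range the true figure falls.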

Head and neck cancer. In a paper published in JCI Insight, researchers described a test that finds ultra-short fragments of DNA in urine to enable early detection of head and neck cancers caused by human papillomavirus.

“Our data show that a relatively small volume of urine (30-60 mL) gives overall detection results comparable to a tube of blood,” said study author Muneesh Tewari, MD, PhD, professor of hematology and oncology at the University of Michigan.

A larger volume of urine could potentially “make cancer detection even more sensitive than blood,” Dr. Tewari said, “allowing cancers to be detected at the earliest stages when they are more curable.”

The team used a technique called droplet digital PCR to detect DNA fragments that are “ultra-short” (less than 50 base pairs long) and usually missed by conventional PCR testing. This transrenal cell-free tumor DNA, which travels from the tumor into the bloodstream, is broken down into pieces small enough to pass through the kidneys and into the urine. But the fragments are still long enough to carry information about the tumor’s genetic signature.

This test could spot cancer before a tumor grows big enough (about a centimeter wide and carrying a billion cells) to be seen on a CT scan or other imaging test. “When we are instead detecting fragments of DNA released from a tumor,” said Dr. Tewari, “our testing methods are very sensitive and can detect DNA in urine that came from just 5-10 cells in a tumor that died and released their DNA into the blood, which then made its way into the urine.”

Pancreatic cancer. Pancreatic ductal adenocarcinoma is one of the deadliest cancers, largely because it is diagnosed so late. A urine panel now in clinical trials could help doctors diagnose the cancer before it has spread so more people can have the tumor surgically removed, improving prognosis.

Using an enzyme-linked immunosorbent assay (ELISA), a common lab method that detects antibodies and other proteins, the team measured levels of three proteins (LYVE1, REG1B, and TFF1) in urine samples collected from people up to 5 years before they were diagnosed with pancreatic cancer. The researchers combined this result with patients’ urinary creatinine levels, a common component of existing urinalysis, and their age to develop a risk score.

This score performed similarly to an existing blood test, CA19-9, in predicting patients’ risk for pancreatic cancer up to 1 year before diagnosis. When combined with CA19-9, the urinary panel helped spot cancer up to 2 years before diagnosis.

According to a paper in the International Journal of Cancer, “the urine panel and affiliated PancRISK are currently being validated in a prospective clinical study (UroPanc).” If all goes well, they could be implemented in clinical practice in a few years as a “noninvasive stratification tool” to identify patients for further testing, speeding up diagnosis and saving lives.

Limitations and Promises

Each cancer type is different, and more research is needed to map out which substances in urine predict which cancers and to develop tests for mass adoption. “There are medical and technological hurdles to the large-scale implementation of urine analysis for complex diseases such as cancer,” said Dr. Wong.

One possibility: Scientists and clinicians could collaborate and use artificial intelligence techniques to combine urine test results with other data.

“It is likely that future diagnostics may combine urine with other biological samples such as feces and saliva, among others,” said Dr. Wong. “This is especially true when novel data science and machine learning techniques can integrate comprehensive data from patients that span genetic, proteomic, metabolic, microbiomic, and even behavioral data to evaluate a patient’s condition.”

One thing that excites Dr. Tewari about urine-based cancer testing: “We think it could be especially impactful for patients living in rural areas or other areas with less access to healthcare services,” he said.

A version of this article appeared on Medscape.com.

Exploring Skin Pigmentation Adaptation: A Systematic Review on the Vitamin D Adaptation Hypothesis

The risk for developing skin cancer can be partly attributed to variations in skin pigmentation. Historically, lighter skin pigmentation has been observed in populations living in higher latitudes and darker pigmentation in populations near the equator. Although skin pigmentation reflects a combination of genetic and environmental factors, anthropologic studies have demonstrated an association of human skin lightening with historic human migratory patterns.1 It is postulated that migration to latitudes with less UVB light penetration has resulted in a compensatory natural selection of lighter skin types. However, the driving force behind this migration-associated skin lightening has remained unclear.1

The need for folate metabolism, vitamin D synthesis, and barrier protection, along with cultural practices, has been postulated to drive skin pigmentation variation. Synthesis of vitamin D is a UV radiation (UVR)–dependent process and has remained a prominent theoretical driver of evolutionary skin lightening. Vitamin D can be acquired either exogenously through dietary supplementation or endogenously through sunlight exposure; however, historically it has been obtained primarily through UVB exposure. Once UVB is absorbed by the skin, it catalyzes conversion of 7-dehydrocholesterol to previtamin D3, which is ultimately converted to active vitamin D in the kidneys.2,3 It is suggested that lighter skin tones have an advantage over darker skin tones in synthesizing vitamin D at higher latitudes where there is less UVB, thus leading to the adaptation process.1 In this systematic review, we analyzed the evolutionary vitamin D adaptation hypothesis and assessed the validity of evidence supporting this theory in the literature.

Methods

A search of PubMed, Embase, and the Cochrane Reviews database was conducted using the terms evolution, vitamin D, and skin to generate articles published from 2010 to 2022 that evaluated the influence of UVR-dependent production of vitamin D on skin pigmentation through historical migration patterns (Figure). During an initial screening of abstracts followed by full-text assessment, studies were excluded if only an abstract was available, if the article was inaccessible for review, or if the article was a case report or commentary.

The following data were extracted from each included study: reference citation, affiliated institutions of authors, author specialties, journal name, year of publication, study period, type of article, type of study, mechanism of adaptation, data concluding or supporting vitamin D as the driver, and data concluding or suggesting against vitamin D as the driver. Data concluding or supporting vitamin D as the driver were recorded from statistically significant results, study conclusions, and direct quotations. Data concluding or suggesting against vitamin D as the driver also were recorded from significant results, study conclusions, and direct quotations. The mechanism of adaptation was based on vitamin D synthesis modulation, melanin upregulation, genetic selection, genetic drift, mating patterns, increased vitamin D sensitivity, interbreeding, and diet.

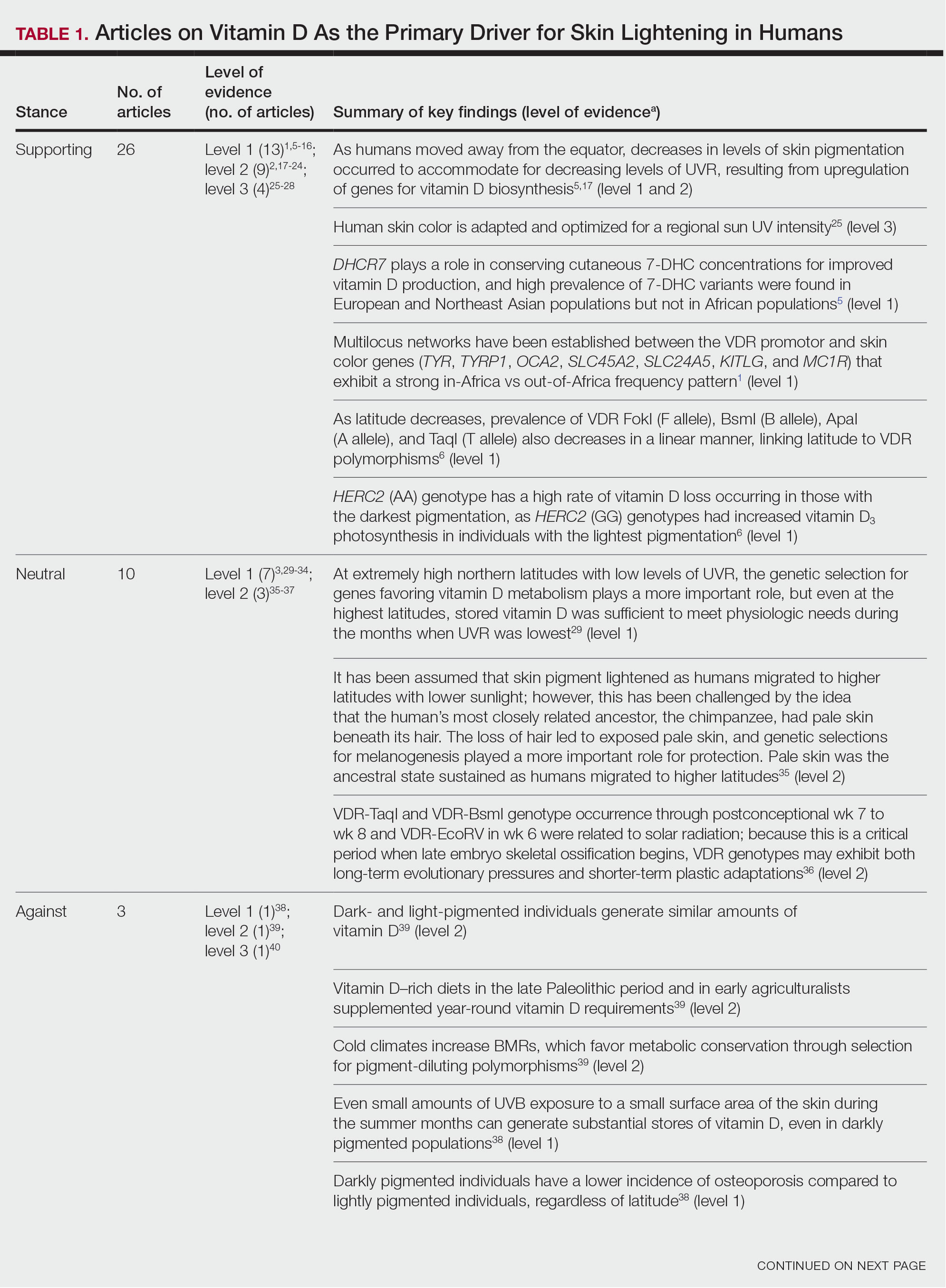

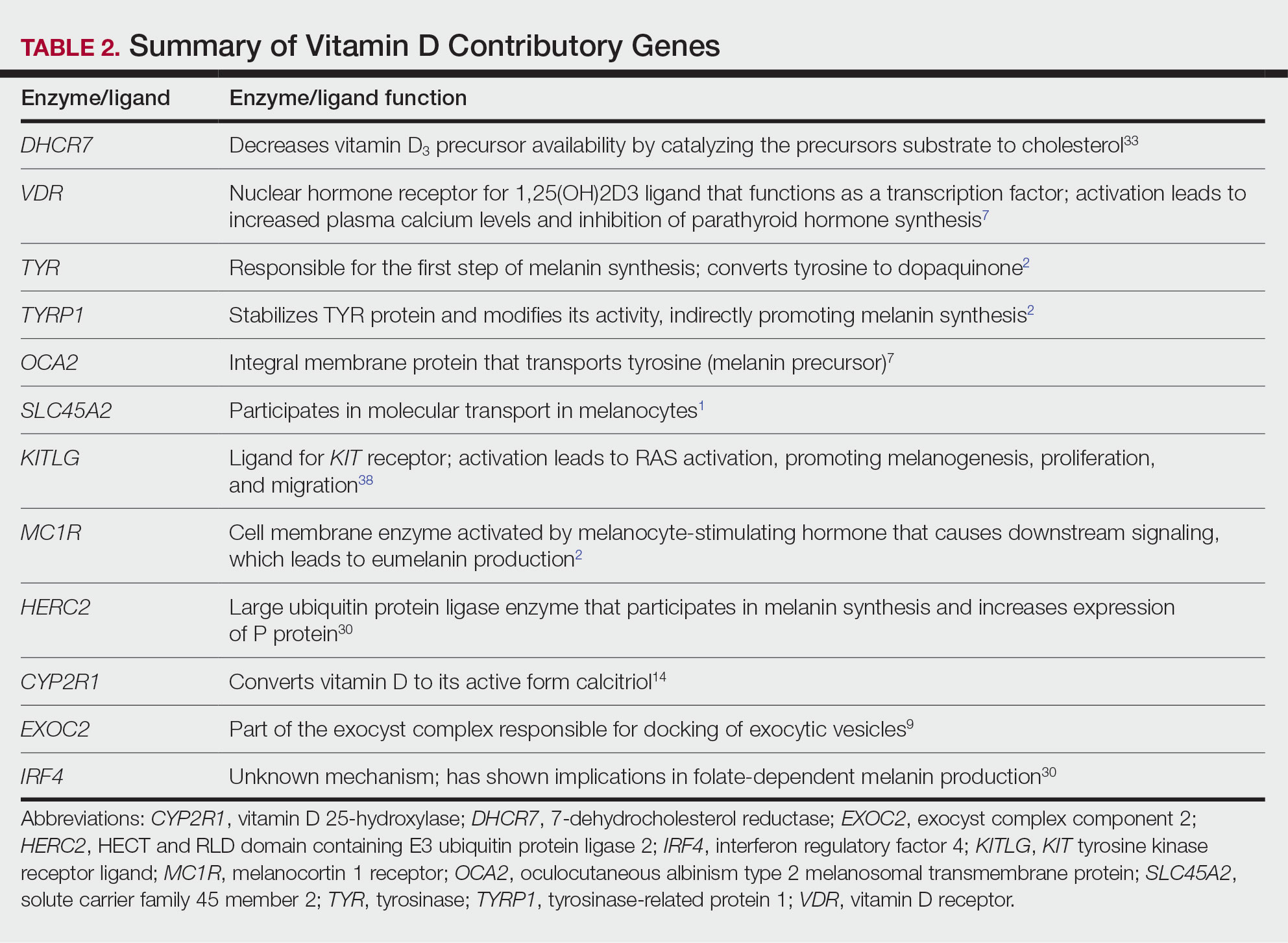

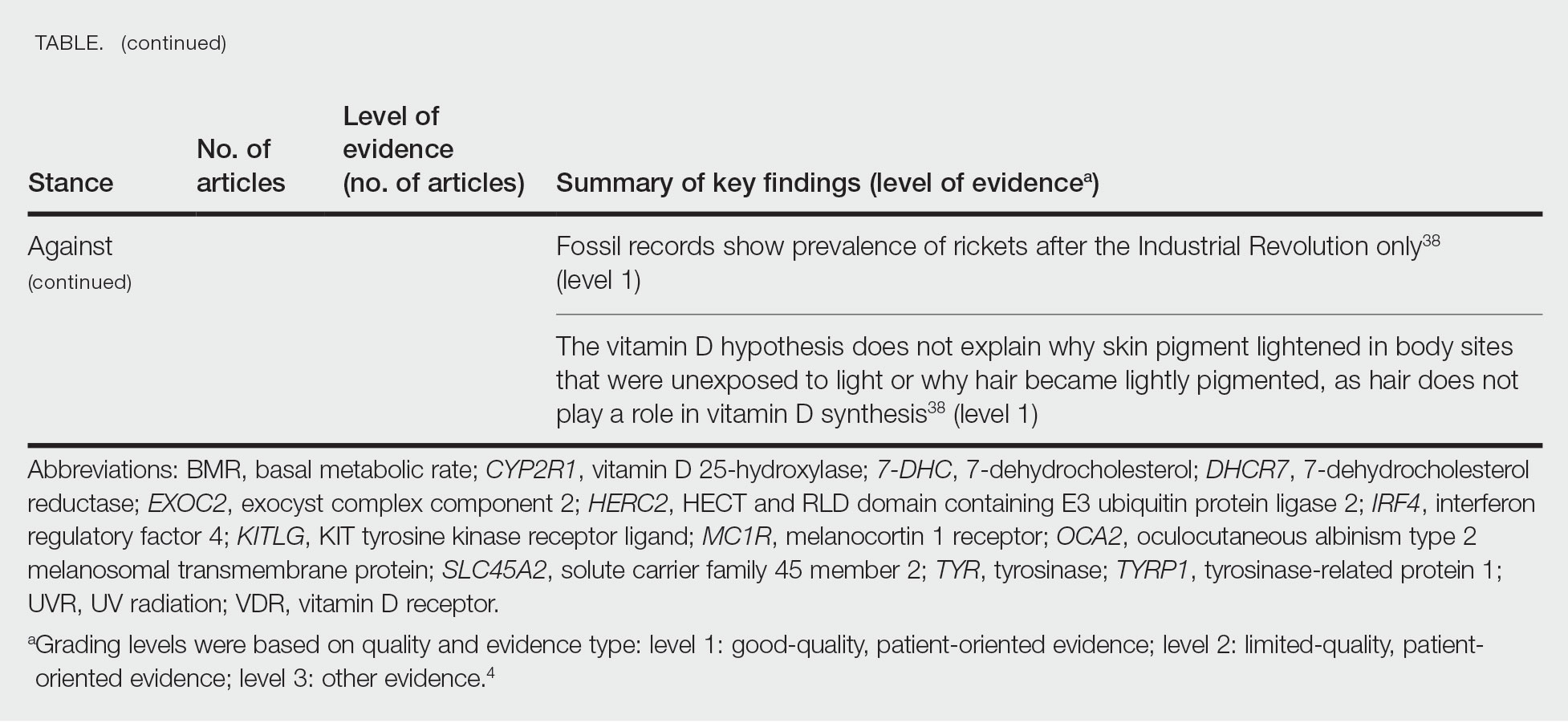

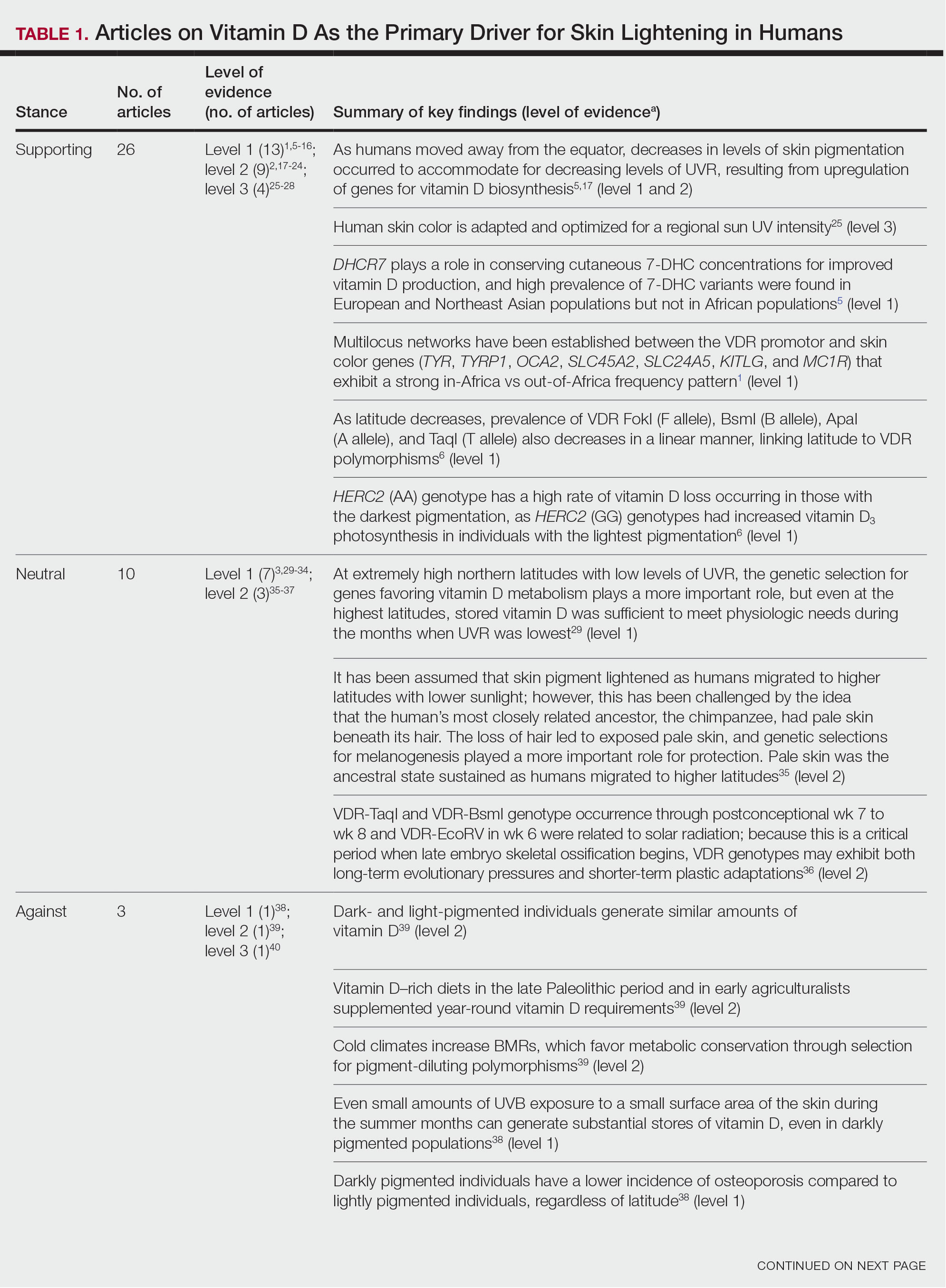

Studies included in the analysis were placed into 1 of 3 categories: supporting, neutral, or against. Strength of Recommendation Taxonomy (SORT) criteria were used to classify the level of evidence of each article.4 Each article’s level of evidence was then graded (Table 1). The SORT grading levels were based on quality and evidence type: level 1 signified good-quality, patient-oriented evidence; level 2 signified limited-quality, patient-oriented evidence; and level 3 signified other evidence.4

Results

Article Selection—A total of 229 articles were identified for screening, and 39 studies met inclusion criteria.1-3,5-40 Systematic and retrospective reviews were the most common types of studies. Genomic analysis/sequencing/genome-wide association studies (GWAS) were the most common methods of analysis. Of these 39 articles, 26 were classified as supporting the evolutionary vitamin D adaptation hypothesis, 10 were classified as neutral, and 3 were classified as against (Table 1).

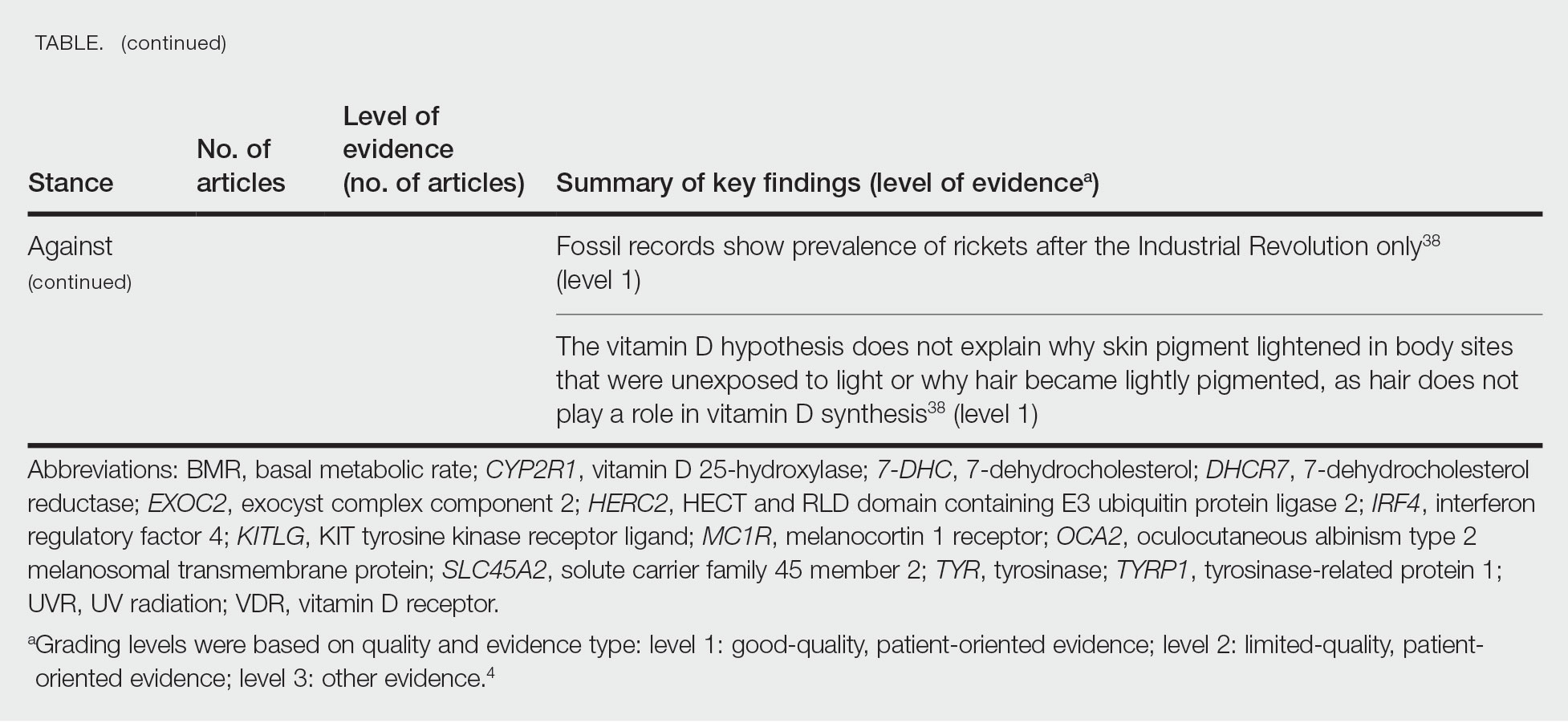

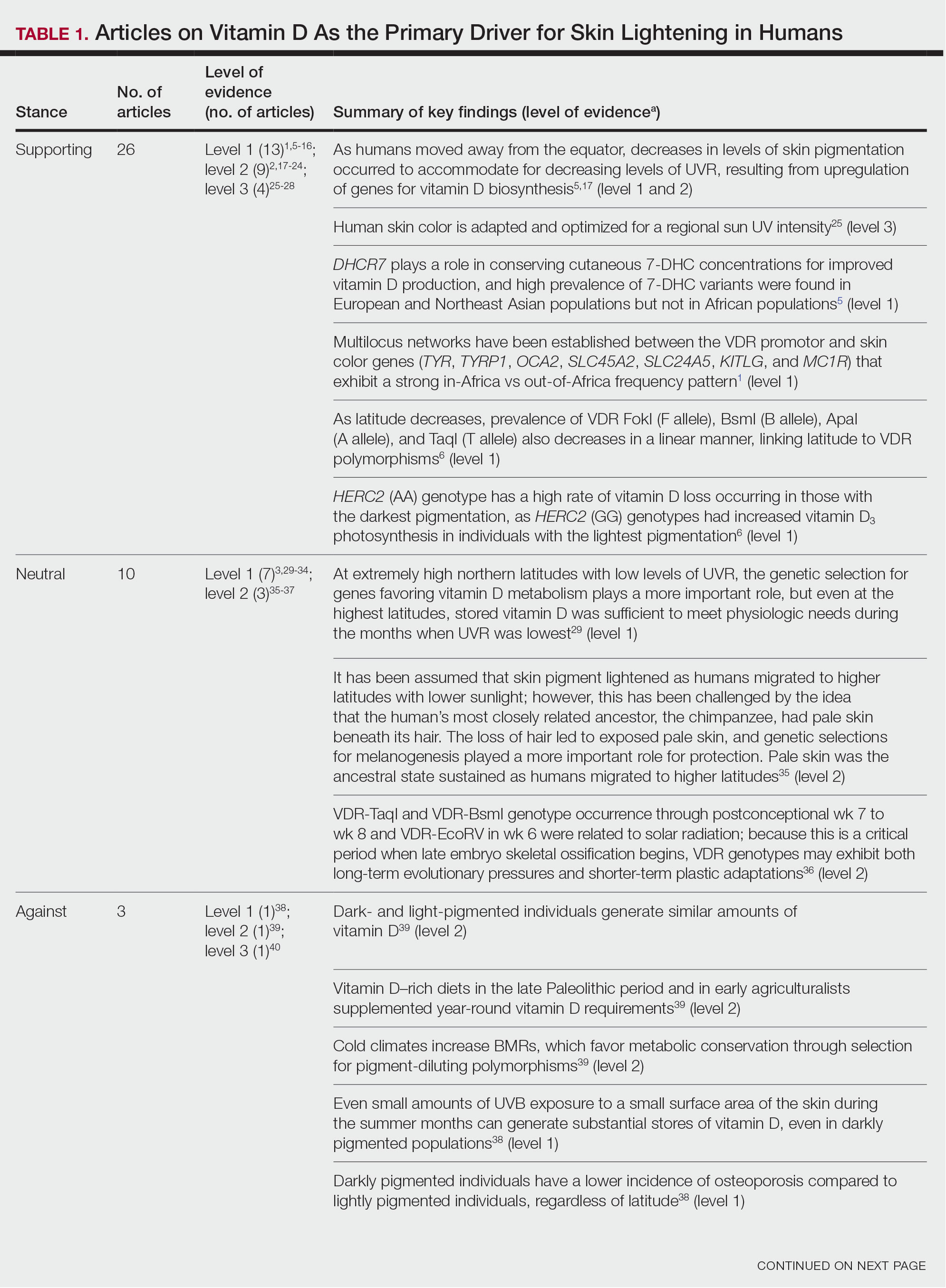

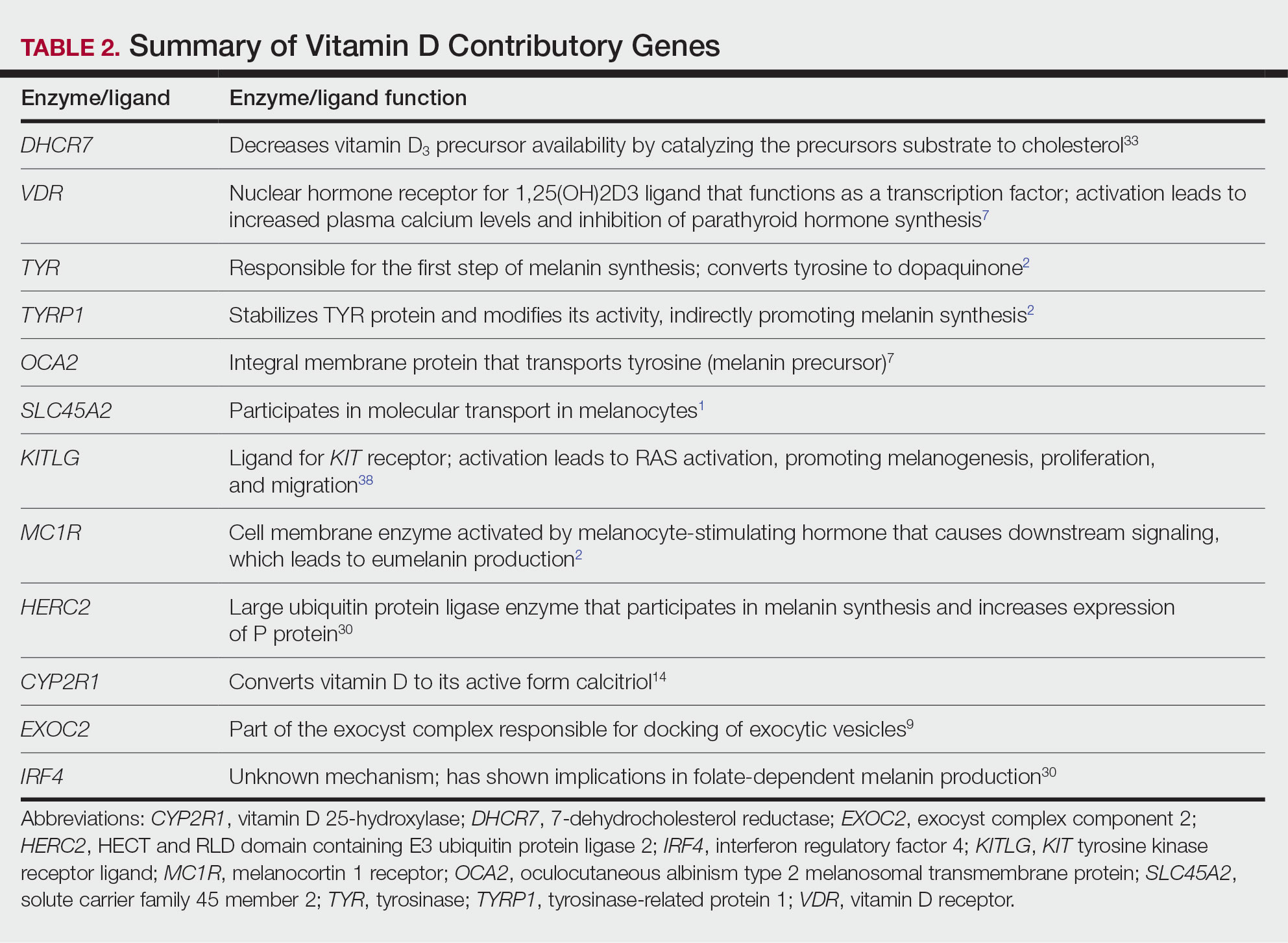

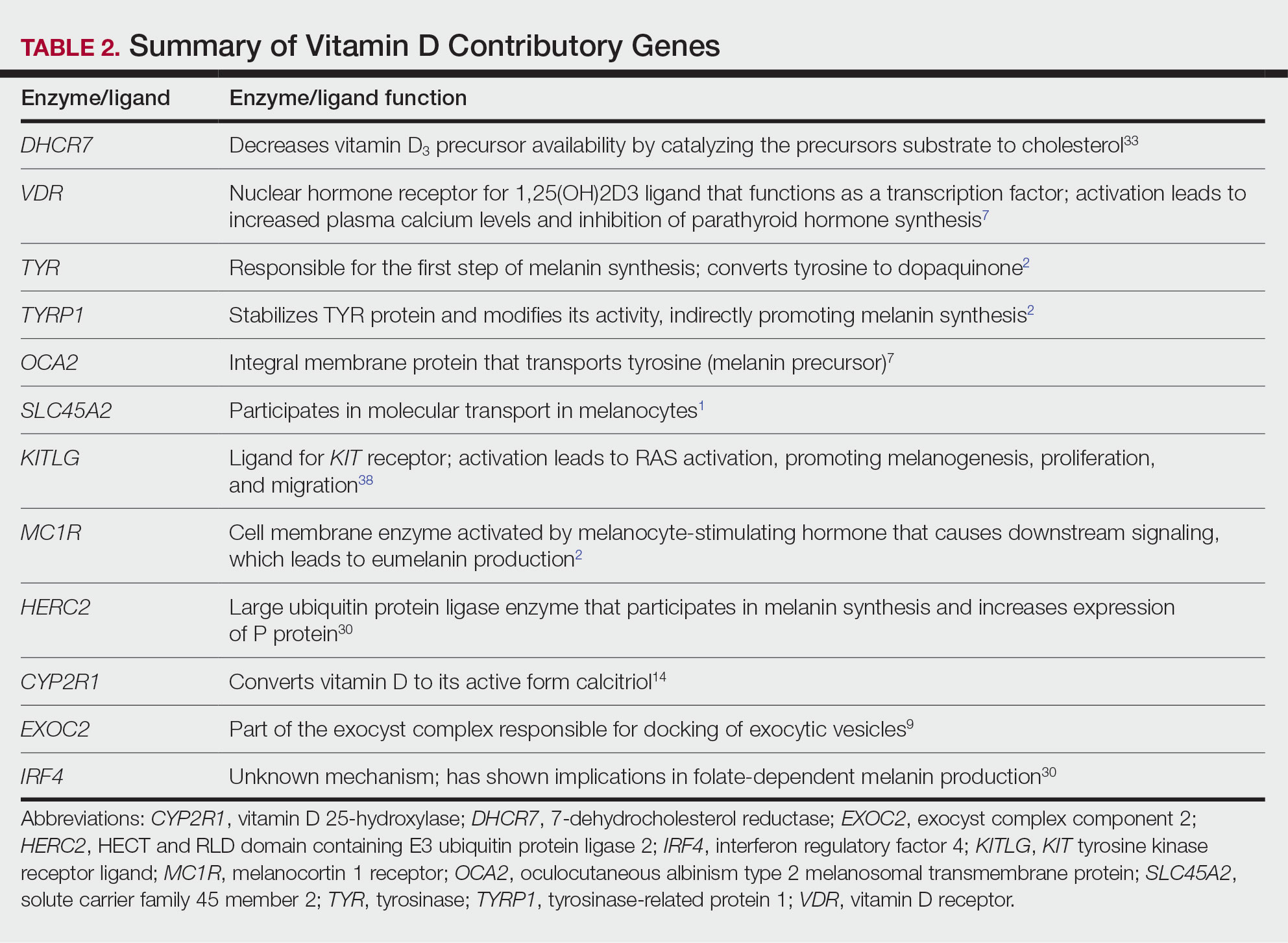

Of the articles classified as supporting the vitamin D hypothesis, 13 articles were level 1 evidence, 9 were level 2, and 4 were level 3. Key findings supporting the vitamin D hypothesis included genetic natural selection favoring vitamin D synthesis genes at higher latitudes with lower UVR and the skin lightening that occurred to protect against vitamin D deficiency (Table 1). Specific genes supporting these findings included 7-dehydrocholesterol reductase (DHCR7), vitamin D receptor (VDR), tyrosinase (TYR), tyrosinase-related protein 1 (TYRP1), oculocutaneous albinism type 2 melanosomal transmembrane protein (OCA2), solute carrier family 45 member 2 (SLC45A2), solute carrier family 24 member 5 (SLC24A5), Kit ligand (KITLG), melanocortin 1 receptor (MC1R), and HECT and RLD domain containing E3 ubiquitin protein ligase 2 (HERC2) (Table 2).

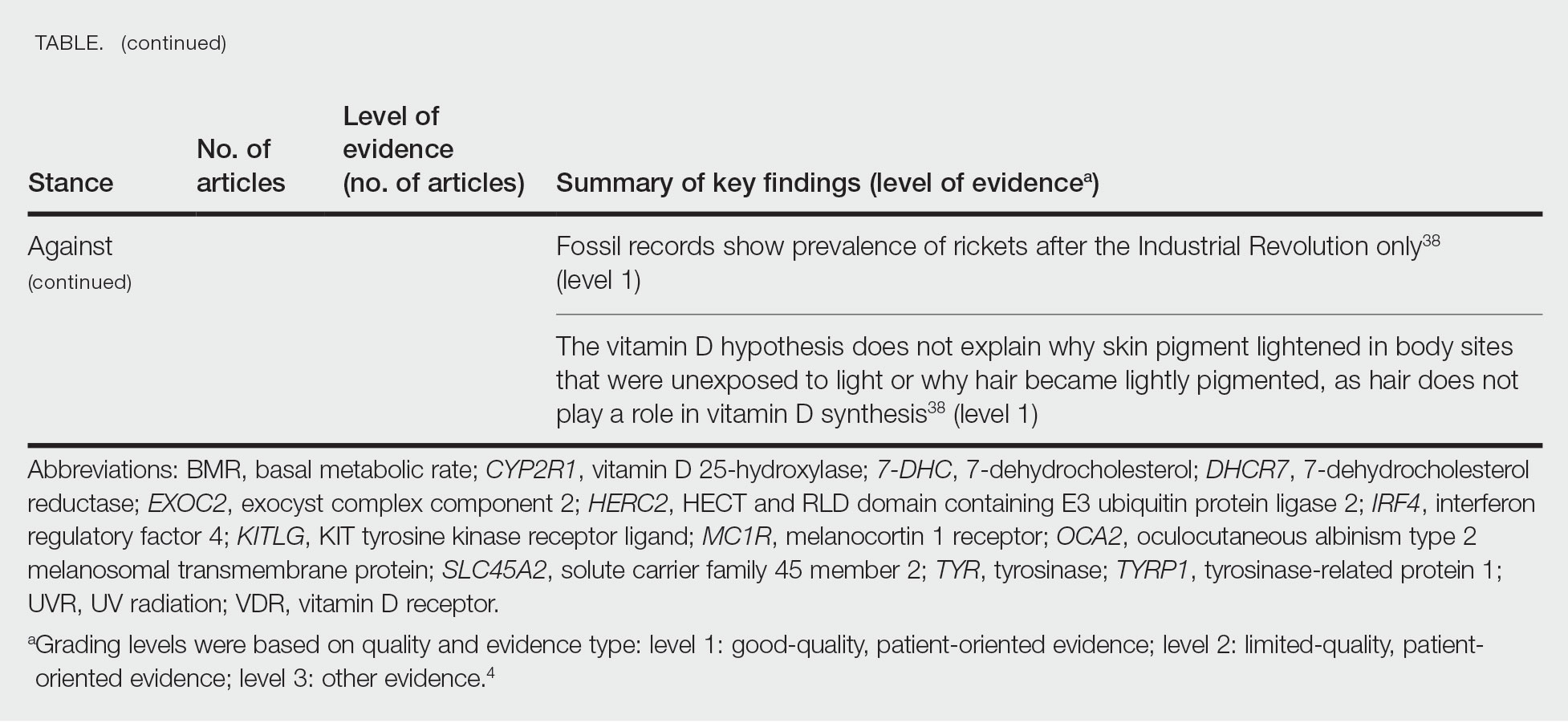

Of the articles classified as being against the vitamin D hypothesis, 1 article was level 1 evidence, 1 was level 2, and 1 was level 3. Key findings refuting the vitamin D hypothesis included similar amounts of vitamin D synthesis in contemporary dark- and light-pigmented individuals, vitamin D–rich diets in the late Paleolithic period and in early agriculturalists, and metabolic conservation being the primary driver (Table 1).

Of the articles classified as neutral to the hypothesis, 7 articles were level 1 evidence and 3 were level 2. Key findings of these articles included genetic selection favoring vitamin D synthesis only for populations at extremely northern latitudes, light skin in northern latitudes being retained from the state seen in the chimpanzee (the closest living relative of humans), and evidence for long-term evolutionary pressures and short-term plastic adaptations in vitamin D genes (Table 1).

Comment

The need for adequate vitamin D levels is hypothesized to be a potent driver of skin lightening because the vitamin is essential for many biochemical processes within the human body. Proper calcification of bones requires activated vitamin D to prevent rickets in childhood. Pelvic deformation in women with rickets can obstruct childbirth in primitive medical environments.15 This direct reproductive impairment suggests a strong selective pressure for skin lightening, which enhances vitamin D synthesis, in populations that migrated northward.

Of the 39 articles that we reviewed, the majority (n=26 [66.7%]) supported the hypothesis that vitamin D synthesis was the main driver behind skin lightening, whereas 3 (7.7%) did not support the hypothesis and 10 (25.6%) were neutral. Other leading theories explaining skin lightening included the idea that enhanced melanogenesis protected against folate degradation; genetic selection for light-skin alleles due to genetic drift; skin lightening being the result of sexual selection; and a combination of factors, including dietary choices, clothing preferences, and skin permeability barriers.
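The proportions above can be checked against the counts by SORT evidence level reported in the Results. The following is a minimal sketch of that arithmetic; the dictionary layout and function names are our own illustrative choices, and only the counts are taken from the review.

```python
# Article counts by stance and SORT evidence level, as reported in the Results.
counts = {
    "supporting": {1: 13, 2: 9, 3: 4},
    "neutral": {1: 7, 2: 3},
    "against": {1: 1, 2: 1, 3: 1},
}


def stance_totals(counts):
    """Sum the articles across evidence levels for each stance."""
    return {stance: sum(levels.values()) for stance, levels in counts.items()}


def stance_percentages(counts):
    """Express each stance total as a percentage of all included articles."""
    totals = stance_totals(counts)
    grand_total = sum(totals.values())
    return {s: round(100 * n / grand_total, 1) for s, n in totals.items()}


totals = stance_totals(counts)
percentages = stance_percentages(counts)
print(totals)
print(percentages)
```

The level-by-level counts sum to 26, 10, and 3 articles (39 in total), which matches the 66.7%, 25.6%, and 7.7% quoted in the text.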

Articles With Supporting Evidence for the Vitamin D Theory—As Homo sapiens migrated out of Africa, migration patterns demonstrated the correlation between distance from the equator and skin pigmentation from natural selection. Individuals with darker skin pigment required higher levels of UVR to synthesize vitamin D. According to Beleza et al,1 as humans migrated to areas of higher latitudes with lower levels of UVR, natural selection favored the development of lighter skin to maximize vitamin D production. Vitamin D is linked to calcium metabolism, and its deficiency can lead to bone malformations and poor immune function.35 Several genes affecting melanogenesis and skin pigment have been found to have geospatial patterns that map to different geographic locations of various populations, indicating how human migration patterns out of Africa created this natural selection for skin lightening. The gene KITLG—associated with lighter skin pigmentation—has been found in high frequencies in both European and East Asian populations and is proposed to have increased in frequency after the migration out of Africa. However, the genes TYRP1, SLC24A5, and SLC45A2 were found at high frequencies only in European populations, and this selection occurred 11,000 to 19,000 years ago during the Last Glacial Maximum (15,000–20,000 years ago), demonstrating the selection for European over East Asian characteristics. During this period, seasonal changes increased the risk for vitamin D deficiency and provided an urgency for selection to a lighter skin pigment.1