Thoracentesis Referral

Internal medicine (IM) residents and hospitalist physicians commonly conduct bedside thoracenteses for both diagnostic and therapeutic purposes.[1] The American Board of Internal Medicine requires only that certification candidates understand the indications, complications, and management of thoracenteses.[2] This disconnect between clinical practice patterns and board requirements may increase patient risk, because poorly trained physicians are more likely to cause complications.[3] National practice patterns show that many thoracenteses are referred to interventional radiology (IR).[4] However, research links performance of bedside procedures to reduced hospital length of stay and lower costs, without increasing the risk of complications.[1, 5, 6]

Simulation‐based education offers a controlled environment where trainees improve procedural knowledge and skills without patient harm.[7] Simulation‐based mastery learning (SBML) is a rigorous form of competency‐based education that improves clinical skills and reduces iatrogenic complications and healthcare costs.[5, 6, 8] SBML is also an effective method for improving thoracentesis skills among IM residents.[9] However, no data show whether thoracentesis skills acquired in the simulation laboratory transfer to clinical environments and affect referral patterns.

We hypothesized that a thoracentesis SBML intervention would improve skills and increase procedural self‐confidence while reducing procedure referrals. This study aimed to (1) assess the effect of thoracentesis SBML on a cohort of IM residents' simulated skills and (2) compare traditionally trained (non‐SBML‐trained) residents, SBML‐trained residents, and hospitalist physicians regarding procedure referral patterns, self‐confidence, procedure experience, and reasons for referral.

METHODS AND MATERIALS

Study Design

We surveyed physicians about thoracenteses performed on patients cared for by postgraduate year (PGY)‐2 and PGY‐3 IM residents and hospitalist physicians at Northwestern Memorial Hospital (NMH) from December 2012 to May 2015. NMH is an 896‐bed, tertiary academic medical center, located in Chicago, Illinois. A random sample of IM residents participated in a thoracentesis SBML intervention, whereas hospitalist physicians did not. We compared referral patterns, self‐confidence, procedure experience, and reasons for referral between traditionally trained residents, SBML‐trained residents, and hospitalist physicians. The Northwestern University Institutional Review Board approved this study, and all study participants provided informed consent.

At NMH, resident‐staffed services include general IM and nonintensive care subspecialty medical services. There are also 2 nonteaching floors staffed by hospitalist attending physicians without residents. Thoracenteses performed on these services can either be done at the bedside or referred to pulmonary medicine or IR. The majority of thoracenteses performed by pulmonary medicine occur at the patients' bedside, and the patients also receive a clinical consultation. IR procedures are done in the IR suite without additional clinical consultation.

Procedure

One hundred sixty residents were available for training over the study period. Before each rotation, we randomly selected 20% of the approximately 20 PGY‐2 and PGY‐3 IM residents assigned to the NMH medicine services that month to participate in SBML thoracentesis training. Randomly selected residents were required to undergo SBML training but were not required to participate in the study. This selection process was repeated before every rotation during the study period. This randomized wait‐list control design allowed residents who were not initially selected to serve as controls while remaining eligible for SBML training in subsequent rotations.
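The per‐rotation draw described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's actual randomization procedure: the helper name `select_for_sbml`, the resident identifiers, and the choice to exclude residents who have already completed SBML from later draws are all assumptions.

```python
import random


def select_for_sbml(rotation_residents, already_trained, fraction=0.20, seed=None):
    """Hypothetical helper: randomly assign residents to SBML before one rotation.

    Residents who are not selected act as wait-list controls for this rotation
    and remain eligible in later draws. Excluding already-trained residents is
    an assumption; the text does not say how they were handled.
    """
    eligible = sorted(r for r in rotation_residents if r not in already_trained)
    # 20% of the residents on service (about 4 of 20), capped at the eligible pool.
    n_select = min(len(eligible), round(fraction * len(rotation_residents)))
    rng = random.Random(seed)
    selected = set(rng.sample(eligible, n_select))
    controls = set(eligible) - selected
    return selected, controls


# Usage: repeat the draw before each monthly rotation (hypothetical roster).
roster = [f"R{i:02d}" for i in range(20)]
trained = set()
for month in range(3):
    picked, waitlist = select_for_sbml(roster, trained, seed=month)
    trained |= picked
    print(month, sorted(picked), len(waitlist))
```

Seeding a local `random.Random` instance keeps each draw reproducible without touching global random state, which is convenient when the assignment must be audited later.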

Intervention

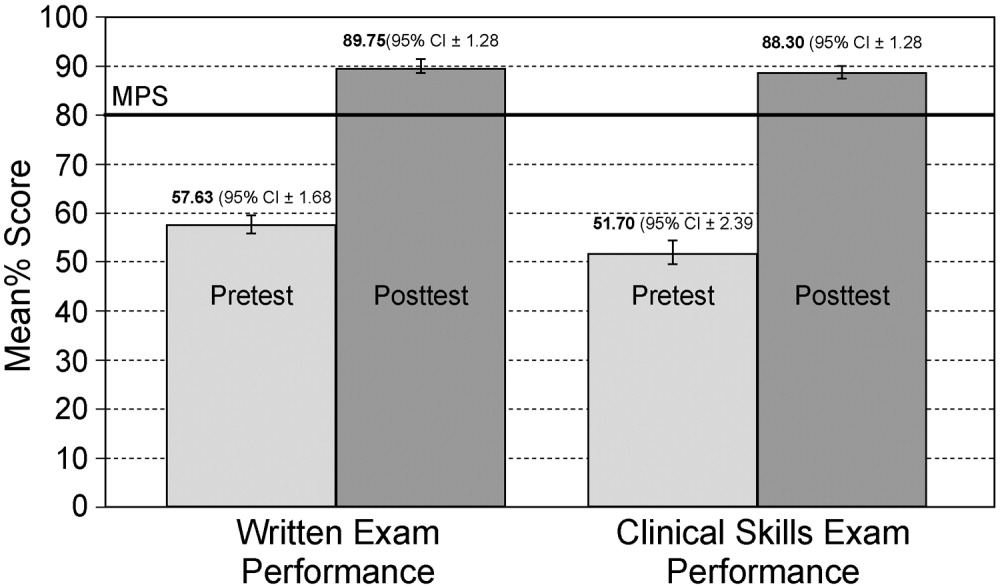

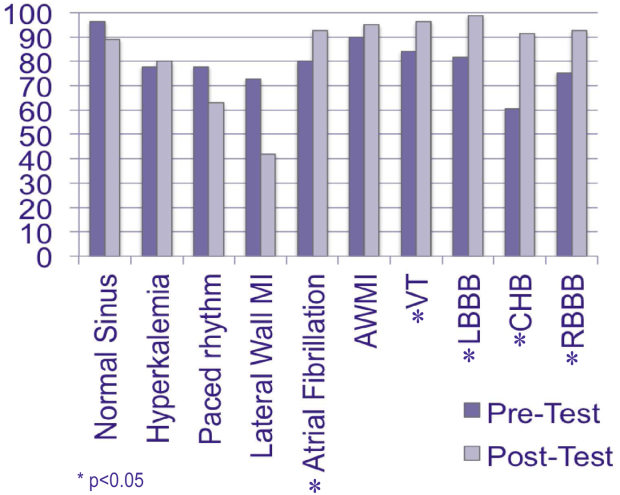

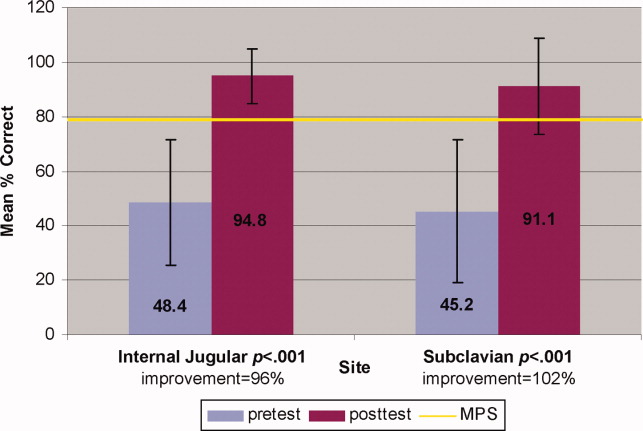

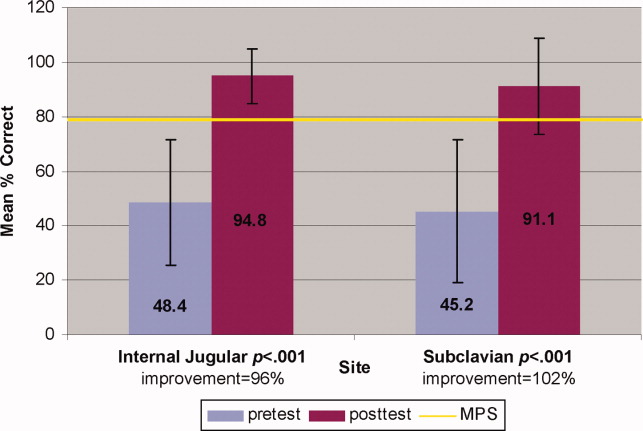

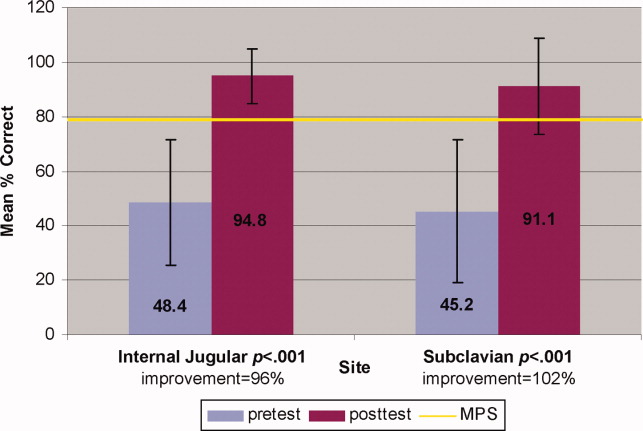

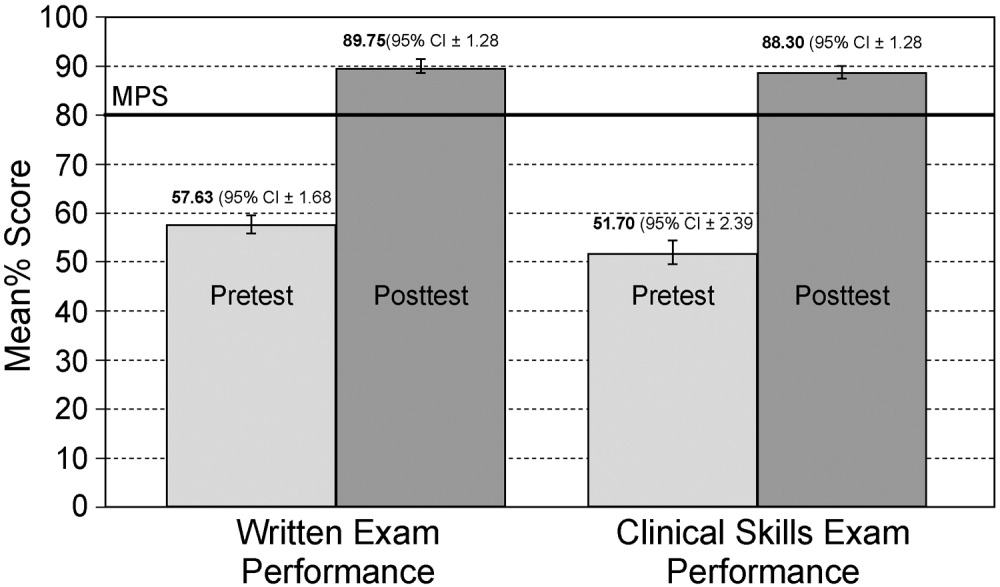

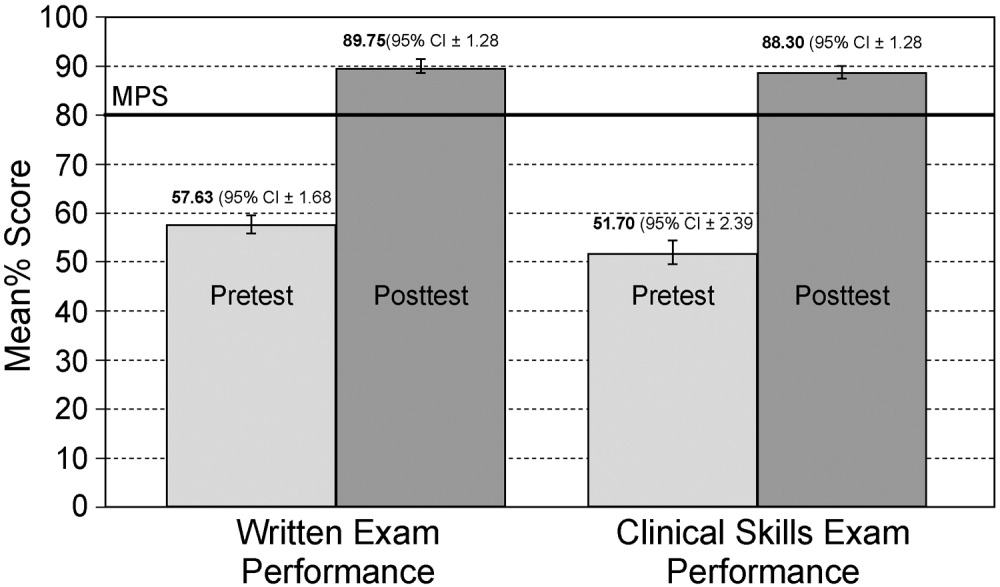

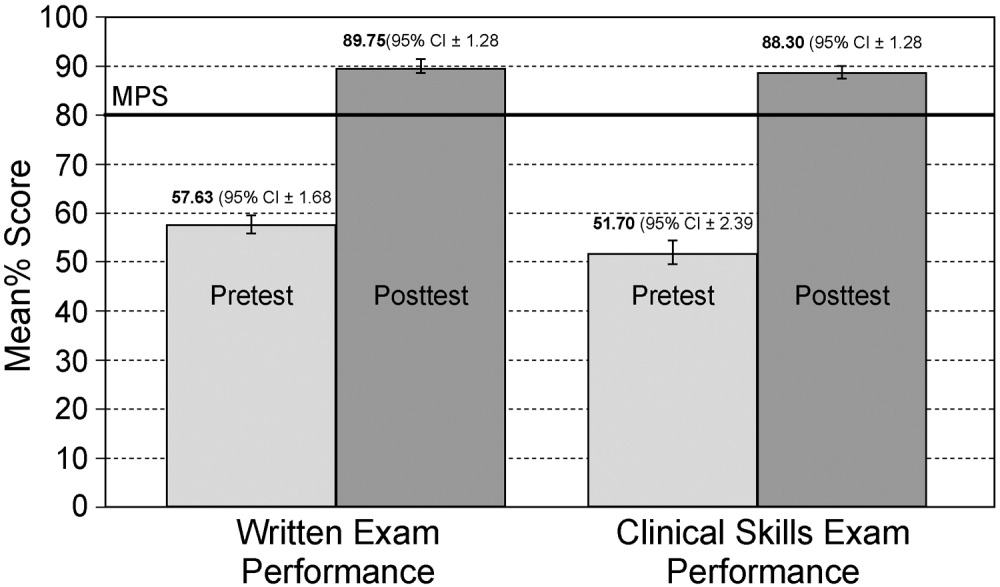

The SBML intervention used a pretest/post‐test design, as described elsewhere.[9] Residents completed a clinical skills pretest on a thoracentesis simulator using a previously published 26‐item checklist.[9] After the pretest, residents participated in two 1‐hour training sessions that included a lecture, a video, and deliberate practice on the simulator with feedback from an expert instructor. Finally, residents completed a clinical skills post‐test using the checklist within 1 week of training (but on a different day) and were required to meet or exceed an 84.3% minimum passing score (MPS). The entire training, including pre‐ and post‐tests, took approximately 3 hours to complete, and residents received an additional 1‐hour refresher training session every 6 months for up to 1 year after the original training. We compared pre‐ and post‐test checklist scores to evaluate skills improvement.
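To make the passing standard concrete, the sketch below scores the 26‐item checklist with equal weights (1 point per correctly performed item) and checks the result against the 84.3% MPS. With 26 items, passing requires at least 22 correct items (22/26 ≈ 84.6%, whereas 21/26 ≈ 80.8%), and a score of 96.2% corresponds to 25 of 26 items. The function names are illustrative only.

```python
import math

N_ITEMS = 26        # items on the published thoracentesis checklist
MPS_PERCENT = 84.3  # minimum passing score stated in the text


def checklist_score(items):
    """Percent score from 0/1 item ratings (1 = done correctly)."""
    if len(items) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} items, got {len(items)}")
    if any(item not in (0, 1) for item in items):
        raise ValueError("each item must be scored 0 or 1")
    return 100.0 * sum(items) / N_ITEMS


def meets_mps(score_percent, mps=MPS_PERCENT):
    """Mastery requires meeting or exceeding the MPS."""
    return score_percent >= mps


def min_items_to_pass(n_items=N_ITEMS, mps=MPS_PERCENT):
    """Smallest number of correct items whose percent score reaches the MPS."""
    return math.ceil(mps / 100 * n_items)


print(min_items_to_pass())                        # 22
print(round(checklist_score([1] * 25 + [0]), 1))  # 96.2
```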

Thoracentesis Patient Identification

The NMH electronic health record (EHR) was used to identify medical service inpatients who underwent a thoracentesis during the study period. NMH clinicians must place an EHR order for procedure kits, consults, and laboratory analysis of thoracentesis fluid. We developed a real‐time query of the NMH EHR that identified all patients with electronic orders for thoracentesis, and we monitored its results daily.
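The daily query can be pictured as a filter over order records. The sketch below is hypothetical: the record fields, the order‐type labels, and the service names are stand‐ins, because the text does not describe the NMH EHR schema or query language. It returns each medical‐service patient with at least one thoracentesis‐related order (procedure kit, consult, or fluid analysis) on a given day, counting a patient once even when several orders were placed.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical order-type labels standing in for real EHR order codes.
THORACENTESIS_ORDER_TYPES = {
    "thoracentesis_kit",
    "thoracentesis_consult",
    "pleural_fluid_analysis",
}


@dataclass(frozen=True)
class Order:
    patient_id: str
    order_type: str
    order_date: date
    service: str


def daily_thoracentesis_patients(orders, day, services=frozenset({"medicine"})):
    """Patients on the given services with any thoracentesis-related order that day."""
    return sorted(
        {
            o.patient_id
            for o in orders
            if o.order_date == day
            and o.service in services
            and o.order_type in THORACENTESIS_ORDER_TYPES
        }
    )


orders = [
    Order("P1", "thoracentesis_kit", date(2013, 3, 4), "medicine"),
    Order("P1", "pleural_fluid_analysis", date(2013, 3, 4), "medicine"),
    Order("P2", "chest_xray", date(2013, 3, 4), "medicine"),
    Order("P3", "thoracentesis_consult", date(2013, 3, 4), "surgery"),
]
print(daily_thoracentesis_patients(orders, date(2013, 3, 4)))  # ['P1']
```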

Physician Surveys

After each thoracentesis, we surveyed the PGY‐2 or PGY‐3 resident or hospitalist caring for the patient about the procedure. A research coordinator, blinded to whether the resident had received SBML, conducted the surveys face‐to‐face Monday through Friday during normal business hours. Surveys occurred on Monday for procedures performed from Friday evening through Sunday. Residents were not considered SBML‐trained until they met or exceeded the MPS on the simulated skills checklist at post‐test. Survey questions asked physicians who performed the procedure, how self‐confident they were in performing it, and how many thoracenteses they had performed in their career. For referred procedures, physicians were asked about reasons for referral, including lack of confidence, work hour restrictions (residents only), and low reimbursement rates.[10] Respondents could also write in other reasons.
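Two rules in this paragraph, the weekend survey schedule and the requirement that residents pass the post‐test before counting as SBML‐trained, can be expressed as small functions. This is a sketch under stated assumptions: the 5 pm cutoff for "Friday evening," same‐day surveys for other procedures, and counting a resident as SBML‐trained from the day of passing onward are illustrative choices, not details reported in the study.

```python
from datetime import date, datetime, timedelta
from typing import Optional

EVENING_CUTOFF_HOUR = 17  # assumption: "Friday evening" starts at 5 pm


def survey_date(procedure_time: datetime) -> date:
    """Weekday on which the coordinator would survey the physician."""
    day = procedure_time.date()
    weekday = day.weekday()  # Monday = 0 ... Sunday = 6
    friday_evening = weekday == 4 and procedure_time.hour >= EVENING_CUTOFF_HOUR
    if friday_evening or weekday >= 5:
        # Friday evening through Sunday: survey on the following Monday.
        return day + timedelta(days=7 - weekday)
    # Assumption: other procedures are surveyed the same business day.
    return day


def performer_group(
    is_hospitalist: bool, mps_pass_date: Optional[date], procedure_day: date
) -> str:
    """Classify the surveyed physician at the time of the procedure."""
    if is_hospitalist:
        return "hospitalist"
    # Residents count as SBML-trained only once they have met the MPS at post-test.
    if mps_pass_date is not None and mps_pass_date <= procedure_day:
        return "sbml_trained"
    return "traditionally_trained"
```

Classifying by the pass date rather than the training date mirrors the rule that a resident who failed the initial post‐test stays in the traditionally trained group until the retest is passed.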

Measurement

The thoracentesis skills checklist documented all required steps for an evidence‐based thoracentesis. Each task received equal weight (0 = done incorrectly/not done, 1 = done correctly).[9] For physician surveys, self‐confidence about performing the procedure was rated on a scale from 0 (not confident) to 100 (very confident). Reasons for referral were rated on a 5‐point Likert scale (1 = not at all important, 5 = very important). Write‐in ("other") reasons for referral were grouped into categories.

Statistical Analysis

The clinical skills pre‐ and post‐test checklist scores were compared using the Wilcoxon matched‐pairs signed‐rank test. Physician survey data were compared across procedure performers using the χ² test, independent t test, analysis of variance (ANOVA), or Kruskal‐Wallis test, depending on data properties. Likert‐scale ratings of reasons for referral were averaged, and differences between physician groups were evaluated using ANOVA. Counts of other reasons for referral were compared using the χ² test. We performed all statistical analyses using IBM SPSS Statistics version 23 (IBM Corp., Armonk, NY).
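As an illustration of the chi‐square comparisons, the sketch below implements a Pearson chi‐square test of independence in plain Python (`scipy.stats.chi2_contingency` is a library equivalent). It assumes that each categorical row of the results tables was tested as a 2 × 3 table of yes/no counts across the three physician groups, with no continuity correction; the text does not state these details. Under that assumption, the patient‐preference row of Table 2 (2, 7, and 2 of 156, 113, and 144 referred procedures) gives P ≈ 0.024, in line with the reported value, and the bedside row of Table 1 gives P < 0.001.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variable with integer df."""
    if x <= 0:
        return 1.0
    h = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-h) * sum_{i=0}^{df/2 - 1} h**i / i!
        term = total = 1.0
        for i in range(1, df // 2):
            term *= h / i
            total += term
        return math.exp(-h) * total
    # Odd df: erfc(sqrt(h)) + exp(-h) * sum_{i=1}^{(df-1)/2} h**(i - 1/2) / Gamma(i + 1/2)
    total = math.erfc(math.sqrt(h))
    for i in range(1, (df - 1) // 2 + 1):
        total += math.exp(-h) * h ** (i - 0.5) / math.gamma(i + 0.5)
    return total


def chi_square_independence(table):
    """Pearson chi-square test (no continuity correction) for an r x c count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df, chi2_sf(stat, df)


# Columns: traditionally trained residents, SBML-trained residents, hospitalists.
patient_preference = [[2, 7, 2], [156 - 2, 113 - 7, 144 - 2]]  # Table 2 row
bedside = [[26, 32, 1], [182 - 26, 145 - 32, 145 - 1]]         # Table 1 row
for name, counts in [("patient preference", patient_preference), ("bedside", bedside)]:
    stat, df, p = chi_square_independence(counts)
    print(f"{name}: chi2 = {stat:.2f}, df = {df}, P = {p:.3g}")
```

The closed‐form survival function avoids a SciPy dependency and is exact for integer degrees of freedom, which is all a contingency table produces.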

RESULTS

Thoracentesis Clinical Skills

One hundred twelve (70%) residents were randomized to SBML, and all completed the protocol. The median pretest score was 57.6% (interquartile range [IQR] 43.3–76.9), and the median final post‐test mastery score was 96.2% (IQR 96.2–100.0; P < 0.001). Twenty‐three residents (21.0%) failed to meet the MPS at the initial post‐test but met the MPS on retest after <1 hour of additional training.

Physician Surveys

The EHR query identified 474 procedures eligible for physician surveys. One hundred twenty‐two residents and 51 hospitalist physicians completed surveys for 472 procedures (99.6%): 182 by traditionally trained residents, 145 by SBML‐trained residents, and 145 by hospitalist physicians. As shown in Table 1, 413 (88%) of all procedures were referred to another service. Traditionally trained residents were more likely to refer to IR than SBML‐trained residents or hospitalist physicians. SBML‐trained residents were the most likely to perform bedside procedures, whereas hospitalist physicians were the most likely to refer to pulmonary medicine. SBML‐trained residents were the most confident in their procedural skills, even though hospitalist physicians had performed more procedures.

Table 1. Referral Patterns, Self‐Confidence, and Experience by Physician Group

| | Traditionally Trained Resident Surveys, n = 182 | SBML‐Trained Resident Surveys, n = 145 | Hospitalist Physician Surveys, n = 145 | P Value |
|---|---|---|---|---|
| Bedside procedures, no. (%) | 26 (14.3%) | 32 (22.1%) | 1 (0.7%) | <0.001 |
| IR procedures, no. (%) | 119 (65.4%) | 74 (51.0%) | 82 (56.6%) | 0.029 |
| Pulmonary procedures, no. (%) | 37 (20.3%) | 39 (26.9%) | 62 (42.8%) | <0.001 |
| Procedure self‐confidence, mean (SD)* | 43.6 (28.66) | 68.2 (25.17) | 55.7 (31.17) | <0.001 |
| Experience performing actual procedures, median (IQR) | 1 (1–3) | 2 (1–3.5) | 10 (4–25) | <0.001 |
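The denominators in Table 2 follow from Table 1: reasons for referral were collected only for referred procedures, so each group's Table 2 n equals its number of IR plus pulmonary procedures. The short check below is plain arithmetic on the reported counts and reproduces the Table 2 column totals and the overall referral rate of 413 of 472 procedures (87.5%, reported as 88%).

```python
# Counts from Table 1 per physician group: (surveys, bedside, IR, pulmonary).
table1 = {
    "traditionally_trained": (182, 26, 119, 37),
    "sbml_trained": (145, 32, 74, 39),
    "hospitalist": (145, 1, 82, 62),
}

for group, (n, bedside, ir, pulm) in table1.items():
    # Every surveyed procedure falls into exactly one of the three categories.
    assert bedside + ir + pulm == n, group

# Referred procedures = IR + pulmonary (equivalently, surveys - bedside).
referred = {group: ir + pulm for group, (_, _, ir, pulm) in table1.items()}
total_surveys = sum(n for n, *_ in table1.values())
total_referred = sum(referred.values())

print(referred)  # {'traditionally_trained': 156, 'sbml_trained': 113, 'hospitalist': 144}
print(total_referred, total_surveys, f"{100 * total_referred / total_surveys:.1f}%")  # 413 472 87.5%
```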

Traditionally trained residents were the most likely to rate low confidence as a reason for referring thoracenteses (Table 2). Hospitalist physicians were more likely to cite lack of time to perform the procedure themselves. Several write‐in reasons also differed across groups: SBML‐trained residents were more likely to refer because of attending preference, whereas traditionally trained residents were the most likely to refer because of high‐risk or technically difficult cases.

Table 2. Reasons for Referral by Physician Group (Referred Procedures Only)

| | Traditionally Trained Residents, n = 156 | SBML‐Trained Residents, n = 113 | Hospitalist Physicians, n = 144 | P Value |
|---|---|---|---|---|
| Lack of confidence to perform procedure, mean (SD)* | 3.46 (1.32) | 2.52 (1.45) | 2.89 (1.60) | <0.001 |
| Work hour restrictions, mean (SD)* | 2.05 (1.37) | 1.50 (1.11) | n/a | 0.001 |
| Low reimbursement, mean (SD)* | 1.02 (0.12) | 1.0 (0) | 1.22 (0.69) | <0.001 |
| Other reasons for referral, no. (%) | | | | |
| Attending preference | 8 (5.1%) | 11 (9.7%) | 3 (2.1%) | 0.025 |
| Don't know how | 6 (3.8%) | 0 | 0 | 0.007 |
| Failed bedside | 0 | 2 (1.8%) | 0 | 0.07 |
| High risk/technically difficult case | 24 (15.4%) | 12 (10.6%) | 5 (3.5%) | 0.003 |
| IR or pulmonary patient | 5 (3.2%) | 2 (1.8%) | 4 (2.8%) | 0.77 |
| Other IR procedure taking place | 11 (7.1%) | 9 (8.0%) | 4 (2.8%) | 0.13 |
| Patient preference | 2 (1.3%) | 7 (6.2%) | 2 (1.4%) | 0.024 |
| Time | 9 (5.8%) | 7 (6.2%) | 29 (20.1%) | <0.001 |

DISCUSSION

This study confirms earlier research showing that thoracentesis SBML improves residents' clinical skills, but it is the first to use a randomized study design.[9] Use of the mastery model in health professions education ensures that all learners are competent to provide patient care, including performing invasive procedures. Such rigorous education yields downstream translational outcomes, including safety profiles comparable to those of experts.[1, 6]

This study also shows that SBML‐trained residents displayed higher self‐confidence and performed significantly more bedside procedures than traditionally trained residents and the more experienced hospitalist physicians. Although the Society of Hospital Medicine considers thoracentesis skills a core competency for hospitalist physicians,[11] we speculate that some hospitalist physicians had not performed a thoracentesis in years. A recent national survey showed that only 44% of hospitalist physicians had performed at least 1 thoracentesis within the past year.[10] Research also shows a cultural shift toward referring procedures to specialty services such as IR, with such referrals increasing by over 900% in the past 2 decades.[4] Our results provide novel information about procedure referrals because they show that SBML yields translational outcomes: it improves skills and self‐confidence, which in turn influence referral patterns. SBML‐trained residents performed almost a quarter of procedures at the bedside. Although this represents an absolute difference of only about 8 percentage points compared with traditionally trained residents, training a large number of residents with SBML would shift a meaningful number of procedures to the patient bedside. According to University HealthSystem Consortium data, approximately 35,325 thoracenteses are performed yearly in US teaching hospitals.[1] Shifting even 8% of these procedures to the bedside would yield significant clinical benefit and cost savings. Fewer referrals mean more bedside procedures, which are safe, cost‐effective, and highly satisfying to patients.[1, 12, 13] Further study is required to determine how SBML training for attending physicians affects referral patterns.
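The scale‐up argument can be made explicit with the counts from Table 1. The sketch assumes, purely for illustration, that the bedside difference between SBML‐trained and traditionally trained residents would carry over unchanged to all thoracenteses in US teaching hospitals; that is a strong extrapolation, not a finding of this study.

```python
ANNUAL_THORACENTESES = 35_325  # UHC estimate for US teaching hospitals, cited in the text

# Bedside proportions from Table 1.
bedside_sbml = 32 / 145         # about 22.1%
bedside_traditional = 26 / 182  # about 14.3%

# Absolute difference in percentage points (about 7.8, rounded to 8 in the text).
abs_diff_points = 100 * (bedside_sbml - bedside_traditional)

# Procedures shifted to the bedside per year under the illustrative assumption.
shifted_observed = ANNUAL_THORACENTESES * (bedside_sbml - bedside_traditional)
shifted_8_percent = ANNUAL_THORACENTESES * 0.08

print(f"{abs_diff_points:.1f} points")                   # 7.8 points
print(round(shifted_observed), round(shifted_8_percent))  # 2749 2826
```

Under this assumption, roughly 2,700 to 2,800 procedures per year would move from referral to the bedside.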

Our study also provides information about the reasons for procedure referrals. Earlier work speculates that financial incentives, training, and time may explain high procedure referral rates.[10] One report on IM residents noted an 87% IR referral rate for thoracentesis and confirmed that both training and time were major reasons.[14] Hospitalist physicians in our study reported lack of time as the major factor leading to referral, which is problematic because bedside procedures yield similar clinical outcomes at lower cost.[1, 12] Attending preference also prevented 11 additional bedside procedures in the SBML‐trained group. Schedule adjustments and SBML training for hospitalist physicians should be considered, because bundled payments under the Affordable Care Act may favor a shift to the higher‐value approach of bedside thoracentesis.[15]

Our study has several limitations. First, we surveyed physicians at only 1 institution, so the results may not be generalizable. Second, we relied on an electronic query to identify thoracenteses; the query may have missed procedures that were unsuccessful or had no EHR orders. Third, physicians may have been surveyed more than once about the same or different patients, and their opinions may have shifted over time. Fourth, some reasons for referral, such as lack of time, were not asked about specifically and had to be written in, which could have led to under‐reporting. Finally, we did not assess the clinical outcomes of thoracenteses in this study, although earlier work shows that residents who complete SBML have safety outcomes similar to those of IR.[1, 6]

In summary, IM residents who complete thoracentesis SBML demonstrate improved clinical skills and are more likely to perform bedside procedures. In an era of bundled payments, rethinking current care models to promote cost‐effective care is necessary. We believe providing additional education, training, and support to hospitalist physicians to promote bedside procedures is a promising strategy that warrants further study.

Acknowledgements

The authors acknowledge Drs. Douglas Vaughan and Kevin O'Leary for their support and encouragement of this work. The authors also thank the internal medicine residents at Northwestern for their dedication to patient care.

Disclosures: This project was supported by grant R18HS021202‐01 from the Agency for Healthcare Research and Quality (AHRQ). AHRQ had no role in the preparation, review, or approval of the manuscript. Trial Registration:

1. Thoracentesis procedures at university hospitals: comparing outcomes by specialty. Jt Comm J Qual Patient Saf. 2015;42(1):34–40.

2. American Board of Internal Medicine. Internal medicine policies. Available at: http://www.abim.org/certification/policies/internal‐medicine‐subspecialty‐policies/internal‐medicine.aspx. Accessed March 9, 2016.

3. Pneumothorax following thoracentesis: a systematic review and meta‐analysis. Arch Intern Med. 2010;170(4):332–339.

4. National fluid shifts: fifteen‐year trends in paracentesis and thoracentesis procedures. J Am Coll Radiol. 2010;7(11):859–864.

5. Cost savings of performing paracentesis procedures at the bedside after simulation‐based education. Simul Healthc. 2014;9(5):312–318.

6. Clinical outcomes after bedside and interventional radiology paracentesis procedures. Am J Med. 2013;126(4):349–356.

7. Simulation technology for health care professional skills training and assessment. JAMA. 1999;282(9):861–866.

8. Cost savings from reduced catheter‐related bloodstream infection after simulation‐based education for residents in a medical intensive care unit. Simul Healthc. 2010;5(2):98–102.

9. Mastery learning of thoracentesis skills by internal medicine residents using simulation technology and deliberate practice. J Hosp Med. 2008;3(1):48–54.

10. Procedures performed by hospitalist and non‐hospitalist general internists. J Gen Intern Med. 2010;25(5):448–452.

11. Core competencies in hospital medicine: development and methodology. J Hosp Med. 2006;1(suppl 1):48–56.

12. Specialties performing paracentesis procedures at university hospitals: implications for training and certification. J Hosp Med. 2014;9(3):162–168.

13. Are we providing patient‐centered care? Preferences about paracentesis and thoracentesis procedures. Patient Exp J. 2014;1(2):94–103. Available at: http://pxjournal.org/cgi/viewcontent.cgi?article=1024

Internal medicine (IM) residents and hospitalist physicians commonly conduct bedside thoracenteses for both diagnostic and therapeutic purposes.[1] The American Board of Internal Medicine only requires that certification candidates understand the indications, complications, and management of thoracenteses.[2] A disconnect between clinical practice patterns and board requirements may increase patient risk because poorly trained physicians are more likely to cause complications.[3] National practice patterns show that many thoracenteses are referred to interventional radiology (IR).[4] However, research links performance of bedside procedures to reduced hospital length of stay and lower costs, without increasing risk of complications.[1, 5, 6]

Simulation‐based education offers a controlled environment where trainees improve procedural knowledge and skills without patient harm.[7] Simulation‐based mastery learning (SBML) is a rigorous form of competency‐based education that improves clinical skills and reduces iatrogenic complications and healthcare costs.[5, 6, 8] SBML also is an effective method to boost thoracentesis skills among IM residents.[9] However, there are no data to show that thoracentesis skills acquired in the simulation laboratory transfer to clinical environments and affect referral patterns.

We hypothesized that a thoracentesis SBML intervention would improve skills and increase procedural self‐confidence while reducing procedure referrals. This study aimed to (1) assess the effect of thoracentesis SBML on a cohort of IM residents' simulated skills and (2) compare traditionally trained (nonSBML‐trained) residents, SBML‐trained residents, and hospitalist physicians regarding procedure referral patterns, self‐confidence, procedure experience, and reasons for referral.

METHODS AND MATERIALS

Study Design

We surveyed physicians about thoracenteses performed on patients cared for by postgraduate year (PGY)‐2 and PGY‐3 IM residents and hospitalist physicians at Northwestern Memorial Hospital (NMH) from December 2012 to May 2015. NMH is an 896‐bed, tertiary academic medical center, located in Chicago, Illinois. A random sample of IM residents participated in a thoracentesis SBML intervention, whereas hospitalist physicians did not. We compared referral patterns, self‐confidence, procedure experience, and reasons for referral between traditionally trained residents, SBML‐trained residents, and hospitalist physicians. The Northwestern University Institutional Review Board approved this study, and all study participants provided informed consent.

At NMH, resident‐staffed services include general IM and nonintensive care subspecialty medical services. There are also 2 nonteaching floors staffed by hospitalist attending physicians without residents. Thoracenteses performed on these services can either be done at the bedside or referred to pulmonary medicine or IR. The majority of thoracenteses performed by pulmonary medicine occur at the patients' bedside, and the patients also receive a clinical consultation. IR procedures are done in the IR suite without additional clinical consultation.

Procedure

One hundred sixty residents were available for training over the study period. We randomly selected 20% of the approximately 20 PGY‐2 and PGY‐3 IM residents assigned to the NMH medicine services each month to participate in SBML thoracentesis training before their rotation. Randomly selected residents were required to undergo SBML training but were not required to participate in the study. This selection process was repeated before every rotation during the study period. This randomized wait‐list control method allowed residents to serve as controls if not initially selected for training and remain eligible for SBML training in subsequent rotations.

Intervention

The SBML intervention used a pretest/post‐test design, as described elsewhere.[9] Residents completed a clinical skills pretest on a thoracentesis simulator using a previously published 26‐item checklist.[9] Following the pretest, residents participated in 2, 1‐hour training sessions including a lecture, video, and deliberate practice on the simulator with feedback from an expert instructor. Finally, residents completed a clinical skills post‐test using the checklist within 1 week from training (but on a different day) and were required to meet or exceed an 84.3% minimum passing score (MPS). The entire training, including pre‐ and post‐tests, took approximately 3 hours to complete, and residents were given an additional 1 hour refresher training every 6 months for up to a year after original training. We compared pre‐ and post‐test checklist scores to evaluate skills improvement.

Thoracentesis Patient Identification

The NMH electronic health record (EHR) was used to identify medical service inpatients who underwent a thoracentesis during the study period. NMH clinicians must place an EHR order for procedure kits, consults, and laboratory analysis of thoracentesis fluid. We developed a real‐time query of NMH's EHR that identified all patients with electronic orders for thoracenteses and monitored this daily.

Physician Surveys

After each thoracentesis, we surveyed the PGY‐2 or PGY‐3 resident or hospitalist caring for the patient about the procedure. A research coordinator, blind to whether the resident received SBML, performed the surveys face‐to‐face on Monday to Friday during normal business hours. Residents were not considered SBML‐trained until they met or exceeded the MPS on the simulated skills checklist at post‐test. Surveys occurred on Monday for procedures performed on Friday evening through Sunday. Survey questions asked physicians about who performed the procedure, their procedural self‐confidence, and total number of thoracenteses performed in their career. For referred procedures, physicians were asked about reasons for referral including lack of confidence, work hour restrictions (residents only), and low reimbursement rates.[10] There was also an option to add other reasons.

Measurement

The thoracentesis skills checklist documented all required steps for an evidence‐based thoracentesis. Each task received equal weight (0 = done incorrectly/not done, 1 = done correctly).[9] For physician surveys, self‐confidence about performing the procedure was rated on a scale of 0 = not confident to 100 = very confident. Reasons for referral were scored on a Likert scale 1 to 5 (1 = not at all important, 5 = very important). Other reasons for referral were categorized.

Statistical Analysis

The clinical skills pre‐ and post‐test checklist scores were compared using a Wilcoxon matched pairs rank test. Physician survey data were compared between different procedure performers using the 2 test, independent t test, analysis of variance (ANOVA), or Kruskal‐Wallis test depending on data properties. Referral patterns measured by the Likert scale were averaged, and differences between physician groups were evaluated using ANOVA. Counts of other reasons for referral were compared using the 2 test. We performed all statistical analyses using IBM SPSS Statistics version 23 (IBM Corp., Armonk, NY).

RESULTS

Thoracentesis Clinical Skills

One hundred twelve (70%) residents were randomized to SBML, and all completed the protocol. Median pretest scores were 57.6% (interquartile range [IQR] 43.376.9), and final post‐test mastery scores were 96.2 (IQR 96.2100.0; P < 0.001). Twenty‐three residents (21.0%) failed to meet the MPS at initial post‐test, but met the MPS on retest after <1 hour of additional training.

Physician Surveys

The EHR query identified 474 procedures eligible for physician surveys. One hundred twenty‐two residents and 51 hospitalist physicians completed surveys for 472 procedures (99.6%); 182 patients by traditionally trained residents, 145 by SBML‐trained residents, and 145 by hospitalist physicians. As shown in Table 1, 413 (88%) of all procedures were referred to another service. Traditionally trained residents were more likely to refer to IR compared to SBML‐trained residents or hospitalist physicians. SBML‐trained residents were more likely to perform bedside procedures, whereas hospitalist physicians were most likely to refer to pulmonary medicine. SBML‐trained residents were most confident in their procedural skills, despite hospitalist physicians performing more actual procedures.

| Traditionally Trained Resident Surveys, n = 182 | SBML‐Trained Resident Surveys, n = 145 | Hospitalist Physician Surveys, n = 145 | P Value | |

|---|---|---|---|---|

| ||||

| Bedside procedures, no. (%) | 26 (14.3%) | 32 (22.1%) | 1 (0.7%) | <0.001 |

| IR procedures, no. (%) | 119 (65.4%) | 74 (51.0%) | 82 (56.6%) | 0.029 |

| Pulmonary procedures, no. (%) | 37 (20.3%) | 39 (26.9%) | 62 (42.8%) | <0.001 |

| Procedure self‐confidence, mean (SD)* | 43.6 (28.66) | 68.2 (25.17) | 55.7 (31.17) | <0.001 |

| Experience performing actual procedures, median (IQR) | 1 (13) | 2 (13.5) | 10 (425) | <0.001 |

Traditionally trained residents were most likely to rate low confidence as reasons why they referred thoracenteses (Table 2). Hospitalist physicians were more likely to cite lack of time to perform the procedure themselves. Other reasons were different across groups. SBML‐trained residents were more likely to refer because of attending preference, whereas traditionally trained residents were mostly like to refer because of high risk/technically difficult cases.

| Traditionally Trained Residents, n = 156 | SBML‐Trained Residents, n = 113 | Hospitalist Physicians, n = 144 | P Value | |

|---|---|---|---|---|

| ||||

| Lack of confidence to perform procedure, mean (SD)* | 3.46 (1.32) | 2.52 (1.45) | 2.89 (1.60) | <0.001 |

| Work hour restrictions, mean (SD) * | 2.05 (1.37) | 1.50 (1.11) | n/a | 0.001 |

| Low reimbursement, mean (SD)* | 1.02 (0.12) | 1.0 (0) | 1.22 (0.69) | <0.001 |

| Other reasons for referral, no. (%) | ||||

| Attending preference | 8 (5.1%) | 11 (9.7%) | 3 (2.1%) | 0.025 |

| Don't know how | 6 (3.8%) | 0 | 0 | 0.007 |

| Failed bedside | 0 | 2 (1.8%) | 0 | 0.07 |

| High risk/technically difficult case | 24 (15.4%) | 12 (10.6%) | 5 (3.5%) | 0.003 |

| IR or pulmonary patient | 5 (3.2%) | 2 (1.8%) | 4 (2.8%) | 0.77 |

| Other IR procedure taking place | 11 (7.1%) | 9 (8.0%) | 4 (2.8%) | 0.13 |

| Patient preference | 2 (1.3%) | 7 (6.2%) | 2 (3.5%) | 0.024 |

| Time | 9 (5.8%) | 7 (6.2%) | 29 (20.1%) | <0.001 |

DISCUSSION

This study confirms earlier research showing that thoracentesis SBML improves residents' clinical skills, but is the first to use a randomized study design.[9] Use of the mastery model in health professions education ensures that all learners are competent to provide patient care including performing invasive procedures. Such rigorous education yields downstream translational outcomes including safety profiles comparable to experts.[1, 6]

This study also shows that SBML‐trained residents displayed higher self‐confidence and performed significantly more bedside procedures than traditionally trained residents and more experienced hospitalist physicians. Although the Society of Hospital Medicine considers thoracentesis skills a core competency for hospitalist physicians,[11] we speculate that some hospitalist physicians had not performed a thoracentesis in years. A recent national survey showed that only 44% of hospitalist physicians performed at least 1 thoracentesis within the past year.[10] Research also shows a shift in medical culture to refer procedures to specialty services, such as IR, by over 900% in the past 2 decades.[4] Our results provide novel information about procedure referrals because we show that SBML provides translational outcomes by improving skills and self‐confidence that influence referral patterns. SBML‐trained residents performed almost a quarter of procedures at the bedside. Although this only represents an 8% absolute difference in bedside procedures compared to traditionally trained residents, if a large number of residents are trained using SBML this results in a meaningful number of procedures shifted to the patient bedside. According to University HealthSystem Consortium data, in US teaching hospitals, approximately 35,325 thoracenteses are performed yearly.[1] Shifting even 8% of these procedures to the bedside would result in significant clinical benefit and cost savings. Reduced referrals increase additional bedside procedures that are safe, cost‐effective, and highly satisfying to patients.[1, 12, 13] Further study is required to determine the impact on referral patterns after providing SMBL training to attending physicians.

Our study also provides information about the rationale for procedure referrals. Earlier work speculates that financial incentive, training and time may explain high procedure referral rates.[10] One report on IM residents noted an 87% IR referral rate for thoracentesis, and confirmed that both training and time were major reasons.[14] Hospitalist physicians reported lack of time as the major factor leading to procedural referrals, which is problematic because bedside procedures yield similar clinical outcomes at lower costs.[1, 12] Attending preference also prevented 11 additional bedside procedures in the SBML‐trained group. Schedule adjustments and SBML training of hospitalist physicians should be considered, because bundled payments in the Affordable Care Act may favor shifting to the higher‐value approach of bedside thoracenteses.[15]

Our study has several limitations. First, we only performed surveys at 1 institution and the results may not be generalizable. Second, we relied on an electronic query to alert us to thoracenteses. Our query may have missed procedures that were unsuccessful or did not have EHR orders entered. Third, physicians may have been surveyed more than once for different or the same patient(s), but opinions may have shifted over time. Fourth, some items such as time needed to be written in the survey and were not specifically asked. This could have resulted in under‐reporting. Finally, we did not assess the clinical outcomes of thoracenteses in this study, although earlier work shows that residents who complete SBML have safety outcomes similar to IR.[1, 6]

In summary, IM residents who complete thoracentesis SBML demonstrate improved clinical skills and are more likely to perform bedside procedures. In an era of bundled payments, rethinking current care models to promote cost‐effective care is necessary. We believe providing additional education, training, and support to hospitalist physicians to promote bedside procedures is a promising strategy that warrants further study.

Acknowledgements

The authors acknowledge Drs. Douglas Vaughan and Kevin O'Leary for their support and encouragement of this work. The authors also thank the internal medicine residents at Northwestern for their dedication to patient care.

Disclosures: This project was supported by grant R18HS021202‐01 from the Agency for Healthcare Research and Quality (AHRQ). AHRQ had no role in the preparation, review, or approval of the manuscript. Trial Registration:

- Thoracentesis procedures at university hospitals: comparing outcomes by specialty. Jt Comm J Qual Patient Saf. 2015;42(1):34–40.

- American Board of Internal Medicine. Internal medicine policies. Available at: http://www.abim.org/certification/policies/internal‐medicine‐subspecialty‐policies/internal‐medicine.aspx. Accessed March 9, 2016.

- Pneumothorax following thoracentesis: a systematic review and meta‐analysis. Arch Intern Med. 2010;170(4):332–339.

- National fluid shifts: fifteen‐year trends in paracentesis and thoracentesis procedures. J Am Coll Radiol. 2010;7(11):859–864.

- Cost savings of performing paracentesis procedures at the bedside after simulation‐based education. Simul Healthc. 2014;9(5):312–318.

- Clinical outcomes after bedside and interventional radiology paracentesis procedures. Am J Med. 2013;126(4):349–356.

- Simulation technology for health care professional skills training and assessment. JAMA. 1999;282(9):861–866.

- Cost savings from reduced catheter‐related bloodstream infection after simulation‐based education for residents in a medical intensive care unit. Simul Healthc. 2010;5(2):98–102.

- Mastery learning of thoracentesis skills by internal medicine residents using simulation technology and deliberate practice. J Hosp Med. 2008;3(1):48–54.

- Procedures performed by hospitalist and non‐hospitalist general internists. J Gen Intern Med. 2010;25(5):448–452.

- Core competencies in hospital medicine: development and methodology. J Hosp Med. 2006;1(suppl 1):48–56.

- Specialties performing paracentesis procedures at university hospitals: implications for training and certification. J Hosp Med. 2014;9(3):162–168.

- Are we providing patient‐centered care? Preferences about paracentesis and thoracentesis procedures. Patient Exp J. 2014;1(2):94–103. Available at: http://pxjournal.org/cgi/viewcontent.cgi?article=1024

Specialties Performing Paracentesis

Cirrhosis affects up to 3% of the population and is 1 of the 10 most common causes of death in the United States.[1, 2, 3, 4] Paracentesis procedures are frequently performed in patients with liver disease and ascites for diagnostic and/or therapeutic purposes. These procedures can be performed safely by trained clinicians at the bedside or referred to interventional radiology (IR).[2, 3, 4]

National practice patterns show that paracentesis procedures are increasingly referred to IR rather than performed at the bedside by internal medicine or gastroenterology clinicians.[5, 6, 7] In fact, a recent study of Medicare beneficiaries showed that inpatient and outpatient paracentesis procedures performed by radiologists increased by 964% from 1993 to 2008.[7] Reasons for the decline in bedside procedures include the increased availability of IR, lack of sufficient reimbursement, and the time required to perform paracentesis procedures.[5, 6, 7, 8] Surveys of internal medicine and family medicine residents and gastroenterology fellows show trainees often lack the confidence and experience needed to perform the procedure safely.[9, 10, 11] Additionally, many clinicians do not have expertise with ultrasound use and may not have access to necessary equipment.

Inconsistent certification requirements may also affect physicians' competence and experience in performing paracentesis procedures. Internal medicine residents are no longer required by the American Board of Internal Medicine (ABIM) to demonstrate competency in procedures such as paracentesis for certification.[12] However, the Accreditation Council for Graduate Medical Education (ACGME) requirements state that internal medicine programs must offer residents the opportunity to demonstrate competence in the performance of procedures such as paracentesis, thoracentesis, and central venous catheter insertion.[13] The American Board of Family Medicine (ABFM) does not outline specific procedural competence for initial certification.[14] The ACGME states that family medicine residents must receive training to perform those clinical procedures required for their future practices but allows each program to determine which procedures to require.[15] Because of these inconsistent requirements, practicing hospitalists likely have variable training and competence in bedside procedures such as paracentesis.

We previously showed that internal medicine residents rotating on the hepatology service of an academic medical center performed 59% of paracentesis procedures at the bedside.[16] These findings contrast with national data showing that 74% of paracentesis procedures performed on Medicare beneficiaries were performed by radiologists.[7] Practice patterns at university hospitals may not be reflected in these data because the study was limited to Medicare beneficiaries and included ambulatory patients.[7] In addition to uncertainty about who is performing this procedure in inpatient settings, little is known about the effect of specialty on postparacentesis clinical outcomes.[16, 17]

The current study had 3 aims: (1) evaluate which clinical specialties perform paracentesis procedures at university hospitals; (2) model patient characteristics associated with procedures performed at the bedside versus those referred to IR; and (3) among patients with a similar likelihood of IR referral, evaluate length of stay (LOS) and hospital costs of patients undergoing procedures performed by different specialties.

METHODS

We performed an observational administrative database review of patients who underwent paracentesis procedures in hospitals participating in the University HealthSystem Consortium (UHC) Clinical Database from January 2010 through December 2012. UHC is an alliance of 120 nonprofit academic medical centers and their 290 affiliated hospitals. UHC maintains databases containing clinical, operational, financial, and patient safety data from affiliated hospitals. Using the UHC database, we described the characteristics of all patients who underwent paracentesis procedures by clinical specialty performing the procedure. We then modeled the effects of patient characteristics on decision‐making about IR referral. Finally, among patients with a homogeneous predicted probability of IR referral, we compared LOS and direct costs by specialty performing the procedure. The Northwestern University institutional review board approved this study.

Procedure

We queried the UHC database for all patients over the age of 18 years who underwent paracentesis procedures (International Classification of Diseases, Ninth Revision [ICD‐9] procedure code 54.91) and had at least 1 diagnosis code of liver disease (571.x). We excluded patients admitted to obstetrics. The query included patient and clinical characteristics such as admission, discharge, and procedure dates; age; gender; procedure provider specialty; and intensive care unit (ICU) stay. We also obtained all ICD‐9 codes associated with the admission, including obesity, severe liver disease, coagulation disorders, blood loss anemia, hyponatremia, hypotension, thrombocytopenia, liver transplant before or during the admission, awaiting liver transplant, and complications of liver transplant. We used ICD‐9 codes to calculate patients' Charlson scores[18, 19] to assess severity of illness on admission.
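A Charlson score is a weighted count of comorbidity categories detected from diagnosis codes. The sketch below illustrates the idea for only 8 of the 17 Charlson categories. Its ICD‐9 prefixes loosely follow the widely used Deyo adaptation, and it is not the mapping used by the authors or by UHC; the names `CATEGORIES`, `SUPERSEDES`, and `charlson_score` are hypothetical. It also shows one detail that matters in a liver disease cohort: when severe liver disease is coded, the milder liver category is not counted as well.

```python
# Hypothetical, abbreviated Charlson comorbidity scorer for dotted ICD-9
# diagnosis codes (e.g., "571.5"). Prefixes loosely follow the Deyo
# adaptation; only 8 of the 17 Charlson categories are included.

CATEGORIES = {
    # category: (weight, ICD-9 code prefixes)
    "congestive_heart_failure": (1, ("428",)),
    "chronic_pulmonary_disease": (1, ("490", "491", "492", "493", "494", "495", "496")),
    "mild_liver_disease": (1, ("571.2", "571.4", "571.5", "571.6")),
    "diabetes": (1, ("250.0", "250.1", "250.2", "250.3", "250.7")),
    "diabetes_with_complications": (2, ("250.4", "250.5", "250.6")),
    "renal_disease": (2, ("582", "583", "585", "586", "588")),
    "severe_liver_disease": (3, ("456.0", "456.1", "456.2", "572.2", "572.3",
                                 "572.4", "572.5", "572.6", "572.7", "572.8")),
    "metastatic_solid_tumor": (6, ("196", "197", "198", "199")),
}

# A more severe form replaces, rather than adds to, its milder form.
SUPERSEDES = {
    "severe_liver_disease": "mild_liver_disease",
    "diabetes_with_complications": "diabetes",
}


def charlson_score(icd9_codes):
    """Return the abbreviated Charlson score for one admission's diagnosis codes."""
    present = set()
    for code in icd9_codes:
        for category, (_, prefixes) in CATEGORIES.items():
            if code.startswith(prefixes):  # str.startswith accepts a tuple
                present.add(category)
    for severe, mild in SUPERSEDES.items():
        if severe in present:
            present.discard(mild)
    return sum(CATEGORIES[category][0] for category in present)


# Cirrhosis with esophageal varices, heart failure, and end-stage renal disease:
# severe liver disease (3) + heart failure (1) + renal disease (2).
print(charlson_score(["571.5", "456.20", "428.0", "585.6"]))  # 6
```

A production implementation would cover all categories and handle undotted codes.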

LOS and total direct hospital costs were compared among patients with a paracentesis performed by a single clinical group and among patients with a similar predicted probability of IR referral. UHC generates direct cost estimates by applying Medicare Cost Report ratios of cost to charges with the labor cost further adjusted by the respective area wage index. Hospital costs were not available from 8.3% of UHC hospitals. We therefore based cost estimates on nonmissing data.
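The cost sentence above combines two steps. First, charges are converted to costs with a cost‐to‐charge ratio. Second, the labor portion of that cost is adjusted by the area wage index. The sketch below is a hypothetical illustration of those steps, not UHC's actual formula. The labor share (0.6) is an assumed value. The direction of the wage adjustment is also an assumption: the labor portion is divided by the index, which normalizes it to a national‐average wage level.

```python
def estimated_direct_cost(charges, cost_to_charge_ratio, wage_index, labor_share=0.6):
    """Hypothetical sketch of a charge-to-cost conversion with wage adjustment.

    charges: billed charges for the admission (dollars)
    cost_to_charge_ratio: hospital ratio from the Medicare Cost Report
    wage_index: area wage index (1.0 = national average)
    labor_share: assumed fraction of cost that is labor (illustrative only)
    """
    if wage_index <= 0:
        raise ValueError("wage_index must be positive")
    if not 0.0 <= labor_share <= 1.0:
        raise ValueError("labor_share must be between 0 and 1")
    cost = charges * cost_to_charge_ratio
    labor = cost * labor_share
    # Assumed direction: divide the labor portion by the wage index so that
    # high-wage areas are not credited with higher resource use.
    return labor / wage_index + (cost - labor)


# $50,000 in charges at a 0.30 ratio is $15,000 before wage adjustment;
# with wage index 1.2 the assumed labor portion shrinks from $9,000 to $7,500.
print(round(estimated_direct_cost(50_000, 0.30, 1.2)))  # 13500
```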

Paracentesis provider specialties were divided into 6 general categories: (1) IR (interventional and diagnostic radiology); (2) medicine (family medicine, general medicine, and hospital medicine); (3) subspecialty medicine (infectious disease, cardiology, nephrology, hematology/oncology, endocrinology, pulmonary, and geriatrics); (4) gastroenterology/hepatology (gastroenterology, hepatology, and transplant medicine); (5) general surgery (general surgery and transplant surgery); and (6) all other (included unclassified specialties). We present patient characteristics categorized by these specialty groups and for admissions in which multiple specialties performed procedures.
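The 6‐way grouping is a many‐to‐one lookup from provider specialty to analysis group. The sketch below encodes the groups listed above and sends anything unrecognized or missing to "all other", which mirrors the sixth category. The exact label strings, and the names `SPECIALTY_GROUPS` and `specialty_group`, are assumptions; the source does not show UHC's specialty codes.

```python
# Hypothetical lookup collapsing provider specialty labels into the six
# analysis groups described in the text. Label strings are assumptions.
SPECIALTY_GROUPS = {
    "IR": {"interventional radiology", "diagnostic radiology"},
    "medicine": {"family medicine", "general medicine", "hospital medicine"},
    "subspecialty medicine": {
        "infectious disease", "cardiology", "nephrology",
        "hematology/oncology", "endocrinology", "pulmonary", "geriatrics",
    },
    "gastroenterology/hepatology": {"gastroenterology", "hepatology", "transplant medicine"},
    "general surgery": {"general surgery", "transplant surgery"},
}

# Invert to label -> group for constant-time lookup.
LABEL_TO_GROUP = {
    label: group for group, labels in SPECIALTY_GROUPS.items() for label in labels
}


def specialty_group(provider_specialty):
    """Map a provider specialty label to its analysis group.

    Unrecognized or missing labels fall into "all other", which also holds
    unclassified specialties.
    """
    if not provider_specialty:
        return "all other"
    return LABEL_TO_GROUP.get(provider_specialty.strip().lower(), "all other")


print(specialty_group("Transplant Surgery"))  # general surgery
```

Normalizing case and whitespace before the lookup keeps minor coding inconsistencies from silently landing in "all other".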

Study Design

To analyze an individual patient's likelihood of IR referral, we needed to restrict our sample to discharges where only 1 clinical specialty performed a paracentesis. Therefore, we excluded hybrid discharges with procedures performed by more than 1 specialty in a single admission as well as discharges with procedures performed by all other specialties. To compare LOS and direct cost outcomes, and to minimize selection bias among exclusively IR‐treated patients, we excluded hospitals without procedures done by both IR and medicine.
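The restriction rules above can be applied to procedure‐level records in 3 passes. The sketch assumes each procedure is a hypothetical `(hospital_id, discharge_id, specialty_group)` tuple. It also assumes that a hospital's IR and medicine activity is judged over all of its procedures, including hybrid discharges; the text does not specify this detail.

```python
from collections import defaultdict


def analysis_sample(procedures):
    """Return discharge IDs eligible for the single-specialty analysis.

    `procedures` is an iterable of (hospital_id, discharge_id, specialty_group)
    tuples, one per paracentesis. The record layout is hypothetical.
    """
    groups_by_discharge = defaultdict(set)
    hospital_of = {}
    groups_by_hospital = defaultdict(set)
    for hospital_id, discharge_id, group in procedures:
        groups_by_discharge[discharge_id].add(group)
        hospital_of[discharge_id] = hospital_id
        groups_by_hospital[hospital_id].add(group)

    # Pass 1: keep only hospitals where both IR and medicine performed procedures.
    eligible_hospitals = {
        h for h, groups in groups_by_hospital.items() if {"IR", "medicine"} <= groups
    }

    sample = set()
    for discharge_id, groups in groups_by_discharge.items():
        if hospital_of[discharge_id] not in eligible_hospitals:
            continue
        # Pass 2: drop hybrid discharges with more than one specialty group.
        if len(groups) != 1:
            continue
        # Pass 3: drop discharges whose single group is "all other".
        if "all other" in groups:
            continue
        sample.add(discharge_id)
    return sample


toy_procedures = [
    ("H1", "D1", "IR"),
    ("H1", "D2", "medicine"), ("H1", "D2", "medicine"),  # 2 procedures, 1 group
    ("H1", "D3", "IR"), ("H1", "D3", "medicine"),        # hybrid discharge
    ("H1", "D4", "all other"),
    ("H2", "D5", "IR"),                                   # hospital lacks medicine
]
print(sorted(analysis_sample(toy_procedures)))  # ['D1', 'D2']
```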

We modeled referral to IR as a function of patients' demographic and clinical variables, which we believed would affect the probability of referral. We then examined the IR referral model predicted probabilities (propensity score).[20] Finally, we examined mean differences in LOS and direct costs among discharges with a single clinical specialty group, while using the predicted probability of referral as a filter to compare these outcomes by specialty. We further tested specialty differences in LOS and direct costs controlling for demographic and clinical variables.
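Using the predicted probability "as a filter" means comparing outcomes only among discharges whose predicted probability of IR referral falls in a narrow band. Within that band, patients looked similar to the referral model. The sketch below shows this for 1 band. The dictionary keys are hypothetical, and the records are toy values, not study data. Restricting to a band reduces selection bias from measured covariates but cannot address unmeasured ones.

```python
from collections import defaultdict
from statistics import mean


def mean_outcome_by_specialty(discharges, p_low, p_high, outcome="los"):
    """Mean outcome by specialty among discharges with p_low <= p_ir < p_high.

    Each discharge is a dict with hypothetical keys "specialty", "p_ir"
    (model-predicted probability of IR referral), "los", and "cost".
    """
    values = defaultdict(list)
    for d in discharges:
        if p_low <= d["p_ir"] < p_high:
            values[d["specialty"]].append(d[outcome])
    return {specialty: mean(v) for specialty, v in values.items()}


# Toy records for illustration only (not study data).
toy_discharges = [
    {"specialty": "IR", "p_ir": 0.45, "los": 4, "cost": 9_000},
    {"specialty": "IR", "p_ir": 0.50, "los": 6, "cost": 11_000},
    {"specialty": "medicine", "p_ir": 0.48, "los": 5, "cost": 10_000},
    {"specialty": "medicine", "p_ir": 0.90, "los": 12, "cost": 30_000},  # outside band
]
print(mean_outcome_by_specialty(toy_discharges, 0.40, 0.60))  # {'IR': 5, 'medicine': 5}
```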

Statistical Analysis

To test the significance of differences between demographic and clinical characteristics of patients across specialties, we used χ² tests for categorical variables and analysis of variance or the Kruskal‐Wallis rank test for continuous variables. Random effects logistic regression, which adjusts standard errors for clustering by hospital, was used to model the likelihood of referral to IR. Independent variables included patient age, gender, obesity, coagulation disorders, blood loss anemia, hyponatremia, hypotension, thrombocytopenia, liver transplant before hospitalization, liver transplant during hospitalization, awaiting transplant, complications of liver transplant, ICU stay, Charlson score, and number of paracentesis procedures performed during the admission. Predicted probabilities derived from this IR referral model were used to investigate selection bias in our subsequent analyses of LOS and costs.[20]
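A logistic model's predicted probability (here, the propensity for IR referral) is the inverse logit of a linear predictor. In a random‐intercept model, that predictor also includes a hospital‐specific intercept. The sketch takes its coefficients from the odds ratios reported in the Results (β = ln OR). The intercept, the hospital effect, and the choice to include only 4 covariates are hypothetical, so the probabilities are illustrative only.

```python
import math

# Log-odds coefficients derived from the odds ratios reported in the Results
# (beta = ln OR). The intercept and hospital effect below are hypothetical.
LOG_ODDS = {
    "obese": math.log(1.25),
    "prior_liver_transplant": math.log(2.03),
    "male": math.log(0.89),
    "icu_stay": math.log(0.39),
}


def predicted_ir_probability(intercept, coefs, covariates, hospital_effect=0.0):
    """Inverse logit of the linear predictor of a random-intercept logistic model.

    hospital_effect is the hospital's random intercept (0.0 = average hospital).
    Covariates missing from `coefs` contribute nothing.
    """
    eta = intercept + hospital_effect
    for name, value in covariates.items():
        eta += coefs.get(name, 0.0) * value
    return 1.0 / (1.0 + math.exp(-eta))


baseline = math.log(1 / 3)  # hypothetical baseline odds of IR referral, 1:3
print(round(predicted_ir_probability(baseline, LOG_ODDS, {}), 3))                # 0.25
print(round(predicted_ir_probability(baseline, LOG_ODDS, {"obese": 1}), 3))      # 0.294
print(round(predicted_ir_probability(baseline, LOG_ODDS, {"icu_stay": 1}), 3))   # 0.115
```

Multiplying the baseline odds by an odds ratio and converting back to a probability gives the same result, for example (1/3 × 1.25) / (1 + 1/3 × 1.25) ≈ 0.294.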

We used random effects multiple linear regression to test the association of procedure specialty with hospital LOS and total direct costs, controlling for the same independent variables listed above. Analyses were conducted using both actual LOS in days and Medicare costs. We also log‐transformed LOS and costs to account for rightward skew. We present only untransformed LOS and cost results because the 2 sets of results were virtually identical. We used SAS version 9 (SAS Institute Inc., Cary, NC) to extract data from the UHC Clinical Database. We performed all statistical analyses using Stata version 12 (StataCorp LP, College Station, TX).
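LOS and costs typically have a long right tail because a few very long or expensive admissions pull the mean upward. The log transformation mentioned above compresses that tail. The sketch below measures this with moment‐based skewness on made‐up LOS values; the values are illustrative and are not study data.

```python
import math
from statistics import mean, pstdev


def skewness(values):
    """Moment-based (population) skewness: the mean of cubed z-scores."""
    m = mean(values)
    s = pstdev(values)
    return sum(((v - m) / s) ** 3 for v in values) / len(values)


# Made-up LOS values (days) with a long right tail, for illustration only.
los_days = [2, 3, 3, 4, 4, 5, 5, 6, 8, 12, 20, 35]
log_los = [math.log(d) for d in los_days]

print(f"skewness of LOS:      {skewness(los_days):.2f}")
print(f"skewness of log(LOS): {skewness(log_los):.2f}")
```

On these values, skewness drops from roughly 2 to under 1 after the transformation.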

RESULTS

Procedure and Discharge Level Results

There were 97,577 paracentesis procedures performed during 70,862 hospital admissions in 204 UHC hospitals during the study period. Table 1 shows the specialty group that performed each procedure. The all other category consisted of 17,558 procedures performed by other or unclassified specialty groups, including 9,434 with specialty unknown. Twenty‐nine percent of procedures were performed by IR versus 27% by medicine, 11% by gastroenterology/hepatology, and 11% by subspecialty medicine.

| Specialty Group | Specialty | No. of Procedures | % of Procedures |
|---|---|---|---|
| Interventional radiology | Total | 28,414 | 29.1 |
| Medicine | Total | 26,031 | 26.7 |
| | Family medicine | 1,026 | 1.1 |
| | General medicine | 21,787 | 22.3 |
| | Hospitalist | 3,218 | 3.3 |
| Subspecialty medicine | Total | 10,558 | 10.8 |
| | Infectious disease | 848 | 0.9 |
| | Nephrology | 615 | 0.6 |
| | Cardiology | 991 | 1.0 |
| | Hematology oncology | 795 | 0.8 |
| | Endocrinology | 359 | 0.4 |
| | Pulmonology | 6,605 | 6.8 |
| | Geriatrics | 345 | 0.4 |
| Gastroenterology/hepatology | Total | 11,143 | 11.4 |
| | Transplant medicine | 99 | 0.1 |
| | Hepatology | 874 | 0.9 |
| | Gastroenterology | 10,170 | 10.4 |
| General surgery | Total | 3,873 | 4.0 |
| | Transplant surgery | 2,146 | 2.2 |
| | General surgery | 1,727 | 1.8 |
| All other | Total | 17,558 | 18.0 |
| | Specialty unknown | 9,434 | 9.7 |
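The group rows in Table 1 should sum to the 97,577 procedures reported in the text, and each subgroup list should sum to its group total. Each percentage is a group's count divided by that overall total. The short check below reproduces those relationships using only counts copied from Table 1; the names `TABLE1` and `check_table` are ours.

```python
# Group totals and subgroup counts copied from Table 1.
TABLE1 = {
    "Interventional radiology": (28_414, []),
    "Medicine": (26_031, [1_026, 21_787, 3_218]),
    "Subspecialty medicine": (10_558, [848, 615, 991, 795, 359, 6_605, 345]),
    "Gastroenterology/hepatology": (11_143, [99, 874, 10_170]),
    "General surgery": (3_873, [2_146, 1_727]),
    "All other": (17_558, []),  # includes 9,434 with specialty unknown
}


def check_table(table):
    """Verify subgroup sums, then return (total, percent share by group)."""
    for group, (count, parts) in table.items():
        if parts and sum(parts) != count:
            raise ValueError(f"{group}: subgroups sum to {sum(parts)}, not {count}")
    total = sum(count for count, _ in table.values())
    shares = {group: round(100 * count / total, 1) for group, (count, _) in table.items()}
    return total, shares


total, shares = check_table(TABLE1)
print(total)                               # 97577
print(shares["Interventional radiology"])  # 29.1
```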

Table 2 presents patient characteristics for 70,862 hospital discharges with paracentesis procedures grouped by whether single or multiple specialties performed procedures. Patient characteristics were significantly different across specialty groups. Medicine, subspecialty medicine, and gastroenterology/hepatology patients were younger, more likely to be male, and more likely to have severe liver disease, coagulation disorders, hypotension, and hyponatremia than IR patients.

| Characteristic | All Discharges, N=70,862 | Interventional Radiology, n=9,348 | Medicine, n=13,789 | Subspecialty Medicine, n=5,085 | Gastroenterology/Hepatology, n=6,664 | General Surgery, n=1,891 | All Other, n=7,912 | Discharges With Multiple Specialties, n=26,173 |
|---|---|---|---|---|---|---|---|---|
| Age group, y (%) | | | | | | | | |
| 18–49 | 25.4 | 22.5 | 27.6 | 24.9 | 23.5 | 20.8 | 25.5 | 26.1 |
| 50–59 | 39.8 | 39.8 | 40.9 | 39.4 | 41.5 | 40.3 | 40.0 | 38.7 |
| 60–69 | 24.7 | 24.9 | 21.6 | 24.7 | 26.5 | 30.0 | 23.6 | 25.8 |
| 70+ | 10.1 | 12.9 | 9.9 | 11.1 | 8.4 | 8.9 | 11.0 | 9.4 |
| Male (%) | 65.5 | 64.2 | 67.6 | 67.5 | 65.7 | 66.6 | 65.7 | 64.2 |
| Severe liver disease (%)ᵃ | 73.7 | 65.3 | 67.8 | 71.0 | 75.3 | 66.6 | 67.6 | 82.1 |
| Obesity (BMI 40+) (%) | 6.3 | 6.1 | 5.3 | 5.7 | 5.1 | 5.8 | 5.2 | 7.6 |
| Any intensive care unit stay (%) | 31.0 | 10.9 | 16.8 | 50.5 | 16.9 | 36.7 | 22.3 | 47.8 |
| Coagulation disorders (%) | 24.3 | 14.8 | 20.2 | 29.9 | 16.1 | 19.0 | 17.8 | 33.1 |
| Blood loss anemia (%) | 3.4 | 1.3 | 2.8 | 2.7 | 2.7 | 1.9 | 2.1 | 5.2 |
| Hyponatremia (%) | 29.9 | 27.1 | 29.2 | 28.9 | 28.0 | 26.6 | 27.3 | 33.1 |
| Hypotension (%) | 9.8 | 7.0 | 8.0 | 11.0 | 7.7 | 10.5 | 8.1 | 12.4 |
| Thrombocytopenia (%) | 29.6 | 24.6 | 28.3 | 32.5 | 22.1 | 21.5 | 24.0 | 35.8 |
| Complication of transplant (%) | 3.3 | 2.1 | 1.1 | 2.4 | 4.0 | 10.3 | 2.7 | 4.7 |
| Awaiting liver transplant (%) | 7.6 | 6.4 | 4.0 | 5.4 | 12.8 | 16.0 | 7.8 | 8.2 |
| Prior liver transplant (%) | 0.5 | 0.8 | 0.3 | 0.3 | 0.7 | 0.7 | 0.4 | 0.6 |
| Liver transplant procedure (%) | 2.7 | 0.0 | 0.0 | 0.3 | 0.4 | 15.6 | 1.6 | 5.6 |
| Mean Charlson score (SD) | 4.51 (2.17) | 4.28 (2.26) | 4.16 (2.17) | 4.72 (2.30) | 4.30 (1.98) | 4.26 (2.22) | 4.36 (2.30) | 4.84 (2.07) |
| Mean paracentesis procedures per discharge (SD) | 1.38 (0.88) | 1.21 (0.56) | 1.26 (0.66) | 1.30 (0.76) | 1.31 (0.70) | 1.28 (0.78) | 1.22 (0.61) | 1.58 (1.13) |

IR Referral Model

We first excluded 6030/70,862 discharges (8.5%) from 59 hospitals without both IR and medicine procedures. We then excluded 24,986/70,862 discharges (35.3%) with procedures performed by multiple specialties during the same admission. Finally, we excluded 5555/70,862 discharges (7.8%) with procedure specialty coded as all other. This left an IR referral analysis sample of 34,291 discharges (48.4%), comprising 43,337/97,577 procedures (44.4%), from 145 UHC hospitals in which paracentesis was performed by a single clinical specialty group. Among admissions in which multiple specialties performed paracentesis, 3128 of 26,606 admissions with any IR procedure (11.8%) had the first, second, or third paracentesis ascribed to a different specialty before a subsequent IR procedure.
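
The exclusion steps above can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the counts reported in the text and, as the text does, expresses each percentage relative to all 70,862 discharges; the variable names are illustrative.

```python
TOTAL_DISCHARGES = 70_862
TOTAL_PROCEDURES = 97_577

# (reason, discharges excluded), in the order described in the text
EXCLUSIONS = [
    ("hospitals without both IR and medicine procedures", 6_030),
    ("procedures by multiple specialties in the same admission", 24_986),
    ("procedure specialty coded as all other", 5_555),
]


def pct(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Subtract each exclusion in turn; every percentage uses the full denominator.
remaining = TOTAL_DISCHARGES
for reason, n in EXCLUSIONS:
    remaining -= n
    print(f"Excluded {n:,} ({pct(n, TOTAL_DISCHARGES)}%): {reason}")

print(f"Analysis sample: {remaining:,} discharges ({pct(remaining, TOTAL_DISCHARGES)}%)")
print(f"Procedures retained: 43,337/{TOTAL_PROCEDURES:,} ({pct(43_337, TOTAL_PROCEDURES)}%)")
```

The running total reproduces the 34,291-discharge (48.4%) analysis sample reported above.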

Model results (Table 3) indicate that patients who were obese (odds ratio [OR]: 1.25; 95% confidence interval [CI]: 1.10‐1.43) or had a liver transplant on a prior admission (OR: 2.03; 95% CI: 1.40‐2.95) were more likely to be referred to IR. In contrast, male patients (OR: 0.89; 95% CI: 0.83‐0.95) and patients who required an ICU stay (OR: 0.39; 95% CI: 0.36‐0.43) were less likely to have IR procedures. Other patient factors that reduced the likelihood of IR referral were characteristics associated with greater severity of illness (coagulation disorders, hyponatremia, and hypotension); the association with thrombocytopenia did not reach statistical significance (OR: 0.94; 95% CI: 0.87‐1.01).

| Variable | Odds Ratio | 95% CI, Lower | 95% CI, Upper |
|---|---|---|---|
| Age group, y | | | |
| 18–49 | Reference | | |
| 50–59 | 1.05 | 0.97 | 1.14 |
| 60–69 | 1.12 | 1.02 | 1.22 |
| 70+ | 1.11 | 0.99 | 1.24 |
| Male | 0.89 | 0.83 | 0.95 |
| Obesity, BMI 40+ | 1.25 | 1.10 | 1.43 |
| ICU care | 0.39 | 0.36 | 0.43 |
| Coagulation disorders | 0.68 | 0.63 | 0.75 |
| Blood loss anemia | 0.52 | 0.41 | 0.66 |
| Hyponatremia | 0.85 | 0.80 | 0.92 |
| Hypotension | 0.83 | 0.74 | 0.93 |
| Thrombocytopenia | 0.94 | 0.87 | 1.01 |
| Prior liver transplant | 0.08 | 0.03 | 0.23 |
| Awaiting liver transplant | 0.86 | 0.76 | 0.98 |
| Complication of liver transplant | 1.07 | 0.88 | 1.31 |
| Liver transplant procedure | 2.03 | 1.40 | 2.95 |
| Charlson score | 1.00 | 0.99 | 1.01 |
| Number of paracentesis procedures | 0.90 | 0.85 | 0.95 |
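
Several rows in Table 3 have 95% CIs that include 1 (for example, thrombocytopenia and Charlson score). The sketch below back-calculates an approximate standard error and two-sided p-value from a reported OR and CI. It assumes the intervals are Wald intervals, symmetric on the log-odds scale; that assumption, and the rounding of published values to two decimals, mean the p-values are rough approximations rather than the authors' reported statistics.

```python
import math

Z_975 = 1.959964  # standard normal quantile for a two-sided 95% CI


def log_or_se(lower, upper):
    """Approximate SE of log(OR), assuming a Wald CI symmetric on the log scale."""
    return (math.log(upper) - math.log(lower)) / (2 * Z_975)


def wald_p(odds_ratio, lower, upper):
    """Approximate two-sided p-value for H0: OR = 1 under the same assumption."""
    z = math.log(odds_ratio) / log_or_se(lower, upper)
    return math.erfc(abs(z) / math.sqrt(2))


def excludes_one(lower, upper):
    """True when the 95% CI lies entirely above or entirely below 1."""
    return lower > 1 or upper < 1


# A few rows copied from Table 3: (OR, lower, upper)
TABLE3_ROWS = {
    "Male": (0.89, 0.83, 0.95),
    "ICU care": (0.39, 0.36, 0.43),
    "Thrombocytopenia": (0.94, 0.87, 1.01),
    "Charlson score": (1.00, 0.99, 1.01),
}

for name, (odds, lo, hi) in TABLE3_ROWS.items():
    print(f"{name:18s} OR={odds:.2f}  CI excludes 1: {excludes_one(lo, hi)}  "
          f"approx p={wald_p(odds, lo, hi):.3g}")
```

Under these assumptions, thrombocytopenia gives an approximate p of about 0.1, consistent with its CI spanning 1.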

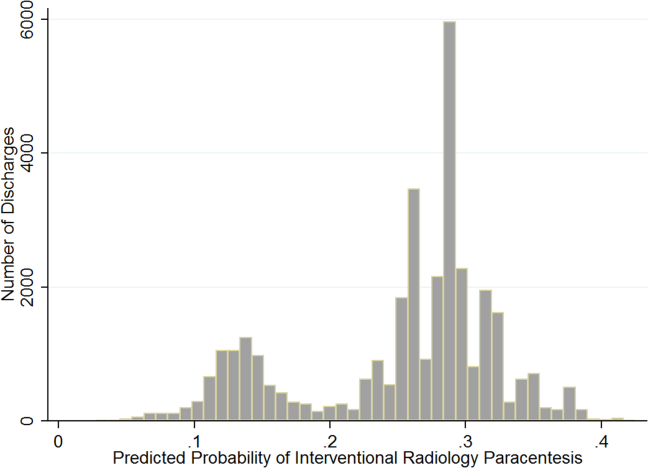

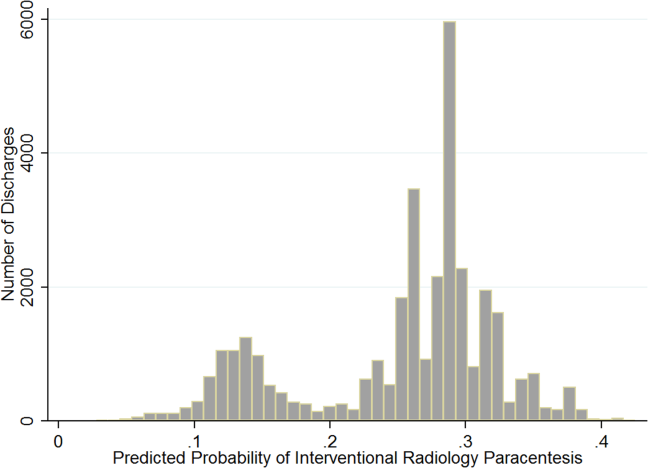

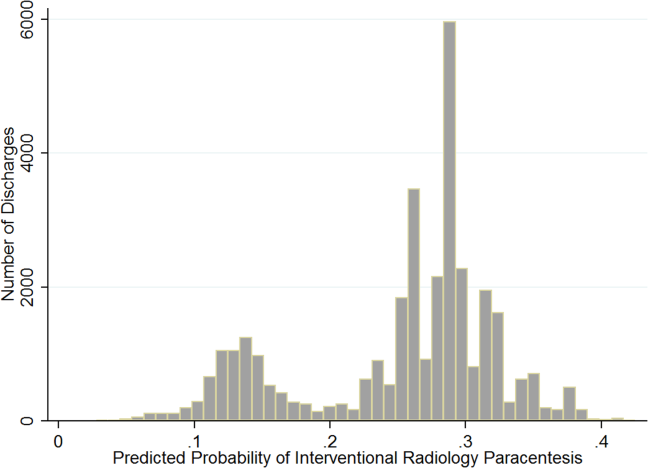

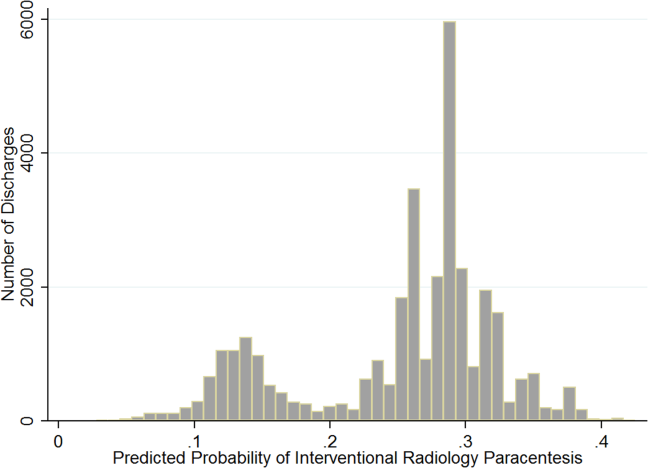

Predicted Probabilities of IR Referral

Figure 1 presents the distribution of predicted probabilities of IR referral. Predicted probabilities were low overall, and very few patients had an equal chance of referral, the standard often used in comparative effectiveness analyses of observational data. Figure 1 indicates that IR referral probabilities clustered in an unusual bimodal distribution. The cluster on the left, centered around a 15% predicted probability of IR referral, consists of discharges whose patient characteristics were associated with a very low chance of an IR paracentesis. We therefore used this distribution to guide comparative analyses of admission outcomes between clinical specialty groups, restricting them to patients with a 20% or greater predicted probability of IR referral.

Post hoc analysis revealed that the strongest driver of a low predicted probability of IR referral was an ICU stay at any time during hospitalization. Among discharges with a predicted probability ≥0.2 (n=26,615), only 87 (0.3%) involved an ICU stay. Among discharges with a predicted probability <0.2 (n=7676), 7055 (91.9%) involved an ICU admission. We therefore used a threshold of ≥0.2 so that LOS and direct cost differences were compared among the most comparable patients.
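
A minimal sketch of the thresholding step, assuming each discharge already carries a fitted probability of IR referral from the propensity model. The `Discharge` record, its field names, and the toy data are hypothetical; the UHC data layout is not described here. The last two lines re-derive the reported ICU proportions from the counts in the text.

```python
from dataclasses import dataclass


@dataclass
class Discharge:
    """Hypothetical per-discharge record; field names are illustrative only."""
    predicted_ir_prob: float  # fitted probability of IR referral from the propensity model
    icu_stay: bool            # any ICU stay during the hospitalization


def split_at_threshold(discharges, threshold=0.2):
    """Return (kept, dropped): kept has probability >= threshold, dropped the rest."""
    kept = [d for d in discharges if d.predicted_ir_prob >= threshold]
    dropped = [d for d in discharges if d.predicted_ir_prob < threshold]
    return kept, dropped


def icu_share(discharges):
    """Proportion of discharges with any ICU stay (0.0 for an empty list)."""
    if not discharges:
        return 0.0
    return sum(d.icu_stay for d in discharges) / len(discharges)


# Toy data for illustration only (not study data).
toy = [
    Discharge(0.05, True), Discharge(0.12, True), Discharge(0.18, False),
    Discharge(0.31, False), Discharge(0.45, False), Discharge(0.52, True),
]
kept, dropped = split_at_threshold(toy)
print(len(kept), round(icu_share(kept), 2))        # 3 0.33
print(len(dropped), round(icu_share(dropped), 2))  # 3 0.67

# Proportions re-derived from the counts reported in the text.
print(round(100 * 87 / 26_615, 1))    # 0.3
print(round(100 * 7_055 / 7_676, 1))  # 91.9
```

Keeping the cutoff as a parameter makes it easy to check how sensitive the comparison sample is to the choice of 0.2.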

LOS and Cost Comparisons by Specialty

Mean LOS and hospital direct costs by specialty for our final analysis sample are shown in Table 4; differences between specialties were significant (P<0.0001). Patients undergoing IR procedures had LOS and costs similar to those of medicine patients, but lower LOS and costs than other clinical specialty groups. Random effects linear regression showed that neither medicine nor gastroenterology/hepatology patients had significantly different LOS from IR patients, whereas subspecialty medicine was associated with 0.89 additional days and general surgery with 1.47 additional days (both P<0.0001; R²=0.10). In the direct cost model, medicine patients were associated with $1308 lower costs and gastroenterology/hepatology patients with $803 lower costs than IR patients (both P=0.0001), whereas subspecialty medicine and general surgery were associated with higher direct costs per discharge of $1886 and $3039, respectively (both P<0.0001; R²=0.19). Older age, obesity, coagulopathy, hyponatremia, hypotension, thrombocytopenia, liver transplant status, ICU care, higher Charlson score, and a higher number of paracentesis procedures were all significantly associated with longer LOS and higher hospital costs in these linear models.

| | All Admissions, n=26,615 | Interventional Radiology, n=7,677 | Medicine, n=10,413 | Medicine Subspecialties, n=2,210 | Gastroenterology/Hepatology, n=5,182 | General Surgery, n=1,133 |
|---|---|---|---|---|---|---|
| | All Admissions, n=24,408 | Interventional Radiology, n=7,265 | Medicine, n=8,965 | Medicine Subspecialties, n=2,064 | Gastroenterology/Hepatology, n=5,031 | General Surgery, n=1,083 |
| Mean length of stay, d (SD) | 5.57 (5.63) | 5.20 (4.72) | 5.59 (5.85) | 6.28 (6.47) | 5.54 (5.31) | 6.67 (8.16) |
| Mean total direct cost, $ (SD)ᵃ | 11,447 (12,247) | 10,975 (9,723) | 10,517 (10,895) | 13,705 (16,591) | 12,000 (11,712) | 15,448 (23,807) |

DISCUSSION

This study showed that internal medicine‐ and family medicine‐trained clinicians perform approximately half of the inpatient paracentesis procedures at university hospitals and their affiliates. This confirms findings from our earlier single‐institution study[16] but contrasts with previously published reports based on Medicare data. The earlier report, which used Medicare claims and included ambulatory procedures, found that primary care physicians and gastroenterologists performed only approximately 10% of US paracentesis procedures in 2008.[7] Our findings suggest that practices differ at university hospitals, where patients with severe liver disease often seek care. Because we used the UHC database, we could not determine whether the clinicians who performed paracentesis procedures in this study were internal medicine or family medicine residents, fellows, or attending physicians. However, findings from our own institution show that internal medicine residents perform the vast majority of bedside paracentesis procedures.[16]

Our findings have implications for certification of internal medicine and family medicine trainees. In 2008, the ABIM removed the requirement that internal medicine residents demonstrate competency in paracentesis.[12] This decision was informed by a lack of standardized methods to determine procedural competency and by published surveys showing that internal medicine and hospitalist physicians rarely performed bedside procedures.[5, 6] Despite this policy change, our findings show that current clinical practice at university hospitals does not reflect national practice patterns or certification requirements, because many internal medicine‐ and family medicine‐trained clinicians still perform paracentesis procedures. This is concerning because internal medicine and family medicine trainees report variable confidence, experience, expertise, and supervision regarding performance of invasive procedures.[9, 10, 21, 22, 23, 24] Earlier research also demonstrates that graduating residents and fellows cannot competently perform common bedside procedures such as thoracentesis, temporary hemodialysis catheter insertion, and lumbar puncture.[25, 26, 27]

The American Association for the Study of Liver Diseases (AASLD) recommends that trained clinicians perform paracentesis procedures.[3, 4] However, the AASLD does not define how this training should occur. Because competency in this procedure is not specifically required by the ABIM, ABFM, or ACGME, a paradox arises: internal medicine and family medicine residents, as well as internal medicine‐trained fellows and faculty, continue to perform paracentesis procedures on highly complex patients but are no longer required to be competent to do so.

In earlier research, we showed that simulation‐based mastery learning (SBML) was an effective method to boost internal medicine residents' paracentesis skills.[28] In SBML, all trainees must meet or exceed a minimum passing score on a simulated procedure before performing it on an actual patient.[29] This approach improves clinical care and outcomes for skills such as central venous catheter insertion[30, 31] and advanced cardiac life support.[32] SBML‐trained residents also performed safe paracentesis procedures, and their patients had shorter hospital LOS, fewer ICU transfers, and fewer blood product transfusions than patients whose procedures were performed in IR.[16] Based on the results of this study, AASLD guidelines regarding training, and our experience with SBML, we recommend that all clinicians complete paracentesis SBML training before performing procedures on patients.

Using our propensity model, we identified patient characteristics associated with IR referral. Patients with a liver transplant were more likely to be cared for in IR. This may be due to a belief that postoperative procedures are anatomically more complex, or because surgical trainees do not commonly perform this procedure. The current study confirms earlier findings that obese and female patients are more likely to be referred to IR.[16] Obese patients are likely referred because paracentesis is technically more difficult in these patients. We have no explanation for why female patients were more likely to be referred to IR; most referral decisions appear to be discretionary. Prospective studies are needed to develop evidence‐based recommendations regarding where paracentesis procedures should be performed. Patients with more comorbidities (eg, ICU stay, awaiting liver transplant, coagulation disorders) were more likely to undergo bedside procedures. The complexity of patients undergoing bedside paracentesis reinforces the need for rigorous skill assessment of the clinicians who perform these procedures, because complications such as intraperitoneal bleeding can be fatal.

Finally, we showed that LOS was similar but hospital direct costs were $800 to $1300 lower for patients whose paracentesis was performed by medicine or gastroenterology/hepatology clinicians than for those whose procedure was performed in IR. Procedures performed by medical subspecialties and surgery were more expensive than IR procedures, consistent with the longer LOS in these groups. IR procedures add costs because of facility charges for space, personnel, and equipment.[33] At our institution, the hospital cost of an IR paracentesis in 2012 was $361. Using this figure, and assuming that costs are similar across university hospitals, performing every procedure referred to IR in this 2‐year study at the bedside instead would save $10,257,454 (for the procedure alone). This estimate is approximate because it does not account for factors such as the cost of clinician staffing models, which may differ across UHC hospitals. As hospitals look to reduce costs, they should consider the potential savings from appropriate use of bedside and IR procedures. This is especially important because there is no evidence that the extra expense of IR procedures is justified by improved patient outcomes.
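
The procedure-only savings estimate is a single multiplication: number of IR procedures times $361. This sketch inverts the reported total to recover the implied number of IR procedures (28,414, a derived figure that the text does not state) and, like the text, assumes a uniform per-procedure cost across hospitals. The function name is illustrative.

```python
IR_COST_PER_PROCEDURE = 361     # USD; 2012 hospital cost of one IR paracentesis at the authors' institution
REPORTED_SAVINGS = 10_257_454   # USD; procedure-only savings reported in the text


def procedure_only_savings(n_ir_procedures, cost_per_procedure=IR_COST_PER_PROCEDURE):
    """Savings if every IR procedure were instead performed at the bedside.

    Assumes a uniform per-procedure cost and ignores staffing and other costs,
    matching the simplifying assumptions stated in the text.
    """
    return n_ir_procedures * cost_per_procedure


# Invert the reported total to recover the implied IR procedure count.
implied_procedures, remainder = divmod(REPORTED_SAVINGS, IR_COST_PER_PROCEDURE)
print(implied_procedures, remainder)                # 28414 0
print(procedure_only_savings(implied_procedures))   # 10257454
```

The zero remainder shows the reported total is an exact multiple of $361, so the estimate scales linearly with the per-procedure cost assumed.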

This study has several limitations. First, this was an observational study. Although the database was large, we were limited by coding accuracy and could not control for all potential confounding factors, such as Model for End‐Stage Liver Disease score,[34, 35] other specific laboratory values, the amount of ascites fluid removed, or bedside procedure failures later referred to IR. However, we do know that only a small number of second, third, or fourth procedures were subsequently referred to IR after earlier ones were performed at the bedside. Additionally, the UHC database does not include patient‐specific data; therefore, we could not adjust for multiple visits or procedures by the same patient. Second, we were unable to determine the level of teaching involvement at each UHC‐affiliated hospital. Community hospitals where attendings managed most patients without trainees could not be distinguished from university hospitals where trainees were involved in most patients' care. Third, we did not have specialty information for 9434 (9.7%) procedures and had to exclude these cases. We also excluded a large number of paracentesis procedures from our final outcomes analysis. However, this was necessary because we needed to perform a patient‐level analysis to ensure that the propensity and outcomes models were accurate. Finally, we did not evaluate inpatient mortality or 30‐day hospital readmission rates. Mortality and readmission from complications of a paracentesis procedure are rare events.[3, 4, 36] However, mortality and hospital readmission among patients with liver disease are relatively common.[37, 38] Without medical record review, we could not link these outcomes to a paracentesis procedure.