
Use of simulation to assess incoming interns’ recognition of opportunities to choose wisely

In recent years, the American Board of Internal Medicine (ABIM) Foundation’s Choosing Wisely™ campaign has advanced the dialogue on cost-consciousness by identifying potential examples of overuse in clinical practice.1 Eliminating low-value care can decrease costs, improve quality, and potentially decrease patient harm.2 In fact, there is growing consensus among health leaders and educators on the need for a physician workforce that is conscious of high-value care.3,4 The Institute of Medicine has issued a call-to-action for graduate medical education (GME) to emphasize value-based care,5 and the Accreditation Council for Graduate Medical Education has outlined expectations that residents receive formal and experiential training on overuse as a part of its Clinical Learning Environment Review.6

However, recent reports highlight a lack of emphasis on value-based care in medical education.7 For example, few residency program directors believe that residents are prepared to incorporate value and cost into their medical decisions.8 In 2012, only 15% of medicine residencies reported having a formal curriculum addressing value, although many were developing one.8 Most of the reported curricula were didactic and did not include an assessment component.8

Experiential learning through simulation is one promising method to teach clinicians-in-training to practice value-based care. Simulation-based training promotes situational awareness (defined as being cognizant of one’s working environment), a concept that is crucial for recognizing both low-value and unsafe care.9,10 Simulated training exercises are often included in GME orientation “boot-camps,” which have typically addressed safety.11 Incorporating value into existing GME boot-camp exercises could therefore provide a practical model for adding value-based training to GME.

At the University of Chicago, we had successfully implemented the “Room of Horrors,” a simulation for entering interns to promote the detection of patient safety hazards.11 Here, we describe a modification to this simulation to embed low-value hazards in addition to traditional patient safety hazards. The aim of this study is to assess the entering interns’ recognition of low-value care and their ability to recognize unsafe care in a simulation designed to promote situational awareness.

METHODS

Setting and Participants

The simulation was conducted during GME orientation at a large, urban academic medical institution. One hundred and twenty-five entering postgraduate year one (PGY1) interns participated in the simulation, which was a required component of a multiday orientation “boot-camp” experience. All eligible interns participated in the simulation, representing 13 specialty programs and 60 medical schools. Interns entering into pathology were excluded because of infrequent patient contact. Participating interns were divided into 7 specialty groups for analysis in order to preserve the anonymity of interns in smaller residency programs (surgical subspecialties combined with general surgery, medicine-pediatrics grouped with internal medicine). The University of Chicago Institutional Review Board deemed this study exempt from review.

Program Description

A simulation of an inpatient hospital room, known as the “Room of Horrors,” was constructed in collaboration with the University of Chicago Simulation Center and adapted from a previous version of the exercise.11 The clinical scenario used a patient mannequin and an accompanying mock door chart, which indicated that the patient had been admitted for diarrhea (Clostridium difficile positive) following a recent hospitalization for pneumonia and listed information on the patient’s hospital course, allergies, and medications. In addition to the 8 patient safety hazards used in the prior version, our team selected 4 low-value hazards to include in the simulation.

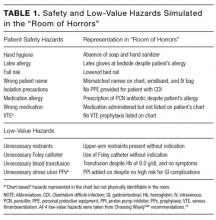

The 8 safety hazards have been detailed in a prior study and were previously selected from Medicare’s Hospital-Acquired Conditions (HAC) Reduction Program and Agency for Healthcare Research and Quality (AHRQ) Patient Safety Indicators.11-13 Each hazard was represented physically in the simulation room, indicated on the patient’s chart, or both. For example, the latex allergy hazard was represented by latex gloves at the bedside despite an allergy indicated on the patient’s chart and wristband. A complete list of the 8 safety hazards and their representations in the simulation is shown in Table 1.

The Choosing Wisely™ lists were reviewed to identify low-value hazards for addition to the simulation.14 Our team selected 3 low-value hazards from the Society of Hospital Medicine (SHM) list:15 (1) blood transfusion at an arbitrary hemoglobin threshold despite the patient’s stable hemoglobin level of 8.0 g/dL and absence of cardiac symptoms,16 (2) addition of a proton pump inhibitor (PPI) for stress ulcer prophylaxis in a patient who was not at high risk for gastrointestinal (GI) complications and was not on a PPI prior to admission, and (3) placement of a urinary catheter without a medical indication. We originally selected continuous telemetry monitoring from the SHM list as a fourth hazard but could not operationalize it, because continuous telemetry is difficult to simulate on a mannequin. Because many inpatients are older than 65 years, we reviewed the American Geriatrics Society list17 and selected our fourth low-value hazard: (4) unnecessary use of physical restraints to manage behavioral symptoms in a hospitalized patient with delirium. Several of these hazards, including overuse of urinary catheters, physical restraints, and blood transfusions, were also quality and safety priorities at our institution. All 4 low-value hazards were referenced in the patient’s door chart, and 3 were also represented physically in the room by a hanging unit of blood, a Foley catheter, and upper-arm restraints (Table 1). See Appendix for a photograph of the simulation setup.

Each intern was allowed 10 minutes inside the simulation room. During this time, they were instructed to read the 1-page door chart, inspect the simulation room, and write down as many potential low-value and safety hazards as they could identify on a free-response form (see Appendix). Upon exiting the room, they were allotted 5 additional minutes to complete their free-response answers and provide written feedback on the simulation. The simulation was conducted in 3 simulated hospital rooms over the course of 2 days, and the correct answers were provided via e-mail after all interns had completed the exercise.

To assess prior training and safety knowledge, interns were asked to complete a 3-question preassessment on a ScanTron™ (Tustin, CA) form. The preassessment asked interns whether they had received training on hospital safety during medical school (yes, no, or unsure), whether they were satisfied with the hospital safety training they received during medical school (strongly disagree to strongly agree on a Likert scale), and whether they were confident in their ability to identify potential hazards in a hospital setting (strongly disagree to strongly agree). Interns could also provide feedback on the simulation experience on the same ScanTron™ form.

One month after participating in the simulation, interns were asked to complete an online follow-up survey on MedHub™ (Ann Arbor, MI), which included 2 Likert-scale questions (strongly disagree to strongly agree) assessing the simulation’s impact on their experience mitigating hospital hazards during the first month of internship.

Data Analysis

Interns’ free-response answers were manually coded, and descriptive statistics were used to summarize the mean percent correct for each hazard. A paired t test was used to compare each intern’s identification of low-value vs safety hazards. Unpaired t tests were used to compare hazard identification between interns entering highly procedural-intensive specialties (ie, surgical specialties, emergency medicine, anesthesia, obstetrics/gynecology) and those entering less procedural-intensive specialties (ie, internal medicine, pediatrics, psychiatry), and to compare graduates of “Top 30” medical schools (based on US News & World Report Medical School Rankings18) and graduates of our own institution with all other interns. One-way analysis of variance (ANOVA) was used to test for differences in hazard identification based on interns’ prior hospital safety training; interns who rated their satisfaction with prior training or their confidence in identifying hazards as a “4” or a “5” were considered “satisfied” and “confident,” respectively. Chi-square tests were used to examine associations between prior training and identification of each specific hazard. Responses to the MedHub™ (Ann Arbor, MI) survey were dichotomized, with “strongly agree” and “agree” considered positive responses. Statistical significance was defined as P < .05. All data analysis was conducted using Stata 14™ software (College Station, TX).
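To illustrate the paired comparison and the Likert dichotomization described above, here is a minimal Python sketch. It is not the authors’ Stata analysis. It assumes each intern’s score in a category is the percentage of that category’s hazards identified (8 safety, 4 low-value), and that Likert items are coded 1 (strongly disagree) to 5 (strongly agree). The function names `paired_t` and `is_positive` and all input values are hypothetical. Because the sketch uses only the Python standard library, it reports the t statistic and degrees of freedom but no P value. A P value would come from the t distribution, for example via `scipy.stats.ttest_rel`.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for within-intern differences x - y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample SD, n - 1 denominator
    return mean_d / (sd_d / math.sqrt(n)), n - 1


def is_positive(likert: int) -> bool:
    """Dichotomize a 1-5 Likert response: 4 (agree) or 5 (strongly agree) is positive."""
    return likert >= 4


# Hypothetical toy data for 4 interns (NOT study data): hazards found per category.
safety_found = [6, 5, 7, 5]     # out of 8 safety hazards
low_value_found = [1, 0, 2, 1]  # out of 4 low-value hazards

safety_pct = [100 * s / 8 for s in safety_found]
low_value_pct = [100 * v / 4 for v in low_value_found]

t_stat, df = paired_t(safety_pct, low_value_pct)
print(f"paired t = {t_stat:.2f}, df = {df}")

survey = [5, 4, 3, 2, 4]  # hypothetical follow-up responses
print(sum(is_positive(r) for r in survey), "of", len(survey), "positive")
```

A paired test fits this design because each intern contributes both a safety score and a low-value score. Testing the within-intern differences removes between-intern variation in overall vigilance.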

RESULTS

Intern Characteristics

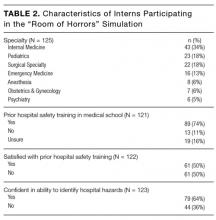

One hundred twenty-five entering PGY1 interns participated in the simulation, representing 60 medical schools and 7 different specialty groups (Table 2). Thirty-five percent (44/125) were graduates from “Top 30” medical schools, and 8.8% (11/125) graduated from our own institution. Seventy-four percent (89/121) had received prior hospital safety training during medical school, and 62.9% (56/89) were satisfied with their training. A majority of interns (64.2%, 79/123) felt confident in their ability to identify potential hazards in a hospital setting, although confidence was much higher among those with prior safety training (71.9%, 64/89) compared to those without prior training or who were unsure about their training (40.6%, 13/32; P = .09, t test).

Identification of Hazards

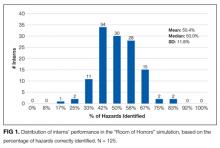

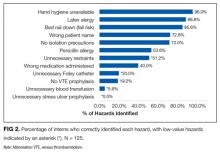

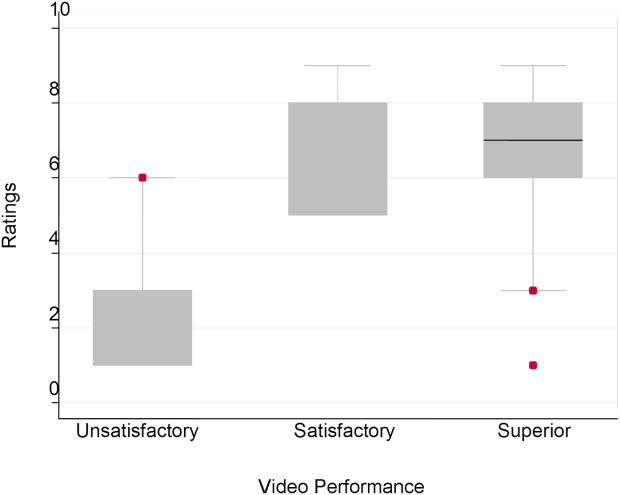

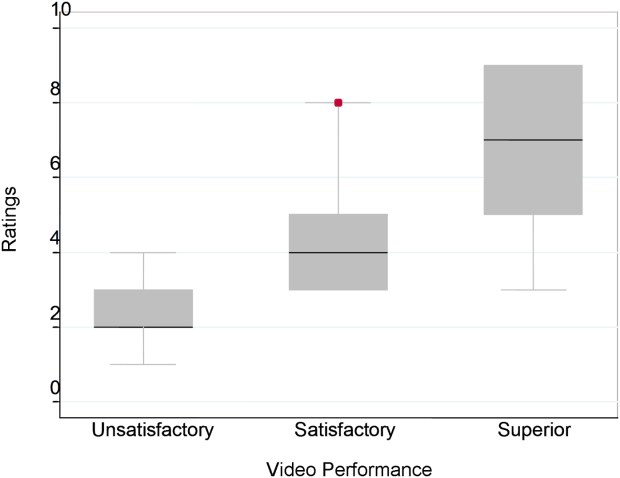

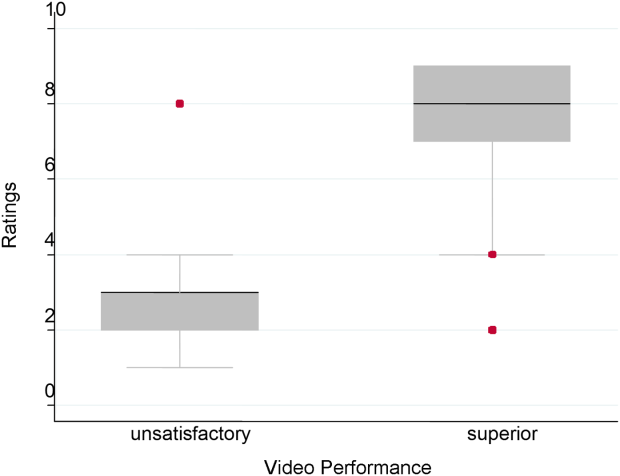

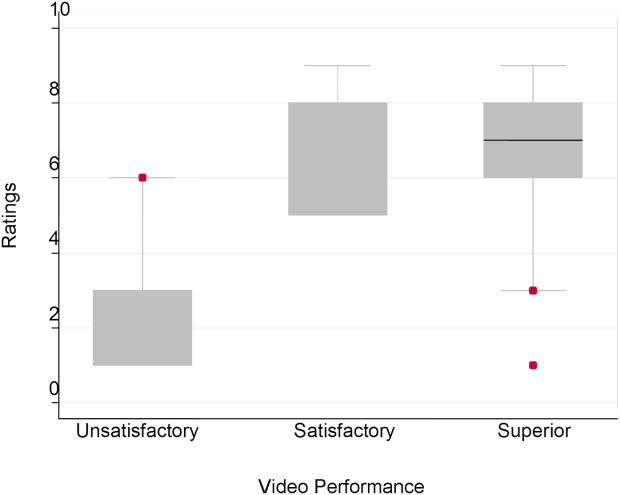

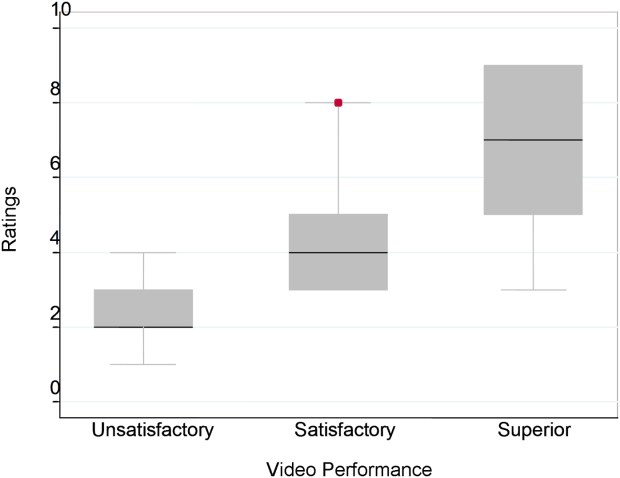

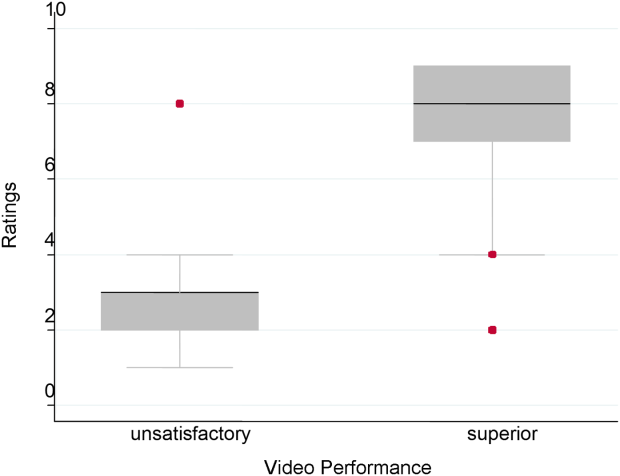

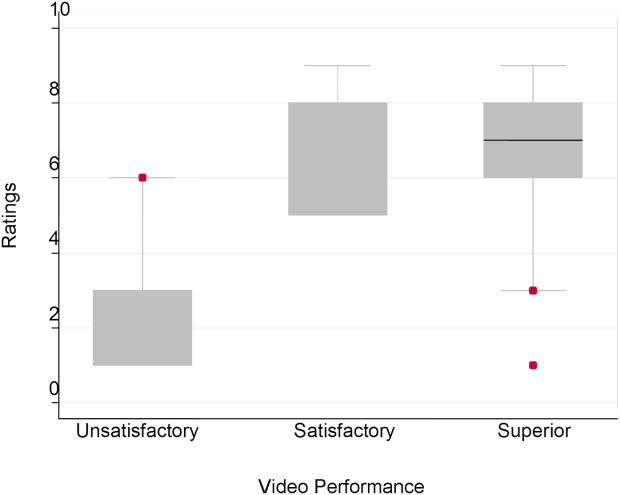

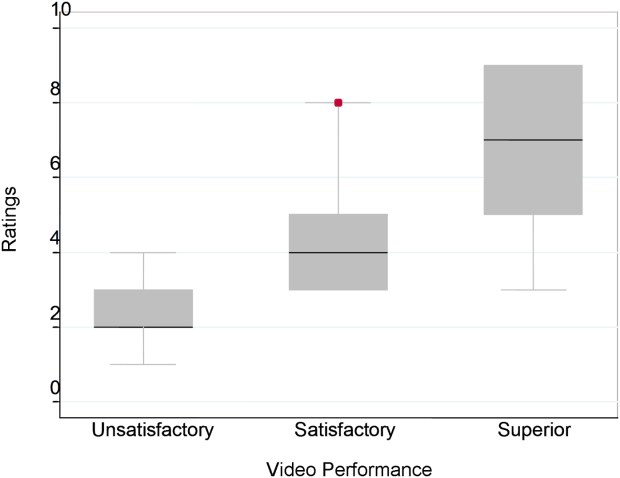

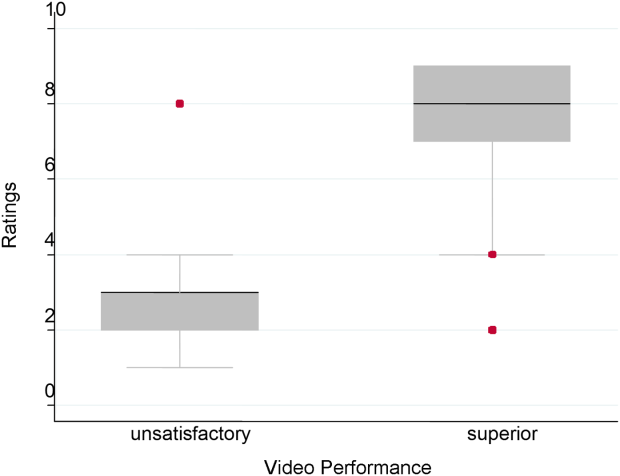

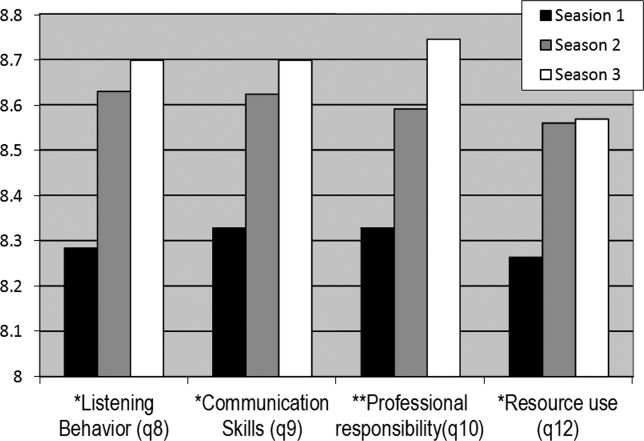

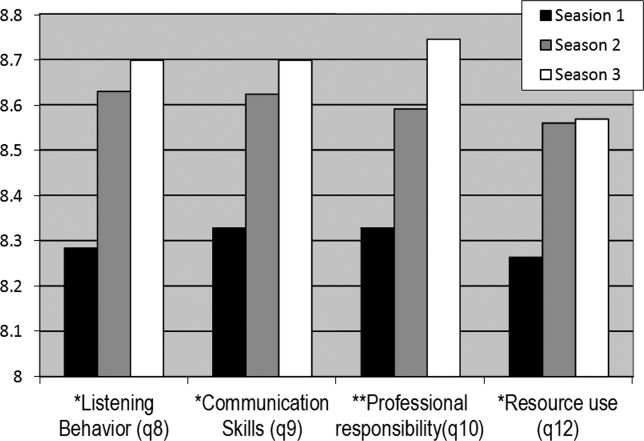

The mean percentage of hazards correctly identified by interns during the simulation was 50.4% (standard deviation [SD] 11.8%), with an approximately normal distribution (Figure 1). Interns identified a significantly lower percentage of low-value hazards than safety hazards (mean 19.2% [SD 18.6%] vs 66.0% [SD 16.0%], respectively; P < .001, paired t test). Interns also identified significantly more room-based errors than chart-based errors (mean 58.6% [SD 13.4%] vs 9.6% [SD 19.8%], respectively; P < .001, paired t test). The 3 most commonly identified hazards were unavailability of hand hygiene (120/125, 96.0%), presence of latex gloves despite the patient’s allergy (111/125, 88.8%), and fall risk due to the lowered bed rail (107/125, 85.6%). More than half of interns identified the incorrect name on the patient’s wristband and IV bag (91/125, 72.8%), a lack of isolation precautions (90/125, 72.0%), administration of penicillin despite the patient’s allergy (67/125, 53.6%), and unnecessary restraints (64/125, 51.2%). Fewer than half of interns identified the wrong medication being administered (50/125, 40.0%), the unnecessary Foley catheter (25/125, 20.0%), and the absence of venous thromboembolism (VTE) prophylaxis (24/125, 19.2%). Few interns identified the unnecessary blood transfusion (7/125, 5.6%), and no intern identified the unnecessary stress ulcer prophylaxis (0/125, 0.0%; Figure 2).
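Every intern was scored against the same 8 safety and 4 low-value hazards. As a result, the mean of the per-intern percentages equals the pooled percentage, and the category means reported above can be recomputed from the per-hazard counts alone. The short Python check below uses only the counts in this paragraph. The variable and function names are illustrative.

```python
from typing import Mapping

N_INTERNS = 125

# Interns (out of 125) who identified each hazard, as reported in the Results.
SAFETY = {
    "hand hygiene unavailable": 120,
    "latex gloves despite allergy": 111,
    "fall risk (lowered bed rail)": 107,
    "wrong name on wristband and IV bag": 91,
    "no isolation precautions": 90,
    "penicillin despite allergy": 67,
    "wrong medication administered": 50,
    "no VTE prophylaxis": 24,
}
LOW_VALUE = {
    "unnecessary restraints": 64,
    "unnecessary Foley catheter": 25,
    "unnecessary blood transfusion": 7,
    "unnecessary stress ulcer prophylaxis": 0,
}


def pooled_percent(counts: Mapping[str, int]) -> float:
    """Percent of all intern-by-hazard opportunities in which the hazard was found."""
    return 100 * sum(counts.values()) / (N_INTERNS * len(counts))


safety_mean = pooled_percent(SAFETY)                    # 660 of 1000 opportunities
low_value_mean = pooled_percent(LOW_VALUE)              # 96 of 500 opportunities
overall_mean = pooled_percent({**SAFETY, **LOW_VALUE})  # 756 of 1500 opportunities

# Overall mean as the hazard-count-weighted average of the two category means.
weighted_mean = (len(SAFETY) * safety_mean + len(LOW_VALUE) * low_value_mean) / (
    len(SAFETY) + len(LOW_VALUE)
)

for label, value in [("safety", safety_mean), ("low-value", low_value_mean), ("overall", overall_mean)]:
    print(f"{label}: {value:.1f}%")
# prints: safety: 66.0%, low-value: 19.2%, overall: 50.4% (one per line)
```

The check shows that the overall mean of 50.4% is the hazard-weighted average of the two category means, (8 × 66.0% + 4 × 19.2%) / 12. The room- vs chart-based means cannot be recomputed this way without the full hazard-to-location mapping in Table 1.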

Predictors of Hazard Identification

Interns who self-reported as confident in their ability to identify hazards were no more likely to correctly identify hazards than those who were not confident (50.9% vs 49.6% overall hazard identification, respectively; P = .56, t test). Interns entering less procedural-intensive specialties identified significantly more safety hazards than those entering highly procedural-intensive specialties (mean 69.1% [SD 16.9%] vs 61.8% [SD 13.7%], respectively; P = .01, t test). However, there was no statistically significant difference between these groups in identification of low-value hazards (mean 19.8% [SD 18.3%] for less procedural-intensive vs 18.4% [SD 19.1%] for highly procedural-intensive; P = .68, t test). Hazard identification did not differ significantly between graduates of “Top 30” medical schools and other interns, or between graduates of our own institution and other interns. Prior hospital safety training had no significant impact on interns’ ability to identify safety or low-value hazards. Overall, interns who were satisfied with their prior training identified a mean of 51.8% of hazards present (SD 11.8%), interns who were not satisfied with their prior training identified 51.5% (SD 12.7%), interns with no prior training identified 48.7% (SD 11.7%), and interns who were unsure about their prior training identified 47.4% (SD 11.5%) [F(3,117) = .79; P = .51, ANOVA]. There was also no significant association between prior training and the identification of any one of the 12 specific hazards (chi-square tests, all P values > .1).
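A one-way ANOVA compares the spread between group means with the spread within groups. The stdlib-only Python sketch below computes the F statistic and its degrees of freedom. With the 4 training categories and 121 respondents (89 with prior training plus 32 without or unsure), it gives df_between = 4 - 1 = 3 and df_within = 121 - 4 = 117, which matches the reported F(3,117). The function name `one_way_anova` and the toy scores are hypothetical; they are not the study's data or Stata code. A P value would come from the F distribution, and `scipy.stats.f_oneway`, for example, reports both F and P.

```python
import statistics
from typing import Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA across independent groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [statistics.mean(g) for g in groups]
    # Between-group sum of squares: how far each group mean sits from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of each score around its own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, group_means))
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical overall hazard-identification percentages (NOT study data) for the
# four training categories: satisfied, not satisfied, no training, unsure.
toy_groups = [
    [55.0, 50.0, 48.0],
    [52.0, 49.0, 54.0],
    [47.0, 50.0, 45.0],
    [46.0, 44.0, 51.0],
]
f_stat, df_b, df_w = one_way_anova(toy_groups)
print(f"F({df_b},{df_w}) = {f_stat:.2f}")
```

A small F, such as the reported .79, means that the differences between the training-group means are small relative to the variation among interns within each group.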

Intern Feedback and Follow-Up Survey

Debriefing revealed that most interns passively assumed the patient’s chart was correct and did not think they should question the patient’s current care regimen. For example, many interns commented that it did not occur to them that the patient’s blood transfusion might be unnecessary, even though they were aware of the recommended hemoglobin thresholds for stable patients.

Interns also provided formal feedback on the simulation through open-ended comments on their ScanTron™ (Tustin, CA) form. For example, one intern wrote that they would “inherently approach every patient room ‘looking’ for safety issues, probably directly because of this exercise.” Another commented that the simulation was “more difficult than I expected, but very necessary to facilitate discussion and learning.” One intern wrote that “I wish I had done this earlier in my career.”

Ninety-six percent of participating interns (120/125) completed an online follow-up survey 1 month after beginning internship. In the survey, 68.9% (82/119) of interns indicated they were more aware of how to identify potential hazards facing hospitalized patients as a result of the simulation. Furthermore, 52.1% (62/119) of interns had taken action during internship to reduce a potential hazard that was present in the simulation.

DISCUSSION

While many GME orientations include simulation and safety training, to our knowledge this study is the first to incorporate low-value care from Choosing Wisely™ recommendations into simulated training. It is concerning that interns identified significantly fewer low-value hazards than safety hazards in the simulation. One low-value hazard went entirely unrecognized: while almost all interns identified the hand hygiene hazard, not one identified the unnecessary stress ulcer prophylaxis. Furthermore, interns who self-reported as confident in their ability to identify hazards did not perform any better in the simulation. Interns entering less procedural-intensive specialties identified more safety hazards, but not more low-value hazards, than those entering highly procedural-intensive specialties.

The simulation was well received by interns. Many commented that the experience was engaging, challenging, and effective in cultivating situational awareness of low-value care. In our follow-up survey, just over half of interns (52.1%) reported taking action during their first month of internship to reduce a hazard included in the simulation. Most (68.9%) also reported greater awareness of how to identify hospital hazards as a result of the simulation. Although these findings are self-reported, they suggest that a brief simulation-based experience may produce lasting situational awareness and behavior change.

It is worth exploring why interns identified significantly fewer low-value hazards than safety hazards in the simulation. One hypothesis is that interns were less attuned to low-value hazards, which may reflect a lack of emphasis on value-based care in undergraduate medical education (UME). It is especially concerning that so few interns identified the catheter-associated urinary tract infection (CAUTI) risk posed by the unnecessary Foley catheter, because interns are primarily responsible for recognizing and removing unnecessary catheters. Although the risks of low-value care should be apparent to most trainees, recognizing and deliberately stopping or avoiding low-value care can be challenging for young clinicians.19 To promote value-based thinking among entering residents, UME programs should teach students to question the utility of the interventions their patients are receiving. One promising framework for doing so is SOAP-V (Subjective, Objective, Assessment, Plan, Value), in which a V for “Value” is added to the traditional SOAP note.20 SOAP-V notes serve as a cognitive forcing function that requires students to pause and assess the value and cost-consciousness of their patients’ care.20

The results from the “Room of Horrors” simulation can also guide health leaders and educators in identifying institutional areas of focus for providing high-value and safe care. For example, at the University of Chicago we launched an initiative to reduce the inappropriate use of urinary catheters after learning that few of our incoming interns recognized this hazard during the simulation. Institutions could use this model to raise awareness of initiatives and redirect resources from areas in which trainees perform well (eg, hand hygiene) to areas that need improvement (eg, recognition of low-value care). Given the simulation’s low cost and minimal material requirements, it could be easily integrated into existing training programs with the support of an institution’s simulation center.

This study’s limitations include its single-institution setting, although the participants represented graduates of 60 different medical schools. In addition, while the 12 hazards included in the simulation represent patient safety and value initiatives from a wide array of medical societies, they were not intended to be comprehensive and were not tailored to specific specialties. The simulation included only 4 low-value hazards, and future iterations of this exercise should aim to include an equal number of safety and low-value hazards. Furthermore, the evaluation of interns’ prior hospital safety training relied on self-report, and the specific context and content of each intern’s training were not examined. Finally, we are not yet able to provide objective longitudinal data on the simulation’s impact on clinical practice and patient outcomes. Subsequent work will assess the sustained impact of the simulation by correlating its results with institutional data on measurable occurrences of low-value care.

In conclusion, interns identified significantly fewer low-value hazards than safety hazards in an inpatient simulation designed to promote situational awareness. Our results suggest that interns are on the lookout for errors of omission (eg, absence of hand hygiene, absence of isolation precautions) but are often blind to errors of commission: when patients have already been started on therapies (eg, blood transfusions, stress ulcer prophylaxis), interns tend to assume that those therapies are correct and necessary. These findings suggest poor awareness of low-value care among incoming interns and highlight the need for additional training in both UME and GME that places greater emphasis on preventing low-value care.

Disclosure

Dr. Arora is a member of the American Board of Internal Medicine Board of Directors and has received grant funding from the ABIM Foundation via Costs of Care for the Teaching Value Choosing Wisely™ Challenge. Dr. Farnan, Dr. Arora, and Ms. Hirsch receive grant funds from the Accreditation Council for Graduate Medical Education as part of the Pursuing Excellence Initiative. Dr. Arora and Dr. Farnan also receive grant funds from the American Medical Association Accelerating Change in Medical Education initiative. Kathleen Wiest and Lukas Matern were funded through matching funds of the Pritzker Summer Research Program for NIA T35AG029795.

1. Colla CH, Morden NE, Sequist TD, Schpero WL, Rosenthal MB. Choosing wisely: prevalence and correlates of low-value health care services in the United States. J Gen Intern Med. 2015;30(2):221-228. doi:10.1007/s11606-014-3070-z.

2. Elshaug AG, McWilliams JM, Landon BE. The value of low-value lists. JAMA. 2013;309(8):775-776. doi:10.1001/jama.2013.828.

3. Cooke M. Cost consciousness in patient care--what is medical education’s responsibility? N Engl J Med. 2010;362(14):1253-1255. doi:10.1056/NEJMp0911502.

4. Weinberger SE. Providing high-value, cost-conscious care: a critical seventh general competency for physicians. Ann Intern Med. 2011;155(6):386-388. doi:10.7326/0003-4819-155-6-201109200-00007.

5. Graduate Medical Education That Meets the Nation’s Health Needs. Institute of Medicine. http://www.nationalacademies.org/hmd/Reports/2014/Graduate-Medical-Education-That-Meets-the-Nations-Health-Needs.aspx. Accessed May 25, 2016.

6. Accreditation Council for Graduate Medical Education. CLER Pathways to Excellence. https://www.acgme.org/acgmeweb/Portals/0/PDFs/CLER/CLER_Brochure.pdf. Accessed July 15, 2015.

7. Varkey P, Murad MH, Braun C, Grall KJH, Saoji V. A review of cost-effectiveness, cost-containment and economics curricula in graduate medical education. J Eval Clin Pract. 2010;16(6):1055-1062. doi:10.1111/j.1365-2753.2009.01249.x.

8. Patel MS, Reed DA, Loertscher L, McDonald FS, Arora VM. Teaching residents to provide cost-conscious care: a national survey of residency program directors. JAMA Intern Med. 2014;174(3):470-472. doi:10.1001/jamainternmed.2013.13222.

9. Cohen NL. Using the ABCs of situational awareness for patient safety. Nursing. 2013;43(4):64-65. doi:10.1097/01.NURSE.0000428332.23978.82.

10. Varkey P, Karlapudi S, Rose S, Swensen S. A patient safety curriculum for graduate medical education: results from a needs assessment of educators and patient safety experts. Am J Med Qual. 2009;24(3):214-221. doi:10.1177/1062860609332905.

11. Farnan JM, Gaffney S, Poston JT, et al. Patient safety room of horrors: a novel method to assess medical students and entering residents’ ability to identify hazards of hospitalisation. BMJ Qual Saf. 2016;25(3):153-158. doi:10.1136/bmjqs-2015-004621.

12. Centers for Medicare and Medicaid Services. Hospital-acquired condition reduction program. Medicare.gov. https://www.medicare.gov/hospitalcompare/HAC-reduction-program.html. Accessed August 1, 2015.

13. Agency for Healthcare Research and Quality. Patient Safety Indicators Overview. http://www.qualityindicators.ahrq.gov/modules/psi_overview.aspx. Accessed August 20, 2015.

14. ABIM Foundation. Choosing Wisely. http://www.choosingwisely.org. Accessed August 21, 2015.

15. ABIM Foundation. Society of Hospital Medicine – Adult Hospital Medicine List. Choosing Wisely. http://www.choosingwisely.org/societies/society-of-hospital-medicine-adult/. Accessed August 21, 2015.

16. Carson JL, Grossman BJ, Kleinman S, et al. Red blood cell transfusion: A clinical practice guideline from the AABB*. Ann Intern Med. 2012;157(1):49-58.

17. ABIM Foundation. American Geriatrics Society List. Choosing Wisely. http://www.choosingwisely.org/societies/american-geriatrics-society/. Accessed August 21, 2015.

18. The Best Medical Schools for Research, Ranked. http://grad-schools.usnews.rankingsandreviews.com/best-graduate-schools/top-medical-schools/research-rankings?int=af3309&int=b3b50a&int=b14409. Accessed June 7, 2016.

19. Roman BR, Asch DA. Faded promises: The challenge of deadopting low-value care. Ann Intern Med. 2014;161(2):149-150. doi:10.7326/M14-0212.

20. Moser EM, Huang GC, Packer CD, et al. SOAP-V: Introducing a method to empower medical students to be change agents in bending the cost curve. J Hosp Med. 2016;11(3):217-220. doi:10.1002/jhm.2489.

In recent years, the American Board of Internal Medicine (ABIM) Foundation’s Choosing Wisely™ campaign has advanced the dialogue on cost-consciousness by identifying potential examples of overuse in clinical practice.1 Eliminating low-value care can decrease costs, improve quality, and potentially decrease patient harm.2 In fact, there is growing consensus among health leaders and educators on the need for a physician workforce that is conscious of high-value care.3,4 The Institute of Medicine has issued a call-to-action for graduate medical education (GME) to emphasize value-based care,5 and the Accreditation Council for Graduate Medical Education has outlined expectations that residents receive formal and experiential training on overuse as a part of its Clinical Learning Environment Review.6

However, recent reports highlight a lack of emphasis on value-based care in medical education.7 For example, few residency program directors believe that residents are prepared to incorporate value and cost into their medical decisions.8 In 2012, only 15% of medicine residencies reported having formal curricula addressing value, although many were developing one.8 Of the curricula reported, most were didactic in nature and did not include an assessment component.8

Experiential learning through simulation is one promising method to teach clinicians-in-training to practice value-based care. Simulation-based training promotes situational awareness (defined as being cognizant of one’s working environment), a concept that is crucial for recognizing both low-value and unsafe care.9,10 Simulated training exercises are often included in GME orientation “boot-camps,” which have typically addressed safety.11 The incorporation of value into existing GME boot-camp exercises could provide a promising model for the addition of value-based training to GME.

At the University of Chicago, we had successfully implemented the “Room of Horrors,” a simulation for entering interns to promote the detection of patient safety hazards.11 Here, we describe a modification to this simulation to embed low-value hazards in addition to traditional patient safety hazards. The aim of this study is to assess the entering interns’ recognition of low-value care and their ability to recognize unsafe care in a simulation designed to promote situational awareness.

METHODS

Setting and Participants

The simulation was conducted during GME orientation at a large, urban academic medical institution. One hundred and twenty-five entering postgraduate year one (PGY1) interns participated in the simulation, which was a required component of a multiday orientation “boot-camp” experience. All eligible interns participated in the simulation, representing 13 specialty programs and 60 medical schools. Interns entering into pathology were excluded because of infrequent patient contact. Participating interns were divided into 7 specialty groups for analysis in order to preserve the anonymity of interns in smaller residency programs (surgical subspecialties combined with general surgery, medicine-pediatrics grouped with internal medicine). The University of Chicago Institutional Review Board deemed this study exempt from review.

Program Description

A simulation of an inpatient hospital room, known as the “Room of Horrors,” was constructed in collaboration with the University of Chicago Simulation Center and adapted from a previous version of the exercise.11 The simulation consisted of a mock door chart highlighting the patient had been admitted for diarrhea (Clostridium difficile positive) following a recent hospitalization for pneumonia. A clinical scenario was constructed by using a patient mannequin and an accompanying door chart that listed information on the patient’s hospital course, allergies, and medications. In addition to the 8 patient safety hazards utilized in the prior version, our team selected 4 low-value hazards to be included in the simulation.

The 8 safety hazards have been detailed in a prior study and were previously selected from Medicare’s Hospital-Acquired Conditions (HAC) Reduction Program and Agency for Healthcare Research and Quality (AHRQ) Patient Safety Indicators.11-13 Each of the hazards was represented either physically in the simulation room and/or was indicated on the patient’s chart. For example, the latex allergy hazard was represented by latex gloves at the bedside despite an allergy indicated on the patient’s chart and wristband. A complete list of the 8 safety hazards and their representations in the simulation is shown in Table 1.

The Choosing Wisely™ lists were reviewed to identify low-value hazards for addition to the simulation.14 Our team selected 3 low-value hazards from the Society of Hospital Medicine (SHM) list,15 including (1) arbitrary blood transfusion despite the patient’s stable hemoglobin level of 8.0 g/dL and absence of cardiac symptoms,16 (2) addition of a proton pump inhibitor (PPI) for stress ulcer prophylaxis in a patient without high risk for gastrointestinal (GI) complications who was not on a PPI prior to admission, and (3) placement of a urinary catheter without medical indication. We had originally selected continuous telemetry monitoring as a fourth hazard from the SHM list, but were unable to operationalize, as it was difficult to simulate continuous telemetry on a mannequin. Because many inpatients are older than 65 years, we reviewed the American Geriatrics Society list17 and selected our fourth low-value hazard: (4) unnecessary use of physical restraints to manage behavioral symptoms in a hospitalized patient with delirium. Several of these hazards were also quality and safety priorities at our institution, including the overuse of urinary catheters, physical restraints, and blood transfusions. All 4 low-value hazards were referenced in the patient’s door chart, and 3 were also physically represented in the room via presence of a hanging unit of blood, Foley catheter, and upper-arm restraints (Table 1). See Appendix for a photograph of the simulation setup.

Each intern was allowed 10 minutes inside the simulation room. During this time, they were instructed to read the 1-page door chart, inspect the simulation room, and write down as many potential low-value and safety hazards as they could identify on a free-response form (see Appendix). Upon exiting the room, they were allotted 5 additional minutes to complete their free-response answers and provide written feedback on the simulation. The simulation was conducted in 3 simulated hospital rooms over the course of 2 days, and the correct answers were provided via e-mail after all interns had completed the exercise.

To assess prior training and safety knowledge, interns were asked to complete a 3-question preassessment on a ScanTronTM (Tustin, CA) form. The preassessment asked interns whether they had received training on hospital safety during medical school (yes, no, or unsure), if they were satisfied with the hospital safety training they received during medical school (strongly disagree to strongly agree on a Likert scale), and if they were confident in their ability to identify potential hazards in a hospital setting (strongly disagree to strongly agree). Interns were also given the opportunity to provide feedback on the simulation experience on the ScanTronTM (Tustin, CA) form.

One month after participating in the simulation, interns were asked to complete an online follow-up survey on MedHubTM (Ann Arbor, MI), which included 2 Likert-scale questions (strongly disagree to strongly agree) assessing the simulation’s impact on their experience mitigating hospital hazards during the first month of internship.

Data Analysis

Interns’ free-response answers were manually coded, and descriptive statistics were used to summarize the mean percent correct for each hazard. A paired t test was used to compare each intern’s identification of low-value vs safety hazards. T tests were used to compare hazard identification between interns entering highly procedural-intensive specialties (ie, surgical specialties, emergency medicine, anesthesia, obstetrics/gynecology) and those entering less procedural-intensive specialties (ie, internal medicine, pediatrics, psychiatry), and to compare graduates of “Top 30” medical schools (based on US News & World Report Medical School Rankings18) and graduates of our own institution with their peers. One-way analysis of variance (ANOVA) was used to test for differences in hazard identification by interns’ prior hospital safety training, and chi-square tests were used to examine associations between prior training and identification of each individual hazard. Interns who rated their satisfaction with prior training, or their confidence in identifying hazards, as a “4” or a “5” were considered “satisfied” or “confident,” respectively. Responses to the MedHub™ (Ann Arbor, MI) survey were dichotomized, with “strongly agree” and “agree” considered positive responses. Statistical significance was defined as P < .05. All data analysis was conducted using Stata 14 (StataCorp, College Station, TX).
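The scoring, dichotomization, and paired comparison described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study’s analysis (which was run in Stata): the hazard labels and the four interns’ coded answers are hypothetical, each intern’s score is assumed to be the percentage of the relevant hazards that appear in their coded free-response answers, and only the t statistic is computed, since converting it to a P value requires the t distribution with n − 1 degrees of freedom.

```python
import math
import statistics

# Hypothetical hazard labels (illustrative only): 4 low-value and 8 safety hazards.
LOW_VALUE = {"transfusion", "ppi", "foley", "restraints"}
SAFETY = {"hand_hygiene", "latex", "bed_rail", "wrong_name",
          "isolation", "penicillin", "wrong_med", "vte"}


def percent_identified(coded: set, hazards: set) -> float:
    """Percentage of `hazards` present in one intern's manually coded answers."""
    return 100.0 * len(coded & hazards) / len(hazards)


def paired_t(a: list, b: list) -> float:
    """Paired t statistic: mean within-intern difference divided by its standard error."""
    diffs = [x - y for x, y in zip(a, b)]
    standard_error = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / standard_error


def is_positive(likert: int) -> bool:
    """Dichotomize a 1-5 rating: 4 (agree) or 5 (strongly agree) counts as positive."""
    return likert >= 4


# Hypothetical coded answers for four interns (not study data).
coded_answers = [
    {"hand_hygiene", "latex", "bed_rail", "restraints"},
    {"hand_hygiene", "latex", "bed_rail", "wrong_name", "isolation", "restraints"},
    {"hand_hygiene", "latex", "isolation", "penicillin", "wrong_med", "vte", "foley"},
    {"hand_hygiene", "bed_rail", "wrong_name", "isolation"},
]

safety_scores = [percent_identified(c, SAFETY) for c in coded_answers]
low_value_scores = [percent_identified(c, LOW_VALUE) for c in coded_answers]
t_stat = paired_t(safety_scores, low_value_scores)

print("safety:", safety_scores)        # [37.5, 62.5, 75.0, 50.0]
print("low-value:", low_value_scores)  # [25.0, 25.0, 25.0, 0.0]
print(f"paired t = {t_stat:.2f} with {len(coded_answers) - 1} df")
print([is_positive(r) for r in (5, 4, 3, 2, 1)])  # [True, True, False, False, False]
```

The paired design compares each intern with themselves, so between-intern differences in overall diligence do not inflate the variance of the low-value vs safety comparison.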

RESULTS

Intern Characteristics

One hundred twenty-five entering PGY1 interns participated in the simulation, representing 60 medical schools and 7 specialty groups (Table 2). Thirty-five percent (44/125) were graduates of “Top 30” medical schools, and 8.8% (11/125) graduated from our own institution. Seventy-four percent (89/121) had received prior hospital safety training during medical school, and 62.9% (56/89) of these were satisfied with their training. A majority of interns (64.2%, 79/123) felt confident in their ability to identify potential hazards in a hospital setting. Confidence was higher among those with prior safety training (71.9%, 64/89) than among those without prior training or who were unsure about their training (40.6%, 13/32), although this difference was not statistically significant (P = .09, t test).

Identification of Hazards

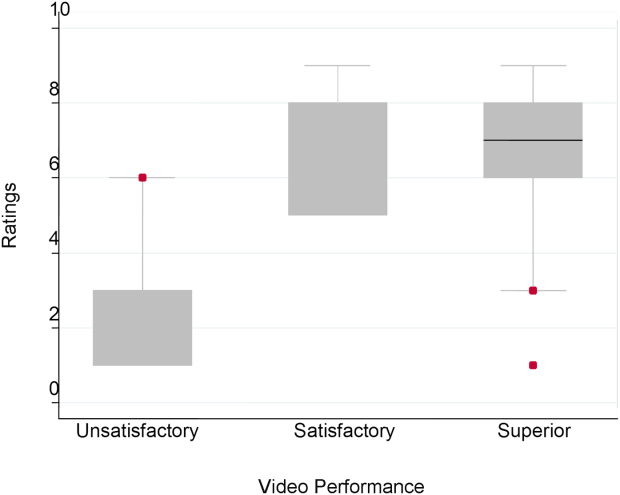

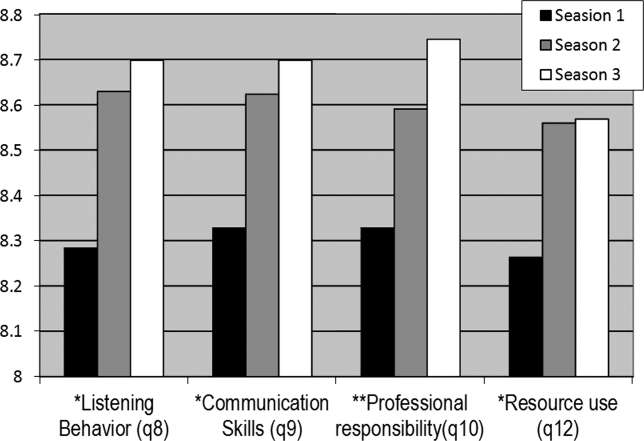

The mean percentage of hazards correctly identified by interns during the simulation was 50.4% (standard deviation [SD] 11.8%), and scores were approximately normally distributed (Figure 1). Interns identified a significantly lower percentage of low-value hazards than safety hazards (mean 19.2% [SD 18.6%] vs 66.0% [SD 16.0%], respectively; P < .001, paired t test). Interns also identified significantly more room-based errors than chart-based errors (mean 58.6% [SD 13.4%] vs 9.6% [SD 19.8%], respectively; P < .001, paired t test). The 3 most commonly identified hazards were unavailability of hand hygiene (120/125, 96.0%), latex gloves at the bedside despite the patient’s latex allergy (111/125, 88.8%), and fall risk due to the lowered bed rail (107/125, 85.6%). More than half of interns identified the incorrect name on the patient’s wristband and IV bag (91/125, 72.8%), the lack of isolation precautions (90/125, 72.0%), administration of penicillin despite the patient’s allergy (67/125, 53.6%), and the unnecessary restraints (64/125, 51.2%). Fewer than half identified the wrong medication being administered (50/125, 40.0%), the unnecessary Foley catheter (25/125, 20.0%), and the absence of venous thromboembolism (VTE) prophylaxis (24/125, 19.2%). Few interns identified the unnecessary blood transfusion (7/125, 5.6%), and no intern identified the unnecessary stress ulcer prophylaxis (0/125, 0.0%; Figure 2).
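Because every intern was scored against the same 12 hazards, the mean of the interns’ percent-correct scores equals the mean of the per-hazard detection rates. The short Python check below is a sketch, not part of the original analysis: it uses only the counts reported in this paragraph (n = 125) and groups the hazards into the 4 low-value and 8 safety hazards described in the Methods, reproducing the reported means of 19.2%, 66.0%, and 50.4%. The SDs cannot be recovered this way, because they depend on individual intern scores that are not reported.

```python
N_INTERNS = 125

# Number of interns (of 125) who identified each hazard, as reported in the Results.
SAFETY_COUNTS = {
    "hand hygiene unavailable": 120,
    "latex gloves despite allergy": 111,
    "lowered bed rail (fall risk)": 107,
    "incorrect name on wristband and IV bag": 91,
    "no isolation precautions": 90,
    "penicillin despite allergy": 67,
    "wrong medication administered": 50,
    "no VTE prophylaxis": 24,
}
LOW_VALUE_COUNTS = {
    "unnecessary restraints": 64,
    "unnecessary Foley catheter": 25,
    "unnecessary blood transfusion": 7,
    "unnecessary stress ulcer prophylaxis": 0,
}


def mean_percent(counts: dict) -> float:
    """Mean per-intern percent correct over a set of hazards.

    With every intern scored on the same hazards, averaging each intern's
    percent correct is identical to averaging the per-hazard detection rates.
    """
    rates = [100.0 * n_found / N_INTERNS for n_found in counts.values()]
    return sum(rates) / len(rates)


low_value = mean_percent(LOW_VALUE_COUNTS)
safety = mean_percent(SAFETY_COUNTS)
overall = mean_percent({**SAFETY_COUNTS, **LOW_VALUE_COUNTS})
print(f"low-value {low_value:.1f}%, safety {safety:.1f}%, overall {overall:.1f}%")
# low-value 19.2%, safety 66.0%, overall 50.4%
```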

Predictors of Hazard Identification

Interns who self-reported confidence in their ability to identify hazards were no more likely to identify hazards correctly than those who were not confident (50.9% vs 49.6% overall hazard identification, respectively; P = .56, t test). Interns entering less procedural-intensive specialties identified significantly more safety hazards than those entering highly procedural-intensive specialties (mean 69.1% [SD 16.9%] vs 61.8% [SD 13.7%], respectively; P = .01, t test), but the 2 groups did not differ significantly in their identification of low-value hazards (mean 19.8% [SD 18.3%] vs 18.4% [SD 19.1%], respectively; P = .68, t test). Hazard identification did not differ significantly for graduates of “Top 30” medical schools or for graduates of our own institution. Prior hospital safety training was not significantly associated with interns’ ability to identify safety or low-value hazards. Overall, interns who were satisfied with their prior training identified a mean of 51.8% of hazards present (SD 11.8%), interns who were not satisfied with their prior training identified 51.5% (SD 12.7%), interns with no prior training identified 48.7% (SD 11.7%), and interns who were unsure about their prior training identified 47.4% (SD 11.5%) [F(3,117) = .79; P = .51, ANOVA]. There was also no significant association between prior training and the identification of any one of the 12 specific hazards (chi-square tests, all P values > .1).
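As a worked reading of the notation F(3,117), the degrees of freedom follow from the 4 prior-training groups and the 121 interns who answered the training question: 56 satisfied and 33 not satisfied among the 89 with prior training, plus 32 with no prior training or unsure (the split between those last 2 groups is not reported). The F ratio is the standard one-way ANOVA statistic, where y_ij is the percentage of hazards identified by intern i in group j; the symbols are generic notation, and only the final value is taken from the results above.

$$
\begin{aligned}
k &= 4 \text{ groups}, \qquad N = 56 + 33 + 32 = 121,\\
\mathrm{df}_{\text{between}} &= k - 1 = 3, \qquad \mathrm{df}_{\text{within}} = N - k = 121 - 4 = 117,\\
F &= \frac{\sum_{j=1}^{k} n_j\,(\bar{y}_j - \bar{y})^2 \,/\, (k-1)}{\sum_{j=1}^{k}\sum_{i=1}^{n_j} (y_{ij} - \bar{y}_j)^2 \,/\, (N-k)} = 0.79 .
\end{aligned}
$$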

Intern Feedback and Follow-Up Survey

Debriefing revealed that most interns passively assumed the patient’s chart was correct and did not think they should question the patient’s current care regimen. For example, many interns commented that it did not occur to them to question the need for the patient’s blood transfusion, even though they knew the recommended hemoglobin thresholds for stable patients.

Interns also provided formal feedback on the simulation through open-ended comments on their ScanTron™ (Tustin, CA) form. One intern wrote that they would “inherently approach every patient room ‘looking’ for safety issues, probably directly because of this exercise.” Another commented that the simulation was “more difficult than I expected, but very necessary to facilitate discussion and learning.” A third wrote, “I wish I had done this earlier in my career.”

Ninety-six percent of participating interns (120/125) completed the online follow-up survey 1 month after beginning internship. Of those responding, 68.9% (82/119) indicated they were more aware of how to identify potential hazards facing hospitalized patients as a result of the simulation, and 52.1% (62/119) reported having taken action during internship to reduce a potential hazard that was present in the simulation.

DISCUSSION

While many GME orientations include simulation and safety training, to our knowledge this study is the first to incorporate low-value care from Choosing Wisely™ recommendations into simulation-based training. It is concerning that interns identified significantly fewer low-value hazards than safety hazards in the simulation. One low-value hazard went entirely unrecognized: while almost all interns identified the hand hygiene hazard, not one identified the unnecessary stress ulcer prophylaxis. Furthermore, interns who self-reported confidence in their ability to identify hazards did not perform any better in the simulation. Interns entering less procedural-intensive specialties identified more safety hazards, but not more low-value hazards, than those entering highly procedural-intensive specialties.

The simulation was well received by interns. Many commented that the experience was engaging, challenging, and effective in cultivating situational awareness of low-value care. In the follow-up survey, a slight majority of interns (52.1%) reported taking action during their first month of internship to reduce a hazard included in the simulation, and 68.9% reported greater awareness of how to identify hospital hazards as a result of the simulation. Although these outcomes are self-reported, they suggest that a brief simulation-based experience may produce lasting situational awareness and behavior change.

It is worth exploring why interns identified significantly fewer low-value hazards than safety hazards in the simulation. One hypothesis is that interns were less attuned to low-value hazards, which may reflect a lack of emphasis on value-based care in undergraduate medical education (UME). It is especially concerning that so few interns (20.0%) identified the unnecessary Foley catheter and its associated risk of catheter-associated urinary tract infection (CAUTI), because interns are primarily responsible for recognizing and removing unnecessary catheters. Although the risks of low-value care should be apparent to most trainees, recognizing low-value care and deliberately stopping or avoiding it can be challenging for young clinicians.19 To promote value-based thinking among entering residents, UME programs should teach students to question the utility of the interventions their patients are receiving. One promising framework is SOAP-V (Subjective, Objective, Assessment, Plan, Value), in which a V for “Value” is added to the traditional SOAP note.20 SOAP-V notes serve as a cognitive forcing function that requires students to pause and assess the value and cost-consciousness of their patients’ care.20

The results from the “Room of Horrors” simulation can also help health leaders and educators identify institutional areas of focus for providing high-value and safe care. For example, at the University of Chicago we launched an initiative to reduce inappropriate use of urinary catheters after learning that few of our incoming interns recognized this hazard during the simulation. Institutions could use this model to raise awareness of initiatives and to redirect resources from areas in which trainees perform well (eg, hand hygiene) to areas that need improvement (eg, recognition of low-value care). Given its low cost and minimal material requirements, the simulation could be readily integrated into existing training programs with the support of an institution’s simulation center.

This study has several limitations. It was conducted at a single institution, although participants had graduated from 60 different medical schools. While the 12 hazards included in the simulation draw on patient safety and value initiatives from several national organizations and medical societies, they were not intended to be comprehensive and were not tailored to specific specialties. The simulation included only 4 low-value hazards, and future iterations of this exercise should aim to include equal numbers of safety and low-value hazards. In addition, the evaluation of interns’ prior hospital safety training relied on self-report, and the specific context and content of each intern’s training were not examined. Finally, we cannot yet provide objective longitudinal data on the simulation’s impact on clinical practice and patient outcomes. Subsequent work will assess the sustained impact of the simulation by correlating it with institutional data on measurable occurrences of low-value care.

In conclusion, interns identified significantly fewer low-value hazards than safety hazards in an inpatient simulation designed to promote situational awareness. Our results suggest that interns are on the lookout for errors of omission (eg, absence of hand hygiene, absence of isolation precautions) but are often blind to errors of commission: when patients have already been started on therapies, interns tend to assume that those therapies are correct and necessary (eg, blood transfusions, stress ulcer prophylaxis). These findings suggest poor awareness of low-value care among incoming interns and highlight the need for both UME and GME training to place greater emphasis on preventing low-value care.

Disclosure

Dr. Arora is a member of the American Board of Internal Medicine Board of Directors and has received grant funding from the ABIM Foundation via Costs of Care for the Teaching Value Choosing Wisely™ Challenge. Dr. Farnan, Dr. Arora, and Ms. Hirsch receive grant funds from the Accreditation Council for Graduate Medical Education as part of the Pursuing Excellence Initiative. Dr. Arora and Dr. Farnan also receive grant funds from the American Medical Association Accelerating Change in Medical Education initiative. Kathleen Wiest and Lukas Matern were funded through matching funds of the Pritzker Summer Research Program for NIA T35AG029795.

1. Colla CH, Morden NE, Sequist TD, Schpero WL, Rosenthal MB. Choosing wisely: prevalence and correlates of low-value health care services in the United States. J Gen Intern Med. 2015;30(2):221-228. doi:10.1007/s11606-014-3070-z.

2. Elshaug AG, McWilliams JM, Landon BE. The value of low-value lists. JAMA. 2013;309(8):775-776. doi:10.1001/jama.2013.828.

3. Cooke M. Cost consciousness in patient care--what is medical education’s responsibility? N Engl J Med. 2010;362(14):1253-1255. doi:10.1056/NEJMp0911502.

4. Weinberger SE. Providing high-value, cost-conscious care: a critical seventh general competency for physicians. Ann Intern Med. 2011;155(6):386-388. doi:10.7326/0003-4819-155-6-201109200-00007.

5. Institute of Medicine. Graduate Medical Education That Meets the Nation’s Health Needs. http://www.nationalacademies.org/hmd/Reports/2014/Graduate-Medical-Education-That-Meets-the-Nations-Health-Needs.aspx. Accessed May 25, 2016.

6. Accreditation Council for Graduate Medical Education. CLER Pathways to Excellence. https://www.acgme.org/acgmeweb/Portals/0/PDFs/CLER/CLER_Brochure.pdf. Accessed July 15, 2015.

7. Varkey P, Murad MH, Braun C, Grall KJH, Saoji V. A review of cost-effectiveness, cost-containment and economics curricula in graduate medical education. J Eval Clin Pract. 2010;16(6):1055-1062. doi:10.1111/j.1365-2753.2009.01249.x.

8. Patel MS, Reed DA, Loertscher L, McDonald FS, Arora VM. Teaching residents to provide cost-conscious care: a national survey of residency program directors. JAMA Intern Med. 2014;174(3):470-472. doi:10.1001/jamainternmed.2013.13222.

9. Cohen NL. Using the ABCs of situational awareness for patient safety. Nursing. 2013;43(4):64-65. doi:10.1097/01.NURSE.0000428332.23978.82.

10. Varkey P, Karlapudi S, Rose S, Swensen S. A patient safety curriculum for graduate medical education: results from a needs assessment of educators and patient safety experts. Am J Med Qual. 2009;24(3):214-221. doi:10.1177/1062860609332905.

11. Farnan JM, Gaffney S, Poston JT, et al. Patient safety room of horrors: a novel method to assess medical students and entering residents’ ability to identify hazards of hospitalisation. BMJ Qual Saf. 2016;25(3):153-158. doi:10.1136/bmjqs-2015-004621.

12. Centers for Medicare & Medicaid Services. Hospital-Acquired Condition Reduction Program. Medicare.gov. https://www.medicare.gov/hospitalcompare/HAC-reduction-program.html. Accessed August 1, 2015.

13. Agency for Healthcare Research and Quality. Patient Safety Indicators Overview. http://www.qualityindicators.ahrq.gov/modules/psi_overview.aspx. Accessed August 20, 2015.

14. ABIM Foundation. Choosing Wisely. http://www.choosingwisely.org. Accessed August 21, 2015.

15. ABIM Foundation. Society of Hospital Medicine – Adult Hospital Medicine List. Choosing Wisely. http://www.choosingwisely.org/societies/society-of-hospital-medicine-adult/. Accessed August 21, 2015.

16. Carson JL, Grossman BJ, Kleinman S, et al. Red blood cell transfusion: a clinical practice guideline from the AABB. Ann Intern Med. 2012;157(1):49-58.

17. ABIM Foundation. American Geriatrics Society List. Choosing Wisely. http://www.choosingwisely.org/societies/american-geriatrics-society/. Accessed August 21, 2015.

18. The Best Medical Schools for Research, Ranked. http://grad-schools.usnews.rankingsandreviews.com/best-graduate-schools/top-medical-schools/research-rankings?int=af3309&int=b3b50a&int=b14409. Accessed June 7, 2016.

19. Roman BR, Asch DA. Faded promises: the challenge of deadopting low-value care. Ann Intern Med. 2014;161(2):149-150. doi:10.7326/M14-0212.

20. Moser EM, Huang GC, Packer CD, et al. SOAP-V: introducing a method to empower medical students to be change agents in bending the cost curve. J Hosp Med. 2016;11(3):217-220. doi:10.1002/jhm.2489.

© 2017 Society of Hospital Medicine

Analysis of Hospitalist Discontinuity

Studies examining the importance of continuity of care have shown that patients who maintain a continuous relationship with a single physician have improved outcomes.[1, 2] However, most of these studies were performed in the outpatient rather than the inpatient setting. With more than 35 million patients admitted to hospitals in 2013, and with hospital discontinuity increasing significantly in recent years, the impact of inpatient continuity of care on quality outcomes and patient satisfaction is increasingly relevant.[3, 4]

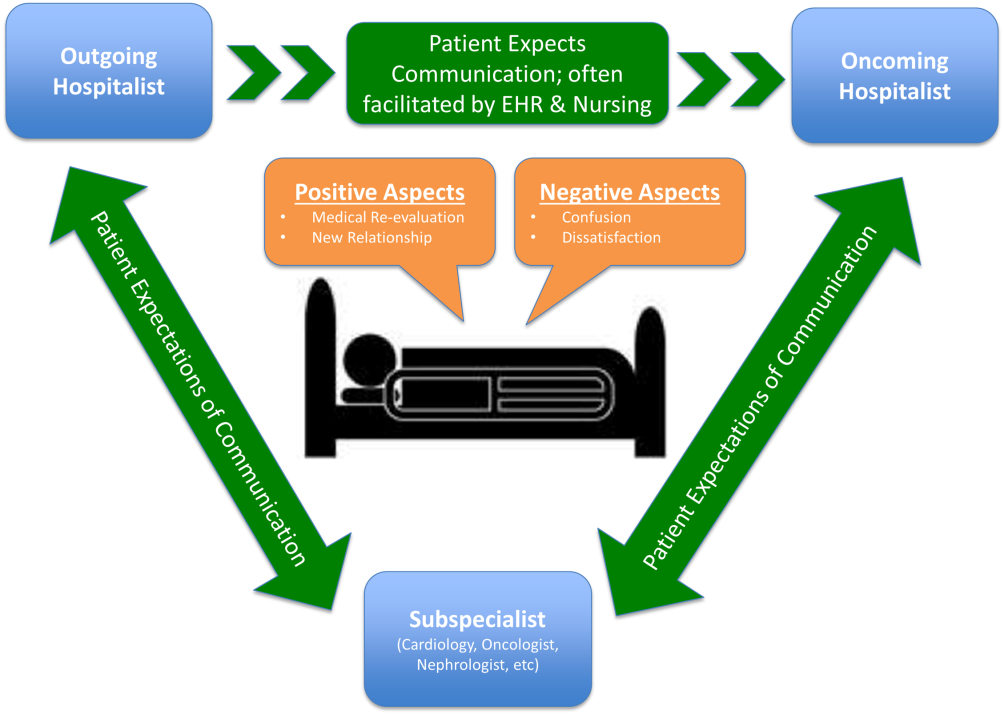

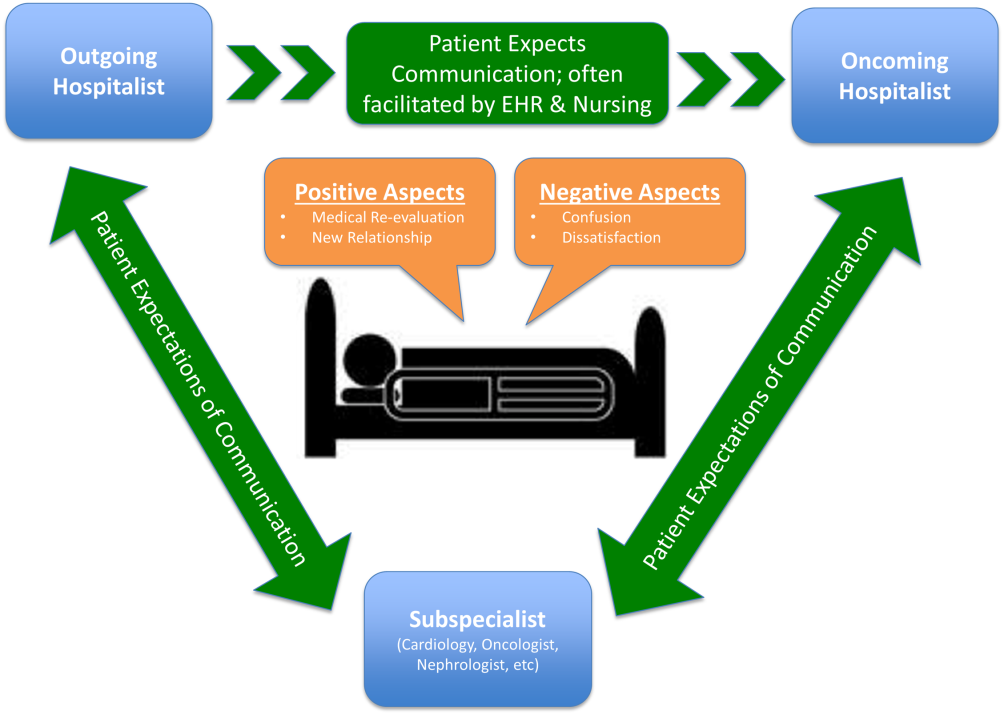

Service handoffs, in which a physician hands over treatment responsibility for a panel of patients and is not expected to return, are one type of handoff that contributes to inpatient discontinuity. Service handoffs between hospitalists are especially common and inherently risky, because they sever an established relationship during a patient's hospitalization. Because evidence on the effects of service handoffs is lacking, current guidelines offer only limited recommendations.[5] Although several recent studies have begun to explore the effects of these handoffs, no prior study has examined this issue from the patient's perspective.[6, 7, 8]

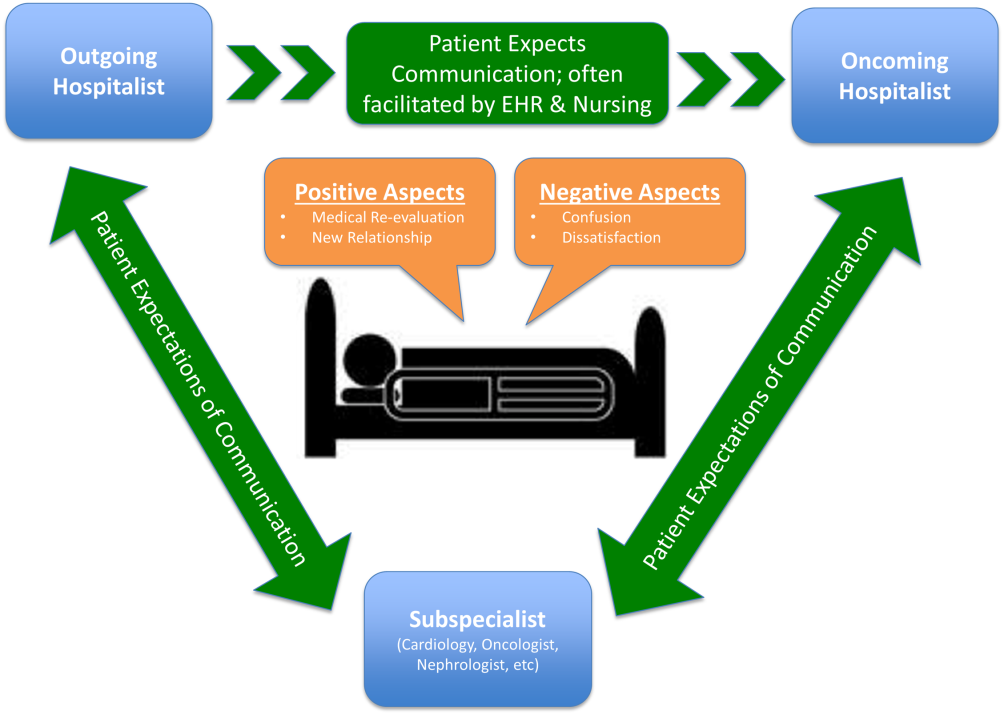

Patients are uniquely positioned to inform us about their experiences of care transitions. Furthermore, with patient satisfaction now affecting Medicare reimbursement rates, patients' experiences in the hospital are becoming even more significant.[9] Despite this emphasis on patient-centered care, no study has explored hospitalized patients' experiences with hospitalist service handoffs. Our goal was to qualitatively assess hospitalized patients' experiences with transitions between hospitalists in order to develop a conceptual model to inform future work on improving inpatient transitions of care.

METHODS

Sampling and Recruitment

We conducted bedside interviews of hospitalized patients at an urban academic medical center from October 2014 through December 2014. The hospitalist service consists of a physician and an advanced nurse practitioner (ANP) who divide a panel of patients consisting of general medicine and subspecialty patients, who are often comanaged with hepatology, oncology, and nephrology subspecialists. We used purposive sampling to select patients who could comment on their experience with a hospitalist service transition, as follows: 48 hours after a service handoff (ie, after an outgoing physician completed 1 week on service and transferred care of the patient to a new oncoming hospitalist), oncoming hospitalists were approached and asked whether any patient on their service had experienced a service handoff and remained in the hospital. A 48-hour period was chosen to give patients time to become familiar with their new hospitalist so that they could properly comment on the handoff. Patients who were managed by the ANP, who were non-English speaking, or who were judged to have an altered mental status based on clinical suspicion by the interviewing physician (C.M.W.) were excluded. Following each weekly service transition, a list of patients who met these criteria was collected from 4 nonteaching hospitalist services, and these patients were approached by the primary investigator (C.M.W.) and asked whether they would be willing to participate. All patients were general medicine patients, and no exclusions were made based on physical location within the hospital. Those who agreed provided signed written consent before participation, including consent for study personnel to access their electronic health records (EHRs).
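The eligibility screen described above can be summarized as a single predicate. The sketch below is illustrative only: the `Patient` record, its field names, and the `>= 48` threshold check are hypothetical simplifications (the study screened patients at the 48-hour mark rather than filtering a database), and written consent remains a separate step after screening.

```python
from dataclasses import dataclass

# Hypothetical patient record; field names are illustrative assumptions,
# not the study's data schema.
@dataclass
class Patient:
    hours_since_service_handoff: float
    still_hospitalized: bool
    managed_by_anp: bool
    english_speaking: bool
    altered_mental_status: bool

# Assumed threshold: time allowed for patients to become familiar
# with the new hospitalist before screening.
HANDOFF_WAIT_HOURS = 48

def is_eligible(p: Patient) -> bool:
    """True if the patient meets the inclusion criteria and no exclusion criterion."""
    return (
        p.hours_since_service_handoff >= HANDOFF_WAIT_HOURS  # experienced a service handoff
        and p.still_hospitalized                              # remained in the hospital
        and not p.managed_by_anp                              # ANP-managed patients excluded
        and p.english_speaking                                # non-English speakers excluded
        and not p.altered_mental_status                       # altered mental status excluded
    )

# Usage with made-up patients: only the first passes the screen.
patients = [
    Patient(50, True, False, True, False),  # eligible
    Patient(50, True, True, True, False),   # excluded: managed by ANP
    Patient(24, True, False, True, False),  # excluded: screened too soon after handoff
]
eligible = [p for p in patients if is_eligible(p)]
print(len(eligible))  # prints 1
```

Expressing the criteria as one boolean function keeps each inclusion and exclusion rule on its own line, which makes it easy to check the screen against the protocol text.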

Data Collection

Patients were administered a 9-question semistructured interview, informed by expert opinion and existing literature, that was developed to elicit their perspective on their transition between hospitalists.[10, 11] No formal changes were made to the interview guide during the study period, and all patients were asked the same questions. Findings from interim analysis guided further probing in subsequent interviews to increase the depth of patient responses (eg, “Can you explain your response in greater depth?”). Before the interview, patients were read a description of a hospitalist and were reminded which hospitalists had cared for them during their stay (see Supporting Information, Appendix 1, in the online version of this article). If family members or a caregiver were present at the time of the interview, they were asked not to comment. No repeat interviews were carried out.

All interviews were performed privately in single-occupancy rooms, digitally recorded using an iPad (Apple, Cupertino, CA), and professionally transcribed verbatim (Rev, San Francisco, CA). All analysis was performed using MAXQDA software (VERBI Software GmbH, Berlin, Germany). We obtained demographic information about each patient through chart review.

Data Analysis

We used grounded theory, taking an inductive approach with no a priori hypothesis.[12] The constant comparative method was used to generate emerging and recurring themes.[13] Units of analysis were sentences and phrases. Our research team consisted of 4 academic hospitalists: 2 with backgrounds in clinical medicine, medical education, and qualitative analysis (J.M.F., V.M.A.), 1 clinician (C.M.W.), and 1 with a background in health economics (D.O.M.). Interim analysis was performed weekly (C.M.W.), during which a coding template was created and refined through an iterative process (C.M.W., J.M.F.). All disagreements in coded themes were resolved through group discussion until full consensus was reached. Each week, responses were assessed for thematic saturation.[14] Interviews continued as long as new themes arose during this analysis, and data collection ended once no new topics emerged from participants. A summary of all themes was then presented to a group of 10 patients who met the same inclusion criteria, for respondent validation and member checking. Reporting followed the Standards for Reporting Qualitative Research, with additional guidance from the Consolidated Criteria for Reporting Qualitative Research.[15, 16] The University of Chicago Institutional Review Board approved this protocol.
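The weekly stopping rule (continue while new themes arise, stop once none do) can be sketched as a loop over sets of coded themes. This is a toy illustration of the rule only, under stated assumptions: coding itself is a qualitative, consensus-based judgment that no function replaces, the theme labels in the usage example are hypothetical, and the sketch assumes a single week without new themes is enough to stop, which the text does not specify.

```python
from typing import Iterable, Optional, Set

def saturation_week(weekly_themes: Iterable[Set[str]]) -> Optional[int]:
    """Return the 1-based index of the first week whose coded themes add
    nothing new to the themes seen so far, or None if every week added one.

    Assumption: one week with no new themes counts as saturation.
    """
    seen: Set[str] = set()
    for week, themes in enumerate(weekly_themes, start=1):
        new_themes = themes - seen
        if week > 1 and not new_themes:
            return week  # no new topics emerged: stop data collection
        seen |= themes   # accumulate themes coded so far
    return None          # still finding new themes: keep interviewing

# Hypothetical theme labels, for illustration only.
weeks = [
    {"communication", "trust"},
    {"trust", "awareness of handoff"},
    {"communication"},
]
print(saturation_week(weeks))  # prints 3
```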

RESULTS

In total, 43 eligible patients were recruited, and 40 (93%) agreed to participate. Interviewed patients had a mean (SD) age of 54.5 (15) years, and 39% were between 51 and 65 years old; most were female (65%) and African American (58%). At the time of interview, the median length of stay was 6.5 days (interquartile range [IQR]: 4–8), and a median of 2.0 (IQR: 1–3) hospitalists had overseen each patient's care (Table 1). Interview durations ranged from 10:25 to 25:48 (minutes:seconds), with a mean of 15:32.

| Characteristic | Value |
|---|---|
| Response rate, n (%) | 40/43 (93) |
| Age, mean ± SD, y | 54.5 ± 15 |
| Sex, n (%) | |
| Female | 26 (65) |
| Male | 14 (35) |
| Race, n (%) | |
| African American | 23 (58) |
| White | 16 (40) |
| Hispanic | 1 (2) |
| Median LOS at time of interview, d (IQR) | 6.5 (4–8) |
| Median no. of hospitalists at time of interview, n (IQR) | 2.0 (1–3) |

Abbreviations: IQR, interquartile range; LOS, length of stay; SD, standard deviation.
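The percentages in Table 1 follow directly from the counts and the denominator of 40 interviewed patients (43 for the response rate). The sketch below recomputes them. The rounding convention is an assumption, since the article does not state one: Python's built-in `round()` rounds halves to the nearest even integer, which reproduces every reported value (57.5 becomes 58 and 2.5 becomes 2), whereas conventional round-half-up would give 3% rather than 2% for the single Hispanic patient.

```python
# Counts as reported in Table 1; denominator is the 40 interviewed patients.
N = 40
counts = {
    "Female": 26,
    "Male": 14,
    "African American": 23,
    "White": 16,
    "Hispanic": 1,
}

def percent(k: int, n: int) -> int:
    """Whole-number percentage of k out of n.

    Assumption: round-half-to-even (Python's built-in round), which is not
    stated in the article but matches every reported percentage.
    """
    return round(100 * k / n)

for label, k in counts.items():
    print(f"{label}: {k} ({percent(k, N)})")

print(f"Response rate: 40/43 ({percent(40, 43)})")
```

Run as written, this prints 65, 35, 58, 40, and 2 for the demographic rows and 93 for the response rate, matching the table.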