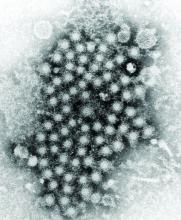

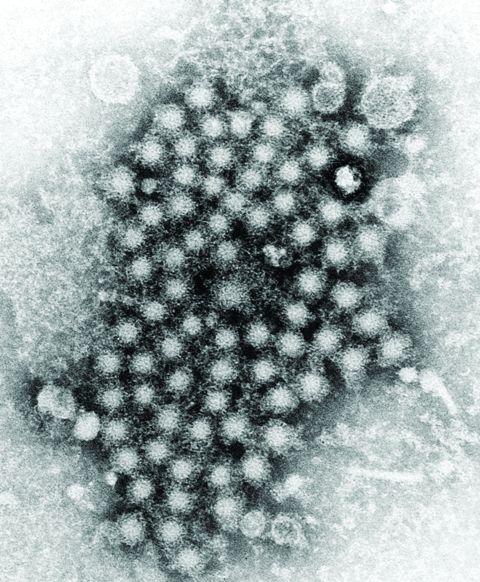

HCC linked to mitochondrial damage, iron accumulation from HCV

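Development of hepatocellular carcinoma (HCC) is linked to mitochondrial damage and iron accumulation resulting from hepatitis C virus infection, according to an extensive literature review.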

Although the mechanisms underlying hepatocellular carcinoma development are not fully understood, oxidative stress is known to be greater in hepatitis C virus (HCV) infection than in other inflammatory liver diseases. Such stress has been proposed as a major mechanism of liver injury in patients with chronic HCV, the authors reported in Free Radical Biology and Medicine.

Patients with HCV have significant hepatocellular mitochondrial alterations, and iron accumulation is also a well-known characteristic of chronic HCV. Both are closely related to oxidative stress, since the mitochondria are the main site of reactive oxygen species generation, and iron produces hydroxyl radicals via the Fenton reaction, according to the review.

“The greatest concern is whether mitochondrial damage and iron metabolic dysregulation persist even after HCV eradication and to what extent such pathological conditions affect the development of HCC. Determining the molecular signaling that underlies the mitophagy induced by iron depletion is another topic of interest and is expected to lead to potential therapeutic approaches for multiple diseases,” the researchers concluded.

Support was from the Research Program on Hepatitis from the Japan Agency for Medical Research and Development. The authors reported no disclosures.

SOURCE: Keisuke H et al. Free Radic Biol Med. 2019;133:193-9.

FROM FREE RADICAL BIOLOGY AND MEDICINE

Soft bedding most common source of accidental suffocation in infants

Soft bedding is the most common source of accidental suffocation in infants, according to research published in Pediatrics.

Alexa B. Erck Lambert, MPH, of DB Consulting Group in Silver Spring, Md., and her associates conducted an analysis of 1,812 cases of sudden unexpected infant death (SUID) in children aged 1 year or less included in a Centers for Disease Control and Prevention registry. Of those 1,812 SUID cases, 250 (14%) were classified as accidental suffocation.

Airway obstruction by soft bedding was by far the most common mechanism of accidental suffocation, contributing to death in 69% of cases. Overlay was implicated in 19% of cases and wedging in 12%. The median ages at death from soft bedding, overlay, and wedging were 3 months, 2 months, and 6 months, respectively. The majority of infants were male (55%), born after at least 37 weeks’ gestation (81%), non-Hispanic white or African American (74%), and insured by Medicaid (70%).

In deaths attributed to soft bedding, 49% occurred while the infant was in an adult bed, 92% occurred while the infant was in a nonsupine position, and 34% occurred while a blanket was obstructing the airway. While infants aged 5-11 months were twice as likely to have had a blanket obstructing their airway as infants aged 0-4 months (55% vs. 27%), younger infants were twice as likely to have had a pillow or couch cushion obstructing their airway (25% vs. 11%).

Of the 51 overlay deaths, 71% occurred in an adult’s bed, 51% involved an infant found nonsupine, and 41% occurred in a bed with more than one adult. Most deaths were attributed to neck or chest compression, rather than nose or mouth obstruction. Of the 33 wedging deaths, 45% involved a shared sleep surface and 73% occurred in an adult bed; infants were most commonly wedged between a mattress and a wall.

“The safest place for infants to sleep is on their backs, on an unshared sleep surface, in a crib or bassinet in the caregivers’ room, and without soft bedding in their sleep area,” the investigators wrote. “Improving our understanding of the characteristics and risk factors ... of suffocation deaths by mechanism of airway obstruction can inform the development of more targeted strategies to prevent these injuries and deaths.”

The authors reported no potential conflicts of interest. Ms. Erck Lambert was supported by a contract between her employer and the Centers for Disease Control and Prevention. Meghan Faulkner’s agency also received funds from the Centers for Disease Control and Prevention.

SOURCE: Erck Lambert AB et al. Pediatrics. 2019 Apr 22. doi: 10.1542/peds.2018-3408.

FROM PEDIATRICS

Employee Wellness Programs: Location, Location, Location

Employee wellness programs (EWPs) have a good track record, with plenty of affirmative research showing benefits: lowered stress levels, fewer sick days, reduced absenteeism, health care savings. Studies have found that for every dollar spent on an EWP, medical costs fall by $3 to $6. Moreover, studies have found that organizations that invest in EWPs have higher rates of employee satisfaction, morale, and retention.

But does one size of EWP fit all needs? Not according to researchers from Northern Arizona University. They studied factors that go into successful EWPs and suggest that geography should play a big part in decision making, from whether to have an EWP to what it should offer. Take Houston, Texas, for instance, where influenza lasts longer than in most other places. Geographic and climatologic variables like those, the researchers say, necessitate “tailored wellness responses,” such as spending more money on outreach and educational programs to make sure people are prepared for seasonal outbreaks.

The researchers endorse the idea of “individualism in city preferences”: that cities maximize their wellness offerings by taking advantage of the characteristics of the spaces, cultures, and lifestyles unique to them. The researchers note that cities with a high incidence of obesity, such as Memphis, Tennessee, and Louisville, Kentucky, tend to put more money into weight-loss programs. Cities with more elderly workers, like Phoenix, Arizona, and Jacksonville, Florida, have “robust programs” for managing chronic diseases associated with age. Stress management may be a priority in impoverished areas. Similarly, EWPs need to factor in geography to make sure the programs meet local needs. Some cities have more and longer days with sunshine: How much of the year can people be active outside? Some have farmland nearby: EWPs can encourage eating healthy locally sourced foods. It is significant, the researchers say, that EWPs in “lower obesity” regions have a proportionately higher intake of activities associated with the outdoors.

Geography is only one consideration among many, the researchers emphasize. City leadership, commitment, and incentive structures lead to investments in recreation and healthy-living infrastructure, which, in turn, lead to higher livability rankings and quality-of-life indexes, greater workforce productivity, and new business. Those lead to more community involvement and cohesion and to reduced reliance on public health care facilities. And ultimately, the researchers say, the components of a successful EWP all lead to a positive impact on health and longevity.

FDA approves IL-23 inhibitor risankizumab for treating plaque psoriasis

Risankizumab, an interleukin-23 inhibitor, has been approved by the Food and Drug Administration for treating moderate to severe plaque psoriasis in adults who are candidates for systemic therapy or phototherapy, the manufacturer announced on April 23.

Risankizumab selectively inhibits interleukin-23 (IL-23), a key inflammatory protein, by binding to its p19 subunit. The drug is administered at a dose of 150 mg, in two subcutaneous injections, every 12 weeks, after starting doses at weeks 0 and 4. It will be available in early May, according to an AbbVie press release announcing the approval.

The approval was based in part on data from two phase 3, 2-year studies. In UltIMMA-1 and UltIMMA-2, at 16 weeks, 75% of risankizumab patients in both studies achieved a Psoriasis Area and Severity Index 90 (PASI 90) response, compared with 5% and 2% of those on placebo, respectively. These results were published in 2018 (Lancet. 2018 Aug 25;392[10148]:650-61).

At 1 year, 82% and 81% of those treated with risankizumab in the two studies achieved a PASI 90, and 56% and 60% achieved a PASI 100, respectively, according to the company.

Approval was also based on additional phase 3 studies, IMMhance and IMMvent.

Upper respiratory infections were among the most common adverse events associated with risankizumab in trials, reported in 13%, according to the company. Other adverse events associated with treatment included headache (3.5%), fatigue (2.5%), injection site reactions (1.5%), and tinea infections (1.1%). The AbbVie release states that candidates for treatment should be evaluated for tuberculosis before starting therapy, and patients should be instructed to report signs and symptoms of infection.

Risankizumab, which will be marketed as Skyrizi, was recently approved in Canada for the same indication, and in Japan, for plaque psoriasis, generalized pustular psoriasis, erythrodermic psoriasis and psoriatic arthritis in adults. It currently is under review in Europe.

AbbVie and Boehringer Ingelheim are collaborating on the development of risankizumab, according to an AbbVie press release. Studies of risankizumab for treatment of psoriatic arthritis and Crohn’s disease are underway.

Ixekizumab posts positive results in phase 3 nr-axSpA trial

Eli Lilly has announced positive results from COAST-X, a 52-week, placebo-controlled, phase 3 trial evaluating ixekizumab (Taltz) in biologic disease-modifying antirheumatic drug–naive patients with nonradiographic axial spondyloarthritis (nr-axSpA).

Ixekizumab met the primary endpoint of statistically significant improvement in nr-axSpA symptoms, as measured by Assessment of Spondyloarthritis International Society 40 response at both week 16 and week 52, compared with placebo. The drug also met all secondary endpoints, including significant improvement in Ankylosing Spondylitis Disease Activity Score, significant improvement in Bath Ankylosing Spondylitis Disease Activity Index, proportion of patients achieving low disease activity, significant improvement in sacroiliac joint inflammation as assessed by MRI, and significant improvement in 36-Item Short Form Health Survey Physical Component Summary score.

The safety profile of ixekizumab was broadly similar to what has been seen in previous phase 3 trials; the most common adverse events include injection site reactions, upper respiratory tract infections, nausea, and tinea infections, the company said.

“Nonradiographic axSpA is a challenging diagnosis that is not only missed in clinics, but also has limited treatment options for physicians to offer patients. The COAST-X results offer compelling evidence that Taltz could provide a much-needed new alternative if approved for this patient population,” Atul A. Deodhar, MD, professor of medicine at Oregon Health & Science University, Portland, and clinical investigator for the COAST program, said in the press release.

Find the full press release on the Eli Lilly website.

Looking back at 10 years of the AGA Center for GI Innovation and Technology

SAN FRANCISCO – Jay Pasricha, MD, director of the Johns Hopkins Center for Neurogastroenterology, in Baltimore, reminisced about the early days of the AGA Center for GI Innovation and Technology in an interview at the AGA Tech Summit. “I was a founder,” he said, “along with Joel Brill and others.”

He goes back to when the idea was first pitched to the AGA Institute Council in 2009 as a technology center. He recalls that the first summit was held in Palo Alto, Calif., and that it was a “terrific success” because it filled a void. Dr. Pasricha said that the CGIT has fulfilled most if not all of its early expectations and – in some cases – went beyond expectations. Importantly, it transformed how people thought about GI as a specialty – GI was considered a risk-averse specialty previously. CGIT helped to develop relationships with many stakeholders, including the Food and Drug Administration. Dr. Pasricha predicts that CGIT will continue to do well because of its leadership and because AGA is completely invested in its success.

REPORTING FROM 2019 AGA TECH SUMMIT

Quality of life decrement with salvage ASCT is short-lived

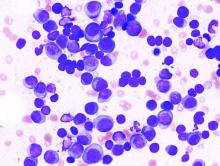

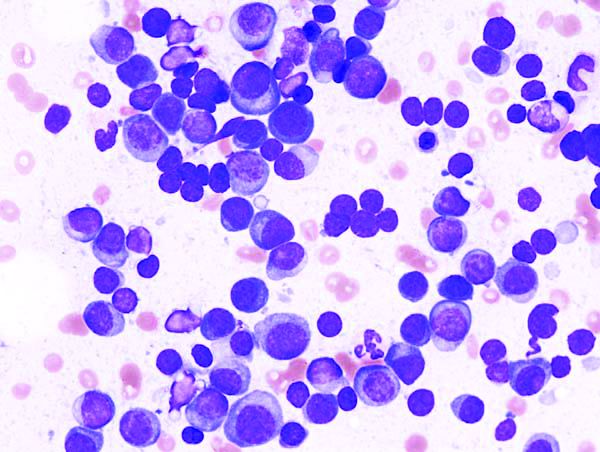

For patients with multiple myeloma in relapse after an autologous stem cell transplant (ASCT), salvage ASCT is associated with reduced quality of life and greater pain in the near term, compared with nontransplantation consolidation (NTC) therapy, a secondary analysis from the United Kingdom’s Myeloma X trial suggested.

But global health status scores for salvage ASCT (sASCT) lagged only in the first 100 days after randomization, whereas pain scores were worse with salvage transplantation in the first 2 years but slightly better thereafter, reported Sam H. Ahmedzai, MBChB, from the University of Sheffield, England, and his colleagues.

“The small and diminishing differences in global health status and side effects of treatment need to be considered alongside the results of Myeloma X, which showed a significant benefit of sASCT on [overall survival]. The benefits of sASCT should be considered alongside the relatively short-term negative effects on [quality of life] and pain when making patient treatment decisions and further support the use of sASCT,” they wrote in the Journal of Clinical Oncology.

The BSBMT/UKMF Myeloma X trial was a multicenter, randomized, phase 3 trial comparing sASCT with weekly oral cyclophosphamide in patients with multiple myeloma who had relapsed after a prior ASCT. In the final overall survival analysis, median overall survival was superior for sASCT, at 67 months vs. 52 months for nontransplantation consolidation (P = .022; hazard ratio, 0.56; P = .0169).

In the current study, the investigators reported on secondary patient-reported pain and quality of life outcomes assessed using the validated European Organization for Research and Treatment of Cancer Questionnaire (QLQ-C30) and its myeloma-specific module, QLQ-MY20; the Brief Pain Inventory (Short Form); and the Leeds Assessment of Neuropathic Symptoms and Signs (Self-Assessment) scale.

Of the 297 patients enrolled, 288 had consented to the quality of life portion of the study, and of this group, 171 (88 assigned to sASCT and 83 assigned to NTC) were included.

After a median follow-up of 52 months, the QLQ-C30 global health status scores were 9.2 points higher (indicating better) for patients in the nontransplantation group (P = .0496) at 100 days after transplantation, but there were no significant differences between the groups for this measure at any later time point.

“This deterioration in global health status for patients receiving sASCT, compared with NTC, dissipated to a trivial difference at 6 months and a smaller trivial difference at 1 year,” Dr. Ahmedzai and his colleagues wrote.

At 2 years, the pendulum had swung to favor sASCT, but also by a “trivial” amount.

Scores on the side effects of treatment subscale were slightly higher (worse) with sASCT at 100 days and 6 months after treatment, but this difference dwindled thereafter.

Pain interference scores adjusted for baseline score and baseline neuropathic pain level were not significantly different 100 days after randomization, but there were significant differences at both 6 months and up to 2 years. At all the time points considered, pain interference scores were approximately 1 point lower in the NTC group, which the authors noted is a clinically relevant difference.

Patients who had undergone sASCT and reported below-median scores on a side-effect subscale had significantly longer time to progression, compared with patients who received NTC (HR, 0.24; P = .003), a difference that held up on multivariable regression analysis (HR, 0.20; P = .0499).

Pain scores were not significantly predictive of either time to progression or overall survival, however.

The study was supported by Cancer Research UK, Janssen-Cilag, and Chugai Pharma UK. Dr. Ahmedzai reported honoraria, consulting, research funding, and travel fees from various companies, not including the study sponsors.

SOURCE: Ahmedzai SH et al. J Clin Oncol. 2019 Apr 10. doi: 10.1200/JCO.18.01006.

FROM THE JOURNAL OF CLINICAL ONCOLOGY

Make your evaluations and progress notes sing

I was talking to a physical therapy (PT) colleague, and she was lamenting how much she hated doing documentation on patients she was treating. I suggested to her that she make her evaluations and progress notes sing. This is a concept I would sometimes use with patients who might be depressed; for example, I would ask them if anything made their heart sing to get an idea of how “depressed” they might be. If they were unhappy or sad, I would advise that they engage in “heart singing” activities and behaviors, as I believe it is the “simple pleasures” in life that keep us resilient and persistent.

It is funny: When I was a resident working in Jackson Park Hospital’s first psychiatric ward in 1972, I wrote in a note one day, “I am going to give this acutely psychotic patient the big T – Thorazine to help them get some sleep at night.” I did not really think much about it until one of the nurses brought it to my attention because she thought it was unique – and a funny way of reporting plans in my progress notes.

My PT colleague told me that she remembered the first time she read one of my notes on a patient we were treating together (she needed to know the patient’s psychiatric status before she engaged them in physical therapy), and it struck her that I reported the patient was “befuddled,” and she wondered who would use befuddled in a note (lately, I have started using “flummoxed”). Another time, I was charting on a patient, and I used the word “flapdoodle” to describe the nonsense the patient was spewing (I recall this particular patient told me they graduated from grammar school at 5 years old). Another favorite word of mine that I use to describe nonsense is “claptrap.”

So, I have been making my evaluations and progress notes sing for a very long time, as doing so improves my writing skills, stimulates my thinking, turns the drudgery of charting into some fun, and creates an adventure in writing.

I have also been a big user of mental status templates to cut down my time. The essential elements of a mental status are in the narrative template, and all I need to do is to edit the verbiage in the template to fit the patient’s presentation so that the mental status sings. Early on, I understood that, to be a good psychiatrist, you needed a good vocabulary so you can speak with as much precision as possible when describing a patient’s mental status.

I was seeing many Alzheimer’s patients at one point. So I developed a special mental status template for them (female and male), so all I had to do was cut and paste, and then edit the template to fit the patient like a glove. Template example: This is a xx-year-old female who was appropriately groomed and who was cooperative with the interview, but she could not give much information. She was not hyperactive or lethargic. Her mood was bland, and her affect was flat and bland. Her speech did not contain any relevant information. Thought processes were not evident, although she was awake. I could not get a history of delusions or current auditory or visual hallucinations. Her thought content was nondescript. She was attentive, and her recent and remote memory were poor. Clinical estimate of her intelligence could not be determined. Her judgment and insight were poor. I could not determine whether there was any suicidal or homicidal ideation.

Formulation: This is a xx-year-old female who has a major neurocognitive disorder (formerly known as dementia). She is not overtly psychotic, suicidal, homicidal, or gravely disabled, but her level of functioning leaves a lot to be desired, which is why she needs a sheltered living circumstance.

Dx: Major neurocognitive disorder (formerly known as dementia).

Here’s another example: This is a xx-year-old male who was appropriately groomed and who was cooperative with the interview. He was not hyperactive or lethargic. His mood was euthymic and he had a wide range of affect as he was able to smile, get serious, and be sad (about xxx). His speech was relevant, linear, and goal directed. Thought processes did not show any signs of loose associations, tangentiality, or circumstantiality. He denies any delusions or current auditory or visual hallucinations. His thought content was surrounding xxx. He was attentive, and his recent and remote memory were intact. Clinical estimate of his intelligence was xxx average. His judgment and insight were poor as xxx. No report of suicidal or homicidal ideation.

Formulation: xxx. He is not overtly psychotic, suicidal, homicidal, or gravely disabled so I will clear him for psychiatric discharge.

Dx: xxx.

Just me trying to make work a little easier for myself and everyone else.

Dr. Bell is a staff psychiatrist at Jackson Park Hospital’s Medical/Surgical-Psychiatry Inpatient Unit in Chicago, clinical psychiatrist emeritus in the department of psychiatry at the University of Illinois at Chicago, former president/CEO of Community Mental Health Council, and former director of the Institute for Juvenile Research (birthplace of child psychiatry), also in Chicago.

Smartphone interventions benefit schizophrenia patients

Mobile devices viewed as a unique opportunity

ORLANDO – Smartphones offer a way to give people with schizophrenia access to immediate medical guidance in times of need and clinicians a convenient way to check in with patients, an expert said at the annual congress of the Schizophrenia International Research Society.

Dror Ben-Zeev, PhD, professor of psychiatry and behavioral sciences at the University of Washington, Seattle, said that, despite the many differences in the habits and experiences of people with schizophrenia, there do not appear to be many differences in the way they use mobile technology, compared with the general population.

Even as far back as 2012, when smartphone technology was much less widely adopted, a survey that he and his colleagues conducted of patients in the Chicago area found that 63% of schizophrenia patients had a mobile device. Ninety percent of them said they used the device to talk, one-third used it for text messaging, and 13% used it to browse the Internet.

More recently, a meta-analysis of 15 studies, published in 2016, found that, among those with psychotic disorders who were surveyed since 2014, 81% owned a mobile device. A majority said they favored using mobile technology for contact with medical services and for supporting self-management (Schizophr Bull. 2016 Mar;42[2]:448-55).

“Do they own phones? Do they use phones? Absolutely, they do,” Dr. Ben-Zeev said. “And in a surprising way, it might be one of the areas where the gap between psychotic illness and the general population is close to nonexistent.”

FOCUS, a smartphone app designed for easy use by patients to allow them to quickly cope with symptoms and to allow clinicians to ask how they’re doing, has helped to improve patient symptoms, Dr. Ben-Zeev said. Patients receive three daily prompts to check in with the app, which offers a chance to report symptoms. It also offers “on-demand” resources 24 hours a day for help with handling voices, social challenges, medications, sleep issues, and mood difficulties.

When patients report hearing voices, for instance, they are asked to describe them in multiple choice fashion, including an option to supply their own description. If a patient reports that, for example, the voices “know everything,” the app asks them to think of a time when the voices were sure something would happen, but it didn’t. The app also offers videos in which therapists give advice to help patients with symptoms.

In a 30-day trial, participants used the FOCUS app an average of five times a day in the previous week, and 63% of the uses were participant initiated rather than app initiated. Positive and Negative Syndrome Scale scores (77.6 vs. 71.5; P less than .001) and depression scores (19.7 vs. 13.9; P less than .01) were both significantly improved after the trial, compared with before (Schizophr Bull. 2014 Nov;40[6]:1244-53).

Meanwhile, a 3-month randomized, controlled trial comparing the FOCUS intervention with Wellness Recovery Action Plan (WRAP), a clinic-based group intervention, had what Dr. Ben-Zeev referred to in an interview as “very compelling findings” (Psychiatr Serv. 2018 Sep 1;69[9]:978-85). That study, led by Dr. Ben-Zeev and his colleagues, found that participants with serious mental illness who were assigned to FOCUS were more likely than those assigned to WRAP to begin treatment (90% vs. 58%) and to remain fully engaged in care over an 8-week period.

Researchers are also exploring the benefits of a program called CrossCheck, in which patients’ use of smartphones relays information that could predict a psychosis relapse (Psychiatr Rehab J. 2017 Sep;40[3]:266-75). For instance, use in the middle of the night indicates sleeping difficulties, and location data could indicate a change in residence. Both are warning signs of a possible impending relapse.

“There is a unique opportunity,” Dr. Ben-Zeev said, “to leverage this status to try to improve what we do.”

Dr. Ben-Zeev also is codirector of the university’s Behavioral Research in Technology and Engineering Center and director of the mHealth for Mental Health Program, a research collaborative that focuses on developing, evaluating, and implementing mobile technologies. Dr. Ben-Zeev has a licensing and consulting agreement with Pear Therapeutics and a consulting agreement with eQuility.

EXPERT ANALYSIS FROM SIRS 2019

Blunted cardiac reserve strongly predicts incident hepatorenal syndrome

VIENNA – Patients with cirrhosis who had low cardiac reserve had a nearly fourfold increased rate of developing hepatorenal syndrome (HRS) during an average 17 months of follow-up, compared with patients with normal cardiac reserve, in a review of 560 Australian patients assessed for a possible liver transplant.

The findings suggest that patients with advanced liver disease should routinely undergo assessment for low cardiac reserve, Anoop N. Koshy, MBBS, said at the meeting sponsored by the European Association for the Study of the Liver.

Dr. Koshy, a cardiologist with Austin Health in Melbourne, said, “We propose that it’s not low cardiac output that leads to HRS, but an inability of patients to increase their cardiac output” in response to usual stimuli.

The findings also add to the concerns about using nonselective beta-blocker drugs in patients with cirrhosis because of the potential of these drugs to further blunt increases in cardiac output; they also suggest that noninvasive measurement of cardiac reserve could identify patients with low cardiac reserve who could benefit from closer monitoring and new approaches to treatment, he suggested. About 10%-30% of patients with cirrhosis develop HRS, and the new finding suggests a noninvasive way to identify patients with the highest risk for this complication.

The study included 560 consecutive patients with cirrhosis and end-stage liver disease who were awaiting a liver transplant at the Victoria Liver Transplant Unit in Melbourne and underwent assessment by stress echocardiography using low-dose dobutamine (10 mcg/kg per min) during 2010-2017 as part of their standard pretransplant work-up. Exclusion of patients with known cardiac disease prior to their stress echo examination or incomplete measurement left 488 patients, of whom 424 were free from HRS at baseline. Patients with HRS at the time of their stress echo assessment had on average a cardiac output that was about 25% higher than patients without HRS, a statistically significant difference driven by both a significantly increased heart rate and stroke volume.

Among the 424 patients free from HRS at baseline, 85 developed HRS during an average 17-month follow-up. After adjustment for several clinical and echocardiographic baseline variables, patients with low cardiac reserve after dobutamine challenge, defined as an increase in cardiac output of less than 25%, had a 3.9-fold increased rate of incident HRS during follow-up, compared with patients who had a larger rise in their cardiac output, Dr. Koshy reported. In this analysis low cardiac reserve was the strongest predictor of subsequent HRS, he said.
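The definition of low cardiac reserve used in the study, a rise in cardiac output of less than 25% after dobutamine challenge, can be illustrated with a short calculation. The sketch below is not the investigators' method: the function names are hypothetical, the example values are illustrative rather than study data, and it assumes cardiac output is derived in the standard way as heart rate multiplied by stroke volume.

```python
def cardiac_output_l_per_min(heart_rate_bpm: float, stroke_volume_ml: float) -> float:
    """Cardiac output in L/min from heart rate (beats/min) and stroke volume (mL)."""
    return heart_rate_bpm * stroke_volume_ml / 1000.0


def cardiac_reserve_pct(rest_co: float, stress_co: float) -> float:
    """Percentage increase in cardiac output from rest to dobutamine stress."""
    if rest_co <= 0:
        raise ValueError("resting cardiac output must be positive")
    return (stress_co - rest_co) / rest_co * 100.0


def has_low_cardiac_reserve(rest_co: float, stress_co: float,
                            threshold_pct: float = 25.0) -> bool:
    """True when the rise in cardiac output is below the threshold.

    The 25% default follows the definition reported in the article;
    everything else here is an illustrative assumption.
    """
    return cardiac_reserve_pct(rest_co, stress_co) < threshold_pct


# Illustrative values only (not patient data from the study).
rest = cardiac_output_l_per_min(heart_rate_bpm=70, stroke_volume_ml=80)
stress = cardiac_output_l_per_min(heart_rate_bpm=80, stroke_volume_ml=82)
print(f"reserve: {cardiac_reserve_pct(rest, stress):.1f}%")
print("low cardiac reserve:", has_low_cardiac_reserve(rest, stress))
```

In this hypothetical example the rise in cardiac output falls short of the 25% threshold, so the patient would be classified as having low cardiac reserve under the reported definition.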

Dr. Koshy had no disclosures.