Hospitalist and Internal Medicine Leaders’ Perspectives of Early Discharge Challenges at Academic Medical Centers

The discharge process is a critical bottleneck for efficient patient flow through the hospital. Delayed discharges translate into delays in admissions and other patient transitions, often leading to excess costs, patient dissatisfaction, and even patient harm.1-3 The emergency department is particularly affected by these delays; bottlenecks there lead to overcrowding, increased overall hospital length of stay, and increased risk of adverse outcomes during hospitalization.2

Academic medical centers in particular may struggle with delayed discharges. In a typical teaching hospital, a team composed of an attending physician and housestaff shares responsibility for determining the discharge plan. Additionally, clinical teaching activities may affect the process and quality of discharge.4-6

The prevalence and causes of delayed discharges vary greatly.7-9 To improve efficiency around discharge, many hospitals have launched initiatives designed to discharge patients earlier in the day, including goal setting (“discharge by noon”), scheduling discharge appointments, and using quality-improvement methods, such as Lean Methodology (LEAN), to remove inefficiencies within discharge processes.10-12 However, there are few data on the prevalence and effectiveness of different strategies.

The aim of this study was to survey academic hospitalist and general internal medicine physician leaders to elicit their perspectives on the factors contributing to discharge timing and the relative importance and effectiveness of early-discharge initiatives.

METHODS

Study Design, Participants, and Oversight

From Vizient Inc. (formerly University HealthSystem Consortium), an alliance of academic medical centers and affiliated hospitals, we obtained a list of 115 university-affiliated hospitals associated with a residency program and, in most cases, a medical school. Each member institution submits clinical data to allow for the benchmarking of outcomes to drive transparency and quality improvement.13 More than 95% of the nation’s academic medical centers and affiliated hospitals participate in this collaborative. Vizient works with members but does not set or promote quality metrics, such as discharge timeliness. We obtained e-mail addresses for the hospital medicine physician leader (eg, division chief) at each institution from publicly available sources (eg, the institution’s website). When an institution did not have a hospital medicine section, we identified the division chief of general internal medicine. The University of California, San Francisco Institutional Review Board approved this study.

Survey Development and Domains

We developed a 30-item survey to evaluate 5 main domains of interest: current discharge practices, degree of prioritization of early discharge on the inpatient service, barriers to timely discharge, prevalence and perceived effectiveness of implemented early-discharge initiatives, and barriers to implementation of early-discharge initiatives.

Respondents were first asked to identify their institutions’ goals for discharge time. They were then asked to compare the priority of early-discharge initiatives to other departmental quality-improvement initiatives, such as reducing 30-day readmissions, improving interpreter use, and improving patient satisfaction. Next, respondents were asked to estimate the degree to which clinical or patient factors contributed to delays in discharge. Respondents were then asked whether specific early-discharge initiatives, such as changes to rounding practices or communication interventions, were implemented at their institutions and, if so, the perceived effectiveness of these initiatives at meeting discharge targets. We piloted the questions locally with physicians and researchers prior to finalizing the survey.

Data Collection

We sent surveys via an online platform (Research Electronic Data Capture).14 Nonresponders received 2 e-mail reminders followed by a telephone call asking them to complete the survey. Only 1 survey per academic medical center was collected. Any respondent who completed the survey within 2 weeks of receiving it was entered into a drawing for a Kindle Fire.

Data Analysis

We summarized survey responses using descriptive statistics. Analysis was completed in IBM SPSS version 22 (Armonk, NY).

RESULTS

Survey Respondent and Institutional Characteristics

Of the 115 institutions surveyed, we received 61 responses (response rate of 53%), with 39 (64%) respondents from divisions of hospital medicine and 22 (36%) from divisions of general internal medicine. A majority (n = 53; 87%) stated their medicine services have a combination of teaching (with residents) and nonteaching (without residents) teams. Thirty-nine (64%) reported having daily multidisciplinary rounds.
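The percentages reported in the Results are simple proportions of the stated counts. The sketch below recomputes the figures in the preceding paragraph under two assumptions. First, each percentage uses all 61 respondents as its denominator, so an item that some respondents skipped would have a smaller one. Second, values are rounded half-up to whole numbers. The helper names `pct` and `fmt` are hypothetical and illustrative; they are not part of the study's SPSS analysis.

```python
from decimal import Decimal, ROUND_HALF_UP

def pct(n: int, total: int) -> int:
    """Percentage of `total` represented by `n`, rounded half-up to a whole number."""
    if total <= 0:
        raise ValueError("total must be positive")
    value = Decimal(100 * n) / Decimal(total)
    return int(value.quantize(Decimal("1"), rounding=ROUND_HALF_UP))

def fmt(n: int, total: int) -> str:
    """Format a count in the 'n (%)' style used in the Results."""
    return f"{n} ({pct(n, total)}%)"

INSTITUTIONS_SURVEYED = 115
RESPONDENTS = 61

# Counts taken from the Results; assumed to share the 61-respondent denominator.
reported = {
    "hospital medicine divisions": 39,
    "general internal medicine divisions": 22,
    "mixed teaching and nonteaching teams": 53,
    "daily multidisciplinary rounds": 39,
}

if __name__ == "__main__":
    print("response rate:", f"{pct(RESPONDENTS, INSTITUTIONS_SURVEYED)}%")
    for label, n in reported.items():
        print(f"{label}: {fmt(n, RESPONDENTS)}")
```

Running the script prints a 53% response rate and, for example, `39 (64%)` for hospital medicine divisions, which matches the text. Python's built-in `round()` rounds exact halves to the nearest even number (`round(12.5)` gives 12), so the sketch uses `decimal` with `ROUND_HALF_UP` instead. None of the counts in this paragraph falls exactly on a half, so either convention yields the same values here.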

Early Discharge as a Priority

Forty-seven (77%) institutional representatives strongly agreed or agreed that early discharge was a priority, with discharge by noon being the most common target time (n = 23; 38%). Thirty respondents (50%) rated early discharge as more important than improving interpreter use for non-English-speaking patients; 29 (48%) rated it as important as reducing 30-day readmissions, and 27 (44%) rated it as important as improving patient satisfaction.

Factors Delaying Discharge

The factors most commonly perceived as delaying discharge were external to the hospital, such as postacute care bed availability or delays in scheduled transport (eg, ambulance) (n = 48; 79%), followed by patient factors such as patient transport issues (n = 44; 72%). Workflow issues, such as competing primary team priorities or case manager bandwidth, were reported less commonly (n = 38; 62%; Table 1).

Initiatives to Improve Discharge

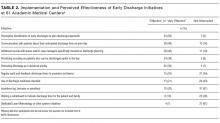

The most commonly implemented initiatives perceived as effective at improving discharge times were the preemptive identification of early discharges to plan discharge paperwork (n = 34; 56%), communication with patients about anticipated discharge time on the day prior to discharge (n = 29; 48%), and the implementation of additional rounds between physician teams and case managers specifically around discharge planning (n = 28; 46%). Initiatives not commonly implemented included regular audit of and feedback on discharge times to providers and teams (n = 21; 34%), the use of a discharge readiness checklist (n = 26; 43%), incentives such as bonuses or penalties (n = 37; 61%), the use of a whiteboard to indicate discharge times (n = 23; 38%), and dedicated quality-improvement approaches such as LEAN (n = 37; 61%; Table 2).

DISCUSSION

Our study suggests that early discharge for medicine patients is a priority among academic institutions. Hospitalist and general internal medicine physician leaders in our study generally attributed delayed discharges to external factors, particularly unavailability of postacute care facilities and transportation delays. Difficulty finding postacute care placements is consistent with previous findings by Selker et al.15 and Carey et al.8 That this difficulty persists more than 2 decades after Selker et al.’s study suggests a continued opportunity for improvement. Possible approaches include stronger partnerships with local and regional postacute care facilities to expedite care transitions and stronger discharge-planning efforts early in the admission process. Efforts in postacute care placement may be particularly important for Medicaid-insured and uninsured patients.

Our respondents, who were hospitalist and general internal medicine physician leaders, did not perceive the additional responsibilities of teaching and supervising trainees as factors that significantly delayed patient discharge. This contrasts with previous studies, which attributed discharge delays to prolonged clinical decision-making related to teaching and supervision.4-6,8 This discrepancy may reflect our having surveyed a single physician leader at each institution rather than residents. Our finding warrants further investigation to understand the degree to which resident skills affect discharge planning and processes.

Institutions represented in our study have attempted a variety of initiatives promoting earlier discharge, with varying levels of perceived success. Initiatives that hospital leaders perceived as most effective centered on 2 main areas: (1) changing individual provider practice and (2) anticipatory discharge preparation. Interestingly, these initiatives do not directly address the main factors respondents identified as delaying discharge, such as obtaining postacute care beds, busy case managers, and competing demands on primary teams. We hypothesize that this is because addressing those factors requires organization- or system-level changes, which are perceived as more arduous than changes at the individual level. In addition, changes to individual provider behavior may be more cost- and time-effective than more systemic initiatives.

Our findings are consistent with the work of Wertheimer and colleagues,11 who showed that additional afternoon interdisciplinary rounds can help identify patients who may be discharged before noon the next day. In their study, identifying such patients in advance improved the overall early-discharge rate the following day.

Our findings should be interpreted in light of several limitations. Our survey considers only the perspectives of hospitalist and general internal medicine physician leaders at academic medical centers that are part of the Vizient Inc. collaborative. These perspectives may not represent all academic or community-based medical centers. Although the perceived effectiveness of some initiatives was high, we did not collect empirical data to support these perceptions or to determine which initiative had the greatest relative impact on discharge timeliness. Lastly, we did not obtain resident, nursing, or case manager perspectives on discharge practices. Because these clinicians are frontline providers, their perspectives may differ from those captured here.

Our study shows there is a strong interest in increasing early discharges in an effort to improve hospital throughput and patient flow.

Acknowledgments

The authors thank all participants who completed the survey and Danielle Carrier at Vizient Inc. (formerly University HealthSystem Consortium) for her assistance in obtaining data.

Disclosures

Hemali Patel, Margaret Fang, Michelle Mourad, Adrienne Green, Ryan Murphy, and James Harrison report no conflicts of interest. At the time the research was conducted, Robert Wachter reported that he is a member of the Lucian Leape Institute at the National Patient Safety Foundation (no compensation except travel expenses); recently chaired an advisory board to England’s National Health Service (NHS) reviewing the NHS’s digital health strategy (no compensation except travel expenses); has a contract with UCSF from the Agency for Healthcare Research and Quality to edit a patient-safety website; receives compensation from John Wiley & Sons for writing a blog; receives royalties from Lippincott Williams & Wilkins and McGraw-Hill Education for writing and/or editing several books; receives stock options for serving on the board of Acuity Medical Management Systems; receives a yearly stipend for serving on the board of The Doctors Company; serves on the scientific advisory boards for amino.com, PatientSafe Solutions Inc., Twine, and EarlySense (for which he receives stock options); has a small royalty stake in CareWeb, a hospital communication tool developed at UCSF; and holds the Marc and Lynne Benioff Endowed Chair in Hospital Medicine and the Holly Smith Distinguished Professorship in Science and Medicine at UCSF.

References

1. Khanna S, Boyle J, Good N, Lind J. Impact of admission and discharge peak times on hospital overcrowding. Stud Health Technol Inform. 2011;168:82-88.

2. White BA, Biddinger PD, Chang Y, Grabowski B, Carignan S, Brown DFM. Boarding inpatients in the emergency department increases discharged patient length of stay. J Emerg Med. 2013;44(1):230-235. doi:10.1016/j.jemermed.2012.05.007.

3. Derlet RW, Richards JR. Overcrowding in the nation’s emergency departments: complex causes and disturbing effects. Ann Emerg Med. 2000;35(1):63-68.

4. da Silva SA, Valácio RA, Botelho FC, Amaral CFS. Reasons for discharge delays in teaching hospitals. Rev Saúde Pública. 2014;48(2):314-321. doi:10.1590/S0034-8910.2014048004971.

5. Greysen SR, Schiliro D, Horwitz LI, Curry L, Bradley EH. “Out of sight, out of mind”: housestaff perceptions of quality-limiting factors in discharge care at teaching hospitals. J Hosp Med. 2012;7(5):376-381. doi:10.1002/jhm.1928.

6. Goldman J, Reeves S, Wu R, Silver I, MacMillan K, Kitto S. Medical residents and interprofessional interactions in discharge: an ethnographic exploration of factors that affect negotiation. J Gen Intern Med. 2015;30(10):1454-1460. doi:10.1007/s11606-015-3306-6.

7. Okoniewska B, Santana MJ, Groshaus H, et al. Barriers to discharge in an acute care medical teaching unit: a qualitative analysis of health providers’ perceptions. J Multidiscip Healthc. 2015;8:83-89. doi:10.2147/JMDH.S72633.

8. Carey MR, Sheth H, Scott Braithwaite R. A prospective study of reasons for prolonged hospitalizations on a general medicine teaching service. J Gen Intern Med. 2005;20(2):108-115. doi:10.1111/j.1525-1497.2005.40269.x.

9. Kim CS, Hart AL, Paretti RF, et al. Excess hospitalization days in an academic medical center: perceptions of hospitalists and discharge planners. Am J Manag Care. 2011;17(2):e34-e42. http://www.ajmc.com/journals/issue/2011/2011-2-vol17-n2/AJMC_11feb_Kim_WebX_e34to42/. Accessed October 26, 2016.

10. Gershengorn HB, Kocher R, Factor P. Management strategies to effect change in intensive care units: lessons from the world of business. Part II. Quality-improvement strategies. Ann Am Thorac Soc. 2014;11(3):444-453. doi:10.1513/AnnalsATS.201311-392AS.

11. Wertheimer B, Jacobs REA, Bailey M, et al. Discharge before noon: an achievable hospital goal. J Hosp Med. 2014;9(4):210-214. doi:10.1002/jhm.2154.

12. Manning DM, Tammel KJ, Blegen RN, et al. In-room display of day and time patient is anticipated to leave hospital: a “discharge appointment.” J Hosp Med. 2007;2(1):13-16. doi:10.1002/jhm.146.

13. Networks for academic medical centers. https://www.vizientinc.com/Our-networks/Networks-for-academic-medical-centers. Accessed July 13, 2017.

14. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap): a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377-381. doi:10.1016/j.jbi.2008.08.010.

15. Selker HP, Beshansky JR, Pauker SG, Kassirer JP. The epidemiology of delays in a teaching hospital: the development and use of a tool that detects unnecessary hospital days. Med Care. 1989;27(2):112-129.

The discharge process is a critical bottleneck for efficient patient flow through the hospital. Delayed discharges translate into delays in admissions and other patient transitions, often leading to excess costs, patient dissatisfaction, and even patient harm.1-3 The emergency department is particularly impacted by these delays; bottlenecks there lead to overcrowding, increased overall hospital length of stay, and increased risks for bad outcomes during hospitalization.2

Academic medical centers in particular may struggle with delayed discharges. In a typical teaching hospital, a team composed of an attending physician and housestaff share responsibility for determining the discharge plan. Additionally, clinical teaching activities may affect the process and quality of discharge.4-6

The prevalence and causes of delayed discharges vary greatly.7-9 To improve efficiency around discharge, many hospitals have launched initiatives designed to discharge patients earlier in the day, including goal setting (“discharge by noon”), scheduling discharge appointments, and using quality-improvement methods, such as Lean Methodology (LEAN), to remove inefficiencies within discharge processes.10-12 However, there are few data on the prevalence and effectiveness of different strategies.

The aim of this study was to survey academic hospitalist and general internal medicine physician leaders to elicit their perspectives on the factors contributing to discharge timing and the relative importance and effectiveness of early-discharge initiatives.

METHODS

Study Design, Participants, and Oversight

We obtained a list of 115 university-affiliated hospitals associated with a residency program and, in most cases, a medical school from Vizient Inc. (formerly University HealthSystem Consortium), an alliance of academic medical centers and affiliated hospitals. Each member institution submits clinical data to allow for the benchmarking of outcomes to drive transparency and quality improvement.13 More than 95% of the nation’s academic medical centers and affiliated hospitals participate in this collaborative. Vizient works with members but does not set nor promote quality metrics, such as discharge timeliness. E-mail addresses for hospital medicine physician leaders (eg, division chief) of major academic medical centers were obtained from each institution via publicly available data (eg, the institution’s website). When an institution did not have a hospital medicine section, we identified the division chief of general internal medicine. The University of California, San Francisco Institutional Review Board approved this study.

Survey Development and Domains

We developed a 30-item survey to evaluate 5 main domains of interest: current discharge practices, degree of prioritization of early discharge on the inpatient service, barriers to timely discharge, prevalence and perceived effectiveness of implemented early-discharge initiatives, and barriers to implementation of early-discharge initiatives.

Respondents were first asked to identify their institutions’ goals for discharge time. They were then asked to compare the priority of early-discharge initiatives to other departmental quality-improvement initiatives, such as reducing 30-day readmissions, improving interpreter use, and improving patient satisfaction. Next, respondents were asked to estimate the degree to which clinical or patient factors contributed to delays in discharge. Respondents were then asked whether specific early-discharge initiatives, such as changes to rounding practices or communication interventions, were implemented at their institutions and, if so, the perceived effectiveness of these initiatives at meeting discharge targets. We piloted the questions locally with physicians and researchers prior to finalizing the survey.

Data Collection

We sent surveys via an online platform (Research Electronic Data Capture).14 Nonresponders were sent 2 e-mail reminders and then a follow-up telephone call asking them to complete the survey. Only 1 survey per academic medical center was collected. Any respondent who completed the survey within 2 weeks of receiving it was entered to win a Kindle Fire.

Data Analysis

We summarized survey responses using descriptive statistics. Analysis was completed in IBM SPSS version 22 (Armonk, NY).

RESULTS

Survey Respondent and Institutional Characteristics

Of the 115 institutions surveyed, we received 61 responses (response rate of 53%), with 39 (64%) respondents from divisions of hospital medicine and 22 (36%) from divisions of general internal medicine. A majority (n = 53; 87%) stated their medicine services have a combination of teaching (with residents) and nonteaching (without residents) teams. Thirty-nine (64%) reported having daily multidisciplinary rounds.

Early Discharge as a Priority

Forty-seven (77%) institutional representatives strongly agreed or agreed that early discharge was a priority, with discharge by noon being the most common target time (n = 23; 38%). Thirty (50%) respondents rated early discharge as more important than improving interpreter use for non-English-speaking patients and equally important as reducing 30-day readmissions (n = 29; 48%) and improving patient satisfaction (n = 27; 44%).

Factors Delaying Discharge

The most common factors perceived as delaying discharge were considered external to the hospital, such as postacute care bed availability or scheduled (eg, ambulance) transport delays (n = 48; 79%), followed by patient factors such as patient transport issues (n = 44; 72%). Less commonly reported were workflow issues, such as competing primary team priorities or case manager bandwidth (n = 38; 62%; Table 1).

Initiatives to Improve Discharge

The most commonly implemented initiatives perceived as effective at improving discharge times were the preemptive identification of early discharges to plan discharge paperwork (n = 34; 56%), communication with patients about anticipated discharge time on the day prior to discharge (n = 29; 48%), and the implementation of additional rounds between physician teams and case managers specifically around discharge planning (n = 28; 46%). Initiatives not commonly implemented included regular audit of and feedback on discharge times to providers and teams (n = 21; 34%), the use of a discharge readiness checklist (n = 26; 43%), incentives such as bonuses or penalties (n = 37; 61%), the use of a whiteboard to indicate discharge times (n = 23; 38%), and dedicated quality-improvement approaches such as LEAN (n = 37; 61%; Table 2).

DISCUSSION

Our study suggests early discharge for medicine patients is a priority among academic institutions. Hospitalist and general internal medicine physician leaders in our study generally attributed delayed discharges to external factors, particularly unavailability of postacute care facilities and transportation delays. Having issues with finding postacute care placements is consistent with previous findings by Selker et al.15 and Carey et al.8 This is despite the 20-year difference between Selker et al.’s study and the current study, reflecting a continued opportunity for improvement, including stronger partnerships with local and regional postacute care facilities to expedite care transition and stronger discharge-planning efforts early in the admission process. Efforts in postacute care placement may be particularly important for Medicaid-insured and uninsured patients.

Our responders, hospitalist and internal medicine physician leaders, did not perceive the additional responsibilities of teaching and supervising trainees to be factors that significantly delayed patient discharge. This is in contrast to previous studies, which attributed delays in discharge to prolonged clinical decision-making related to teaching and supervision.4-6,8 This discrepancy may be due to the fact that we only surveyed single physician leaders at each institution and not residents. Our finding warrants further investigation to understand the degree to which resident skills may impact discharge planning and processes.

Institutions represented in our study have attempted a variety of initiatives promoting earlier discharge, with varying levels of perceived success. Initiatives perceived to be the most effective by hospital leaders centered on 2 main areas: (1) changing individual provider practice and (2) anticipatory discharge preparation. Interestingly, this is in discordance with the main factors labeled as causing delays in discharges, such as obtaining postacute care beds, busy case managers, and competing demands on primary teams. We hypothesize this may be because such changes require organization- or system-level changes and are perceived as more arduous than changes at the individual level. In addition, changes to individual provider behavior may be more cost- and time-effective than more systemic initiatives.

Our findings are consistent with the work published by Wertheimer and colleagues,11 who show that additional afternoon interdisciplinary rounds can help identify patients who may be discharged before noon the next day. In their study, identifying such patients in advance improved the overall early-discharge rate the following day.

Our findings should be interpreted in light of several limitations. Our survey only considers the perspectives of hospitalist and general internal medicine physician leaders at academic medical centers that are part of the Vizient Inc. collaborative. They do not represent all academic or community-based medical centers. Although the perceived effectiveness of some initiatives was high, we did not collect empirical data to support these claims or to determine which initiative had the greatest relative impact on discharge timeliness. Lastly, we did not obtain resident, nursing, or case manager perspectives on discharge practices. Given their roles as frontline providers, we may have missed these alternative perspectives.

Our study shows there is a strong interest in increasing early discharges in an effort to improve hospital throughput and patient flow.

Acknowledgments

The authors thank all participants who completed the survey and Danielle Carrier at Vizient Inc. (formally University HealthSystem Consortium) for her assistance in obtaining data.

Disclosures

Hemali Patel, Margaret Fang, Michelle Mourad, Adrienne Green, Ryan Murphy, and James Harrison report no conflicts of interest. At the time the research was conducted, Robert Wachter reported that he is a member of the Lucian Leape Institute at the National Patient Safety Foundation (no compensation except travel expenses); recently chaired an advisory board to England’s National Health Service (NHS) reviewing the NHS’s digital health strategy (no compensation except travel expenses); has a contract with UCSF from the Agency for Healthcare Research and Quality to edit a patient-safety website; receives compensation from John Wiley & Sons for writing a blog; receives royalties from Lippincott Williams & Wilkins and McGraw-Hill Education for writing and/or editing several books; receives stock options for serving on the board of Acuity Medical Management Systems; receives a yearly stipend for serving on the board of The Doctors Company; serves on the scientific advisory boards for amino.com, PatientSafe Solutions Inc., Twine, and EarlySense (for which he receives stock options); has a small royalty stake in CareWeb, a hospital communication tool developed at UCSF; and holds the Marc and Lynne Benioff Endowed Chair in Hospital Medicine and the Holly Smith Distinguished Professorship in Science and Medicine at UCSF.

The discharge process is a critical bottleneck for efficient patient flow through the hospital. Delayed discharges translate into delays in admissions and other patient transitions, often leading to excess costs, patient dissatisfaction, and even patient harm.1-3 The emergency department is particularly impacted by these delays; bottlenecks there lead to overcrowding, increased overall hospital length of stay, and increased risks for bad outcomes during hospitalization.2

Academic medical centers in particular may struggle with delayed discharges. In a typical teaching hospital, a team composed of an attending physician and housestaff share responsibility for determining the discharge plan. Additionally, clinical teaching activities may affect the process and quality of discharge.4-6

The prevalence and causes of delayed discharges vary greatly.7-9 To improve efficiency around discharge, many hospitals have launched initiatives designed to discharge patients earlier in the day, including goal setting (“discharge by noon”), scheduling discharge appointments, and using quality-improvement methods, such as Lean Methodology (LEAN), to remove inefficiencies within discharge processes.10-12 However, there are few data on the prevalence and effectiveness of different strategies.

The aim of this study was to survey academic hospitalist and general internal medicine physician leaders to elicit their perspectives on the factors contributing to discharge timing and the relative importance and effectiveness of early-discharge initiatives.

METHODS

Study Design, Participants, and Oversight

We obtained a list of 115 university-affiliated hospitals associated with a residency program and, in most cases, a medical school from Vizient Inc. (formerly University HealthSystem Consortium), an alliance of academic medical centers and affiliated hospitals. Each member institution submits clinical data to allow for the benchmarking of outcomes to drive transparency and quality improvement.13 More than 95% of the nation’s academic medical centers and affiliated hospitals participate in this collaborative. Vizient works with members but does not set nor promote quality metrics, such as discharge timeliness. E-mail addresses for hospital medicine physician leaders (eg, division chief) of major academic medical centers were obtained from each institution via publicly available data (eg, the institution’s website). When an institution did not have a hospital medicine section, we identified the division chief of general internal medicine. The University of California, San Francisco Institutional Review Board approved this study.

Survey Development and Domains

We developed a 30-item survey to evaluate 5 main domains of interest: current discharge practices, degree of prioritization of early discharge on the inpatient service, barriers to timely discharge, prevalence and perceived effectiveness of implemented early-discharge initiatives, and barriers to implementation of early-discharge initiatives.

Respondents were first asked to identify their institutions’ goals for discharge time. They were then asked to compare the priority of early-discharge initiatives to other departmental quality-improvement initiatives, such as reducing 30-day readmissions, improving interpreter use, and improving patient satisfaction. Next, respondents were asked to estimate the degree to which clinical or patient factors contributed to delays in discharge. Respondents were then asked whether specific early-discharge initiatives, such as changes to rounding practices or communication interventions, were implemented at their institutions and, if so, the perceived effectiveness of these initiatives at meeting discharge targets. We piloted the questions locally with physicians and researchers prior to finalizing the survey.

Data Collection

We sent surveys via an online platform (Research Electronic Data Capture).14 Nonresponders were sent 2 e-mail reminders and then a follow-up telephone call asking them to complete the survey. Only 1 survey per academic medical center was collected. Any respondent who completed the survey within 2 weeks of receiving it was entered to win a Kindle Fire.

Data Analysis

We summarized survey responses using descriptive statistics. Analysis was completed in IBM SPSS version 22 (Armonk, NY).

RESULTS

Survey Respondent and Institutional Characteristics

Of the 115 institutions surveyed, we received 61 responses (response rate of 53%), with 39 (64%) respondents from divisions of hospital medicine and 22 (36%) from divisions of general internal medicine. A majority (n = 53; 87%) stated their medicine services have a combination of teaching (with residents) and nonteaching (without residents) teams. Thirty-nine (64%) reported having daily multidisciplinary rounds.

Early Discharge as a Priority

Forty-seven (77%) institutional representatives strongly agreed or agreed that early discharge was a priority, with discharge by noon being the most common target time (n = 23; 38%). Thirty (50%) respondents rated early discharge as more important than improving interpreter use for non-English-speaking patients and equally important as reducing 30-day readmissions (n = 29; 48%) and improving patient satisfaction (n = 27; 44%).

Factors Delaying Discharge

The most common factors perceived as delaying discharge were considered external to the hospital, such as postacute care bed availability or scheduled (eg, ambulance) transport delays (n = 48; 79%), followed by patient factors such as patient transport issues (n = 44; 72%). Less commonly reported were workflow issues, such as competing primary team priorities or case manager bandwidth (n = 38; 62%; Table 1).

Initiatives to Improve Discharge

The most commonly implemented initiatives perceived as effective at improving discharge times were the preemptive identification of early discharges to plan discharge paperwork (n = 34; 56%), communication with patients about anticipated discharge time on the day prior to discharge (n = 29; 48%), and the implementation of additional rounds between physician teams and case managers specifically around discharge planning (n = 28; 46%). Initiatives not commonly implemented included regular audit of and feedback on discharge times to providers and teams (n = 21; 34%), the use of a discharge readiness checklist (n = 26; 43%), incentives such as bonuses or penalties (n = 37; 61%), the use of a whiteboard to indicate discharge times (n = 23; 38%), and dedicated quality-improvement approaches such as LEAN (n = 37; 61%; Table 2).

DISCUSSION

Our study suggests that early discharge for medicine patients is a priority among academic institutions. Hospitalist and general internal medicine physician leaders in our study generally attributed delayed discharges to external factors, particularly the unavailability of postacute care facilities and transportation delays. Difficulty finding postacute care placements is consistent with previous findings by Selker et al.15 and Carey et al.8 That this problem persists nearly 3 decades after Selker et al.'s study reflects a continued opportunity for improvement, including stronger partnerships with local and regional postacute care facilities to expedite care transitions and stronger discharge-planning efforts early in the admission process. Efforts in postacute care placement may be particularly important for Medicaid-insured and uninsured patients.

Our respondents, hospitalist and internal medicine physician leaders, did not perceive the additional responsibilities of teaching and supervising trainees to be factors that significantly delayed patient discharge. This contrasts with previous studies, which attributed delays in discharge to prolonged clinical decision-making related to teaching and supervision.4-6,8 This discrepancy may reflect that we surveyed only a single physician leader at each institution and not residents. Our finding warrants further investigation into the degree to which resident skills may affect discharge planning and processes.

Institutions represented in our study have attempted a variety of initiatives to promote earlier discharge, with varying levels of perceived success. The initiatives that hospital leaders perceived as most effective centered on 2 main areas: (1) changing individual provider practice and (2) anticipatory discharge preparation. Interestingly, these initiatives do not align with the factors most often cited as causing discharge delays, such as obtaining postacute care beds, busy case managers, and competing demands on primary teams. We hypothesize that this may be because addressing those factors requires organization- or system-level changes, which are perceived as more arduous than changes at the individual level. In addition, changes to individual provider behavior may be more cost- and time-effective than more systemic initiatives.

Our findings are consistent with the work published by Wertheimer and colleagues,11 who showed that additional afternoon interdisciplinary rounds can help identify patients who may be discharged before noon the next day. In their study, identifying such patients in advance improved the overall early-discharge rate the following day.

Our findings should be interpreted in light of several limitations. Our survey considers only the perspectives of hospitalist and general internal medicine physician leaders at academic medical centers that are part of the Vizient Inc. collaborative; these institutions do not represent all academic or community-based medical centers. Although the perceived effectiveness of some initiatives was high, we did not collect empirical data to support these claims or to determine which initiative had the greatest relative impact on discharge timeliness. Lastly, we did not obtain resident, nursing, or case manager perspectives on discharge practices. Given these groups' roles as frontline providers, their perspectives may differ from those captured here.

Our study shows there is a strong interest in increasing early discharges in an effort to improve hospital throughput and patient flow.

Acknowledgments

The authors thank all participants who completed the survey and Danielle Carrier at Vizient Inc. (formerly University HealthSystem Consortium) for her assistance in obtaining data.

Disclosures

Hemali Patel, Margaret Fang, Michelle Mourad, Adrienne Green, Ryan Murphy, and James Harrison report no conflicts of interest. At the time the research was conducted, Robert Wachter reported that he is a member of the Lucian Leape Institute at the National Patient Safety Foundation (no compensation except travel expenses); recently chaired an advisory board to England’s National Health Service (NHS) reviewing the NHS’s digital health strategy (no compensation except travel expenses); has a contract with UCSF from the Agency for Healthcare Research and Quality to edit a patient-safety website; receives compensation from John Wiley & Sons for writing a blog; receives royalties from Lippincott Williams & Wilkins and McGraw-Hill Education for writing and/or editing several books; receives stock options for serving on the board of Acuity Medical Management Systems; receives a yearly stipend for serving on the board of The Doctors Company; serves on the scientific advisory boards for amino.com, PatientSafe Solutions Inc., Twine, and EarlySense (for which he receives stock options); has a small royalty stake in CareWeb, a hospital communication tool developed at UCSF; and holds the Marc and Lynne Benioff Endowed Chair in Hospital Medicine and the Holly Smith Distinguished Professorship in Science and Medicine at UCSF.

1. Khanna S, Boyle J, Good N, Lind J. Impact of admission and discharge peak times on hospital overcrowding. Stud Health Technol Inform. 2011;168:82-88.

2. White BA, Biddinger PD, Chang Y, Grabowski B, Carignan S, Brown DFM. Boarding Inpatients in the Emergency Department Increases Discharged Patient Length of Stay. J Emerg Med. 2013;44(1):230-235. doi:10.1016/j.jemermed.2012.05.007.

3. Derlet RW, Richards JR. Overcrowding in the nation’s emergency departments: complex causes and disturbing effects. Ann Emerg Med. 2000;35(1):63-68.

4. da Silva SA, Valácio RA, Botelho FC, Amaral CFS. Reasons for discharge delays in teaching hospitals. Rev Saúde Pública. 2014;48(2):314-321. doi:10.1590/S0034-8910.2014048004971.

5. Greysen SR, Schiliro D, Horwitz LI, Curry L, Bradley EH. “Out of Sight, Out of Mind”: Housestaff Perceptions of Quality-Limiting Factors in Discharge Care at Teaching Hospitals. J Hosp Med. 2012;7(5):376-381. doi:10.1002/jhm.1928.

6. Goldman J, Reeves S, Wu R, Silver I, MacMillan K, Kitto S. Medical Residents and Interprofessional Interactions in Discharge: An Ethnographic Exploration of Factors That Affect Negotiation. J Gen Intern Med. 2015;30(10):1454-1460. doi:10.1007/s11606-015-3306-6.

7. Okoniewska B, Santana MJ, Groshaus H, et al. Barriers to discharge in an acute care medical teaching unit: a qualitative analysis of health providers’ perceptions. J Multidiscip Healthc. 2015;8:83-89. doi:10.2147/JMDH.S72633.

8. Carey MR, Sheth H, Scott Braithwaite R. A Prospective Study of Reasons for Prolonged Hospitalizations on a General Medicine Teaching Service. J Gen Intern Med. 2005;20(2):108-115. doi:10.1111/j.1525-1497.2005.40269.x.

9. Kim CS, Hart AL, Paretti RF, et al. Excess Hospitalization Days in an Academic Medical Center: Perceptions of Hospitalists and Discharge Planners. Am J Manag Care. 2011;17(2):e34-e42. http://www.ajmc.com/journals/issue/2011/2011-2-vol17-n2/AJMC_11feb_Kim_WebX_e34to42/. Accessed on October 26, 2016.

10. Gershengorn HB, Kocher R, Factor P. Management Strategies to Effect Change in Intensive Care Units: Lessons from the World of Business. Part II. Quality-Improvement Strategies. Ann Am Thorac Soc. 2014;11(3):444-453. doi:10.1513/AnnalsATS.201311-392AS.

11. Wertheimer B, Jacobs REA, Bailey M, et al. Discharge before noon: An achievable hospital goal. J Hosp Med. 2014;9(4):210-214. doi:10.1002/jhm.2154.

12. Manning DM, Tammel KJ, Blegen RN, et al. In-room display of day and time patient is anticipated to leave hospital: a “discharge appointment.” J Hosp Med. 2007;2(1):13-16. doi:10.1002/jhm.146.

13. Networks for academic medical centers. https://www.vizientinc.com/Our-networks/Networks-for-academic-medical-centers. Accessed on July 13, 2017.

14. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research Electronic Data Capture (REDCap) - A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377-381. doi:10.1016/j.jbi.2008.08.010.

15. Selker HP, Beshansky JR, Pauker SG, Kassirer JP. The epidemiology of delays in a teaching hospital. The development and use of a tool that detects unnecessary hospital days. Med Care. 1989;27(2):112-129.

1. Khanna S, Boyle J, Good N, Lind J. Impact of admission and discharge peak times on hospital overcrowding. Stud Health Technol Inform. 2011;168:82-88. PubMed

2. White BA, Biddinger PD, Chang Y, Grabowski B, Carignan S, Brown DFM. Boarding Inpatients in the Emergency Department Increases Discharged Patient Length of Stay. J Emerg Med. 2013;44(1):230-235. doi:10.1016/j.jemermed.2012.05.007. PubMed

3. Derlet RW, Richards JR. Overcrowding in the nation’s emergency departments: complex causes and disturbing effects. Ann Emerg Med. 2000;35(1):63-68. PubMed

4. da Silva SA, Valácio RA, Botelho FC, Amaral CFS. Reasons for discharge delays in teaching hospitals. Rev Saúde Pública. 2014;48(2):314-321. doi:10.1590/S0034-8910.2014048004971. PubMed

5. Greysen SR, Schiliro D, Horwitz LI, Curry L, Bradley EH. “Out of Sight, Out of Mind”: Housestaff Perceptions of Quality-Limiting Factors in Discharge Care at Teaching Hospitals. J Hosp Med Off Publ Soc Hosp Med. 2012;7(5):376-381. doi:10.1002/jhm.1928. PubMed

6. Goldman J, Reeves S, Wu R, Silver I, MacMillan K, Kitto S. Medical Residents and Interprofessional Interactions in Discharge: An Ethnographic Exploration of Factors That Affect Negotiation. J Gen Intern Med. 2015;30(10):1454-1460. doi:10.1007/s11606-015-3306-6. PubMed

7. Okoniewska B, Santana MJ, Groshaus H, et al. Barriers to discharge in an acute care medical teaching unit: a qualitative analysis of health providers’ perceptions. J Multidiscip Healthc. 2015;8:83-89. doi:10.2147/JMDH.S72633. PubMed

8. Carey MR, Sheth H, Scott Braithwaite R. A Prospective Study of Reasons for Prolonged Hospitalizations on a General Medicine Teaching Service. J Gen Intern Med. 2005;20(2):108-115. doi:10.1111/j.1525-1497.2005.40269.x. PubMed

9. Kim CS, Hart AL, Paretti RF, et al. Excess Hospitalization Days in an Academic Medical Center: Perceptions of Hospitalists and Discharge Planners. Am J Manag Care. 2011;17(2):e34-e42. http://www.ajmc.com/journals/issue/2011/2011-2-vol17-n2/AJMC_11feb_Kim_WebX_e34to42/. Accessed on October 26, 2016.

10. Gershengorn HB, Kocher R, Factor P. Management Strategies to Effect Change in Intensive Care Units: Lessons from the World of Business. Part II. Quality-Improvement Strategies. Ann Am Thorac Soc. 2014;11(3):444-453. doi:10.1513/AnnalsATS.201311-392AS. PubMed

11. Wertheimer B, Jacobs REA, Bailey M, et al. Discharge before noon: An achievable hospital goal. J Hosp Med. 2014;9(4):210-214. doi:10.1002/jhm.2154. PubMed

12. Manning DM, Tammel KJ, Blegen RN, et al. In-room display of day and time patient is anticipated to leave hospital: a “discharge appointment.” J Hosp Med. 2007;2(1):13-16. doi:10.1002/jhm.146. PubMed

13. Networks for academic medical centers. https://www.vizientinc.com/Our-networks/Networks-for-academic-medical-centers. Accessed on July 13, 2017.

14. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research Electronic Data Capture (REDCap) - A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377-381. doi:10.1016/j.jbi.2008.08.010. PubMed

15. Selker HP, Beshansky JR, Pauker SG, Kassirer JP. The epidemiology of delays in a teaching hospital. The development and use of a tool that detects unnecessary hospital days. Med Care. 1989;27(2):112-129. PubMed

© 2017 Society of Hospital Medicine

Hospital Medicine in 2015

This year, we celebrate the 10th anniversary of this esteemed publication, and it is indeed an occasion for celebration. For those of us who were there at the creation of the hospitalist field, the establishment of a vibrant academic journal was a dream, one whose fulfillment was central to the legitimization of our field as a full‐fledged specialty. After a decade and 83 issues, the Journal of Hospital Medicine is a formidable source of information, cohesion, and pride.

The anniversary comes at a particularly interesting time for hospitals and hospitalists. Our field's lifeblood has been in trailblazing and continuous reinvention. We were the first physician specialty that embraced the mantra of systems thinking, as captured in our famous metaphor that we care for two sick patients: the person and the system. We were the first field that proudly, and without a hint of shame, allied ourselves with hospital leaders, believing that we were mutually dependent on one another, and that our ability to make change happen and stick was better if we were working with our institutions' leaders. In creating our professional society (and this journal), we took unusual pains to be inclusive: of academic and community‐based hospitalists, of hospitalists entering the field from a variety of backgrounds, of hospitalists caring for adults and kids, and of nonphysician providers.

Our efforts have paid off. Leaders as prominent as Don Berwick have observed that hospitalists have become the essential army of improvers in hospitals and healthcare systems. Hospitalists have made immense contributions at their own institutions, and are increasingly assuming leadership roles both locally and nationally. It is not a coincidence that Medicare's top physician (Patrick Conway) and the Surgeon General (Vivek Murthy) are both hospitalists. Although there have been a few bumps along the way, hospitalists are generally satisfied with their careers, respected by their colleagues, accepted by their patients, and pleased to be members of the fastest‐growing specialty in the history of modern medicine.

All of this should leave us all feeling warm, proud and more than a little nervous. We are now a mature medical specialty, no longer upstarts, and the natural inclination, in a changing world, will be to hunker down and protect what we have. Of course, some of that is reasonable and appropriate (for example, to fight for our fair share of a bundled payment pie),[1] but some of it will be wrong, even self‐defeating. The world of healthcare is changing fast, and our ability to stay relevant and indispensable will depend on our ability to evolve to meet new conditions and needs.

Let us consider some of the major trends playing out in healthcare. The biggest is the brisk and unmistakable shift from volume to value.[2] This is a trend we have been on top of, because this really has been our field's raison d'être: improving value in the hospital by cutting costs and length of stay while improving (or at least keeping neutral) quality and safety.[3] However, a world under intense value pressure will work hard to move patients from hospital to less expensive postacute settings, and will insist on seamless handoffs between the hospital and such settings. Thoughtful hospital medicine groups are thinking hard about this trend, and many are placing colleagues in skilled nursing facilities, or at the very least tightening their connections to the postacute facilities in their healthcare ecosystem. We no longer have the luxury of confining our talents and energies to those things that take place within the 4 walls of the hospital.

Another trend is the digitization of healthcare, a trend turbocharged by $30 billion in federal incentive payments distributed between 2009 and 2014.[4] Here too, hospitalists have emerged as leaders in information technology (IT) implementations, and a disproportionate number of chief medical information officers and other IT leaders seem to be hospitalists. Splendid. But it is also up to us to help figure out how to use IT tools effectively. The notes have morphed into bloated, copy‐and‐paste‐ridden monstrosities: let us figure out what a good note should look like in the digital era, and then implement educational and system changes to create a new standard. We no longer go to radiology, because we no longer need to in order to see our films; let us think about what the loss of the collegial exchange with our radiology colleagues has cost, and then set out to develop new systems to reimagine it. Right now, big data are mostly hype and unrequited promise. Who better than hospitalists to dive in and start making sense of the data to predict risks or help point to better treatments?

Another trend is population health. Although I do not foresee a return to the Marcus Welby model of a kindly physician following the patient everywhere, I can imagine certain patients (mostly those with several social and clinical comorbidities and at least 3 admissions per year) who might be well served by a back‐to‐the‐future system in which a primary care provider follows them into the hospital, perhaps comanaging the patients with the on‐service hospitalist. David Meltzer, at the University of Chicago, is currently studying such a model, and I look forward to seeing his results.[5] Rather than rejecting such experiments as violating the usual hospitalist structure, we must embrace them, at least until the evidence is in.

In the end, the field of hospital medicine emerged and thrived because of the promise, and later the evidence, that our presence led to better quality, safety, patient experience, education, and efficiency. This mandate must remain our mantra, even if it means that we have to evolve our model in keeping with a changing healthcare landscape. The minute we stop evolving is the minute our field starts planting the seeds of its own destruction.

Disclosure

Dr. Wachter reports that he is a member of the board of directors of IPC Healthcare.

- . Bundled payment. Hospitals see the advantages but face big challenges, too. Hospitals 367:292–295.

- , . The emerging role of “hospitalists” in the American health care system. N Engl J Med. 1996;335:514–517.

- . The Digital Doctor: Hope, Hype, and Harm at the Dawn of Medicine's Computer Age. New York, NY: McGraw‐Hill; 2015.

- . Comprehensive care physicians: an emerging specialty for chronic care. Fierce Healthcare website. Available at: http://www.fiercehealthcare.com/story/comprehensivists‐close‐chronic‐care‐communication‐gaps/2011‐05‐02. Published May 2, 2011. Last accessed May 29, 2015.

This year, we celebrate the 10th anniversary of this esteemed publication, and it is indeed an occasion for celebration. For those of us who were there at the creation of the hospitalist field, the establishment of a vibrant academic journal was a dream, one whose fulfillment was central to the legitimization of our field as a full‐fledged specialty. After a decade and 83 issues, the Journal of Hospital Medicine is a formidable source of information, cohesion, and pride.

The anniversary comes at a particularly interesting time for hospitals and hospitalists. Our field's lifeblood has been in trailblazing and continuous reinvention. We were the first physician specialty that embraced the mantra of systems thinking, as captured in our famous metaphor that we care for two sick patients: the person and the system. We were the first field that proudly, and without a hint of shame, allied ourselves with hospital leaders, believing that we were mutually dependent on one another, and that our ability to make change happen and stick was better if we were working with our institutions' leaders. In creating our professional society (and this journal), we took unusual pains to be inclusiveof academic and community‐based hospitalists, or hospitalists entering the field from a variety of backgrounds, of hospitalists caring for adults and kids, and of nonphysician providers.

Our efforts have paid off. Leaders as prominent as Don Berwick have observed that hospitalists have become the essential army of improvers in hospitals and healthcare systems. Hospitalists have made immense contributions at their own institutions, and are increasingly assuming leadership roles both locally and nationally. It is not a coincidence that Medicare's top physician (Patrick Conway) and the Surgeon General (Vivek Murthy) are both hospitalists. Although there have been a few bumps along the way, hospitalists are generally satisfied with their careers, respected by their colleagues, accepted by their patients, and pleased to be members of the fastest growing specialty in the history of modern medicine.

All of this should leave us all feeling warm, proud and more than a little nervous. We are now a mature medical specialty, no longer upstarts, and the natural inclination, in a changing world, will be to hunker down and protect what we have. Of course, some of that is reasonable and appropriate (for example, to fight for our fair share of a bundled payment pie),[1] but some of it will be wrong, even self‐defeating. The world of healthcare is changing fast, and our ability to stay relevant and indispensable will depend on our ability to evolve to meet new conditions and needs.

Let us consider some of the major trends playing out in healthcare. The biggest is the brisk and unmistakable shift from volume to value.[2] This is a trend we have been on top of, because this really has been our field's raison d'tre: improving value in the hospital by cutting costs and length of stay while improving (or at least keeping neutral) quality and safety.[3] However, a world under intense value pressure will work hard to move patients from hospital to less expensive postacute settings, and will insist on seamless handoffs between the hospital and such settings. Thoughtful hospital medicine groups are thinking hard about this trend, and many are placing colleagues in skilled nursing facilities, or at the very least tightening their connections to the postacute facilities in their healthcare ecosystem. We no longer have the luxury of confining our talents and energies to those things that take place within the 4 walls of the hospital.

Another trend is the digitization of healthcare, a trend turbocharged by $30 billion in federal incentive payments distributed between 2009 and 2014.[4] Here too, hospitalists have emerged as leaders in information technology (IT) implementations, and a disproportionate number of chief medical information officers and other IT leaders seem to be hospitalists. Splendid. But it is also up to us to help figure out how to use IT tools effectively. The notes have morphed into bloated, copy‐and‐pasteridden monstrosities: let us figure out what a good note should look like in the digital era, and then implement educational and system changes to create a new standard. We no longer go to radiology because we do not need to to see our films; let us think about what the loss of the collegial exchange with our radiology colleagues has cost, and then set out to develop new systems to reimagine it. Right now, big data are mostly hype and unrequited promise. Who better than hospitalists to dive in and start making sense of the data to predict risks or help point to better treatments?

Another trend is population health. Although I do not foresee a return to the Marcus Welby model of a kindly physician following the patient everywhere, I can imagine certain patients (mostly those with several social and clinical comorbidities and at least 3 admissions per year) who might be well served by a back‐to‐the‐future system in which a primary care provider follows them into the hospital, perhaps comanaging the patients with the on‐service hospitalist. David Meltzer, at the University of Chicago, is currently studying such a model, and I look forward to seeing his results.[5] Rather than rejecting such experiments as violating the usual hospitalist structure, we must embrace them, at least until the evidence is in.

In the end, the field of hospital medicine emerged and thrived because of the promise, and later the evidence, that our presence led to better quality, safety, patient experience, education, and efficiency. This mandate must remain our mantra, even if it means that we have to evolve our model in keeping with a changing healthcare landscape. The minute we stop evolving is the minute our field starts planting the seeds of its own destruction.

Disclosure

Dr. Wachter reports that he is a member of the board of directors of IPC Healthcare.

This year, we celebrate the 10th anniversary of this esteemed publication, and it is indeed an occasion for celebration. For those of us who were there at the creation of the hospitalist field, the establishment of a vibrant academic journal was a dream, one whose fulfillment was central to the legitimization of our field as a full‐fledged specialty. After a decade and 83 issues, the Journal of Hospital Medicine is a formidable source of information, cohesion, and pride.

The anniversary comes at a particularly interesting time for hospitals and hospitalists. Our field's lifeblood has been in trailblazing and continuous reinvention. We were the first physician specialty that embraced the mantra of systems thinking, as captured in our famous metaphor that we care for two sick patients: the person and the system. We were the first field that proudly, and without a hint of shame, allied ourselves with hospital leaders, believing that we were mutually dependent on one another, and that our ability to make change happen and stick was better if we were working with our institutions' leaders. In creating our professional society (and this journal), we took unusual pains to be inclusiveof academic and community‐based hospitalists, or hospitalists entering the field from a variety of backgrounds, of hospitalists caring for adults and kids, and of nonphysician providers.

Our efforts have paid off. Leaders as prominent as Don Berwick have observed that hospitalists have become the essential army of improvers in hospitals and healthcare systems. Hospitalists have made immense contributions at their own institutions, and are increasingly assuming leadership roles both locally and nationally. It is not a coincidence that Medicare's top physician (Patrick Conway) and the Surgeon General (Vivek Murthy) are both hospitalists. Although there have been a few bumps along the way, hospitalists are generally satisfied with their careers, respected by their colleagues, accepted by their patients, and pleased to be members of the fastest growing specialty in the history of modern medicine.

All of this should leave us all feeling warm, proud and more than a little nervous. We are now a mature medical specialty, no longer upstarts, and the natural inclination, in a changing world, will be to hunker down and protect what we have. Of course, some of that is reasonable and appropriate (for example, to fight for our fair share of a bundled payment pie),[1] but some of it will be wrong, even self‐defeating. The world of healthcare is changing fast, and our ability to stay relevant and indispensable will depend on our ability to evolve to meet new conditions and needs.

Let us consider some of the major trends playing out in healthcare. The biggest is the brisk and unmistakable shift from volume to value.[2] This is a trend we have been on top of, because this really has been our field's raison d'tre: improving value in the hospital by cutting costs and length of stay while improving (or at least keeping neutral) quality and safety.[3] However, a world under intense value pressure will work hard to move patients from hospital to less expensive postacute settings, and will insist on seamless handoffs between the hospital and such settings. Thoughtful hospital medicine groups are thinking hard about this trend, and many are placing colleagues in skilled nursing facilities, or at the very least tightening their connections to the postacute facilities in their healthcare ecosystem. We no longer have the luxury of confining our talents and energies to those things that take place within the 4 walls of the hospital.

Another trend is the digitization of healthcare, a trend turbocharged by $30 billion in federal incentive payments distributed between 2009 and 2014.[4] Here too, hospitalists have emerged as leaders in information technology (IT) implementations, and a disproportionate number of chief medical information officers and other IT leaders seem to be hospitalists. Splendid. But it is also up to us to help figure out how to use IT tools effectively. The notes have morphed into bloated, copy‐and‐pasteridden monstrosities: let us figure out what a good note should look like in the digital era, and then implement educational and system changes to create a new standard. We no longer go to radiology because we do not need to to see our films; let us think about what the loss of the collegial exchange with our radiology colleagues has cost, and then set out to develop new systems to reimagine it. Right now, big data are mostly hype and unrequited promise. Who better than hospitalists to dive in and start making sense of the data to predict risks or help point to better treatments?

Another trend is population health. Although I do not foresee a return to the Marcus Welby model of a kindly physician following the patient everywhere, I can imagine certain patients (mostly those with several social and clinical comorbidities and at least 3 admissions per year) who might be well served by a back‐to‐the‐future system in which a primary care provider follows them into the hospital, perhaps comanaging the patients with the on‐service hospitalist. David Meltzer, at the University of Chicago, is currently studying such a model, and I look forward to seeing his results.[5] Rather than rejecting such experiments as violating the usual hospitalist structure, we must embrace them, at least until the evidence is in.

In the end, the field of hospital medicine emerged and thrived because of the promise, and later the evidence, that our presence led to better quality, safety, patient experience, education, and efficiency. This mandate must remain our mantra, even if it means that we have to evolve our model in keeping with a changing healthcare landscape. The minute we stop evolving is the minute our field starts planting the seeds of its own destruction.

Disclosure

Dr. Wachter reports that he is a member of the board of directors of IPC Healthcare.

- Bundled payment. Hospitals see the advantages but face big challenges, too. Hospitals 367:292–295.

- The emerging role of “hospitalists” in the American health care system. N Engl J Med. 1996;335:514–517.

- The Digital Doctor: Hope, Hype, and Harm at the Dawn of Medicine's Computer Age. New York, NY: McGraw‐Hill; 2015.

- Comprehensive care physicians: an emerging specialty for chronic care. Fierce Healthcare website. Available at: http://www.fiercehealthcare.com/story/comprehensivists‐close‐chronic‐care‐communication‐gaps/2011‐05‐02. Published May 2, 2011. Last accessed May 29, 2015.


Hospital High‐Value Care Program

With a United States medical system that spends as much as $750 billion each year on care that does not result in improved health outcomes,[1] many policy initiatives, including the Centers for Medicare and Medicaid Services' Value‐Based Purchasing program, seek to realign hospitals' financial incentives from a focus on production to one on value (quality divided by cost).[2, 3] Professional organizations have now deemed resource stewardship an ethical responsibility for professionalism,[4, 5] and campaigns such as the American Board of Internal Medicine (ABIM) Foundation's Choosing Wisely effort and the American College of Physicians' High‐Value Care platform are calling on frontline clinicians to address unnecessary and wasteful services.[6, 7]
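The definition of value used above can be written as a simple ratio. This is an illustration of the definition, not an operational metric: an intervention raises value by improving quality, by lowering cost, or by doing both.

$$\text{value} = \frac{\text{quality}}{\text{cost}}$$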

Despite these pressures and initiatives, most physicians lack the knowledge and tools necessary to prioritize the delivery of their own healthcare services according to value.[8, 9, 10] Hospital medicine physicians are unaware of the costs associated with the interventions they order,[10] and the majority of medical training programs lack curricula focused on healthcare costs,[11] creating a large gap between physicians' perceived, desired, and actual knowledge related to costs.[12] Novel frameworks and frontline physician engagement are required if clinicians are to improve the value of the care they deliver.

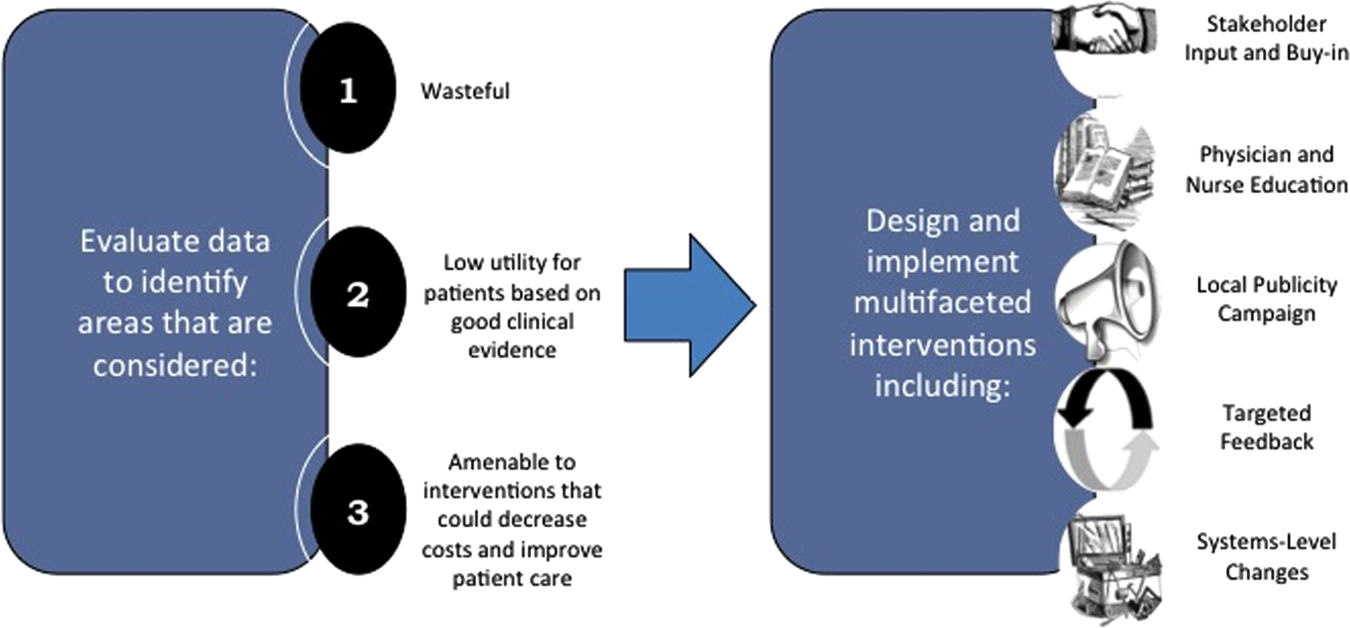

We describe 1 of our first steps at the University of California, San Francisco (UCSF) to promote high‐value care (HVC) delivery: the creation of an HVC program led by clinicians and administrators focused on identifying and addressing wasteful practices within our hospitalist group. The program aims to (1) use financial and clinical data to identify areas with clear evidence of waste in the hospital, (2) promote evidence‐based interventions that improve both quality of care and value, and (3) pair interventions with evidence‐based cost awareness education to drive culture change. Our experience and inaugural projects provide a model of the key features, inherent challenges, and lessons learned, which may help inform similar efforts.

METHODS

In March 2012, we launched an HVC program within our Division of Hospital Medicine at UCSF Medical Center, a 600‐bed academic medical center in an urban setting. During the 2013 academic year, our division included 45 physicians. The medicine service, composed of 8 teaching medical ward teams (1 attending, 1 resident, 2 interns, and a variable number of medical students) and 1 nonteaching medical ward team (1 attending), admitted 4700 patients that year.

Organizational Framework

The HVC program is co‐led by a UCSF hospitalist (C.M.) and the administrator of the Division of Hospital Medicine (M.N.). Team members include hospitalists, hospital medicine fellows, resident physicians, pharmacists, project coordinators, and other administrators. The team meets in person for 1 hour every month. Project teams and ad hoc subcommittee groups often convene between meetings.

Our HVC program was placed within the infrastructure, and under the leadership, of our already established quality improvement (QI) program at UCSF. Our Division of Hospital Medicine Director of Quality and Safety (M.M.) thus oversees the QI, patient safety, patient experience, and high‐value care efforts.

Funding for the HVC program goes largely to personnel costs. The physician leader (15% effort) is funded by the Division of Hospital Medicine, whereas the administrator is cofunded by the division and the medical center (largely through her roles as both division administrator and service line director). An administrative assistant within the division is also assigned to help with administrative tasks. Additional data gathering and project support come from the existing medical center QI infrastructure, the decision support services unit, and UCSF's new Center for Healthcare Value. Other ancillary costs for our projects have included publicity, data analytics, and information technology infrastructure. We estimate that the program costs approximately $50,000 to $75,000 annually.

Framework for Identifying Target Projects

Robust Analysis of Costs

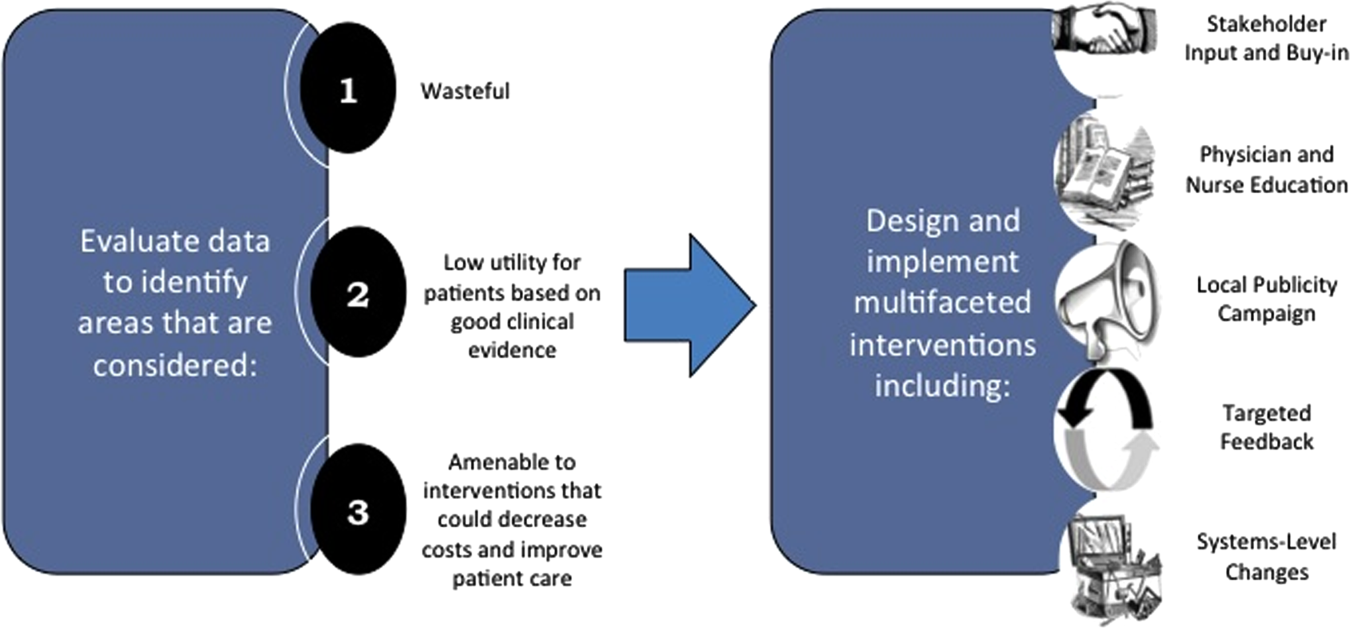

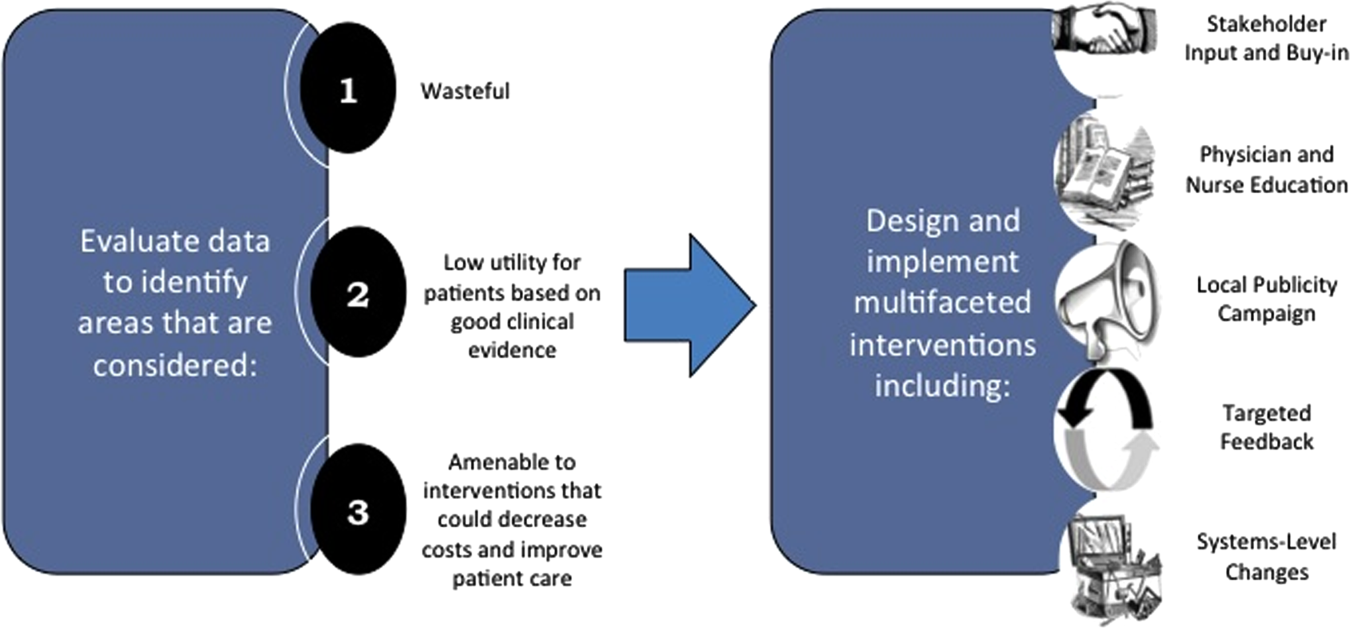

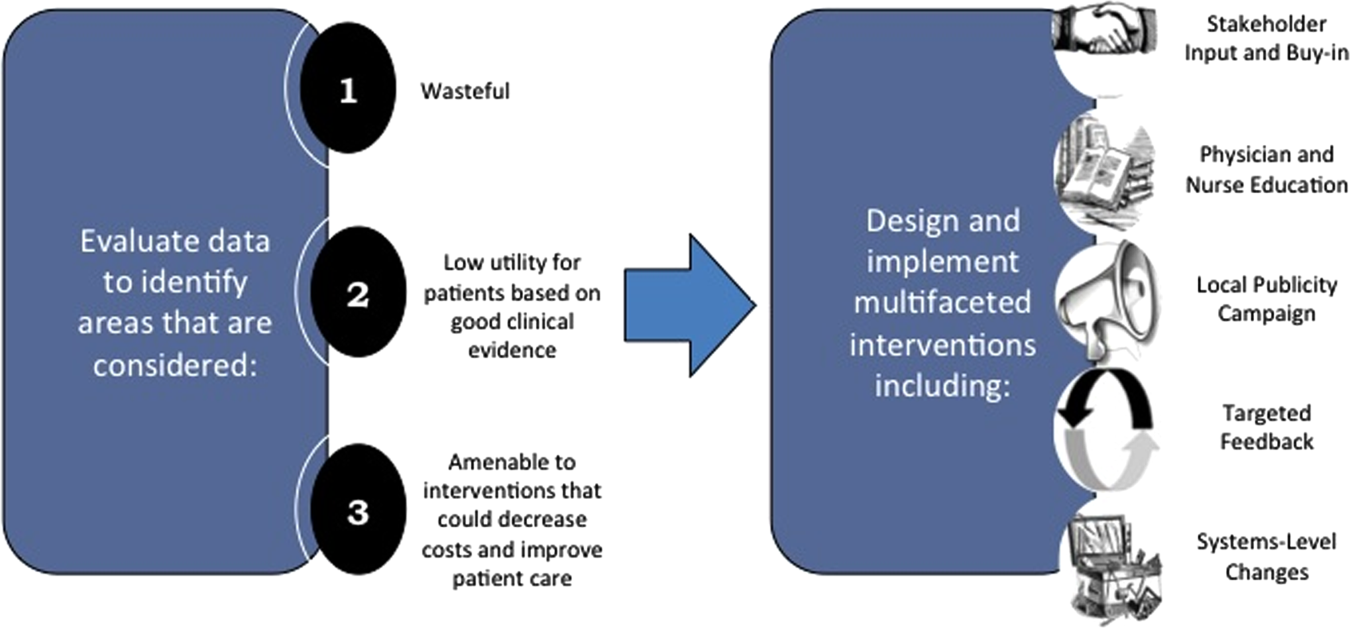

We created a framework for identifying, designing, and promoting projects specifically aimed at improving healthcare value (Figure 1). Financial data were used to identify areas with clear evidence of waste in the hospital, that is, areas of high cost with no corresponding benefit in health outcomes. We focused particularly on obtaining cost and billing data for our medical service, which provided important insight into potential targets for improving value. For example, in 2011, the Division of Hospital Medicine spent more than $1 million in direct costs on the administration of nebulized bronchodilator therapies (nebs) to nonintensive care unit patients on the medical service.[13] These high costs, exposed by billing data, were believed to reflect potentially unnecessary testing and/or procedures. Not every area of high cost was deemed a target for intervention. For example, the use of recombinant factor VIII appeared to be a necessary expenditure (over $1 million per year) for our patients with hemophilia. Although our efforts focused on reducing waste, it is worth noting that healthcare value can also be increased by improving the delivery of high‐value services.
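Combining the direct-cost figure above with the baseline volume listed in Table 1 (approximately 25,000 nebs for non‐ICU inpatients) gives a rough average direct cost per treatment. This is back-of-the-envelope arithmetic on the reported round numbers, not a figure reported by the program:

$$\frac{\$1{,}000{,}000}{25{,}000\ \text{nebs}} = \$40\ \text{per neb}$$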

Recognized Benefits in Quality of Care

The program also evaluated the impact of cost reduction efforts on the quality of care, based on a high standard of current evidence. Though value can be improved by interventions that decrease costs while being quality neutral, our group chose to focus first on projects that would simultaneously improve quality and decrease costs. We felt that this win‐win strategy would help obtain buy‐in from clinicians weary of prior cost‐cutting programs. For example, we pursued interventions aimed at reducing inappropriate gastric stress ulcer prophylaxis, which had the potential both to cut costs and to minimize risks of hospital‐acquired pneumonia and Clostridium difficile infections.[14, 15] All proposed HVC targets were vetted through a review of the literature and published guidelines. In general, our initial projects had to be strongly supported by evidence, with high‐quality studies, preferably meta‐analyses or systematic reviews, that demonstrated the safety of our recommended changes. We reviewed the literature with experts. For example, we met with faculty pulmonologists to discuss the evidence supporting the use of inhalers instead of nebulizers in adults with obstructive pulmonary disease. The goals of our projects were chosen by our HVC committee, based on an analysis of our baseline data and the perceived potential effects of our proposed interventions.

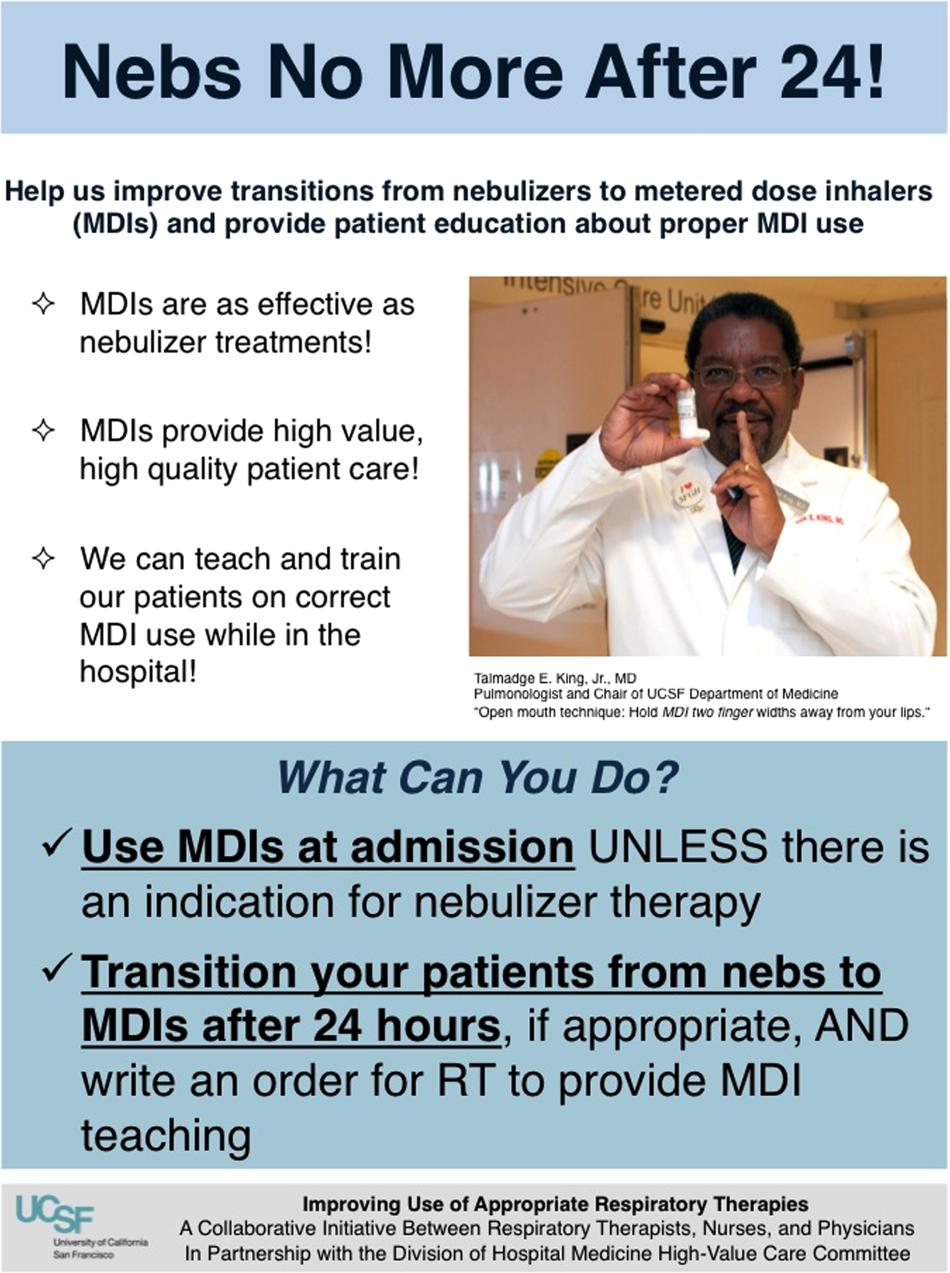

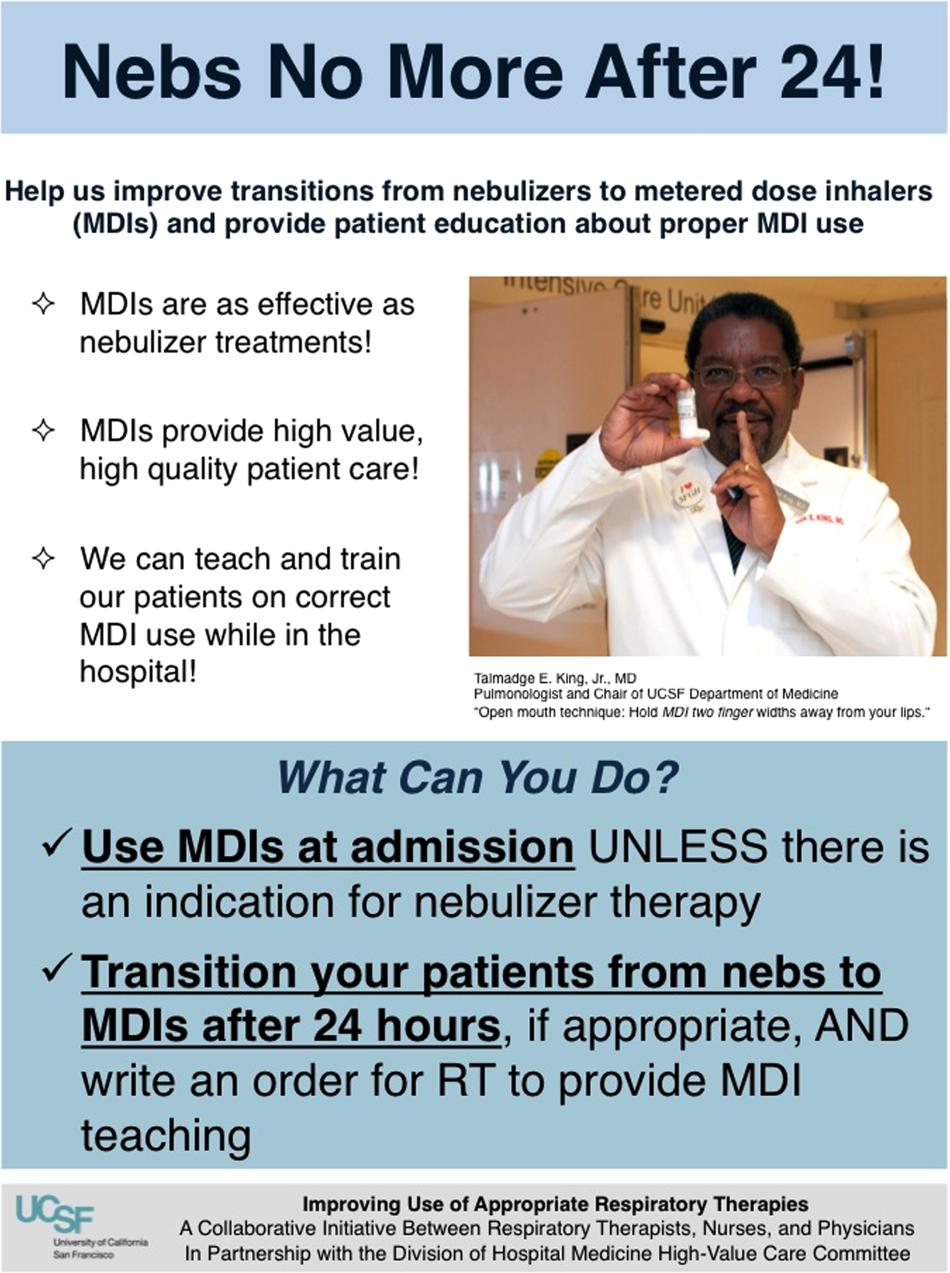

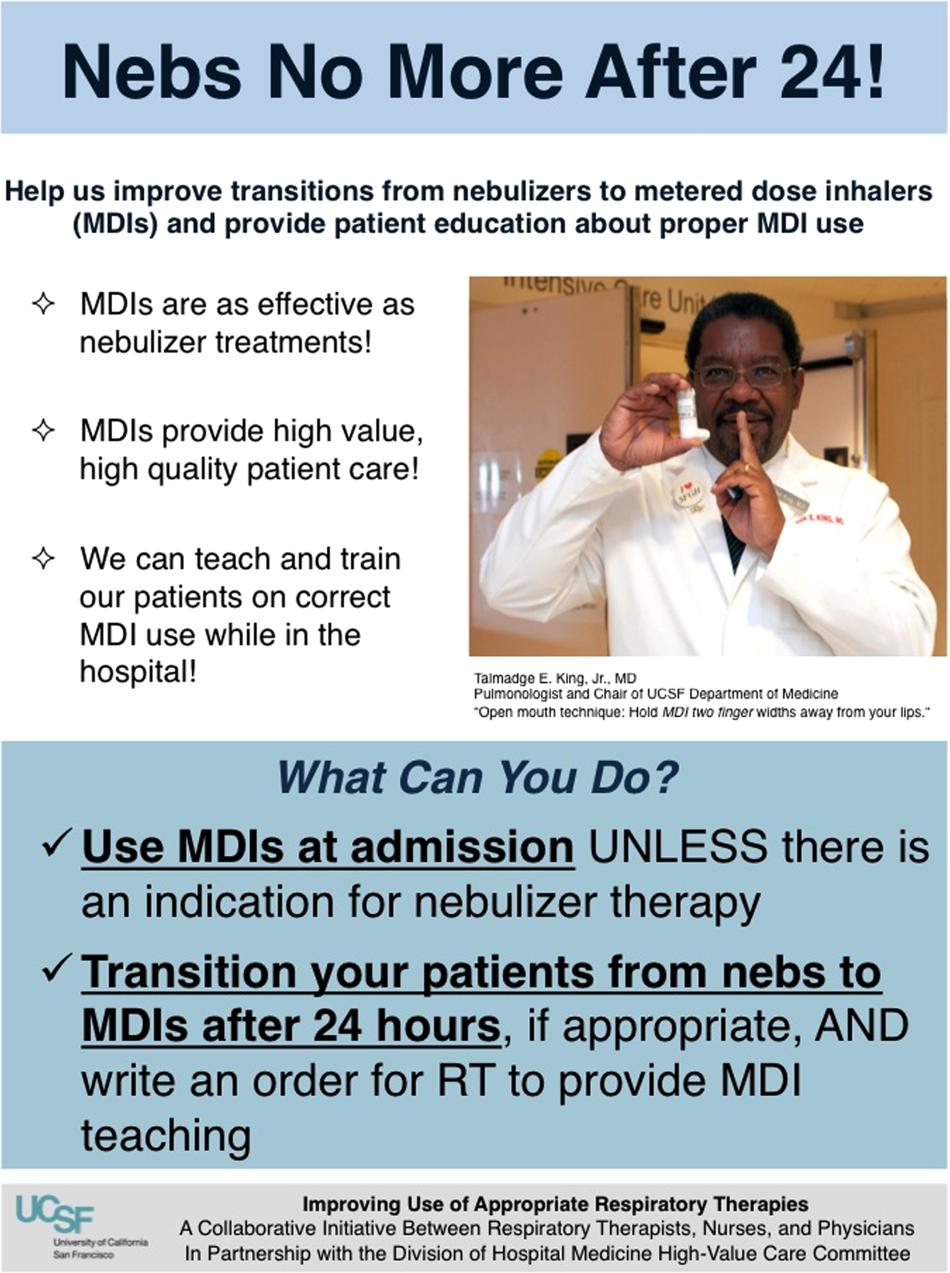

Educational Intervention

Last, we paired interventions with evidence‐based cost awareness education to drive culture change. At UCSF we have an ongoing longitudinal cost‐awareness curriculum for residents, which has been described previously.[16] We took advantage of this educational forum to address gaps in clinician knowledge related to the targeted areas. When launching the initiative to decrease unnecessary inpatient nebulizer use and improve transitions to inhalers, we used the chronic obstructive pulmonary disease case in the cost‐awareness series. Doing so allowed us both to review the evidence behind the effectiveness of inhalers and to introduce our Nebs No More After 24 campaign, which sought to transition adult inpatients with obstructive pulmonary symptoms from nebs to inhalers within 24 hours of admission.[13]

Intervention Strategy

Our general approach has been to design and implement multifaceted interventions, adapted from previous QI literature (Figure 1).[17] Given the importance of frontline clinician engagement to successful project implementation,[18, 19, 20] our interventions are physician‐driven and are vetted by a large group of clinicians prior to launch. The HVC program also explicitly seeks stakeholder input, perspective, and buy‐in prior to implementation. For example, we involved respiratory therapists (RTs) in the design of the Nebs No More After 24 project, thus ensuring that the interventions fit within their workflow and align with their care‐delivery goals.

Local publicity campaigns provide education and reminders for clinicians. Posters, such as the Nebs No More After 24 poster (Figure 2), were hung in physician, nursing, and RT work areas. Pens featuring the catchphrase Nebs No More After 24 were distributed to clinicians.

In addition to presentations to residents through the UCSF cost awareness curriculum, educational presentations were also delivered to attending physicians and to other allied members of the healthcare team (eg, nurses, RTs) during regularly scheduled staff meetings.

The metrics for each of the projects were regularly monitored, and targeted feedback was provided to clinicians. For the Nebs No More After 24 campaign, data for the number of nebs delivered on the target floor were provided to resident physicians during the cost awareness conference each month, and the data were presented to attending hospitalists in the monthly QI newsletter. This academic year, transfusion and telemetry data are presented via the same strategy.

Stakeholder recruitment, education, and promotional campaigns are important to program launches, but to sustain projects over the long term, system changes may be necessary. We have pursued changes in the computerized provider order entry (CPOE) system, such as removing nebs from the admission order set or setting a default duration for certain telemetry orders. Systems‐level interventions, although more difficult to achieve, play an important role in creating enduring changes when paired with educational interventions.
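The CPOE changes described here, together with two of the next steps listed in Table 1, can be sketched as simple order rules. One next step is an indication-based telemetry order that stays active for 24, 48, or 72 hours, or remains ongoing. The other is decision support for transfusion orders when the most recent hemoglobin exceeds 8 g/dL. This is a minimal Python sketch under stated assumptions, not UCSF's CPOE logic. The indication names and their durations are hypothetical placeholders, and `telemetry_expiry`, `needs_renewal`, and `transfusion_alert` are illustrative names, not part of any real order-entry system.

```python
from datetime import datetime, timedelta
from typing import Optional

# HYPOTHETICAL mapping from an approved telemetry indication to the number of
# hours the order stays active; None means "ongoing" (no automatic lapse).
# Placeholder names only, not an actual approved-indication list.
TELEMETRY_DURATION_HOURS: dict[str, Optional[int]] = {
    "placeholder_24h": 24,
    "placeholder_48h": 48,
    "placeholder_72h": 72,
    "placeholder_ongoing": None,
}

# Hemoglobin threshold (g/dL) taken from the blood utilization project.
HGB_TRANSFUSION_THRESHOLD = 8.0


def telemetry_expiry(indication: str, ordered_at: datetime) -> Optional[datetime]:
    """Return when a telemetry order lapses and must be renewed.

    Raises ValueError for an indication that is not on the approved list,
    modeling the requirement that the ordering user pick an approved one.
    Returns None for an ongoing indication.
    """
    if indication not in TELEMETRY_DURATION_HOURS:
        raise ValueError(f"not an approved telemetry indication: {indication}")
    hours = TELEMETRY_DURATION_HOURS[indication]
    if hours is None:
        return None
    return ordered_at + timedelta(hours=hours)


def needs_renewal(indication: str, ordered_at: datetime, now: datetime) -> bool:
    """True once the indication-specific duration has fully elapsed."""
    expiry = telemetry_expiry(indication, ordered_at)
    return expiry is not None and now >= expiry


def transfusion_alert(most_recent_hgb: float) -> bool:
    """True when the latest hemoglobin (g/dL) is above the threshold,
    i.e. when decision support should prompt the ordering physician."""
    return most_recent_hgb > HGB_TRANSFUSION_THRESHOLD
```

Keying the duration to the indication, rather than applying one default to every telemetry order, lets the renewal prompt fire at an interval chosen for that indication. An ongoing indication never triggers renewal.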

RESULTS

During our first 2 years, we initiated ongoing projects directed at 6 major targets (Table 1). Our flagship project, Nebs No More After 24, decreased nebulizer rates by more than 50% on a high‐acuity medical floor, as previously published.[13] We created a financial model that primarily accounted for RT time and pharmaceutical costs and estimated savings of approximately $250,000 annually on this single medical ward (see Supporting Information, Table 1, in the online version of this article).[13]

| High‐Value Care Projects | Relevant Baseline Data | Goals of Project | Strategies |
|---|---|---|---|
| Nebs No More After 24: Improving appropriate use of respiratory services | The medicine service spent $1 million in direct costs on approximately 25,000 nebs for non‐ICU inpatients. | Reduce unnecessary nebs by >15% over 9 months. | Removed nebs from admit order set. |
| | | Improve transitions from nebs to MDIs. | Enlisted RTs and RNs to help with MDI teaching for patients. |
| | | Improve patient self‐administration of MDIs. | Implemented an educational program for medicine physicians. |
| | | | Created local publicity: posters, flyers, and pens. |
| | | | Provided data feedback to providers. |
| | | | Next step: Introduce a CPOE‐linked intervention. |
| Improving use of stress ulcer prophylaxis | 77% of ICU patients on acid suppressive therapy; 31% of these patients did not meet criteria for appropriate prophylaxis. | Reduce overuse and inappropriate use of SUP. | A team of pharmacists, nurses, and physicians developed targeted and evidence‐based UCSF guidelines on use of SUP. |
| | | | Developed and implemented a pharmacist‐led intervention to reduce inappropriate SUP in the ICUs that included the following: |
| | | | Reminders on admission and discharge from ICU |
| | | | Education and awareness initiative for prescribers |
| | | | ICU and service champions |
| | | | Culture change |
| | | | Next step: Incorporate indications in CPOE and work with ICU to incorporate appropriate GI prophylaxis as part of the standard ICU care bundle. |
| Blood utilization stewardship | 30% of transfusions on the hospital medicine service are provided to patients with a hemoglobin >8 g/dL. | Decrease units of blood transfused for a hemoglobin >8.0 g/dL by 25%. | Launched an educational campaign for attending and resident physicians. |
| | | | Monthly feedback to residents and attending physicians. |
| | | | Next step: Introduce a decision support system in the CPOE for blood transfusion orders in patients with most recent hemoglobin level >8 g/dL. |
| Improving telemetry utilization | 44% of monitored inpatients on the medical service (with length of stay >48 hours) remain on telemetry until discharge. | Decrease by 15% the number of patients (with length of stay >48 hours) who remain on telemetry until discharge. | Implemented an educational campaign for nursing groups and the medicine and cardiology housestaff. |
| | | | Launched a messaging campaign consisting of posters and pocket cards on appropriate telemetry use. |
| | | | Designed a feedback campaign with monthly e‐mail to housestaff on their ward team's telemetry use stats. |
| | | | Next step: Build a CPOE intervention that asks users to specify an approved indication for telemetry when they order monitoring. The indication then dictates how long the order is active (24, 48, or 72 hours, or ongoing), and the MD must renew the order after the elapsed time. |