
RCT confirms CT scan screening catches lung cancer early

CT scan screening of older people with heavy smoking histories – using lesion volume, not diameter, as a trigger for further work-up – reduced lung cancer deaths by about 30% in a randomized trial from the Netherlands and Belgium with almost 16,000 current and former smokers, investigators reported in the New England Journal of Medicine.

The Dutch-Belgian lung-cancer screening trial (Nederlands-Leuvens Longkanker Screenings Onderzoek [NELSON]) is “arguably the only adequately powered trial other than the” National Lung Screening Trial (NLST) in the United States to assess the role of CT scan screening among smokers, wrote University of London cancer epidemiologist Stephen Duffy, MSc, and University of Liverpool molecular oncology professor John Field, PhD, in an accompanying editorial.

NLST, which used lesion diameter, found approximately 20% lower lung cancer mortality with low-dose CT screening than with chest x-ray screening among 53,454 heavy smokers after a median follow-up of 6.5 years. The trial ultimately led the U.S. Preventive Services Task Force to recommend annual screening for people aged 55-80 years with a history of at least 30 pack-years.

European countries have considered similar programs but have hesitated “partly due to doubts fostered by the early publication of inconclusive results of a number of smaller trials in Europe. These doubts should be laid to rest,” Mr. Duffy and Dr. Field wrote.

“With the NELSON results, the efficacy of low-dose CT screening for lung cancer is confirmed. Our job is no longer to assess whether low-dose CT screening for lung cancer works; it does. Our job is to identify the target population in which it will be acceptable and cost effective,” they added.

The 15,789 NELSON participants (84% men; median age, 58 years; median smoking history, 38 pack-years) were randomized about 1:1 to either low-dose CT scan screening at baseline and at 1, 3, and 5.5 years or no screening.

At 10 years of follow-up, there were 5.58 lung cancer cases and 2.5 deaths per 1,000 person-years in the screened group versus 4.91 cases and 3.3 deaths per 1,000 person-years among controls. Lung-cancer mortality was 24% lower among screened subjects overall, and 33% lower among screened women. The team estimated that screening prevented about 60 lung cancer deaths.
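The 24% figure can be roughly checked against the two mortality rates quoted above. The sketch below assumes a simple crude rate ratio (screened rate divided by control rate); the investigators reported a cumulative rate ratio from their own analysis, so this is only a back-of-the-envelope consistency check, not their method. The helper name `relative_reduction` is hypothetical.

```python
def relative_reduction(rate_screened: float, rate_control: float) -> float:
    """Return 1 - (screened rate / control rate) as a fraction."""
    if rate_control <= 0:
        raise ValueError("control rate must be positive")
    return 1.0 - rate_screened / rate_control


# Lung cancer deaths per 1,000 person-years at 10 years, as reported in the article.
deaths_screened = 2.5
deaths_control = 3.3

# Crude ratio only; not the trial's cumulative rate ratio.
print(f"Crude mortality rate ratio: {deaths_screened / deaths_control:.2f}")  # 0.76
print(f"Crude relative reduction: {relative_reduction(deaths_screened, deaths_control):.0%}")  # 24%
```

The crude ratio lands close to the reported reduction, which suggests the quoted rates and the headline percentage are consistent with each other.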

Using volume instead of diameter “resulted in low[er] referral rates” for additional work-up, explained investigators led by H.J. de Koning, MD, PhD, of the department of public health at Erasmus University Medical Center in Rotterdam, the Netherlands. The referral rate was 2.1%, with a positive predictive value of 43.5%, compared with 24%, with a positive predictive value of 3.8%, in NLST.
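To see what those referral rates and positive predictive values (PPV) imply, the sketch below converts them into referrals, cancers found, and false-positive referrals per 1,000 scans. It assumes, purely for illustration, that each trial's referral rate and PPV apply to one comparable set of 1,000 scans; the two trials differed in population, screening schedule, and how rates were tallied, so the result is an order-of-magnitude comparison, not a trial finding. The names `ReferralOutcome` and `referral_outcomes` are hypothetical.

```python
from typing import NamedTuple


class ReferralOutcome(NamedTuple):
    referred: float
    true_positives: float
    false_positives: float


def referral_outcomes(referral_rate: float, ppv: float, n_screened: int = 1000) -> ReferralOutcome:
    """Split referrals into true and false positives using the positive predictive value."""
    referred = referral_rate * n_screened
    true_positives = ppv * referred  # PPV = true positives / all positive (referred) results
    false_positives = referred - true_positives
    return ReferralOutcome(referred, true_positives, false_positives)


# Rates as quoted in the article; illustrative per-1,000 comparison only.
trials = {
    "NELSON (volume)": (0.021, 0.435),
    "NLST (diameter)": (0.24, 0.038),
}

for name, (rate, ppv) in trials.items():
    out = referral_outcomes(rate, ppv)
    print(f"{name}: {out.referred:.1f} referred, "
          f"{out.true_positives:.1f} cancers, {out.false_positives:.1f} false positives")
```

Under these assumptions both approaches flag roughly nine cancers per 1,000 scans, but the diameter-based rule generates about 20 times as many false-positive referrals (roughly 231 versus 12), which is the kind of harm reduction the investigators describe.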

The upper limit of overdiagnosis risk – a major concern with any screening program – was 18.5% with NLST versus 8.9% with NELSON, they wrote.

In short: “Volume CT screening enabled a significant reduction of harms (e.g., false positive tests and unnecessary work-up procedures) without jeopardizing favorable outcomes,” the investigators wrote. Indeed, an ad hoc analysis suggested “more-favorable effects on lung-cancer mortality than in the NLST, despite lower referral rates for suspicious lesions” and the fact that NLST used annual screening.

“Recently,” Mr. Duffy and Dr. Field explained in their editorial, “the NELSON investigators evaluated both diameter and volume measurement to estimate lung-nodule size as an imaging biomarker for nodule management; this provided evidence that using mean or maximum axial diameter to assess nodule volume led to a substantial overestimation of nodule volume.” Direct measurement of volume “resulted in a substantial number of early-stage cancers identified at the time of diagnosis and avoided false positives from the overestimation incurred by management based on diameter.”

“The lung-nodule management system used in the NELSON trial has been advocated in the European position statement on lung-cancer screening. This will improve the acceptability of the intervention, because the rate of further investigation has been a major concern in lung cancer screening,” they wrote.

Baseline characteristics did not differ significantly between the screened and unscreened groups in NELSON, except for a slightly longer duration of smoking in the screened group.

The work was funded by the Netherlands Organization of Health Research and Development, among others. Mr. Duffy and Dr. de Koning didn’t report any disclosures. Dr. Field is an advisor for AstraZeneca, Epigenomics, and Nucleix, and has a research grant to his university from Janssen.

SOURCE: de Koning HJ et al. N Engl J Med. 2020 Jan 29. doi: 10.1056/NEJMoa1911793.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

The scents-less life and the speaking mummy

If I only had a nose

Deaf and blind people get all the attention. Special schools, Braille, sign language, even a pinball-focused rock opera. And it is easy to see why: Those senses are kind of important when it comes to navigating the world. But what if you have to live without one of the less-cool senses? What if the nose doesn’t know?

According to research published in Clinical Otolaryngology, up to 5% of the world’s population has some sort of smell disorder, preventing them from either smelling correctly or smelling anything at all. And the effects of this on everyday life are drastic.

In a survey of 71 people with smell disorders, the researchers found that patients experience a smorgasbord of negative effects – ranging from poor hazard perception and poor sense of personal hygiene, to an inability to enjoy food and an inability to link smell to happy memories. The whiff of gingerbread on Christmas morning, the smoke of a bonfire on a summer evening – the smell-deprived miss out on them all. The negative emotions those people experience read like a recipe for your very own homemade Sith lord: sadness, regret, isolation, anxiety, anger, frustration. A path to the dark side, losing your scent is.

Speaking of fictional bad guys, this nasal-based research really could have benefited one Lord Volde ... fine, You-Know-Who. Just look at that face. That’s a man who can’t smell. You can’t tell us he wouldn’t have turned out better if only Dorothy had picked him up on the yellow brick road instead of some dumb scarecrow.

The sound of hieroglyphics

The Rosetta Stone revealed the meaning of Egyptian hieroglyphics and unlocked the ancient language of the Pharaohs for modern humans. But that mute stele said nothing about what those who uttered that ancient tongue sounded like.

Researchers at London’s Royal Holloway College may now know the answer. At least, a monosyllabic one.

The answer comes (indirectly) from Egyptian priest Nesyamun, a former resident of Thebes who worked at the temple of Karnak, but who now calls the Leeds City Museum home. Or, to be precise, Nesyamun’s 3,000-year-old mummified remains live on in Leeds. Nesyamun’s religious duties during his Karnak career likely required a smooth singing style and an accomplished speaking voice.

In a paper published in Scientific Reports, the British scientists say they’ve now heard the sound of the Egyptian priest’s long-silenced liturgical voice.

Working from CT scans of Nesyamun’s relatively well-preserved vocal-tract soft tissue, the scientists used a 3D-printed vocal tract and an electronic larynx to synthesize the actual sound of his voice.

And the result? Did the crooning priest of Karnak utter a Boris Karloffian curse upon those who had disturbed his millennia-long slumber? Did he deliver a rousing hymn of praise to Egypt’s ruler during the turbulent 1070s BCE, Ramses XI?

In fact, what emerged from Nesyamun’s synthesized throat was ... “eh.” Maybe “a,” as in “bad.”

Given the state of the priest’s tongue (shrunken) and his soft palate (missing), the researchers say those monosyllabic sounds are the best Nesyamun can muster in his present state. Other experts say actual words from the ancients are likely impossible.

Perhaps one day, science will indeed be able to synthesize whole words or sentences from other well-preserved residents of the distant past. May we all live to hear an unyielding Ramses II himself chew the scenery like his Hollywood doppelganger, Yul Brynner: “So let it be written! So let it be done!”

To beard or not to beard

People are funny, and men, who happen to be people, are no exception.

Men, you see, have these things called beards, and there are definitely more men running around with facial hair these days. A lot of women go through a lot of trouble to get rid of a lot of their hair. But men, well, we grow extra hair. Why?

That’s what Honest Amish, a maker of beard-care products, wanted to know. They commissioned OnePoll to conduct a survey of 2,000 Americans, both men and women, to learn all kinds of things about beards.

So what did they find? Facial hair confidence, that’s what. Three-quarters of men said that a beard made them feel more confident than did a bare face, and 73% said that facial hair makes a man more attractive. That number was a bit lower among women, 63% of whom said that facial hair made a man more attractive.

That doesn’t seem very funny, does it? We’re getting there.

Male respondents also were asked what they would do to get the perfect beard: 40% would be willing to spend a night in jail or give up coffee for a year, and 38% would stand in line at the DMV for an entire day. Somewhat less popular responses included giving up sex for a year (22%) – seems like a waste of all that newfound confidence – and shaving their heads (18%).

And that, we don’t mind saying, is a hair-raising conclusion.

Psoriasis: A look back over the past 50 years, and forward to next steps

Imagine a patient suffering with horrible psoriasis for decades having failed “every available treatment.” Imagine him living all that time with “flaking, cracking, painful, itchy skin,” only to develop cirrhosis after exposure to toxic therapies.

Then imagine the experience for that patient when, 2 weeks after initiating treatment with a new interleukin-17 inhibitor, his skin clears completely.

“Two weeks later it’s all gone – it was a moment to behold,” said Joel M. Gelfand, MD, professor of dermatology and epidemiology at the University of Pennsylvania, Philadelphia, who had cared for the man for many years before a psoriasis treatment revolution of sorts took the field of dermatology by storm.

“The progress has been breathtaking – there’s no other way to describe it – and it feels like a miracle every time I see a new patient who has tough disease and I have all these things to offer them,” he continued. “For most patients, I can really help them and make a major difference in their life.”

said Mark Lebwohl, MD, Waldman professor of dermatology and chair of the Kimberly and Eric J. Waldman department of dermatology at the Icahn School of Medicine at Mount Sinai, New York.

Dr. Lebwohl recounted some of his own experiences with psoriasis patients before the advent of treatments – particularly biologics – that have transformed practice.

There was a time when psoriasis patients had little more to turn to than the effective but “disgusting” Goeckerman regimen, which involved cycles of UVB light exposure and topical crude coal tar application. Initially, the regimen, introduced in the 1920s, was used around the clock on an inpatient basis until the skin cleared, Dr. Lebwohl said.

In the 1970s, the immunosuppressive chemotherapy drug methotrexate became the first oral systemic therapy approved for severe psoriasis. For those with disabling disease, it offered some hope for relief, but only about 40% of patients achieved at least a 75% reduction in the Psoriasis Area and Severity Index score (PASI 75), he said, adding that they did so at the expense of the liver and bone marrow. “But it was the only thing we had for severe psoriasis other than light treatments.”
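PASI 75 is a threshold on relative improvement: a patient qualifies when the PASI score falls by at least 75% from baseline, and PASI 100 means complete clearance. A minimal sketch of that check follows; the function names and example scores are hypothetical, and the PASI score itself (which ranges from 0 to 72) is assumed to have been computed already.

```python
def pasi_improvement(baseline: float, current: float) -> float:
    """Fractional improvement in PASI score relative to baseline."""
    if baseline <= 0:
        raise ValueError("baseline PASI must be positive")
    return (baseline - current) / baseline


def reaches_pasi(baseline: float, current: float, threshold: int) -> bool:
    """True if improvement is at least `threshold` percent (e.g. 75 for PASI 75).

    Cross-multiplies instead of dividing so exact boundary cases
    (such as a 20 -> 5 drop for PASI 75) are not lost to rounding.
    """
    if baseline <= 0:
        raise ValueError("baseline PASI must be positive")
    return (baseline - current) * 100 >= threshold * baseline


# Hypothetical patient: baseline PASI 20, follow-up PASI 4 (80% improvement).
print(reaches_pasi(20.0, 4.0, 75))  # True: PASI 75 reached
print(reaches_pasi(20.0, 4.0, 90))  # False: PASI 90 not reached
```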

In the 1980s and 1990s, oral retinoids emerged as a treatment for psoriasis, and cyclosporine, an immunosuppressive drug used to prevent organ rejection in transplant patients, was found to clear psoriasis in transplant recipients who had the disease. Although they brought relief to some patients with severe, disabling disease, these also came with a high price. “It’s not that effective, and it has lots of side effects ... and causes kidney damage in essentially 100% of patients,” Dr. Lebwohl said of cyclosporine.

“So we had treatments that worked, but because the side effects were sufficiently severe, a lot of patients were not treated,” he said.

Enter the biologics era

The early 2000s brought the first two biologic approvals for psoriasis, both T-cell–targeted therapies: alefacept (Amevive), a “modestly effective, but quite safe” immunosuppressive dimeric fusion protein approved in early 2003 for moderate to severe plaque psoriasis, and efalizumab (Raptiva), a recombinant humanized monoclonal antibody approved in October 2003. Alefacept was later withdrawn from the market voluntarily as newer agents became available, and efalizumab was withdrawn in 2009 because of a link with development of progressive multifocal leukoencephalopathy.

Tumor necrosis factor (TNF) blockers, which had already been used effectively for rheumatoid arthritis and Crohn’s disease, emerged next. They were highly effective, much safer than the older systemic treatments, and gained “very widespread use,” Dr. Lebwohl said.

His colleague Alice B. Gottlieb, MD, PhD, was among the pioneers in the development of TNF blockers for the treatment of psoriasis. Her seminal, investigator-initiated paper on the efficacy and safety of infliximab (Remicade) monotherapy for plaque-type psoriasis, published in the Lancet in 2001, helped launch the current era, in which many psoriasis patients achieve 100% PASI responses with limited side effects, he said. Subsequent research elucidated the role of IL-12 and IL-23, leading to effective treatments such as ustekinumab (Stelara), and later the role of IL-17, which is, “in fact, the molecule closest to the pathogenesis of psoriasis,” he explained.

“If you block IL-17, you get rid of psoriasis,” he said, noting that several companies now have approved antibodies against IL-17. “Taltz [ixekizumab] and Cosentyx [secukinumab] are the leading ones, and Siliq [brodalumab] blocks the receptor for IL-17, so it is very effective.”

Another novel biologic, bimekizumab, is on the horizon. It blocks both IL-17A and IL-17F and appears highly effective in psoriasis and psoriatic arthritis (PsA).

“Biologics were the real start of the [psoriasis treatment] revolution,” he said. “When I started out, I would speak at patient meetings, and the patients were angry at their physicians; they thought they weren’t aggressive enough, they were very frustrated.”

Dr. Lebwohl described patients he would see at annual National Psoriasis Foundation meetings: “There were patients in wheelchairs, because they couldn’t walk. They would be red and scaly all over ... you could have literally swept up scale like it was snow after one of those meetings.

“You go forward to around 2010 – nobody’s in wheelchairs anymore, everybody has clear skin, and it’s become a party; patients are no longer angry – they are thrilled with the results they are getting from much safer and much more effective drugs,” he said. “So it’s been a pleasure taking care of those patients and going from a very difficult time of treating them, to a time where we’ve done a great job treating them.”

Dr. Lebwohl noted that a “large number of dermatologists have been involved with the development of these drugs and making sure they succeed, and that has also been a pleasure to see.”

Dr. Gottlieb, whom Dr. Lebwohl has described as “a superstar” in the fields of dermatology and rheumatology, is one such researcher. In an interview, she reflected on how her work “opened the field,” led many of her trainees to go on to do “great work,” and changed the lives of patients.

“It’s nice to feel that I really did change, fundamentally, how psoriasis patients are treated,” said Dr. Gottlieb, who is a clinical professor in the department of dermatology at the Icahn School of Medicine at Mount Sinai. “That obviously feels great.”

She recalled one patient, “a 6-foot-5 biker with bad psoriasis.” “Literally, the minute the door closed, he was crying about how horrible his disease was,” she said.

“And I cleared him ... and then you get big hugs – it just feels extremely good ... giving somebody their life back,” she said.

Dr. Gottlieb has been involved in much of the work in developing biologics for psoriasis, including the ongoing work with bimekizumab for PsA as mentioned by Dr. Lebwohl.

If the phase 2 bimekizumab data are replicated in the phase 3 trials now underway at her center, “that can really raise the bar ... so if it’s reproducible, it’s very exciting,” she said.

“It’s exciting to have an IL-23 blocker that, at least in clinical trials, showed inhibition of radiographic progression [in PsA],” she said. “That’s guselkumab; those data are already out, and I was involved with that.”

The early work of Dr. Gottlieb and others has also “spread to other diseases,” like hidradenitis suppurativa and atopic dermatitis, she said, noting that numerous studies are underway.

Aside from curing all patients, her ultimate goal is getting to a point where psoriasis has no effect on patients’ quality of life.

“And I see it already,” she said. “It’s happening, and it’s nice to see that it’s happening in children now, too; several of the drugs are approved in kids.”

Alan Menter, MD, chairman of the division of dermatology at Baylor University Medical Center, Dallas, is also a prolific researcher and chaired the guidelines committee that published two new sets of psoriasis treatment guidelines in 2019. He said that the field of dermatology was “late to the biologic evolution,” as many of the early biologics were first approved for PsA.

“But over the last 10 years, things have changed dramatically,” he said. “After that we suddenly leapt ahead of everybody. ... We now have 11 biologic drugs approved for psoriasis, which is more than any other disease has available.”

It’s been “highly exciting” to see this “evolution and revolution,” he commented, adding that one of the next challenges is to address the comorbidities, such as cardiovascular disease, associated with psoriasis.

“The big question now ... is if you improve skin and you improve joints, can you potentially reduce the risk of coronary artery disease,” he said. “Everybody is looking at that, and to me it’s one of the most exciting things that we’re doing.”

Work is ongoing to determine whether IL-17 and IL-23 inhibitors have “other indications outside of the skin and joints,” both within and outside of dermatology.

Like Dr. Gottlieb, Dr. Menter mentioned the potential in hidradenitis suppurativa, as well as in a condition that is rarely discussed or studied: genital psoriasis. Ixekizumab has recently been shown to work in about 75% of patients with genital psoriasis, he noted.

Another important area of research is the identification of biomarkers for predicting response and relapse, he said. So far, biomarker research has been disappointing, he added, predicting that it will take at least 3-5 years to identify biomarkers that can help guide treatment.

Indeed, Dr. Gelfand, who also is director of the Psoriasis and Phototherapy Treatment Center, vice chair of clinical research, and medical director of the dermatology clinical studies unit at the University of Pennsylvania, agreed there is a need for research to improve treatment selection.

Advances are being made in genetics, with more than 80 genes now identified as being related to psoriasis, and in medical informatics, which allows thousands of patients to be followed for years, he said. This work could elucidate immunopathological features that can improve treatments, help predict and prevent comorbidities, and further improve outcomes, he noted.

“We also need care that is more patient centered,” he said, describing the ongoing pragmatic LITE trial of home- or office-based phototherapy, for which he is the lead investigator, and other studies that he hopes will expand access to care.

Kenneth Brian Gordon, MD, chair and professor of dermatology at the Medical College of Wisconsin, Milwaukee, whose career started in basic science immunology, pointed to the need to extend these benefits to patients with more-moderate disease. Like Dr. Menter, he identified psoriasis as the area of medicine that has seen the greatest degree of advancement, except perhaps for hepatitis C.

He described the process not as a “bench-to-bedside” story, but as a bedside-to-bench, then “back-to-bedside” story.

Clinical experience with the early T-cell–targeted biologics and anti-TNF agents went from bedside to bench, leading researchers to recognize the importance of the IL-23 and IL-17 pathways. That understanding then led back to the bedside with the development of the newest agents, and to a “huge difference in patients’ lives.”

“But we’ve gotten so good at treating patients with severe disease ... the question now is how to take care of those with more-moderate disease,” he said, noting that a focus on cost and better delivery systems will be needed for that population.

That research is underway, and the future looks bright – and clear.

“I think with psoriasis therapy and where we’ve come in the last 20 years ... we have a hard time remembering what it was like before we had biologic agents,” he said. “Our perspective has changed a lot, and sometimes we forget that.”

In fact, “psoriasis has sort of dragged dermatology into the world of modern clinical trial science, and we can now apply that to all sorts of other diseases,” he said. “The psoriasis trials were the first really well-done large-scale trials in dermatology, and I think that has given dermatology a real leg up in how we do clinical research and how we do evidence-based medicine.”

All of the doctors interviewed for this story have received funds and/or honoraria from, consulted with, been employed by, or served on the advisory boards of manufacturers of biologics. Dr. Gelfand is a copatent holder of resiquimod for treatment of cutaneous T-cell lymphoma and is deputy editor of the Journal of Investigative Dermatology.


Imagine a patient suffering with horrible psoriasis for decades having failed “every available treatment.” Imagine him living all that time with “flaking, cracking, painful, itchy skin,” only to develop cirrhosis after exposure to toxic therapies.

Then imagine the experience for that patient when, 2 weeks after initiating treatment with a new interleukin-17 inhibitor, his skin clears completely.

“Two weeks later it’s all gone – it was a moment to behold,” said Joel M. Gelfand, MD, professor of dermatology and epidemiology at the University of Pennsylvania, Philadelphia, who had cared for the man for many years before a psoriasis treatment revolution of sorts took the field of dermatology by storm.

“The progress has been breathtaking – there’s no other way to describe it – and it feels like a miracle every time I see a new patient who has tough disease and I have all these things to offer them,” he continued. “For most patients, I can really help them and make a major difference in their life.”

said Mark Lebwohl, MD, Waldman professor of dermatology and chair of the Kimberly and Eric J. Waldman department of dermatology at the Icahn School of Medicine at Mount Sinai, New York.

Dr. Lebwohl recounted some of his own experiences with psoriasis patients before the advent of treatments – particularly biologics – that have transformed practice.

There was a time when psoriasis patients had little more to turn to than the effective – but “disgusting” – Goeckerman Regimen involving cycles of UVB light exposure and topical crude coal tar application. Initially, the regimen, which was introduced in the 1920s, was used around the clock on an inpatient basis until the skin cleared, Dr. Lebwohl said.

In the 1970s, the immunosuppressive chemotherapy drug methotrexate became the first oral systemic therapy approved for severe psoriasis. For those with disabling disease, it offered some hope for relief, but only about 40% of patients achieved at least a 75% reduction in the Psoriasis Area and Severity Index score (PASI 75), he said, adding that they did so at the expense of the liver and bone marrow. “But it was the only thing we had for severe psoriasis other than light treatments.”

In the 1980s and 1990s, oral retinoids emerged as a treatment for psoriasis, and the immunosuppressive drug cyclosporine used to prevent organ rejection in some transplant patients was found to clear psoriasis in affected transplant recipients. Although they brought relief to some patients with severe, disabling disease, these also came with a high price. “It’s not that effective, and it has lots of side effects ... and causes kidney damage in essentially 100% of patients,” Dr. Lebwohl said of cyclosporine.

“So we had treatments that worked, but because the side effects were sufficiently severe, a lot of patients were not treated,” he said.

Enter the biologics era

The early 2000s brought the first two approvals for psoriasis: alefacept (Amevive), a “modestly effective, but quite safe” immunosuppressive dimeric fusion protein approved in early 2003 for moderate to severe plaque psoriasis, and efalizumab (Raptiva), a recombinant humanized monoclonal antibody approved in October 2003; both were T-cell–targeted therapies. The former was withdrawn from the market voluntarily as newer agents became available, and the latter was withdrawn in 2009 because of a link with development of progressive multifocal leukoencephalopathy.

Tumor necrosis factor (TNF) blockers, which had been used effectively for RA and Crohn’s disease, emerged next, and were highly effective, much safer than the systemic treatments, and gained “very widespread use,” Dr. Lebwohl said.

His colleague Alice B. Gottlieb, MD, PhD, was among the pioneers in the development of TNF blockers for the treatment of psoriasis. Her seminal, investigator-initiated paper on the efficacy and safety of infliximab (Remicade) monotherapy for plaque-type psoriasis published in the Lancet in 2001 helped launch the current era in which many psoriasis patients achieve 100% PASI responses with limited side effects, he said, explaining that subsequent research elucidated the role of IL-12 and -23 – leading to effective treatments like ustekinumab (Stelara), and later IL-17, which is, “in fact, the molecule closest to the pathogenesis of psoriasis.”

“If you block IL-17, you get rid of psoriasis,” he said, noting that there are now several companies with approved antibodies to IL-17. “Taltz [ixekizumab] and Cosentyx [secukinumab] are the leading ones, and Siliq [brodalumab] blocks the receptor for IL-17, so it is very effective.”

Another novel biologic – bimekizumab – is on the horizon. It blocks both IL-17a and IL-17f, and appears highly effective in psoriasis and psoriatic arthritis (PsA). “Biologics were the real start of the [psoriasis treatment] revolution,” he said. “When I started out I would speak at patient meetings and the patients were angry at their physicians; they thought they weren’t aggressive enough, they were very frustrated.”

Dr. Lebwohl described patients he would see at annual National Psoriasis Foundation meetings: “There were patients in wheel chairs, because they couldn’t walk. They would be red and scaly all over ... you could have literally swept up scale like it was snow after one of those meetings.

“You go forward to around 2010 – nobody’s in wheelchairs anymore, everybody has clear skin, and it’s become a party; patients are no longer angry – they are thrilled with the results they are getting from much safer and much more effective drugs,” he said. “So it’s been a pleasure taking care of those patients and going from a very difficult time of treating them, to a time where we’ve done a great job treating them.”

Dr. Lebwohl noted that a “large number of dermatologists have been involved with the development of these drugs and making sure they succeed, and that has also been a pleasure to see.”

Dr. Gottlieb, who Dr. Lebwohl has described as “a superstar” in the fields of dermatology and rheumatology, is one such researcher. In an interview, she looked back on her work and the ways that her work “opened the field,” led to many of her trainees also doing “great work,” and changed the lives of patients.

“It’s nice to feel that I really did change, fundamentally, how psoriasis patients are treated,” said Dr. Gottlieb, who is a clinical professor in the department of dermatology at the Icahn School of Medicine at Mount Sinai. “That obviously feels great.”

She recalled a patient – “a 6-foot-5 biker with bad psoriasis” – who “literally, the minute the door closed, he was crying about how horrible his disease was.”

“And I cleared him ... and then you get big hugs – it just feels extremely good ... giving somebody their life back,” she said.

Dr. Gottlieb has been involved in much of the work in developing biologics for psoriasis, including the ongoing work with bimekizumab for PsA as mentioned by Dr. Lebwohl.

If the phase 2 data with bimekizumab are replicated in the ongoing phase 3 trials now underway at her center, “that can really raise the bar ... so if it’s reproducible, it’s very exciting.”

“It’s exciting to have an IL-23 blocker that, at least in clinical trials, showed inhibition of radiographic progression [in PsA],” she said. “That’s guselkumab those data are already out, and I was involved with that.”

The early work of Dr. Gottlieb and others has also “spread to other diseases,” like hidradenitis suppurativa and atopic dermatitis, she said, noting that numerous studies are underway.

Aside from curing all patients, her ultimate goal is getting to a point where psoriasis has no effect on patients’ quality of life.

“And I see it already,” she said. “It’s happening, and it’s nice to see that it’s happening in children now, too; several of the drugs are approved in kids.”

Alan Menter, MD, chairman of the division of dermatology at Baylor University Medical Center, Dallas, also a prolific researcher – and chair of the guidelines committee that published two new sets of guidelines for psoriasis treatment in 2019 – said that the field of dermatology was “late to the biologic evolution,” as many of the early biologics were first approved for PsA.

“But over the last 10 years, things have changed dramatically,” he said. “After that we suddenly leapt ahead of everybody. ... We now have 11 biologic drugs approved for psoriasis, which is more than any other disease has available.”

It’s been “highly exciting” to see this “evolution and revolution,” he commented, adding that one of the next challenges is to address the comorbidities, such as cardiovascular disease, associated with psoriasis.

“The big question now ... is if you improve skin and you improve joints, can you potentially reduce the risk of coronary artery disease,” he said. “Everybody is looking at that, and to me it’s one of the most exciting things that we’re doing.”

Work is ongoing to look at whether the IL-17s and IL-23s have “other indications outside of the skin and joints,” both within and outside of dermatology.

Like Dr. Gottlieb, Dr. Menter also mentioned the potential for hidradenitis suppurativa, and also for a condition that is rarely discussed or studied: genital psoriasis. Ixekizumab has recently been shown to work in about 75% of patients with genital psoriasis, he noted.

Another important area of research is the identification of biomarkers for predicting response and relapse, he said. For now, biomarker research has disappointed, he added, predicting that it will take at least 3-5 years before biomarkers to help guide treatment are identified.

Indeed, Dr. Gelfand, who also is director of the Psoriasis and Phototherapy Treatment Center, vice chair of clinical research, and medical director of the dermatology clinical studies unit at the University of Pennsylvania, agreed there is a need for research to improve treatment selection.

Advances are being made in genetics – with more than 80 different genes now identified as being related to psoriasis – and in medical informatics – which allow thousands of patients to be followed for years, he said, noting that this could elucidate immunopathological features that can improve treatments, predict and prevent comorbidity, and further improve outcomes.

“We also need care that is more patient centered,” he said, describing the ongoing pragmatic LITE trial of home- or office-based phototherapy for which he is the lead investigator, and other studies that he hopes will expand access to care.

Kenneth Brian Gordon, MD, chair and professor of dermatology at the Medical College of Wisconsin, Milwaukee, whose career started in basic science immunology, pointed to the need to extend these benefits to patients with more-moderate disease. Like Dr. Menter, he identified psoriasis as the area of medicine that has seen the greatest advances, except perhaps for hepatitis C.

He described the process not as a “bench-to-bedside” story, but as a bedside-to-bench, then “back-to-bedside” story.

It was really about taking those early T-cell–targeted biologics and anti-TNF agents from bedside to bench, which led to the realization of the importance of the IL-23 and IL-17 pathways; that understanding then led back to the bedside with the development of the newest agents – and to a “huge difference in patients’ lives.”

“But we’ve gotten so good at treating patients with severe disease ... the question now is how to take care of those with more-moderate disease,” he said, noting that a focus on cost and better delivery systems will be needed for that population.

That research is underway, and the future looks bright – and clear.

“I think with psoriasis therapy and where we’ve come in the last 20 years ... we have a hard time remembering what it was like before we had biologic agents,” he said. “Our perspective has changed a lot, and sometimes we forget that.”

In fact, “psoriasis has sort of dragged dermatology into the world of modern clinical trial science, and we can now apply that to all sorts of other diseases,” he said. “The psoriasis trials were the first really well-done large-scale trials in dermatology, and I think that has given dermatology a real leg up in how we do clinical research and how we do evidence-based medicine.”

All of the doctors interviewed for this story have received funds and/or honoraria from, consulted with, are employed with, or served on the advisory boards of manufacturers of biologics. Dr. Gelfand is a copatent holder of resiquimod for treatment of cutaneous T-cell lymphoma and is deputy editor of the Journal of Investigative Dermatology.

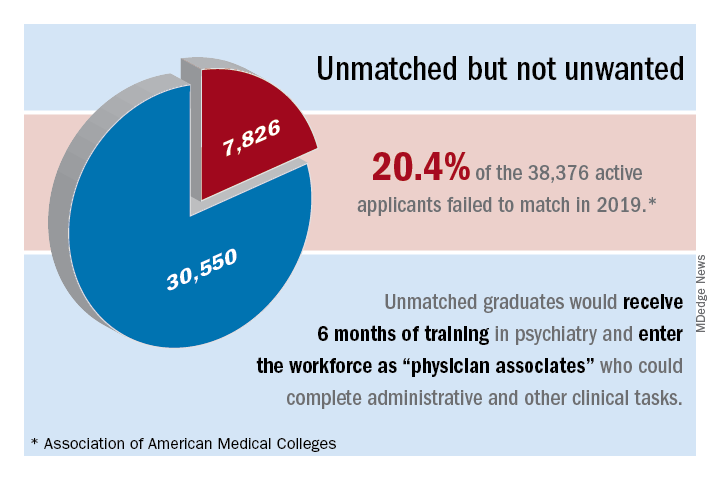

Are unmatched residency graduates a solution for ‘shrinking shrinks’?

‘Physician associates’ could be used to expand the reach of psychiatry

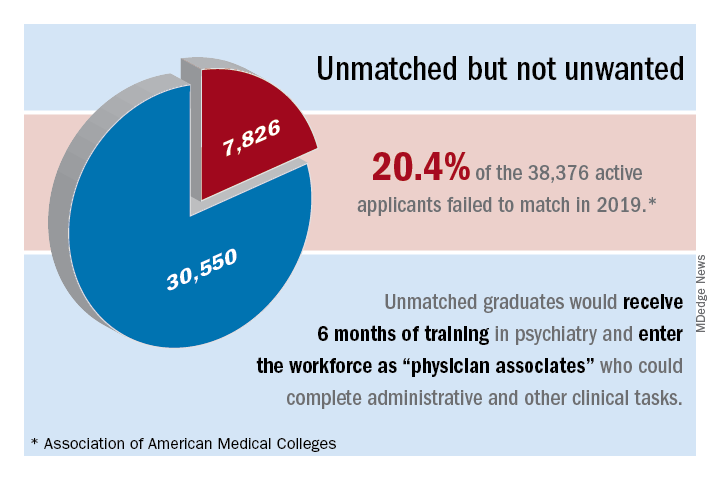

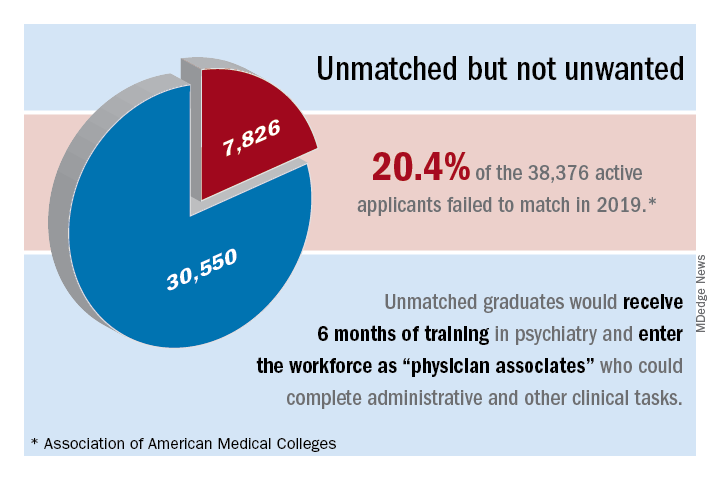

For many years now, we have been lamenting the shortage of psychiatrists practicing in the United States. At this point, we must identify possible solutions.1,2 Currently, the shortage of practicing psychiatrists in the United States could be as high as 45,000.3 The major problem is that the number of psychiatry residency positions will not increase in the foreseeable future; thus, producing more psychiatrists is not an option.

Medicare pays about $150,000 per residency slot per year. To solve the mental health access problem by training more psychiatrists, Medicare would need to spend $27 billion (45,000 x $150,000 x 4 years)*, which is not feasible.4 The national average starting salary for psychiatrists in 2018-2019 was about $273,000 (much lower in academic institutions), according to the physician recruiting firm Merritt Hawkins. That salary is modest compared with those offered in other medical specialties, so many graduates choose other, more lucrative specialties. And we know that increasing psychiatrists’ salaries alone would not lead more people to choose psychiatry. On paper, psychiatrists may work a 40-hour week, but in reality they end up working 60 hours a week.

To make matters worse, family medicine and internal medicine doctors generally would rather not treat people with mental illness, and they engage in “cherry-picking and lemon-dropping.” While many patients present to primary care with mental health issues, these physicians are hindered by a lack of time and of education in psychiatric disorders and their treatment. In short, the mental health field cannot count on primary care physicians.

Meanwhile, there are thousands of unmatched residency graduates. In light of those realities, psychiatry residency programs could perhaps provide these unmatched graduates with 6 months of training and use them to supplement the workforce. These medical doctors, or “physician associates,” could be paired with a few psychiatrists to do clinical and administrative work. With one in four individuals having mental health issues, and more and more people seeking help because of increasing awareness and the benefits that accompanied the Affordable Care Act (ACA), physician associates might ease the workload of psychiatrists so that they can deliver better care to more people. We must take advantage of these two trends: the surge in unmatched graduates and “shrinking shrinks,” or the decline in the psychiatric workforce pool. (The Royal College of Physicians has established a category of clinicians called physician associates,5 but they are comparable to physician assistants in the United States. As you will see, the construct I am proposing is different.)

The current landscape

Currently, psychiatrists are under a lot of pressure to see a certain number of patients. Patients consistently complain that psychiatrists spend no more than 15 minutes with them, that visits are interrupted by phone calls, and that they are not being heard or helped. Burnout, a silent epidemic among physicians, is relatively prevalent in psychiatry.6 As a result, some psychiatrists are reducing their hours or retiring early. Psychiatry has the third-oldest workforce of any medical specialty, with 59% of current psychiatrists aged 55 years or older.7 A better pay/work ratio and work/life balance would enable psychiatrists to enjoy more fulfilling careers.

Many psychiatrists are spending a lot of their time in research, administration, and the classroom. In addition to those issues, the United States currently has a broken mental health care system.8 Finally, the medical practice landscape has changed dramatically in recent years, and those changes undermine both the effectiveness and well-being of clinicians.

The historical landscape

Some people proudly refer to the deinstitutionalization of mental asylums and state mental hospitals in the United States. But where have these patients gone? According to a U.S. Justice Department report, 2,220,300 adults were incarcerated in U.S. federal and state prisons and county jails in 2013.9 In addition, 4,751,400 adults in 2013 were on probation or parole. The percentages of inmates in state and federal prisons and local jails with a psychiatric diagnosis were 56%, 45%, and 64%, respectively.

I work in Maryland correctional institutions, part of the Maryland Department of Public Safety and Correctional Services. One thing I consistently hear from several correctional officers is: “Had these inmates received timely help and care, they wouldn’t have ended up behind bars.” Because of the criminalization of mental illness, in 44 states the number of people with mental illness is higher in a jail or prison than in the largest state psychiatric hospital, according to the Treatment Advocacy Center. We bear responsibility for many of the inmates currently in correctional facilities for crimes related to mental health problems. In Maryland, a small state, there are 30,000 inmates in jails and in state and federal prisons. The average cost of a meal is $1.36, so $1.36 x 3 meals x 30,000 inmates = $122,400 per day for food alone, and that figure does not include any other expenses. By using money and manpower wisely and addressing individuals’ mental health problems before they commit crimes, we could achieve better outcomes.

I used to work for MedOptions Inc. doing psychiatry consults at nursing homes and assisted-living facilities. Because of the shortage of psychiatrists and nurse practitioners, especially in the suburbs and rural areas, those patients could not be seen in a timely manner, even for their 3-month routine follow-ups. As my colleagues and I have written previously, many elderly individuals with major neurocognitive disorders are not taking Food and Drug Administration–approved cognitive enhancers, such as donepezil, galantamine, and memantine.10 Instead, many of those patients are taking benzodiazepines, which are associated with cognitive impairment and an increased risk of pneumonia and falls. Benzodiazepines also can cause and/or worsen disinhibited behavior. Also, in those settings, crisis situations often are addressed days to weeks later because of the doctor shortage. This situation is going to get worse, because this patient population is growing.

Child and geriatric psychiatry shortages

Shortages of child and geriatric psychiatrists are even more severe than those in general psychiatry.11 The many years of training and low salaries are among the reasons some residents choose not to do a fellowship. These residency graduates would rather join a practice at an attending salary than spend an additional 1 to 2 years of training at a fellow’s salary. Student loans of $100,000–$500,000 after residency also discourage some from pursuing fellowship opportunities. We need to consider models such as 2 years of residency with 2 years of a child psychiatry fellowship or 3 years of residency with 1 year of geriatric psychiatry fellowship. Working as an adult attending physician (50% of the time) and concurrently doing a fellowship (50% of the time) while receiving an attending salary might motivate more people to complete a fellowship.

In specialties such as radiology, international medical graduates (IMGs) who have completed radiology residency training in other countries can complete several years of fellowship training in a radiology subspecialty and then practice in the United States as board-eligible MDs. Likewise, in line with the model proposed here, we could provide unmatched graduates who have no residency training with 3 to 4 years of child psychiatry and geriatric psychiatry training in addition to some adult psychiatry training.

Implementing such a model might address the shortage of child and geriatric psychiatrists. In 2015, there were 56 geriatric psychiatry fellowship programs; 54 positions were filled, and 51 fellows completed training.12 “It appears that a reasonable percentage of IMGs who obtain a fellowship in geriatric psychiatry do not have an intent of pursuing a career in the field,” Marc H. Zisselman, MD, former geriatric psychiatry fellowship director and currently with the Einstein Medical Center in Philadelphia, told me in 2016. These numbers are far from sufficient to meet the nation’s needs. Hence, implementing alternative strategies is imperative.

Administrative tasks and care

What consumes a psychiatrist’s time and leads to burnout? Much of the answer lies in administrative tasks. Administrative work is not an effective use of time for an MD who has spent more than a decade in medical school, residency, and fellowship training. Although electronic medical record (EMR) systems are considered a major advancement, using them throughout the day is associated with exhaustion.

Many physicians feel that EMRs have slowed them down, and some are not equipped to use them quickly and efficiently. EMRs also have led physicians to make minimal eye contact during patient interviews. Patients often complain: “I am talking, and the doctor is looking at the computer and typing.” Patients consider this behavior unprofessional and rude. In a time-and-motion study of 57 U.S. physicians in family medicine, internal medicine, cardiology, and orthopedics, physicians spent 27.0% of their work day on direct clinical face time with patients and 49.2% on EMR and desk work. While in the examination room with patients, physicians spent 52.9% of their time on direct clinical face time and 37.0% on EMR and desk work. Outside office hours, physicians spent up to 2 hours of personal time each night on additional computer and other clerical work.13

Several EMR software systems, such as CareLogic, Cerner, Epic, NextGen, PointClickCare, and Sunrise, are used in the United States. The U.S. Veterans Affairs Medical Centers (VAMCs) use the Computerized Patient Record System (CPRS) across the country, and VA clinicians find CPRS extremely useful when they move from one VAMC to another. Hospitals and universities could likewise adopt a single software system such as CPRS; as things stand, clinicians who change jobs often find it hard to adapt to a new system.

Because psychiatrists are wasting so much time on administrative tasks, they might be unable to do a good job of making the right diagnoses and prescribing the best treatments. When I ask patients what they have been diagnosed with, they tell me: “It depends on who you ask,” or “I’ve been diagnosed with everything.” This suggests that we are not doing a good job, or that something is not right.

Currently, psychiatrists do not have the time and/or interest to make the right diagnoses and provide adequate psychoeducation for their patients. This could be attributable to a variety of factors, including, but not limited to, time constraints, cynicism, and apathy. Time constraints also lead to the gross underutilization14 of relapse prevention strategies, such as long-acting injectables, and of medications that can prevent suicide, such as lithium and clozapine.15