Growing vaping habit may lead to nicotine addiction in adolescents

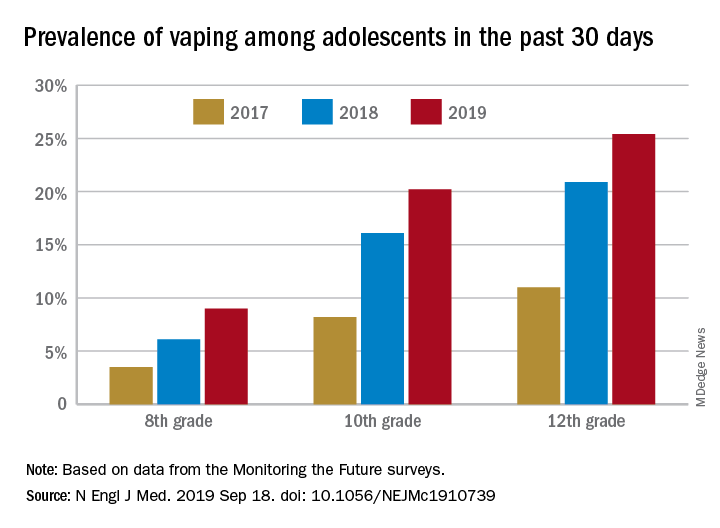

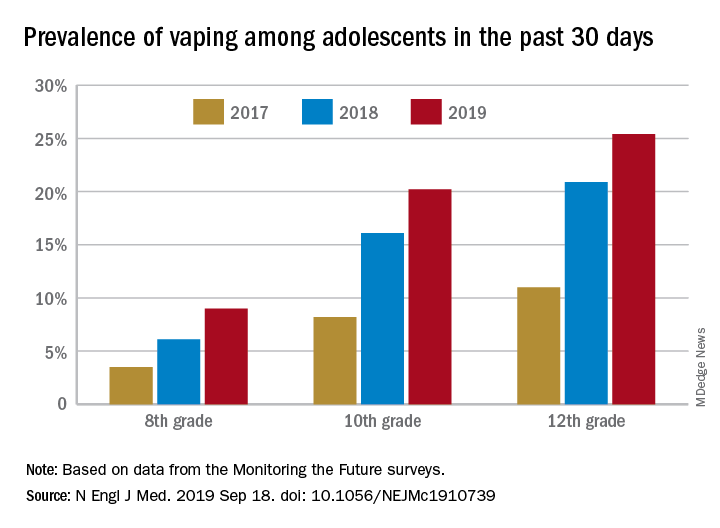

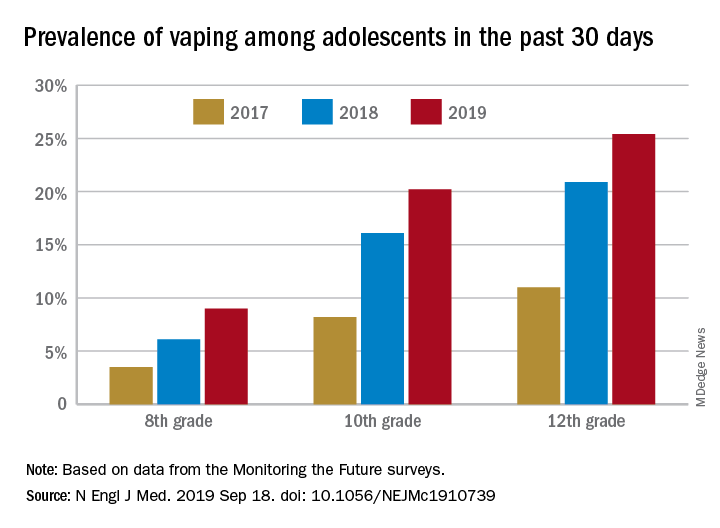

In 2019, almost 12% of high school seniors reported that they were vaping every day, according to data from the Monitoring the Future surveys.

Daily use – defined as vaping on 20 or more of the previous 30 days – was reported by 6.9% of 10th-grade and 1.9% of 8th-grade respondents in the 2019 survey, which was the first time use in these age groups was assessed. “The substantial levels of daily vaping suggest the development of nicotine addiction,” Richard Miech, PhD, and associates said Sept. 18 in the New England Journal of Medicine.

From 2017 to 2019, e-cigarette use over the previous 30 days increased from 11.0% to 25.4% among 12th graders, from 8.2% to 20.2% in 10th graders, and from 3.5% to 9.0% of 8th graders, suggesting that “current efforts by the vaping industry, government agencies, and schools have thus far proved insufficient to stop the rapid spread of nicotine vaping among adolescents,” the investigators wrote.
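To put those 30-day figures side by side, the short sketch below works out the absolute change (in percentage points) and the fold increase for each grade. It is only arithmetic on the percentages quoted above; the derived values are illustrative and are not figures reported by the investigators, and the names `prevalence` and `change` are made up for this example.

```python
# Past-30-day e-cigarette use (%), 2017 and 2019, as quoted in the article.
prevalence = {
    "12th grade": (11.0, 25.4),
    "10th grade": (8.2, 20.2),
    "8th grade": (3.5, 9.0),
}


def change(start, end):
    """Return (absolute change in percentage points, fold increase)."""
    return round(end - start, 1), round(end / start, 2)


# Derived values are illustrative only, not results from the survey report.
changes = {grade: change(*pair) for grade, pair in prevalence.items()}

for grade, (points, fold) in changes.items():
    print(f"{grade}: +{points} percentage points, {fold}x the 2017 level")
```

On these numbers, 30-day use more than doubled in all three grades over the 2 years.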

By 2019, over 40% of 12th-grade students reported ever using e-cigarettes, along with more than 36% of 10th graders and almost 21% of 8th graders. Corresponding figures for past 12-month use were 35.1%, 31.1%, and 16.1%, they reported.

“New efforts are needed to protect youth from using nicotine during adolescence, when the developing brain is particularly susceptible to permanent changes from nicotine use and when almost all nicotine addiction is established,” the investigators wrote.

The analysis was funded by a grant from the National Institute on Drug Abuse to Dr. Miech.

SOURCE: Miech R et al. N Engl J Med. 2019 Sep 18. doi: 10.1056/NEJMc1910739.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Key clinical point: Adolescents who use e-cigarettes every day may be developing nicotine addiction.

Major finding: In 2019, almost 12% of high school seniors were vaping every day.

Study details: Monitoring the Future surveys nationally representative samples of 8th-, 10th-, and 12th-grade students each year.

Disclosures: The analysis was funded by a grant from the National Institute on Drug Abuse to Dr. Miech.

Source: Miech R et al. N Engl J Med. 2019 Sep 18. doi: 10.1056/NEJMc1910739.

Daily polypill lowers BP, cholesterol in underserved population

A daily polypill regimen improved cardiovascular risk factors in a socioeconomically vulnerable minority population, in a randomized controlled trial.

Patients at a federally qualified community health center in Alabama who received treatment with a combination pill for 1 year had greater reductions in systolic blood pressure and LDL cholesterol than did patients who received usual care, according to results published online on Sept. 19 in the New England Journal of Medicine.

“The simplicity and low cost of the polypill regimen make this approach attractive” when barriers such as lack of income, underinsurance, and difficulty attending clinic visits are common, said first author Daniel Muñoz, MD, of Vanderbilt University in Nashville, and coinvestigators. The investigators obtained the pills at a cost of $26 per month per participant.

People with low socioeconomic status and those who are nonwhite have high cardiovascular mortality, and the southeastern United States and rural areas have disproportionately high levels of cardiovascular disease burden, according to the investigators. The rates at which people with low socioeconomic status receive treatment for hypertension and hypercholesterolemia – leading cardiovascular disease risk factors – “are strikingly low,” Dr. Muñoz and colleagues said.

To assess the effectiveness of a polypill-based strategy in an underserved population with low socioeconomic status, the researchers conducted the randomized trial.

They enrolled 303 adults without cardiovascular disease, and 148 of the patients were randomized to receive the polypill, which contained generic versions of atorvastatin (10 mg), amlodipine (2.5 mg), losartan (25 mg), and hydrochlorothiazide (12.5 mg). The remaining 155 patients received usual care. All participants scheduled 2-month and 12-month follow-up visits.

The participants had an average age of 56 years, 60% were women, and more than 95% were black. More than 70% had an annual household income of less than $15,000. Baseline characteristics of the treatment groups did not significantly differ.

At baseline, the average BP was 140/83 mm Hg, and the average LDL cholesterol level was 113 mg/dL.

In all, 91% of the participants completed the 12-month trial visit. Average systolic BP decreased by 9 mm Hg in the group that received the polypill, compared with 2 mm Hg in the group that received usual care. Average LDL cholesterol level decreased by 15 mg/dL in the polypill group, versus 4 mg/dL in the usual-care group.
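The between-group gap implied by those averages can be sketched with a simple subtraction. This is a rough illustration using the rounded means quoted above; it does not reproduce the trial's own statistical estimates or confidence intervals, and the variable names are hypothetical.

```python
# Mean 12-month reductions quoted in the article: (polypill, usual care).
reductions = {
    "systolic BP (mm Hg)": (9, 2),
    "LDL cholesterol (mg/dL)": (15, 4),
}

# Extra reduction in the polypill group relative to usual care.
# Simple difference of rounded means, for illustration only.
net = {
    measure: polypill - usual
    for measure, (polypill, usual) in reductions.items()
}

for measure, diff in net.items():
    print(f"{measure}: {diff} greater reduction with the polypill")
```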

Changes in other medications

Clinicians discontinued or reduced doses of other antihypertensive or lipid-lowering medications in 44% of the patients in the polypill group and none in the usual-care group. Clinicians escalated therapy in 2% of the participants in the polypill group and in 10% of the usual-care group.

Side effects in participants who received the polypill included a 1% incidence of myalgias and a 1% incidence of hypotension or light-headedness. Liver function test results were normal.

Five serious adverse events that occurred during the trial – two in the polypill group and three in the usual-care group – were judged to be unrelated to the trial by a data and safety monitoring board.

The authors noted that limitations of the trial include its open-label design and that it was conducted at a single center.

“It is important to emphasize that use of the polypill does not preclude individualized, add-on therapies for residual elevations in blood-pressure or cholesterol levels, as judged by a patient’s physician,” said Dr. Muñoz and colleagues. “We recognize that a ‘one size fits all’ approach to cardiovascular disease prevention runs counter to current trends in precision medicine, in which clinical, genomic, and lifestyle factors are used for the development of individualized treatment strategies. Although the precision approach has clear virtues, a broader approach may benefit patients who face barriers to accessing the full advantages of precision medicine.”

The study was supported by grants from the American Heart Association Strategically Focused Prevention Research Network and the National Institutes of Health. One author disclosed personal fees from Novartis outside the study.

SOURCE: Muñoz D et al. N Engl J Med. 2019 Sep 18;381(12):1114-23. doi: 10.1056/NEJMoa1815359.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Key clinical point: A daily polypill regimen may improve cardiovascular disease prevention in underserved populations.

Major finding: Mean LDL cholesterol levels decreased by 15 mg/dL in the polypill group, vs. 4 mg/dL in the usual-care group.

Study details: An open-label, randomized trial that enrolled 303 adults without cardiovascular disease at a federally qualified community health center in Alabama.

Disclosures: The study was supported by grants from the American Heart Association Strategically Focused Prevention Research Network and the National Institutes of Health. One author disclosed personal fees from Novartis outside the study.

Source: Muñoz D et al. N Engl J Med. 2019;381(12):1114-23. doi: 10.1056/NEJMoa1815359.

Obesity, moderate drinking linked to psoriatic arthritis

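Obesity and moderate drinking are linked to higher odds of developing psoriatic arthritis in people with psoriasis, a study has found.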

Around one in five people with psoriasis will develop psoriatic arthritis (PsA), wrote Amelia Green of the University of Bath (England) and coauthors in the British Journal of Dermatology.

Previous studies have explored possible links between obesity, alcohol consumption, or smoking, and an increased risk of developing psoriatic arthritis. However, some of these studies found conflicting results or had limitations such as measuring only a single exposure.

In a cohort study, Ms. Green and her colleagues examined data from the U.K. Clinical Practice Research Datalink for 90,189 individuals with psoriasis, 1,409 of whom were subsequently also diagnosed with psoriatic arthritis.

The analysis showed a significant association between increasing body mass index (BMI) and increasing odds of developing psoriatic arthritis. Compared with individuals with a BMI below 25 kg/m², those with a BMI of 25.0-29.9 had 79% greater odds of psoriatic arthritis, those with a BMI of 30.0-34.9 had 2.10-fold greater odds, and those with a BMI at or above 35 had 2.68-fold greater odds of developing psoriatic arthritis (P for trend less than .001). Adjustment for potential confounders such as sex, age, duration and severity of psoriasis, diabetes, smoking, and alcohol use slightly attenuated the association, but it remained statistically significant.

Researchers also examined the cumulative effect of lower BMIs over time, and found that over a 10-year period, reductions in BMI were associated with reductions in the risk of developing PsA, compared with remaining at the same BMI over that time.

“Here we have shown for the first time that losing weight over time could reduce the risk of developing PsA in a population with documented psoriasis,” the authors wrote. “As the effect of obesity on the risk of developing PsA may in fact occur with some delay and change over time, our analysis took into account both updated BMI measurements over time and the possible nonlinear and cumulative effects of BMI, which have not previously been investigated.”

Commenting on the mechanisms underlying the association between obesity and the development of PsA, the authors noted that adipose tissue is a source of inflammatory mediators such as adipokines and proinflammatory cytokines, which could lead to the development of PsA. Increasing body weight also could cause microtraumas of the connective tissue between tendon and bone, which may act as an initiating pathogenic event for PsA.

Moderate drinkers – defined as 0.1–3.0 drinks per day – had 57% higher odds of developing PsA when compared with nondrinkers, but former drinkers or heavy drinkers did not have an increased risk.

The study also didn’t see any effect of either past or current smoking on the risk of PsA, although there was a nonsignificant interaction with obesity that hinted at increased odds.

“While we found no association between smoking status and the development of PsA in people with psoriasis, further analysis revealed that the effect of smoking on the risk of PsA was possibly mediated through the effect of BMI on PsA; in other words, the protective effect of smoking may be associated with lower BMI among smokers,” the authors wrote.

Patients who developed PsA were also more likely to be younger (mean age of 44.7 years vs. 48.5 years), have severe psoriasis, and have had the disease for a shorter duration.

The study was funded by the National Institute for Health Research, and the authors declared grants from the funder during the conduct of the study. No other conflicts of interest were declared.

SOURCE: Green A et al. Br J Dermatol. 2019 Jun 18. doi: 10.1111/bjd.18227

FROM THE BRITISH JOURNAL OF DERMATOLOGY

Cancer with meatballs and the unkindest frozen cut

Two great tastes that cause cancer together

Spaghetti and meatballs. They go together like chocolate and peanut butter. It almost feels wrong to eat one without the other; but if you’re worried about cancer, you may have to go meatless.

The latest blow to an enjoyable meal comes courtesy of a study published in Molecular Nutrition & Food Research, which tested how lycopene – a carotenoid found in tomatoes that has notable anticancer properties – is absorbed by the body when in the presence of iron, which meat contains plenty of. When the study subjects drank a tomato-based shake infused with iron, lycopene was far less present in the blood and digestive system than in subjects who drank an iron-free tomato shake.

The study authors suggest either that the iron is oxidizing the lycopene or that it turns the mix of tomato and fat into something like separated salad dressing, preventing everything from mixing together when it enters the body.

Tastes like chicken

It’s an enduring oncologic mystery: How can some cancer cells endure what should be a lethal therapeutic beating, only to bounce off the canvas after an eight-count to deliver a devastating relapse-counterpunch of their own?

A new study offers an unsavory answer: cannibalism.

Turns out that dining on one’s weaker cancer cell neighbors during a chemotherapy barrage provides just enough energy to rope-a-dope and stage a late-round comeback.

Breast cancer cells with wild-type TP53 genes are particularly prone to revival after taking a beating at the hands of doxorubicin or other chemotherapy drugs. Like many of their cancerous compatriots, they retreat to a corner of the therapy ring during chemo and go gloves up in a state of senescence.

But researchers at Tulane University noticed that, in the midst of that pharmaceutical pummeling, those senescent wild-type TP53 cells start doing something that their other senescent, cancerous neighbors don’t: They engulf other cancer cells. Why? Seems those breast cancer cells with the wild-type TP53 gloves are equipped with gene expression programs similar to macrophages.

What’s more, the cannibals’ appetite for fellow cells appears to confer a survival advantage when the chemo rounds end.

We at the Bureau of LOTME will resist the impulse to ring out this item with a tasteless Donner Party punchline. Instead, we’ll indulge our high-brow inner child by retooling an elementary school comedy classic.

Why don’t cancer-cell cannibals eat cancer-cell comedians? They taste funny.

Poop, what is it good for?

One thing you can cross off the list: Cutting meat.

That might seem pretty obvious, but there’s actually a bit of history here. In a book published in 1998, anthropologist Wade Davis shared an account of an elderly Inuit man trapped alone in a storm. He had no tools and no food, so he made a knife out of his own frozen stool and used it to kill and butcher a dog.

That story, which has since become something of an urban legend, directly inspired the career of another anthropologist, Metin Eren, PhD, of Kent State University in Ohio. As director of the school’s laboratory of experimental archaeology, Dr. Eren decided that the time had come to prove or disprove the poop-knife hypothesis.

First, he and his team had to make such a knife. To produce the needed raw materials, Dr. Eren went on an 8-day “Arctic diet” that included lots of beef, turkey, and salmon, with some applesauce and butternut squash risotto thrown in, while a colleague stuck to a more Western diet. Their samples were then frozen to –58° F and sharpened with metal files.

“I was surprised at how hard human feces could get when frozen,” Dr. Eren told Live Science. “I started to think, ‘Oh my gosh, this might actually work!’ ”

The team’s attempts to cut refrigerated pig hide, however, were not successful. “Like a crayon, it just left brown streaks on the meat – no slices at all,” he said.

Today’s lesson? Don’t meat your heroes or their poop knives; they’re sure to disappoint you.

Esketamine nasal spray may get expanded indication

COPENHAGEN – Esketamine nasal spray achieved rapid reduction of major depressive disorder symptoms in patients at imminent risk for suicide in a pair of pivotal phase 3 clinical trials known as ASPIRE-1 and ASPIRE-2, Carla M. Canuso, MD, reported at the annual congress of the European College of Neuropsychopharmacology.

These were groundbreaking studies, which addressed a major unmet need familiar to every mental health professional, given that more than 800,000 suicides per year occur worldwide. Standard antidepressants are of only limited value during the period of acute suicidal crisis because they take 4-6 weeks to work. Moreover, this population of seriously depressed and suicidal patients has been understudied.

“Patients with active suicidal ideation and intent are routinely excluded from antidepressant trials,” observed Dr. Canuso, a psychiatrist who serves as senior director of neuroscience clinical development at Janssen Research and Development in Titusville, N.J.

“It’s very important for the field to know that we can actually study these patients safely and effectively in clinical trials,” she said.

ASPIRE-1 and -2 were identically designed, randomized, double-blind, placebo-controlled, multinational studies conducted in patients with moderate to severe major depressive disorder as evidenced by a baseline Montgomery-Åsberg Depression Rating Scale (MADRS) total score of about 40, along with moderate to extreme active suicidal ideation and intent as assessed using the Clinical Global Impression-Severity of Suicidality-Revised (CGI-SS-R).

“These were all patients in psychiatric crisis seeking clinical care,” according to Dr. Canuso.

All 456 participants in the two phase 3 studies underwent an initial 5-14 days of psychiatric hospitalization, during which they began treatment with esketamine nasal spray at 84 mg twice weekly or placebo coupled with comprehensive standard of care, which included a newly initiated and/or optimized oral antidepressant regimen.

The primary endpoint in the two clinical trials was the change in MADRS total score 24 hours after the first dose of study medication. The esketamine-treated patients demonstrated a mean reduction of 16.4 and 15.7 points, respectively, in the two trials, which was 3.82 points greater than in the pooled placebo group. This represents a clinically meaningful and statistically significant between-group difference.

The treatment effect size was even larger in some of the prespecified patient subgroups. Dr. Canuso drew attention to two key groups: In the roughly 60% of ASPIRE participants with a prior suicide attempt, esketamine resulted in a mean 4.81-point greater reduction in MADRS total score than placebo, and in the nearly 30% of participants with a suicide attempt during the past month, the difference was 5.22 points.

A word on the study design: Patients received intranasal esketamine at 84 mg per dose or placebo in double-blind fashion twice weekly for 4 weeks, thereby giving time for their oral antidepressant therapy to kick in, and were then followed on the conventional therapy out to 90 days.

A between-group difference in MADRS total score in favor of the esketamine group was evident as early as 4 hours after the first dose and continued through day 25, the end of the double-blind treatment period, at which point 54% and 47% of the esketamine-plus-conventional-antidepressant groups in the two trials had achieved remission as defined by a MADRS score of 12 or less, as had about one-third of the control group.

The prespecified key secondary efficacy endpoint in ASPIRE-1 and -2 was change in the CGI-SS-R 24 hours after the first dose. Both the esketamine and placebo-treated patients experienced significant improvement in this domain, with a disappointing absence of between-group statistical significance.

“We think that this may be due to the effect of acute hospitalization in diffusing the suicidal crisis,” Dr. Canuso said.

She noted, however, that other suicidality indices did show significant improvement in the esketamine-treated group during assessments at 4 hours, 24 hours, and 25 days after the first dose. For example, the double-blind esketamine-treated patients were 2.62-fold more likely than controls to show a significant improvement in MADRS Suicidal Thoughts at 4 hours after dose number one, and 6.15 times more likely to do so 4 hours after their day-25 dose. The CGI structured physician assessments of suicide risk and frequency of suicidal thinking, as well as patient-reported frequency of suicidal thinking, showed consistent favorable numeric trends for improvement with esketamine, with odds ratios of 1.46-2.11 from 4 hours through 25 days, although those results generally failed to achieve statistical significance.

In terms of safety, the rate of serious adverse events was just under 12% in both the esketamine and placebo arms. As in earlier studies, the most common adverse events associated with the novel antidepressant were dizziness, dissociation, nausea, and sleepiness, all several-fold more frequent than with placebo.

Esketamine is the S-enantiomer of racemic ketamine. It’s a noncompetitive N-methyl-D-aspartate receptor antagonist.

Janssen, which already markets intranasal esketamine as Spravato in the United States for treatment-resistant depression, plans to file for an expanded indication on the basis of the ASPIRE-1 and -2 results. The Food and Drug Administration already has granted Breakthrough Therapy designation for research on esketamine for reduction of major depression symptoms in patients with active suicidal ideation.

The ASPIRE studies were funded by Janssen.

REPORTING FROM ECNP 2019

Most practices not screening for five social needs

Most physician practices and hospitals are not screening patients for all five social needs that are associated with health outcomes, a study found.

Lead author Taressa K. Fraze, PhD, of the Dartmouth Institute for Health Policy and Clinical Practice in Lebanon, N.H., and colleagues conducted a cross-sectional survey analysis of responses by physician practices and hospitals that participated in the 2017-2018 National Survey of Healthcare Organizations and Systems. The investigators evaluated how many practices and hospitals reported screening of patients for five social needs: food insecurity, housing instability, utility needs, transportation needs, and experience with interpersonal violence. The final analysis included 2,190 physician practices and 739 hospitals.

Of physician practices, 56% reported screening for interpersonal violence, 35% screened for transportation needs, 30% for food insecurity, 28% for housing instability, and 23% for utility needs, according to the study published in JAMA Network Open.

Among hospitals, 75% reported screening for interpersonal violence, 74% for transportation needs, 60% for housing instability, 40% for food insecurity, and 36% for utility needs. Only 16% of physician practices and 24% of hospitals screened for all five social needs, the study found, while 33% of physician practices and 8% of hospitals reported screening for no social needs. The majority of the overall screening activity was driven by interpersonal violence screenings.

Physician practices that served more disadvantaged patients, including federally qualified health centers and those with more Medicaid revenue, were more likely to screen for all five social needs. Practices in Medicaid accountable care organization contracts and those in Medicaid expansion states also had higher screening rates. Regionally, practices in the West had the highest screening rates, while practices in the Midwest had the lowest rates.

Among hospitals, the investigators found few significant screening differences based on hospital characteristics. Ownership, critical access status, delivery reform participation, rural status, region, and Medicaid expansion had no significant effects on screening rates, although academic medical centers were more likely to screen patients for all needs compared with nonacademic medical centers.

The study authors wrote that doctors and hospitals may need more resources and additional processes to screen for and/or to address the social needs of patients. They noted that practices and hospitals that did not screen for social needs were more likely to report a lack of financial resources, time, and incentives as major barriers.

To implement better screening protocols and address patients’ needs, the investigators wrote that doctors and hospitals will need financial support. For example, they suggested that the Centers for Medicare & Medicaid Services consider expanding care management billing to include managing care for patients who are at risk or have clinically complex conditions in addition to social needs.

Dr. Fraze and three coauthors reported receiving grants from the Agency for Healthcare Research and Quality during the conduct of the study. Dr. Fraze also reported receiving grants from the Robert Wood Johnson Foundation during the conduct of the study and receiving grants as an investigator from the 6 Foundation Collaborative, Commonwealth Fund, and Centers for Disease Control and Prevention. One coauthor reported receiving grants from the National Institute on Aging/National Institutes of Health during the conduct of the study.

SOURCE: Fraze TK et al. JAMA Netw Open. 2019 Sep 18. doi: 10.1001/jamanetworkopen.2019.11514.

While momentum for social risk screening is growing nationally, the recent study by Fraze et al. illustrates that screening across multiple domains is not yet common in clinical settings, wrote Rachel Gold, PhD, of Kaiser Permanente Center for Health Research Northwest in Portland, Ore.

In an editorial accompanying the study, Dr. Gold and coauthor Laura Gottlieb, MD, an associate professor of family and community medicine at the University of California, San Francisco, wrote that a critical finding of the study is that reimbursement is associated with uptake of social risk screening (JAMA Network Open. 2019 Sep 18. doi: 10.1001/jamanetworkopen.2019.11513). Specifically, the analysis found that screening for social risks is more common in care settings that receive some form of payment to support such efforts, directly or indirectly.

“This finding aligns with other research showing that altering incentive structures may enhance the adoption of social risk screening in health care settings,” Dr. Gold and Dr. Gottlieb wrote. “But these findings are just a beginning. Disseminating and sustaining social risk screening will require a deep understanding of how best to structure financial and other incentives to optimally support social risk screening; high-quality research is needed to help design reimbursement models that reliably influence adoption.”

Further research is needed not only to explain challenges to the implementation of social risk screening, but also to reveal the best evidence-based methods for overcoming them, the authors wrote. Such methods will likely require a range of support strategies targeted to the needs of various health care settings.

“Documenting social risk data in health care settings requires identifying ways to implement such screening effectively and sustainably,” Dr. Gold and Dr. Gottlieb wrote. “These findings underscore how much we still have to learn about the types of support needed to implement and sustain these practices.”

Dr. Gold reported receiving grants from the National Institutes of Health during the conduct of the study. Dr. Gottlieb reported receiving grants from the Robert Wood Johnson Foundation, the Commonwealth Fund, Kaiser Permanente, Episcopal Health Foundation, the Agency for Healthcare Research and Quality, St. David’s Foundation, the Pritzker Family Fund, and the Harvard Research Network on Toxic Stress outside the submitted work.

that are associated with health outcomes, a study found.

Lead author Taressa K. Fraze, PhD, of the Dartmouth Institute for Health Policy and Clinical Practice in Lebanon, N.H., and colleagues conducted a cross-sectional survey analysis of responses by physician practices and hospitals that participated in the 2017-2018 National Survey of Healthcare Organizations and Systems. The investigators evaluated how many practices and hospitals reported screening of patients for five social needs: food insecurity, housing instability, utility needs, transportation needs, and experience with interpersonal violence. The final analysis included 2,190 physician practices and 739 hospitals.

Of physician practices, 56% reported screening for interpersonal violence, 35% screened for transportation needs, 30% for food insecurity, 28% for housing instability, and 23% for utility needs, according to the study published in JAMA Network Open.

Among hospitals, 75% reported screening for interpersonal violence, 74% for transportation needs, 60% for housing instability, 40% for food insecurity, and 36% for utility needs. Only 16% of physician practices and 24% of hospitals screened for all five social needs, the study found, while 33% of physician practices and 8% of hospitals reported screening for no social needs. The majority of the overall screening activity was driven by interpersonal violence screenings.

Physician practices that served more disadvantaged patients, including federally qualified health centers and those with more Medicaid revenue, were more likely to screen for all five social needs. Practices in Medicaid accountable care organization contracts and those in Medicaid expansion states also had higher screening rates. Regionally, practices in the West had the highest screening rates, while practices in the Midwest had the lowest rates.

Among hospitals, the investigators found few significant screening differences based on hospital characteristics. Ownership, critical access status, delivery reform participation, rural status, region, and Medicaid expansion had no significant effects on screening rates, although academic medical centers were more likely to screen patients for all needs compared with nonacademic medical centers.

FROM JAMA NETWORK OPEN

New engineered HIV-1 vaccine candidate shows improved immunogenicity in early trial

ALVAC-HIV vaccine showed immunogenicity across several HIV clades in an early trial involving 100 healthy patients at low risk of HIV infection, according to a study by Glenda E. Gray, MBBCH, FCPaed, of the University of the Witwatersrand, Johannesburg, South Africa, and colleagues that was published online in the Sept. 18 issue of Science Translational Medicine.